AI agents increasingly operate inside real products, where decisions depend on data spread across many systems, constantly changing, and governed by strict access rules. Without a clear way to manage that information, reliability breaks down quickly.

Context engineering addresses this by defining how data is selected, structured, and delivered to an agent at the moment it needs to act. It provides a practical framework for keeping agent behavior consistent as systems scale.

TL;DR

- Context engineering is the practice of preparing, structuring, and maintaining the data AI agents use to reason and act. It controls which data is eligible, how it is structured, how fresh it is, and which access rules apply at decision time.

- Most agent failures come from missing, stale, or incorrectly scoped context, not from limited model capabilities. Prompting and model selection cannot fix these problems because they operate after data has already been retrieved.

- Context engineering is broader than RAG. RAG handles document retrieval through embeddings and semantic search. Context engineering manages all information entering the context window, including structured records, metadata, conversation history, and tool outputs, plus freshness and permission rules.

- Production agents need both real-time fetch and centralized data replication. Real-time fetch works for single-record lookups; centralized replication enables cross-source reasoning, entity mapping, and search on tools that lack native search APIs.

What Is Context Engineering?

Context engineering is the practice of preparing, structuring, and maintaining the data AI agents use to reason and act. It ensures each agent receives the correct information when making a decision.

This happens close to decision time. While an agent may have access to large volumes of enterprise data, its behavior depends on the context assembled for a given task. Context engineering controls which data is eligible, how it is structured, how fresh it is, and which access rules apply.
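The sketch below shows the four controls named above: eligibility, structure, freshness, and access rules. It is a minimal Python example under stated assumptions. The `ContextItem` fields, the seven-day `max_age`, and the character budget are all hypothetical choices for illustration. They are not any specific product's API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class ContextItem:
    kind: str                       # hypothetical type tag: "document", "record", "tool_output", ...
    text: str
    source: str                     # originating system, e.g. "notion", "salesforce"
    updated_at: datetime            # last modification time in the source system
    allowed_groups: frozenset[str]  # groups the source system lets read this item
    score: float = 0.0              # relevance score from retrieval, higher is better


def assemble_context(
    items: list[ContextItem],
    user_groups: set[str],
    now: Optional[datetime] = None,
    max_age: timedelta = timedelta(days=7),
    char_budget: int = 2000,
) -> str:
    """Select eligible items and render them into one context string."""
    now = now or datetime.now(timezone.utc)
    # Access: drop anything the user cannot read, before any ranking happens.
    visible = [i for i in items if i.allowed_groups & user_groups]
    # Freshness: drop items older than the allowed age.
    fresh = [i for i in visible if now - i.updated_at <= max_age]
    # Eligibility/relevance: highest-scoring items first.
    ranked = sorted(fresh, key=lambda i: i.score, reverse=True)

    # Structure: label each item with its type, source and date.
    parts, used = [], 0
    for item in ranked:
        block = f"[{item.kind} | {item.source} | {item.updated_at:%Y-%m-%d}]\n{item.text}"
        if used + len(block) > char_budget:
            break  # stop once the next item would exceed the budget
        parts.append(block)
        used += len(block)
    return "\n\n".join(parts)
```

The permission filter runs first on purpose. An item the user cannot see never competes for a place in the context, so a later step cannot leak it.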

An agent’s context window often includes multiple types of inputs, not only retrieved documents, so context engineering must govern how all of that information is assembled.

For this reason, model choice is rarely the main constraint in production systems. Most agent failures come from missing, stale, or incorrectly scoped context, not from limited model capabilities. Even a strong model will produce unreliable results if the context is wrong.

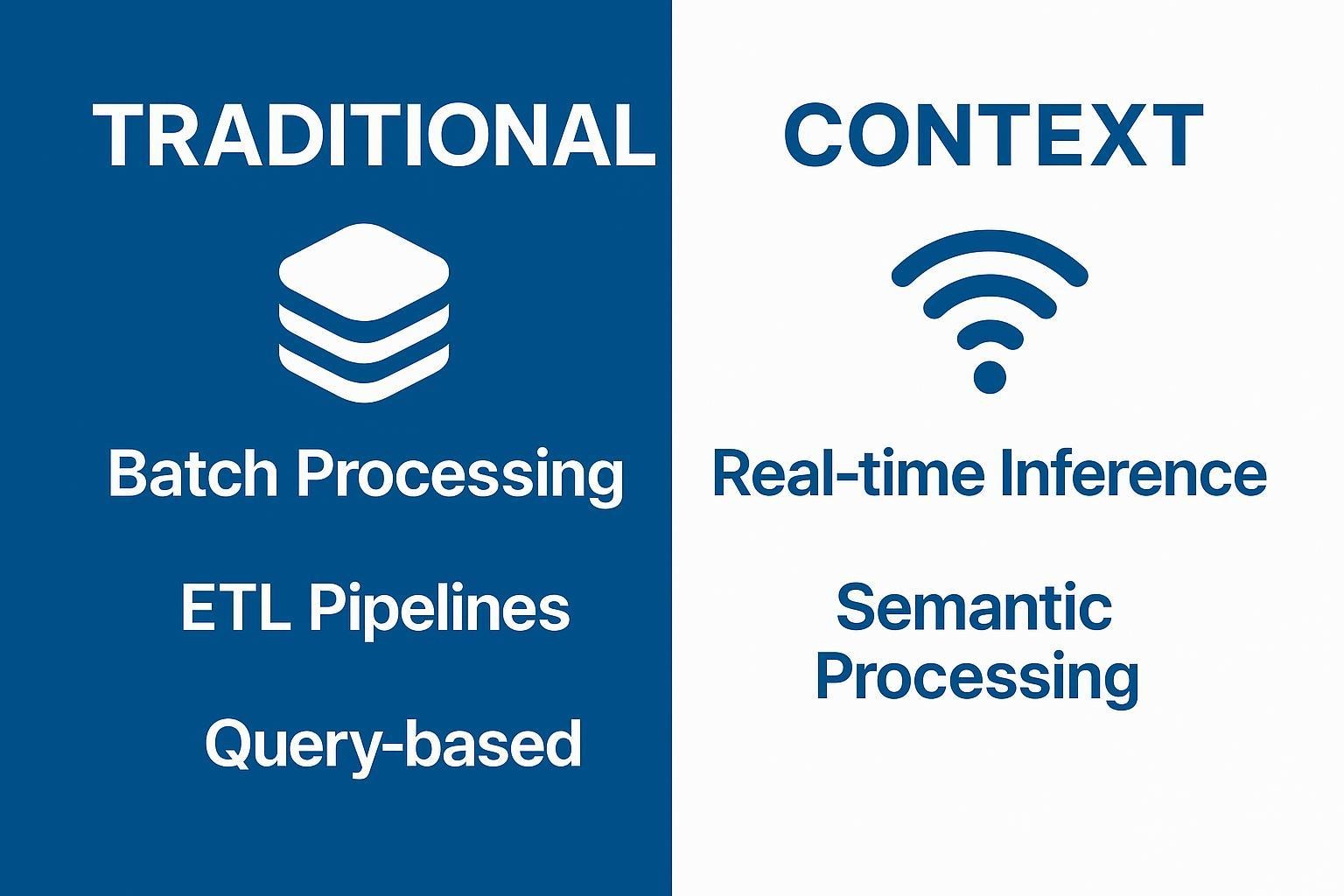

While traditional data engineering focuses on moving and transforming data through batch ETL pipelines for analytics and storage, context engineering serves a different purpose. It prepares data specifically for agent consumption, often assembling it in real time with strict requirements around relevance, latency, and access control.

Rather than optimizing for reports or query performance, it optimizes for decision quality at inference time.

How Is Context Engineering Different from RAG?

RAG (Retrieval-Augmented Generation) handles one specific step in the context pipeline. It retrieves unstructured content by chunking documents, generating embeddings, and running semantic search at query time. RAG is helpful when an agent needs information from files, tickets, or documentation, but it covers only a small part of how context is prepared.
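The following minimal sketch isolates the retrieval step of RAG: embed the query, compare it with the chunk embeddings, and return the top-k chunks. As a loud assumption, the "embedding" here is a bag-of-words count vector standing in for a learned embedding model. The in-memory list stands in for a vector database.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for a real embedding model: a bag-of-words term-count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query (the 'R' in RAG)."""
    q = embed(query)
    # In production these vectors are precomputed and stored in a vector database.
    index = [(chunk, embed(chunk)) for chunk in chunks]
    ranked = sorted(index, key=lambda pair: cosine(q, pair[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]
```

Note what this function does not do. It knows nothing about permissions, freshness, structured records, or conversation history.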

Context engineering is the broader discipline that determines what information is allowed into the model’s context window and how it is structured before the model sees it. It handles structured records, metadata, vector indexes, conversation history, workflow state, tool outputs, and real-time fetches. It also manages freshness, eligibility, and permission rules across all sources, not only documents.

As agents move into production, teams increasingly treat RAG as one retrieval technique within a broader context engineering framework rather than as a standalone discipline.

Why Do AI Agents Need Context Engineering to Work Reliably?

Naive data ingestion leads to predictable failures in production. Agents hallucinate when context is missing, recommend outdated APIs when data is stale, and expose sensitive information when access checks happen too late.

Prompting and model selection cannot fix these problems. They operate after data has already been retrieved and cannot correct information once it enters the context window.

Here’s why AI agents require context engineering to work as expected:

Permission Issues

Enterprise systems enforce access in various ways. Google Drive, Notion, and Slack all define permissions differently. For AI agents, those permission checks need to happen before anything is retrieved. That means making sure the agent only pulls data the user is allowed to see, before semantic search runs.
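To make "permissions before semantic search" concrete, here is a minimal sketch. It assumes each chunk carries the readers allowed by its source system's ACL. The `Chunk` type and principal strings such as `group:hr` are hypothetical, and term overlap stands in for vector similarity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    readers: frozenset[str]  # hypothetical: principals allowed by the source system's ACL


def permitted(chunk: Chunk, principals: set[str]) -> bool:
    return bool(chunk.readers & principals)


def secure_search(query_terms: set[str], chunks: list[Chunk], principals: set[str], k: int = 3) -> list[Chunk]:
    # 1. Permission filter first: unauthorized chunks never become candidates.
    candidates = [c for c in chunks if permitted(c, principals)]

    # 2. Only then rank what remains (term overlap stands in for vector similarity).
    def score(c: Chunk) -> int:
        return len(query_terms & set(c.text.lower().split()))

    return sorted(candidates, key=score, reverse=True)[:k]
```

Filtering after ranking has two drawbacks. Restricted text passes through intermediate steps, and the agent can get fewer than k results when top hits are removed. Many vector databases support metadata filters that apply this restriction during the search itself.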

Stale Content

Information changes constantly, but an agent’s context often lags. Your knowledge base may have been updated yesterday with new API documentation, while your vector database is still serving embeddings from last month. As a result, agents use outdated information. Solving this requires pipelines that detect source changes and automatically refresh embeddings, so context stays current as the underlying data evolves.
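One simple way to detect that source content has changed is to store a content hash next to each embedding. A document is re-embedded only when its hash no longer matches. The function below is a hypothetical sketch. Where sources expose reliable `updated_at` timestamps or change feeds, those avoid re-reading every document.

```python
import hashlib


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def docs_to_reembed(source_docs: dict[str, str], indexed_hashes: dict[str, str]) -> list[str]:
    """Return IDs of indexed documents whose current content differs from what was embedded."""
    stale = []
    for doc_id, text in source_docs.items():
        if doc_id in indexed_hashes and indexed_hashes[doc_id] != content_hash(text):
            stale.append(doc_id)
    return sorted(stale)
```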

Missing Embeddings

An agent cannot retrieve documents that were never indexed. A folder added to Google Drive last week does not exist in the agent’s context until the ingestion pipeline processes it. Until that happens, those documents are invisible during retrieval.
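A periodic reconciliation between the source listing and the index makes these gaps visible. The sketch assumes both sides can be listed by document ID; the function name is hypothetical. It also flags the reverse case: documents deleted at the source that are still retrievable.

```python
def reconcile(source_ids: set[str], indexed_ids: set[str]) -> dict[str, set[str]]:
    """Compare what exists in the source with what the vector index knows about."""
    return {
        "missing": source_ids - indexed_ids,   # never embedded, so invisible to retrieval
        "orphaned": indexed_ids - source_ids,  # gone from the source but still retrievable
    }
```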

Low-Quality Retrieval

Poorly structured documents and missing metadata break retrieval quality. Files that mix tables and paragraphs need to be handled section by section, while OCR artifacts from scanned PDFs cause embeddings to degrade. Fixing these issues requires preprocessing pipelines that clean and properly structure content before embeddings are generated.
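The following heuristic sketch shows two such preprocessing steps: repairing common OCR artifacts and splitting a document into sections that are tagged when they contain a table. It assumes the extracted text uses markdown-style `#` headings and `|` table rows. Production pipelines usually rely on layout-aware parsers instead.

```python
import re


def clean_ocr(text: str) -> str:
    # Re-join words hyphenated across line breaks: "retrie-\nval" -> "retrieval".
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", text)
    # Drop control characters that OCR sometimes emits (newlines and tabs are kept).
    text = re.sub(r"[\x00-\x08\x0b-\x1f\x7f]", "", text)
    # Collapse runs of spaces and tabs.
    text = re.sub(r"[ \t]+", " ", text)
    return text.strip()


def split_sections(text: str) -> list[dict]:
    """Split on markdown-style headings and tag sections that contain tables."""
    sections = []
    for block in re.split(r"\n(?=#+ )", text):
        block = block.strip()
        if not block:
            continue
        lines = block.splitlines()
        title = lines[0].lstrip("# ").strip() if lines[0].startswith("#") else ""
        has_table = any(line.lstrip().startswith("|") for line in lines)
        sections.append({"title": title, "is_table": has_table, "text": block})
    return sections
```

Tagged table sections can then be embedded separately or serialized row by row. That keeps them from being blended into surrounding paragraphs.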

Inaccessible Sources

Agents often need data from dozens of SaaS tools, each with its own authentication model, API structure, and rate limits. Building and maintaining custom scripts for every integration quickly turns into days spent on documentation and edge cases. Solving this connector problem requires data infrastructure that can handle integrations systematically.
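Handling integrations systematically usually means one shared interface plus shared handling for pagination and rate limits, so each source does not need its own script. The minimal sketch below rests on several assumptions. The `Connector` protocol, the `fetch_page` method, and the `RateLimited` exception are hypothetical names. Retry uses plain exponential backoff.

```python
import time
from typing import Callable, Iterator, Optional, Protocol


class RateLimited(Exception):
    """Hypothetical: raised by a connector when the source API reports a rate limit."""


class Connector(Protocol):
    name: str

    def fetch_page(self, cursor: Optional[str]) -> tuple[list[dict], Optional[str]]: ...


def read_all(
    connector: Connector,
    max_retries: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[dict]:
    """Page through a source, backing off exponentially when rate limited."""
    cursor: Optional[str] = None
    while True:
        for attempt in range(max_retries):
            try:
                records, next_cursor = connector.fetch_page(cursor)
                break
            except RateLimited:
                sleep(2 ** attempt)  # wait 1s, 2s, 4s, ... between attempts
        else:
            raise RuntimeError(f"{connector.name}: still rate limited after {max_retries} retries")
        yield from records
        if next_cursor is None:
            return
        cursor = next_cursor
```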

Context engineering addresses these systematic challenges at the infrastructure layer, managing what information a model receives throughout its execution lifecycle.

How Does Context Engineering Work?

Context engineering operates through processes that transform raw data into agent-ready context:

- Document chunking: Breaks content into appropriately sized pieces with strategic overlap to prevent information loss

- Embedding generation: Converts text chunks into vectors that capture semantic meaning for retrieval

- Vector databases: Store embeddings for fast similarity search at query time

- Metadata extraction: Enriches text chunks with contextual information that improves retrieval accuracy

- Permission management: Ensures agents only access data users are authorized to see

- Freshness pipelines: Keep context current as source data changes, using streaming or incremental sync architectures

These components work together in a continuous cycle to maintain a reliable agent context.
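The chunking step from the list can be sketched as follows. This is a minimal example: word counts stand in for tokens, which most real pipelines use. Each chunk also gets simple metadata (document ID, source, position), and the helper names are hypothetical.

```python
def chunk_text(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of `size` words; neighbouring chunks share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def to_records(doc_id: str, source: str, text: str, size: int = 200, overlap: int = 40) -> list[dict]:
    """Attach metadata so each chunk can be filtered and traced back after retrieval."""
    return [
        {"doc_id": doc_id, "source": source, "chunk_index": i, "text": chunk}
        for i, chunk in enumerate(chunk_text(text, size, overlap))
    ]
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk, as long as it fits within the overlap window.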

Which Context Engineering Patterns Do Teams Typically Use?

Teams building production agents converge on four architectural patterns. Each offers different trade-offs between latency, accuracy, and infrastructure complexity.

Why Agents Need Both Real-Time Fetch and Centralized Data Replication

Most context engineering discussions focus on either real-time API calls or batch data pipelines. In practice, production agents need both, and understanding when to use each determines whether your agent can reason across enterprise data or only within single-source silos.

Single-Record Fetch (Real-Time)

Real-time fetch retrieves individual records on demand. For example, when a user asks "What's the status of ticket #4521?", the agent calls the Zendesk API directly and gets the current state. This pattern works well for:

- Point lookups where you know exactly what you need

- Write operations that must reflect immediately

- Sources with excellent native search capabilities

Centralized Data Replication (Bulk Sync)

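Replicating data to a central location enables capabilities that real-time fetch cannot provide:

- Cross-source reasoning over data from many systems at once

- Entity mapping that links the same customer, account, or project across tools

- Search on tools that lack native search APIs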

When to Use Each

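The decision depends on what the agent needs to accomplish:

- Use real-time fetch for point lookups on known records and for write operations that must reflect immediately

- Use centralized replication when the agent must reason across sources, map entities between systems, or search tools that lack native search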

Production systems typically implement both. MCP servers can expose fetch operations for real-time lookups while centralized pipelines maintain indexed, searchable context for cross-source reasoning.
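The split between the two patterns can be sketched as a small router. In a real agent, the model usually chooses between tools such as these, for example ones exposed by an MCP server. Here a regex for an explicit ticket ID stands in for that decision. `fetch_ticket` and `search_index` are hypothetical callables, not a real Zendesk or MCP API.

```python
import re
from typing import Callable


def route(
    question: str,
    fetch_ticket: Callable[[int], dict],        # hypothetical live API lookup
    search_index: Callable[[str], list[str]],   # hypothetical search over replicated data
) -> dict:
    """Send point lookups to the live API and everything else to the replicated index."""
    match = re.search(r"ticket\s*#(\d+)", question, re.IGNORECASE)
    if match:
        # Known record ID: a real-time fetch returns the current state.
        return {"mode": "fetch", "result": fetch_ticket(int(match.group(1)))}
    # Open-ended or cross-source question: search the centralized copy.
    return {"mode": "search", "result": search_index(question)}
```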

What Real-World Use Cases Depend on Context Engineering?

Context engineering covers several use cases, each with different architectural requirements.

Enterprise Search Agents

These agents help employees search across scattered company systems, such as looking for information in Confluence, Slack conversations, and Google Drive simultaneously. They require unified data access, permission-aware retrieval, and fresh context to avoid returning unauthorized or outdated results.

Customer Support Copilots

These copilots retrieve customer account history from Salesforce, previous tickets from Zendesk, and relevant documentation to assist support teams. Data access rules ensure staff only see information appropriate to their role, while freshness pipelines prevent recommendations based on deprecated products.
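Combining account history with prior tickets means recognizing the same customer in two systems. This is the entity mapping that centralized replication makes possible. The sketch below joins replicated CRM accounts and support tickets on the requester's email domain. The record fields are hypothetical, not the actual Salesforce or Zendesk schemas. Matching on email domain is also a simplifying assumption; real mappings often rely on explicit shared IDs.

```python
from collections import defaultdict


def map_tickets_to_accounts(accounts: list[dict], tickets: list[dict]) -> dict[str, list[int]]:
    """Join replicated CRM accounts and support tickets on the requester's email domain."""
    by_domain = {a["domain"].lower(): a["name"] for a in accounts}
    result: dict[str, list[int]] = defaultdict(list)
    for t in tickets:
        domain = t["requester_email"].rsplit("@", 1)[-1].lower()
        account = by_domain.get(domain)
        if account is not None:  # tickets from unknown domains stay unmapped
            result[account].append(t["id"])
    return dict(result)
```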

Internal Knowledge Assistants

These agents answer questions about company processes by accessing documentation across Notion, code comments in GitHub, decisions in Linear, and Slack threads. Permission boundaries ensure each team sees only materials appropriate to their role.

Compliance-Sensitive Copilots

Operating in regulated industries, these agents require row-level security filtering, comprehensive audit logging, encryption for sensitive embeddings, and data residency controls to maintain HIPAA, SOC 2, and similar compliance standards.
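Row-level filtering and audit logging fit naturally into a single step. The sketch applies a row-level policy and then appends a structured record of what was released. The policy used here (same region, permitted department) and the log fields are hypothetical. A real system would write to durable, tamper-evident storage rather than a Python list.

```python
import json
from datetime import datetime, timezone


def fetch_rows_with_audit(rows: list[dict], user: dict, purpose: str, audit_log: list[str]) -> list[dict]:
    """Apply a row-level policy, then record what was released to the agent."""
    # Hypothetical policy: rows are visible only in the user's region and departments.
    visible = [
        r for r in rows
        if r["region"] == user["region"] and r["department"] in user["departments"]
    ]
    audit_log.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user["id"],
        "purpose": purpose,
        "row_ids": [r["id"] for r in visible],
        "denied": len(rows) - len(visible),
    }))
    return visible
```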

Complex Multi-Agent Workflows

When specialized agents work toward a shared goal, they split tasks such as researching requirements, generating specifications, and validating compliance. Context engineering manages how information moves between those agents. It prevents context collapse as tasks fan out. It also preserves audit trails so decisions and sources remain traceable.
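One way to keep handoffs scoped and traceable is to forward only the facts the next agent needs, along with the sources they came from and a record of each hop. The `Handoff` structure below is a hypothetical sketch. It assumes each fact is backed by exactly one source ID.

```python
from dataclasses import dataclass, field


@dataclass
class Handoff:
    task: str
    facts: list[str]
    sources: list[str]                              # sources[i] backs facts[i]
    trail: list[str] = field(default_factory=list)  # hop history for auditing

    def forward(self, from_agent: str, to_agent: str, task: str, keep: list[int]) -> "Handoff":
        """Pass only the selected facts to the next agent, keeping their sources and the hop history."""
        return Handoff(
            task=task,
            facts=[self.facts[i] for i in keep],
            sources=[self.sources[i] for i in keep],
            trail=self.trail + [f"{from_agent} -> {to_agent}: {task}"],
        )
```

Because each downstream agent receives a narrowed copy, context does not keep growing as tasks fan out. The trail and source IDs still let a reviewer trace any decision back to its inputs.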

Each use case shares common infrastructure requirements around data access, permissions, freshness, and reliability.

How Is Context Engineering Related to AI Agent Orchestration?

AI agent orchestration coordinates behavior across multiple agents in a system. It controls how agents communicate, which tools they can access, and how tasks move between them. Frameworks like LangChain, LlamaIndex, CrewAI, and AutoGPT focus on this coordination logic.

Orchestration relies on context engineering. The orchestrator decides which agent should handle a customer question, while context engineering ensures that agent can access the right, permission-aware data to answer correctly.

Orchestration frameworks assume context is available when needed, but they do not handle data connectivity, authentication across SaaS tools, incremental syncing, or permission management. Context engineering addresses these requirements by making data available when and where agents need it.

How Do You Give AI Agents the Right Context Every Time?

Context engineering depends on systems that can reliably ingest data, process it at scale, and apply the same access rules used by the source systems. A context engineer supports this by checking data quality, enforcing permissions, and keeping agent context fresh so pipelines stay dependable.

Airbyte's Agent Engine provides the underlying infrastructure that makes this work possible. It offers embedded connectors for hundreds of data sources, unified pipelines for structured and unstructured data, automatic metadata extraction, and built-in row-level permissions. The platform includes SOC 2, PCI, and HIPAA compliance packs, comprehensive audit logging, and flexible deployment options for teams with strict data residency requirements.

This lets your engineering team focus on agent capabilities rather than data plumbing. Talk to us to learn more about how Airbyte Embedded supports context engineering at scale.

Frequently Asked Questions

Should I use real-time API calls or batch data replication for agent context?

Most production systems need both. Real-time fetch works for single-record lookups and write operations. Centralized replication enables cross-source reasoning, entity mapping, and search on tools that lack native search capabilities. MCP servers can expose both patterns: fetch for real-time operations and search against replicated, embedded data for complex queries.

What is the difference between context engineering and prompt engineering?

Prompt engineering focuses on how you phrase instructions to language models. Context engineering manages what data the model receives, including retrieval, permission filtering, and freshness. Better prompts cannot fix stale data or permission violations.

How much does context engineering infrastructure cost to build in-house?

At scale with dozens of data sources, teams typically need multiple full-time engineers focused on maintaining pipelines and debugging context issues. Purpose-built platforms eliminate this ongoing maintenance burden.

Can I use open-source frameworks like LangChain or LlamaIndex instead of a context engineering platform?

These frameworks handle orchestration and retrieval logic well but do not solve data connectivity, authentication, incremental syncing, or permission management. Most teams use them in combination with data infrastructure platforms.

How do you handle data sovereignty requirements with context engineering?

Regulated industries require regional vector database deployment, FIPS 140-2 encryption, data residency controls, and comprehensive audit logging. Embeddings derived from personal data are subject to the same jurisdictional laws as source data.

What are the most common failure modes in production context engineering systems?

The three most critical failures are poor retrieval quality from incorrect chunking, stale context from lack of continuous sync, and permission violations from authorization checks happening after retrieval instead of before.

