Agentic RAG (Retrieval-Augmented Generation) is a way of building retrieval systems where answering a question is no longer a single lookup followed by generation. Instead, an agent takes responsibility for finding the information it needs, deciding which sources to consult, and determining when it has enough context to respond.

This shift matters when questions cannot be satisfied by one search pass or one data source. As applications move beyond static knowledge bases and into live systems, retrieval becomes an active process rather than a fixed step. Agentic RAG reflects that change by placing decision-making around retrieval inside the system itself.

TL;DR

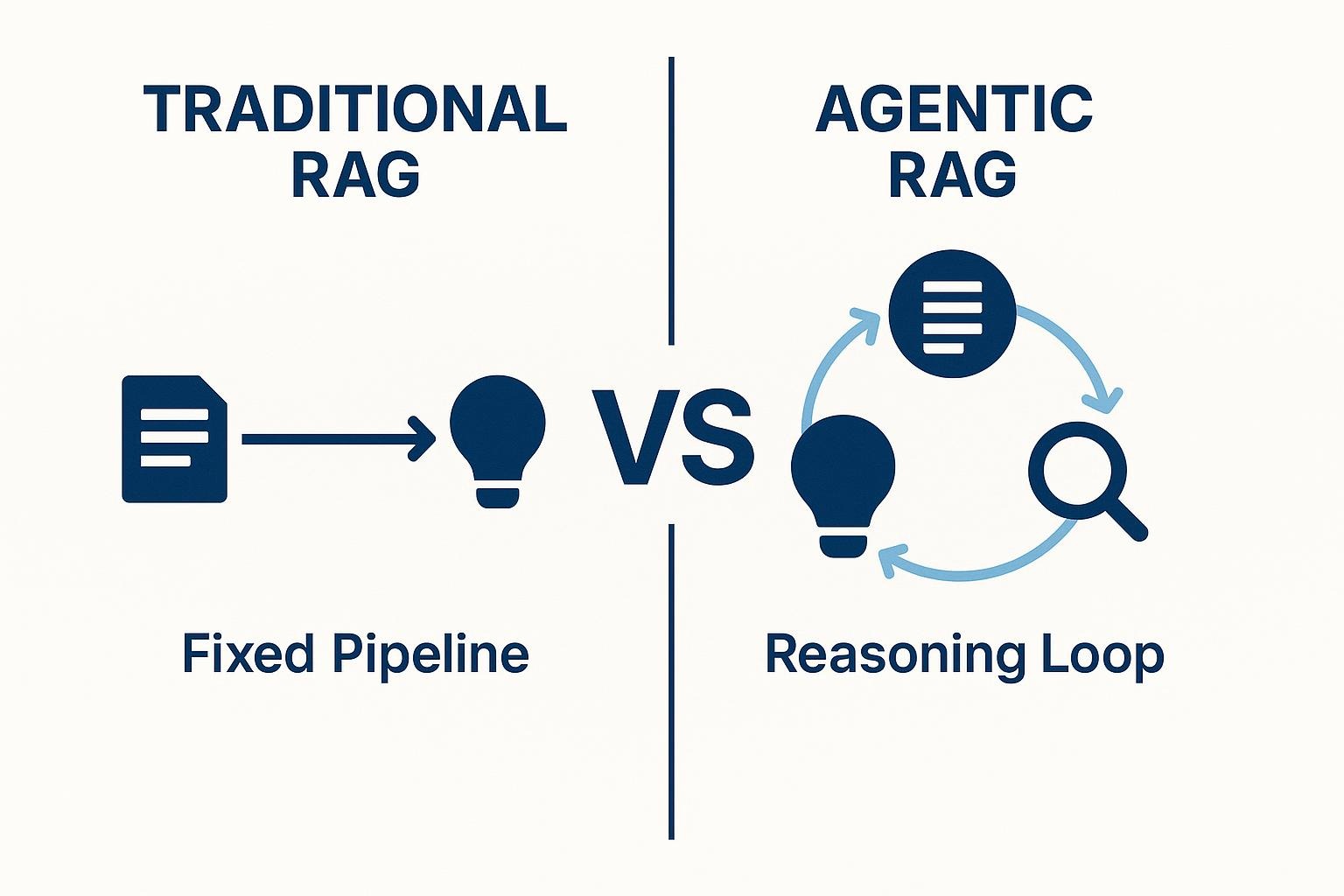

- Agentic RAG places retrieval inside a reasoning loop rather than treating it as a fixed preprocessing step. The agent decides when to retrieve, what to retrieve, and whether more information is needed before generating a response.

- The key difference from traditional RAG is adaptive control flow. Agents can decompose queries, reformulate searches, switch tools, and iterate until they have sufficient context, rather than running a single retrieval pass.

- Use Agentic RAG for multi-step reasoning, multi-source synthesis, and queries that require adaptive retrieval. Avoid it for straightforward question-answering where traditional RAG is faster, cheaper, and easier to operate.

- Production challenges center on data freshness, latency, cost, and observability. Agents can only reason reliably when data pipelines are instrumented, permissions are enforced at retrieval time, and each reasoning step is inspectable.

What Is Agentic RAG?

Agentic RAG is a retrieval architecture where an AI agent actively controls retrieval as part of its reasoning process. Instead of treating retrieval as a fixed preprocessing step, the agent decides when to retrieve, what to retrieve, and whether more information is needed before generating a response.

The defining trait of Agentic RAG is that retrieval runs inside a reasoning loop. The agent evaluates whether the current context is sufficient, identifies gaps, and iterates until it has enough information to answer.

This approach is used when questions require synthesis across multiple documents, handling conflicting information, or adapting retrieval strategy as understanding evolves. It adds orchestration overhead and runtime cost, but it solves failure modes that static pipelines cannot.

How Is Agentic RAG Different From Traditional RAG?

The difference comes down to whether retrieval runs as a fixed pipeline or as part of an agent’s reasoning loop.

How Does Agentic RAG Work?

Agentic RAG runs as an execution loop that adapts based on what the agent learns at each step. Instead of assuming a single retrieval is enough, the system evaluates progress continuously and decides what to do next before generating a response.

At a high level, the loop works as follows:

- Query intake: Receive the question and initialize execution state to track decisions and intermediate results

- Query evaluation: Assess the complexity of the request and select an initial retrieval strategy

- Initial retrieval: Fetch information from the most relevant source

- Context evaluation: Determine whether the retrieved data is relevant and sufficient

- Strategy refinement: Reformulate queries, select a different tool, or retrieve again if gaps remain

- Response generation: Produce the final answer once sufficient context is available

The agent maintains this loop throughout execution. For simple questions, the process may complete after a single retrieval. For more complex questions, the agent decomposes the request into sub-questions and retrieves information across multiple steps.
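The steps above can be sketched as a small loop. This is a minimal illustration, not a reference implementation: `ExecutionState`, `SOURCES`, `retrieve`, `is_sufficient`, and `run_agent` are hypothetical names, the sources are toy dictionaries, and the sufficiency check stands in for what would normally be an LLM judgment.

```python
from dataclasses import dataclass, field


@dataclass
class ExecutionState:
    """Tracks decisions and intermediate results for one question."""
    question: str
    context: list[str] = field(default_factory=list)
    steps: list[str] = field(default_factory=list)


# Toy sources standing in for a vector store and a structured system (assumption).
SOURCES = {
    "docs": {"return": "Toy policy text about returns."},
    "finance": {"revenue": "Toy revenue summary."},
}


def retrieve(source: str, query: str) -> list[str]:
    """Return every toy passage in `source` whose key appears in the query."""
    q = query.lower()
    return [text for key, text in SOURCES[source].items() if key in q]


def is_sufficient(state: ExecutionState) -> bool:
    """Stand-in for an LLM judgment: any retrieved passage counts as enough."""
    return len(state.context) > 0


def run_agent(question: str, max_steps: int = 4) -> ExecutionState:
    state = ExecutionState(question)           # query intake
    plan = ["docs", "finance"]                 # query evaluation: an ordered strategy
    for source in plan[:max_steps]:
        hits = retrieve(source, question)      # retrieval
        state.steps.append(f"retrieve:{source}:{len(hits)}")
        state.context.extend(hits)
        if is_sufficient(state):               # context evaluation
            break                              # else: refine by trying the next source
    state.steps.append("generate")             # response generation would happen here
    return state
```

Calling `run_agent("What is our return policy?")` stops after one retrieval, while a question that only matches the second toy source takes two passes before the generate step.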

For example, if you ask "What is our return policy?", the agent routes straight to your documentation vector store. But if you ask "What factors contributed to Q2 revenue decline and how do they compare to Q1?", the agent breaks this into sub-questions. It first retrieves Q1 and Q2 financial data, then pulls relevant operational metrics, and then synthesizes across all sources.

Some implementations use ReAct-style reasoning, where retrieval and tool use are treated as explicit actions within the agent’s reasoning process. After each action, the agent evaluates what it has learned and adjusts its strategy. This ability to self-correct and adapt during execution is what allows agentic RAG systems to handle questions that break fixed retrieval pipelines.

What Components Make Up an Agentic RAG System?

An Agentic RAG system needs five core components that work together:

1. Agent Orchestration Logic

Agent orchestration logic is the control layer that manages how an agent executes a query end-to-end. It classifies the request, selects the appropriate tools or data sources, tracks intermediate results, and decides whether to continue retrieving information or generate a response. This loop repeats until the agent determines it has enough context to answer confidently.

2. Retrieval Layer

The retrieval layer combines vector databases for semantic search with structured query systems. In production, this often uses hierarchical structures such as section → paragraph → sentence, with confidence-based drill-down to balance context breadth and precision. The system can start broad, then zoom in only when more detail is needed.
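One way to picture confidence-based drill-down is a tree walk that only descends while a child clears a relevance threshold. The sketch below assumes a toy word-overlap score in place of embedding similarity; `Node`, `score`, `drill_down`, and the `threshold` default are illustrative choices, not part of any specific product.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One level of the hierarchy: a section, paragraph, or sentence."""
    text: str
    children: list["Node"] = field(default_factory=list)


def score(query: str, text: str) -> float:
    """Toy relevance: fraction of query words found in the text."""
    words = query.lower().split()
    if not words:
        return 0.0
    return sum(w in text.lower() for w in words) / len(words)


def drill_down(query: str, node: Node, threshold: float = 0.5) -> Node:
    """Descend to the best-scoring child while it clears the threshold."""
    while node.children:
        best = max(node.children, key=lambda c: score(query, c.text))
        if score(query, best.text) < threshold:
            break  # no child is confidently relevant; keep the broader context
        node = best
    return node
```

With this design, a vague query returns the broad section, and a specific one narrows to the paragraph or sentence that matches it.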

3. Dual-Memory Architecture

Dual-memory architecture separates short-term and long-term context. Short-term memory holds recent conversational state inside the prompt, while long-term memory relies on RAG to retrieve historical information from persistent storage using vector search. This separation allows the agent to reason over what was just said while still grounding answers in facts that may be weeks or months old.
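As a rough sketch of this separation, the class below keeps a bounded window of recent turns for the prompt and a persistent list that is searched on demand. The `DualMemory` class and its methods are hypothetical, and the shared-word ranking is only a stand-in for vector search over a real store.

```python
from collections import deque


class DualMemory:
    def __init__(self, short_term_size: int = 3):
        # Recent turns that are placed directly in the prompt.
        self.short_term = deque(maxlen=short_term_size)
        # Persistent history (a vector database in practice; a list here).
        self.long_term: list[str] = []

    def add_turn(self, text: str) -> None:
        self.short_term.append(text)
        self.long_term.append(text)

    def recall(self, query: str, k: int = 2) -> list[str]:
        """Toy retrieval by shared-word count, skipping turns already in the prompt."""
        q = set(query.lower().split())
        scored = [(len(q & set(t.lower().split())), t) for t in self.long_term]
        scored = [(s, t) for s, t in scored if s > 0 and t not in self.short_term]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [t for _, t in scored[:k]]

    def build_prompt(self, query: str) -> str:
        recalled = self.recall(query)
        parts = ["[long-term]", *recalled, "[recent]", *self.short_term, "[question]", query]
        return "\n".join(parts)
```

Older turns fall out of the short-term window but can still be recalled when a later question overlaps with them.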

4. Tool Invocation Layer

The tool invocation layer is where the agent decides when and how to call external systems. Based on its understanding of the task and intermediate results, the agent invokes tools such as databases, APIs, or external services. These decisions are driven by LLM-based reasoning at runtime rather than predefined execution sequences, allowing the agent to adapt its actions as the task unfolds.
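A common way to structure this layer is a registry of named tools plus a selection step. In the sketch below the selection is a keyword rule so the example stays runnable; in a real agent that decision would come from the LLM. The names `TOOLS`, `tool`, `choose_tool`, and `invoke`, and the placeholder tool bodies, are all assumptions for illustration.

```python
from typing import Callable

# Registry mapping tool names to callables.
TOOLS: dict[str, Callable[[str], str]] = {}


def tool(name: str):
    """Register a function as a callable tool under `name`."""
    def register(fn: Callable[[str], str]) -> Callable[[str], str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("sql")
def run_sql(arg: str) -> str:
    return f"sql result for: {arg}"      # placeholder, no real database


@tool("search")
def search_docs(arg: str) -> str:
    return f"doc passages for: {arg}"    # placeholder, no real index


def choose_tool(task: str) -> str:
    """Stand-in for the LLM's runtime decision (keyword rule, for illustration only)."""
    keywords = ("revenue", "count", "total")
    return "sql" if any(w in task.lower() for w in keywords) else "search"


def invoke(task: str) -> tuple[str, str]:
    """Pick a tool for the task, call it, and return (tool name, result)."""
    name = choose_tool(task)
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return name, TOOLS[name](task)
```

Keeping the registry separate from the selection logic means new tools can be added without touching the code that decides between them.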

5. Security and Governance

Security and governance enforce access controls and traceability throughout execution. Production systems apply user-specific permissions at retrieval time through unified policy engines, filtering data at the database layer before it reaches the agent. Audit logs capture retrieved data, tool invocations, and generated outputs to support compliance and post-hoc review.
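The idea of filtering before the agent sees anything can be sketched in a few lines. This example assumes a simple group-based permission model and an in-memory audit list; `Document`, `AUDIT_LOG`, and `retrieve_for_user` are hypothetical names, and a production system would push the filter into the database query rather than apply it in application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]


# In-memory audit trail (assumption: a real system would write to durable storage).
AUDIT_LOG: list[dict] = []


def retrieve_for_user(
    user: str, groups: set[str], query: str, corpus: list[Document]
) -> list[Document]:
    """Apply the permission filter before relevance matching, then record the access."""
    visible = [d for d in corpus if d.allowed_groups & groups]
    hits = [d for d in visible if query.lower() in d.text.lower()]
    AUDIT_LOG.append(
        {"user": user, "query": query, "returned": [d.doc_id for d in hits]}
    )
    return hits
```

Because the filter runs first, documents the user cannot access never enter the candidate set, so they cannot leak into the agent's context or the audit record of returned results.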

When Should You Use Agentic RAG?

Use Agentic RAG when retrieval needs to adapt during execution, and avoid it when a single, predictable retrieval step is enough.

- Multi-step reasoning: Use Agentic RAG when a question must be decomposed into sub-questions and answered iteratively. Queries like “What factors contributed to project delays and how do they relate to resource allocation?” require multiple retrieval passes that fixed pipelines cannot handle.

- Multi-source synthesis: Adopt Agentic RAG when answers depend on combining information from different systems, such as vector databases, SQL tables, APIs, or external services. The agent can decide which sources to query and in what order instead of relying on hardcoded retrieval paths.

- Adaptive retrieval: Choose Agentic RAG when the system must evaluate results and adjust retrieval strategy mid-query. This includes refining queries, switching tools, or retrieving additional context based on what has already been learned.

- Simple knowledge access: Avoid Agentic RAG for straightforward question-answering over a single, well-structured knowledge base. Traditional RAG is usually faster, cheaper, and easier to operate when retrieval patterns are predictable.

- Hybrid routing: Many teams combine both approaches by routing queries at runtime. Simple requests go through traditional RAG, while complex or ambiguous queries trigger agentic workflows, adding complexity only when it is actually needed.
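Hybrid routing can be as simple as a classifier in front of two handlers. The sketch below uses a keyword heuristic as the classifier, which is an assumption made to keep the example self-contained; teams often use a small model or an LLM call instead. `is_complex`, `traditional_rag`, `agentic_rag`, and `route` are illustrative names, and the two handlers only return labeled strings.

```python
def is_complex(query: str) -> bool:
    """Heuristic stand-in for a classifier: multi-part or comparative questions."""
    q = query.lower()
    markers = (" and ", "compare", "versus", "why", "how do")
    return q.count("?") > 1 or any(m in q for m in markers)


def traditional_rag(query: str) -> str:
    return f"[single-pass] {query}"    # placeholder for retrieve-then-generate


def agentic_rag(query: str) -> str:
    return f"[agent loop] {query}"     # placeholder for the iterative agent


def route(query: str) -> str:
    """Send simple queries down the cheap path and complex ones to the agent."""
    return agentic_rag(query) if is_complex(query) else traditional_rag(query)
```

A misrouted simple query only costs extra latency, while a misrouted complex query produces a weaker answer, so it is usually safer to tune the classifier toward the agentic path when in doubt.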

What Are Common Agentic RAG Use Cases?

Agentic RAG shows up most often in applications where retrieval must adapt in real time, span multiple systems, or support extended reasoning rather than single-pass answers.

Enterprise Search and Internal Copilots

Organizations are extending traditional enterprise search into agent-driven copilots. The agent layer adds reasoning on top of existing indices, allowing systems to dynamically select data sources and iterate retrieval strategies. Tools like Microsoft Copilot retrieve from documentation, internal knowledge bases, and external systems while enforcing permission-trimmed access.

Customer Support Agents With Live System Access

Support agents benefit when answers require both static knowledge and real-time data. DoorDash deployed Agentic RAG for Dasher support, combining documentation search with live system queries. The agent retrieves tracking details, monitors sub-minute updates, and evaluates response quality across metrics such as correctness, coherence, and relevance.

Multi-Step Operational Workflows

Complex operational queries benefit from agentic orchestration when answers require coordinating data across multiple systems. Queries like “Compare Q1 and Q3 revenues, check for regulatory filing changes in Q2, and generate a risk assessment” cannot be answered in a single retrieval pass. The agent pulls financial data from multiple periods, cross-references regulatory filings, and synthesizes the results into a single assessment, coordinating each step based on what it learns along the way.

Research and Analysis Agents

Research tasks often involve sustained investigation rather than single-pass retrieval. Multi-agent setups assign specialized roles for retrieval, query refinement, synthesis, and verification. This approach supports multi-hop reasoning across heterogeneous sources, including vector databases, web search, computational tools, and domain-specific APIs.

What Are the Main Challenges With Agentic RAG?

Agentic RAG introduces new production challenges that are less visible in demos and more prevalent in long-running systems, especially around data reliability, latency, cost, and governance.

What Makes Agentic RAG Work in Practice?

Agentic RAG works in production when retrieval is treated as infrastructure rather than a prompt pattern. Agents can only reason reliably when data is fresh, access controls are enforced at retrieval time, and execution across multiple steps is observable.

Airbyte’s Agent Engine is designed to handle these requirements. It provides managed connectors across hundreds of data sources, keeps data up to date through incremental syncs and CDC, and enforces permission-aware access before data reaches the agent. Through PyAirbyte and its MCP tooling, teams can configure and maintain these pipelines programmatically, without coupling agent logic to fragile data plumbing.

Talk to us to see how Airbyte Embedded can help you build reliable Agentic RAG systems.

Frequently Asked Questions

What makes RAG "agentic"?

The agent decides what data to retrieve and how to get it, instead of following the same steps every time. The agent analyzes queries, selects appropriate tools and data sources, evaluates whether retrieved information is sufficient, and iterates until it has adequate context.

Is Agentic RAG always better than standard RAG?

No. Agentic RAG adds latency, cost, and complexity. It makes sense for queries requiring multi-hop reasoning, synthesis across sources, or dynamic tool selection. For straightforward question-answering against static knowledge bases, traditional RAG's simplicity, speed, and lower cost make it the better fit.

Do Agentic RAG systems require vector databases?

Not exclusively. Vector databases provide the semantic search capabilities that most systems use, but agents orchestrate across multiple data source types. Production implementations integrate vector databases for document retrieval, SQL databases for structured queries, REST APIs for current data, and web search. The agent evaluates query requirements and selects which sources to query.

How do agents decide what data to retrieve?

Agents run reasoning loops, in some implementations following the ReAct pattern, in which they analyze the user's query, determine information needs, and select appropriate retrieval tools. The agent maintains awareness of its goal, tracks what it has learned, identifies gaps, and chooses data sources that can fill those gaps.

