AI agent observability is a system that gives you visibility into how autonomous agents make decisions, construct reasoning chains, and execute multi-step workflows in production.

This is necessary because AI agents rarely fail in obvious ways. They can return valid responses, show normal latency, and produce no errors while still being wrong. Traditional observability cannot detect these semantic failures.

That gap has already caused real damage. In one high-profile case, Air Canada’s chatbot told a customer he could claim a bereavement fare discount retroactively, a policy that did not exist. A Canadian tribunal later held the airline responsible for the chatbot’s answer and ordered it to compensate the customer, even though the system appeared to function correctly from an operational perspective.

AI agent observability closes this gap by making agent behavior inspectable at the level where decisions are actually made.

TL;DR

- AI agent observability reveals how agents make decisions, not just whether they're running. Traditional monitoring misses semantic failures where agents return valid responses but wrong answers.

- Agents fail differently than deterministic software. The same input can produce different paths, and failures hide inside "successful" requests that show green on infrastructure metrics.

- Effective observability captures reasoning chains, tool calls, and cost signals. You need traces that explain what the agent did and why, not just latency and error rates.

- Production deployment is the main adoption trigger. Most teams with agents in production have implemented observability infrastructure.

What Is AI Agent Observability?

AI agent observability is the ability to inspect how an agent behaves during execution. It shows how a task unfolded step by step, including the agent’s decisions, the tools it called, the inputs and outputs of those calls, and the cost of the run in tokens and latency.

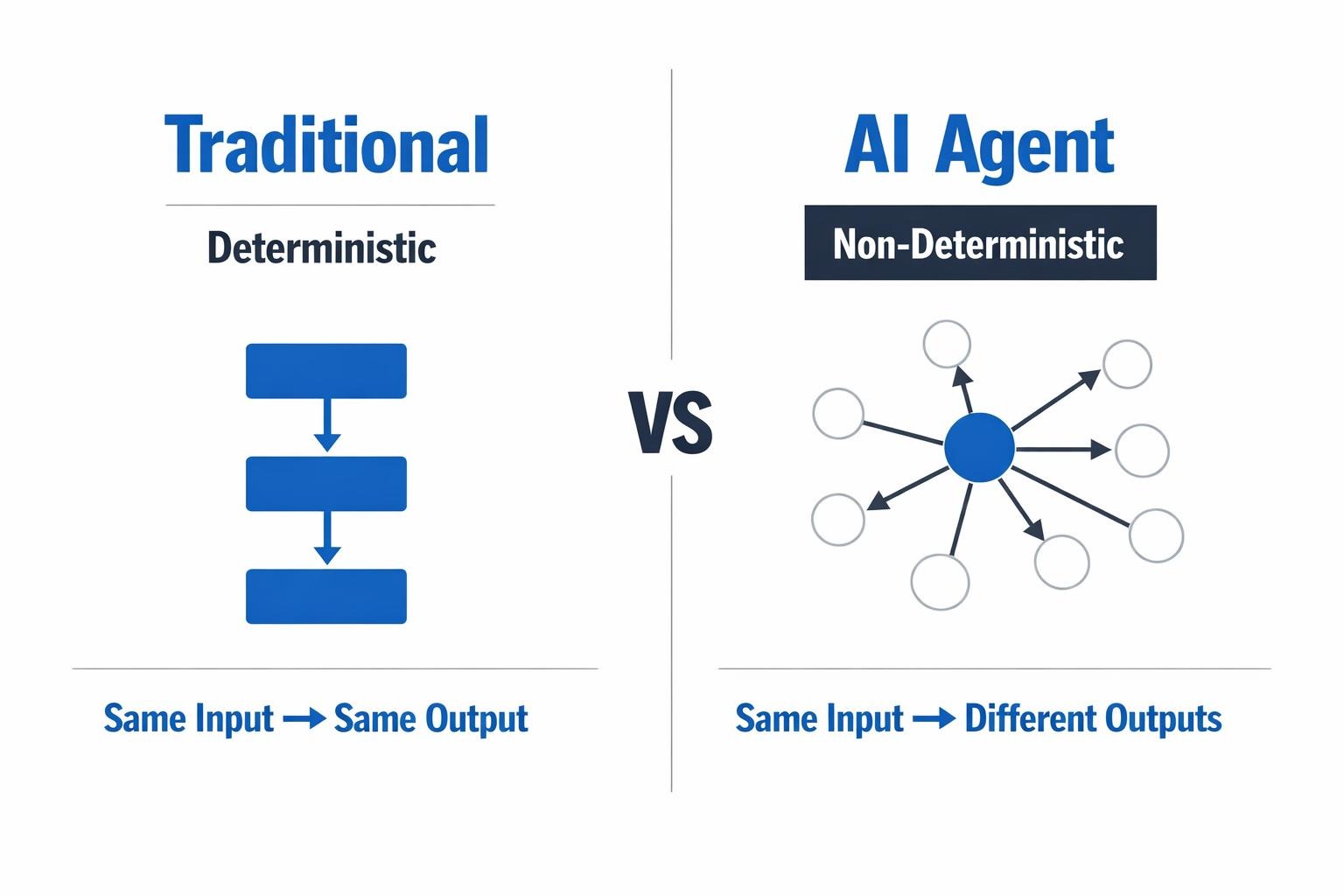

This matters because agents behave differently from deterministic software. The same input can lead to different paths because the model’s reasoning is non-deterministic, so failures often hide inside “successful” requests.

Many agent failures are semantic. You can get a clean HTTP 200 while the agent produces the wrong answer or takes the wrong action. To debug that, you need execution traces that explain what the agent did and why, not only traditional metrics.
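
A few lines of Python show how the two kinds of check can disagree. This is a minimal sketch: `AgentResponse` is a hypothetical record, and the grounding check is deliberately naive (every number in the answer must also appear in the retrieved context). Production evaluators are far more sophisticated, but the principle is the same.

```python
import re
from dataclasses import dataclass


@dataclass
class AgentResponse:
    # Hypothetical record of one agent run; field names are illustrative.
    status_code: int
    latency_ms: float
    answer: str
    retrieved_context: str


def infrastructure_ok(resp: AgentResponse, latency_budget_ms: float = 2000.0) -> bool:
    """What traditional monitoring sees: status code and latency."""
    return resp.status_code == 200 and resp.latency_ms <= latency_budget_ms


def grounded(resp: AgentResponse) -> bool:
    """Naive semantic check: every number in the answer must appear in the context."""
    answer_numbers = set(re.findall(r"\d+", resp.answer))
    context_numbers = set(re.findall(r"\d+", resp.retrieved_context))
    return answer_numbers <= context_numbers


resp = AgentResponse(
    status_code=200,
    latency_ms=850.0,
    answer="You can claim the bereavement discount up to 90 days after travel.",
    retrieved_context="Bereavement fares must be requested before travel.",
)

print(infrastructure_ok(resp))  # True: the dashboard stays green
print(grounded(resp))           # False: "90" is not supported by the source
```

Only the second function would flag a fabricated policy like the one described in the introduction.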

How Is AI Agent Observability Different from Traditional Observability?

Traditional observability answers one question: Is the system working? It tracks infrastructure and application health through signals like uptime, error rates, and latency. When something fails, the failure is explicit. A request errors out, or a service stops responding. Engineers can trace the issue back to a specific component.

AI agent observability answers a different question: Did the agent make the right decisions? An agent can return a valid response, stay within latency budgets, and produce no errors while still behaving incorrectly. Traditional metrics show green, but the outcome is wrong.

The key difference is what counts as a failure. In traditional systems, failure is technical. In agent systems, failure is behavioral. An agent can hallucinate, reason incorrectly, or choose the wrong tool without triggering any infrastructure-level signal.

Cost further reinforces this difference. Traditional observability treats cost as a background infrastructure concern. Agent observability treats it as part of behavior. How an agent reasons, how many steps it takes, and which tools it calls directly affect per-request cost.
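
Because every reasoning step and tool-driven model call consumes tokens, per-request cost is a sum over the path the agent chose. The sketch below uses placeholder per-token prices (not any provider's real rates) and a hypothetical `StepUsage` record to compare two paths for the same question.

```python
from dataclasses import dataclass

# Placeholder prices in USD per 1,000 tokens; substitute your provider's real rates.
PRICE_PER_1K_INPUT = 0.003
PRICE_PER_1K_OUTPUT = 0.015


@dataclass
class StepUsage:
    # Hypothetical token usage for one step of an agent run.
    name: str
    input_tokens: int
    output_tokens: int


def step_cost(step: StepUsage) -> float:
    return (step.input_tokens / 1000) * PRICE_PER_1K_INPUT + (
        step.output_tokens / 1000
    ) * PRICE_PER_1K_OUTPUT


def request_cost(steps: list[StepUsage]) -> float:
    return sum(step_cost(s) for s in steps)


# Same user question, two execution paths the agent might take.
direct = [StepUsage("answer", 1200, 300)]
looping = [
    StepUsage("plan", 1200, 200),
    StepUsage("search_retry_1", 2500, 150),
    StepUsage("search_retry_2", 2600, 150),
    StepUsage("answer", 4000, 300),
]

print(f"direct:  ${request_cost(direct):.4f}")
print(f"looping: ${request_cost(looping):.4f}")
```

With these placeholder prices, the looping path costs roughly five times as much as the direct one, even though both would look identical on an uptime dashboard.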

In short, traditional observability monitors system health. AI agent observability monitors decision quality, execution paths, and their consequences.

How Does AI Agent Observability Work?

AI agent observability instruments the agent’s execution lifecycle so every decision, action, and outcome can be inspected after the fact. Here’s how that works in practice:

- A user request starts an agent execution: The agent receives a prompt or task, along with system instructions, memory context, and any retrieved data. This marks the start of a trace.

- Instrumentation attaches tracing context: Observability hooks are attached through the agent framework’s callback or instrumentation layer (for example, in LangChain or LlamaIndex) or through OpenTelemetry instrumentation. These hooks create trace and span identifiers that persist across the agent’s execution.

- Reasoning steps emit structured signals: Each planning step, intermediate decision, or loop iteration produces telemetry describing what the agent decided to do and why.

- Tool and function calls generate trace events: When the agent calls a tool or function, observability captures the tool name, input parameters, outputs, execution latency, retries, and failure states.

- LLM invocations generate execution records: Every model call logs prompt inputs, output tokens, finish reasons, latency metrics, and token usage for cost attribution.

- The system correlates all events into a single trace: Reasoning steps, LLM calls, and tool executions are nested as spans within a unified execution trace, preserving the full decision path.

- Automated evaluators assess semantic quality: Evaluators score outputs for relevance, groundedness, safety, hallucinations, or policy violations using the captured context.

- Observability pipelines export traces to backend systems: OpenTelemetry-compatible pipelines send traces to observability platforms where teams can search, analyze, alert, and audit agent behavior.

- Engineers analyze failures and optimize behavior: Teams use traces to debug silent failures, tune prompts and tools, reduce cost, and validate that agents behave correctly in production.
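
The lifecycle above boils down to one data structure: spans that share a trace ID and point to their parent. The following is a toy, stdlib-only Python sketch of that structure; the `Tracer` class, span names, and attributes are hypothetical. In practice you would use the OpenTelemetry SDK or your framework's instrumentation rather than hand-rolling a tracer.

```python
import contextvars
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Optional

# The span that is currently active in this execution context.
_current_span = contextvars.ContextVar("current_span", default=None)


@dataclass
class Span:
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    attributes: dict = field(default_factory=dict)
    start: float = 0.0
    end: float = 0.0


class Tracer:
    """Toy tracer that keeps finished spans in memory (a stand-in for an exporter)."""

    def __init__(self) -> None:
        self.finished = []  # list of Span, in the order they finish

    @contextmanager
    def span(self, name: str, **attributes):
        parent = _current_span.get()
        span = Span(
            name=name,
            # A root span starts a new trace; children inherit the parent's trace_id.
            trace_id=parent.trace_id if parent else uuid.uuid4().hex,
            span_id=uuid.uuid4().hex[:16],
            parent_id=parent.span_id if parent else None,
            attributes=dict(attributes),
            start=time.perf_counter(),
        )
        token = _current_span.set(span)
        try:
            yield span
        except Exception as exc:
            span.attributes["error"] = repr(exc)
            raise
        finally:
            span.end = time.perf_counter()
            _current_span.reset(token)
            self.finished.append(span)


tracer = Tracer()

with tracer.span("agent.run", user_request="Refund policy for bereavement?"):
    with tracer.span("agent.plan") as plan:
        plan.attributes["decision"] = "search_policy_docs"
    with tracer.span("tool.search", query="bereavement fare") as tool:
        tool.attributes["results"] = 3
    with tracer.span("llm.call", model="example-model") as llm:
        llm.attributes.update(input_tokens=1800, output_tokens=220, finish_reason="stop")

for s in tracer.finished:
    print(s.name, "parent:", s.parent_id)
```

Each child records its parent's `span_id`, and all spans share the root's `trace_id`, so a backend can reassemble the full decision path for a single request.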

What Signals Do You Need to Observe AI Agents?

When observing AI agents, you need to capture several signal categories. Start with the most critical signals, then layer in operational detail.

Input and Output

Capture everything that goes into and comes out of the agent. This includes user prompts, system instructions, request metadata, and input token counts. On the output side, record generated responses, output tokens, finish reasons, and model metadata that reflects how the response was produced.
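
A single LLM invocation can be captured as one flat record. In this sketch the field names are assumptions, and the `finish_reason` values follow the OpenAI-style convention (`"stop"`, `"length"`); other providers name these differently. The helper flags outputs that returned successfully but were cut off at the token limit.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class LLMCallRecord:
    # Illustrative field names; align them with whatever schema your backend expects.
    system_prompt: str
    user_prompt: str
    model: str
    input_tokens: int
    output_tokens: int
    finish_reason: str
    response: str
    request_id: str


def review_flags(rec: LLMCallRecord) -> list[str]:
    """Flag outputs that look 'successful' but deserve a second look."""
    flags = []
    if rec.finish_reason == "length":
        # The model hit its token limit, so the answer is probably truncated.
        flags.append("truncated_output")
    if not rec.response.strip():
        flags.append("empty_response")
    return flags


rec = LLMCallRecord(
    system_prompt="You are a support agent. Only cite published policy.",
    user_prompt="Can I get a bereavement refund after my flight?",
    model="example-model",
    input_tokens=412,
    output_tokens=1024,
    finish_reason="length",
    response="Our bereavement policy allows...",
    request_id="req-001",
)

print(json.dumps(asdict(rec), indent=2))  # the serialized record you would export
print(review_flags(rec))  # ['truncated_output']
```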

Reasoning and Decisions

Expose how the agent decided what to do. Track reasoning summaries, action sequences, and decision points where one path was chosen over another. Include why specific tools were selected, how memory was used, and whether guardrails and planning steps were followed.
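
Decision points can be logged as structured events rather than free text, which makes them queryable later. The `DecisionEvent` schema and `record_decision` helper below are hypothetical; note that the event stores a short rationale summary instead of the model's raw reasoning.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class DecisionEvent:
    # Hypothetical schema for one decision point in an agent run.
    step: int
    chosen_action: str
    alternatives: list[str]
    rationale_summary: str  # a short summary, not raw chain-of-thought
    memory_keys_used: list[str] = field(default_factory=list)
    guardrails_passed: bool = True
    timestamp: float = field(default_factory=time.time)


events: list[DecisionEvent] = []


def record_decision(**kwargs) -> DecisionEvent:
    event = DecisionEvent(step=len(events) + 1, **kwargs)
    events.append(event)
    return event


record_decision(
    chosen_action="search_policy_docs",
    alternatives=["answer_from_memory", "escalate_to_human"],
    rationale_summary="Question references a specific fare policy; look it up.",
    memory_keys_used=["customer_tier"],
)
record_decision(
    chosen_action="answer_from_memory",
    alternatives=["search_policy_docs"],
    rationale_summary="Search returned no match; fell back to prior knowledge.",
    guardrails_passed=False,
)

# Decisions that bypassed a guardrail are prime candidates for review.
suspicious = [e for e in events if not e.guardrails_passed]
print(json.dumps([asdict(e) for e in suspicious], indent=2))
```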

Tool and Function Calls

Track how the agent interacts with external systems. Record tool names, inputs, outputs, execution latency, and success or failure status. Include retry behavior, API response codes, and token usage for embedded model calls, with sensitive parameters properly redacted.
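
A thin wrapper around each tool call is often enough to capture these fields. This sketch assumes tools are plain Python callables and that sensitive parameters can be identified by name (`SENSITIVE_KEYS` is an illustrative list, not a complete redaction policy). `traced_tool_call` and `lookup_booking` are hypothetical; the tool fails once to simulate a transient error.

```python
import time
from typing import Any, Callable

# Parameter names treated as sensitive; this list is an assumption, extend it for your tools.
SENSITIVE_KEYS = {"api_key", "password", "token", "ssn"}


def redact(params: dict[str, Any]) -> dict[str, Any]:
    return {k: ("[REDACTED]" if k in SENSITIVE_KEYS else v) for k, v in params.items()}


def traced_tool_call(
    name: str, fn: Callable[..., Any], params: dict[str, Any], max_retries: int = 2
) -> tuple[Any, dict[str, Any]]:
    """Call a tool, retrying on exceptions, and return (result, trace_event)."""
    event: dict[str, Any] = {"tool": name, "input": redact(params), "attempts": []}
    for attempt in range(1, max_retries + 2):
        start = time.perf_counter()
        try:
            result = fn(**params)
        except Exception as exc:
            event["attempts"].append({
                "attempt": attempt, "status": "error", "error": repr(exc),
                "latency_ms": (time.perf_counter() - start) * 1000,
            })
            continue
        event["attempts"].append({
            "attempt": attempt, "status": "ok",
            "latency_ms": (time.perf_counter() - start) * 1000,
        })
        event["output"] = result
        event["status"] = "ok"
        return result, event
    event["status"] = "failed"
    return None, event


calls = {"n": 0}


def lookup_booking(booking_id: str, api_key: str) -> dict:
    # Hypothetical tool that fails on its first call to simulate a transient error.
    calls["n"] += 1
    if calls["n"] == 1:
        raise TimeoutError("upstream timeout")
    return {"booking_id": booking_id, "fare_class": "Y"}


result, event = traced_tool_call(
    "lookup_booking", lookup_booking, {"booking_id": "ABC123", "api_key": "sk-secret"}
)
print(event["input"])          # {'booking_id': 'ABC123', 'api_key': '[REDACTED]'}
print(len(event["attempts"]))  # 2
```

Recording every attempt, not just the final one, is what exposes retry loops that inflate latency and cost without ever surfacing as an error.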

Performance, Cost, and Quality

Measure whether the agent is effective and efficient. Track total execution time, step-level latency, token usage, and per-request cost. Evaluate output quality using automated checks, retrieval accuracy for RAG workflows, grounding signals, and safety validations.
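
Once step-level spans exist, per-request performance and quality summaries are simple aggregations. The `StepSpan` shape and the `grounded` flag (set by some upstream evaluator) are assumptions. Summing step latencies assumes the steps ran sequentially; if your agent runs tools in parallel, use the root span's wall-clock duration instead.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StepSpan:
    # Hypothetical flattened span; in practice these come from your trace backend.
    name: str
    latency_ms: float
    input_tokens: int = 0
    output_tokens: int = 0
    grounded: Optional[bool] = None  # set by an evaluator on answer-producing steps


def summarize(steps: list[StepSpan]) -> dict:
    total_latency = sum(s.latency_ms for s in steps)
    slowest = max(steps, key=lambda s: s.latency_ms)
    tokens = sum(s.input_tokens + s.output_tokens for s in steps)
    evaluated = [s for s in steps if s.grounded is not None]
    grounded_rate = (
        sum(s.grounded for s in evaluated) / len(evaluated) if evaluated else None
    )
    return {
        "total_latency_ms": total_latency,
        "slowest_step": slowest.name,
        "total_tokens": tokens,
        "grounded_rate": grounded_rate,
    }


steps = [
    StepSpan("plan", 320.0, 900, 120),
    StepSpan("tool.search", 1450.0),
    StepSpan("llm.answer", 980.0, 2600, 310, grounded=True),
]
print(summarize(steps))
```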

Errors and Trace Structure

Make failures easy to debug. Capture model errors, tool failures, validation issues, hallucination flags, and safety triggers. Use structured traces with request-level identifiers, step-level spans, and consistent metadata to analyze behavior end to end.
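
Consistent metadata is what turns individual errors into patterns. The sketch below uses a hypothetical `FailureKind` taxonomy mirroring the categories listed above and attaches request-level and step-level identifiers to every event, so grouping across requests becomes a one-liner.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class FailureKind(Enum):
    # Illustrative taxonomy mirroring the failure types described above.
    MODEL_ERROR = "model_error"
    TOOL_FAILURE = "tool_failure"
    VALIDATION = "validation"
    HALLUCINATION_FLAG = "hallucination_flag"
    SAFETY_TRIGGER = "safety_trigger"


@dataclass(frozen=True)
class ErrorEvent:
    trace_id: str       # request-level identifier
    span_id: str        # step-level identifier
    step: str
    kind: FailureKind
    message: str
    agent_version: str  # consistent metadata enables cross-trace queries


error_events = [
    ErrorEvent("t1", "s3", "tool.search", FailureKind.TOOL_FAILURE, "HTTP 503", "v1.4"),
    ErrorEvent("t2", "s5", "llm.answer", FailureKind.HALLUCINATION_FLAG, "unsupported claim", "v1.4"),
    ErrorEvent("t3", "s5", "llm.answer", FailureKind.HALLUCINATION_FLAG, "unsupported claim", "v1.5"),
]

# Because every event carries the same fields, grouping by failure type and step is trivial.
by_kind_and_step = Counter((e.kind.value, e.step) for e in error_events)
print(by_kind_and_step.most_common())
```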

When Do Teams Need AI Agent Observability?

Five adoption triggers indicate when teams need AI agent observability:

- Development to production transition: Basic logging is enough for early prototyping, but teams should integrate observability infrastructure once development moves beyond simple request-response patterns or the first production deployment approaches.

- Multi-agent systems development: When multiple agents interact, coordinate, or share state, you need specialized capabilities to trace multi-agent workflows and correlate latency, errors, and costs across agent interactions. Multi-agent interactions create cascading failure patterns where issues in one agent propagate to others. Without distributed tracing and correlation capabilities, root cause analysis becomes impractical.

- Customer-facing deployment: Teams need immediate issue detection to respond before customer complaints arrive, quality assurance mechanisms to verify outputs meet standards, and the ability to correlate user reports with specific agent behaviors.

- Scaling beyond initial capacity: Horizontal scaling, higher request volume, and performance optimization all require observability, because you need to see how latency, cost, and error rates change as load grows.

- Enterprise deployment and compliance requirements: Enterprise customers require audit trails showing what decisions agents made and why, and regulatory requirements may mandate demonstrable control over agent behavior. Basic logging cannot satisfy these requirements.
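
Correlating work across agents requires passing trace context along with each handoff. This sketch hand-builds and parses a W3C Trace Context `traceparent` header (`version-traceid-parentid-flags`) on a hypothetical message sent from an orchestrator agent to a worker agent. The parser is simplified (it accepts only version `00` and does not reject all-zero IDs); in production, OpenTelemetry's propagators handle this for you.

```python
import re
import secrets

# W3C Trace Context "traceparent" header: version-traceid-parentid-flags.
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_span_id() -> str:
    return secrets.token_hex(8)  # 16 lowercase hex chars


def make_traceparent(trace_id: str, span_id: str, sampled: bool = True) -> str:
    return f"00-{trace_id}-{span_id}-{'01' if sampled else '00'}"


def parse_traceparent(header: str) -> tuple[str, str]:
    match = TRACEPARENT_RE.match(header)
    if not match:
        raise ValueError(f"invalid traceparent: {header!r}")
    trace_id, parent_id, _flags = match.groups()
    return trace_id, parent_id


# Hypothetical orchestrator agent starts the trace and delegates a subtask.
trace_id = secrets.token_hex(16)  # 32 lowercase hex chars
orchestrator_span = new_span_id()
message = {
    "task": "check refund eligibility",
    "headers": {"traceparent": make_traceparent(trace_id, orchestrator_span)},
}

# Hypothetical worker agent continues the same trace as a child span.
worker_trace_id, worker_parent = parse_traceparent(message["headers"]["traceparent"])
worker_span = new_span_id()
print(worker_trace_id == trace_id, worker_parent == orchestrator_span)  # True True
```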

LangChain’s report shows that 94% of teams with agents in production have implemented observability infrastructure, which makes it a de facto production requirement.

How Does AI Agent Observability Fit Into Production AI Systems?

AI agent observability fits into production systems as the cognitive transparency layer that sits alongside operational monitoring. While traditional observability tracks whether services are running, agent observability reveals whether they're reasoning correctly.

Besides observability infrastructure, production AI agents require reliable, permission-aware data access that traces how data flows through reasoning chains. When agents retrieve context from various sources, you need visibility into what was accessed, whether permissions were enforced, and how information shaped decisions.
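
Permission-aware retrieval can record every access decision as it happens. The following is a hypothetical sketch, not Airbyte's implementation: documents carry an allowed-groups set, a naive keyword match stands in for real retrieval, and every candidate document produces an audit entry whether or not the user may see it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    # Hypothetical document with a group-based access rule.
    doc_id: str
    source: str
    allowed_groups: frozenset
    text: str


def retrieve_with_audit(query: str, user_groups: set, corpus: list, audit_log: list) -> list:
    """Return only documents the user may see, and log every access decision."""
    visible = []
    for doc in corpus:
        if query.lower() not in doc.text.lower():
            continue  # naive keyword match stands in for real retrieval
        allowed = bool(doc.allowed_groups & user_groups)
        audit_log.append(
            {"query": query, "doc_id": doc.doc_id, "source": doc.source, "permitted": allowed}
        )
        if allowed:
            visible.append(doc)
    return visible


corpus = [
    Document("kb-1", "help_center", frozenset({"public"}),
             "Bereavement fares must be requested before travel."),
    Document("hr-7", "internal_wiki", frozenset({"support_staff"}),
             "Bereavement exceptions need manager approval."),
]
audit = []
docs = retrieve_with_audit("bereavement", {"public"}, corpus, audit)
print([d.doc_id for d in docs])  # ['kb-1']
print(audit)
```

Logging denied documents as well as permitted ones is what lets you later show that a restricted source never reached the agent's context.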

Airbyte’s Agent Engine provides secure data access for production AI agents with built-in observability for data flows. Talk to us to see how Airbyte Embedded powers production AI agents with reliable, permission-aware data.

Frequently Asked Questions

What's the difference between monitoring and observability for AI agents?

Monitoring tracks predefined metrics and alerts when thresholds are crossed. Observability provides complete visibility into system behavior, allowing teams to investigate unknown failure modes. For agents specifically, observability captures reasoning chains and decision paths that monitoring cannot measure.

Can I use my existing APM tool for AI agent observability?

Traditional application performance monitoring (APM) tools miss agent-specific failure modes such as hallucinations, tool-selection errors, and semantic degradation. You need specialized agent observability platforms that capture the full execution narrative through hierarchical spans, showing what agents did, what inputs they processed, and how decisions flowed between reasoning steps.

When should I implement observability rather than relying on console logs?

Implement observability before production deployment, when building AI agent systems beyond simple prototypes, or when cost control matters. Console logs work for initial experimentation, but they cannot capture the distributed reasoning traces, semantic quality evaluations, or token-level cost attribution that production systems require.

Do I need full reasoning traces if I already evaluate outputs?

Yes. Output evaluation tells you that an agent was wrong, not why. Reasoning traces show which decision, tool call, or context choice caused the failure, which is required to fix behavior reliably in production.
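
The difference is easy to see on a span tree. In this hypothetical sketch, output evaluation only knows that the root span failed; walking the tree children-first surfaces the earliest failing step, which is the one you actually need to fix.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TraceNode:
    # Hypothetical span-tree node; "ok" is the result of whatever per-step check you run.
    name: str
    ok: bool = True
    note: str = ""
    children: list = field(default_factory=list)


def first_failure(node: TraceNode) -> Optional[TraceNode]:
    """Check children before the parent, in execution order, and return the earliest failure."""
    for child in node.children:
        found = first_failure(child)
        if found is not None:
            return found
    return None if node.ok else node


trace = TraceNode("agent.run", ok=False, note="final answer wrong", children=[
    TraceNode("agent.plan"),
    TraceNode("tool.search", ok=False, note="returned 0 results; agent did not retry"),
    TraceNode("llm.answer", ok=False, note="answered without sources"),
])

culprit = first_failure(trace)
print(culprit.name, "->", culprit.note)  # tool.search -> returned 0 results; agent did not retry
```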
