Large language models (LLMs) feel fast and conversational because a lot of work happens behind the scenes to make token-by-token generation efficient. As prompts get longer and conversations continue over time, inference performance quickly becomes a bottleneck. Small architectural optimizations can have a large impact on latency, throughput, and cost at scale.

KV caching is one such optimization. It is a practical technique that directly determines whether long-context conversations, agents, and streaming outputs feel responsive or slow in production.

TL;DR

- KV caching stores key and value vectors from the attention mechanism so LLMs don't recompute them for every token. This eliminates redundant work during text generation, directly reducing latency and serving costs.

- The optimization trades compute for memory, shifting the bottleneck from GPU cycles to VRAM. Cache size grows linearly with sequence length and batch size, often exceeding model weights at scale.

- KV caching differs from prompt caching in scope and lifetime. KV caching operates within a single request during generation; prompt caching persists across requests for repeated prefixes.

- Production systems need intelligent memory management, not just caching enabled. Block-based allocation, quantization (FP8/INT4), and paging reduce fragmentation and maximize concurrent request capacity.

What Is KV Caching in LLMs?

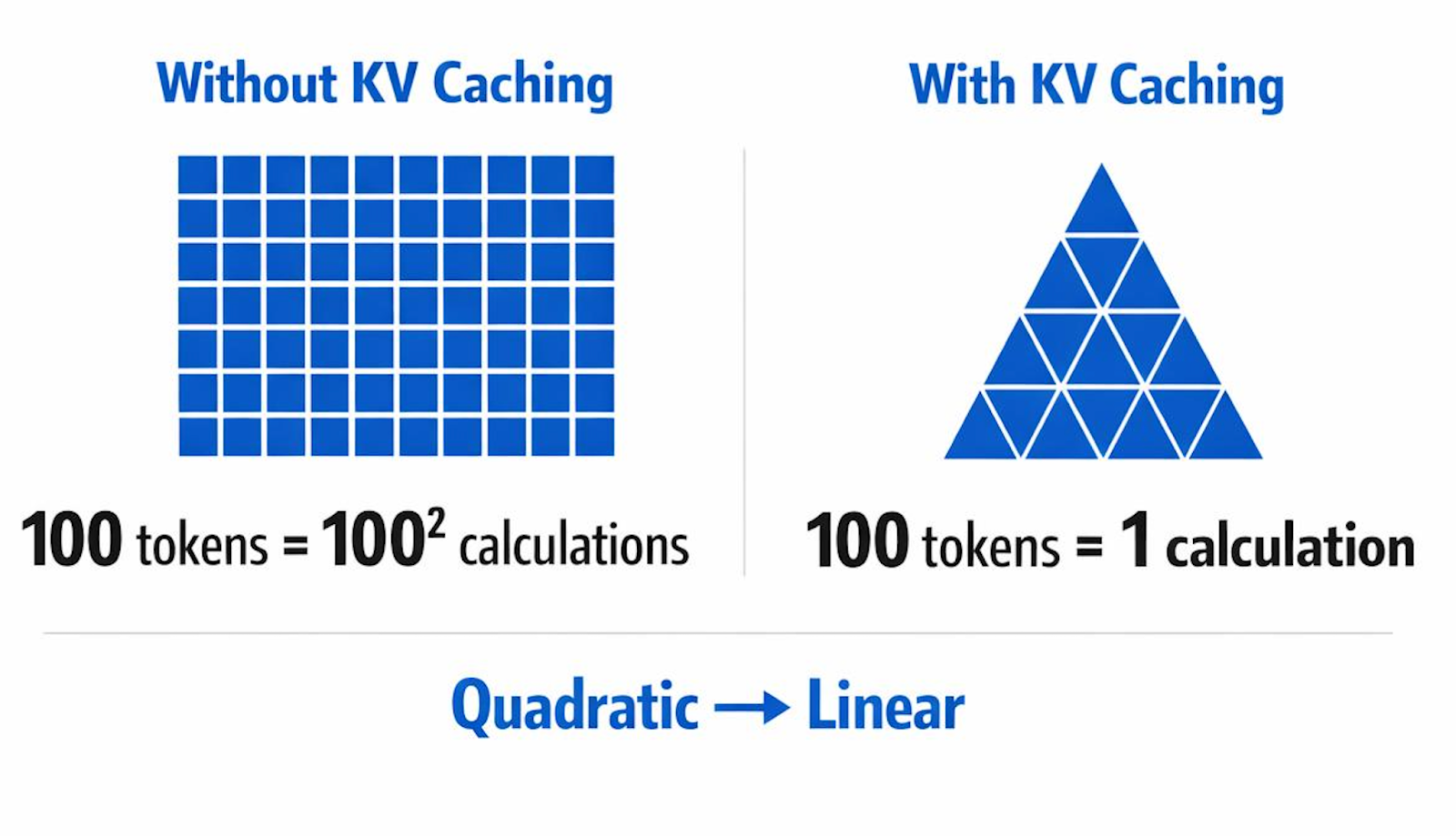

KV caching is an inference-time optimization that stores intermediate attention states so the model doesn’t redo the same work on every token. When an LLM generates text token by token, a naive implementation reprocesses the entire sequence at each step, recomputing the key and value projections for every previous token. KV caching avoids this by reusing information that was already computed.

Specifically, the cache stores the key and value vectors produced for past tokens in each attention layer. Once these vectors are computed, they never change: in a decoder-only model each token attends only to itself and earlier tokens, so later tokens cannot alter them. As generation continues, the model only needs to compute the query, key, and value for the current token and attend over the cached keys and values instead of recomputing them from scratch.

This optimization removes redundant key and value projections for earlier tokens, which significantly reduces compute cost and latency. For engineers building chatbots, copilots, or agent systems, this translates directly into faster responses and lower serving costs.

Under the hood, this works because of how transformers are structured. Each token is projected into three representations:

- Queries (Q)

- Keys (K)

- Values (V)

You can think of the query as “what I’m looking for,” the key as “what I contain,” and the value as “what information I provide.” At each generation step, the model needs the query only for the newest token. The keys and values of past tokens remain fixed, making them ideal candidates for caching.
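Written out for standard causal scaled dot-product attention (a single head with key dimension d_k; the notation is ours), the output for the newest token t is:

```latex
o_t = \sum_{i=1}^{t} \alpha_{t,i}\, v_i,
\qquad
\alpha_{t,i} = \frac{\exp\left(q_t^\top k_i / \sqrt{d_k}\right)}
                    {\sum_{j=1}^{t} \exp\left(q_t^\top k_j / \sqrt{d_k}\right)}
```

Only q_t, k_t, and v_t are new at step t. Every k_i and v_i with i < t was computed on an earlier step, so it can be read from the cache instead of being recomputed.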

Why Is KV Caching Important for LLM Performance?

KV caching eliminates a major source of wasted computation during text generation. After the model computes the key and value vectors for the initial prompt, those tensors are stored in GPU memory and reused. When a new token is generated, the model only computes attention for that single token against the cached keys and values, instead of recomputing projections for the entire history.

This changes the performance profile of inference in three important ways:

- Lower per-token latency: Each generation step does less work

- Higher throughput: The model can produce more tokens per second

- Predictable memory access: Each decode step follows the same pattern of reading the cached tensors, which improves overall efficiency on modern GPUs

These gains grow with sequence length, so the impact is most noticeable for long prompts and multi-turn conversations.

Consider a chatbot maintaining a 4K-token conversation history. Without KV caching, every new token would trigger recomputation of key and value projections for all 4,000 previous tokens. With caching, those projections are computed once and reused on every step that follows. As context grows, the savings compound. This makes KV caching essential for any production system serving long-running or interactive LLM workloads.
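A rough way to see how the savings grow is to count how many token positions get key and value projections (per layer) while generating a response. This sketch uses a simplified model of our own: without a cache, producing output token j means a forward pass over the whole sequence so far; with a cache, prefill projects the prompt once and each later step projects only the previously generated token.

```python
def kv_projection_count(prompt_len: int, new_tokens: int, use_cache: bool) -> int:
    """Token positions whose key/value projections are computed (per layer)
    while generating `new_tokens` tokens after a `prompt_len`-token prompt."""
    if use_cache:
        # Prefill projects the prompt once; every later step projects only
        # the single token generated on the previous step.
        return prompt_len + (new_tokens - 1)
    # Without a cache, output token j needs a full pass over
    # prompt_len + (j - 1) positions.
    return sum(prompt_len + j - 1 for j in range(1, new_tokens + 1))


for n in (1, 100, 1000):
    without = kv_projection_count(4000, n, use_cache=False)
    with_cache = kv_projection_count(4000, n, use_cache=True)
    print(f"{n:>5} new tokens: {without:>9,} vs {with_cache:>5,} projections")
```

For a 100-token reply on top of a 4,000-token history, this counts 404,950 projected positions without a cache versus 4,099 with one; the gap widens with every additional token.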

How Does KV Caching Work?

KV caching changes how attention is computed after the first token. During the prefill phase, the model processes the entire prompt in parallel, computes key and value projections for every input token across all attention layers, and stores them in cache tensors. The first output token is generated only after this step completes, which is why long prompts can produce noticeable startup latency.

Once generation begins, the model enters the decode phase, which runs one token at a time. For each new token, it:

- Computes fresh query, key, and value projections only for that token

- Appends the new key and value to the existing per-layer caches

- Reuses all cached keys and values when computing attention

Attention always spans the full cached history, but no projections are recomputed for earlier tokens. Each transformer layer maintains its own growing key and value cache that represents the sequence so far.
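The decode steps above can be sketched for a single attention head in plain Python. This is a toy, not any framework's implementation: the head dimension D, the random projection matrices W_q, W_k, W_v, and the input vectors are hypothetical stand-ins for one layer's hidden states. It checks that attending over a growing cache gives the same output as re-projecting the whole sequence at every step; a real model keeps one such cache per layer and per head.

```python
import math
import random

D = 4  # toy head dimension (assumption for illustration)
rng = random.Random(0)


def random_matrix(rows, cols):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


# Hypothetical projection weights for one attention head in one layer.
W_q, W_k, W_v = random_matrix(D, D), random_matrix(D, D), random_matrix(D, D)


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def attend(q, keys, values):
    """Scaled dot-product attention of one query over the given keys/values."""
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(D) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]  # numerically stable softmax
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total for i in range(D)]


def step_without_cache(hidden_states):
    """Naive decode step: re-project every token, then attend from the last one."""
    keys = [matvec(W_k, h) for h in hidden_states]
    values = [matvec(W_v, h) for h in hidden_states]
    return attend(matvec(W_q, hidden_states[-1]), keys, values)


class KVCache:
    """Per-layer cache that grows by one key and one value per token."""

    def __init__(self):
        self.keys = []
        self.values = []

    def step(self, h):
        """Cached decode step: project only the new token, append, attend over all."""
        self.keys.append(matvec(W_k, h))
        self.values.append(matvec(W_v, h))
        return attend(matvec(W_q, h), self.keys, self.values)


# Compare both paths over a short hypothetical sequence of hidden states.
sequence = [[rng.uniform(-1.0, 1.0) for _ in range(D)] for _ in range(6)]
cache = KVCache()
for t in range(1, len(sequence) + 1):
    cached_out = cache.step(sequence[t - 1])
    naive_out = step_without_cache(sequence[:t])
    assert all(abs(a - b) < 1e-9 for a, b in zip(cached_out, naive_out))
print(f"cached and naive outputs match for all {len(sequence)} steps")
```

The cached path projects one token per step, while `step_without_cache` re-projects all t tokens, yet both produce the same attention output.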

This design trades repeated computation for memory access. Instead of recalculating projections for the entire context at every step, the model reads cached tensors and performs minimal new work. The savings over recomputation grow with sequence length, because the work avoided at each step is proportional to the length of the context.

As a result, inference performance naturally splits into two metrics. Time to First Token (TTFT) reflects the parallel, compute-heavy prefill step. Time Per Output Token (TPOT) reflects the sequential, often memory-bound decode step.
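Both metrics can be read off a streaming client's timestamps. The sketch below uses one common convention, with hypothetical timings: TTFT is the delay until the first token arrives, and TPOT is the average gap between the tokens that follow.

```python
from typing import List, Tuple


def latency_metrics(request_start: float, token_times: List[float]) -> Tuple[float, float]:
    """Return (TTFT, TPOT) in seconds.

    token_times holds the wall-clock arrival time of each output token.
    """
    if not token_times:
        raise ValueError("need at least one output token")
    ttft = token_times[0] - request_start  # dominated by prefill
    if len(token_times) == 1:
        return ttft, 0.0
    # Average spacing of the tokens after the first one (the decode phase).
    tpot = (token_times[-1] - token_times[0]) / (len(token_times) - 1)
    return ttft, tpot


# Hypothetical trace: first token after 0.5 s, then one token every 0.25 s.
ttft, tpot = latency_metrics(0.0, [0.5, 0.75, 1.0, 1.25])
print(f"TTFT = {ttft:.2f} s, TPOT = {tpot:.2f} s")  # TTFT = 0.50 s, TPOT = 0.25 s
```

A long prompt shows up mainly as a larger TTFT, while a memory-bound decode step shows up as a larger TPOT.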

What Are the Tradeoffs of KV Caching?

KV caching dramatically improves inference speed, but it shifts the primary constraint from compute to memory, forcing a set of unavoidable production tradeoffs.

- High GPU memory usage: KV cache memory grows linearly with sequence length and batch size, often exceeding model weights at scale and becoming the dominant memory cost.

- Lower batch sizes: Reducing batch size frees memory for longer contexts, but directly reduces throughput.

- Shorter maximum context: Capping sequence length lowers memory requirements, but limits conversation history and document size.

- Cache quantization: Storing the cache in FP8 or INT4 instead of FP16 cuts its memory by roughly 2× or 4×, but introduces small accuracy risks that must be validated per model.

- More GPUs: Adding GPUs increases available memory and capacity, but raises infrastructure and operational costs.

- Complex memory management: Block-based, non-contiguous KV cache allocation reduces fragmentation and improves utilization, but adds system complexity.

- Concurrency limits: Each active request requires its own cache, so memory saturation directly caps the number of concurrent requests. Once memory is full, new requests must wait for running ones to finish, which makes available capacity bursty under load.
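Several of these levers can be seen in one back-of-the-envelope calculation. This sketch assumes every request reserves KV cache for its full context, uses about 0.5 MiB per token (a Llama 2 7B-shaped model in FP16), and treats the 40 GiB cache budget as a hypothetical figure; it ignores fragmentation and quantization scale overhead.

```python
GiB = 1024 ** 3


def max_concurrent_requests(cache_budget_bytes: int, bytes_per_token: int, context_len: int) -> int:
    """Requests that fit if each one reserves KV cache for its full context."""
    return cache_budget_bytes // (bytes_per_token * context_len)


FP16_BYTES_PER_TOKEN = 512 * 1024  # ~0.5 MiB: Llama 2 7B-shaped model in FP16
budget = 40 * GiB  # hypothetical GPU memory left for KV cache after loading weights

print(max_concurrent_requests(budget, FP16_BYTES_PER_TOKEN, 4096))       # 20
print(max_concurrent_requests(budget, FP16_BYTES_PER_TOKEN // 2, 4096))  # 40: FP8 cache
print(max_concurrent_requests(budget, FP16_BYTES_PER_TOKEN, 2048))       # 40: shorter max context
print(max_concurrent_requests(2 * budget, FP16_BYTES_PER_TOKEN, 4096))   # 40: twice the GPU memory
```

Quantizing the cache, capping the context, and adding GPU memory all double capacity here, but each pays for it differently: accuracy risk, shorter history, or hardware cost.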

How Does KV Caching Differ from Prompt Caching?

KV caching and prompt caching both reduce redundant computation, but they operate at different layers of the inference stack and solve different performance problems.

In production systems, these techniques are complementary. Prompt caching reduces the cost and latency of processing repeated prompt prefixes, while KV caching accelerates token-by-token generation once decoding begins. Used together, they deliver faster TTFT, higher throughput, and lower serving costs than either approach alone.
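The prompt-caching side can be sketched as a lookup from token prefixes to previously computed KV state. This is a hypothetical toy: the `PrefixCache` class, token ids, and the placeholder string standing in for real KV tensors are all illustrative, and production engines typically match fixed-size token blocks by hash or use a radix tree rather than whole-prefix keys.

```python
from typing import Dict, List, Optional, Tuple


class PrefixCache:
    """Toy cross-request prompt cache mapping token prefixes to stored KV state."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[int, ...], object] = {}

    def put(self, tokens: List[int], kv_state: object) -> None:
        """Remember the KV state computed for exactly this token prefix."""
        self._store[tuple(tokens)] = kv_state

    def longest_match(self, tokens: List[int]) -> Tuple[int, Optional[object]]:
        """Return (matched_length, kv_state) for the longest cached prefix of tokens."""
        for n in range(len(tokens), 0, -1):
            state = self._store.get(tuple(tokens[:n]))
            if state is not None:
                return n, state
        return 0, None


# Request 1 computed KV state for a shared system prompt (token ids are made up).
cache = PrefixCache()
system_prompt = [101, 7, 42, 9]
cache.put(system_prompt, "kv-state-for-system-prompt")

# Request 2 starts with the same system prompt followed by a new user message.
request = system_prompt + [55, 56, 57]
matched, state = cache.longest_match(request)
print(f"reuse {matched} cached tokens, prefill only {len(request) - matched}")
```

Prompt caching shortens prefill for the second request (better TTFT); once decoding starts, the ordinary per-request KV cache takes over.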

When Does KV Caching Matter Most?

KV caching matters most when context keeps growing and the model would otherwise redo the same attention work again and again. That’s exactly how most AI agents behave in production.

Chatbots and Multi-Turn Conversations

Chatbots and multi-turn conversations see immediate throughput gains from KV caching because every turn builds on prior context. User messages, assistant responses, and system prompts accumulate over time. Without caching, generating each token of a new response would recompute key and value projections for the entire conversation history. KV caching avoids that repeated work, which is why conversational AI is economically viable at scale.

Agentic Workflows

Agentic workflows benefit even more, particularly in Time to First Token. Agents maintain a large shared context across reasoning steps, tool calls, and observations. When the serving stack keeps the cached keys and values for that shared prefix between steps (prefix caching), each step skips redundant prefill projections. As workflows grow longer and more iterative, caching becomes essential.

Long Context Windows

Long context windows make KV caching non-negotiable. At very large sequence lengths, the prefill phase alone can take several seconds, and without a cache every decode step would repeat much of that projection work. The cost then shifts to memory, which KV cache quantization reduces: storing the cache in FP8 instead of FP16 roughly halves its size, making long-context workloads feasible on practical hardware.
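The core idea of cache quantization can be illustrated with the simplest scheme we know of: symmetric absmax quantization of one vector to 4-bit integers sharing a single scale. This is a stand-in for illustration, not FP8 and not what any particular engine does; production kernels differ in format and scale granularity, and the cached values below are hypothetical.

```python
def quantize_int4(values):
    """Symmetric absmax quantization of floats to 4-bit ints in [-7, 7]."""
    scale = max(abs(v) for v in values) / 7 or 1.0  # fall back to 1.0 for all-zero input
    q = [max(-7, min(7, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    return [x * scale for x in q]


kv_row = [0.12, -0.80, 0.33, 0.05, -0.41, 0.77]  # hypothetical cached values
q, scale = quantize_int4(kv_row)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(kv_row, restored))
print(q)
print(f"max abs error: {max_err:.3f} (bound: {scale / 2:.3f})")
```

Each value now needs 4 bits plus one shared scale instead of 16 bits, and the rounding error stays within half a quantization step; that residual error is the accuracy risk that must be validated per model.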

Streaming Outputs

Streaming outputs depend on KV caching to keep latency predictable. When tokens are streamed as they are generated, users see the latency of every step directly. Without caching, the model would recompute key and value projections for the entire history on every token, creating visible delays between tokens. KV caching limits computation to the newly generated token, producing the smooth, continuous output users expect.

Production Systems with Concurrent Requests

Production systems handling concurrent requests require KV caching combined with intelligent memory management. Naive cache allocation leads to severe memory fragmentation that wastes GPU VRAM and limits concurrency. Modern systems treat the KV cache like virtual memory, using block-based allocation and paging to reduce waste. This should be implemented first, with techniques like prefix caching and quantization layered on afterward for additional gains.
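Block-based allocation can be sketched as a small allocator in the spirit of vLLM's PagedAttention; this is a simplified model, not vLLM's code. Memory is a pool of fixed-size blocks, each sequence holds a block table of possibly non-contiguous block ids, and a new block is taken only when the current one fills up. The block counts, block size, and sequence ids are hypothetical.

```python
from typing import Dict, List


class BlockAllocator:
    """Toy block-based KV cache allocator.

    Memory is modeled as `num_blocks` blocks, each holding `block_size`
    tokens' worth of keys and values.
    """

    def __init__(self, num_blocks: int, block_size: int):
        self.block_size = block_size
        self.free_blocks: List[int] = list(range(num_blocks))
        self.block_tables: Dict[str, List[int]] = {}
        self.lengths: Dict[str, int] = {}

    def append_token(self, seq_id: str) -> None:
        """Reserve room for one more token, taking a new block only when needed."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length % self.block_size == 0:  # no block yet, or the last one is full
            if not self.free_blocks:
                raise MemoryError("KV cache exhausted; request must wait or be preempted")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def free(self, seq_id: str) -> None:
        """Return all of a finished sequence's blocks to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


alloc = BlockAllocator(num_blocks=8, block_size=16)
for _ in range(20):
    alloc.append_token("a")  # 20 tokens -> 2 blocks
for _ in range(5):
    alloc.append_token("b")  # 5 tokens -> 1 block
print(len(alloc.block_tables["a"]), len(alloc.block_tables["b"]), len(alloc.free_blocks))  # 2 1 5
alloc.free("a")
print(len(alloc.free_blocks))  # 7
```

Because memory is never reserved for tokens that do not exist yet, each sequence wastes at most `block_size - 1` token slots, and freed blocks can be reused by any other request.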

What Really Matters for KV Caching in Production?

The primary engineering challenge now lies in memory management rather than cache implementation. Naive KV cache designs waste significant GPU memory through fragmentation, which limits throughput and concurrency. In production systems serving concurrent requests, cache efficiency directly defines achievable batch sizes and the number of requests a system can handle simultaneously.
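A rough illustration of that waste compares a naive design, which reserves a contiguous buffer sized for the maximum context per request, with block-based allocation using 16-token blocks. The live sequence lengths and the 8,192-token maximum are hypothetical.

```python
import math


def reserved_slots_contiguous(lengths, max_len):
    # Naive: every request reserves a contiguous buffer for the maximum length.
    return len(lengths) * max_len


def reserved_slots_blocked(lengths, block_size):
    # Block-based: each request holds only ceil(len / block_size) blocks.
    return sum(math.ceil(n / block_size) * block_size for n in lengths)


lengths = [300, 1200, 50, 4000]  # hypothetical live sequence lengths (tokens)
used = sum(lengths)
naive = reserved_slots_contiguous(lengths, max_len=8192)
blocked = reserved_slots_blocked(lengths, block_size=16)
print(f"naive utilization:   {used / naive:.1%}")    # 16.9%
print(f"blocked utilization: {used / blocked:.1%}")  # 99.7%
```

In this example most of the naive reservation sits idle, which is memory that could otherwise hold caches for additional concurrent requests.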

Airbyte's Agent Engine handles the data infrastructure that feeds your optimized inference pipeline. While KV caching reduces computational overhead during generation, agents still need fresh, permissioned context to reason over. Agent Engine provides governed connectors, automatic metadata extraction, and Change Data Capture (CDC) for sub-minute data freshness, so your agents always work with current information.

Talk to us to see how Airbyte Embedded delivers reliable, permission-aware data to production AI agents.

Frequently Asked Questions

Can you disable KV caching to save memory?

You can disable it in Hugging Face Transformers with `use_cache=False`, but this makes inference significantly slower. Instead of disabling, use quantization (FP8, INT4) to reduce memory substantially while maintaining performance.

How much GPU memory do you actually need for KV cache?

For Llama 2 7B, budget roughly 0.5 MB per token in FP16, so a single 4K context needs about 2 GB just for cache. Llama 3.1 70B has more layers but uses grouped-query attention with only 8 key-value heads, so it needs about 0.3 MB per token; a batch of 32 requests at 8K context requires roughly 80 GB of KV cache. If all 64 attention heads were cached without grouped-query attention, the same batch would need roughly 640 GB.
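Per-token KV cache size follows directly from the model shape: each layer stores one key and one value vector per KV head for every token. A minimal sketch of the arithmetic, assuming FP16 (2 bytes per element) and the published layer and head counts for each model; it ignores framework overhead and allocator padding.

```python
GiB = 1024 ** 3


def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, batch_size=1, bytes_per_elem=2):
    """2 tensors (K and V) per layer, each [batch, kv_heads, seq_len, head_dim]."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


# Llama 2 7B: 32 layers, 32 KV heads (no GQA), head_dim 128, FP16
print(kv_cache_bytes(32, 32, 128, seq_len=1))           # 524288 bytes (0.5 MiB) per token
print(kv_cache_bytes(32, 32, 128, seq_len=4096) / GiB)  # 2.0

# Llama 3.1 70B: 80 layers, 8 KV heads (GQA), head_dim 128, FP16
print(kv_cache_bytes(80, 8, 128, seq_len=8192, batch_size=32) / GiB)   # 80.0
# Same model shape if all 64 attention heads were cached (no GQA)
print(kv_cache_bytes(80, 64, 128, seq_len=8192, batch_size=32) / GiB)  # 640.0
```

Setting `bytes_per_elem=1` models an FP8 cache and halves every figure.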

Does KV caching work with quantized models?

Yes, and you can quantize the model and KV cache independently. TensorRT-LLM performs best when both use FP8. NVIDIA's NVFP4 (4-bit floating point) quantization stores cache in 4-bit format, reducing memory substantially versus FP8 with minimal accuracy loss.

What happens to KV cache between conversation turns?

For API providers, whether the KV cache persists between turns depends on the provider. Anthropic Claude, Google Cloud Vertex AI, and AWS Bedrock, among others, support prompt caching for cross-request reuse; without it, the cache is discarded when a request finishes. In self-hosted systems, modern inference frameworks automatically keep the KV cache in GPU memory while a single request generates tokens; reusing it across turns requires prefix caching.

