Large context windows have changed how teams build with language models. Tasks that once required careful chunking, retrieval logic, and multi-step orchestration can now be handled in a single request. Entire documents, long conversations, and accumulated agent state can stay in scope at once.

But this shift has also exposed a common misconception. Bigger context windows do not automatically produce better results. As inputs grow, models become slower, more expensive, and more prone to subtle reasoning failures. Important details can be missed, irrelevant information can dilute attention, and costs can spiral without clear gains in accuracy.

To use large context windows effectively, teams need to understand how models behave as context grows and how context quality affects outcomes. The real question is not how much a model can see, but how deliberately that information is selected, structured, and managed.

TL;DR

- Large context windows let models process entire documents, long conversations, and accumulated agent state in a single request. Tasks that once required chunking and multi-step orchestration can now be handled at once.

- Bigger context windows do not automatically produce better results. As inputs grow, models become slower, more expensive, and more prone to missing details buried in the middle of long prompts.

- Large context windows and RAG solve different problems. RAG filters large document collections before sending anything to the model; large windows enable deep reasoning over complete documents after retrieval.

- Context quality matters more than context size. Well-selected, well-placed information consistently outperforms large, noisy inputs. Design systems to write, select, compress, and isolate context deliberately.

What Is a Context Window in an LLM?

A context window is the maximum amount of text, measured in tokens, that a large language model can process in a single request. It includes the prompt, conversation history, retrieved documents, and the model’s response. Once you hit the limit, older content has to be truncated or dropped, or the task must be split across multiple calls.
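To make the accounting concrete, here is a minimal sketch of a budget check. The `ContextBudget` class and all of the token counts are hypothetical and chosen only for illustration; the point is that the reserved response space counts against the same limit as the input.

```python
from dataclasses import dataclass


@dataclass
class ContextBudget:
    """Token counts for each part of one request (hypothetical values)."""
    window_limit: int         # the model's maximum context window, in tokens
    system_prompt: int
    history: int
    retrieved_docs: int
    reserved_for_output: int  # space kept free for the model's response

    def used(self) -> int:
        return (self.system_prompt + self.history
                + self.retrieved_docs + self.reserved_for_output)

    def remaining(self) -> int:
        return self.window_limit - self.used()

    def fits(self) -> bool:
        return self.remaining() >= 0


budget = ContextBudget(
    window_limit=8_000,
    system_prompt=500,
    history=3_000,
    retrieved_docs=4_000,
    reserved_for_output=1_000,
)
print(budget.used(), budget.remaining(), budget.fits())
```

In this example the request is 500 tokens over the limit, so something has to be truncated, dropped, or moved to a separate call.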

Tokens are not the same as words. In English, one word averages about 1.33 tokens. Longer or compound words may be split into multiple tokens, while shorter words may be a single token. As a rule of thumb, 10,000 English words equal roughly 13,300 tokens. Languages like Spanish and French typically use slightly more tokens per word than English.
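The rule of thumb above can be turned into a quick estimator. This is a rough sketch only: the function name is made up for this example, the 1.33 ratio is the English average quoted above, and a real tokenizer will give different counts for any specific text.

```python
TOKENS_PER_WORD_EN = 1.33  # average quoted above, not a tokenizer guarantee


def estimate_tokens(text: str, tokens_per_word: float = TOKENS_PER_WORD_EN) -> int:
    """Estimate token count from a whitespace word count (approximation only)."""
    word_count = len(text.split())
    return round(word_count * tokens_per_word)


sample = "word " * 10_000            # a stand-in for a 10,000-word document
print(estimate_tokens(sample))       # about 13,300 under the 1.33 assumption
```

For budgeting decisions that matter, count tokens with the tokenizer of the model you actually call; an estimate like this is only useful for early sizing.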

This limit exists because of how transformer models work. Standard self-attention compares every token with every other token, so compute and memory for attention grow quadratically, O(n²), with the number of tokens n. Doubling the context length roughly quadruples the attention cost. That’s why very large context windows are expensive to run, even on modern hardware.
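The quadratic growth is easy to see by counting token-to-token comparisons. The sketch below assumes plain full self-attention with no optimizations, and the helper name is hypothetical.

```python
def attention_pairs(n_tokens: int) -> int:
    """Token-to-token comparisons in full self-attention: n * n."""
    return n_tokens * n_tokens


base = attention_pairs(4_000)
for n in (4_000, 8_000, 16_000):
    pairs = attention_pairs(n)
    # Each doubling of n multiplies the comparison count by four.
    print(f"{n:>6} tokens -> {pairs:>12,} comparisons ({pairs // base}x)")
```

Going from 4,000 to 16,000 tokens is a 4x increase in length but a 16x increase in comparisons under this assumption.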

What Does a Large Context Window Change?

When context windows grow from 4K to 100K+ tokens, many tasks no longer require chunking. You can pass an entire contract, research paper, or technical spec in a single request. The model sees the full document at once, so cross-references and long-range relationships are preserved instead of being split across isolated chunks.

Long conversations can retain full history without aggressive summarization. A support agent can reference a configuration detail mentioned 45 minutes earlier without relying on a compressed summary that may drop exact values or constraints.

For code analysis, large context windows allow models to inspect sizable codebases in one pass. This enables tracking dependencies across files and catching bugs that only manifest when multiple modules are considered together.

What Are Large Context Windows Used For?

Here are some common use cases for large context windows:

Full Document Analysis

Large context windows let models process entire documents in one pass. This is useful for long earnings call transcripts, research papers, legal contracts, or medical records, where understanding relationships across sections matters. Instead of comparing clause 5 and clause 47 through fragmented chunks, the model can reason over both at the same time.

Extended Conversation History

In customer support or troubleshooting, long context windows allow the full conversation to remain available. When a user says “that didn’t work either,” the AI agent can reference an hour of prior steps and specific configuration details without relying on lossy summaries.

Multi-File Code Analysis

Large windows make it possible to analyze multiple files or entire modules together. This helps with debugging issues that span several files, tracing dependencies, and generating documentation that reflects how components interact, not just how they work in isolation.

Multi-Document Reasoning with RAG

Retrieval-Augmented Generation (RAG) can narrow thousands of documents down to a small set. Large context windows then allow those full documents to be analyzed without chunking, preserving structure and cross-document relationships during synthesis.

Agent Workflows with Accumulated Context

Agents often collect context over time, such as error logs, documentation, Slack conversations, and database query results. Large context windows allow all of this information to stay in scope during reasoning. Smaller windows force compression or dropping sources, which can remove the detail that connects symptoms to root causes.

What Are the Trade-Offs of Large Context Windows?

Large context windows come with real trade-offs. While they expand what models can process at once, they introduce cost, latency, and reliability considerations that teams have to manage carefully.

- Higher costs at scale: Token pricing is typically linear in token count, so cost grows in direct proportion to input size and large windows add up quickly. Processing very large inputs repeatedly can become expensive, especially for models with higher per-token rates. Costs vary widely across providers, which makes model choice and usage patterns a real budget consideration.

- Latency increases with context size: Larger contexts take longer to process. Very large inputs can introduce noticeable delays before the model starts generating output, which matters for interactive or real-time use cases.

- Uneven performance across long contexts: Models tend to perform best at the beginning and end of the context and worse in the middle. This U-shaped behavior means important details buried deep in a long prompt are more likely to be missed.

- Attention dilution: More context is not always better context. When only a small portion of a large input is relevant, the rest adds noise. The model spreads attention across everything, which can reduce accuracy and cause it to focus on irrelevant details.

- Operational complexity: Using large context windows effectively requires careful prompt design and context management. Techniques like prompt caching can reduce costs, but they add planning overhead and force teams to think about what truly belongs in context.
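To put the cost trade-off in numbers, here is a small sketch of linear token pricing. The per-million-token rates and request volumes are made up for illustration and do not reflect any provider's actual prices.

```python
def request_cost(
    input_tokens: int,
    output_tokens: int,
    usd_per_million_input: float,
    usd_per_million_output: float,
) -> float:
    """Cost of one request under linear per-token pricing."""
    return (
        input_tokens * usd_per_million_input
        + output_tokens * usd_per_million_output
    ) / 1_000_000


# Hypothetical rates: $3 per million input tokens, $15 per million output tokens.
full_context = request_cost(100_000, 1_000, 3.0, 15.0)
curated_context = request_cost(10_000, 1_000, 3.0, 15.0)

requests_per_day = 10_000  # hypothetical traffic
print(f"full:    ${full_context * requests_per_day:,.2f} per day")
print(f"curated: ${curated_context * requests_per_day:,.2f} per day")
```

Under these assumed numbers, sending the full 100K-token input on every request costs seven times as much per day as a curated 10K-token input.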

How Do Large Context Windows Compare to RAG?

Large context windows and Retrieval-Augmented Generation (RAG) solve different parts of the same problem, and most production systems use them together rather than choosing one over the other.

How Should Teams Design Systems Around Context Limits?

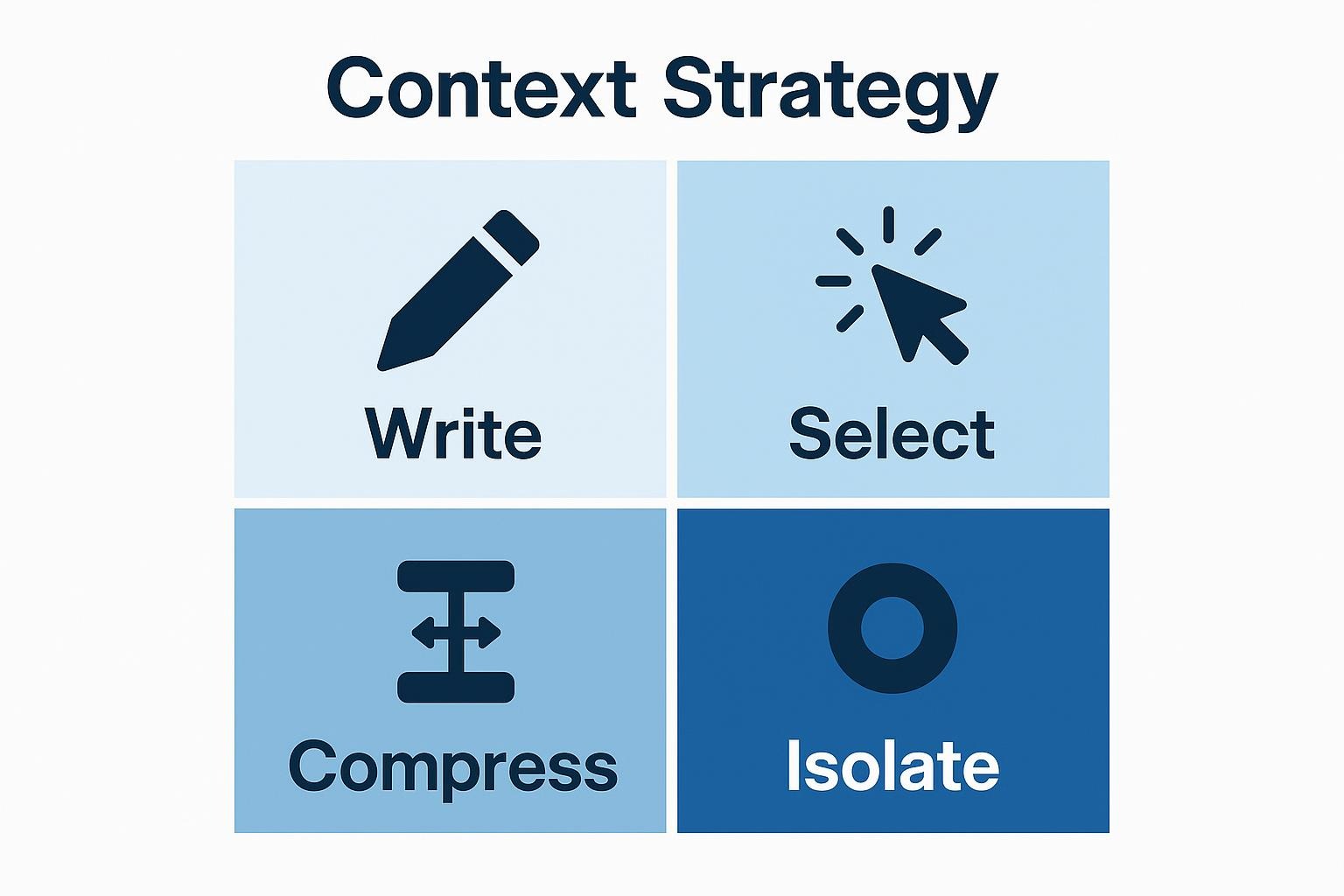

Design systems around four strategies: Write (capture what matters), Select (filter to query-relevant information), Compress (reduce tokens while maintaining value), and Isolate (separate contexts for different components).

1. Write: Capture Only What the Model Needs

Focus on writing context intentionally. System instructions, task goals, and constraints should be explicit and stable. Avoid carrying forward raw logs, verbose tool outputs, or background information that does not affect reasoning.

2. Select: Filter Context Before It Enters the Window

Prioritize query-relevant information through selective context injection. Use retrieval to pre-filter large collections, rank results by relevance, and include only documents above a defined threshold. Prefer document-level retrieval over chunk-level retrieval to preserve structure and reduce fragmentation.
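A selection step along these lines might look like the sketch below. The `ScoredDocument` type, the relevance scores, and the threshold are all assumptions for this example; in practice the scores would come from whatever retriever or reranker you use.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScoredDocument:
    doc_id: str
    relevance: float   # score from a hypothetical retriever, higher is better
    token_count: int


def select_documents(
    candidates: List[ScoredDocument],
    min_relevance: float,
    token_budget: int,
) -> List[ScoredDocument]:
    """Keep whole documents above the threshold, best first, within the budget."""
    ranked = sorted(candidates, key=lambda d: d.relevance, reverse=True)
    selected: List[ScoredDocument] = []
    used = 0
    for doc in ranked:
        if doc.relevance < min_relevance:
            break  # everything after this point scores lower still
        if used + doc.token_count > token_budget:
            continue  # skip documents that do not fit, try smaller ones
        selected.append(doc)
        used += doc.token_count
    return selected


candidates = [
    ScoredDocument("a", 0.90, 500),
    ScoredDocument("b", 0.40, 300),
    ScoredDocument("c", 0.75, 2_000),
    ScoredDocument("d", 0.70, 400),
]
picked = select_documents(candidates, min_relevance=0.6, token_budget=1_000)
print([d.doc_id for d in picked])
```

Documents are kept or dropped whole rather than split, which matches the preference for document-level retrieval described above.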

3. Compress: Reduce Tokens Without Losing Meaning

Apply semantic chunking to preserve meaning across boundaries, and use document-level retrieval to eliminate chunking artifacts where possible. As you approach context limits, compress completed subtasks into summaries. Use reversible compaction when full fidelity may be needed later, and reserve lossy summarization for when context usage crosses a defined threshold.
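One way to read "compress completed subtasks near the limit" is sketched below. Everything here is illustrative: the `Subtask` type, the word-based token counter, and the placeholder summarizer are assumptions, and keeping the originals in an archive is just one simple interpretation of reversible compaction.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Subtask:
    name: str
    transcript: str   # text currently held in context for this subtask
    completed: bool


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def placeholder_summarize(text: str, max_words: int = 5) -> str:
    # Placeholder only: keeps the first few words. A real system would call a summarizer.
    return " ".join(text.split()[:max_words])


def compact_completed(
    subtasks: List[Subtask],
    token_limit: int,
    threshold: float = 0.8,
    summarize: Callable[[str], str] = placeholder_summarize,
) -> Dict[str, str]:
    """Summarize completed subtasks in place, oldest first, until usage is at or
    below threshold * token_limit. Returns the original transcripts by name so
    the compaction can be undone later."""
    archive: Dict[str, str] = {}
    total = sum(count_tokens(s.transcript) for s in subtasks)
    for task in subtasks:
        if total <= threshold * token_limit:
            break
        if not task.completed:
            continue  # never compress the subtask that is still active
        archive[task.name] = task.transcript
        summary = summarize(task.transcript)
        total += count_tokens(summary) - count_tokens(task.transcript)
        task.transcript = summary
    return archive


tasks = [
    Subtask("fetch_logs", "log " * 50, completed=True),
    Subtask("parse_config", "cfg " * 50, completed=True),
    Subtask("diagnose", "step " * 20, completed=False),
]
archive = compact_completed(tasks, token_limit=100)
print(sorted(archive), [count_tokens(t.transcript) for t in tasks])
```

Only as much is compressed as is needed to get back under the threshold, so later completed subtasks keep their full detail until space actually runs short.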

4. Isolate: Separate Contexts That Should Not Compete

Isolate different concerns into separate contexts. Multi-turn conversations may require head truncation (dropping the oldest turns) when focus shifts, or middle truncation (dropping older middle turns) to preserve system instructions and recent exchanges. Agent workflows should isolate subtasks so completed steps do not crowd active reasoning.
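Middle truncation can be sketched in a few lines. The message shape used here (dictionaries with `role` and `content` keys) is an assumption borrowed from common chat formats, not a specific provider's API, and the function name is hypothetical.

```python
from typing import Dict, List

Message = Dict[str, str]


def truncate_middle(messages: List[Message], keep_recent: int) -> List[Message]:
    """Keep all system messages plus the last `keep_recent` other messages.

    Assumes system messages come first in the conversation, so moving them
    to the front does not change their order relative to the kept turns.
    """
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]
    # Guard against keep_recent == 0, because others[-0:] would return everything.
    recent = others[-keep_recent:] if keep_recent > 0 else []
    return system + recent


conversation: List[Message] = [{"role": "system", "content": "You are a support agent."}]
for i in range(1, 4):
    conversation.append({"role": "user", "content": f"question {i}"})
    conversation.append({"role": "assistant", "content": f"answer {i}"})

trimmed = truncate_middle(conversation, keep_recent=2)
print([m["content"] for m in trimmed])
```

The system instructions and the most recent exchange survive, while the older middle turns are dropped. A production version would likely truncate by token count rather than message count.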

Monitor how context size affects output quality. If accuracy degrades as conversations or documents grow, you are likely hitting the “lost in the middle” problem. The solution is not larger windows, but better placement, filtering, and isolation of information.
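A simple way to monitor this is to bucket evaluation results by context size and compare accuracy across buckets. The sketch below assumes you already log, for each evaluated request, the context size in tokens and whether the answer was judged correct; the records shown are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_context_size(
    records: Iterable[Tuple[int, bool]],
    bucket_size: int = 8_000,
) -> Dict[int, float]:
    """Map each bucket's starting token count to the accuracy within that bucket."""
    totals = defaultdict(lambda: [0, 0])  # bucket start -> [correct, total]
    for context_tokens, was_correct in records:
        bucket = (context_tokens // bucket_size) * bucket_size
        totals[bucket][0] += int(was_correct)
        totals[bucket][1] += 1
    return {b: correct / total for b, (correct, total) in sorted(totals.items())}


# Hypothetical evaluation log: (context size in tokens, answer judged correct)
eval_log = [
    (1_000, True),
    (2_000, True),
    (9_000, True),
    (12_000, False),
    (20_000, False),
]
report = accuracy_by_context_size(eval_log)
print(report)
```

A downward trend across buckets is a signal to revisit placement, filtering, and isolation before reaching for a larger window.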

What's the Right Way to Think About Context Windows?

Large context windows are a capability to use deliberately, not a number to maximize. What matters most is context quality. Well-selected, well-placed information consistently outperforms large, noisy inputs, because models fail more often from poor context management than from insufficient context size.

The real challenge is context engineering. Teams need infrastructure that controls what gets captured, filtered, compressed, and prioritized over time. Airbyte’s Agent Engine handles this layer as production infrastructure, with permission-aware retrieval, automated chunking and metadata extraction, and fresh data delivery through Change Data Capture (CDC). That way, agents reason over accurate, current, and governed context instead of brittle prompt assemblies.

Talk to us to see how Airbyte Embedded supports reliable context engineering for production AI agents.

Frequently Asked Questions

How large do context windows need to be in practice?

Most production use cases work well within 8K–32K tokens when context is curated properly. Larger windows are useful for full-document analysis, but they are not required for most retrieval or conversational tasks.

Do larger context windows eliminate the need for RAG?

No. Large context windows and Retrieval-Augmented Generation (RAG) solve different problems. RAG is still the most cost-effective way to filter large document collections before deeper reasoning.

Why does model performance drop with very long contexts?

Models tend to perform best at the beginning and end of long inputs. As context grows, attention spreads across more tokens, increasing the risk that important information in the middle is missed.

Is increasing the context window the easiest way to improve accuracy?

Usually not. Accuracy improves more reliably through better context selection, compression, and retrieval than by simply increasing window size. Poorly managed large contexts often perform worse than smaller, well-curated ones.

