MCP (Model Context Protocol) servers are processes that expose tools, data sources, and actions to AI agents through a standardized protocol. Think of an MCP server as a translator that sits between your agent and the systems it needs to access. The server handles authentication, formats responses, and manages permissions, so the agent can focus on reasoning rather than on integration details.

These servers solve a coordination problem that arises in agent development. Your agent needs to interact with databases, SaaS tools, file systems, and APIs, but each of these systems speaks a different language.

MCP servers provide a standard interface, letting agents discover and use tools without needing hardcoded knowledge of how each system works under the hood.

TL;DR

- MCP servers are processes that expose tools, data sources, and actions to AI agents through a standardized protocol. They handle authentication, format responses, and manage permissions so agents can focus on reasoning rather than integration details.

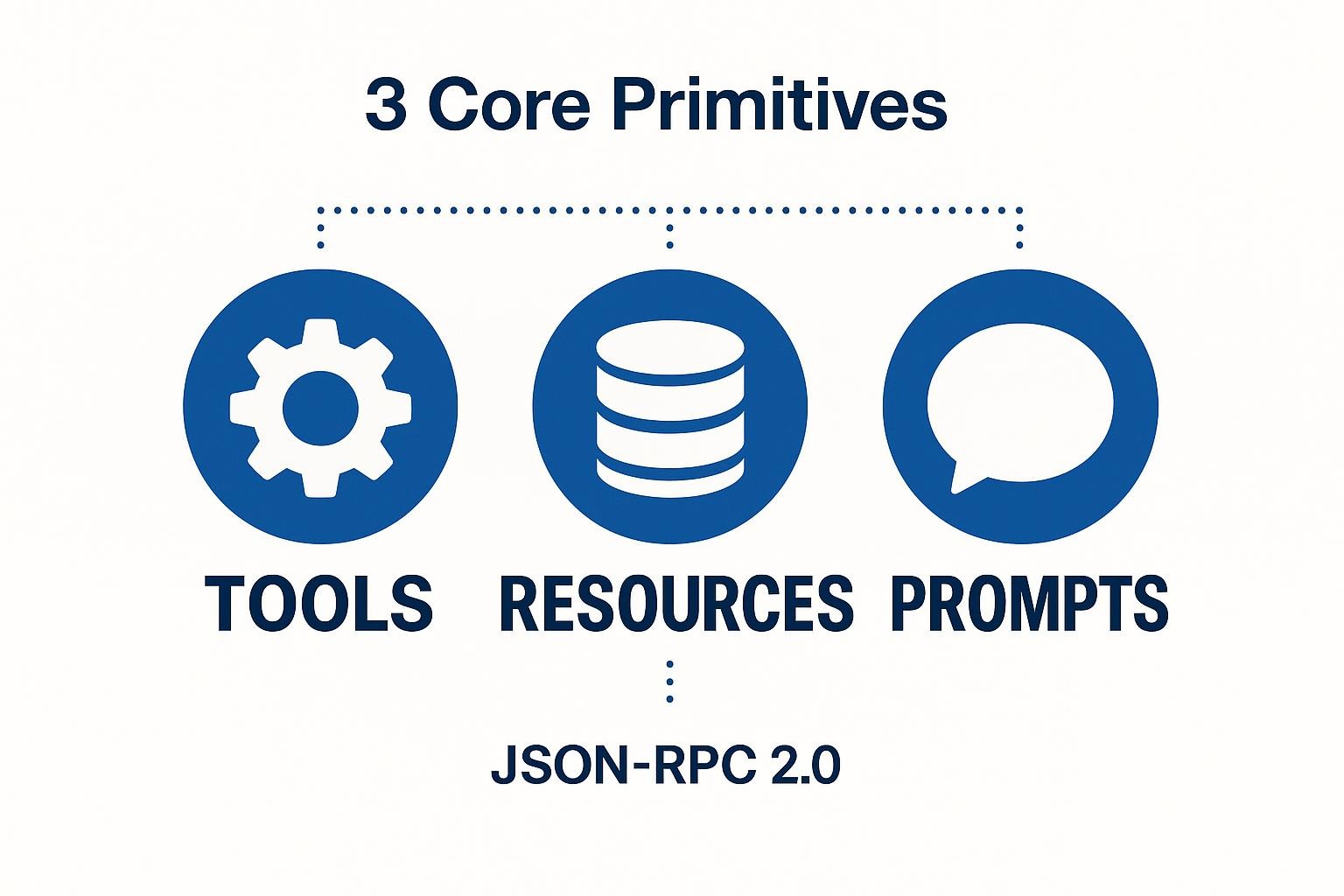

- Servers expose three capability types: tools for actions, resources for readable data, and prompts for reusable templates. Agents discover these capabilities through a schema and make requests without hardcoded knowledge of how each system works.

- MCP servers solve the inconsistency problem across APIs. Instead of writing custom integration code for OAuth, pagination, and error handling per system, agents interact through a uniform protocol while servers handle system-specific logic.

- Tradeoffs include operational overhead and added latency. Each server is a long-running service that needs deployment and monitoring. For agents with minimal integration needs, direct API calls may be simpler.

What Are MCP Servers?

MCP servers are processes that implement the Model Context Protocol, which defines how AI agents discover and use external capabilities. When an agent connects, the server exposes its available capabilities through a schema. The agent uses that schema to make requests, and the server executes those requests against underlying systems and returns structured responses.

MCP servers expose three types of capabilities:

- Tools: Callable functions for actions like querying a database, sending messages, or creating records.

- Resources: Readable data sources such as documents, configuration files, or API endpoints.

- Prompts: Reusable templates that guide the agent on how to use the server’s capabilities correctly.
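To make the three capability types concrete, here is a minimal Python sketch of how a server might describe them. The class names, the `create_record` tool, the file URI, and the `summarize_account` prompt are all hypothetical and chosen for illustration; they are not taken from any real server.

```python
from dataclasses import dataclass, field


@dataclass
class Tool:
    name: str
    description: str
    input_schema: dict  # JSON Schema describing the tool's arguments


@dataclass
class Resource:
    uri: str
    name: str


@dataclass
class Prompt:
    name: str
    description: str


@dataclass
class Capabilities:
    tools: list = field(default_factory=list)
    resources: list = field(default_factory=list)
    prompts: list = field(default_factory=list)


# Hypothetical capabilities for an illustrative CRM-style server.
capabilities = Capabilities(
    tools=[
        Tool(
            name="create_record",
            description="Create a CRM record",
            input_schema={
                "type": "object",
                "properties": {"title": {"type": "string"}},
                "required": ["title"],
            },
        )
    ],
    resources=[Resource(uri="file:///docs/onboarding.md", name="Onboarding guide")],
    prompts=[Prompt(name="summarize_account", description="Template for account summaries")],
)
```

An agent that receives a description like this knows which actions exist and what arguments they take without knowing anything about the CRM behind them.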

This approach avoids the limits of prompt-only integrations, where all context must be provided upfront. Prompt-based context fills quickly, becomes outdated, and cannot trigger real actions. MCP servers let agents request only the information they need, when they need it, and perform operations on demand. Context stays focused and current.

MCP servers also remove tight coupling between agents and external systems. Instead of hardcoding integrations into agent code, agents interact through the MCP interface while the server handles system-specific logic. When APIs change or new systems are added, updates happen in the server layer, not inside the agent itself.

Why Do AI Agents Need MCP Servers?

Agents face several challenges when trying to access external data and systems directly:

- APIs are inconsistent: One service uses OAuth 2.0 with refresh tokens, another wants API keys in headers, and a third expects signed requests. Data comes back as JSON, XML, CSV, or some proprietary format. Rate limits, pagination, and error-handling conventions vary by integration. Building an agent that handles all this variation means writing a lot of code that has nothing to do with what your agent actually does.

- Prompts can't hold everything: Context windows have limits. A 200,000-token window sounds generous until you try loading a meaningful slice of your company's documentation, customer records, and operational data. Even when data fits, it goes stale immediately. That customer record from two hours ago might not reflect reality anymore. The docs changed since you last synced. Prompt-based context gives your agent a snapshot frozen in time, and it only gets more outdated as the conversation continues.

- MCP servers standardize access: Your agent doesn't need to know whether it's talking to Postgres, Notion, or a custom internal API. It makes MCP requests, and the server handles the translation. The agent can pull fresh data when needed rather than relying on pre-loaded snapshots. When it needs to take action, it invokes a tool through the same protocol instead of requiring custom code for each system.
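The inconsistency described above can be sketched in a few lines of Python. The three helpers below build request headers for the three authentication styles mentioned; the header names `X-API-Key` and `X-Signature` are assumptions for illustration, since real services each define their own. In an MCP setup, logic like this would live inside the server rather than in the agent.

```python
import hashlib
import hmac


def bearer_headers(access_token: str) -> dict:
    # OAuth 2.0 style: the access token travels as a bearer credential.
    return {"Authorization": f"Bearer {access_token}"}


def api_key_headers(api_key: str) -> dict:
    # API-key style: header name is hypothetical and varies by service.
    return {"X-API-Key": api_key}


def signed_headers(secret: bytes, body: bytes) -> dict:
    # Signed-request style: an HMAC-SHA256 digest of the body.
    signature = hmac.new(secret, body, hashlib.sha256).hexdigest()
    return {"X-Signature": signature}


# One lookup table lets the server pick the right scheme per upstream system.
AUTH_SCHEMES = {
    "oauth": bearer_headers,
    "api_key": api_key_headers,
    "signed": signed_headers,
}
```

The agent never sees which scheme applies; it only sees the uniform tool interface the server exposes.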

How Do MCP Servers Work?

Once an agent connects to an MCP server, every interaction follows the same structured lifecycle.

1. The Agent Discovers Available Capabilities

Your agent connects to an MCP server and asks what it can do. The server responds with a schema describing available tools, resources, and prompts. The agent stores this schema and uses it throughout the conversation to figure out what operations are possible.
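A rough sketch of the discovery step follows. MCP messages are JSON-RPC 2.0, and the list methods used here (`tools/list`, `resources/list`, `prompts/list`) follow the specification as we understand it. The `FakeServer` class and its catalog are hypothetical in-memory stand-ins, and the sketch omits the initialization handshake and the transport that a real session would need.

```python
from itertools import count

_ids = count(1)


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request message."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


class FakeServer:
    """Hypothetical in-memory stand-in for a real MCP server."""

    CATALOG = {
        "tools/list": {"tools": [{"name": "query_db", "description": "Run a read-only query"}]},
        "resources/list": {"resources": [{"uri": "file:///README.md", "name": "README"}]},
        "prompts/list": {"prompts": [{"name": "explain_schema"}]},
    }

    def handle(self, request):
        result = self.CATALOG.get(request["method"])
        if result is None:
            # -32601 is the standard JSON-RPC "method not found" code.
            error = {"code": -32601, "message": "Method not found"}
            return {"jsonrpc": "2.0", "id": request["id"], "error": error}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def discover(server):
    """Ask the server for each capability list and merge the answers."""
    schema = {}
    for method in ("tools/list", "resources/list", "prompts/list"):
        response = server.handle(make_request(method))
        schema.update(response["result"])
    return schema


schema = discover(FakeServer())
```

After discovery, `schema` holds the tool, resource, and prompt descriptions the agent can consult for the rest of the conversation.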

2. The Agent Makes Requests Through the Protocol

When the agent needs to interact with an external system, it builds an MCP request specifying the tool or resource and any required parameters. The MCP server receives this, validates it against the schema, and executes the corresponding operation. That might mean running a SQL query, calling a REST API, reading a file, or triggering a webhook. The server formats the response according to the MCP spec and sends it back.
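The validate-then-execute flow can be sketched as a small dispatcher. This is a simplified illustration, not a real server: the `add_numbers` tool is hypothetical, validation is a hand-rolled required-argument and type check rather than full JSON Schema validation, and the result shape (a `content` list plus an `isError` flag) is loosely modeled on MCP tool results.

```python
def _error(message):
    return {"isError": True, "content": [{"type": "text", "text": message}]}


# Hypothetical tool registry: required argument types plus a handler.
TOOLS = {
    "add_numbers": {
        "required": {"a": (int, float), "b": (int, float)},
        "handler": lambda args: args["a"] + args["b"],
    }
}


def call_tool(name, arguments):
    """Validate a tool call against the registry, then execute it."""
    tool = TOOLS.get(name)
    if tool is None:
        return _error(f"Unknown tool: {name}")

    for key, expected_types in tool["required"].items():
        if key not in arguments:
            return _error(f"Missing argument: {key}")
        if not isinstance(arguments[key], expected_types):
            return _error(f"Wrong type for argument: {key}")

    result = tool["handler"](arguments)
    return {"isError": False, "content": [{"type": "text", "text": str(result)}]}
```

Rejecting malformed calls before they reach the underlying system keeps bad agent output from turning into bad queries or API calls.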

3. The Server Handles Authentication and Normalization

MCP servers manage authentication with external systems, keeping credentials fresh and refreshing tokens as needed. They normalize data formats so agents get consistent structures regardless of where the data came from. These servers also handle errors, translating system-specific failures into responses the agent can understand and recover from.
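As a minimal sketch of both responsibilities, the Python below caches an access token and refreshes it shortly before expiry, then maps exceptions onto one uniform error shape. `TokenManager`, the `fetch_token` callable, the 30-second safety margin, and the error codes are all assumptions made for illustration.

```python
import time


class TokenManager:
    """Caches a token and refreshes it shortly before it expires."""

    def __init__(self, fetch_token, clock=time.monotonic, skew_seconds=30):
        self._fetch_token = fetch_token  # hypothetical: returns (token, lifetime_seconds)
        self._clock = clock
        self._skew = skew_seconds
        self._token = None
        self._expires_at = 0.0

    def get(self):
        # Refresh when there is no token yet or expiry is within the safety margin.
        if self._token is None or self._clock() >= self._expires_at - self._skew:
            token, lifetime = self._fetch_token()
            self._token = token
            self._expires_at = self._clock() + lifetime
        return self._token


def normalize_error(exc):
    """Translate system-specific failures into one shape the agent can act on."""
    if isinstance(exc, TimeoutError):
        return {"code": "upstream_timeout", "retryable": True, "message": str(exc)}
    if isinstance(exc, PermissionError):
        return {"code": "forbidden", "retryable": False, "message": str(exc)}
    return {"code": "upstream_error", "retryable": False, "message": str(exc)}
```

The `retryable` flag is the useful part for an agent: it can retry a timeout but should stop and report a permission failure.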

4. The Server Enforces Permissions

Before returning any data, the server checks whether the current user or session can access requested resources. This happens at the infrastructure layer, not in the agent's reasoning. The agent simply cannot access data the server doesn't return.
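A small sketch of what server-side enforcement means in practice: the filter runs before anything is returned, so restricted rows never enter the agent's context. The records, the `owner_team` field, and the team-based session model are hypothetical.

```python
# Hypothetical data set held behind the server.
RECORDS = [
    {"id": 1, "owner_team": "sales", "body": "Q3 pipeline"},
    {"id": 2, "owner_team": "hr", "body": "Salary bands"},
]


def read_records(session_teams):
    """Return only the records the current session is allowed to see."""
    return [record for record in RECORDS if record["owner_team"] in session_teams]
```

A session scoped to the sales team gets record 1 only. No prompt wording can change that, because the check happens outside the model.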

5. The Architecture Decouples Agents from Systems

Your agent framework talks to MCP servers using the protocol spec. MCP servers talk to databases, APIs, and file systems using whatever methods those systems require. This layered approach means you can swap out underlying systems without touching agent code, add new capabilities by deploying new servers, and update auth logic in one place rather than scattered throughout your codebase.
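The decoupling can be illustrated with a sketch in which the agent-side function stays identical while the backing system changes. `PostgresBackend` and `RestBackend` are hypothetical stand-ins that return canned data instead of talking to a real database or API.

```python
from typing import Protocol


class Backend(Protocol):
    def fetch_customer(self, customer_id: str) -> dict: ...


class PostgresBackend:
    """Hypothetical: a real version would run a SQL query."""

    def fetch_customer(self, customer_id: str) -> dict:
        return {"id": customer_id, "name": "Ada", "source": "postgres"}


class RestBackend:
    """Hypothetical: a real version would call a REST API."""

    def fetch_customer(self, customer_id: str) -> dict:
        return {"id": customer_id, "name": "Ada", "source": "rest"}


class CustomerServer:
    """Exposes one tool; the backend behind it is swappable."""

    def __init__(self, backend: Backend):
        self._backend = backend

    def call_tool(self, name: str, arguments: dict) -> dict:
        if name != "get_customer":
            raise ValueError(f"Unknown tool: {name}")
        return self._backend.fetch_customer(arguments["customer_id"])


def agent_lookup(server: CustomerServer, customer_id: str) -> str:
    # Agent-side code: it knows the tool name, not the storage system.
    return server.call_tool("get_customer", {"customer_id": customer_id})["name"]
```

Swapping `PostgresBackend` for `RestBackend` changes nothing in `agent_lookup`, which is the property the layered design is meant to provide.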

What Can MCP Servers Connect AI Agents To?

MCP servers can expose a wide range of systems and data sources to your agents:

- SaaS tools: An MCP server for Notion can expose pages, databases, and blocks as resources while providing tools to create, update, and search content. A Slack server lets agents read channels, post messages, and react to threads. Calendar servers expose events and enable scheduling.

- Databases and data pipelines: An MCP server wrapping Postgres can expose tables as queryable resources and provide tools for filtered reads or writes. Pipeline servers might let agents check sync status, trigger refreshes, or read from staging tables.

- Files and unstructured content: MCP servers for document systems expose individual files, provide search across content, and return text or structured extractions. A SharePoint server might offer folder browsing, document retrieval, and metadata queries. A local file system server exposes directory structures and file contents for agents running in development environments.

- Operational tools: A deployment server might let agents check service health, trigger rollbacks, or scale resources. A ticketing server could enable agents to create, update, and resolve issues.

How Are MCP Servers Used in Real AI Agent Workflows?

In practice, MCP servers show up in various agent workflows:

Local Development Environments

This is where most people start. IDEs (Integrated Development Environments) such as Cursor and assistants like Claude Desktop support MCP servers that connect to local file systems, Git repositories, and development databases. Engineers use these to let coding assistants read project context, understand codebases, and run development operations without constant copy-paste.

Internal Enterprise Agents

Enterprise agents use MCP servers to access organizational data securely. An employee assistant might connect through servers for HR systems, knowledge bases, and communication tools. The servers authenticate against corporate identity providers, enforce role-based access, and make sure the agent only sees data the employee is actually allowed to see.

Customer-Facing Applications

Customer-facing apps use MCP servers to let end-user agents access customer-owned data. A support agent might connect to the customer's ticketing system, documentation, and product data through servers the customer has authorized. The servers scope access to what each customer has permitted, preventing data leakage between tenants while enabling personalized experiences.

Multi-Agent Systems

Multiple specialized agents can coordinate through shared MCP servers. When agents work together on a task, they might share access to a common data layer exposed through MCP. A research agent gathers information and stores findings through an MCP server. A synthesis agent reads those findings through the same server. The protocol provides a common interface without requiring agents to understand each other's internal workings.
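The research-and-synthesis pattern might look like the sketch below. Everything here is hypothetical: `FindingsServer` is an in-memory stand-in for a shared MCP server, and the tool names `store_finding` and `list_findings` are invented for the example.

```python
class FindingsServer:
    """Hypothetical shared server that both agents talk to."""

    def __init__(self):
        self._findings = []

    def call_tool(self, name, arguments):
        if name == "store_finding":
            self._findings.append({"topic": arguments["topic"], "text": arguments["text"]})
            return {"stored": len(self._findings)}
        if name == "list_findings":
            matches = [f for f in self._findings if f["topic"] == arguments["topic"]]
            return {"findings": matches}
        raise ValueError(f"Unknown tool: {name}")


def research_agent(server, topic, notes):
    # Writes findings through the shared interface.
    for text in notes:
        server.call_tool("store_finding", {"topic": topic, "text": text})


def synthesis_agent(server, topic):
    # Reads the same findings back without knowing who wrote them.
    findings = server.call_tool("list_findings", {"topic": topic})["findings"]
    return " ".join(f["text"] for f in findings)
```

Neither agent imports or calls the other. The only contract between them is the pair of tools on the shared server.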

What Are the Tradeoffs and Limitations of MCP Servers?

MCP servers solve real integration problems, but they also introduce tradeoffs that matter at the architecture level.

- Operational overhead: Each MCP server is a long-running service that needs to be deployed, monitored, and kept healthy. As the number of integrations grows, so does the surface area you have to operate. You also need to make decisions about where these servers run and how they are secured. For small agents with minimal integration needs, this overhead can outweigh the benefits.

- Added latency: Every MCP tool invocation adds an extra hop before the underlying system is reached: a network round trip for a remote server, or an inter-process call for one running locally. When agents gather context from multiple sources, these hops can compound and slow responses. You can reduce the impact with caching or parallel requests, but that shifts complexity back into the system design. Poorly structured call patterns can quickly become performance bottlenecks.

- Not always necessary: MCP servers are not a requirement for every agent. If an agent only reads from a single data source and never takes external actions, a direct connection is often simpler and easier to maintain.
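To illustrate the latency tradeoff and the parallel-request mitigation mentioned above, here is a sketch that simulates each server call with a fixed delay. The `call_server` function and the 50 ms delay are assumptions standing in for real round trips.

```python
import asyncio
import time


async def call_server(name, delay=0.05):
    # Simulated hop to a server; a real call would do I/O here.
    await asyncio.sleep(delay)
    return f"{name}: ok"


async def gather_sequential(names):
    # Each call waits for the previous one, so delays add up.
    return [await call_server(name) for name in names]


async def gather_parallel(names):
    # Calls run concurrently; asyncio.gather preserves input order.
    return list(await asyncio.gather(*(call_server(name) for name in names)))


def timed(coroutine_fn, names):
    start = time.perf_counter()
    result = asyncio.run(coroutine_fn(names))
    return result, time.perf_counter() - start
```

With three simulated servers, the sequential version takes roughly three delays while the parallel one takes roughly one. The cost is that the caller now has to reason about concurrency, partial failures, and ordering.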

How Do MCP Servers Compare to Other Agent Integration Approaches?

MCP servers sit between direct API calls and high-level automation tools, offering a standardized way for agents to access many systems without hardcoding integrations. They add some operational overhead, but in return, you get reusable, decoupled integrations that scale much better as agent complexity and data sources grow.

How Do MCP Servers Fit Into Modern Context Engineering?

MCP servers sit at the delivery layer of context engineering. Embedding pipelines prepare data ahead of time by chunking documents and generating embeddings. MCP servers are how agents access that context at runtime. They provide a consistent interface for querying prepared data, pulling fresh information from source systems, and taking actions without hardcoding system-specific logic into the agent.

Airbyte’s Agent Engine supports this layer with production-ready MCP servers built on a proven data infrastructure foundation. Through PyAirbyte MCP, agents can query prepared data, retrieve fresh source data, and respect existing access controls. When teams need to connect a new or proprietary system, Connector Builder MCP lets them create and publish custom connectors without building MCP servers from scratch. Reusable connectors reduce ongoing maintenance as systems and APIs evolve.

Talk to us to see how Airbyte Embedded helps teams build and operate MCP servers for production AI agents.

Frequently Asked Questions

Are MCP servers only for large enterprise agents?

Not at all. MCP servers work at any scale, from local development assistants to enterprise deployments. The value shifts depending on complexity. Smaller agents benefit from standardized tool interfaces and clean separation between agent logic and integration code. Larger deployments benefit from reusable servers, centralized permissions, and consistent patterns across many agents.

Do MCP servers replace vector databases?

No, they serve different purposes. MCP servers expose capabilities to agents. Vector databases store and search embedded content. An MCP server might wrap a vector database, exposing semantic search as a tool the agent can call. The server handles query formatting and result parsing while the vector database does the actual similarity search.
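Here is a sketch of that wrapping pattern, with a tiny in-memory index standing in for the vector database. The document IDs, the two-dimensional vectors, and the `semantic_search_tool` name are hypothetical. A real tool would more likely accept query text and embed it before searching; this sketch takes a vector directly to stay self-contained.

```python
import math

# Hypothetical stand-in for a vector database: id -> embedding.
DOCS = {
    "doc-1": [1.0, 0.0],
    "doc-2": [0.0, 1.0],
    "doc-3": [0.7, 0.7],
}


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_search_tool(arguments):
    """Tool handler: formats the query, delegates ranking, shapes the result."""
    query = arguments["query_vector"]
    top_k = arguments.get("top_k", 2)
    ranked = sorted(DOCS, key=lambda doc_id: cosine(query, DOCS[doc_id]), reverse=True)
    return [{"id": doc_id, "score": round(cosine(query, DOCS[doc_id]), 3)} for doc_id in ranked[:top_k]]
```

The division of labor matches the answer above: similarity search belongs to the index, while argument handling and result formatting belong to the server.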

Can MCP servers be open source?

Absolutely. The MCP specification is open, and many implementations are open source. This lets teams inspect server code, understand exactly how integrations work, contribute fixes for edge cases, and fork implementations for custom needs. Open source servers also help build a community around shared patterns and best practices.

How hard is it to run an MCP server in production?

It depends on the server. Simple servers wrapping a single API can run as lightweight processes with minimal operational overhead. Servers managing credentials, handling high request volumes, or enforcing complex permissions need more careful planning around deployment, monitoring, and scaling.

Do all AI agents need MCP servers?

No. Agents with limited integration needs or those operating in controlled environments with direct system access may not benefit from the protocol overhead. MCP servers add the most value when agents need standardized access to multiple systems, when integrations should be reusable across agents, or when permission enforcement needs to happen at the infrastructure layer rather than through prompt instructions.
