What is Model Context Protocol (MCP): Definition, Uses & Benefits

Model Context Protocol (MCP) is an open standard introduced by Anthropic in November 2024. It defines how AI agents discover and interact with external tools and data sources. Instead of writing custom integration code for every API, MCP provides a single, consistent protocol that agents can use to access capabilities across systems.

This matters because AI agents rarely operate in isolation. As soon as an agent needs to read from multiple tools, take actions, or adapt to changing systems, integration complexity becomes a bottleneck. Without a standard like MCP, teams end up maintaining brittle, framework-specific connections that break as tools, schemas, and permissions evolve.

This article explains what MCP is, how it works, and when it makes sense to use it to build production AI agents.

TL;DR

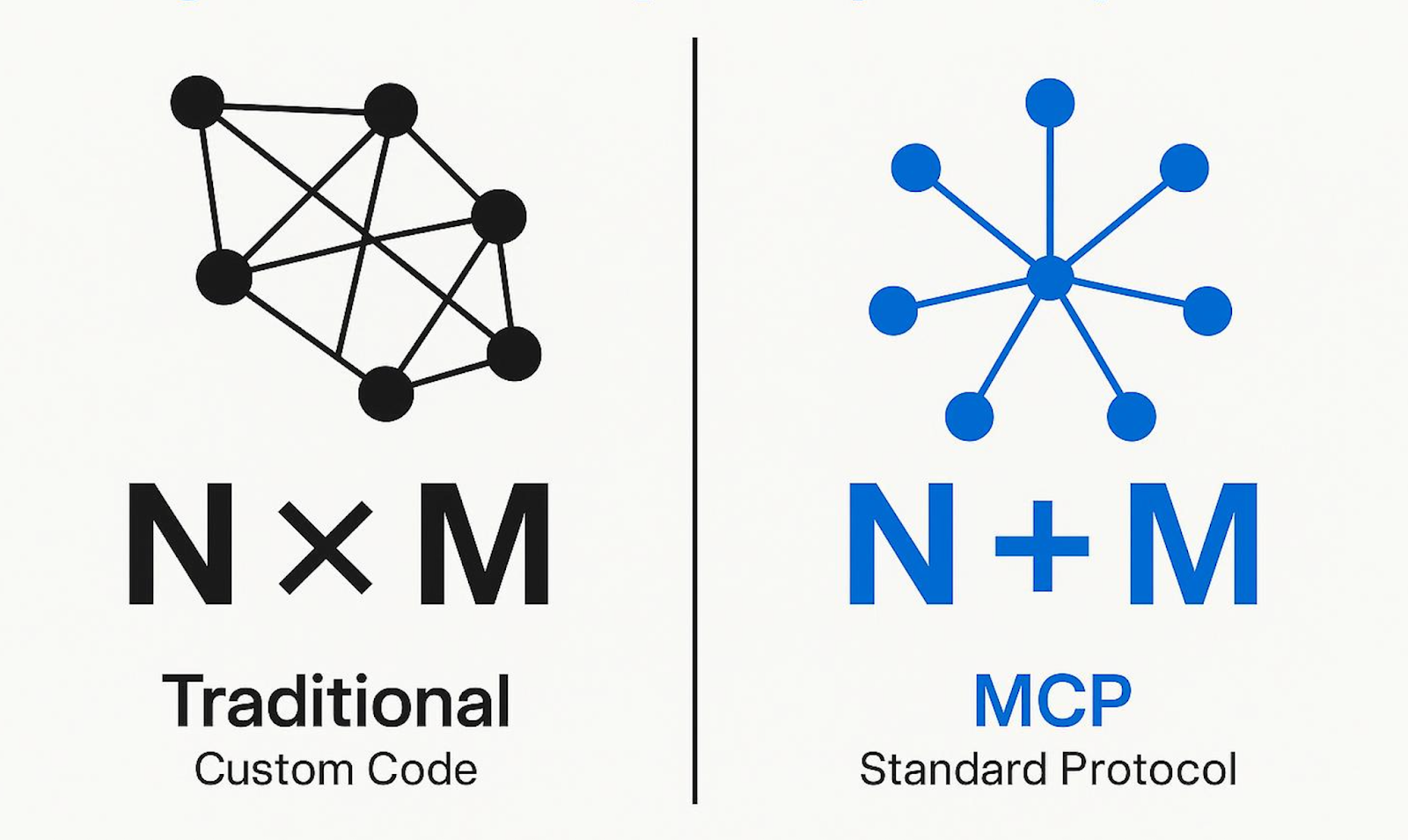

- MCP is an open standard that defines how AI agents discover and interact with external tools and data sources. It solves the N×M integration problem by letting teams build one MCP server per tool that any MCP-compatible client can use.

- The protocol works through capability declaration and dynamic discovery. Servers expose tools, resources, and prompts during initialization, and agents decide which to use at runtime instead of relying on hardcoded logic.

- MCP is most valuable when integration complexity becomes the bottleneck. It fits teams building agents that touch 3+ services, shipping multiple agents on shared backends, or struggling with integration sprawl.

- MCP does not replace APIs, vector databases, or RAG systems. It is a standardization layer that sits on top of existing infrastructure, shifting complexity from scattered agent-side code to centralized server implementations.

What Is Model Context Protocol (MCP)?

Model Context Protocol (MCP) is an open standard that defines how AI agents connect to external tools and data sources through a client–server model.

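MCP solves the N×M integration problem. Before MCP, connecting 5 AI frameworks to 10 tools could require up to 50 separate integrations (5 × 10). MCP turns this into N+M: each framework implements an MCP client once, each tool gets one MCP server, and any MCP-compatible client can use any server. The same example needs 15 components (5 + 10) instead of 50.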

The protocol works through capability declaration. According to the MCP documentation, servers declare their capabilities (tools, resources, and prompts) during initialization. This allows clients to discover what is available before attempting to use it, eliminating the need for custom wrapper code for each framework-tool combination.

MCP is not a replacement for APIs, vector databases, or RAG systems. It's a standardization layer that sits on top of existing infrastructure. Most MCP servers wrap existing APIs with a standardized interface that AI agents can discover and use automatically. The complexity shifts from scattered agent-side code to centralized server implementations.
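To make the "wrap an existing API" idea concrete, here is a minimal sketch in plain Python. It does not use any MCP SDK. The `get_order_status` function, the order data, and the tool name are hypothetical stand-ins for whatever API a team already has. Only the descriptor fields (`name`, `description`, `inputSchema`) and the text-content result follow the shapes described in the MCP specification.

```python
import json


def get_order_status(order_id: str) -> dict:
    """Hypothetical stand-in for an existing API call."""
    orders = {"A-100": "shipped", "A-101": "processing"}
    return {"order_id": order_id, "status": orders.get(order_id, "unknown")}


# Descriptor in the shape a server would return from tools/list.
ORDER_STATUS_TOOL = {
    "name": "get_order_status",
    "description": "Look up the fulfillment status of an order.",
    "inputSchema": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}


def call_order_status_tool(arguments: dict) -> dict:
    """Adapt the API result to a tools/call-style result with text content."""
    payload = get_order_status(arguments["order_id"])
    return {
        "content": [{"type": "text", "text": json.dumps(payload)}],
        "isError": False,
    }


result = call_order_status_tool({"order_id": "A-100"})
print(result["content"][0]["text"])
```

The existing API is untouched. The server-side wrapper only adds a discoverable description and a uniform result format.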

What Is the Difference Between MCP and Traditional Integrations?

The difference between MCP and traditional API integrations comes down to where integration logic lives and how agents discover capabilities.

How Does Model Context Protocol Work?

Model Context Protocol (MCP) follows a client–server pattern in which clients discover capabilities, access data, and execute actions through servers.

Client–Server Architecture and Isolation

The host application creates and manages MCP client instances. Each client maintains a strict 1:1 connection with a single MCP server and exchanges JSON-RPC 2.0 messages with it. This isolation prevents capability bleed: a client connected to one server cannot reach another server’s tools or data through that connection.
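The one-client-per-server relationship can be sketched as a small data model. This is an illustration only, not how any particular host is implemented. `Host`, `Client`, and `ServerStub` are hypothetical names, and no real processes or JSON-RPC traffic are involved.

```python
from dataclasses import dataclass, field


@dataclass
class ServerStub:
    """Stand-in for an MCP server process; only its tool names are modeled."""
    name: str
    tools: list[str]


@dataclass
class Client:
    """Holds a connection to exactly one server."""
    server: ServerStub

    def list_tools(self) -> list[str]:
        return list(self.server.tools)


@dataclass
class Host:
    """Creates one client per server and keeps them separate."""
    clients: dict[str, Client] = field(default_factory=dict)

    def connect(self, server: ServerStub) -> Client:
        if server.name in self.clients:
            raise ValueError(f"already connected to {server.name}")
        client = Client(server)
        self.clients[server.name] = client
        return client


host = Host()
github = host.connect(ServerStub("github", ["create_issue"]))
postgres = host.connect(ServerStub("postgres", ["run_query"]))

print(github.list_tools())    # ['create_issue']
print(postgres.list_tools())  # ['run_query']
```

Because each client only holds a reference to its own server, the host is the single place where cross-server decisions are made.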

Servers and Exposed Capabilities

MCP servers run as independent processes. Each server exposes access to a specific system, such as a GitHub repository, a PostgreSQL database, or an internal documentation store. Servers declare their capabilities using three primitives: tools for executable actions, resources for readable data, and prompts for reusable interaction templates.
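Of the three primitives, prompts are the least self-explanatory, so here is a hedged sketch of how a server might store and render one. The `summarize_ticket` prompt and its template are invented for illustration. The result shapes loosely follow the `prompts/list` and `prompts/get` responses in the MCP specification.

```python
# Hypothetical prompt registry kept in memory by a server.
PROMPTS = {
    "summarize_ticket": {
        "description": "Summarize a support ticket for handoff.",
        "arguments": [
            {"name": "ticket_text", "description": "Raw ticket body", "required": True}
        ],
        "template": "Summarize this support ticket in three bullet points:\n\n{ticket_text}",
    }
}


def list_prompts() -> dict:
    """Return prompt metadata in a prompts/list-style result."""
    return {
        "prompts": [
            {"name": name, "description": p["description"], "arguments": p["arguments"]}
            for name, p in PROMPTS.items()
        ]
    }


def get_prompt(name: str, arguments: dict) -> dict:
    """Render a prompt template into a prompts/get-style result."""
    prompt = PROMPTS[name]
    missing = [
        a["name"]
        for a in prompt["arguments"]
        if a.get("required") and a["name"] not in arguments
    ]
    if missing:
        raise ValueError(f"missing prompt arguments: {missing}")
    text = prompt["template"].format(**arguments)
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }


rendered = get_prompt("summarize_ticket", {"ticket_text": "Printer offline"})
print(rendered["messages"][0]["content"]["text"])
```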

Initialization and Capability Handshake

Every connection starts with an initialization step. The client sends an initialize request declaring its protocol version and supported features. The server responds with its own protocol version and capabilities: which primitives it offers (tools, resources, prompts) and optional features such as resource subscriptions. After this exchange, both sides know which features are available for the session.
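The handshake can be sketched as three JSON-RPC messages built as Python dictionaries. The client and server names are placeholders, and the capability sets are examples rather than a required minimum. The method names and field names follow the MCP specification, and `2024-11-05` is the identifier of its first published revision.

```python
import json

PROTOCOL_VERSION = "2024-11-05"


def build_initialize_request(request_id: int) -> dict:
    """Client -> server: declare protocol version and client features."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"roots": {"listChanged": True}},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }


def build_initialize_result(request: dict) -> dict:
    """Server -> client: answer with server capabilities.

    Simplification: this sketch echoes the client's version, whereas a real
    server may answer with a different version that it supports.
    """
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "protocolVersion": request["params"]["protocolVersion"],
            "capabilities": {
                "tools": {"listChanged": True},
                "resources": {"subscribe": True},
                "prompts": {},
            },
            "serverInfo": {"name": "example-server", "version": "0.1.0"},
        },
    }


# Client -> server: confirm that initialization is complete (no id, no reply).
INITIALIZED_NOTIFICATION = {"jsonrpc": "2.0", "method": "notifications/initialized"}

request = build_initialize_request(1)
response = build_initialize_result(request)
server_caps = response["result"]["capabilities"]
supports_subscriptions = server_caps.get("resources", {}).get("subscribe", False)
print(json.dumps(response, indent=2))
```

A client would typically check flags such as `supports_subscriptions` before sending requests that depend on them.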

Tool Discovery Without Hardcoding

After initialization, the client discovers available operations using tools/list. The server returns a structured list where each tool includes a name, description, and JSON Schema for required parameters. This lets agents decide which tools to use at runtime instead of relying on hardcoded logic.

Tool Execution and Progress Reporting

When an agent decides to act, the client sends a tools/call request with the tool name and arguments. The server executes the request and returns results in a standard format. For long-running operations, the server can send progress updates if the client includes a progress token.

Resource Access and Subscriptions

Resources use the same request–response model but focus on data access rather than actions. They are addressed by URIs such as /documents/finance/policies/q4-2024. Clients can list resources, read specific ones, and subscribe to updates when supported.
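A minimal in-memory sketch of list, read, and subscribe follows. `ResourceServer` is a hypothetical class, and the `docs://` URI scheme is an assumption made for the example. The result shapes and the `notifications/resources/updated` method follow the MCP specification.

```python
class ResourceServer:
    """Toy resource store that mimics list, read, subscribe, and update."""

    def __init__(self, documents: dict[str, str]) -> None:
        self._documents = dict(documents)
        self._subscribed: set[str] = set()

    def list_resources(self) -> dict:
        """resources/list-style result."""
        return {
            "resources": [
                {"uri": uri, "name": uri.rsplit("/", 1)[-1]}
                for uri in sorted(self._documents)
            ]
        }

    def read_resource(self, uri: str) -> dict:
        """resources/read-style result."""
        return {
            "contents": [
                {"uri": uri, "mimeType": "text/plain", "text": self._documents[uri]}
            ]
        }

    def subscribe(self, uri: str) -> None:
        """Record a resources/subscribe request for this URI."""
        self._subscribed.add(uri)

    def update(self, uri: str, text: str) -> list[dict]:
        """Change a document and return the notifications that would be sent."""
        self._documents[uri] = text
        if uri in self._subscribed:
            return [{
                "jsonrpc": "2.0",
                "method": "notifications/resources/updated",
                "params": {"uri": uri},
            }]
        return []


URI = "docs://finance/policies/q4-2024"  # hypothetical URI
server = ResourceServer({URI: "Travel policy v1"})
server.subscribe(URI)
notifications = server.update(URI, "Travel policy v2")
print(server.read_resource(URI)["contents"][0]["text"])  # Travel policy v2
```

The update notification carries only the URI, so the client reads the resource again to fetch the new content.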

Transport Layers and Deployment Options

MCP supports multiple transport mechanisms depending on deployment needs. Local servers use stdio (standard input/output), which suits development and local tool integration. Remote servers use HTTP, with Server-Sent Events (SSE) available for streaming server messages and real-time updates; newer revisions of the specification call this transport Streamable HTTP, which supersedes the original HTTP+SSE transport. The transport choice affects deployment architecture and latency, but the core protocol remains consistent across both.
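For the stdio transport, the specification describes JSON-RPC messages delimited by newlines, with no embedded newlines inside a message. The sketch below shows that framing using an in-memory stream in place of a real subprocess pipe. The helper names are hypothetical.

```python
import io
import json
from typing import Iterator, TextIO


def write_message(stream: TextIO, message: dict) -> None:
    """Serialize one JSON-RPC message onto a single line."""
    # json.dumps without indent emits no raw newlines; newlines inside
    # string values are escaped as \n, so one message is always one line.
    stream.write(json.dumps(message) + "\n")


def read_messages(stream: TextIO) -> Iterator[dict]:
    """Parse newline-delimited JSON-RPC messages, skipping blank lines."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


pipe = io.StringIO()  # stands in for the server's stdin
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "echo", "arguments": {"text": "line one\nline two"}},
})

pipe.seek(0)
received = list(read_messages(pipe))
print([m["method"] for m in received])  # ['tools/list', 'tools/call']
```

The same message dictionaries would travel unchanged over an HTTP transport. Only the framing differs.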

What Are the Benefits of Using MCP?

The benefits become most apparent once teams move beyond single agents and start managing multiple tools, frameworks, and environments.

- Reduced integration complexity: Standardizing on MCP replaces framework-specific adapters with reusable MCP servers, collapsing the N×M integration problem into a manageable N+M model.

- Faster development for multi-agent teams: Teams building multiple agents avoid rewriting the same integrations for each framework and tool combination, which significantly shortens build cycles.

- Reuse across agents and frameworks: Once MCP servers exist for core tools and data sources, new agents can use them immediately without additional integration work.

- Simpler onboarding of new tools: Adding a new external system requires building a single MCP server, rather than updating every agent or framework-specific wrapper.

- Improved focus on agent behavior: Engineers spend less time maintaining connection code and more time improving reasoning, workflows, and agent logic.

- Framework portability: MCP servers are framework-agnostic, allowing teams to switch or support multiple agent frameworks without reworking integrations, reducing long-term lock-in.

What Can AI Agents Do with MCP?

MCP gives AI agents a set of capabilities when interacting with external systems. The agents can:

- Access external data without custom integrations: Agents can read data from databases, SaaS tools, and internal systems through MCP servers instead of maintaining one-off connectors for each source.

- Adapt automatically as new capabilities appear: When a connected MCP server adds tools, agents can discover and use them at runtime without agent-side code changes or redeployments.

- Take actions across systems, not just read data: Agents can trigger workflows, update records, and execute operational tasks across connected systems as part of their reasoning loop.

- React to changes instead of polling for updates: Agents can respond to updates as they happen, which supports monitoring, alerting, and event-driven behavior.

- Reason across multiple systems at the same time: A single agent can combine context from CRM data, product systems, knowledge bases, and order platforms to understand situations holistically.

- Coordinate multi-step workflows: Agents can sequence actions across systems, handle dependencies between steps, and adjust behavior based on intermediate results.

- Operate within existing security boundaries: Agents inherit each system’s authentication and authorization rules, ensuring access stays scoped and auditable.

- Scale behavior as systems grow: Adding new data sources or capabilities increases what agents can do without increasing integration complexity.

These capabilities let agents move beyond single-tool interactions and operate across real systems in coordinated workflows.

What Are Common MCP Use Cases?

These use cases illustrate how agents apply MCP to read data, take actions, and coordinate workflows across multiple systems.

Who Should Use MCP?

Model Context Protocol (MCP) is most valuable when integration complexity becomes the bottleneck. It is a good fit for:

- AI engineers building agents that touch 3+ services: When agents must dynamically choose tools based on context rather than follow a fixed flow.

- Product teams shipping multiple agents on the same backend systems: MCP enables a “build once, reuse everywhere” model where new agents inherit existing integrations immediately.

- Enterprises standardizing agent access to internal systems: MCP works well as a common integration layer when paired with external governance, identity, and access controls.

- Teams struggling with integration sprawl: If maintaining API code is consuming more time than building agent logic, MCP reduces long-term maintenance load.

In contrast, MCP adds unnecessary complexity for single-purpose agents with fixed workflows and integrations involving only one or two services. For high-throughput or ultra-low-latency paths where every millisecond matters, direct APIs are usually a better fit. The same applies to teams that are not ready to take on the maturity and ecosystem risk of an evolving protocol.

What Does MCP Mean for Building Production AI Agents?

Model Context Protocol (MCP) provides a clean, scalable way for AI agents to interact with real systems without rewriting integrations for every tool and framework. By standardizing how agents discover capabilities, access data, and take actions, MCP removes much of the hidden complexity that shows up once agents move beyond simple demos.

In practice, MCP works best when it is paired with data infrastructure that can support how agents actually operate. Agents still depend on fresh data, consistent schemas, and permissions that reflect real user access across systems.

Airbyte’s Agent Engine supports this by managing source connectivity, incremental updates through Change Data Capture, and row-level and user-level access controls across both structured records and unstructured files. This allows MCP-based agents to operate on current, governed context as they scale.

Talk to us to see how Airbyte Embedded supports MCP-based agents with reliable, permission-aware access to real enterprise data.

Frequently Asked Questions

What does MCP stand for?

MCP stands for Model Context Protocol. Anthropic introduced it in November 2024 as an open standard for connecting AI assistants to data sources and tools. It uses a client–server model that exposes tools, resources, and prompts through standardized interfaces, so AI applications can use external capabilities without custom integrations.

Is MCP only for large enterprises?

No. MCP benefits any team building agents with multiple integrations, regardless of company size. Startups building AI-first products use MCP to move faster by reusing integration infrastructure across agents. The protocol's open-source nature and available SDKs make it accessible to teams of any size.

Does MCP replace RAG or vector databases?

MCP doesn't replace RAG systems or vector databases. It provides a standardized way to access them. RAG is an architectural pattern for retrieval, while MCP is a communication protocol. You might build an MCP server that exposes your RAG system, letting agents access it through standardized interfaces alongside other tools.

Is MCP open source?

Yes. MCP is an open standard, and its specification and official SDKs are published as open source, so any team can implement MCP clients or servers. Airbyte also supports MCP for programmatic management of connectors in enterprise data infrastructure.

