Incremental Load vs Full Load ETL

Many ETL failures trace back to load strategy decisions that were made early and never revisited.

A pipeline that worked fine when a table had 50,000 rows starts timing out once it hits 50 million. A nightly full refresh silently reprocesses the same data again and again, burning warehouse credits and stretching batch windows. Or an incremental job misses updates for a day, and no one notices until metrics drift.

Choosing between incremental and full load ETL directly affects cost, reliability, data freshness, and how painful failures are when they happen.

This article breaks down how each approach works, where each one breaks, and how teams actually use them in production.

TL;DR: Incremental Load vs Full Load ETL at a Glance

- Full load ETL reprocesses the entire dataset every run

- Incremental load ETL only ingests new or changed data

- Full loads are simpler but don’t scale

- Incremental loads scale better but require stronger guarantees

- Most real systems use a mix of both

What Is Full Load ETL?

Full load ETL means exactly what it sounds like. Every time the pipeline runs, it extracts the entire dataset from the source and reloads it into the destination.

The destination table is usually truncated or replaced, then rebuilt from scratch using the latest snapshot of the source data. Each run is self-contained and doesn’t depend on state from previous runs.
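As a rough illustration of the truncate-and-rebuild pattern, here is a minimal sketch using Python's built-in `sqlite3` module. The `customers` table and its `id` and `name` columns are hypothetical, and a real warehouse would use its own bulk-load or table-swap mechanism rather than row inserts.

```python
import sqlite3


def full_load(source: sqlite3.Connection, dest: sqlite3.Connection) -> int:
    """Replace the destination table with the current source snapshot.

    Assumes both databases have a hypothetical customers(id, name) table.
    """
    # Extract the entire table, regardless of what was loaded before.
    rows = source.execute("SELECT id, name FROM customers").fetchall()

    # Delete and reload inside one transaction, so a failure midway
    # rolls back and leaves the previous snapshot in place.
    with dest:
        dest.execute("DELETE FROM customers")
        dest.executemany(
            "INSERT INTO customers (id, name) VALUES (?, ?)", rows
        )
    return len(rows)
```

Nothing in the function reads state from an earlier run, which is why running it twice in a row produces the same destination table.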

This approach is common in early analytics setups and legacy batch systems because it’s straightforward. If the job succeeds, you know the destination reflects the source at that point in time.

When Full Load ETL Makes Sense

Full loads still have a place.

They work well when datasets are small and relatively static. If a table fits comfortably within your batch window and doesn’t grow quickly, reloading it can be cheaper than engineering a more complex solution.

They’re also useful for one-time migrations, historical backfills, or rebuilding corrupted tables. In these cases, correctness matters more than efficiency, and simplicity wins.

For teams just getting started, full loads reduce cognitive overhead. There’s no cursor state to manage and no question about whether changes were missed.

Where Full Load ETL Breaks Down

The problems start when data grows.

As tables get larger, full loads consume more network bandwidth, more source database resources, and more warehouse compute. Batch windows stretch from minutes to hours. Failures become harder to recover from because rerunning the job means reprocessing everything again.

Full loads can also impact production systems. Extracting entire tables can lock rows, spike I/O, or slow down operational workloads. What was once a harmless nightly job becomes a source of risk.

At scale, full load ETL stops being merely inefficient and starts being dangerous.

What Is Incremental Load ETL?

Incremental load ETL takes a different approach. Instead of reloading everything, it only ingests records that are new or have changed since the last successful run.

Each job depends on some notion of progress. That might be a timestamp, an auto-incrementing ID, or a CDC log that captures inserts, updates, and deletes as they happen.

The goal is to minimize data movement while keeping the destination in sync with the source.

How Incremental Loads Track Change

There are several common patterns for incremental loading.

Some pipelines rely on append-only tables where new rows are easy to detect. Others track updates using a last_updated_at timestamp. More advanced setups use CDC replication to capture row-level changes directly from database logs.
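The timestamp pattern can be sketched as follows, again with `sqlite3`. This is an illustrative sketch, not how any particular tool implements it: the `customers` table, its columns, and the choice to store timestamps as ISO-8601 strings (which sort correctly as text) are all assumptions.

```python
import sqlite3


def incremental_load(
    source: sqlite3.Connection,
    dest: sqlite3.Connection,
    cursor_value: str,
) -> str:
    """Copy rows changed after cursor_value and return the new cursor.

    Assumes a hypothetical customers(id, name, last_updated_at) table in
    both databases, with id as the primary key.
    """
    rows = source.execute(
        "SELECT id, name, last_updated_at FROM customers "
        "WHERE last_updated_at > ? ORDER BY last_updated_at",
        (cursor_value,),
    ).fetchall()
    if not rows:
        return cursor_value  # nothing changed, keep the old cursor

    with dest:
        # INSERT OR REPLACE acts as an upsert keyed on the primary key,
        # so replaying the same rows does not create duplicates.
        dest.executemany(
            "INSERT OR REPLACE INTO customers (id, name, last_updated_at) "
            "VALUES (?, ?, ?)",
            rows,
        )
    # The largest timestamp seen becomes the cursor for the next run.
    return rows[-1][2]
```

The caller is responsible for persisting the returned cursor only after the load succeeds. Note that a strict `>` comparison can skip a row that commits later with the same timestamp as the cursor, which is one reason some pipelines re-read a small overlap on every run.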

Deletes require special handling. Some systems use soft deletes, others rely on tombstone events, and some periodically reconcile data with a targeted full refresh.

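Each approach trades simplicity against completeness. Append-only detection is the easiest to build but only sees inserts, timestamp cursors add updates but miss hard deletes, and CDC captures every change at the cost of more setup.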
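One way to reconcile deletes without reloading every column is to compare only primary keys and soft-delete whatever has vanished from the source. The sketch below assumes a hypothetical `customers` table whose destination copy carries an `is_deleted` flag; both names are illustrative.

```python
import sqlite3


def reconcile_deletes(
    source: sqlite3.Connection, dest: sqlite3.Connection
) -> set:
    """Soft-delete destination rows whose ids no longer exist in the source.

    Assumes a hypothetical customers table keyed by id, with an
    is_deleted flag column on the destination side.
    """
    source_ids = {row[0] for row in source.execute("SELECT id FROM customers")}
    dest_ids = {
        row[0]
        for row in dest.execute("SELECT id FROM customers WHERE is_deleted = 0")
    }

    # Ids still live in the destination but gone from the source.
    missing = dest_ids - source_ids
    with dest:
        dest.executemany(
            "UPDATE customers SET is_deleted = 1 WHERE id = ?",
            [(row_id,) for row_id in missing],
        )
    return missing
```

Pulling every key is still a full scan of the key column, so this kind of check usually runs far less often than the incremental sync itself.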

Where Incremental ETL Gets Risky

Incremental pipelines fail quietly when assumptions break.

A timestamp column that stops updating correctly can cause records to be skipped forever. Schema changes can invalidate cursors. Late-arriving data can land outside the incremental window.
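A common mitigation for late-arriving data is to re-read a lookback window behind the cursor and rely on an idempotent upsert to absorb the overlap. The sketch below uses plain Python dictionaries to stay self-contained; the record shape (`id`, `updated_at`) and the function names are assumptions made for illustration.

```python
from datetime import datetime, timedelta


def select_with_lookback(records, cursor: datetime, lookback: timedelta):
    """Return records updated after (cursor - lookback).

    Re-reading the overlap catches rows that arrived late with a
    timestamp slightly older than the saved cursor.
    """
    window_start = cursor - lookback
    return [r for r in records if r["updated_at"] > window_start]


def upsert(dest: dict, records) -> None:
    """Merge records into dest by id, never overwriting newer data."""
    for record in records:
        current = dest.get(record["id"])
        if current is None or record["updated_at"] >= current["updated_at"]:
            dest[record["id"]] = record
```

The lookback only helps with data that is late by less than the window. Anything later than that still needs a reconciliation pass or a periodic full refresh.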

Unlike full loads, incremental jobs can succeed while still producing incorrect results. If state tracking is wrong, you may not notice until downstream metrics drift or users lose trust in the data.

Incremental ETL scales better, but it demands stronger operational discipline.

What Is the Difference Between Incremental Load and Full Load?

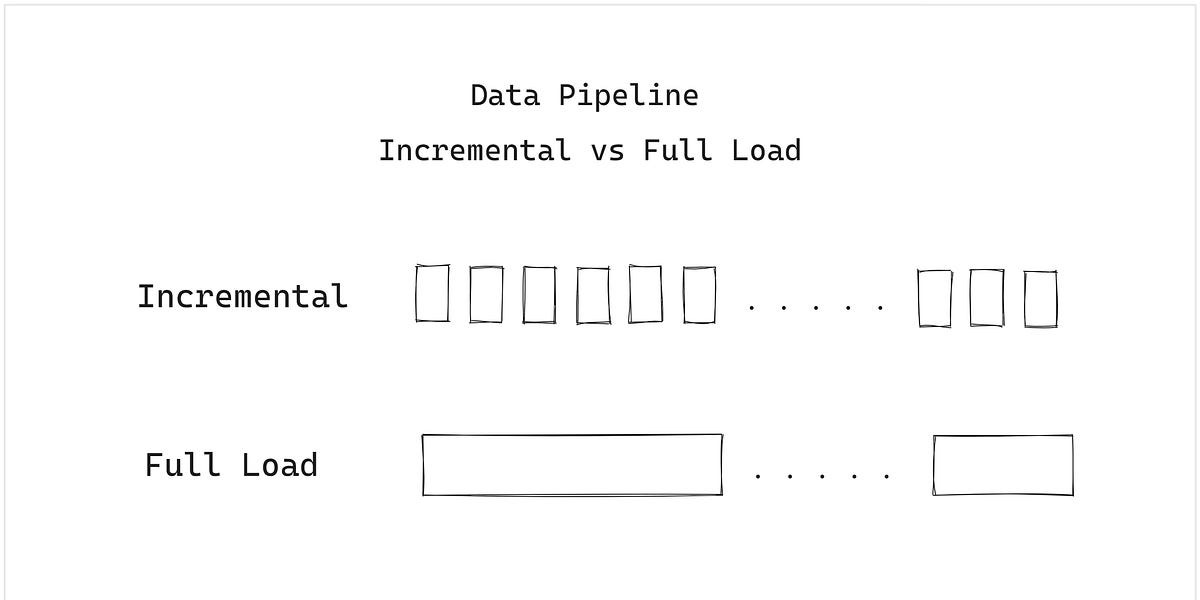

The difference between these two approaches shows up in day-to-day operations.

Full loads move large volumes of data every run. Incremental loads move only what changed. That alone changes cost profiles, failure recovery, and how often you can run pipelines.

When a full load fails, rerunning it means starting over. When an incremental load fails, recovery often means resuming from the last checkpoint.
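Checkpoint-based recovery can be sketched as below. This is a simplified illustration: rows are represented by their integer ids, the checkpoint lives in a local JSON file, and `load_batch` is a hypothetical callback standing in for whatever writes to the destination.

```python
import json
import os


def load_checkpoint(path: str) -> int:
    """Return the last id that was loaded successfully, or 0 if none."""
    try:
        with open(path) as f:
            return json.load(f)["last_id"]
    except FileNotFoundError:
        return 0


def save_checkpoint(path: str, last_id: int) -> None:
    """Write the checkpoint via a temp file and an atomic rename."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"last_id": last_id}, f)
    os.replace(tmp_path, path)


def run_incremental(row_ids, path: str, load_batch, batch_size: int = 2) -> None:
    """Load ids above the checkpoint in batches, saving progress per batch."""
    last_id = load_checkpoint(path)
    pending = sorted(i for i in row_ids if i > last_id)
    for start in range(0, len(pending), batch_size):
        batch = pending[start:start + batch_size]
        load_batch(batch)  # if this raises, the checkpoint is not advanced
        save_checkpoint(path, batch[-1])
```

Because the checkpoint only moves after a batch has loaded, a rerun after a failure picks up at the first unfinished batch instead of starting from zero. If the process dies between the load and the checkpoint write, that batch is replayed, so the destination write should be idempotent.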

Full loads are stateless and simple. Incremental loads are stateful and fragile if not monitored properly.

Neither approach is universally better. They optimize for different failure modes.

How Data Teams Actually Choose Between Them

In practice, teams rarely pick one approach and apply it everywhere.

Small dimension tables often use full loads because the overhead is negligible. Large fact tables almost always use incremental loading because full refreshes would be too slow or expensive.

Many teams combine both strategies. Incremental pipelines run frequently, while periodic full refreshes act as safety nets to correct drift or missed changes.
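A lightweight drift check can help decide when a safety-net refresh is actually needed. The sketch below compares a row count and a content hash between source and destination. The `customers` table and its columns are hypothetical, and hashing every row in Python only suits small tables; larger ones would typically push an aggregate into the database.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection):
    """Return (row_count, sha256 of ordered rows) for customers(id, name)."""
    rows = conn.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
    digest = hashlib.sha256(repr(rows).encode("utf-8")).hexdigest()
    return len(rows), digest


def has_drifted(source: sqlite3.Connection, dest: sqlite3.Connection) -> bool:
    """True when the destination no longer matches the source snapshot."""
    return table_fingerprint(source) != table_fingerprint(dest)
```

A scheduler could run `has_drifted` after each incremental sync and trigger a full refresh only when it returns `True`.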

The real decision comes down to where each one makes operational sense.

How Incremental and Full Loads Show Up in Modern Stacks

Traditional batch ETL leaned heavily on full loads because tooling was limited and data volumes were smaller.

Modern stacks favor incremental approaches, especially CDC-based replication, because they support fresher data and lower operational overhead.

Analytics workloads still tolerate batch updates, but operational use cases and AI systems expect data to be current. That expectation pushes teams toward incremental designs by default.

Full loads haven’t disappeared. They’ve become targeted tools rather than the default pattern.

How Airbyte Handles Incremental and Full Loads

Airbyte supports both incremental and full load strategies across its 600+ connectors.

For many sources, incremental sync modes are available out of the box, including CDC replication where supported. These modes handle cursor tracking, checkpointing, and retries without forcing teams to build custom logic.

At the same time, full refreshes remain available for backfills, schema changes, or recovery scenarios. Teams can choose the right approach per source and per table, rather than locking themselves into a single pattern.

That flexibility matters as data volumes grow and requirements change. Pipelines that start simple don’t have to be thrown away when scale, freshness, or reliability expectations increase.

Try Airbyte to test both incremental and full load sync modes against real data and see how each behaves in your own environment.

Frequently Asked Questions

Is incremental load always better than full load ETL?

No. Incremental load scales better, but it isn’t always the right choice. For small or low-change datasets, full loads are often simpler and more reliable. Incremental pipelines introduce state, which means more things can break if not monitored carefully.

Can I switch from full load to incremental load later?

Yes, and many teams do. A common pattern is to start with full loads, then migrate to incremental loads as data volume grows. The transition usually involves adding change tracking logic and running an initial full backfill to establish a clean baseline.

What happens if an incremental load misses data?

Missed data doesn’t always cause a job failure. That’s the risk. Records can be skipped silently if cursors, timestamps, or CDC logs break. Teams often mitigate this by adding monitoring, reconciliation checks, or periodic full refreshes for validation.

Do I still need full loads if I use CDC?

Often, yes. CDC handles continuous change capture, but full loads are still useful for historical backfills, schema resets, and recovery scenarios. Even CDC-based pipelines usually rely on an initial full snapshot to establish starting state.
