What’s Causing Bottlenecks In My ETL Pipeline?

✨ AI Generated Summary

An ETL pipeline slowdown is often caused by bottlenecks at various stages, such as slow data sources, inefficient extraction queries, complex transformations, insufficient hardware, large data volumes, network latency, poor workflow orchestration, slow data loading, lack of error handling, or platform limitations.

- Identify bottlenecks by monitoring logs, resource usage, query performance, and network speed.

- Resolve issues by optimizing queries, scaling infrastructure, partitioning data, automating workflows, and improving error handling.

- Prevent bottlenecks through regular monitoring, incremental processing, scalable infrastructure, and optimized pipeline design.

An efficient ETL (Extract, Transform, Load) pipeline is the backbone of any data-driven organization. It’s the process that ensures data flows smoothly from various sources into your data warehouse or analytics platform, ready for analysis and decision-making.

But when your pipeline starts to slow down or, worse, comes to a halt, it can disrupt operations and delay crucial insights.

One of the biggest culprits of these slowdowns? Bottlenecks. These are the points in the pipeline where processes get delayed, causing a ripple effect that impacts overall performance.

Whether it’s a slow data source, a complex transformation, or inadequate resources, bottlenecks are often difficult to identify but critical to resolve.

In this post, we’ll explore the common causes of bottlenecks in ETL pipelines and share practical tips on how to spot them before they cause significant damage to your data operations.

By the end, you’ll have a roadmap to identify the bottlenecks slowing you down and the tools to fix them, ensuring your pipeline runs as smoothly as possible.

Common Causes of Bottlenecks in an ETL Pipeline

There’s no one-size-fits-all answer when it comes to bottlenecks. They can appear anywhere in your ETL pipeline, and the source of the problem can be just as varied. Below, we’ll go through the most common causes, and for each, we’ll provide tips on how to check whether it’s the culprit behind your pipeline’s slowdown.

1. Data Source Issues

Cause: Unreliable or Slow Data Sources

The data source is where your pipeline begins. If it’s slow to respond or if there are frequent connectivity issues, the entire pipeline can be delayed right from the start. Common problems include network instability, poorly optimized databases, or services that aren’t scalable.

How to Check:

- Monitor the response times of the data sources you’re pulling from.

- Check for any timeouts or failures when extracting data.

- Use network diagnostic tools to test latency or bandwidth between the source and the pipeline.

Tip: Look for slow response times in your logs and see if certain sources are consistently problematic. If so, consider optimizing the query, switching to a more reliable source, or even caching data where possible.
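As a minimal sketch of the first check, the Python below times repeated calls to each source and flags those whose median latency exceeds a threshold. The source names (`orders_api`, `legacy_db`), the 0.1-second threshold, and the `time.sleep` stand-ins are hypothetical; in practice you would wrap your real client calls and pick a threshold based on your own SLAs.

```python
import time
from statistics import median
from typing import Callable, Dict, List


def time_source_calls(sources: Dict[str, Callable[[], object]], runs: int = 3) -> Dict[str, float]:
    """Call each source `runs` times and return its median latency in seconds."""
    latencies: Dict[str, float] = {}
    for name, fetch in sources.items():
        samples: List[float] = []
        for _ in range(runs):
            start = time.perf_counter()
            fetch()  # exceptions (timeouts, connection errors) propagate to the caller
            samples.append(time.perf_counter() - start)
        latencies[name] = median(samples)
    return latencies


def flag_slow_sources(latencies: Dict[str, float], threshold_s: float) -> List[str]:
    """Return the names of sources whose median latency exceeds threshold_s."""
    return sorted(name for name, secs in latencies.items() if secs > threshold_s)


# Hypothetical stand-in sources; replace the lambdas with real API or database calls.
demo_sources = {
    "orders_api": lambda: time.sleep(0.01),
    "legacy_db": lambda: time.sleep(0.2),
}
stats = time_source_calls(demo_sources)
print(stats)
print("slow sources:", flag_slow_sources(stats, threshold_s=0.1))
```

Using the median of several calls keeps a single network hiccup from marking an otherwise healthy source as slow.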

2. Data Extraction Problems

Cause: Overloaded or Inefficient Extraction Queries

If the queries used to extract data are inefficient or not optimized, your ETL pipeline can experience significant delays. Queries that fetch large amounts of data or involve complicated joins can become bottlenecks in this stage.

How to Check:

- Profile the extraction queries to measure their execution time.

- Use EXPLAIN plans or query performance tools to identify slow or resource-heavy queries.

- Check if the extraction process is returning more data than necessary.

Tip: Optimize extraction queries by minimizing the data fetched (e.g., using filters), using indexed columns for searches, or breaking down large queries into smaller, more manageable ones.
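To illustrate the EXPLAIN and "fetch less data" checks, here is a small sketch using SQLite's `EXPLAIN QUERY PLAN` through Python's built-in `sqlite3` module. The `orders` table, its columns, and the index name are assumptions made for the example; other databases have their own `EXPLAIN` syntax and output format.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL, "
    "notes TEXT, updated_at TEXT)"
)
conn.executemany(
    "INSERT INTO orders (customer, amount, notes, updated_at) VALUES (?, ?, ?, ?)",
    [(f"c{i % 50}", i * 1.5, "x" * 200, f"2024-01-{i % 28 + 1:02d}") for i in range(1000)],
)
conn.execute("CREATE INDEX idx_orders_updated_at ON orders (updated_at)")


def query_plan(sql, params=()):
    """Return the detail strings from SQLite's EXPLAIN QUERY PLAN for a query."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


# Wide query: every column of every row, including the bulky notes field.
wide_sql = "SELECT * FROM orders"
# Narrow query: only the columns downstream steps need, filtered on an indexed column.
narrow_sql = "SELECT id, amount, updated_at FROM orders WHERE updated_at BETWEEN ? AND ?"
window = ("2024-01-25", "2024-01-28")

print(query_plan(wide_sql))            # expect a full-table SCAN
print(query_plan(narrow_sql, window))  # expect a SEARCH using idx_orders_updated_at
rows = conn.execute(narrow_sql, window).fetchall()
print(len(rows), "rows fetched instead of 1000")
```

The first plan should report a full-table scan, while the second should report a search through the index and return only the rows in the requested date window.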

3. Transformation Inefficiencies

Cause: Complex Transformation Logic or Inadequate Resource Allocation

The transformation phase of your ETL pipeline is where the bulk of the processing power is required. If transformations involve complex calculations, data cleansing, or aggregation over large datasets, this step can become a major bottleneck.

How to Check:

- Profile the transformation scripts or steps to see where most of the processing time is spent.

- Track resource usage (CPU, memory) to see if your system is being overloaded.

- Look for any unnecessary or redundant transformations in the pipeline.

Tip: Consider breaking down complex transformations into smaller, parallelizable tasks. Use more efficient algorithms and distribute workloads across multiple nodes or machines to improve performance.
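One lightweight way to see where transformation time goes is to wrap each step in a timing decorator, as sketched below. The `clean` and `aggregate` steps and the generated rows are hypothetical; for function-level detail inside a slow step, Python's built-in `cProfile` module is the natural next tool.

```python
import time
from collections import defaultdict
from functools import wraps

# Accumulated wall-clock seconds per named transformation step.
step_timings = defaultdict(float)


def timed_step(func):
    """Decorator that adds each call's duration to step_timings[func.__name__]."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            step_timings[func.__name__] += time.perf_counter() - start
    return wrapper


@timed_step
def clean(rows):
    # Drop rows with a missing amount and normalize the currency code.
    return [{**r, "currency": r["currency"].upper()} for r in rows if r["amount"] is not None]


@timed_step
def aggregate(rows):
    # Total amount per currency.
    totals = defaultdict(float)
    for r in rows:
        totals[r["currency"]] += r["amount"]
    return dict(totals)


rows = [{"amount": i if i % 10 else None, "currency": "usd" if i % 2 else "eur"}
        for i in range(100_000)]
result = aggregate(clean(rows))

# Report the slowest steps first.
for name, secs in sorted(step_timings.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {secs:.4f}s")
```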

4. Insufficient Hardware or Resources

Cause: Lack of CPU, RAM, or Storage Causing Slow Processing

ETL processes, particularly during transformation, can be resource-intensive. If your hardware isn’t up to the task, or if there’s not enough memory or storage, your pipeline will run slower than expected.

How to Check:

- Monitor system resource usage (CPU, memory, disk I/O) during pipeline runs.

- Check whether CPU or memory stays near saturation (for example, CPU pinned close to 100% or the system swapping to disk) during heavy processing steps.

- Use tools like top or htop (Linux) to track system load.

Tip: If hardware limitations are the issue, consider scaling your infrastructure (e.g., moving to the cloud or upgrading your machines) or optimizing the memory usage of your pipeline processes.
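On the memory side, a common optimization is to stream records through generators instead of materializing whole intermediate lists. This sketch uses Python's `tracemalloc` to compare the peak memory of both approaches on a synthetic workload. Note that `tracemalloc` only tracks allocations made by Python code, so process-level tools like `top` or `htop` remain the better view of overall system load.

```python
import tracemalloc

N = 200_000


def peak_bytes(fn):
    """Run fn() and return (result, peak bytes traced by tracemalloc during the call)."""
    tracemalloc.start()
    try:
        result = fn()
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


def total_with_list():
    # Materializes every transformed value in a list before summing.
    return sum([x * 2 for x in range(N)])


def total_with_generator():
    # Streams values one at a time, so only the current value and running total are held.
    return sum(x * 2 for x in range(N))


list_total, list_peak = peak_bytes(total_with_list)
gen_total, gen_peak = peak_bytes(total_with_generator)
print(f"list peak: {list_peak / 1e6:.2f} MB, generator peak: {gen_peak / 1e6:.3f} MB")
```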

5. Data Volume & Size

Cause: Large Datasets That Overwhelm the Pipeline’s Capacity

As your datasets grow, they can overwhelm the pipeline, causing slowdowns, particularly in the extraction or loading phases. Too much data in a single batch can also delay processing times or even cause failures.

How to Check:

- Monitor the size of the datasets being processed, particularly during peak times.

- Identify if certain datasets are unusually large or if data volume is growing faster than expected.

Tip: Consider using data partitioning, where data is broken down into smaller chunks. Alternatively, implement batch processing or stream data incrementally to prevent large volumes from overwhelming the pipeline.
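A minimal sketch of batch processing: the generator below yields fixed-size batches from any iterable without loading the full input into memory. The batch size of 10,000 is an arbitrary assumption to tune against your memory budget. (Python 3.12 also ships `itertools.batched`, which yields tuples.)

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(rows: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield lists of at most `size` items without materializing the whole input."""
    if size < 1:
        raise ValueError("size must be >= 1")
    it = iter(rows)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


# Usage: process a large (here simulated) stream 10,000 rows at a time.
for batch in batched(range(25_000), 10_000):
    print(len(batch))  # prints 10000, then 10000, then 5000
```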

6. Network Latency

Cause: Slow Network Connections Between Pipeline Stages

In many ETL pipelines, data needs to travel across networks from one system to another. If your network is slow or experiencing interruptions, it can introduce latency, causing bottlenecks, especially in cloud environments or distributed systems.

How to Check:

- Measure network throughput between different stages of the pipeline.

- Use tools like ping or traceroute to detect slow network hops.

Tip: To mitigate network issues, consider placing pipeline components close to one another (for example, in the same cloud region) or using data compression techniques to reduce the size of data transferred over the network. Additionally, ensure that your network is designed to handle the data throughput needed by your ETL pipeline.
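To get a feel for how much compression can save, this sketch gzips a batch of JSON records with Python's standard `gzip` module before it would be sent between stages. The records are synthetic; real savings depend on how repetitive your data is, and compression trades extra CPU time for less network transfer.

```python
import gzip
import json

# Synthetic batch of records; repeated field names and values compress well.
records = [{"order_id": i, "status": "shipped", "region": "us-east"} for i in range(10_000)]

raw = json.dumps(records).encode("utf-8")
compressed = gzip.compress(raw, compresslevel=6)
print(f"raw: {len(raw):,} bytes, gzip: {len(compressed):,} bytes "
      f"({len(compressed) / len(raw):.1%} of original)")

# The receiving stage decompresses and parses the payload back into records.
restored = json.loads(gzip.decompress(compressed).decode("utf-8"))
assert restored == records  # round trip is lossless
```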

7. Poorly Designed Workflow Orchestration

Cause: Inefficient Scheduling or Task Dependencies

If your ETL pipeline is manually scheduled or the tasks are poorly orchestrated, delays and inefficiencies can arise. Task dependencies can slow things down if they aren’t properly managed or if there are unnecessary delays between tasks.

How to Check:

- Review pipeline scheduling logs and task dependencies.

- Look for missed deadlines, unnecessary delays, or tasks that could run in parallel.

Tip: Use workflow orchestration tools (like Apache Airflow or Prefect) to automate task scheduling and dependency management. They can run independent tasks in parallel and start each task as soon as its upstream dependencies finish, minimizing idle time between stages.
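Orchestrators such as Airflow and Prefect schedule tasks from a dependency graph. The sketch below is not either tool's API; it uses Python's standard `graphlib` module (3.9+) on a hypothetical six-task pipeline to show which tasks do not depend on each other and could therefore run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_orders": {"extract_orders"},
    "transform_customers": {"extract_customers"},
    "join": {"transform_orders", "transform_customers"},
    "load_warehouse": {"join"},
}


def parallel_stages(graph):
    """Group tasks into stages; every task within a stage can run concurrently."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)  # mark the whole stage finished before asking for the next
    return stages


for i, stage in enumerate(parallel_stages(dependencies), start=1):
    print(f"stage {i}: {stage}")
```

Here both extracts land in the first stage and both transforms in the second, so a strictly sequential schedule would leave capacity idle while waiting on work that could overlap.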

8. Inefficient Data Loading Processes

Cause: Slow or Blocked Data Loading Operations

Once your data has been extracted and transformed, it’s time to load it into the destination, often a data warehouse or database.

If this step is not optimized, it can create a significant bottleneck. Common causes include slow write times, missing indexes on the keys used for upserts or merges, or inefficient batch loading mechanisms (such as inserting and committing one row at a time).

How to Check:

- Monitor database write times and look for slow load operations.

- Check for locking issues or database constraints that may be slowing down the insert operations.

- Analyze the load queries to see if they are optimized for performance.

Tip: To speed up data loading, ensure that your destination tables are indexed appropriately (indexes on merge or upsert keys speed up lookups, while unnecessary indexes slow down bulk inserts), and consider using parallel loading techniques. If you’re loading large volumes of data, consider staging the data in smaller batches to avoid overwhelming the system.
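The sketch below shows batched loading with Python's `sqlite3`: rows are inserted with `executemany` and committed once per batch rather than once per row, which avoids per-row transaction overhead. The `sales` table and the batch size are assumptions; for a production warehouse, its native bulk-load path (for example, PostgreSQL's `COPY`) is usually faster still.

```python
import sqlite3
from itertools import islice


def load_in_batches(conn, rows, batch_size=5_000):
    """Insert rows with executemany, committing once per batch instead of per row."""
    it = iter(rows)
    total = 0
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            break
        with conn:  # opens a transaction; commits on success, rolls back on error
            conn.executemany("INSERT INTO sales (id, amount) VALUES (?, ?)", batch)
        total += len(batch)
    return total


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, amount REAL)")
loaded = load_in_batches(conn, ((i, i * 0.5) for i in range(12_345)))
print(loaded, "rows loaded;", conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0], "in table")
```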

9. Lack of Error Handling & Monitoring

Cause: Unclear Error Handling or Failure to Catch Issues Early

Without robust error handling and monitoring, it can be challenging to identify where things went wrong, especially if the pipeline fails without clear feedback. Missing or vague error messages, or failing to catch errors early, could cause delays in fixing issues and lead to prolonged bottlenecks.

How to Check:

- Set up logging and monitoring tools to track pipeline performance and errors at every stage.

- Review error messages and logs for vague or unhelpful error handling that may be hindering troubleshooting.

- Look for recurring errors that could be indicative of an underlying issue.

Tip: Implement centralized logging systems (such as the ELK stack or AWS CloudWatch) that provide clear error messages and tracebacks. Set up alerts for failure conditions and ensure that proper retry mechanisms are in place to automatically recover from transient errors (such as timeouts or brief outages) without manual intervention.
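A retry mechanism for transient failures can be as small as the decorator sketched below, which retries with exponential backoff and jitter and logs each failed attempt so it shows up in your centralized logs. `TransientError`, the attempt limits, and the delays are hypothetical; which exceptions count as transient depends on your source and destination clients.

```python
import logging
import random
import time
from functools import wraps

log = logging.getLogger("etl.retry")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, throttling)."""


def retry(max_attempts=4, base_delay=0.5, max_delay=8.0, retry_on=(TransientError,)):
    """Retry the wrapped call with exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")

    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, max_attempts + 1):
                try:
                    return func(*args, **kwargs)
                except retry_on as exc:
                    if attempt == max_attempts:
                        raise  # out of attempts: let the failure reach alerting
                    delay = min(max_delay, base_delay * 2 ** (attempt - 1))
                    delay *= random.uniform(0.5, 1.0)  # jitter avoids synchronized retries
                    log.warning("%s failed (attempt %d/%d): %s; retrying in %.2fs",
                                func.__name__, attempt, max_attempts, exc, delay)
                    time.sleep(delay)
        return wrapper
    return decorator


calls = {"n": 0}


@retry(max_attempts=4, base_delay=0.01)
def flaky_extract():
    # Simulated source that times out twice, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("source timed out")
    return ["row1", "row2"]


logging.basicConfig(level=logging.WARNING)
print(flaky_extract(), "after", calls["n"], "attempts")
```

Errors outside `retry_on` (for example, a schema mismatch) are raised immediately, since retrying them would only delay the alert.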

10. Software & Platform Limitations

Cause: Restrictions Imposed by Your ETL Tool or Platform

Sometimes, the bottleneck may not be within your pipeline itself but the ETL tool or platform you’re using. It could be limitations in processing power, support for parallel processing, or even platform bugs that cause performance issues.

How to Check:

- Review your ETL platform’s documentation for known performance issues or limitations.

- Test the pipeline with smaller datasets to check if the problem persists.

- Monitor the ETL tool’s resource usage (memory, CPU, disk I/O) and identify if the tool is reaching its limits.

Tip: Evaluate alternative ETL tools or platforms that offer more robust features such as parallel processing, better scalability, and more comprehensive error handling. Regularly check for updates to your current tool that may resolve performance issues.

Step-by-Step Troubleshooting Guide

Identifying the root cause of a bottleneck in your ETL pipeline is just the first step. Once you’ve pinpointed where the slowdown is occurring, you can start taking action to resolve it.

Here’s a general approach to troubleshooting and fixing these issues:

Step 1: Identify the Point of Failure

- Monitor Logs and Metrics: Use monitoring tools to gather data on each stage of the pipeline. Look for timeouts, slow queries, high resource usage, or failed tasks.

- Reproduce the Problem: Try running smaller test batches or focusing on the problem area to see if the issue persists.

Step 2: Narrow Down Likely Causes

- Based on the symptoms and performance data, determine if the problem is with the source, transformation, loading process, or another area.

- Use the information from your checks (e.g., network speed, query performance, resource usage) to confirm the cause.

Step 3: Apply Targeted Solutions

- If the bottleneck is due to inefficient queries, optimize them.

- For resource limitations, consider scaling your infrastructure or breaking tasks into smaller, parallelized chunks.

- Address network or connectivity issues by optimizing your infrastructure or reducing data transfer size.

Step 4: Test and Measure

- After applying fixes, run your pipeline again to see if the issue has been resolved.

- Measure the performance improvements using the same metrics you used during the troubleshooting phase.
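For the measurement step, a simple approach is to run the old and new versions on the same workload and compare median timings, after first confirming that they return the same result. The sketch below uses Python's `timeit`; the deduplication functions are a hypothetical stand-in for whatever step you optimized.

```python
import timeit
from statistics import median


def compare(baseline, optimized, repeat=5, number=10):
    """Time two versions on the same workload; report median seconds per call."""
    # Check that the optimization preserved the output before comparing speed.
    if baseline() != optimized():
        raise ValueError("optimized version returns different results")
    results = {}
    for name, fn in (("baseline", baseline), ("optimized", optimized)):
        runs = timeit.repeat(fn, repeat=repeat, number=number)  # total seconds per run
        results[name] = median(runs) / number
    results["speedup"] = results["baseline"] / results["optimized"]
    return results


# Hypothetical workload: deduplicating IDs while preserving first-seen order.
ids = [i % 200 for i in range(2_000)]


def dedupe_with_list():
    seen = []
    for i in ids:
        if i not in seen:  # linear scan of the list on every check
            seen.append(i)
    return seen


def dedupe_with_set():
    seen, out = set(), []
    for i in ids:
        if i not in seen:  # constant-time average membership check
            seen.add(i)
            out.append(i)
    return out


print(compare(dedupe_with_list, dedupe_with_set))
```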

General Best Practices for Avoiding Bottlenecks

While troubleshooting is a crucial skill, it’s even better to prevent bottlenecks from occurring in the first place. Here are some best practices to keep your ETL pipeline running smoothly:

- Regularly Monitor Your Pipeline: Use monitoring tools to keep track of the health of your pipeline and spot any potential issues early.

- Automate Data Partitioning: Break large datasets into smaller chunks and process them in parallel to reduce the load on your system.

- Use Incremental Processing: Instead of loading and transforming the entire dataset, extract only the changed data (delta) to minimize overhead.

- Optimize Your Queries and Transformations: Ensure that your SQL queries and transformation steps are optimized for speed and efficiency.

- Keep Your Infrastructure Scalable: As your data grows, your infrastructure needs to grow with it. Regularly assess your resource requirements and scale your infrastructure as needed.

- Improve Error Handling and Logging: Implement comprehensive error handling and centralized logging to catch issues early and address them before they escalate into major problems.
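Incremental processing is often implemented with a high-water mark: remember the latest `updated_at` value you extracted and fetch only newer rows on the next run. The sketch below assumes a hypothetical `orders` table whose `updated_at` column is reliably set on every insert and update. It does not capture deletes, and a strict `>` comparison can miss rows committed later with the same timestamp, which is one reason change data capture (CDC) is often preferred for large sources.

```python
import sqlite3


def extract_incremental(conn, last_watermark):
    """Fetch only rows changed since last_watermark; return (rows, new_watermark)."""
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark to the newest timestamp seen; keep the old one if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
conn.execute("CREATE INDEX idx_orders_updated_at ON orders (updated_at)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                 [(1, 10.0, "2024-05-01T10:00:00"), (2, 25.0, "2024-05-01T11:00:00")])

# First run: the watermark starts at the epoch, so everything is extracted.
batch, watermark = extract_incremental(conn, "1970-01-01T00:00:00")
print(len(batch), watermark)  # 2 2024-05-01T11:00:00

# Later, one row is updated and one is inserted; only those two come back.
conn.execute("UPDATE orders SET amount = 30.0, updated_at = '2024-05-02T09:00:00' WHERE id = 1")
conn.execute("INSERT INTO orders VALUES (3, 5.0, '2024-05-02T09:30:00')")
batch, watermark = extract_incremental(conn, watermark)
print([row[0] for row in batch], watermark)  # [1, 3] 2024-05-02T09:30:00
```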

Conclusion

Bottlenecks in your ETL pipeline can significantly slow down data flow, leading to delays in insights and decisions. By identifying common causes and applying targeted solutions, you can keep your pipeline running smoothly and efficiently. Regular monitoring, optimized queries, and scalable infrastructure are key practices to help avoid these bottlenecks in the first place.

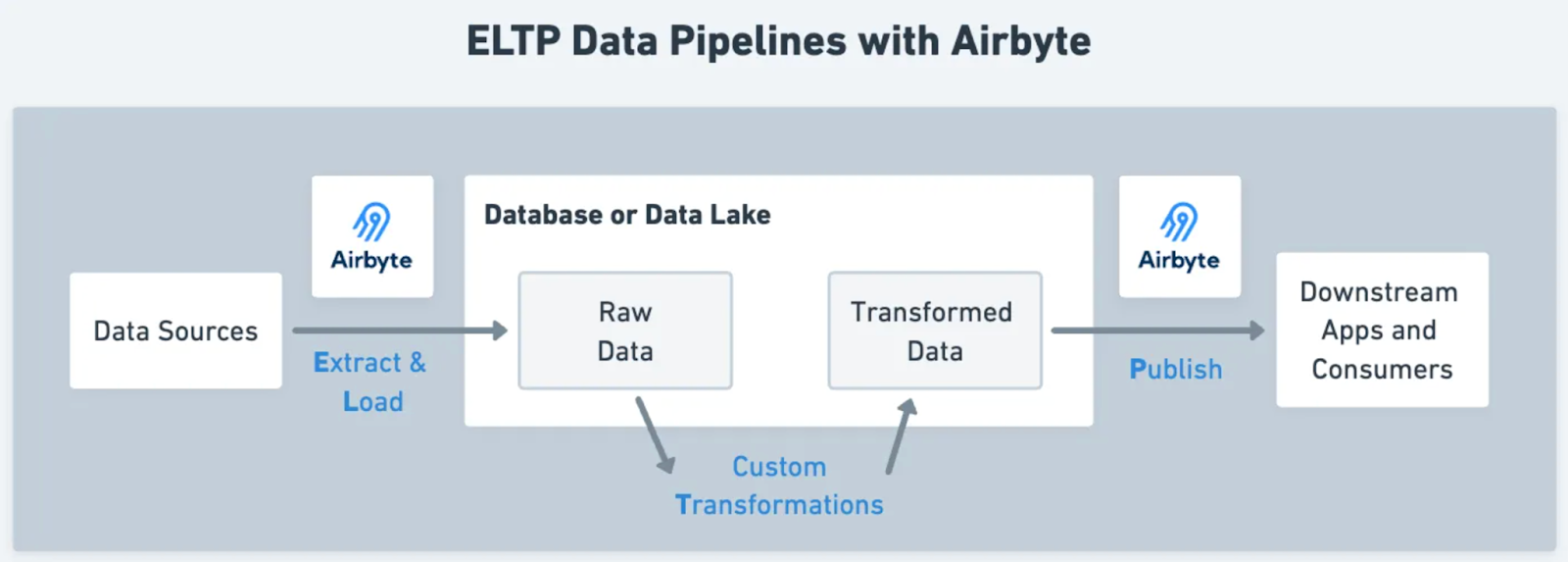

If you’re looking for a powerful and flexible solution to streamline your ETL processes and eliminate bottlenecks, Airbyte is the ideal choice.

With over 600 pre-built connectors, support for both batch and real-time data integration, and a user-friendly interface, Airbyte allows you to manage your data pipelines with ease. Whether you’re working with cloud databases, third-party SaaS tools, or custom sources, Airbyte ensures seamless integration and optimal performance.

Ready to supercharge your ETL pipeline? Start using Airbyte today and experience the difference in performance and reliability!

Frequently Asked Questions (FAQ)

What is an ETL bottleneck?

An ETL bottleneck is any stage in the Extract, Transform, Load process where data movement slows down or stops. It often happens due to slow queries, resource limits, large data volumes, or inefficient orchestration.

How do I know if my ETL pipeline has a bottleneck?

You’ll notice delays in data delivery, missed SLAs, or failed jobs. Monitoring tools, system logs, and query performance metrics can help pinpoint where the slowdown occurs.

What are the most common causes of bottlenecks in ETL pipelines?

The main culprits include inefficient extraction queries, heavy transformations, insufficient hardware, growing data volumes, network latency, and poorly managed workflows.

Can ETL bottlenecks be prevented?

Yes. Regular monitoring, optimizing queries, partitioning large datasets, incremental processing, and keeping infrastructure scalable can significantly reduce the risk of bottlenecks.

When should I scale my infrastructure versus optimizing my pipeline?

If your pipeline is already optimized but still struggles with performance at higher data volumes, scaling infrastructure makes sense. If inefficiencies exist in queries, transformations, or orchestration, optimization should come first.

How can Airbyte help remove ETL bottlenecks?

Airbyte offers 600+ pre-built connectors, real-time and batch data movement, and scalable architecture. It helps teams reduce manual query tuning and orchestration overhead, making it easier to manage data pipelines at scale.
