25 Best ETL Tools: Features & Pricing


The evolution of data storage, processing, and integration, particularly with the advent of cloud computing, has transformed how businesses handle and leverage data. ETL tools have played a crucial role in this shift by enabling organizations to extract, transform, and load data across systems and platforms. Cloud-based ETL tools let businesses store, process, and manage vast amounts of data without significant investment in infrastructure, providing scalability, flexibility, and faster time-to-insight.

The emergence of cloud-based data operations is a driving force behind the modernization of ETL processes. ETL plays a fundamental role in preparing your dataset for further analysis: it structures, refines, and integrates the data into modern data ecosystems. This improves the quality and consistency of your data and supports better strategic decision-making.

The advancement of the ETL process has resulted in the development of sophisticated tools and technologies. If you are looking to choose the best ETL tool for your business, you have arrived at the right place! This article provides a detailed overview of the ETL process and introduces the top 25 ETL tools to help you make informed decisions.

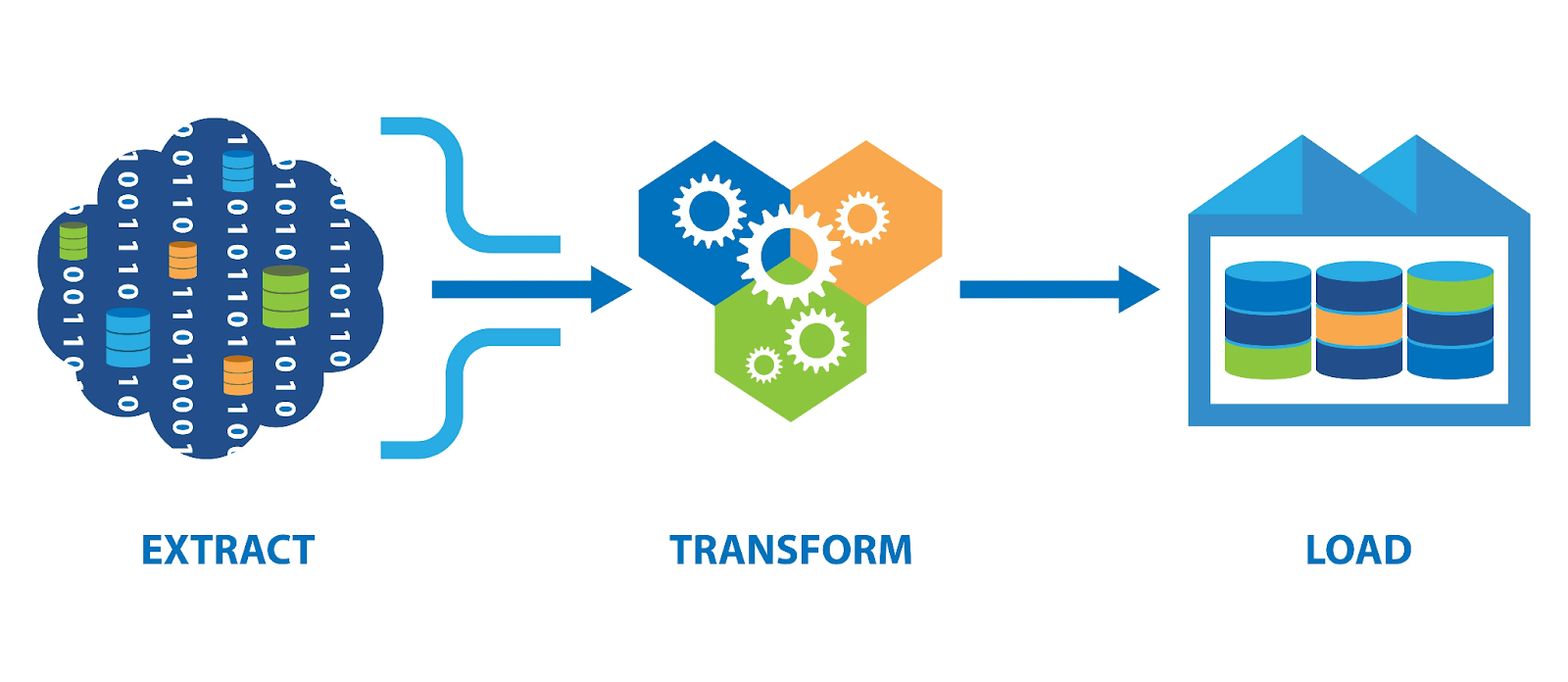

What is ETL?

ETL, short for Extract, Transform, and Load, is a vital data integration process that consolidates data from multiple sources and, when designed carefully, helps safeguard sensitive information along the way. The process involves collecting data, applying standard business rules to clean it and convert it into a consistent format, and finally loading it into a data warehouse or database. Let's look at each stage in more detail.

- Extract: The extraction stage involves retrieving data from different sources, including SQL or NoSQL servers, Customer Relationship Management (CRM) platforms, SaaS applications and software, marketing platforms, and webpages. The raw data is then exported to a staging area, preparing it for subsequent processing.

- Transform: In the transformation stage, the extracted data undergoes a series of operations to ensure it is clean, formatted, and ready for querying in data warehouses. Transformation tasks can include filtering, de-duplicating, standardizing, and validating the data to meet the specific demands of your business.

- Load: In the loading phase, the transformed data is transferred to its destination, typically a data warehouse or database. Loading can move the entire dataset (a full load) or only the changes made since the last run (an incremental load). It can run periodically or continuously, ideally in a way that minimizes the impact on the source and target systems.
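
To make the three stages concrete, here is a minimal sketch in Python using only the standard library. It assumes a hypothetical CSV export (`RAW_CSV`), and an in-memory SQLite database stands in for the warehouse. The function names `extract`, `transform`, and `load` are illustrative and are not any particular tool's API. The load step uses `INSERT OR REPLACE` keyed on `id`, so rerunning it overwrites rows instead of duplicating them.

```python
import csv
import io
import sqlite3
from datetime import date

# Hypothetical raw export from a source system, inlined to keep the sketch self-contained.
RAW_CSV = """id,email,country,signup_date
1, Alice@Example.com ,us,2024-01-05
2,bob@example.com,US,2024-01-06
2,bob@example.com,US,2024-01-06
3,,de,2024-01-07
4,dana@example.com,DE,not-a-date
"""


def extract(raw):
    """Extract: read raw rows into an in-memory staging list of dicts."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows):
    """Transform: standardize, validate, and de-duplicate rows by id."""
    seen_ids = set()
    clean = []
    for row in rows:
        email = row["email"].strip().lower()      # standardize whitespace and casing
        country = row["country"].strip().upper()
        if not email:                             # validate: email is required
            continue
        try:
            date.fromisoformat(row["signup_date"])  # validate: ISO-formatted date
        except ValueError:
            continue
        row_id = int(row["id"])
        if row_id in seen_ids:                    # de-duplicate on the key
            continue
        seen_ids.add(row_id)
        clean.append((row_id, email, country, row["signup_date"]))
    return clean


def load(conn, rows):
    """Load: write rows to the target table; reruns overwrite by primary key."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers ("
        "id INTEGER PRIMARY KEY, email TEXT, country TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract(RAW_CSV)))
    print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
    # [(1, 'alice@example.com', 'US', '2024-01-05'), (2, 'bob@example.com', 'US', '2024-01-06')]
```

Rows 3 and 4 fail validation, and the repeated row 2 is dropped, so two rows land in the table. A production pipeline would typically log or quarantine rejected rows rather than silently skipping them.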

Why are ETL Tools Needed?

ETL processes let you convert raw datasets into the format needed for analytics and for deriving meaningful insights. This supports tasks such as studying demand patterns, tracking shifts in consumer preferences and the latest trends, and ensuring compliance with regulatory standards.

Today, ETL platforms automate data movement, letting you schedule periodic integrations or run them on demand. They free you to focus on important work instead of the repetitive tasks of extracting and loading data. Because picking the right ETL tool for your business matters, take a moment to understand some of the popular options available today.


25 Best ETL Tools

Now that you understand the ETL process, here's a comprehensive guide to the best ETL tools for handling modern data workloads.

1. Airbyte

Airbyte is one of the best data integration and replication tools for setting up seamless data pipelines. This leading open-source platform offers you a wide catalog of 600+ pre-built connectors. Even if you are not proficient at coding, you can quickly load your data from the source to the destination without writing a single line of code.

Although the catalog is extensive, you can still build custom connectors for sources and destinations that are not on the pre-built list, and Airbyte makes this quick. Its Connector Development Kits (CDKs) and no-code Connector Builder let you create custom connectors within minutes. The Connector Builder includes an AI assistant that reads your chosen platform's API documentation and fills out most of the UI fields for you, simplifying connector development.

Airbyte Offering

Core (Always Free / Self-Managed):

Core is the open source, self-managed tier that gives you full control over your deployment without any cost. It includes access to 600+ connectors, support for change data capture (CDC), schema propagation, column selection, cron scheduling, and sub-5 minute sync frequency. This plan is great if you have the internal expertise to run and maintain the system and don’t need managed support or additional enterprise governance features.

Standard (Most Popular):

The Standard plan is a fully managed cloud offering designed for teams who prefer not to run infrastructure themselves. It uses volume-based pricing (charging you by how much data you sync) and provides basic functionality like the full suite of connectors, scheduling, column filtering, and API access. It’s intended for practitioners who want an easy setup and predictable pricing for smaller workloads.

Pro:

The Pro plan is built for organizations that require scale, governance, and tighter control over their data workflows. Rather than volume pricing, Pro (and the higher enterprise tiers) use a capacity (Data Workers) model, which lets you scale based on the number of parallel pipelines rather than pure data volume. With Pro, you also gain features like multiple workspaces, SSO, role-based access control, and more advanced support.

Enterprise Flex (New):

Enterprise Flex is tailored for large organizations needing a balance between flexibility and control. You can choose deployment modes (cloud, hybrid, multi-cloud) while still getting the enterprise capabilities of the Pro tier—such as multiple workspaces, SSO, RBAC, and premium support. The key is that you retain more sovereignty over your deployment and infrastructure choices.

Enterprise Self-Managed:

This plan is for enterprises with very stringent security, compliance, or infrastructure needs. In this tier, Airbyte is deployed entirely on your infrastructure (even air-gapped, on-premises) so that you maintain full control over data, access, and environment. You still benefit from enterprise support, but the infrastructure responsibility is fully yours.

Airbyte’s Key Features

An interesting feature that Airbyte provides is the ability to consolidate data from multiple sources. If you have large datasets spread across several locations or sources, you can bring them all together in your chosen destination on one platform.

This data integration and replication platform has one of the largest data engineering communities, with more than 1,100 contributors and over 20,000 members. Every month, more than 1,000 engineers contribute to building connectors and expanding Airbyte's connector library.

You can also load synced data into a pandas DataFrame (for example, with PyAirbyte, Airbyte's Python library), transform it into a format compatible with your chosen destination, and then load it using Python's extensive library ecosystem. This gives you the flexibility to manage ETL workflows according to your requirements.

Airbyte extends beyond traditional data integration with RAG transformations that convert raw data into vector embeddings through chunking, embedding, and indexing. These embeddings can be stored in vector databases such as Pinecone and Chroma through Airbyte's connector ecosystem. This capability reflects modern AI ETL approaches. It creates a smooth path from unstructured information to structured, AI-ready assets, enabling more sophisticated data operations and intelligent workflows without complex custom engineering.
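
The chunk, embed, and index steps can be illustrated with a small, self-contained Python sketch. It is not Airbyte's implementation. `embed` is a toy hashed bag-of-words function standing in for a real embedding model, and `InMemoryVectorIndex` is a hypothetical stand-in for a vector store such as Pinecone or Chroma. What carries over is the shape of the pipeline:

- split text into overlapping chunks,
- turn each chunk into a normalized vector, and
- retrieve the chunks most similar to a query.

```python
import math
import re
import zlib


def chunk(text, size=8, overlap=2):
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


def embed(text, dim=256):
    """Toy embedding: hashed bag-of-words, L2-normalized. A stand-in for a real model."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class InMemoryVectorIndex:
    """Hypothetical vector store: keeps (vector, text) pairs and ranks by cosine similarity."""

    def __init__(self):
        self.items = []

    def add(self, text):
        self.items.append((embed(text), text))

    def search(self, query, k=1):
        q = embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        ranked = sorted(
            self.items,
            key=lambda item: sum(a * b for a, b in zip(q, item[0])),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]
```

For example, `chunk("a b c d e f g h i j", size=4, overlap=1)` yields three windows that each share one word with the next.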

2. Meltano

Developed in 2018, Meltano is an open-source platform that offers a user-friendly interface for ETL processes. Meltano is pip-installable and comes with a prepackaged Docker container for quick deployment. The tool powers a million pipeline runs each month and is well suited to creating and scheduling data pipelines for businesses of all sizes.

Key Features:

- The platform offers a wide range of plugins for connecting to over 300 natively supported data sources and targets.

- You can also build custom connectors with its extensible SDK, adapting Meltano to your specific needs.

- Meltano aligns with DataOps best practices and is backed by an extensive community around Meltano Hub for continuous development and collaboration.

3. Matillion

Matillion is one of the best cloud-native ETL tools, built specifically for cloud environments. It runs on major cloud data platforms such as Snowflake, Amazon Redshift, Google BigQuery, Azure Synapse, and Delta Lake on Databricks. Because all data jobs run in the cloud environment, Matillion minimizes maintenance and overhead costs, and its intuitive user interface keeps pipeline development simple.

Key Features:

- Matillion supports collaborative development through its built-in Git integration.

- It has an extensive library of over 150 pre-built connectors for popular applications and databases.

- Matillion also offers generative AI features for data pipelines. You can load data into vector databases and connect to large language models (LLMs) to build AI-powered applications.

4. Fivetran

One of the prominent cloud-based automated ETL tools, Fivetran streamlines migrating data from multiple sources to a designated database or data warehouse. The platform supports more than 500 data connectors across various domains and provides continuous data synchronization from source to destination.

Key Features:

- This ETL tool offers a low-code solution with pre-built data models, enabling you to handle unexpected workloads easily.

- Fivetran maintains data consistency and integrity throughout the ETL process by adapting quickly to API and schema changes.

- You get 24/7 access to support specialists who can help you troubleshoot technical issues during migration.

5. Stitch

Stitch is a cloud-based ETL service built on the open-source Singer framework and owned by the data integration company Talend. The platform is well known for its security measures and for fast, code-free data transfer into warehouses.

Key Features:

- Stitch supports simple data transformation and provides over 140 data connectors. However, it does not support user-defined transformations.

- It is known for its data governance measures and supports compliance with HIPAA, GDPR, and CCPA.

- Stitch's entry-level plans are limited in how much data they can handle, so for vast datasets you can subscribe to an enterprise plan.

6. Apache Airflow

Apache Airflow is an open-source framework designed primarily as a workflow orchestrator. Rather than moving data itself, it schedules and coordinates ETL tasks. It integrates with many ETL tools through operators and custom Python logic.

Key Features:

- Airflow lets you build and run workflows represented as Directed Acyclic Graphs (DAGs). A DAG is a collection of individual tasks, defined in a Python script, together with the dependencies that determine the order in which they run. This structure simplifies managing each task in your workflow.

- You can deploy Airflow on either on-premises or cloud servers, giving you the flexibility to choose your infrastructure.

- Airflow includes built-in connectors, delivered as provider packages of hooks and operators, for many industry-standard sources and destinations. You can also create custom plugins for systems that are not natively supported.
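
Airflow's own API is not reproduced here. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` to illustrate the DAG idea: a set of tasks plus dependencies, executed in an order that respects every edge, with cycles rejected. The task functions and the shared `ctx` dict are hypothetical. In Airflow itself, you would declare the tasks inside a `DAG` and express the same chain with its `>>` dependency operator. The scheduler would then also handle retries, scheduling, and parallelism, all of which this sketch leaves out.

```python
from graphlib import TopologicalSorter


# Hypothetical tasks; each reads from and writes to a shared context dict.
def extract(ctx):
    ctx["raw"] = [" 3", "1 ", "2", "1"]


def transform(ctx):
    ctx["clean"] = sorted({int(v) for v in ctx["raw"]})  # parse and de-duplicate


def load(ctx):
    ctx["loaded"] = list(ctx["clean"])


def notify(ctx):
    ctx["message"] = f"loaded {len(ctx['loaded'])} rows"


TASKS = {"extract": extract, "transform": transform, "load": load, "notify": notify}

# Each task maps to the set of upstream tasks that must finish before it runs.
DEPENDENCIES = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "notify": {"load"},
}


def run_dag(tasks, dependencies):
    """Run tasks in an order that respects every dependency; raises graphlib.CycleError on a cycle."""
    order = list(TopologicalSorter(dependencies).static_order())
    ctx = {}
    for name in order:
        tasks[name](ctx)
    return order, ctx


if __name__ == "__main__":
    order, ctx = run_dag(TASKS, DEPENDENCIES)
    print(order)           # ['extract', 'transform', 'load', 'notify']
    print(ctx["message"])  # loaded 3 rows
```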

7. Integrate.io

Integrate.io is a low-code data integration platform offering comprehensive solutions for ETL, API generation, and data insights. Its rich feature set lets you quickly create and manage secure, automated pipelines, making it one of the better-known ETL tools available.

Key Features:

- The platform supports over 100 major SaaS application packages and data repositories, covering a wide range of data sources.

- You can tailor the data integration process on Integrate.io to suit your specific requirements through its extensive expression language, sophisticated API, and webhooks.

- Integrate.io has a Field Level Encryption layer that enables encryption and decryption of individual data fields using unique encryption keys.

8. Oracle Data Integrator

Oracle Data Integrator provides a comprehensive and unified solution for the configuration, deployment, and management of data warehouses. The platform is well-known for ETL processes, facilitating seamless integration and consolidating diverse data sources.

Key Features:

- Oracle Data Integrator supports real-time event processing through its Change Data Capture (CDC) capability, which processes database changes as they occur and keeps your target system up to date.

- This ETL tool can be integrated with Oracle SOA Suite, a unified service infrastructure component for developing and monitoring service-oriented architecture (SOA). Thus, its interoperability with other components of the Oracle ecosystem enhances your data pipeline.

- Oracle Data Integrator employs Knowledge Modules that provide pre-built templates and configurations for data integration tasks, boosting productivity and modularity.
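
Whatever tool captures the changes, the target side of CDC comes down to replaying an ordered stream of inserts, updates, and deletes. The sketch below shows only that apply step, with a Python dict standing in for the target table. `ChangeEvent`, its `lsn` field, and `apply_changes` are hypothetical names, and the capture side (reading the source database's transaction log) is out of scope. Tracking the last applied log position is what lets a rerun skip events it has already processed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChangeEvent:
    lsn: int                    # log sequence number: position in the source's change log
    op: str                     # "insert", "update", or "delete"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # new row image (unused for deletes)


def apply_changes(target, events, last_applied_lsn=0):
    """Apply change events to `target` (a dict keyed by primary key) in log order.

    Events at or below `last_applied_lsn` are skipped, so replaying a batch is harmless.
    Returns the new high-water mark to persist for the next run.
    """
    for event in sorted(events, key=lambda e: e.lsn):
        if event.lsn <= last_applied_lsn:
            continue
        if event.op in ("insert", "update"):
            target[event.key] = dict(event.row)
        elif event.op == "delete":
            target.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")
        last_applied_lsn = event.lsn
    return last_applied_lsn
```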

9. IBM InfoSphere DataStage

InfoSphere DataStage, as a part of the IBM InfoSphere Information Server, is one of the best data integration tools. It leverages parallel processing and enterprise connectivity, ensuring scalability and performance for organizations dealing with huge datasets.

Key Features:

- InfoSphere DataStage provides a graphical interface for designing data flows, making it user-friendly and easy to extract data from diverse sources.

- The tool enables the development of jobs that interact with big data sources, including accessing files on the Hadoop Distributed File System (HDFS) and augmenting data with Hadoop-based analytics.

- InfoSphere DataStage supports real-time data integration, making your workflows more responsive.

10. AWS Glue

AWS Glue is a comprehensive serverless data integration service provided by AWS. It allows you to orchestrate your ETL jobs by leveraging other AWS services to move your datasets into data warehouses and generate output streams.

Key Features:

- The platform facilitates connection to over 100 diverse data sources. It allows you to manage data in a centralized data catalog, making it easier to access data from multiple sources.

- AWS Glue is serverless and lets you run ETL jobs on either the Apache Spark or the Ray engine.

- Another way of running data integration processes on AWS Glue is through table definitions in the Data Catalog. Here, the ETL jobs consist of scripts that contain programming logic necessary for transforming your data. You can also provide your custom scripts through the AWS Glue console.

11. Azure Data Factory

Azure Data Factory is a fully managed, serverless data integration service offered by Microsoft Azure. It is one of the best ETL tools for creating data pipelines and managing transformations without extensive coding.

Key Features:

- Azure Data Factory comes with over 90 built-in connectors that are all maintenance-free.

- The platform supports easy rehosting of SQL Server Integration Services (SSIS) to build ETL pipelines. It also includes built-in Git integration and facilitates continuous integration and continuous delivery (CI/CD) practices.

- Azure Data Factory allows you to leverage the full capacity of underlying network bandwidth, supporting up to 5 Gbps throughput.
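
To put a throughput ceiling in perspective, here is a back-of-the-envelope calculation. It takes the 5 Gbps figure above at face value and assumes decimal units (1 TB = 10^12 bytes). The result is a theoretical minimum: real copy times also depend on the source and sink, the integration runtime, and network conditions.

$$
t = \frac{1\,\text{TB} \times 8\,\tfrac{\text{bit}}{\text{byte}}}{5\,\text{Gbit/s}} = \frac{8 \times 10^{12}\,\text{bit}}{5 \times 10^{9}\,\text{bit/s}} = 1600\,\text{s} \approx 26.7\,\text{min}
$$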

12. Dataddo

Dataddo is a prominent ETL solution suited to most modern cloud-based infrastructures. It integrates with popular cloud platforms such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure, giving you the flexibility to scale and manage your data operations.

Key Features:

- Dataddo's interface is designed to simplify data management tasks while reducing maintenance and operational overhead.

- With a library of over 300 pre-built connectors, Dataddo lets you connect a wide range of applications and databases and keep data flowing smoothly across your ecosystem.

- Dataddo offers AI modules intended to speed up data processing and improve pipeline performance and accuracy, helping you take data-driven actions.

13. Informatica

Informatica is a cloud ETL tool developed to work with the leading data platforms. Its easy-to-use interface is designed for data management tasks. As a cloud service, it eliminates the need to maintain on-premises infrastructure.

Key Features:

- Informatica offers a wide range of collaborative features, including integrated Git support that helps teams work together efficiently.

- Informatica provides more than 200 pre-built connectors, enabling integration with a broad spectrum of applications and databases for greater flexibility and interoperability.

- Informatica provides AI-driven insights to assist with data pipeline development.

14. Qlik

Qlik offers an intuitive, all-in-one platform that turns the traditional ETL process into easy data exploration and analysis for business users. Whereas traditional ETL tools often require complex coding, Qlik emphasizes customizable interfaces and self-service capabilities.

Key Features:

- Qlik removes the need to learn complicated programming through a simple drag-and-drop interface. Business users with little or no technical skill can readily build data visualizations, discover trends, and identify patterns in their data.

- Qlik excels at real-time data integration. Its in-memory architecture lets you interact with the most recent data as soon as it becomes available. This gives you a clear view of business operations and supports proactive decision-making.

15. Skyvia

Skyvia is another cloud-based ETL tool that simplifies the intricate work of data integration. It serves both small and large businesses for everyday data operations. You can integrate data from different sources, transform it, and load it into the desired destination through a unified, easy-to-use interface.

Key Features:

- Skyvia has 200+ pre-built connectors that you can use to extract data easily from a large number of cloud apps, databases, and file storage services.

- Skyvia's drag-and-drop interface is simple enough for beginners.

- Skyvia goes beyond simple data import by offering two-way data synchronization. You can keep your data sources in sync automatically from one place, giving you a consistent, up-to-date view of your data across your ecosystem.
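
Two-way synchronization has to decide what happens when the same record has changed on both sides. The sketch below uses last-write-wins, which is one common policy. It does not describe how Skyvia resolves conflicts, and `sync_two_way` and the `(value, updated_at)` record shape are hypothetical. Deletions are deliberately left out, because propagating them safely requires tombstones or change tracking.

```python
def sync_two_way(a, b):
    """Reconcile two stores so both hold the same records.

    Each store maps key -> (value, updated_at). Keys missing on one side are copied over;
    when both sides differ, the newer `updated_at` wins (ties favor `a`).
    Returns the number of conflicts resolved.
    """
    conflicts = 0
    for key in set(a) | set(b):
        in_a, in_b = a.get(key), b.get(key)
        if in_a is None:
            a[key] = in_b
        elif in_b is None:
            b[key] = in_a
        elif in_a != in_b:
            conflicts += 1
            winner = in_a if in_a[1] >= in_b[1] else in_b
            a[key] = b[key] = winner
    return conflicts
```

Running it a second time on already-synchronized stores finds nothing to change, which is a useful property when syncs run on a schedule.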

16. Estuary

Estuary is a cloud-based ETL tool designed to reinvent data integration for businesses of all sizes. Unlike conventional ETL tools that focus solely on batch processing, Estuary supports both real-time and batch operations, so your data pipelines always deliver the freshest data.

Key Features:

- Estuary aims to be easy to use, offering a drag-and-drop interface through which users of any technical level can build and manage data pipelines.

- Estuary's core strength is real-time data processing. Changes in your source data are captured and delivered to destinations as they happen, so the latest information is always at your fingertips.

17. Singer

Singer is not a traditional ETL tool. It is an open-source specification for building data pipelines in a unified way. Singer defines a common JSON-based message format through which scripts called "taps" extract data from sources and "targets" load it into destinations. Because taps and targets communicate only through this format, they can be written in any programming language and combined freely.

Key Features:

- Singer emphasizes modularity and customization. It separates data extraction (taps) and data loading (targets), enabling users to develop personalized data pipelines, in which each component is adjusted to exactly fit the user's needs.

- Because Singer is open source, it has a lively developer community that maintains a growing library of taps and targets for moving data between many sources and destinations.
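
The tap/target split is easiest to see in code. The sketch below follows the message types in the Singer specification (`SCHEMA`, `RECORD`, and `STATE`, one JSON object per line), but the tap, the target, and the `users` stream are hypothetical. An in-memory buffer replaces the shell pipe (`tap | target`) that normally connects them, and this target simply collects records in a dict instead of writing to a real destination.

```python
import io
import json


def tap_users(out):
    """Hypothetical tap: writes Singer messages for a 'users' stream, one JSON object per line."""
    def emit(message):
        out.write(json.dumps(message) + "\n")

    emit({
        "type": "SCHEMA",
        "stream": "users",
        "key_properties": ["id"],
        "schema": {
            "type": "object",
            "properties": {"id": {"type": "integer"}, "name": {"type": "string"}},
        },
    })
    for row in [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}]:
        emit({"type": "RECORD", "stream": "users", "record": row})
    # STATE lets the next run resume where this one stopped; the bookmark shape is up to the tap.
    emit({"type": "STATE", "value": {"users": {"last_id": 2}}})


def target_collect(lines):
    """Hypothetical target: groups RECORD messages by stream and keeps the latest STATE."""
    tables, schemas, state = {}, {}, None
    for line in lines:
        message = json.loads(line)
        if message["type"] == "SCHEMA":
            schemas[message["stream"]] = message["schema"]
            tables.setdefault(message["stream"], [])
        elif message["type"] == "RECORD":
            if message["stream"] not in schemas:
                # This sketch insists on seeing a stream's schema before its records.
                raise ValueError(f"no SCHEMA seen for stream {message['stream']!r}")
            tables[message["stream"]].append(message["record"])
        elif message["type"] == "STATE":
            state = message["value"]
    return tables, state


if __name__ == "__main__":
    pipe = io.StringIO()  # stands in for the shell pipe between tap and target
    tap_users(pipe)
    pipe.seek(0)
    tables, state = target_collect(pipe)
    print(tables["users"], state)
```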

18. Keboola

Keboola is a multifunctional ETL platform that helps companies across industries simplify data integration and processing. It runs in the cloud and lets customers unify data from different sources, transform it, and analyze it. Keboola can handle both structured and unstructured data.

Key Features:

- Its intuitively designed user interface simplifies building, designing, and managing data pipelines. With custom workflows, users can extract data from various sources, apply business logic, and load the results into the destination they prefer.

- Keboola offers an impressive library of pre-built connectors for moving data between a wide range of sources and applications.

- Users can apply powerful data transformation functions, such as data cleansing, enrichment, and aggregation. The platform's user-friendly interface and flexible data modeling tools make even complex transformations straightforward while maintaining data quality and consistency throughout the process.

19. Apache Kafka

Apache Kafka is a distributed streaming platform known for handling significant data loads in near real time. Originally developed at LinkedIn and later open-sourced under the Apache Software Foundation, it has become essential for building real-time data pipelines and streaming applications.

Key Features:

- Kafka distributes data across multiple nodes, which avoids a single point of failure and lets the system scale out. Replicating data across the cluster keeps processing fault-tolerant even when individual nodes fail.

- With its publish-subscribe messaging model, Kafka supports real-time data processing. This makes it well suited to use cases such as event sourcing, log aggregation, and stream processing.

- Kafka scales horizontally: you can handle higher data volumes by adding more brokers to the cluster, so the infrastructure grows as data consumption increases.
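
Kafka's scaling behavior rests on partitioning. The sketch below is a simplified model, not Kafka client code. It hashes each message key to a partition so that events for the same key stay in order, then spreads partitions across the members of a consumer group. `partition_for`, `produce`, and `assign_round_robin` are hypothetical names. CRC32 stands in for the murmur2 hash that Kafka's default partitioner applies to keys, and replication is not modeled.

```python
import zlib
from collections import defaultdict


def partition_for(key, num_partitions):
    """Map a message key to a partition. Same key -> same partition, so per-key order holds.

    Kafka's default partitioner hashes keys with murmur2; CRC32 is a stdlib stand-in here.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def produce(events, num_partitions):
    """Append (key, value) events to per-partition logs, preserving arrival order within each."""
    partitions = defaultdict(list)
    for key, value in events:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions


def assign_round_robin(num_partitions, consumers):
    """Give each partition to exactly one consumer in the group, spreading them evenly."""
    return {p: consumers[p % len(consumers)] for p in range(num_partitions)}
```

With six partitions, two consumers each own three partitions. Adding a third consumer rebalances the group so that each owns two, which is the same scale-out idea in miniature.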

20. Rivery

Rivery is an ETL platform built for enterprises. It offers robust, user-friendly tools for data management, transformation, and analysis. You can process structured and unstructured data with Rivery, which provides the technical capabilities needed to derive meaningful insights and make informed decisions.

Key Features:

- Rivery integrates easily with a wide variety of data sources and destinations, including cloud data warehouses, so you can join data from multiple sources and prepare it for analytics and reporting.

- The Rivery data pipeline builder is easy to understand, so designing, scheduling, and automating various workflows is no longer complex.

21. Talend

Talend is a comprehensive data integration platform that offers a wide range of solutions for enterprise ETL operations. The platform's unified approach to data integration allows you to manage, transform, and visualize your data across various environments, including on-premises, cloud, and hybrid setups.

Key Features:

- Talend offers a unified platform that combines data integration, data quality, application integration, and master data management capabilities in a single environment.

- The platform provides robust data governance features.

- It supports both ETL and ELT patterns, along with change data capture (CDC).

22. Hevo Data

Hevo Data is another no-code, cloud-based ETL tool for businesses of all sizes. As a fully managed solution, Hevo Data enables you to build automated data pipelines without writing any code, allowing you to focus on analysis rather than data preparation and movement.

Key Features:

- Hevo Data supports over 150 ready-to-use integrations, making it easy to connect various data sources.

- With its schema mapping and auto-schema detection features, Hevo automatically adapts to changes in your source data structure without disrupting your data pipelines.

- Hevo provides data transformation capabilities through its Python-based interface.
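
Automatic schema handling generally follows a loop: infer a schema from incoming records, compare it with what the destination already has, and widen the destination instead of failing. The sketch below is a generic illustration of that loop, not Hevo's implementation. `infer_schema`, `detect_drift`, and `evolve` are hypothetical, and types are tracked as sets of Python type names for simplicity.

```python
def infer_schema(records):
    """Infer field -> set of observed Python type names from a batch of dict records."""
    schema = {}
    for record in records:
        for field, value in record.items():
            schema.setdefault(field, set()).add(type(value).__name__)
    return schema


def detect_drift(known, incoming):
    """Compare a stored schema with one inferred from a new batch."""
    shared = set(known) & set(incoming)
    return {
        "added": sorted(set(incoming) - set(known)),
        "removed": sorted(set(known) - set(incoming)),  # may just mean a sparse batch
        "type_changed": sorted(f for f in shared if not incoming[f] <= known[f]),
    }


def evolve(known, incoming):
    """Widen the stored schema so new fields and types are accepted instead of failing the pipeline."""
    merged = {field: set(types) for field, types in known.items()}
    for field, types in incoming.items():
        merged.setdefault(field, set()).update(types)
    return merged
```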

23. Pentaho

Pentaho Data Integration (PDI) is a codeless data orchestration tool that enables organizations to ingest, blend, orchestrate, and transform data from various sources into a unified format for analysis and reporting.

Key Features:

- PDI offers a low-code environment with a drag-and-drop graphical interface, allowing you to develop and maintain data pipelines efficiently without extensive coding knowledge.

- PDI's metadata injection feature lets you reuse a single transformation template by supplying metadata, such as field names and mappings, at runtime.

- PDI also operationalizes AI/ML models based on Spark, R, Python, Scala, and Weka, extending its capabilities beyond traditional ETL processes.
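
The idea behind metadata injection can be sketched in a few lines of Python: write the transformation once and drive it with metadata instead of copying it for each source. In PDI this happens through steps in its graphical designer. The function and the two metadata dictionaries below are hypothetical and only mimic the concept.

```python
def rename_and_cast(rows, mapping):
    """One reusable transformation template.

    `mapping` is the injected metadata: target_field -> (source_field, cast_function).
    """
    return [
        {target: cast(row[source]) for target, (source, cast) in mapping.items()}
        for row in rows
    ]


# Hypothetical metadata for two feeds with different layouts; the template itself never changes.
CRM_METADATA = {"customer_id": ("Id", int), "email": ("EmailAddress", str.lower)}
SHOP_METADATA = {"customer_id": ("cust_no", int), "email": ("mail", str.lower)}
```

Both feeds come out in the same shape (`customer_id`, `email`), so downstream steps only need to handle one layout.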

24. Portable.io

Portable.io is an Extract, Transform, Load (ETL) platform that specializes in integrating data from various sources into data warehouses such as Snowflake, BigQuery, and Redshift.

Key Features:

- Portable offers over 1,500 connectors, enabling you to seamlessly integrate data from diverse sources.

- The platform provides a user-friendly, no-code interface, allowing you to set up and manage data pipelines without the need for coding expertise.

- Portable ensures secure and reliable data delivery, integrating seamlessly with major data warehouses like Snowflake, BigQuery, Redshift, PostgreSQL, and MySQL.

25. SQL Server Integration Services (SSIS)

SQL Server Integration Services (SSIS) is Microsoft's enterprise ETL and workflow platform, built as a component of the SQL Server database software. SSIS is particularly popular among organizations with significant investments in Microsoft technology stacks.

Key Features:

- SSIS offers a visual design interface through SQL Server Data Tools (SSDT), allowing you to build complex data integration solutions through a drag-and-drop workflow designer.

- The platform includes a comprehensive set of out-of-box data transformations, enabling you to perform various operations like aggregation, merging, conditional splitting, and data cleansing without additional programming.

- SSIS offers powerful debugging and logging capabilities, helping you to identify and resolve issues in your ETL processes quickly and efficiently.

Types of ETL Tools

ETL tools have evolved significantly to meet diverse data integration needs. Understanding the different categories helps data engineers select the right tooling for their specific use cases and infrastructure requirements.

Enterprise Commercial ETL Tools

Enterprise-grade commercial platforms like Informatica PowerCenter, IBM DataStage, and SAP Data Services offer comprehensive data integration frameworks with visual development interfaces, pre-built connectors, and robust metadata management. These tools excel in regulated industries requiring extensive audit trails and compliance features, though they come with higher licensing costs and potential vendor lock-in.

Cloud-Native ETL Platforms

Cloud-native solutions such as AWS Glue, Azure Data Factory, and Google Cloud Dataflow are purpose-built for cloud environments with elastic scalability and serverless architectures. They provide tight integration with cloud ecosystems, automatic scaling based on workload demands, and pay-per-use pricing models that eliminate infrastructure management overhead.

Open-Source ETL Frameworks

Open-source tools like Apache Airflow, Apache NiFi, and Talend Open Studio provide flexibility and cost-effectiveness without licensing fees. Apache Airflow has become the standard for workflow orchestration using Python DAGs, while NiFi offers visual flow-based programming. These tools give data engineers complete control and extensibility, though they require hands-on infrastructure management.

ELT-Focused Tools

Modern ELT tools such as Fivetran and Stitch (for loading) and dbt (for in-warehouse transformation) reverse the traditional paradigm by loading raw data first and then transforming it within the target data warehouse. This approach leverages the computational power of cloud data warehouses and simplifies pipeline architecture. It is particularly effective for analytical workloads, especially when combined with automated handling of schema drift.
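
The difference from ETL is mostly about where the transformation runs. In the sketch below, an in-memory SQLite database stands in for the cloud data warehouse. Raw rows are loaded untouched, and then a SQL statement cleans, casts, and aggregates them inside the engine. The table names and data are hypothetical. In a dbt project, the `SELECT` would typically live in a model file that dbt materializes as a table or view.

```python
import sqlite3

# Transform step, expressed as SQL that runs inside the "warehouse".
TRANSFORM_SQL = """
CREATE TABLE customer_totals AS
SELECT LOWER(TRIM(customer)) AS customer,
       ROUND(SUM(CAST(amount AS REAL)), 2) AS total
FROM raw_orders
WHERE TRIM(amount) <> ''
GROUP BY 1
"""


def elt(conn, raw_rows):
    # Load: land the data as-is, every column as untyped text, with no cleanup beforehand.
    conn.execute("CREATE TABLE raw_orders (customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", raw_rows)
    # Transform: clean, cast, and aggregate inside the database engine.
    conn.execute(TRANSFORM_SQL)
    return conn.execute(
        "SELECT customer, total FROM customer_totals ORDER BY customer"
    ).fetchall()


if __name__ == "__main__":
    rows = [(" Ann", "12.50"), ("bob", "3"), ("ann ", "7.5"), ("bob", "")]
    print(elt(sqlite3.connect(":memory:"), rows))  # [('ann', 20.0), ('bob', 3.0)]
```

Because the raw table is kept, you can rewrite the transformation later and rebuild `customer_totals` without re-extracting anything from the source.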

Real-Time Streaming ETL Tools

Streaming platforms such as Apache Kafka with Kafka Streams, Apache Flink, AWS Kinesis, and Azure Stream Analytics handle continuous data ingestion and processing for near real-time analytics. These tools are essential for low-latency use cases like fraud detection, IoT analytics, and real-time dashboards, though they introduce complexity around state management.
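
State management is where streaming differs most from batch processing: an operator has to remember what it has seen between events. The sketch below counts events per key in fixed (tumbling) time windows and raises an alert when a hypothetical fraud threshold is reached. It is a plain-Python illustration of the concept, not the API of Kafka Streams, Flink, or any other engine, and `TumblingWindowCounter` is a hypothetical name.

```python
from collections import defaultdict


class TumblingWindowCounter:
    """Counts events per key in fixed, non-overlapping time windows and alerts on bursts.

    `counts` is the operator's state; a real streaming engine would checkpoint it and
    expire old windows, both of which this sketch omits.
    """

    def __init__(self, window_seconds, threshold):
        self.window_seconds = window_seconds
        self.threshold = threshold
        self.counts = defaultdict(int)  # (window_start, key) -> events seen so far

    def process(self, timestamp, key):
        """Handle one event; return (window_start, key) the first time the threshold is hit."""
        window_start = timestamp - timestamp % self.window_seconds
        self.counts[(window_start, key)] += 1
        if self.counts[(window_start, key)] == self.threshold:
            return (window_start, key)
        return None
```

Because state is keyed by window rather than by arrival order, a slightly late event still lands in the correct window, as long as that window has not been expired.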

Hybrid ETL Tools

Hybrid solutions combine on-premises and cloud capabilities, enabling data engineers to build pipelines that span multiple environments. Tools like Talend Data Fabric, SnapLogic, and Matillion offer deployment flexibility, allowing organizations to process data where it resides while gradually migrating to cloud infrastructure. This approach is ideal for enterprises with legacy systems and cloud modernization initiatives.

Custom-Built ETL Solutions

Many organizations build custom ETL solutions using Python, Scala, or Java with frameworks like Apache Spark, Pandas, or Apache Beam. This approach provides maximum flexibility for complex transformation logic, optimized performance for specific use cases, and complete architectural control, though it requires significant development effort and ongoing maintenance.

Final Takeaways

ETL procedures form the foundation for data analytics and machine learning processes, making ETL a crucial step in preparing raw data for storage and analysis. You can later use this stored data to generate reports and dashboards in business intelligence tools or to build predictive models for timely forecasts.

ETL tools help you conduct advanced analytics, enhance data operations, and improve the end-user experience. That is why it is important to select the ETL tool best suited to your business, so you can make the right strategic decisions.

Among the ETL tools we've covered, Airbyte stands out as a solid option for teams looking to streamline their data integration workflows. With support from thousands of companies and a robust feature set, it handles both straightforward and complex data pipelines effectively. If you're evaluating ETL solutions, Airbyte offers a free tier to test whether it fits your needs. For larger-scale deployments, their enterprise options are worth exploring.
