Discover How ETL Tools Solve Industry-Specific Challenges


✨ AI Generated Summary

The exponential growth of data presents challenges in integration, quality, and transformation, which ETL (Extract, Transform, Load) tools effectively address by consolidating disparate data sources into a unified, clean, and analyzable format.

- ETL use cases include data warehousing, machine learning preparation, database replication, and IoT data integration.

- Common challenges solved by ETL tools: data quality issues (inaccuracy, inconsistency, duplication, outdatedness), schema alignment, complex transformations, handling unstructured data, and managing large data volumes.

- Airbyte is highlighted as a modern, developer-friendly ETL platform with extensive connectors and customizable pipelines to streamline data integration without coding.

The exponential growth of data is a double-edged sword. While it holds immense potential for insights and innovation, managing it effectively can be a nightmare. A recent report estimates that the global datasphere will reach 175 zettabytes by 2025. This data deluge creates significant challenges for businesses across industries. Imagine a data engineer who wants to analyze customer segmentation data. However, the data is scattered across siloed systems: CRMs, e-commerce platforms, marketing databases, and more. Integrating these disparate sources into a unified data pipeline can be complex and time-consuming, especially if the data formats and structures differ.

This is where ETL tools come in. They allow you to extract data from diverse sources and transform it into a consistent, usable format. Transformation can involve handling missing values, correcting errors, and standardizing units of measurement. Finally, the data is loaded into a central repository.

In this article, you will explore ETL use cases and how ETL tools can help your business overcome unique data challenges.

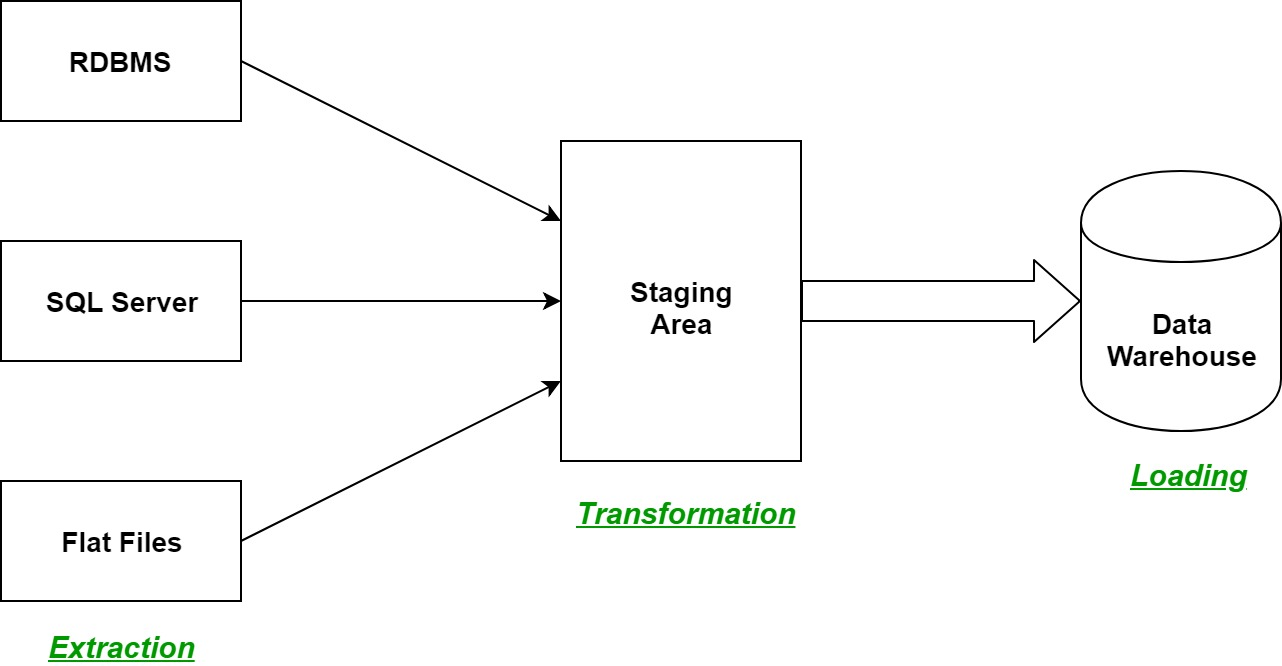

What is ETL?

ETL stands for Extract, Transform, and Load. It's a process used in data warehousing and integration. Here’s an overall breakdown of the ETL process:

Extract

In this stage, data is pulled from various sources, such as databases, spreadsheets, flat files, social media feeds, and more. How the data is extracted depends on its format and location.

Transform

The raw data isn't always compatible with the target system or ready for analysis. It might contain inconsistencies, errors, outliers, and so on. In this stage, the data is cleaned, formatted, and organized consistently. This might involve removing duplicates, correcting errors, converting formats, and applying business rules.

Load

The final stage involves loading the transformed data into a target system, which is often a warehouse or a database. It serves as a centralized repository, allowing you to perform efficient data storage, retrieval, and analysis.
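The three stages can be illustrated with a minimal Python sketch that uses only the standard library. It assumes a small, hypothetical CSV export of orders as the source and an in-memory SQLite database as the target; a production pipeline would read from live systems and load into a warehouse instead.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: a duplicate row, a missing quantity, and messy whitespace/casing.
RAW_CSV = """order_id,customer,quantity,unit_price
1,Alice,2,9.99
2,bob,,5.00
1,Alice,2,9.99
3,  carol ,1,20.00
"""


def extract(raw_text):
    """Extract: parse the CSV source into a list of dicts."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: skip rows missing a quantity, drop duplicates, normalize names and types."""
    seen = set()
    clean = []
    for row in rows:
        if not row["quantity"] or row["order_id"] in seen:
            continue
        seen.add(row["order_id"])
        clean.append(
            (
                int(row["order_id"]),
                row["customer"].strip().title(),
                int(row["quantity"]),
                float(row["unit_price"]),
            )
        )
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, quantity INTEGER, unit_price REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT customer, quantity * unit_price FROM orders ORDER BY order_id").fetchall())
```

Keeping each stage in its own function mirrors the Extract, Transform, Load split, so you can swap the source or target without touching the cleaning logic.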

ETL Use Cases

ETL serves an essential role in various data management scenarios. Here are some common ETL use cases:

Data Warehousing

ETL is a core process in maintaining data warehouses. Consolidating siloed data into a single repository gives you a unified view of information, which supports historical data analysis and reporting.

Machine Learning and Artificial Intelligence

ML models rely heavily on high-quality data for training. ETL helps prepare clean, standardized data for these models. During the transformation process, new features can also be created from the existing data, revealing hidden patterns or trends that can improve the models' performance.
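As an illustration of feature creation during transformation, the sketch below aggregates hypothetical order records into per-customer features (total spend, order count, and average order value). The field names are assumptions, not part of any specific tool.

```python
from collections import defaultdict

# Hypothetical cleaned order records produced by the transform stage.
orders = [
    {"customer_id": "c1", "amount": 120.0},
    {"customer_id": "c1", "amount": 80.0},
    {"customer_id": "c2", "amount": 15.0},
]


def build_features(records):
    """Aggregate raw orders into per-customer features for a model."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for rec in records:
        totals[rec["customer_id"]] += rec["amount"]
        counts[rec["customer_id"]] += 1
    return {
        cid: {
            "total_spend": totals[cid],
            "order_count": counts[cid],
            "avg_order_value": totals[cid] / counts[cid],
        }
        for cid in totals
    }


features = build_features(orders)
print(features["c1"])
```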

Database Replication

One of the fundamental use cases for ETL processes is data replication. This process involves copying data from a source system to another system for backup, distribution, or maintaining consistency across systems.

Data replication ensures that a backup copy of the data is readily available for disaster recovery, synchronizes data to maintain consistency, facilitates data sharing, and supports migration to modern platforms or cloud environments. By leveraging ETL tools for data replication, you can enhance data availability, integrity, and accessibility, which helps streamline various business processes.
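Here is a minimal full-refresh replication sketch, assuming a hypothetical `customers` table and using two SQLite connections to stand in for the source and the replica. Each run copies all rows and upserts them on the primary key with `INSERT OR REPLACE`.

```python
import sqlite3

SCHEMA = "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, email TEXT)"


def replicate_customers(source, replica):
    """Full-refresh replication: copy all rows, upserting on the primary key."""
    replica.execute(SCHEMA)
    rows = source.execute("SELECT id, email FROM customers").fetchall()
    # INSERT OR REPLACE overwrites a replica row that already has the same id.
    replica.executemany("INSERT OR REPLACE INTO customers (id, email) VALUES (?, ?)", rows)
    replica.commit()
    return len(rows)


source = sqlite3.connect(":memory:")
source.execute(SCHEMA)
source.executemany(
    "INSERT INTO customers VALUES (?, ?)", [(1, "a@example.com"), (2, "b@example.com")]
)

replica = sqlite3.connect(":memory:")
replicate_customers(source, replica)
source.execute("UPDATE customers SET email = 'new@example.com' WHERE id = 1")
replicate_customers(source, replica)  # the second run picks up the change
print(replica.execute("SELECT * FROM customers ORDER BY id").fetchall())
```

A full refresh like this does not propagate deletes from the source; dedicated replication tools typically handle that with change data capture or by rebuilding the target table.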

IoT Data Integration

ETL helps manage the high volume and velocity of data generated by IoT devices. These devices can generate different types of data, such as sensor, automation, and location data. Sensor data, for example, includes readings of temperature, pressure, humidity, and more.

ETL tools facilitate extracting data from these devices, cleaning and transforming it into a consistent format, and loading it into a central repository for analysis. You can then use the data for tasks like trend analysis, anomaly detection, predictive maintenance, or gaining insights into consumer behavior and preferences. These insights can help optimize processes and improve the efficiency of various IoT applications.
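The sketch below illustrates two of these steps on hypothetical temperature readings: converting Fahrenheit readings to Celsius so every device shares one unit, then flagging anomalies. For detection it uses a median absolute deviation (MAD) rule rather than a mean-based z-score, because a single extreme value can inflate the standard deviation of a small batch enough to hide itself. The threshold `k=5.0` is an illustrative assumption.

```python
from statistics import median

# Hypothetical readings from two device models: one reports Celsius, the other Fahrenheit.
readings = [
    {"device": "a", "temp": 21.0, "unit": "C"},
    {"device": "b", "temp": 70.0, "unit": "F"},
    {"device": "a", "temp": 22.0, "unit": "C"},
    {"device": "b", "temp": 212.0, "unit": "F"},
    {"device": "a", "temp": 20.5, "unit": "C"},
]


def to_celsius(reading):
    """Convert a reading to Celsius so all devices share one unit."""
    if reading["unit"] == "F":
        return (reading["temp"] - 32) * 5 / 9
    return reading["temp"]


def flag_anomalies(values, k=5.0):
    """Return indexes of values more than k median absolute deviations from the median."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        return []  # no spread to measure against; this sketch skips flagging
    return [i for i, v in enumerate(values) if abs(v - center) > k * mad]


temps_c = [round(to_celsius(r), 2) for r in readings]
print(temps_c)
print(flag_anomalies(temps_c))
```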

What Challenges Do Industries Face, and How Can ETL Tools Solve Them?

ETL processes are crucial for getting data into a usable state for analysis, but they come with their own challenges. Here are some of the most common challenges:

Data Quality Issues

Poor data quality is a roadblock that keeps data from being readily usable for analysis. Quality issues can stem from various sources and can significantly impact the accuracy of your results. Here are common ways data quality can suffer:

Inaccuracy and Incompleteness

Data extracted from sources may contain errors, such as typos, incorrect values, or logical inconsistencies, or it may be missing crucial information. For example, a customer's address might have the wrong zip code, and a sales record without a quantity field would make calculating total sales impossible.

ETL tools can automate validation checks, catching errors on the fly. They can also identify missing data and flag it for review or suggest ways to fill the gaps, like using reference data.
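Here is a minimal sketch of automated validation in Python. It assumes hypothetical sales records with `quantity`, `zip`, and `city` fields, US-style ZIP codes, and a tiny reference table used to fill missing ZIP codes; records that still fail the checks are flagged for review rather than silently dropped.

```python
import re

ZIP_PATTERN = re.compile(r"\d{5}(-\d{4})?")  # assumes US ZIP or ZIP+4 codes

# Hypothetical reference data used to fill gaps (city -> ZIP).
REFERENCE_ZIPS = {"Springfield": "62701"}


def validate(record):
    """Return a list of problems found in one sales record."""
    problems = []
    if record.get("quantity") is None:
        problems.append("missing quantity")
    elif record["quantity"] <= 0:
        problems.append("non-positive quantity")
    zip_code = record.get("zip")
    if zip_code and not ZIP_PATTERN.fullmatch(zip_code):
        problems.append("malformed zip")
    return problems


def fill_from_reference(record):
    """Fill a missing ZIP from reference data when the city is known."""
    if not record.get("zip") and record.get("city") in REFERENCE_ZIPS:
        record = {**record, "zip": REFERENCE_ZIPS[record["city"]]}
    return record


records = [
    {"id": 1, "quantity": 3, "zip": "62701", "city": "Springfield"},
    {"id": 2, "quantity": None, "zip": "6270", "city": "Springfield"},
    {"id": 3, "quantity": 1, "zip": "", "city": "Springfield"},
]

for rec in map(fill_from_reference, records):
    issues = validate(rec)
    if issues:
        print(f"record {rec['id']} flagged for review: {issues}")
```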

Inconsistency

Inconsistent formats, units, or coding across datasets make it difficult to combine and analyze data efficiently. ETL tools can enforce data formatting standards and identify inconsistencies through a combination of predefined rules, data profiling techniques, and automation.

These tools also enable you to define the desired layout of your data elements, such as data types (e.g., text, number, date), formats (e.g., date format: YYYY-MM-DD), etc. Furthermore, they help standardize units of measurement and abbreviations.
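The following sketch shows rule-based standardization in Python: dates in several known formats are normalized to YYYY-MM-DD, pounds are converted to kilograms, and state names are mapped to two-letter abbreviations. The format list and the abbreviation table are hypothetical examples you would extend for your own sources.

```python
from datetime import datetime

# Hypothetical source date formats; extend the list for each source you integrate.
DATE_FORMATS = ["%Y-%m-%d", "%m/%d/%Y", "%d %b %Y"]
LB_TO_KG = 0.45359237
STATE_ABBREVIATIONS = {"california": "CA", "calif.": "CA", "ca": "CA"}


def to_iso_date(value):
    """Normalize a date string to YYYY-MM-DD, trying each known format in turn."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {value!r}")


def to_kg(weight, unit):
    """Convert a weight to kilograms; values already in kg pass through."""
    return weight * LB_TO_KG if unit.lower() in ("lb", "lbs") else weight


def standardize_state(value):
    """Map known state spellings to an abbreviation; otherwise uppercase the input."""
    cleaned = value.strip()
    return STATE_ABBREVIATIONS.get(cleaned.lower(), cleaned.upper())


print(to_iso_date("03/15/2024"), to_kg(10, "lbs"), standardize_state("Calif."))
```

The order of `DATE_FORMATS` matters: a value such as `03/04/2024` is read as March 4 because the only slash-separated format in the list is month-first, so ambiguous sources need an explicit per-source rule.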

Duplication

Duplicate records for the same entity inflate data volume and can lead to inaccurate results. For example, suppose you have a customer database for an online store. Due to data entry errors or multiple accounts created by the same person, you might end up with duplicate entries for the same customer.

ETL tools can help find and remove duplicates by identifying records with matching data points like names, addresses, or IDs. This prevents wasted storage and skewed analysis.
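Here is a minimal deduplication sketch, assuming hypothetical customer records. It matches on a normalized email address when one is present and falls back to a normalized name plus address otherwise, keeping the first record seen for each key. Real matching often needs fuzzier comparisons (for example, treating "St" and "Street" as equal), which this sketch does not attempt.

```python
def normalize_key(record):
    """Build a match key from the email, or from name + address when email is missing."""
    email = (record.get("email") or "").strip().lower()
    if email:
        return ("email", email)
    name = " ".join((record.get("name") or "").lower().split())
    address = " ".join((record.get("address") or "").lower().split())
    return ("name_address", name, address)


def deduplicate(records):
    """Keep the first record for each match key, preserving the original order."""
    seen = set()
    unique = []
    for rec in records:
        key = normalize_key(rec)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


customers = [
    {"id": 1, "name": "Jane Doe", "email": "Jane@Example.com", "address": "1 Main St"},
    {"id": 2, "name": "Jane  Doe", "email": "jane@example.com ", "address": "1 Main Street"},
    {"id": 3, "name": "John Roe", "email": "", "address": "9 Elm St"},
    {"id": 4, "name": "john roe", "email": None, "address": "9 elm st"},
]
print([c["id"] for c in deduplicate(customers)])
```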

Outdatedness

Data that hasn't been updated can become irrelevant over time; for example, stale customer information can lead to failed marketing campaigns. With ETL tools, you can also track data lineage, which shows how data has been transformed over time and helps you assess its freshness.
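Alongside lineage tracking, a simple freshness check can flag records that have not been updated recently. This sketch assumes each record carries a hypothetical `last_updated` timestamp; the one-year threshold is illustrative.

```python
from datetime import datetime, timedelta, timezone


def find_stale(records, max_age, now=None):
    """Return IDs of records whose last_updated timestamp is older than max_age."""
    now = now or datetime.now(timezone.utc)
    return [r["id"] for r in records if now - r["last_updated"] > max_age]


# A fixed "now" keeps the example deterministic.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    {"id": "c1", "last_updated": datetime(2024, 5, 20, tzinfo=timezone.utc)},
    {"id": "c2", "last_updated": datetime(2023, 1, 15, tzinfo=timezone.utc)},
]
print(find_stale(records, max_age=timedelta(days=365), now=now))
```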

Data Transformation Challenges

Transformation challenges are the hurdles you face when trying to convert raw data from various sources into a usable format for analysis. These challenges can add complexity and slow down the ETL process. Here are some common data transformation challenges:

Schema Alignment

Imagine data coming from distinct databases, each with its own structure for storing information. The schemas might use different field names, data types, and units, which makes it challenging to reconcile these differences and create a unified schema. ETL tools use several methods to align schemas for analysis:

- Data Mapping: ETL tools allow you to define a visual or code-based mapping that translates elements from source schemas to the target schema. You can map corresponding fields, define transformations (e.g., converting data types), and handle missing data.

- Standardization Libraries: Some ETL tools offer pre-built libraries with common data standards and transformation functions. These simplify mapping data from various sources, such as addresses (converting them to a standard format) or currencies (converting them to a single currency).

- Metadata Management: These tools can store metadata (data about data) that defines the structure and meaning of data elements in each source schema. This metadata helps you understand how data needs to be transformed to fit the target schema.
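The data mapping idea can be sketched as a configuration that pairs each target field with a source field and a converter. The two source schemas (`crm` and `shop`), their field names, and the date formats below are hypothetical; the point is that both sources end up in the same target shape, with missing values set to `None`.

```python
from datetime import datetime

# Hypothetical mappings: target_field -> (source_field, converter), per source schema.
MAPPINGS = {
    "crm": {
        "customer_id": ("CustID", int),
        "full_name": ("Name", str.strip),
        "signup_date": ("Created", lambda v: datetime.strptime(v, "%m/%d/%Y").date().isoformat()),
    },
    "shop": {
        "customer_id": ("user_id", int),
        "full_name": ("display_name", str.strip),
        "signup_date": ("registered_at", lambda v: v[:10]),  # already ISO 8601
    },
}


def apply_mapping(record, source):
    """Translate one source record into the target schema; missing fields become None."""
    out = {}
    for target_field, (source_field, convert) in MAPPINGS[source].items():
        value = record.get(source_field)
        out[target_field] = convert(value) if value not in (None, "") else None
    return out


crm_row = {"CustID": "42", "Name": " Ada Lovelace ", "Created": "12/10/2023"}
shop_row = {"user_id": 42, "display_name": "Ada Lovelace", "registered_at": "2023-12-10T08:30:00Z"}
print(apply_mapping(crm_row, "crm") == apply_mapping(shop_row, "shop"))
```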

Complex Transformations

Sometimes, data transformations go beyond simple reformatting, and you need more complex manipulations to get the data into the desired format. ETL tools provide the following functionality to handle these complexities:

- Scripting Languages: Many ETL tools allow you to embed scripting languages within the transformation logic. This enables complex calculations, data aggregations (e.g., calculating sums or averages), data cleansing (e.g., removing special characters), and conditional transformations.

- User-Defined Functions (UDFs): ETL tools let you create reusable UDFs for frequently used complex transformations. This promotes code modularity and simplifies complicated transformations.
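As a rough illustration, the reusable functions below play the role of UDFs: one cleanses text, one applies a conditional rule, and one aggregates sums and averages by group. They are plain Python functions; how UDFs are actually registered and invoked varies by ETL tool. The price thresholds and field names are assumptions.

```python
import re
from collections import defaultdict


def clean_text(value):
    """UDF: strip special characters and collapse whitespace."""
    return " ".join(re.sub(r"[^\w\s]", "", value).split())


def price_tier(amount):
    """UDF: conditional transformation that buckets an order amount."""
    if amount >= 100:
        return "high"
    if amount >= 20:
        return "medium"
    return "low"


def summarize(rows, key, value):
    """UDF: aggregate the sum and average of `value`, grouped by `key`."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row[value])
    return {k: {"sum": sum(v), "avg": sum(v) / len(v)} for k, v in groups.items()}


orders = [
    {"region": "North", "product": "Widget!!", "amount": 120.0},
    {"region": "North", "product": " Gadget# ", "amount": 30.0},
    {"region": "South", "product": "Gizmo", "amount": 10.0},
]
for order in orders:
    order["product"] = clean_text(order["product"])
    order["tier"] = price_tier(order["amount"])
print(summarize(orders, "region", "amount"))
```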

Handling Unstructured Data

The data that comes from social media posts, emails, and sensors can be unstructured and require additional processing to extract meaningful insights. By using the following techniques, ETL tools can handle this data:

- Built-in Connectors: Some tools offer pre-built connectors for popular unstructured data sources, such as social media platforms or cloud storage services. These connectors simplify data extraction from the sources.

- Parsing and Pre-processing Techniques: ETL tools can parse unstructured data to extract relevant information. This might involve techniques like text extraction from documents, sentiment analysis, or image recognition.
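Sentiment analysis and image recognition usually require dedicated ML libraries, but the simplest form of parsing, rule-based text extraction, can be sketched with regular expressions. This example pulls hashtags, @-mentions, and email addresses out of a hypothetical social media post into structured fields; the patterns are simplified and will not cover every edge case.

```python
import re

# Lookbehinds keep "#" and "@" inside words (such as an email's "@") from matching.
HASHTAG = re.compile(r"(?<!\w)#(\w+)")
MENTION = re.compile(r"(?<![\w.])@(\w+)")
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def parse_post(text):
    """Turn free-form post text into structured fields."""
    return {
        "hashtags": [tag.lower() for tag in HASHTAG.findall(text)],
        "mentions": MENTION.findall(text),
        "emails": EMAIL.findall(text),
    }


post = "Loving the new release! #ETL #DataEngineering cc @airbyte, questions to support@acme.com."
print(parse_post(post))
```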

Data Volume and Performance

ETL processes often deal with massive amounts of data, which can strain network bandwidth and slow down the entire process. Here is how ETL tools can tackle these challenges:

- Incremental Processing: Instead of processing the entire dataset on every run, ETL workflows can be designed for incremental updates. When only new or changed data is extracted and processed, it significantly reduces processing time and resource consumption, especially for large datasets.

- Parallel Processing: Some ETL tools distribute processing tasks across multiple processors or nodes to use available resources more efficiently. Dividing the workload and running tasks concurrently allows larger datasets to be processed faster.
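Both techniques can be combined in a short sketch. It assumes a hypothetical source whose rows carry an `updated_at` column that serves as the incremental cursor (the watermark), and it splits the changed rows into chunks that a thread pool transforms concurrently.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import datetime

# Hypothetical source rows with an `updated_at` column used as the incremental cursor.
SOURCE = [
    {"id": 1, "updated_at": datetime(2024, 1, 1), "value": 10},
    {"id": 2, "updated_at": datetime(2024, 1, 5), "value": 20},
    {"id": 3, "updated_at": datetime(2024, 1, 9), "value": 30},
]


def extract_incremental(rows, watermark):
    """Return only rows changed after the last watermark, plus the new watermark."""
    changed = [r for r in rows if watermark is None or r["updated_at"] > watermark]
    new_watermark = max((r["updated_at"] for r in changed), default=watermark)
    return changed, new_watermark


def transform_chunk(chunk):
    """Transform applied independently to each chunk (here: double the value)."""
    return [{**r, "value": r["value"] * 2} for r in chunk]


def run(rows, watermark, chunk_size=2, workers=4):
    changed, watermark = extract_incremental(rows, watermark)
    chunks = [changed[i:i + chunk_size] for i in range(0, len(changed), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves chunk order, so results line up with the input.
        results = [row for chunk in pool.map(transform_chunk, chunks) for row in chunk]
    return results, watermark


first, wm = run(SOURCE, watermark=None)  # initial full load
second, wm = run(SOURCE, watermark=wm)  # nothing changed since the last run
SOURCE.append({"id": 4, "updated_at": datetime(2024, 1, 12), "value": 40})
third, wm = run(SOURCE, watermark=wm)  # picks up only the new row
print(len(first), len(second), len(third))
```

Threads suit I/O-bound work such as API calls; for CPU-bound transforms in CPython, `ProcessPoolExecutor` usually scales better because of the global interpreter lock. Also note that a strict `>` comparison on the cursor can miss rows that share the watermark timestamp but arrive later, which production tools need to guard against.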

Streamline Your Data Integration Processes with Airbyte

Analyzing and maintaining data siloed across different platforms can be troublesome and complex. To overcome this problem, Airbyte, a modern data integration and replication platform, enables you to extract data from disparate sources and load it directly into the target repository. This helps you move data from different locations to a single destination, such as a data warehouse or data lake, without writing code.

Airbyte includes several key features:

- Extensive Pre-Built Connectors: Airbyte offers a vast library of more than 350 pre-built connectors for sources and destinations. This collection allows you to connect to a wide range of databases, cloud applications, APIs, and more, streamlining your data extraction process.

- Developer-friendly Pipeline: PyAirbyte is an open-source Python library that simplifies working with Airbyte connectors within Python-based data workflows. You can use PyAirbyte with libraries such as Pandas and NumPy.

- Custom Connectors: With Airbyte's Connector Development Kit (CDK), you can build custom connectors that fit your needs in as little as 10 minutes. This allows you to focus on the unique aspects of each data source instead of writing repetitive code from scratch.

- dbt Transformation: Although Airbyte doesn't have built-in data transformation features, you can use it with dbt to execute advanced data transformations yourself. This lets you handle modifications according to your needs, ensuring a tailored data processing workflow.

Conclusion

Data is valuable but can be messy, inconsistent, and overwhelming in its raw state. That's where ETL tools step in, acting as knights in shining armor. These tools address the challenges above and help deliver clean, reliable data for analysis. With the ETL use cases listed above, you can understand where ETL fits and wield the right tool effectively. Your organization can then transform its data into a powerful weapon for better decision-making.