Mastering Data Management: A Guide to Handling Information in the Digital Age

✨ AI Generated Summary

Effective data management is essential for modern businesses to ensure data integrity, availability, and security throughout its lifecycle, enabling informed decision-making and regulatory compliance. Key components include:

- Data collection, storage, organization, maintenance, security, and governance

- Use of modern technologies like data fabric, data lakes, and integration platforms (e.g., Airbyte)

- Strong data governance with stewardship, ownership, quality management, and lineage tracking

- Robust backup, disaster recovery, and compliance with privacy regulations

- Choosing appropriate storage solutions (on-premises, cloud, hybrid) based on business needs

Implementing these practices helps organizations safeguard data, optimize operations, and gain competitive advantages in a data-driven economy.

Data management is pivotal in modern businesses, streamlining operations and driving informed decision-making. It encompasses a series of practices and procedures to ensure the integrity, availability, and timeliness of data across its lifecycle.

A modern strategy that simplifies and unifies data management across diverse sources is data fabric architecture. This approach creates a centralized framework that facilitates seamless access, integration, and governance of data, enabling organizations to improve data delivery and gain comprehensive insights into business performance.

By understanding and implementing effective data management strategies, organizations can safeguard their critical information assets, comply with regulations, and leverage data for competitive advantage.

In the digital economy, data has transformed from a by-product of business operations into a primary driver of value and innovation. Managing it well involves the processes, strategies, and technologies used to collect, store, organize, protect, and use data effectively and efficiently.

Organizations that recognize the value of data, implement effective data management processes, and foster a data-driven culture are better positioned to thrive in today's data-rich environment.

In this article, we will explore data management in depth. We will explain its key components and processes, along with tips and best practices for each stage.

What Does IT Data Management Involve for Modern Organizations?

Data management is the practice of collecting, organizing, managing, and maintaining an organization's data to support productivity, efficiency, and decision-making. In today's data-driven world, effective data management is crucial for businesses to make sense of their data and find relevance in the noise created by diverse systems and technologies.

The data management process includes various functions that collectively aim to make data accurate, available, and accessible.

Core Components of Data Management

The data management process includes several key components:

- Data Collection involves gathering data from various sources, including internal systems, external providers, and user inputs.

- Data Storage focuses on storing data in a structured manner, whether in databases, data warehouses, or data lakes.

- Data Organization encompasses classifying and organizing data to ensure it is easily retrievable and usable.

- Data Maintenance includes regularly updating and cleaning data to maintain its accuracy and relevance.

- Data Security implements measures to protect data from unauthorized access and breaches.

- Data Governance establishes policies and standards to ensure data integrity and compliance.

Collaborative Approach to Data Management

IT professionals and data management teams typically perform most of the required work, while business users participate to ensure data meets their needs. Data management involves creating internal data standards and usage policies as part of data governance programs.

This collaborative approach ensures that data is not only well-managed but also aligned with the organization's goals and objectives.

How Do You Define Effective Data Management Systems?

Data management refers to processes involved in collecting, storing, organizing, and maintaining data in a systematic and secure manner. A data management system (DMS), which is a software solution or a set of tools, is used to effectively manage data and ensure information is available, reliable, and usable.

A data management platform or system encompasses a wide range of activities related to handling data throughout its lifecycle. Proper data management is crucial for organizations to make informed decisions, gain insights, meet regulatory requirements, and achieve their objectives.

Framework for Data Management Excellence

The DAMA Data Management Body of Knowledge (DAMA-DMBOK) provides a foundational framework for effective data management practices, ensuring data is treated as a strategic asset by outlining essential policies, procedures, and best practices.

The scope of data management can be broad, including various types of data, like structured data such as databases, semi-structured data such as XML files, and unstructured data such as text documents, images, and videos.

Modern Data Management Technologies

Data management also involves handling data across different platforms, such as on-premises servers, cloud environments, and hybrid infrastructures. The supporting technologies have evolved significantly, from relational databases and NoSQL stores to newer concepts like data fabric and data mesh that address the complexities of modern data environments.

Key Facets of Data Management Software

Data management software or tools involve several key facets, each critical in ensuring data is accessible and secure. These include:

- Data Life Cycle Management: managing data through all stages of its existence

- Data Governance: establishing policies and procedures for data oversight

- Data Security and Privacy: protecting sensitive information and ensuring compliance

- Data Storage and Architecture: designing optimal data storage solutions

- Master Data Management (MDM): centralizing critical business data

- Data Integration and Interoperability: connecting disparate data sources

- Data Backup and Disaster Recovery: ensuring business continuity

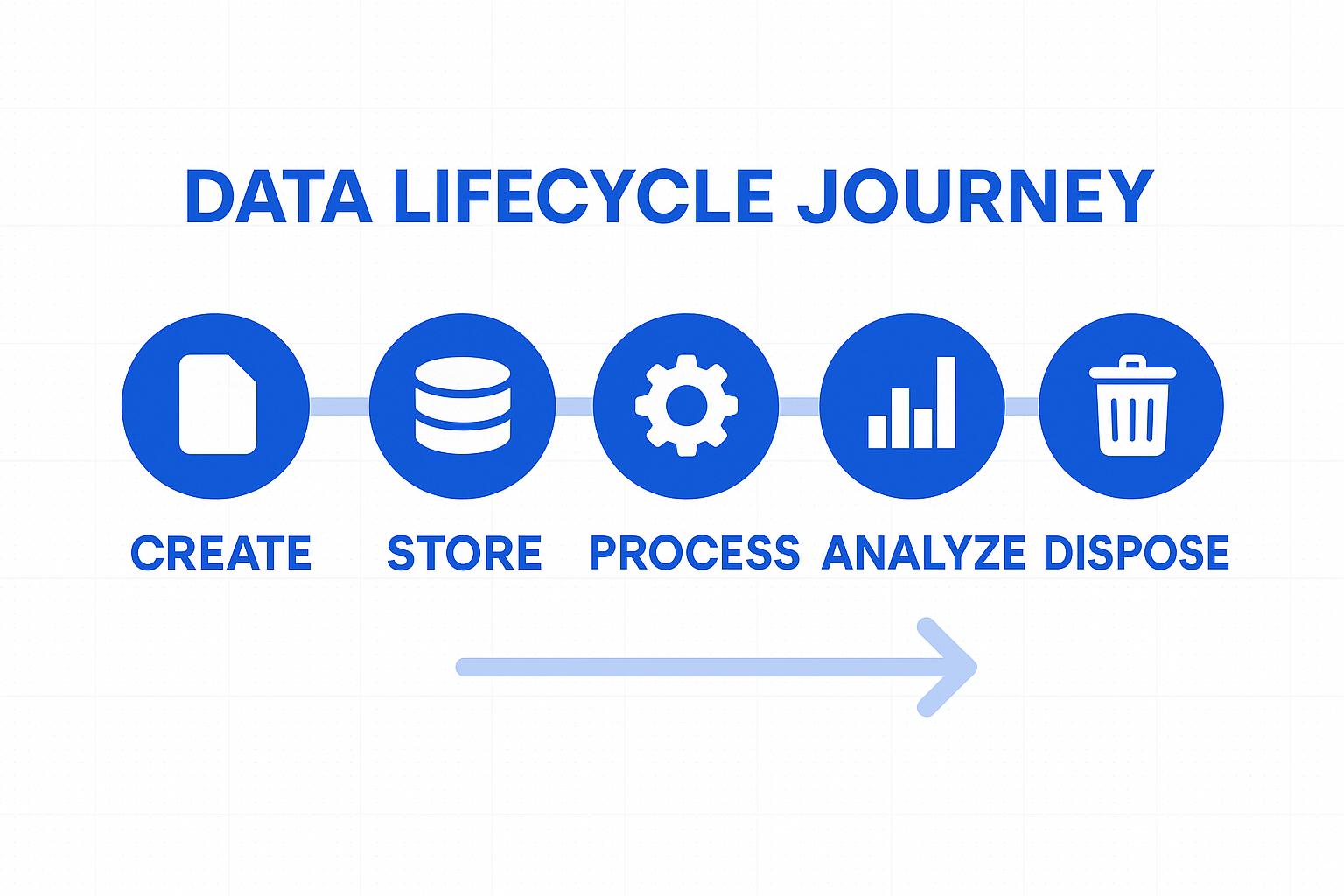

How Does Data Lifecycle Management Support Business Operations?

Data management and data lifecycle management (DLM) are related but distinct concepts. Data management is the broad discipline covering every aspect of handling data assets, whereas DLM focuses specifically on moving data through the distinct stages of its life, from creation to disposal.

Effective DLM ensures that data is stored, organized, and used efficiently while also addressing security, compliance, and cost considerations.

Data Creation and Initial Processing

The data lifecycle typically begins with data creation, where data is generated by source systems, such as sensors, applications, users, or external data providers. Proper documentation of data creation is essential for understanding its source, context, and purpose.

After data is created, it is ingested into the organization's data infrastructure. That infrastructure may include data lakes, data warehouses, databases, or other storage systems.

Data Storage and Organization

Data is stored in various repositories, and the choice of storage technology depends on factors like data structure, data volume, access patterns, and cost considerations.

Once stored, data undergoes processing and analysis to extract insights, make informed decisions, and derive value from it.

Data Presentation and Long-term Management

The results of data analysis are presented through reports, dashboards, or visualizations, making insights accessible to a broader audience.

Over time, some data may become less frequently accessed but must be retained for legal or historical reasons. Archiving involves moving data to lower-cost storage tiers.

Data must be retained according to regulatory requirements and organizational policies through proper data retention and compliance procedures.

Finally, data that is no longer needed or has reached the end of its lifecycle is securely disposed of to minimize security risks and storage costs.
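The archiving, retention, and disposal stages above can be sketched as a simple policy check. This is a minimal illustration, not a prescribed implementation: the thresholds `ARCHIVE_AFTER` and `RETENTION_PERIOD` and the function `lifecycle_action` are hypothetical names and values, and real limits would come from the applicable regulations and organizational policy.

```python
from datetime import date, timedelta

# Hypothetical policy thresholds (assumptions for illustration only).
ARCHIVE_AFTER = timedelta(days=365)          # archive after a year without access
RETENTION_PERIOD = timedelta(days=7 * 365)   # dispose roughly seven years after creation


def lifecycle_action(created: date, last_accessed: date, today: date) -> str:
    """Return 'dispose', 'archive', or 'keep' for a record under the policy above."""
    if today - created >= RETENTION_PERIOD:
        return "dispose"
    if today - last_accessed >= ARCHIVE_AFTER:
        return "archive"
    return "keep"


today = date(2025, 1, 1)
print(lifecycle_action(date(2024, 6, 1), date(2024, 12, 1), today))  # keep
print(lifecycle_action(date(2021, 1, 1), date(2022, 1, 1), today))   # archive
print(lifecycle_action(date(2015, 1, 1), date(2024, 12, 1), today))  # dispose
```

Checking the retention limit first means a record past its retention period is disposed of even if it was accessed recently, which is one possible design choice among several.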

Why Is Data Governance Essential for IT Data Management Success?

Data governance is a comprehensive framework and an essential part of the data management system. It involves defining policies and procedures to ensure data integrity, security, and compliance.

Key components include data stewardship, data ownership, data quality, and data lineage.

Data Stewardship Responsibilities

Data stewardship involves several critical activities that ensure data remains valuable and reliable. Data stewards monitor and maintain data quality across all organizational systems.

They define data standards and guidelines that govern how information is collected, processed, and stored. Additionally, data stewards resolve data-related issues and discrepancies that arise during normal business operations.

Data Ownership and Access Control

Data ownership establishes clear accountability for data assets within the organization. This includes defining data access permissions and controls to ensure appropriate security measures.

Data owners establish data usage policies that govern how information can be utilized across different business functions. They also ensure compliance with data regulations and industry standards.
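As a rough sketch of how access permissions might be tied to data sensitivity, the example below denies access unless a role's clearance is at or above the data's level. The level names, the roles, and the `can_read` function are all assumptions made for illustration; an actual deployment would rely on the access-control features of its database or identity platform.

```python
# Hypothetical sensitivity levels, ordered from least to most sensitive.
LEVELS = ["public", "internal", "confidential", "restricted"]

# Hypothetical mapping of roles to the highest level each may read.
ROLE_CLEARANCE = {"analyst": "internal", "steward": "confidential", "owner": "restricted"}


def can_read(role: str, data_level: str) -> bool:
    """Allow access only when the role's clearance is at or above the data's level."""
    clearance = ROLE_CLEARANCE.get(role)
    if clearance is None or data_level not in LEVELS:
        return False  # deny by default for unknown roles or labels
    return LEVELS.index(clearance) >= LEVELS.index(data_level)


print(can_read("analyst", "internal"))      # True
print(can_read("analyst", "confidential"))  # False
print(can_read("guest", "public"))          # False: unknown role is denied
```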

Data Quality Management

Data quality is essential for reliable decision-making throughout the organization. Poor data quality can lead to misleading insights and costly mistakes that hurt business performance.

Organizations must implement systematic approaches to measure, monitor, and improve data quality across all systems and processes.
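Measuring data quality usually starts with a few simple ratios. The sketch below computes two common ones, completeness and validity, over a list of records. The function names, the sample `customers` data, and the deliberately naive email check are illustrative assumptions rather than a standard.

```python
from typing import Callable, Optional

Record = dict[str, Optional[str]]


def completeness(records: list[Record], field: str) -> float:
    """Share of records where `field` is present and non-empty."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def validity(records: list[Record], field: str, is_valid: Callable[[str], bool]) -> float:
    """Share of non-empty values of `field` that pass the `is_valid` check."""
    values = [r[field] for r in records if r.get(field) not in (None, "")]
    if not values:
        return 0.0
    return sum(1 for v in values if is_valid(v)) / len(values)


# Hypothetical sample data.
customers = [
    {"email": "a@example.com"},
    {"email": ""},
    {"email": "not-an-email"},
    {"email": "b@example.com"},
]

print(completeness(customers, "email"))               # 0.75
print(validity(customers, "email", lambda v: "@" in v))  # two of three non-empty values pass
```

Tracking ratios like these over time, per system and per field, is one way to turn "monitor data quality" into something that can be reported and alerted on.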

Data Lineage Tracking

Data lineage tracks the movement and transformation of data throughout its lifecycle. This visibility helps organizations understand data dependencies and assess the impact of changes.

Effective data lineage documentation supports compliance efforts and enables better troubleshooting when data issues arise.
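Lineage can be pictured as a directed graph in which each dataset points to the datasets built from it. The following sketch walks such a graph to list everything downstream of a dataset, which is the core of an impact assessment. The dataset names in `LINEAGE` and the `downstream` function are hypothetical.

```python
from collections import deque

# Hypothetical lineage edges: each key feeds the datasets listed as its values.
LINEAGE = {
    "crm.contacts": ["staging.customers"],
    "web.signups": ["staging.customers"],
    "staging.customers": ["warehouse.dim_customer"],
    "warehouse.dim_customer": ["reports.churn", "reports.revenue"],
}


def downstream(dataset: str, edges: dict[str, list[str]]) -> list[str]:
    """Return every dataset reachable from `dataset`, in breadth-first order."""
    seen: set[str] = set()
    order: list[str] = []
    queue = deque(edges.get(dataset, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue  # already visited via another path
        seen.add(node)
        order.append(node)
        queue.extend(edges.get(node, []))
    return order


print(downstream("staging.customers", LINEAGE))
# ['warehouse.dim_customer', 'reports.churn', 'reports.revenue']
```

Before changing `staging.customers`, a team could use a query like this to see which reports may be affected.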

How Can Organizations Protect Data Security and Privacy?

Data security and privacy are major concerns for organizations and a core part of a successful data management strategy. Protecting data from unauthorized access is crucial for maintaining trust, avoiding legal consequences, and safeguarding information.

Essential Security Practices

Key practices include data classification systems that categorize information based on sensitivity levels. Access controls ensure that only authorized personnel can view or modify specific data sets.

Encryption protects data both at rest and in transit, while authentication mechanisms verify user identities before granting access. Data masking techniques protect sensitive information in non-production environments.
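To make data masking concrete, here is a small sketch of two techniques that are often used when copying data into non-production environments: partial masking and salted pseudonymization. The function names `mask_email` and `pseudonymize` are hypothetical, and the code is illustrative only; production masking should follow the organization's own security standards.

```python
import hashlib


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        return "***"  # not a recognizable address, so mask everything
    return f"{local[0]}***@{domain}"


def pseudonymize(value: str, salt: str) -> str:
    """Replace a value with a stable salted SHA-256 token, truncated to 16 hex characters."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:16]


print(mask_email("jane.doe@example.com"))  # j***@example.com
print(mask_email("invalid"))               # ***
```

Because `pseudonymize` returns the same token for the same input and salt, masked tables can still be joined on the tokenized column.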

Infrastructure and Monitoring Security

Network and endpoint security measures protect against external threats and unauthorized access attempts. Regular audits help identify vulnerabilities and ensure compliance with security policies.

Incident response planning prepares organizations to respond quickly and effectively to security breaches or data compromises.

Compliance Requirements

Many jurisdictions have implemented data privacy regulations that organizations must follow, such as the European Union's GDPR and California's CCPA. These regulations establish strict requirements for data handling, storage, and processing.

Non-compliance can lead to significant fines, legal action, and reputational damage that affects business operations and customer trust.

What Storage Solutions Support Modern Data Architecture?

Data architecture and storage are crucial to an organization's ability to efficiently manage, access, and leverage its data assets. Modern organizations must choose among various storage options based on their specific requirements and constraints.

Storage Deployment Options

On-premises storage offers organizations full control over their data infrastructure but requires substantial capital investment and ongoing maintenance. This approach provides maximum security control but limits scalability options.

Cloud storage provides scalability, flexibility, and pay-as-you-go pricing through providers like AWS, GCP, and Azure. This option reduces infrastructure management overhead while offering global accessibility.

Hybrid storage combines on-premises and cloud storage approaches, balancing control with scalability to meet diverse organizational needs.

Storage Technology Solutions

Modern organizations can choose from several storage technologies based on their specific requirements. Data warehousing provides a centralized repository specifically designed for structured data and analytical workloads.

Databases serve as transactional stores and include both SQL and NoSQL options depending on data structure and access patterns. Data lakes offer scalable storage solutions that can handle structured, semi-structured, and unstructured data in their native formats.

Each storage solution addresses different use cases and organizational requirements for data access, processing, and analysis.

How Does Master Data Management Ensure Data Consistency?

Master Data Management centralizes and governs critical organizational data such as customer, product, or employee information to ensure accuracy and consistency across all business systems.

Implementation Steps for MDM

Organizations can implement effective MDM by following several key steps. First, they must define clear objectives that align with business goals and data requirements.

Establishing a data governance framework provides the foundation for consistent data management practices. Data profiling and cleansing activities ensure that master data meets quality standards before integration.

Ongoing MDM Operations

Creating a centralized repository consolidates master data from multiple source systems into a single, authoritative source. Integration with other systems ensures that master data remains synchronized across the organization.

Continuous data quality monitoring maintains data accuracy over time, while ongoing improvement processes adapt MDM practices to changing business requirements.
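Consolidating master data often comes down to a survivorship rule that decides which source value wins for each field. The sketch below uses one simple rule, "the newest non-empty value wins", to build a single record per customer. The sample `SOURCE_RECORDS`, the field names, and the `golden_record` function are assumptions for illustration; real MDM tools offer richer matching and survivorship options.

```python
from datetime import date

# Hypothetical customer records from three source systems, keyed by customer_id.
SOURCE_RECORDS = [
    {"customer_id": "C1", "email": "old@example.com", "phone": "", "updated": date(2023, 1, 10)},
    {"customer_id": "C1", "email": "new@example.com", "phone": "", "updated": date(2024, 3, 5)},
    {"customer_id": "C1", "email": "", "phone": "555-0100", "updated": date(2022, 7, 1)},
]


def golden_record(records: list[dict], key: str, fields: list[str]) -> dict[str, dict]:
    """Merge records per key: for each field, the newest non-empty value wins."""
    merged: dict[str, dict] = {}
    # Process oldest first so newer non-empty values overwrite older ones.
    for rec in sorted(records, key=lambda r: r["updated"]):
        target = merged.setdefault(rec[key], {key: rec[key]})
        for field in fields:
            if rec.get(field):
                target[field] = rec[field]
    return merged


print(golden_record(SOURCE_RECORDS, "customer_id", ["email", "phone"]))
```

In this example the merged record for `C1` takes its email from the most recently updated source and its phone number from the only source that has one.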

Why Is Data Integration Critical for Business Success?

Data integration combines data from different sources into a unified view, while interoperability ensures systems can communicate and share data without compatibility issues. These capabilities are essential for organizations that need comprehensive insights from multiple data sources.
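At its simplest, a unified view means mapping each source's field names onto one shared schema. The sketch below shows that idea for two hypothetical sources; the names in `FIELD_MAPS` and the `to_unified` function are invented for illustration and do not reflect any particular platform's API.

```python
# Hypothetical field mappings from each source's column names to a shared schema.
FIELD_MAPS = {
    "crm": {"FullName": "name", "EmailAddress": "email"},
    "shop": {"customer_name": "name", "contact_email": "email"},
}


def to_unified(source: str, row: dict) -> dict:
    """Rename a source row's fields to the shared schema and tag its origin."""
    mapping = FIELD_MAPS[source]
    unified = {target: row.get(src) for src, target in mapping.items()}
    unified["source"] = source
    return unified


rows = [
    to_unified("crm", {"FullName": "Ada", "EmailAddress": "ada@example.com"}),
    to_unified("shop", {"customer_name": "Grace", "contact_email": "grace@example.com"}),
]
print(rows)
```

Once every source is expressed in the same shape, the rows can be loaded into a single table and queried together.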

Modern Integration Challenges

Organizations today face complex data integration challenges as they work with diverse systems, formats, and platforms. Legacy systems often use proprietary formats that don't easily connect with modern applications.

Cloud migration initiatives require integration between on-premises and cloud-based systems. Real-time data requirements demand streaming integration capabilities that traditional batch processing cannot provide.

Streamlining Integration with Modern Platforms

Airbyte is an open-source data integration platform offering 600+ pre-built connectors, batch or streaming ingestion, and comprehensive monitoring features. This helps organizations streamline data engineering, analytics, and operations.

The platform eliminates the need for custom integration development while providing the flexibility to handle complex data transformation requirements. Organizations can focus on deriving business value rather than managing integration infrastructure.

How Do You Ensure Business Continuity Through Backup and Recovery?

Regular backups and robust disaster recovery plans are vital components of comprehensive data management strategies. These practices protect organizations from various risks that could disrupt business operations.

Critical Benefits of Backup and Recovery

Effective backup and recovery systems prevent permanent data loss from hardware failures, human errors, or malicious attacks. They ensure business continuity by enabling rapid restoration of critical systems and data.

Protection against cyberattacks becomes increasingly important as ransomware and other threats target organizational data assets. Meeting regulatory compliance requirements often mandates specific backup and retention practices.

Best Practices for Data Protection

Key practices include implementing automated backups that run consistently without manual intervention. Routine testing ensures that backup systems function properly and that data can be successfully restored when needed.
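One basic form of routine testing is to confirm that a backup copy has the same checksum as the original. The sketch below copies a file and compares SHA-256 digests using only the Python standard library. The function names and the sample file are hypothetical, and a full restore test would go further by actually loading the restored data.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(source: Path, backup_dir: Path) -> bool:
    """Copy `source` into `backup_dir` and check that both files share a SHA-256 digest."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    copy = backup_dir / source.name
    shutil.copy2(source, copy)
    return sha256_of(source) == sha256_of(copy)


with tempfile.TemporaryDirectory() as tmp:
    original = Path(tmp) / "orders.csv"
    original.write_text("id,total\n1,9.99\n")
    print(backup_and_verify(original, Path(tmp) / "backups"))  # True
```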

Documented disaster recovery plans provide clear procedures for responding to various emergency scenarios. Redundancy across multiple locations and systems prevents single points of failure.

Data encryption protects backup data from unauthorized access during storage and transmission. Employee training ensures that staff understand their roles in data protection and recovery procedures.

Clear communication protocols enable coordinated responses during emergency situations that require data recovery activities.

How Can Organizations Build Smarter Data Foundations?

Effective data management isn't just about storage but about unlocking insights, driving action, and protecting what matters. From lifecycle management and governance to integration and recovery, every piece of your data strategy contributes to how well your business adapts, scales, and competes. Airbyte helps make that strategy work with 600+ connectors, seamless ELT pipelines, and automation-first tooling that removes friction across your data stack. Organizations can move fast, stay compliant, and stay ahead by leveraging modern data integration platforms that eliminate traditional bottlenecks.

Frequently Asked Questions

What is data management and why is it important?

Data management is the practice of collecting, organizing, storing, and maintaining data throughout its lifecycle to support business operations and decision-making. It's important because it ensures data accuracy, availability, and security while enabling organizations to derive valuable insights from their information assets. Effective data management helps companies comply with regulations, reduce operational costs, and gain competitive advantages through better data-driven decisions.

What are the key components of a data management system?

A comprehensive data management system includes data collection from various sources, structured storage in databases or data warehouses, organization and classification for easy retrieval, regular maintenance and cleaning, security measures to protect against unauthorized access, and governance policies to ensure compliance. These components work together to create a reliable foundation for business intelligence and analytics activities.

How does data lifecycle management differ from general data management?

Data lifecycle management focuses specifically on managing data through distinct stages from creation to disposal, while general data management encompasses all aspects of handling data assets. DLM includes creation, ingestion, storage, processing, presentation, archiving, retention, and secure disposal phases. This approach ensures that data is handled appropriately at each stage while optimizing costs and maintaining compliance throughout its useful life.

What role does data governance play in organizational success?

Data governance establishes the policies, procedures, and standards that ensure data integrity, security, and compliance across the organization. It includes data stewardship responsibilities, ownership accountability, quality management, and lineage tracking. Strong governance frameworks enable organizations to maintain trusted data assets while meeting regulatory requirements and supporting reliable business decision-making processes.

How can organizations choose the right data storage and architecture solutions?

Organizations should evaluate storage options based on their specific requirements for control, scalability, and cost. On-premises solutions offer maximum control but require significant investment, while cloud storage provides flexibility and pay-as-you-go pricing. Hybrid approaches balance these trade-offs, and organizations can choose from data warehouses for structured data, databases for transactions, or data lakes for diverse data types depending on their use cases and analytical needs.
