What Is Data Quality Management: Framework & Best Practices

✨ AI Generated Summary

Data quality management (DQM) is essential for ensuring data accuracy, consistency, completeness, and validity across distributed systems, enabling reliable analytics and decision-making. Key components include data cleansing, profiling, validation rules, governance, and continuous monitoring, with emerging technologies like blockchain, AI, and edge computing enhancing real-time quality assurance. Platforms like Airbyte facilitate scalable, automated data integration and quality management, while best practices emphasize automation, clear standards, and fostering a data-centric culture to prevent costly data crises and ensure regulatory compliance.

Data professionals at growing enterprises face an increasingly complex challenge: managing data quality across distributed systems while legacy ETL platforms consume significant engineering resources just to maintain basic pipelines. With organizations processing massive volumes of data from CRMs, internal databases, and marketing platforms, poor data governance creates what industry experts call a "data crisis" where extracting actionable insights becomes nearly impossible.

This comprehensive guide explores how to implement a robust data quality management framework that transforms raw, unrefined datasets into reliable business assets. You'll discover proven methodologies for ensuring data accuracy, consistency, and compliance while exploring cutting-edge approaches including blockchain-based quality assurance and edge-computing validation techniques.

What Makes Data Quality Essential for Modern Organizations?

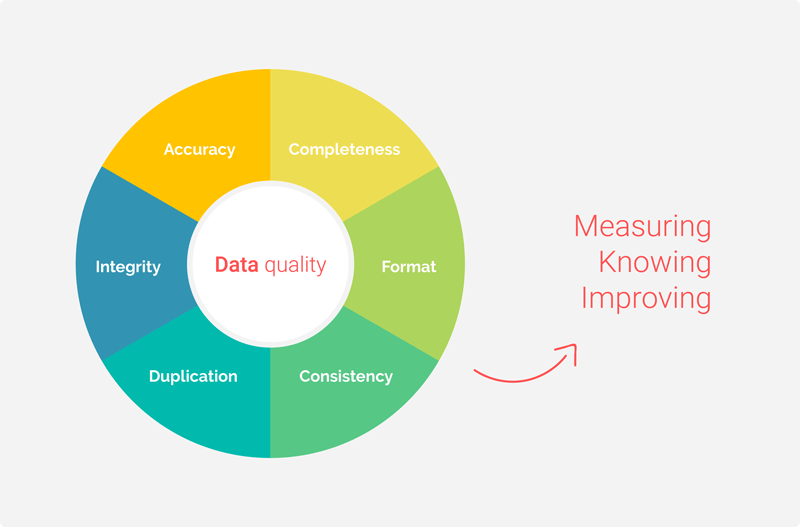

Data quality measures how well your data aligns with your organization's predefined standards across four key dimensions: validity, completeness, consistency, and accuracy. Adhering to these requirements ensures that the data used for analysis, reporting, and decision-making is trustworthy and reliable.

These dimensions work together to create a foundation for reliable analytics. Validity ensures your data conforms to defined formats and business rules. Completeness identifies missing critical information that could skew analysis results. Consistency maintains uniform formatting across systems. Accuracy verifies that data correctly represents real-world entities.
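
As a rough illustration of how three of these dimensions can be turned into numbers, the sketch below scores a small batch of records. The sample records, field names, and the email pattern are assumptions made for this example only. Accuracy is left out because it requires comparing against a trusted reference source.

```python
import re

# Hypothetical sample records; field names are illustrative only.
records = [
    {"id": 1, "email": "ana@example.com", "country": "US"},
    {"id": 2, "email": "not-an-email", "country": "us"},
    {"id": 3, "email": None, "country": "DE"},
]

# Deliberately simple format check, not a full email validator.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(rows, field):
    """Share of rows where the field is present and non-empty."""
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)


def validity(rows, field, pattern):
    """Share of non-empty values that match the expected format."""
    values = [r[field] for r in rows if r.get(field) not in (None, "")]
    if not values:
        return 1.0
    return sum(1 for v in values if pattern.match(v)) / len(values)


def consistency(rows, field):
    """Share of values already in the agreed form (upper-case codes)."""
    values = [r[field] for r in rows if r.get(field)]
    if not values:
        return 1.0
    return sum(1 for v in values if v == v.upper()) / len(values)


print(completeness(records, "email"))        # 2 of 3 rows have an email
print(validity(records, "email", EMAIL_RE))  # 1 of 2 emails is well formed
print(consistency(records, "country"))       # 2 of 3 codes are upper case
```

Scores like these are most useful when tracked over time per field, so a drop stands out against the usual level.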

Closely monitoring these dimensions reduces the time spent fixing errors and redoing work, which in turn improves employee productivity, customer service, and the success of business initiatives.

What Is Data Quality Management?

Data Quality Management involves making data more accurate, consistent, and dependable throughout its lifecycle. It is a framework that helps you regularly profile data sources, validate data against defined rules, and run processes that remove data quality issues.

Modern DQM extends beyond traditional cleansing activities to include proactive monitoring, automated anomaly detection, and predictive quality scoring. This evolution addresses the challenges of real-time data processing, where traditional batch-oriented quality checks create bottlenecks in decision-making workflows.

3 Core Features of Effective Data Quality Management

- Data Cleansing corrects duplicate records, unusual data representations, and unknown data types while ensuring adherence to data-standards guidelines. Data cleansing forms the foundation of any comprehensive quality management approach.

- Data Profiling validates data via statistical methods and identifies relationships between data points. This process reveals patterns and anomalies that might otherwise go undetected.

- Validation Rules ensure data aligns with company standards, reducing risk and supporting reliable decisions. These rules act as guardrails that prevent poor-quality data from entering your systems.
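
To make the profiling step more concrete, here is a minimal sketch that summarizes a single column with the Python standard library. The `profile` function and the `ages` sample are hypothetical and exist only for this illustration. A real profiling tool would cover many columns and the relationships between them.

```python
from collections import Counter
from statistics import mean


def profile(values):
    """Summarize one column: volume, nulls, cardinality, and range."""
    non_null = [v for v in values if v is not None]
    summary = {
        "count": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(set(non_null)),
    }
    if non_null:
        summary.update(
            {
                "min": min(non_null),
                "max": max(non_null),
                "mean": mean(non_null),
                # (value, frequency) of the most frequent entry
                "most_common": Counter(non_null).most_common(1)[0],
            }
        )
    return summary


# Hypothetical column of customer ages with one null and one suspicious value.
ages = [34, 29, None, 41, 29, 230]
summary = profile(ages)
print(summary)
```

In this sample, the maximum of 230 is the kind of anomaly a profile surfaces before anyone writes an explicit rule for it.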

Why Should Your Organization Prioritize Data Quality Management?

Unregulated and poorly managed data leads to a data crisis, making insight extraction and optimization difficult. Data quality management helps you recover from such a crisis and, more importantly, prevent one from developing in the first place. By implementing DQM techniques, you can transform your data into a valuable asset for informed decision-making.

Poor data quality creates measurable business impact. Widely cited industry estimates put the average annual cost of data quality issues at around $15 million per organization, driven by operational inefficiencies, missed opportunities, and damaged customer relationships. Financial institutions face losses from inaccurate loan decisions, while healthcare providers risk compliance violations from inconsistent patient records.

Quality data enables faster decision-making, more accurate analytics, and improved customer experiences that drive competitive advantage. It also ensures regulatory compliance with GDPR, HIPAA, and industry-specific requirements while reducing audit risks and potential penalties.

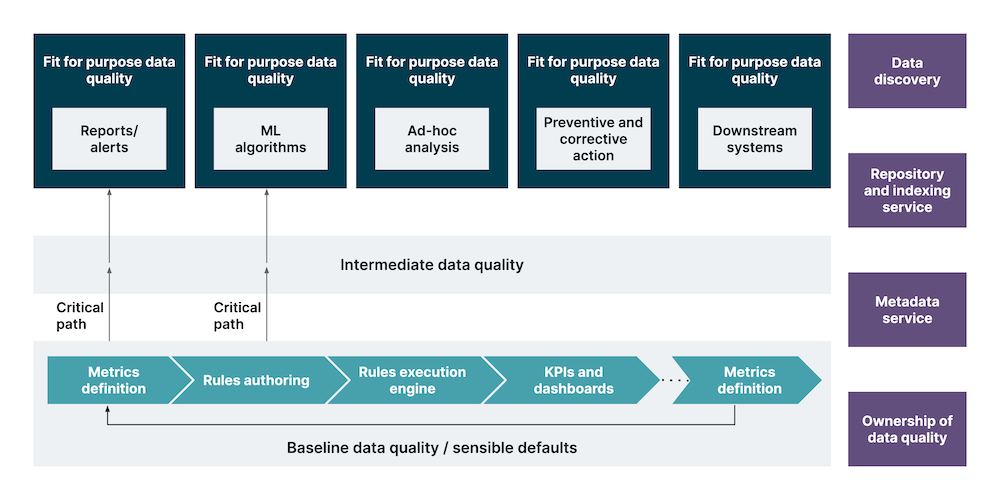

What Are the Essential Steps for Building a Data Quality Framework?

1. Define Your Data Quality Dimensions

Establish criteria including accuracy, completeness, consistency, timeliness, validity, and uniqueness. When relevant, incorporate relevance and accessibility as additional dimensions. These criteria serve as the foundation for all quality assessment activities.

2. Establish Data Quality Rules and Guidelines

Collaborate with stakeholders to draft rules and select appropriate tools, balancing automation with human oversight. Clear guidelines ensure consistent application of quality standards across all data sources and systems.
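
One way to keep rules reviewable by stakeholders is to declare them as data rather than bury them in pipeline code. The sketch below assumes a hypothetical order record with `order_id`, `amount`, and `currency` fields. The `Rule` class and the rule names are illustrative, not part of any specific tool.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Rule:
    name: str                      # identifier shown in reports
    field: str                     # record field the rule inspects
    check: Callable[[Any], bool]   # returns True when the value passes


# Hypothetical rules for an order record.
RULES = [
    Rule("order_id_present", "order_id", lambda v: v is not None),
    Rule(
        "amount_non_negative",
        "amount",
        lambda v: isinstance(v, (int, float)) and v >= 0,
    ),
    Rule("currency_known", "currency", lambda v: v in {"USD", "EUR", "GBP"}),
]


def validate(record, rules=RULES):
    """Return the names of every rule the record violates."""
    return [r.name for r in rules if not r.check(record.get(r.field))]


print(validate({"order_id": 7, "amount": -5, "currency": "USD"}))
print(validate({"order_id": None, "amount": 10, "currency": "JPY"}))
```

Because each rule has a name, violations can be counted per rule and routed to whoever owns that standard.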

3. Implement Data Cleansing and Enrichment

Remove invalid data and enrich datasets with relevant external information, leveraging predictive analytics for proactive fixes. This step addresses existing quality issues while preventing future problems.
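
A small sketch of this step, under simplifying assumptions: records are dictionaries with `email` and `country` fields, an email without `@` counts as invalid, and `COUNTRY_NAMES` stands in for an external reference dataset. All of these names are hypothetical.

```python
# Hypothetical reference data used for enrichment.
COUNTRY_NAMES = {"US": "United States", "DE": "Germany"}


def cleanse_and_enrich(rows):
    """Normalize, drop invalid rows, deduplicate by email, add country name."""
    seen = set()
    cleaned = []
    for row in rows:
        email = (row.get("email") or "").strip().lower()
        if "@" not in email:
            continue  # remove invalid data
        if email in seen:
            continue  # remove duplicates after normalization
        seen.add(email)
        code = (row.get("country") or "").strip().upper()
        cleaned.append(
            {
                "email": email,
                "country": code,
                # Enrichment: None when the code is not in the reference data.
                "country_name": COUNTRY_NAMES.get(code),
            }
        )
    return cleaned


raw = [
    {"email": " Ana@Example.com ", "country": "us"},
    {"email": "ana@example.com", "country": "US"},
    {"email": "broken", "country": "DE"},
    {"email": "bo@example.org", "country": "de"},
]
print(cleanse_and_enrich(raw))
```

Normalizing before deduplicating matters here: the first two rows only collapse into one once case and whitespace are removed.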

4. Implement Data Governance and Stewardship

Allocate roles, version controls, access rights, and recovery measures while using metadata-driven approaches for policy propagation. Strong governance ensures accountability and maintains quality standards over time.

5. Continuous Monitoring and Improvement

Track KPIs with real-time alerting systems that distinguish normal variation from genuine quality problems. Regular monitoring enables rapid response to quality degradation and supports continuous improvement initiatives.
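
One simple way to separate normal variation from a genuine problem is a control-limit check on a tracked KPI. The sketch below assumes a daily null rate as the KPI and a three-standard-deviation threshold. Both are illustrative choices, and production systems often need to account for seasonality and trends as well.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` when it lies more than `threshold` sample standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate variation
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical daily null rates for one column over the past week.
null_rates = [0.010, 0.012, 0.009, 0.011, 0.010, 0.013, 0.011]

print(is_anomalous(null_rates, 0.012))  # within normal variation
print(is_anomalous(null_rates, 0.050))  # far outside the usual range
```

Alerting only on the second kind of reading keeps the alert volume low enough that people continue to act on it.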

How Can Blockchain Technology Transform Data Quality Assurance?

Immutable Data Lineage and Provenance Tracking

Blockchain records every transformation as a cryptographically signed transaction, producing a tamper-evident audit trail in which any later alteration of a recorded step is detectable. This capability becomes particularly valuable in regulated industries where data lineage must be documented and verified.
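
The core idea behind such an audit trail can be sketched with a plain hash chain, where each lineage entry includes the hash of the previous one. This is a deliberately reduced illustration: it has no digital signatures, no distribution across nodes, and no consensus, all of which a real blockchain adds. The function names and entry fields are assumptions for this example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry's predecessor


def _digest(step, details, prev_hash):
    payload = json.dumps(
        {"step": step, "details": details, "prev": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(chain, step, details):
    """Append a lineage entry linked to the hash of the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append(
        {
            "step": step,
            "details": details,
            "prev": prev_hash,
            "hash": _digest(step, details, prev_hash),
        }
    )
    return chain


def verify(chain):
    """Recompute every hash; any edited entry breaks the chain."""
    prev_hash = GENESIS
    for entry in chain:
        expected = _digest(entry["step"], entry["details"], prev_hash)
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


lineage = []
append_entry(lineage, "extract", {"source": "crm", "rows": 1200})
append_entry(lineage, "dedupe", {"rows_removed": 37})
print(verify(lineage))

lineage[0]["details"]["rows"] = 1100  # simulate tampering
print(verify(lineage))
```

The second check fails because the stored hash of the first entry no longer matches its edited contents.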

Consensus-Based Quality Validation

Distributed networks allow multiple parties to validate data quality through smart contracts and consensus mechanisms. This approach reduces reliance on single points of failure and increases confidence in quality assessments.
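
Stripped of the networking and smart-contract layers, consensus-based validation comes down to independent parties each checking a record and a quorum rule deciding the outcome. The sketch below assumes a two-thirds quorum and three hypothetical validator functions. It runs in a single process, so it illustrates only the voting logic.

```python
def quorum_accepts(record, validators):
    """Accept the record when at least two thirds of validators approve."""
    votes = sum(1 for check in validators if check(record))
    required = (2 * len(validators) + 2) // 3  # integer ceiling of 2n/3
    return votes >= required


# Hypothetical validators, each standing in for a separate party.
def has_positive_amount(record):
    return record.get("amount", 0) > 0


def has_known_currency(record):
    return record.get("currency") in {"USD", "EUR"}


def has_counterparty(record):
    return bool(record.get("counterparty"))


validators = [has_positive_amount, has_known_currency, has_counterparty]

print(quorum_accepts({"amount": 50, "currency": "USD"}, validators))
print(quorum_accepts({"amount": -1, "currency": "JPY", "counterparty": "ACME"}, validators))
```

With three validators the quorum is two approvals, so a record can pass even when one party disagrees.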

Decentralized Identity and Data Sovereignty

Self-sovereign identity systems shift quality responsibility to users, requiring privacy-preserving validation methods like zero-knowledge proofs. Organizations can validate data quality without exposing sensitive information.

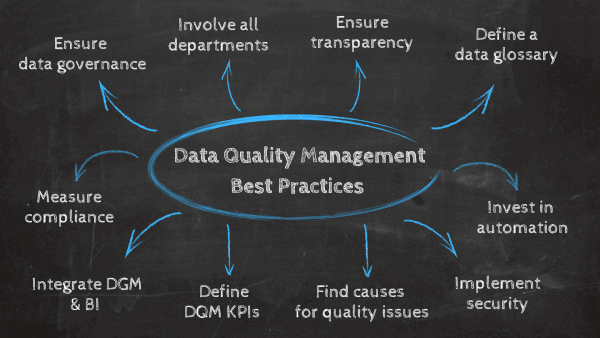

What Best Practices Ensure Long-Term Data Quality Success?

- Define Data Standards Clearly by documenting naming conventions, formats, value ranges, and making them accessible to all stakeholders. Clear standards eliminate ambiguity and ensure consistent data interpretation across teams.

- Automate Processes using predictive quality scoring and automated correction workflows. Automation reduces manual effort while ensuring consistent application of quality rules.

- Regularize Data Cleaning by scheduling cleaning based on data velocity and impact. Regular maintenance prevents quality degradation and reduces the accumulation of data debt.

- Utilize Data Quality Tools that specialize in automated validation and monitoring. Data quality tools provide capabilities that would be difficult to build in-house.

- Encourage a Data-Centric Culture by training employees and creating incentives for quality improvements. Cultural change ensures that quality becomes everyone's responsibility, not just the data team's.

- Monitor and Audit Data Quality through dashboards that provide real-time visibility into quality metrics. Continuous monitoring enables proactive quality management and rapid issue resolution.

How Does Edge Computing Impact Real-Time Data Quality Management?

Resource-Constrained Quality Processing

Edge devices perform lightweight validation while streaming data for deeper analysis. This distributed approach enables quality checks at the point of data generation, reducing latency and network requirements.
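
A lightweight check of this kind might combine a fixed range test with a spike test against a short rolling window, which keeps memory use constant. The `EdgeValidator` class, its thresholds, and the temperature readings below are hypothetical values chosen for illustration.

```python
from collections import deque


class EdgeValidator:
    """Constant-memory validation suited to a constrained device."""

    def __init__(self, low, high, max_jump, window=5):
        self.low = low
        self.high = high
        self.max_jump = max_jump
        self.recent = deque(maxlen=window)  # only the last `window` good values

    def check(self, value):
        if not (self.low <= value <= self.high):
            return "out_of_range"
        if self.recent:
            baseline = sum(self.recent) / len(self.recent)
            if abs(value - baseline) > self.max_jump:
                return "spike"
        self.recent.append(value)  # only accepted readings update the baseline
        return "ok"


# Hypothetical temperature sensor: valid range -40..85, max jump of 10 degrees.
validator = EdgeValidator(low=-40, high=85, max_jump=10)
readings = [20.0, 21.0, 150.0, 45.0, 22.0]
flags = [validator.check(r) for r in readings]
print(flags)
```

The device can forward each reading together with its flag, leaving heavier analysis to a central system.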

Federated Learning and Distributed Quality Assurance

Collaborative model training across devices improves quality without sharing raw data. This approach preserves privacy while enabling organizations to benefit from collective quality intelligence.
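
Full federated learning trains a shared model, but the privacy principle can be shown with something simpler: each device sends only summary statistics, and a coordinator combines them into global figures. The sketch below is that simplified aggregation, not a federated learning implementation, and the device data is made up.

```python
import math


def local_summary(values):
    """Computed on the device: count, sum, and sum of squares only."""
    return (len(values), sum(values), sum(v * v for v in values))


def combine(summaries):
    """Computed centrally: global mean and population standard deviation."""
    n = sum(s[0] for s in summaries)
    total = sum(s[1] for s in summaries)
    total_sq = sum(s[2] for s in summaries)
    mean = total / n
    variance = max(total_sq / n - mean * mean, 0.0)  # guard against rounding
    return mean, math.sqrt(variance)


# Hypothetical raw readings that never leave their devices.
device_a = [1.0, 2.0, 3.0]
device_b = [4.0, 5.0]

global_mean, global_std = combine(
    [local_summary(device_a), local_summary(device_b)]
)
print(global_mean, global_std)
```

The resulting global mean and spread could then be pushed back to devices as shared thresholds for local quality checks.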

Latency-Sensitive Quality Validation

Edge-optimized algorithms validate data within milliseconds for safety-critical systems. Real-time validation becomes essential in applications where data quality directly impacts operational safety.

Security and Quality Integration at the Edge

Zero-trust architectures verify every device and request before data is accepted, while homomorphic encryption allows certain validation computations to run directly on encrypted data. Together, these techniques let quality checks occur without compromising data security or privacy.

How Can Airbyte Enhance Your Data Quality Management Strategy?

Airbyte is an open-source data-integration platform with more than 600 connectors and a Connector Development Kit. The platform provides essential capabilities that support comprehensive data quality management.

Airbyte's unique features that can enhance your DQM process:

- Unified Data Movement: Airbyte's groundbreaking capability to merge structured records with unstructured files in a single connection preserves critical data relationships while generating metadata for AI training and compliance requirements. This unified approach eliminates quality gaps that typically occur when managing these data types separately.

- Multi-Region Deployment: Self-Managed Enterprise users can deploy pipelines across isolated regions while maintaining centralized governance. This supports compliance with regulations such as GDPR and HIPAA and reduces cross-region data transfer costs that can impact quality budgets.

- Direct Loading Technology: The platform's Direct Loading feature eliminates redundant deduplication operations in destination warehouses, reducing costs by up to 70% while speeding synchronization by 33%. This efficiency enables more frequent quality checks without proportional cost increases.

- Complex Transformations: Airbyte allows you to integrate with platforms like dbt, enabling you to perform more complex transformations within your existing workflows. You can also leverage its SQL capabilities to create custom transformations for your pipelines that incorporate quality validation logic.

- User-Friendly: Airbyte's intuitive interface allows you to get started without extensive technical expertise. This ensures that various stakeholders within your organization can effectively monitor the data quality processes and understand pipeline status.

- Scalability and Flexibility: You can scale Airbyte to meet your organization's growing needs and business requirements. This adaptability is critical to handling increasing data volumes and changing workloads, a vital capability to avoid data crises. Based on your current infrastructure and budget, you can deploy Airbyte as a cloud or self-managed service.

- Schema Change Management: You can configure Airbyte's settings to detect and propagate schema changes automatically. Airbyte reflects changes in source data schemas into your target system without manual intervention. This eliminates the need for manual schema definition and mapping, saving valuable time and reducing errors in your DQM process.
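
To illustrate what detecting a schema change involves in general terms, the sketch below compares two snapshots of a table schema. It is a generic illustration and does not use or represent Airbyte's API. The `diff_schema` function and the column names are hypothetical.

```python
def diff_schema(old, new):
    """Compare two {column: type} mappings and report the differences."""
    added = {col: typ for col, typ in new.items() if col not in old}
    removed = {col: typ for col, typ in old.items() if col not in new}
    retyped = {
        col: (old[col], new[col])
        for col in old.keys() & new.keys()
        if old[col] != new[col]
    }
    return {"added": added, "removed": removed, "retyped": retyped}


# Hypothetical schema snapshots taken before and after a source change.
previous = {"id": "integer", "email": "string", "signup_date": "string"}
current = {"id": "integer", "email": "string", "signup_date": "date", "plan": "string"}

changes = diff_schema(previous, current)
print(changes)
```

Added columns are usually safe to propagate automatically, while removed or retyped columns are the ones most likely to need review before downstream models break.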

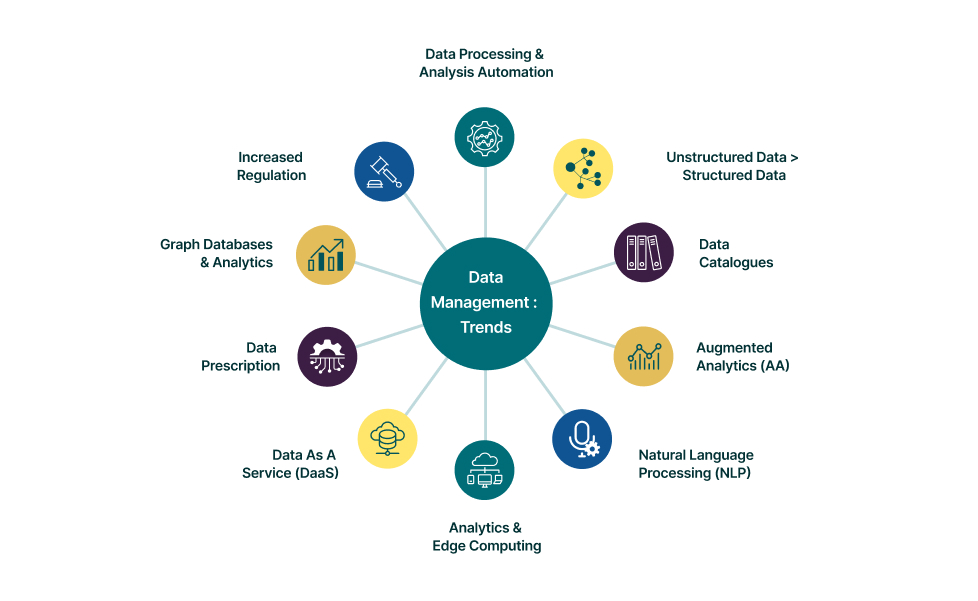

What Emerging Trends Are Shaping the Future of Data Quality Management?

Machine Learning (ML) and Artificial Intelligence (AI)

AI and ML automation introduce predictive quality scoring and self-learning rules that adapt to changing data patterns. These technologies enable proactive quality management that anticipates issues before they impact business operations.

Proactive Data Observability

Modern observability platforms provide real-time anomaly detection and lineage mapping, creating comprehensive visibility into data health. Organizations can identify and resolve quality issues before they propagate downstream.

Democratization of Data

Accessing high-quality data through no-code quality tools empowers business users and increases data literacy across organizations. This trend reduces dependency on technical teams for routine quality management tasks.

Cloud-Based Data Quality Management

Cloud-native solutions offer vendor-agnostic, scalable platforms that adapt to organizational growth. Cloud deployment eliminates infrastructure management overhead while providing enterprise-grade capabilities.

Focus on Data Lineage

Advanced lineage tracking with active metadata enables automated policy enforcement and impact analysis. Organizations gain deeper insights into how quality issues propagate through their data ecosystems.

DQaaS (Data Quality as a Service)

DQaaS provides AI-powered, cloud-native quality engines that require minimal setup and maintenance. This approach makes enterprise-grade quality management accessible to organizations of all sizes.

Conclusion

A robust data quality framework preserves data consistency, accuracy, and integrity over time while adapting to evolving business needs. As decentralized ecosystems and edge computing grow, organizations must leverage blockchain audit trails, federated learning, and real-time validation. DQM represents a continuous process where proactive observability, AI-driven automation, and collaborative governance models ensure quality scales with organizational growth. Investing in comprehensive data quality management today delivers long-term advantages in decision-making, compliance, and competitive performance.

Frequently Asked Questions (FAQs)

What are the key components of a data quality management framework?

The essential components include governance policies, data profiling capabilities, cleansing processes, continuous monitoring systems, validation rules, and lineage tracking. These components work together to ensure comprehensive quality management across all data sources and throughout the data lifecycle.

What is data governance and why is it critical for data quality?

Data governance establishes the policies, standards, and procedures for managing data within an organization. It defines roles and responsibilities, sets quality standards, and ensures regulatory compliance. Strong governance provides the foundation for consistent quality management and accountability across all data initiatives.

How do you measure the success of a data quality management program?

Success metrics include data accuracy rates, completeness percentages, consistency scores across systems, timeliness of data updates, and business impact measures. Organizations should also track operational metrics like reduced time spent on data correction, improved user satisfaction, and faster time-to-insight for business decisions.

What role does automation play in modern data quality management?

Automation enables continuous monitoring, real-time validation, and proactive issue detection without manual intervention. Automated systems can apply quality rules consistently, identify anomalies quickly, and trigger remediation workflows. This reduces human error and ensures that quality management scales with data volume growth.

How can organizations balance data quality with data accessibility?

Organizations should implement tiered access models where data quality requirements match use case criticality. Business users can access self-service tools for routine analysis while maintaining stricter controls for regulatory reporting. Clear data lineage and quality scoring help users understand data reliability for their specific needs.