9 Best Data Profiling Tools to Ensure Data Quality (2026)

Summarize this article with:

The success of your organization depends on how well you handle and maintain your data quality. If your data is well structured and organized, you can draw data-driven insights to improve your business processes. But before you analyze data, resolving data issues and determining data accuracy is crucial. This is where data profiling tools come into play. These tools ensure data consistency and provide a complete breakdown of the source data to uncover actionable insights from your dataset.

In this article, you will understand the basics of data profiling, its different types, its benefits, and the best data profiling tools you can use today.

What is Data Profiling?

Data profiling is the process of assessing data to ensure its structure, consistency, and quality. It delves deep into the data and allows you to understand content, evaluate conditions, and utilize data profiling tools to fix any issues. Data profiling helps you analyze the data better by establishing relationships between different data sources, databases, and tables. With data profiling, you can empower your business to identify trends, predict customer behavior, and formulate a robust data governance strategy.

The first step in the data profiling process is to collect data from multiple sources and store it in a centralized repository. After the data is gathered, the next step involves cleaning. You can remove duplicate entries, fill in missing values, or address inconsistencies to ensure unified structured data. The further step includes performing quality assessments, identifying distributions, and executing inter-table analysis.

What are Data Profiling Tools?

Data profiling tools are important for analyzing and assessing data quality, structure, and content. These tools help identify anomalies, patterns, and inconsistencies in datasets, enabling better data management and decision-making. They are important in guaranteeing data accuracy and reliability for various business processes.

Types of Data Profiling

Data profiling is primarily categorized into three types. Though their end goal of improving the data quality and understanding the data is similar, they differ in their techniques.

- Structure Discovery: This approach focuses on validating the consistency, structure, and format of the data. It helps determine your data structure through different processes such as pattern matching, fundamental statistical analysis, and many others. For instance, in a dataset containing customer addresses, you can check missing fields or inconsistent formatting, such as variations in postal code lengths.

- Content Discovery: This method involves examining the data quality of the dataset by identifying errors in the rows' and columns' values, if any. It helps uncover anomalies, inconsistencies, and data quality issues within the dataset.

- Relationship Discovery: Relationship profiling helps you examine the relationships between existing and different datasets. The process generally begins with metadata analysis to establish the relationship and then narrows down the connections between specific fields in a dataset.

Benefits of Data Profiling

Data profiling has various benefits, offering top-notch data quality and consistency. Here are a few of them:

- Mitigate Risks: Data profiling enables you to adhere to industry regulations related to data handling by providing transparency into your data structure and content. It also assists you in detecting potential data threats and preventing data breaches.

- Facilitate Data Governance: Data profiling supports various functions of data governance programs. This includes data discovery to understand the data in use, data lineage to track data from the source to the destination, and data literacy to communicate data findings.

- Cost Optimization: By identifying potential data quality and content issues, data profiling can help you save high costs that would otherwise be spent on rectifying poor data quality.

Best Tools for Data Profiling

Here is a list of the top 9 data profiling tools in 2026 that will help you to improve your dataset content and quality:

Astera Centerprise

Astera Centerprise is an on-premises data integration platform with profiling and transformation capabilities. It enables you to assess your data effectively and streamline workflows by delivering unified, configured, and relevant datasets. With its intuitive drag-and-drop interface, you can work on your data without coding.

Key Features:

- Data Quality: Astera supports advanced data profiling and quality features to enhance data reliability and accuracy. With the Data Quality Mode feature, you can track and process record-level errors, validate your data against business rules, and perform data profiling to generate aggregate statistics for deeper insights into the data.

- Data Cleaning Capabilities: Astera offers diverse data cleaning features to streamline the data cleaning process. With capabilities like eliminating duplicate entries, removing punctuations, and addressing unwanted whitespaces, you can effectively cleanse your data and ensure consistency.

- Automation Facility: Astera has an in-built job scheduler to automate tasks based on specified intervals, conditions, or triggers, such as file drops into designated folders. This functionality streamlines workflow, allowing you to focus more on building effective strategies and less on doing manual tasks.

Data Fabric By Talend

Data Fabric by Talend is an open-source platform that brings data integration, governance, and integrity into a unified modular system. It is also one of the most popular data profiling platforms that supports your organization's complete data lifecycle process. Data Fabric has a host of features, including data integration from multiple sources, data management, and assessing the quality of databases.

Key Features:

- Better Data Quality: Data quality is integral to Talend’s Data Fabric, allowing you to perform real-time data cleaning and profiling. It is equipped with machine learning capabilities to suggest recommendations regarding quality issues as data flows in your system.

- Improved Data Efficiency: If your data is not correctly structured and secured, it can compromise your efficiency in formulating better strategies and making informed business decisions. It assists you in dealing with data chaos by providing an integrated platform to explore and share crucial datasets within your organization.

- Assessing Data Integrity: Data Fabric comes with a built-in Talend Trust Score, which provides an instant, actionable, and intelligible assessment of your datasets. This helps you differentiate between which data is safe to share and which datasets require further cleansing.

Informatica Data Explorer

Informatica is a widespread data integration and management platform that allows you to process large amounts of data. It has an Informatica Data Explorer feature to cater to your data profiling needs. With Informatica, you can thoroughly analyze your dataset to establish benchmarks, identify trends, and spot anomalies. You can also efficiently scan and record data from different sources and define relationships within datasets.

Key Features:

- Data Quality: You can use Informatica data quality solutions to enhance the quality of your datasets. It helps to gain insights into the structure and content of your data, identifying inconsistencies or errors like duplicate values or invalid numbers. Moreover, Informatica allows you to create graphical scorecards that represent the profile of your dataset for better analysis.

- Master Data Management: Informatica offers a comprehensive Master Data Management solution to connect data across your business, giving you a 360-degree view of your data. With this unified view, you can effectively plan and execute business objectives like supply chain management, enhancing customer experience, and optimizing costs.

- Leveraging AI: Informatica is equipped with artificial intelligence capabilities like CLAIRE AI. You can utilize CLAIRE’s features to automate your data management tasks, thus reducing the complexity of handling large datasets, ensuring scalability, and achieving high-quality data.

IBM InfoSphere Information Analyser

IBM’s Information Analyzer is one of the most potent data profiling and quality assessment platforms. It offers a range of features that help you understand your data better, identify inconsistencies, and ensure data quality. With Information Analyzer, you can perform in-depth analysis of your data, detect anomalies, and generate reports to support data governance initiatives.

Key Features:

- Data Quality: This platform has a rich library of 200 built-in data quality rules to prevent the consumption of bad-quality data. It leverages the support of multi-layered evaluations by pattern and rule records provided by the reusable rules library.

- Resource Optimization: Information Analyzer allows you to manage your resources efficiently by tracking incorrect and missing data in your datasets. It also helps address any redundant information before starting the data integration process to ensure the smooth profiling of your datasets.

OpenRefine

Formerly known as Google Refine, OpenRefine is an open-source tool for handling and managing messy data. This Java-based tool enables you to integrate, clean, transform, and understand datasets, thus facilitating improved data profiling. Apart from cleaning data, you can also use OpenRefine to identify errors and outliers that may affect your data quality.

Key Features:

- Data Cleaning: This feature is used to detect any inconsistencies in your data and fix them to get data accuracy. You can perform several cleanup operations like removing duplicate values, merging similar data, and many others to enhance data analysis.

- Data Transformation: OpenRefine allows you to deal with complex transformations with prior knowledge of programming skills. You can perform various functions on its user-friendly interface, from basic filtering, formatting, and sorting to advanced data cleaning.

Data Ladder

Data Ladder is a powerful tool that helps businesses improve data quality. It ensures that the data is accurate, complete, and consistent by providing features like data quality assessment, deduplication, and data matching.

Key Features

- Data Quality Assessment: This feature checks if the data is accurate, complete, and reliable. It identifies any errors or inconsistencies in the data, helping businesses to maintain high-quality data standards.

- Deduplication: Data Ladder identifies and removes duplicate records from the dataset, helping to maintain unique values throughout. This ensures that the data is clean and free from repetitions, which can otherwise cause errors in analysis and decision-making.

- Data Matching: This feature compares data from different sources to find matches and ensure consistency. It helps in integrating data from various databases, making sure that the information is accurate and uniform across all sources.

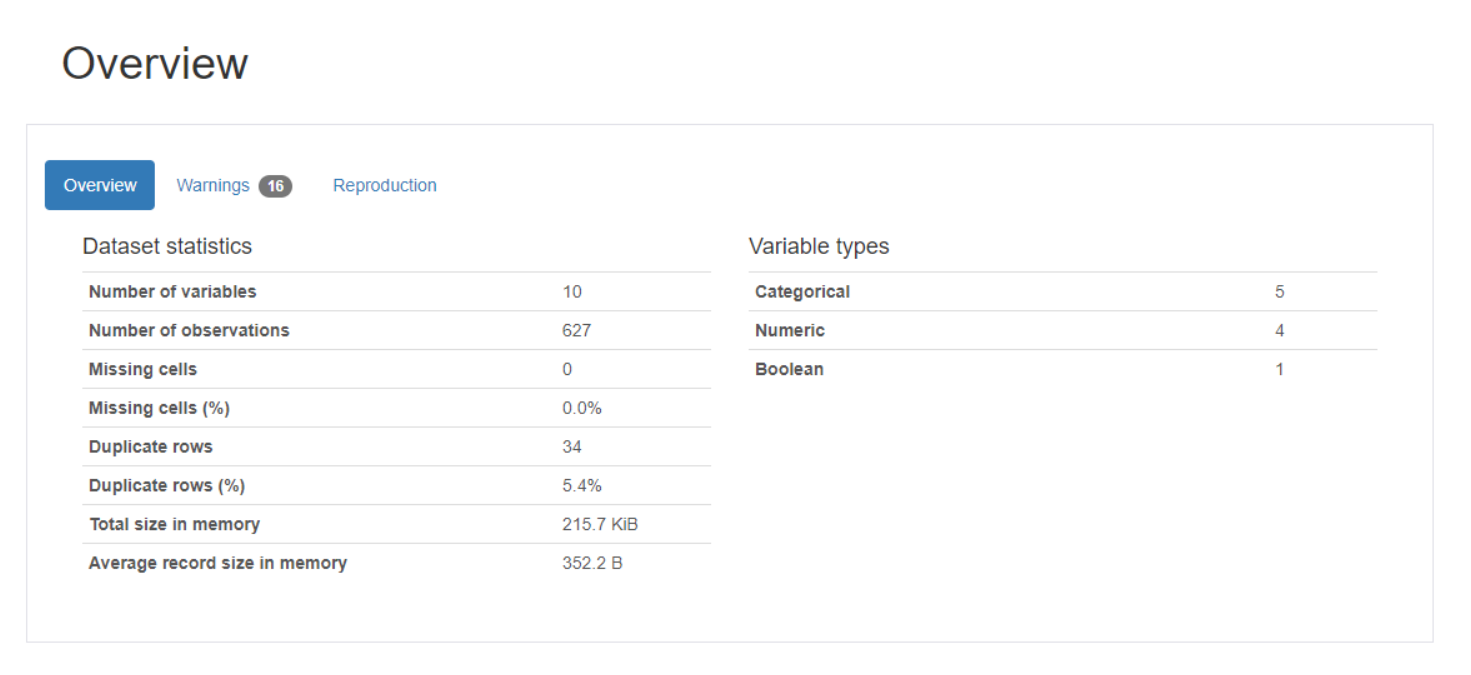

Pandas Profiling

Pandas Profiling is a Python library that helps you quickly generate detailed reports from pandas DataFrames. With just a few lines of code, you can gain deep insights into your dataset, making it easier to understand and analyze.

Key Features

- Detailed Overview: Generates a comprehensive report that includes statistics like mean, median, and standard deviation for each column in your DataFrame.

- Missing Values Analysis: Identifies missing values in your dataset, showing how much data is missing and where.

- Data Types and Distributions: Provides information on the data types and distributions, helping you understand the structure and nature of your data.

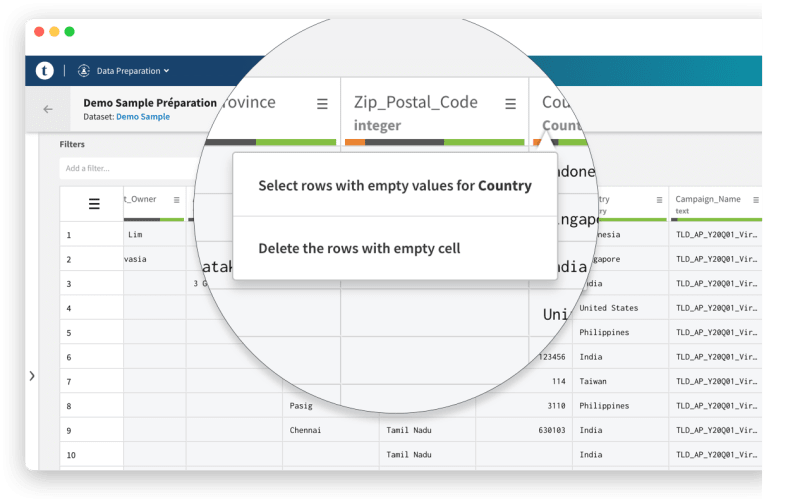

Talend Data Preparation

Talend Data Preparation simplifies the process of working with data. With its intuitive interface, it enables users to clean, transform, and enrich data efficiently, making data preparation tasks much easier and faster.

Key Features

- Data Cleaning: This feature helps identify and correct errors, inconsistencies, and inaccuracies in the data. It ensures the data is clean and ready for analysis or further processing.

- Data Transformation: Talend allows users to transform data into the desired format. Users can easily apply transformations like filtering, sorting, aggregating, and joining data from different sources to meet their specific needs.

- Collaborative Features: Talend Data Preparation supports collaboration among team members. Users can share their data preparations and collaborate in real-time, ensuring that everyone is working with the most up-to-date data.

Alteryx Designer Cloud

Alteryx Designer Cloud is a versatile tool designed for powerful data profiling and preparation. It emphasizes ease of use and supports collaborative data management, making it accessible for both technical and non-technical users.

Key Features

- Data Profiling: Alteryx Designer Cloud allows users to examine their data thoroughly. This feature helps identify patterns, inconsistencies, and potential errors in the dataset, ensuring data quality and reliability.

- Data Preparation: The tool provides a wide range of functionalities for cleaning, transforming, and organizing data. Users can easily manipulate data to prepare it for analysis, saving time and effort.

- Ease of Use: With an intuitive interface and user-friendly design, Alteryx Designer Cloud makes data preparation simple. Users can drag and drop functions and use visual tools without needing to write complex code.

Apache Griffin

Apache Griffin is an open-source tool that helps organizations ensure their data is accurate and reliable. It provides features for profiling data, measuring data quality, and detecting anomalies. This tool is especially useful for businesses with large amounts of data that need a scalable and flexible solution to manage and improve data quality.

Key Features

- Data Profiling: It provides a detailed analysis of the data, identifying patterns, and summarizing key statistics to give a clear picture of the data's condition.

- Data Quality Measurement: Apache Griffin measures the quality of data by checking for accuracy, completeness, consistency, and timeliness. It provides metrics and scores to help users understand the current state of their data quality and identify areas for improvement.

- Data anomaly detection: This feature detects any outliers or unusual patterns in data that may indicate errors. Businesses can prevent data integrity problems by identifying these anomalies and taking corrective measures.

Key features to look out for in data profiling tools

1. Data Quality Assessment

These tools evaluate the completeness, accuracy, and consistency of data, ensuring that the data meets the required standards for decision-making processes.

2. Metadata Management

Effective data profiling tools provide comprehensive metadata management capabilities, helping users understand the data's origin, structure, and transformation history.

3. Pattern Recognition

The ability to detect patterns, trends, and anomalies in data is essential. This feature helps in identifying unexpected values and potential errors within datasets.

4. Data Integration

Easy integration with various data sources and other data management tools is a vital feature, ensuring smooth data flow and compatibility.

5. Visualization and Reporting

Advanced visualization and reporting capabilities allow users to easily interpret data profiling results through charts, graphs, and summary reports.

6. Scalability

The tools should be capable of handling large volumes of data efficiently, scaling up as the data grows.

Enhancing Data Profiling with Airbyte

You can effortlessly perform data profiling and receive a high-quality dataset using the tools mentioned above. Before profiling, you must unify your data into a single repository to ensure seamless data assessment. With Airbyte, you can streamline your integration process by extracting data from disparate sources and loading it to your preferred destination, like a data warehouse or data lake. You can utilize its extensive library of 550+ pre-built connectors to gather, prepare, and transfer your data and create a data pipeline within minutes. You can also build custom connectors within minutes using its Connector Development Kit.

Some of the key features that Airbyte offers to enrich your data profiling process are:

- AI-powered Connector Development: The AI-assist functionality in Connector Builder simplifies your connector-building experience, auto-filling most UI fields by reading through the platform’s official API documentation.

- Change Data Capture: Airbyte supports CDC, which allows you to replicate only the changes made in the source into your target system to ensure that the data profiling process remains uninterrupted.

- Simplifying AI Workflows: With Airbyte, you can automatically perform RAG techniques, such as chunking, embedding, and indexing, on semi-structured and unstructured data. These transformations convert raw data into vector embeddings that can be stored in Airbyte-supported vector stores like Pinecone and Milvus, which helps streamline GenAI workflows.

- Self-Managed Enterprise: The Enterprise version enables you to manage and secure large-scale data. It offers advanced features such as role-based access control (RBAC), multitenancy, masking personally identifiable information (PII), and support with service level agreements (SLAs).

- Data Security: Airbyte protects your data during transmission with strong measures like role-based access control, strong encryption, and audit logs. By adhering to industry-specific regulations, including GDPR, SOC 2, HIPAA, and ISO 27001, Airbyte safeguards your data from unauthorized access.

- Data Transformation: You can use Airbyte’s dbt integration capabilities to create and run customized transformations. This provides flexibility to your data transformation workflows.

Final Word

As you transition into a data-driven world, it becomes more imperative than ever to integrate, simplify, cleanse, and automate your data. Data profiling is a powerful method that caters to all your data-centric needs, thus securing the most valuable assets of your organization. It will aid you in making informed decisions and empower you to build successful businesses.

Leverage the dynamic features of Airbyte to make informed business decisions and optimize workflow by signing up on the Airbyte platform today.

What should you do next?

Hope you enjoyed the reading. Here are the 3 ways we can help you in your data journey: