10 Best Data Replication Tools in 2026


What is Data Replication?

Database replication is a core data management function that copies data from a primary database to one or more replica databases. Replication can run continuously, capturing changes on the primary as they happen, or it can run periodically or as a one-time batch process.
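To make these modes concrete, here is a minimal Python sketch of a one-time full copy versus a periodic incremental sync. It assumes, hypothetically, that each table is a list of rows with an `id` primary key and an `updated_at` counter; the function names are illustrative and are not taken from any of the tools below.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]  # hypothetical row shape: must contain "id" and "updated_at"


def full_copy(primary: List[Row]) -> Dict[int, Row]:
    """One-time batch replication: copy every row into a fresh replica."""
    return {row["id"]: dict(row) for row in primary}


def incremental_sync(primary: List[Row], replica: Dict[int, Row], watermark: int) -> int:
    """Periodic replication: copy only rows changed since the last watermark.

    Returns the new watermark (the highest updated_at copied so far).
    Rows deleted on the primary are NOT detected by this approach.
    """
    new_watermark = watermark
    for row in primary:
        if row["updated_at"] > watermark:
            replica[row["id"]] = dict(row)
            new_watermark = max(new_watermark, row["updated_at"])
    return new_watermark


primary = [{"id": 1, "name": "a", "updated_at": 10},
           {"id": 2, "name": "b", "updated_at": 20}]
replica = full_copy(primary)
wm = 20  # highest updated_at included in the full copy

primary[0] = {"id": 1, "name": "a2", "updated_at": 30}   # update on the primary
primary.append({"id": 3, "name": "c", "updated_at": 31})  # insert on the primary
wm = incremental_sync(primary, replica, wm)
print(wm, replica[1]["name"], sorted(replica))  # 31 a2 [1, 2, 3]
```

A watermark on `updated_at` cannot see rows deleted on the primary, which is one reason many tools prefer log-based change data capture (CDC).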

The primary goal of database replication is to enhance data availability, which is critical for improving analytics capabilities and ensuring effective disaster recovery. By replicating data across multiple databases, organizations can minimize downtime and maintain business continuity in the event of system failures or disruptions.

10 Best Data Replication Tools

Data replication is an important process for getting the most out of analytics, and the tool you choose largely determines how reliably it runs. Here are 10 of the best data replication tools to consider.

1. Airbyte

Airbyte boasts a robust catalog of 600+ pre-built connectors, supporting both structured and unstructured data for data replication. Beyond connector flexibility, Airbyte streamlines data replication with automation, version control, and monitoring. It also integrates with orchestration and transformation tools such as Airflow, Prefect, Dagster, dbt, and more.

Key Features

- Custom Connector Creation: Airbyte’s no-code Connector Builder allows you to create custom connectors within minutes. Its AI-assist feature automatically fills out most UI fields by scanning through the preferred platform’s API documentation.

- Change Data Capture: Airbyte uses log-based CDC to detect data changes quickly and replicate them efficiently with minimal resource usage.

- Streamlining GenAI Workflows: Airbyte supports structured and unstructured data sources, catering to various AI use cases. You can convert raw data into vector embeddings using natively supported RAG techniques, such as chunking, embedding, and indexing.

- Robust Enterprise Solution: The Self-Managed Enterprise Edition provides capabilities to manage and secure large-scale data. It offers additional features, including personally identifiable information (PII) masking, role-based access control (RBAC), and enterprise support with SLAs.

- Advanced Security: Airbyte prioritizes end-to-end security by offering reliable connection methods like SSL/TLS and SSH tunnels. It also adheres to industry-specific standards and regulations, including ISO 27001, HIPAA, SOC 2, and GDPR, to safeguard your data from unauthorized access.
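Log-based CDC works by reading inserts, updates, and deletes from the source database's transaction log and replaying them, in order, on the destination. The Python sketch below is a simplified, hypothetical illustration of the apply side, not Airbyte's implementation: each event carries a log sequence number (`lsn`), and the replica records the last position it applied so that replaying the same events after a restart is harmless. The `ChangeEvent` and `Replica` names are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class ChangeEvent:
    lsn: int                               # position in the source's transaction log
    op: str                                # "insert", "update", or "delete"
    key: int                               # primary key of the affected row
    row: Optional[Dict[str, Any]] = None   # new row image; None for deletes


class Replica:
    def __init__(self) -> None:
        self.rows: Dict[int, Dict[str, Any]] = {}
        self.last_lsn = 0                  # checkpoint: highest log position applied

    def apply(self, events: List[ChangeEvent]) -> int:
        """Apply change events in log order; return how many were applied."""
        applied = 0
        for ev in sorted(events, key=lambda e: e.lsn):
            if ev.lsn <= self.last_lsn:
                continue                   # already applied (e.g., replayed after a restart)
            if ev.op in ("insert", "update"):
                self.rows[ev.key] = dict(ev.row or {})
            elif ev.op == "delete":
                self.rows.pop(ev.key, None)
            else:
                raise ValueError(f"unknown op: {ev.op}")
            self.last_lsn = ev.lsn
            applied += 1
        return applied


log = [
    ChangeEvent(1, "insert", 7, {"id": 7, "status": "new"}),
    ChangeEvent(2, "update", 7, {"id": 7, "status": "paid"}),
    ChangeEvent(3, "insert", 8, {"id": 8, "status": "new"}),
    ChangeEvent(4, "delete", 8),
]
replica = Replica()
print(replica.apply(log))  # 4
print(replica.apply(log))  # 0 (replay is a no-op)
print(replica.rows)        # {7: {'id': 7, 'status': 'paid'}}
```

Checkpointing the last applied log position is what lets a CDC pipeline resume after a failure without applying the same change twice.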

2. Fivetran

Fivetran is an automated data integration platform that excels in synchronizing data from various sources, including cloud applications, databases, and logs. With Fivetran, you can move large volumes of data efficiently from your database with minimal latency. It also ensures seamless data synchronization, even when schemas and APIs evolve.

Key Features

- With Fivetran, you can access over 400 ready-to-use source connectors without writing any code.

- With log-based CDC, you can quickly identify data changes and replicate them to your destination, with a straightforward setup and minimal resource usage.

- Fivetran’s high-volume agent lets you replicate large data volumes in real time. These high-volume agent connectors use log-based change data capture to read changes from the source system’s logs, optimizing your replication process.

- Teleport Sync enables log-free replication. It is a specialized database replication method that Fivetran describes as blending the thoroughness of snapshots with the speed of log-based systems.
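Fivetran does not detail Teleport Sync's internals here, so the following is only a generic sketch of log-free, compare-based change detection: take keyed snapshots of a table at two points in time and derive inserts, updates, and deletes by comparing them. The snapshot shape (primary key mapped to row) and the `diff_snapshots` name are assumptions for illustration.

```python
from typing import Any, Dict, List, Tuple

Snapshot = Dict[Any, Dict[str, Any]]  # hypothetical shape: primary key -> row


def diff_snapshots(old: Snapshot, new: Snapshot) -> List[Tuple[str, Any]]:
    """Derive change events by comparing two keyed snapshots of a table."""
    changes: List[Tuple[str, Any]] = []
    for key, row in new.items():
        if key not in old:
            changes.append(("insert", key))   # key appeared since the last snapshot
        elif old[key] != row:
            changes.append(("update", key))   # key exists in both, contents differ
    for key in old:
        if key not in new:
            changes.append(("delete", key))   # key vanished since the last snapshot
    return changes


before = {1: {"qty": 5}, 2: {"qty": 1}, 3: {"qty": 9}}
after = {1: {"qty": 5}, 2: {"qty": 4}, 4: {"qty": 2}}
print(diff_snapshots(before, after))  # [('update', 2), ('insert', 4), ('delete', 3)]
```

Because every sync compares whole snapshots, the cost grows with table size rather than with the number of changes; a production system would more likely compare compact row hashes than full rows.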

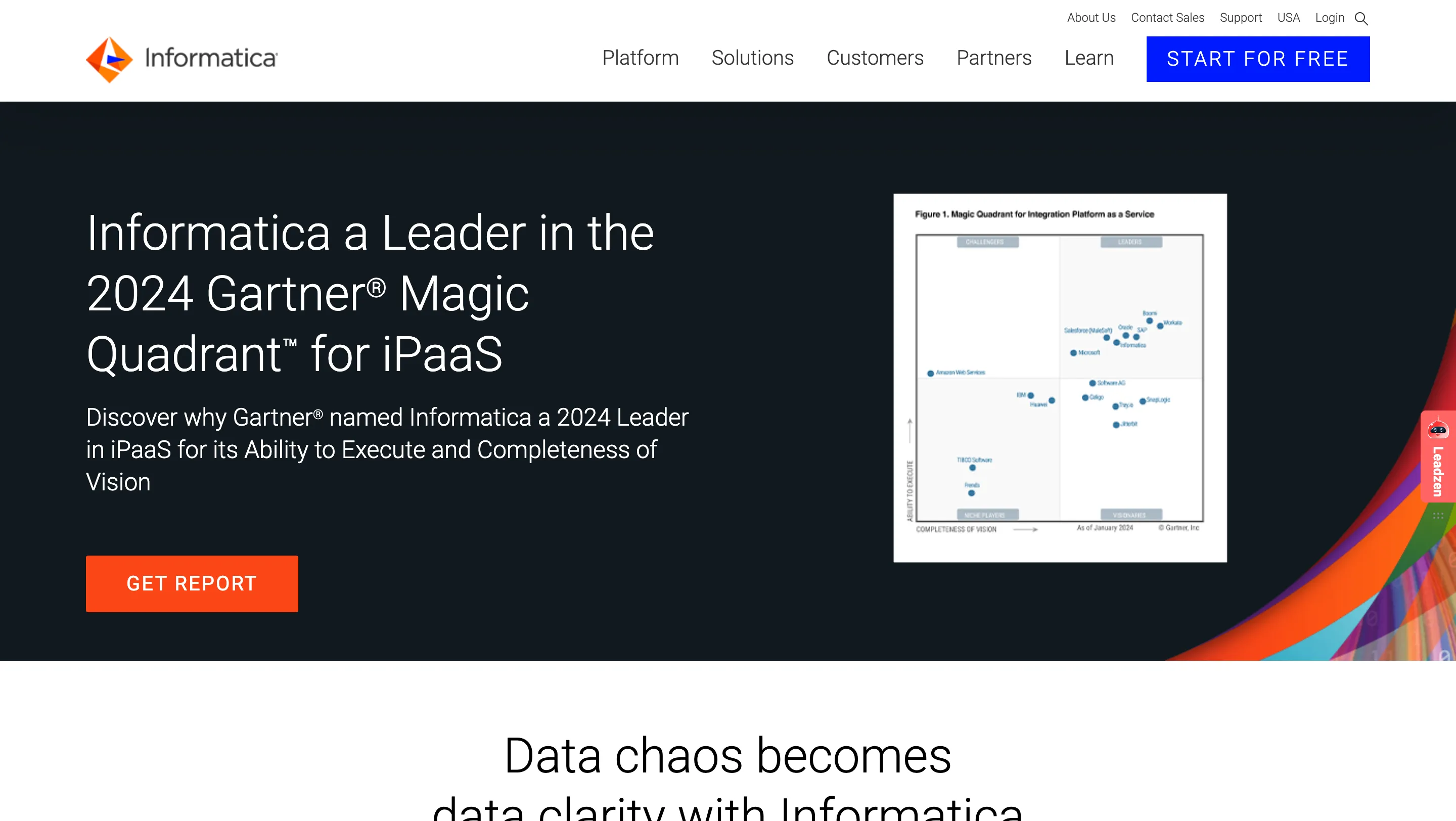

3. Informatica

Informatica offers a comprehensive data integration toolset with both on-premises and cloud deployment options. It combines advanced hybrid integration and governance capabilities with user-friendly self-service access for various analytical tasks.

Augmented integration is achievable through Informatica’s CLAIRE Engine, a metadata-driven AI engine that harnesses machine learning.

Key Features

- Real-time replication of changes and metadata from source to target databases over LAN or WAN, using batched or continuous methods.

- You can replicate data across diverse databases and platforms while ensuring consistency.

- You can use nonintrusive log-based capture techniques that do not disrupt databases or applications.

- Use InitialSync and Informatica Fast Clone for efficient initial data loading. Fast Clone provides high-speed transfer of Oracle data and data streaming to specific targets such as Greenplum and Teradata.

- Scale the replication solution to meet your data distribution, migration, and auditing needs.
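The difference between batched and continuous apply comes down to when buffered changes are written to the target. The hypothetical `MicroBatcher` below (not an Informatica API) flushes when a batch reaches `max_size` records or when its oldest record has waited `max_wait_s` seconds. Timestamps are passed in explicitly so the example is deterministic.

```python
from typing import Any, Callable, List


class MicroBatcher:
    """Groups change records and flushes them by size or by age (hypothetical)."""

    def __init__(self, max_size: int, max_wait_s: float,
                 flush_fn: Callable[[List[Any]], None]) -> None:
        self.max_size = max_size
        self.max_wait_s = max_wait_s
        self.flush_fn = flush_fn
        self.buffer: List[Any] = []
        self.first_ts = 0.0  # arrival time of the oldest buffered record

    def add(self, record: Any, now: float) -> None:
        if not self.buffer:
            self.first_ts = now
        self.buffer.append(record)
        if len(self.buffer) >= self.max_size:
            self.flush()

    def tick(self, now: float) -> None:
        """Call periodically; flushes a partial batch that has waited too long."""
        if self.buffer and now - self.first_ts >= self.max_wait_s:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.flush_fn(self.buffer)
            self.buffer = []


batches: List[List[Any]] = []
b = MicroBatcher(max_size=3, max_wait_s=1.0, flush_fn=batches.append)
for i, t in enumerate([0.0, 0.1, 0.2, 0.3]):
    b.add(f"change-{i}", now=t)
b.tick(now=0.5)  # oldest buffered record is 0.2 s old: no flush
b.tick(now=1.4)  # oldest buffered record is 1.1 s old: flush
print(batches)   # [['change-0', 'change-1', 'change-2'], ['change-3']]
```

Setting `max_size=1` makes every change flush immediately, which approximates continuous replication; larger batches trade a little latency for fewer, more efficient writes to the target.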

4. IBM Informix

IBM Informix is a trusted embeddable database designed for optimal performance in OLTP and Internet of Things (IoT) data handling. Informix stands out for its seamless integration of SQL, NoSQL, time series, and spatial data. Offering reliability, flexibility, user-friendliness, and cost-effectiveness, it caters to everyone from developers to global enterprises. The replication features listed below refer to IBM Data Replication.

Key Features

- Whether your data lives on-premises or in the cloud, IBM Data Replication centralizes dispersed data.

- Ensure high availability and safeguard mission-critical data against disruptions.

- Enhance your business decision-making with real-time data for up-to-the-minute insights.

- IBM Data Replication operates with minimal impact on source systems because it monitors only the changes captured in the database log.

- You can use IBM Data Replication to deliver data changes to downstream targets such as data warehouses, data quality processes, and other critical systems.

5. Qlik Replicate

Qlik Replicate empowers you to expedite data replication, ingestion, and streaming across diverse databases, data warehouses, and big data platforms. With a worldwide user base, Qlik Replicate is tailored for secure and efficient data movement while minimizing operational disruptions.

Key Features

- Qlik Replicate streamlines data availability management, reducing time and complexity in heterogeneous environments.

- Centralized monitoring and management facilitate scalability, enabling data movement across many databases.

- You can handle data replication, synchronization, distribution, and ingestion across major databases, regardless of deployment location.

- The platform optimizes workloads and provides robust support for business operations, applications, and analytical needs.

- Qlik Replicate ensures data availability and accessibility, making it a valuable asset for organizations.

6. Hevo Data

Hevo is a zero-maintenance data pipeline platform that autonomously syncs data from 150+ sources, encompassing SQL, NoSQL, and SaaS applications. Over 100 pre-built integrations are native and tailored to specific source APIs. With the help of Hevo, you can gain control over how data lands in your warehouse by performing on-the-fly actions such as cleaning, formatting, and filtering without impacting load performance.

Key Features

- Hevo incorporates a streaming architecture that can automatically detect schema changes in incoming data and replicate them to your destination.

- Monitor pipeline health and gain real-time visibility into your ETL with intuitive dashboards that show statistics for every pipeline and data flow. Use alerts and activity logs for deeper monitoring and observability.

- With Hevo, you can process and enrich raw data without coding.

- Hevo's fault-tolerant architecture is designed to scale with your workload while preventing data loss and keeping latency low.
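Automatic schema-change detection can be pictured as comparing each incoming record against the destination's known columns. The sketch below is a simplified, hypothetical illustration rather than Hevo's implementation: it infers a type name from each Python value, registers new columns, and flags values whose type differs from what the schema expects. The `detect_schema_changes` name and the change-tuple format are assumptions.

```python
from typing import Any, Dict, List, Tuple


def detect_schema_changes(schema: Dict[str, str],
                          record: Dict[str, Any]) -> List[Tuple[str, str, str]]:
    """Compare one incoming record with the known destination schema.

    Returns (change_kind, column, type_name) tuples and adds new columns
    to `schema` in place so later records are compared against them.
    """
    changes: List[Tuple[str, str, str]] = []
    for column, value in record.items():
        if value is None:
            continue  # a null tells us nothing about the column's type
        type_name = type(value).__name__
        if column not in schema:
            schema[column] = type_name
            changes.append(("add_column", column, type_name))
        elif schema[column] != type_name:
            changes.append(("type_mismatch", column, type_name))
    return changes


schema = {"id": "int", "email": "str"}
print(detect_schema_changes(schema, {"id": 1, "email": "a@x.io", "plan": "pro"}))
# [('add_column', 'plan', 'str')]
print(detect_schema_changes(schema, {"id": "2", "email": None}))
# [('type_mismatch', 'id', 'str')]
```

A real pipeline would then translate `add_column` results into DDL on the destination and route type mismatches to a review or coercion step.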

7. Dell RecoverPoint

Dell RecoverPoint is a leading data replication solution designed to ensure continuous data protection and disaster recovery for organizations of all sizes. With its advanced features and robust capabilities, RecoverPoint offers peace of mind by safeguarding critical data and minimizing downtime in the event of unforeseen incidents.

Key Features

- RecoverPoint provides real-time replication of data, enabling organizations to recover to any point in time with minimal data loss.

- It supports replication across multiple sites, allowing for flexible disaster recovery strategies and business continuity planning.

- RecoverPoint automates the failover and failback processes, ensuring seamless transitions between primary and secondary data centers.

- RecoverPoint ensures application-consistent replication, preserving data integrity and consistency across replicated environments.

- It enables granular recovery of files, folders, and virtual machines, empowering organizations to quickly recover specific data elements as needed.
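Any-point-in-time recovery is typically built on a journal of writes: to rebuild a volume as of a given time, start from a base image and replay every journaled write up to that time. The following Python sketch illustrates that general idea under simplified assumptions (an in-memory dictionary of blocks, writes recorded in time order); the `Journal` class is hypothetical and is not RecoverPoint's implementation.

```python
from bisect import bisect_right
from typing import Dict, List, Tuple

Write = Tuple[float, int, bytes]  # (timestamp, block number, new contents)


class Journal:
    """Hypothetical write journal for point-in-time recovery."""

    def __init__(self, base_image: Dict[int, bytes]) -> None:
        self.base = dict(base_image)   # volume contents when journaling began
        self.writes: List[Write] = []  # appended in timestamp order

    def record(self, ts: float, block: int, data: bytes) -> None:
        if self.writes and ts < self.writes[-1][0]:
            raise ValueError("writes must be recorded in time order")
        self.writes.append((ts, block, data))

    def image_at(self, ts: float) -> Dict[int, bytes]:
        """Rebuild the volume as it was at time ts by replaying earlier writes."""
        cutoff = bisect_right([w[0] for w in self.writes], ts)
        image = dict(self.base)
        for _, block, data in self.writes[:cutoff]:
            image[block] = data
        return image


j = Journal({0: b"boot", 1: b"v1"})
j.record(10.0, 1, b"v2")
j.record(20.0, 1, b"CORRUPT")
print(j.image_at(15.0)[1])  # b'v2' (state just before the corruption)
print(j.image_at(20.0)[1])  # b'CORRUPT'
```

Recovering to a point just before a corrupting write, rather than to the latest replicated state, is what distinguishes continuous data protection from plain mirroring.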

8. Carbonite

Carbonite Availability uses continuous replication to duplicate databases while keeping the load on the primary system and the network bandwidth it consumes low. Carbonite is installed on both the primary and secondary systems, and the secondary system mirrors the primary system’s configuration.

In the event of a failure, the secondary system becomes active, and DNS redirection routes users to it. Depending on the system configuration, users may not even notice that they are working on the secondary system.

Key Features

- With Carbonite’s real-time, byte-level replication, you can achieve a Recovery Point Objective (RPO) of seconds.

- Combat malware and data loss by recovering from corruption and ransomware using snapshots, point-in-time copies of the protected data.

- You can achieve platform independence by seamlessly supporting physical, virtual, or cloud source and target systems.

- You can implement automatic failover using a server heartbeat monitor.

- Carbonite’s encryption protects your data during transfer from the source to the destination.
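A heartbeat monitor declares the primary server failed only after several expected heartbeats are missed, which avoids failing over because of a single dropped message. The hypothetical `HeartbeatMonitor` below sketches that logic; the interval and threshold values are illustrative, and Carbonite's actual settings and behavior may differ.

```python
class HeartbeatMonitor:
    """Hypothetical monitor: fail over after `max_missed` silent intervals."""

    def __init__(self, interval_s: float, max_missed: int) -> None:
        self.interval_s = interval_s
        self.max_missed = max_missed
        self.last_beat = 0.0
        self.active = "primary"

    def beat(self, now: float) -> None:
        """Record a heartbeat received from the primary server."""
        self.last_beat = now

    def check(self, now: float) -> str:
        """Return the active server, switching to the secondary if the primary is silent too long."""
        missed = int((now - self.last_beat) // self.interval_s)
        if self.active == "primary" and missed >= self.max_missed:
            self.active = "secondary"  # a real system would also redirect DNS/clients here
        return self.active


m = HeartbeatMonitor(interval_s=1.0, max_missed=3)
m.beat(now=0.0)
print(m.check(now=2.5))  # primary (2 intervals missed)
print(m.check(now=3.0))  # secondary (3 intervals missed)
```

This sketch never fails back on its own; returning to the primary is left as a deliberate, separate step.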

9. AWS Database Migration Service (AWS DMS)

AWS DMS is a fully managed migration and replication service designed to help organizations move databases quickly and securely into AWS, or between on-premises and cloud environments. It’s particularly useful for migrating live production databases with minimal downtime.

Key Features

- Heterogeneous migrations: Can move data between different database engines (e.g., Oracle → PostgreSQL).

- Minimal downtime: Uses CDC (Change Data Capture) to keep source and target in sync during migration.

- Pay-as-you-go: No upfront licensing fees; cost is based on compute resources and storage used.
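As an illustration of the CDC-based migration mode, the Python sketch below builds the arguments for a DMS replication task that performs a full load and then keeps the target in sync with ongoing CDC. The schema name `SALES`, the task identifier, the helper name `dms_task_request`, and the placeholder ARN strings are hypothetical; you would substitute your own endpoints and replication instance.

```python
import json
from typing import Any, Dict


def dms_task_request(task_id: str, source_arn: str, target_arn: str,
                     instance_arn: str, schema: str) -> Dict[str, Any]:
    """Build arguments for a DMS task: full load of one schema, then ongoing CDC."""
    table_mappings = {
        "rules": [{
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": "include-schema",
            "object-locator": {"schema-name": schema, "table-name": "%"},  # % matches every table
            "rule-action": "include",
        }]
    }
    return {
        "ReplicationTaskIdentifier": task_id,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        "MigrationType": "full-load-and-cdc",         # copy existing rows, then stream changes
        "TableMappings": json.dumps(table_mappings),  # DMS expects the mappings as a JSON string
    }


req = dms_task_request("orders-oracle-to-pg", "SOURCE_ENDPOINT_ARN",
                       "TARGET_ENDPOINT_ARN", "REPLICATION_INSTANCE_ARN", "SALES")
print(req["MigrationType"])  # full-load-and-cdc
```

With AWS credentials configured, these arguments could be passed to boto3 as `boto3.client("dms").create_replication_task(**req)`. Building the request separately keeps the table-mapping rules easy to review and test before anything runs against AWS.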

10. Google Datastream

Google Datastream is a serverless, real-time data replication and CDC service within Google Cloud. It is optimized for low-latency replication from transactional databases, including Cloud SQL, into Google Cloud destinations such as BigQuery and Cloud Storage.

Key Features

- Fully serverless: No infrastructure management — scales automatically with demand.

- Tight GCP integration: Works seamlessly with BigQuery for analytics pipelines.

- Reliable CDC: Captures and streams changes in near real-time from supported databases.

Challenges of Data Replication

While data replication offers clear benefits — such as high availability, disaster recovery, and improved analytics — it comes with its own set of challenges that organizations need to address:

- Latency & Performance Overhead
  - Maintaining multiple synchronized copies of data in real time can introduce network load and processing delays, especially in geographically distributed systems.
  - High-frequency replication can impact the performance of source systems.

- Data Consistency Issues
  - In asynchronous replication, there is always a risk of data lag or inconsistency between source and target systems.
  - Handling conflicts when the same record changes in multiple locations can be complex.

- Scalability Limitations
  - As data volumes grow, replication pipelines must scale without degrading speed or reliability.
  - Some tools struggle with extremely large datasets or high-change-rate workloads.

- Security & Compliance Risks
  - Sensitive data replicated to multiple locations increases the attack surface.
  - Ensuring compliance with GDPR, HIPAA, and other regulations requires careful access controls, masking, and encryption.

- Operational Complexity
  - Multi-cloud or hybrid setups often require specialized connectors and custom configurations.
  - Monitoring and troubleshooting replication issues can be resource-intensive without proper tooling.

- Cost Management
  - Storage, compute, and network usage can grow rapidly with frequent replication.
  - Vendor licensing models can make long-term costs unpredictable.
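Conflict handling is the least obvious of these challenges. One common, simple policy is last-writer-wins (LWW). The Python sketch below is a hypothetical illustration, not any specific tool's behavior: each replica stores a value with a write timestamp and the id of the site that accepted it, and merging keeps the later write, breaking exact ties by site id so every replica converges on the same answer.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class Version:
    value: Any
    ts: int    # timestamp of the write (e.g., milliseconds since epoch)
    site: str  # replica that accepted the write; used only to break ties


def resolve(a: Version, b: Version) -> Version:
    """Last-writer-wins: keep the later write; break exact ties by site id."""
    return max(a, b, key=lambda v: (v.ts, v.site))


def merge(local: Dict[str, Version], remote: Dict[str, Version]) -> Dict[str, Version]:
    """Merge two replicas' copies key by key."""
    merged = dict(local)
    for key, version in remote.items():
        merged[key] = resolve(merged[key], version) if key in merged else version
    return merged


us = {"cust:42": Version("Berlin", ts=100, site="us-east")}
eu = {"cust:42": Version("Munich", ts=105, site="eu-west"),
      "cust:7": Version("Oslo", ts=90, site="eu-west")}
m = merge(us, eu)
print(m["cust:42"].value, m["cust:7"].value)  # Munich Oslo
```

LWW is easy to reason about, but it silently discards the losing write and depends on reasonably synchronized clocks, so workloads where lost updates matter often need application-specific merge rules instead.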

Criteria for Selecting the Right Data Replication Tool

When it comes to selecting the right data replication solution for your organization, several crucial criteria should be considered to ensure optimal performance and compatibility with your specific needs.

Here are some key factors to keep in mind:

- Performance: Check that the tool's throughput and latency meet your workload's requirements, ideally by testing it against your own data volumes.

- Scalability: Verify the tool's ability to seamlessly expand alongside escalating data requirements while maintaining peak performance levels.

- Reliability: Prioritize features such as automatic failover mechanisms and robust error handling protocols to mitigate the potential for data loss.

- Ease of Deployment: Opt for solutions offering intuitive interfaces and streamlined setup procedures to expedite deployment processes.

- Compatibility: Confirm that the tool supports your existing databases and platforms, including the specific versions you run.

- Security: Prioritize tools fortified with robust encryption protocols and stringent access controls to safeguard sensitive data assets.

- Cost-effectiveness: Evaluate the comprehensive cost of ownership, encompassing upfront expenses and ongoing operational costs.

- API Support: Favor tools equipped with extensive API support, facilitating smooth integration with diverse system architectures.

- Custom Connectors: Beyond pre-built connectors for popular databases, look for the ability to build custom connectors for sources that lack one.

- Data Transformation: Confirm that the tool can perform the transformations you need and handle the data formats you work with.

- Real-time Integration: Prioritize solutions offering real-time data replication capabilities to ensure timely synchronization across systems.

- Change Data Capture (CDC): Consider solutions equipped with CDC functionality for efficient and precise data replication processes.

- Monitoring and Management: Select data replication software equipped with advanced monitoring and management functionalities, enabling comprehensive oversight and control.

Importance of Data Replication in Modern Business

In contemporary business operations, data replication stands as a cornerstone for ensuring continuity, security, and accessibility of vital data assets. This process involves duplicating and synchronizing data across multiple databases or storage systems, bolstering data availability and integrity amidst evolving business needs and technological advancements.

Data replication plays a pivotal role in modern business for several compelling reasons:

- Enhanced Data Availability: Replicating data across distributed environments ensures continuous access to critical information, fostering agility and responsiveness in decision-making processes.

- Business Continuity and Disaster Recovery: Maintaining synchronized copies of data enables swift recovery from unexpected disruptions, safeguarding business operations and mitigating potential losses.

- Improved Performance and Scalability: Serving read queries from replicas spreads load away from the primary system, helping systems stay responsive during peak usage.

- Data Distribution and Collaboration: Real-time data replication facilitates seamless distribution and collaboration across diverse teams, fostering innovation and informed decision-making.

- Regulatory Compliance and Data Governance: Replication practices such as encrypting data in transit, controlling where copies are stored, and masking sensitive fields help organizations meet data protection regulations and reduce the legal and regulatory risks associated with data breaches.

In summary, data replication serves as a vital component of modern data management strategies, empowering organizations to optimize data utilization, mitigate risks, and drive innovation in today's competitive business landscape.

Conclusion

In today's rapidly changing data management environment, selecting the right data replication solution can significantly impact an organization's ability to maintain data availability, reliability, and efficiency. We've reviewed ten leading replication tools, each offering distinct strengths and capabilities.

As technology progresses, these tools become increasingly essential for businesses to leverage data effectively, make well-informed decisions, and maintain a competitive edge in a data-centric world. It's crucial to select a tool that aligns with your specific objectives to optimize your data management strategy.

Moreover, there's a growing emphasis on hybrid and multi-cloud replication solutions to accommodate diverse deployment environments and ensure consistent data availability and reliability across distributed systems.

Data Replication Tools FAQs

What is the importance of selecting the right data replication tool for organizations?

Selecting the right data replication tool is paramount for organizations to optimize data usage and productivity. These tools ensure seamless availability, reliability, and operational efficiency, vital for maintaining uninterrupted business operations and meeting customer demands in today's digital landscape.

How does data replication enhance data availability and reliability for businesses?

Data replication plays a pivotal role in enhancing data availability and reliability for businesses. By creating replicas of primary databases, organizations can safeguard mission-critical data and ensure continuous operations, even in the event of primary database failures or system disruptions. This ensures business continuity and minimizes the risk of data loss, supporting efficient decision-making and customer service delivery.

What industries or use cases are these data replication tools best suited for?

These data replication tools are best suited for various industries and use cases, including finance, healthcare, retail, manufacturing, and telecommunications. They support critical business functions such as analytics, reporting, customer relationship management, and regulatory compliance, offering flexibility and scalability to meet diverse business needs.

How do data replication tools contribute to disaster recovery and business continuity strategies?

Data replication tools play a crucial role in disaster recovery and business continuity strategies by ensuring continuous data availability and minimizing downtime in the event of system failures or disasters. These tools enable organizations to fail over quickly to replicated systems, keeping operations running and limiting the impact on customers.

What is the difference between data replication and data backup?

Data replication maintains copies of primary databases to keep data continuously available, while data backup creates copies of data at specific points in time, typically stored in separate locations for archival and recovery purposes. Replication keeps copies synchronized and enables immediate failover when the primary system fails, but it also propagates accidental deletions and corruption to the replicas. Backups let you restore an earlier, known-good state after data loss or corruption, so replication complements backups rather than replacing them.

Can data replication tools seamlessly integrate with existing databases and systems?

Most data replication tools integrate with common databases and systems, but coverage varies by tool. Check each tool's supported sources, targets, and versions, and whether capturing changes requires configuration on the source, such as adjusting database log settings for log-based CDC.
