Data replication techniques

Data can be replicated on demand, in batches on a schedule, or in real time as it is written, updated, or deleted in the source. Typical data replication patterns used by data engineers are ETL (extract, transform, load) and EL(T) pipelines.

The most popular data replication techniques are full replication, incremental replication, and log-based incremental replication. Full replication and incremental replication allow for batch processing. Meanwhile, log-based incremental replication can be a technique for near real-time replication.

- Full Replication: This technique ensures complete data consistency between the source and destination databases by copying all records. It's suitable for smaller datasets or initial replication tasks but may become resource-intensive for larger datasets.

- Incremental Replication: Incremental replication transfers only the changed data since the last update, reducing network bandwidth usage and replication times. It's efficient for large datasets but requires a more complex setup and may lead to data inconsistencies if not implemented properly.

- Log-based Replication: Log-based replication utilizes database transaction logs to identify changes and replicate them in near real-time. It efficiently captures and replicates changes, minimizing data latency. However, it requires support from the source database and is limited to specific event types.

- Snapshot Replication: Snapshot replication captures the state of data at a specific time and replicates it to the destination. It facilitates point-in-time recovery but increases storage requirements and replication times for large datasets.

- Key-based Replication: This technique focuses on replicating only data that meets specific criteria or conditions, such as changes to specific tables or rows identified by unique keys. It's useful for selective replication but may require more complex configuration.

- Transactional Replication: Transactional replication replicates individual transactions from the source database to the target database, ensuring data consistency across both systems. It's commonly used in scenarios where data integrity is critical, such as financial transactions or order processing.

- Merge Replication: Merge replication allows changes to be made independently at both the source and destination databases, with the ability to reconcile differences and merge conflicting modifications. It's suitable for distributed environments where data is updated at multiple locations but requires careful conflict resolution mechanisms.

- Change Data Capture (CDC): CDC involves capturing and tracking changes made to the source data, including inserts, updates, and deletes, and replicating these changes to the destination in near real-time. It provides granular visibility into data modifications and is commonly used for real-time analytics, data warehousing, and maintaining data synchronization between operational systems.

- Bi-directional Replication: Bi-directional replication ensures data consistency between multiple databases or systems by replicating changes bidirectionally. It facilitates decentralized data synchronization and fault tolerance. However, it requires careful conflict resolution mechanisms to handle data conflicts and increases complexity and management overhead compared to uni-directional replication.

- Near Real-time Replication: This method replicates changes almost instantly, providing up-to-date data for real-time analytics and decision-making. It minimizes data latency but requires high network bandwidth and low latency connections for efficient replication, potentially increasing system overhead and resource utilization.

- Peer-to-peer Replication: Peer-to-peer replication enables decentralized data synchronization and fault tolerance by distributing data processing and reducing single points of failure. However, it requires complex conflict resolution mechanisms to handle data conflicts and increases management overhead.
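
To make the difference between full and incremental replication concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `customers` table, its `updated_at` cursor column, and the function names are hypothetical and chosen only for illustration; this is not how any particular tool implements these modes.

```python
import sqlite3

# Hypothetical table used only for this illustration.
SCHEMA = "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER)"


def make_db(rows=()):
    """Create an in-memory database holding the example customers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


def full_replication(src, dst):
    """Copy every row, replacing whatever the destination held before."""
    rows = src.execute("SELECT id, name, updated_at FROM customers").fetchall()
    dst.execute("DELETE FROM customers")
    dst.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    dst.commit()
    return len(rows)


def incremental_replication(src, dst, last_cursor):
    """Copy only rows changed after last_cursor; return (rows copied, new cursor)."""
    rows = src.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_cursor,),
    ).fetchall()
    # INSERT OR REPLACE acts as an upsert on the primary key.
    dst.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    dst.commit()
    new_cursor = rows[-1][2] if rows else last_cursor
    return len(rows), new_cursor
```

A typical run would call `full_replication` once for the initial load, store the highest `updated_at` value, and pass it to `incremental_replication` on each later sync. Note that a cursor column like this cannot see rows deleted in the source, which is one reason log-based approaches are used when deletes must be propagated.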

Data Replication Vs. Data Integration Vs. Data Synchronization

1. Directionality of Data Flow

- Data Replication: This typically involves one-way data transfer from a source to a target, often for backup or distribution purposes.

- Data Integration: Data integration involves combining data from multiple sources into a unified view, which can involve bidirectional data flow during the transformation and loading process.

- Data Synchronization: This includes bidirectional data exchange, ensuring that changes made in one source are reflected in others in near real-time, maintaining consistency across systems.

2. Purpose and Focus

- Data Replication: It primarily focuses on redundancy, disaster recovery, and distributed computing, ensuring data availability and reliability.

- Data Integration: Data integration mainly focuses on providing a unified view of data from disparate sources, enabling comprehensive analysis and decision-making.

- Data Synchronization: It aims to maintain consistency and coherence among data from multiple sources in near real-time, minimizing data discrepancies across systems.

3. Frequency of Updates

- Data Replication: This typically involves periodic or scheduled updates, where changes in the source are replicated to the target at predefined intervals.

- Data Integration: Data integration may involve batch processing or real-time updates. It depends on the requirements of the system and the frequency of changes in data.

- Data Synchronization: It often operates in near real-time, ensuring that changes made in one source are quickly propagated to other sources, which helps minimize data latency and discrepancies.

A few best practices to follow when doing data replication

- Plan Carefully: Before initiating replication processes, it's crucial to clearly define your requirements and objectives.

- Assure Data Quality: Maintain accurate and consistent data across systems by validating and cleaning it regularly.

- Monitor Performance: Regularly monitor replication performance to detect issues and optimize procedures.

- Implement Security Measures: Ensure the security of sensitive data during replication by implementing access controls and encryption.

- Document Processes: Record replication setups and procedures so they can be referenced later when troubleshooting.

- Test Intensively: Prior to deployment, conduct thorough testing to validate replication accuracy and reliability.

Suggested Read: Types of Data Replication Strategies: The Ultimate Guide

A few of the best data replication tools

1. Airbyte

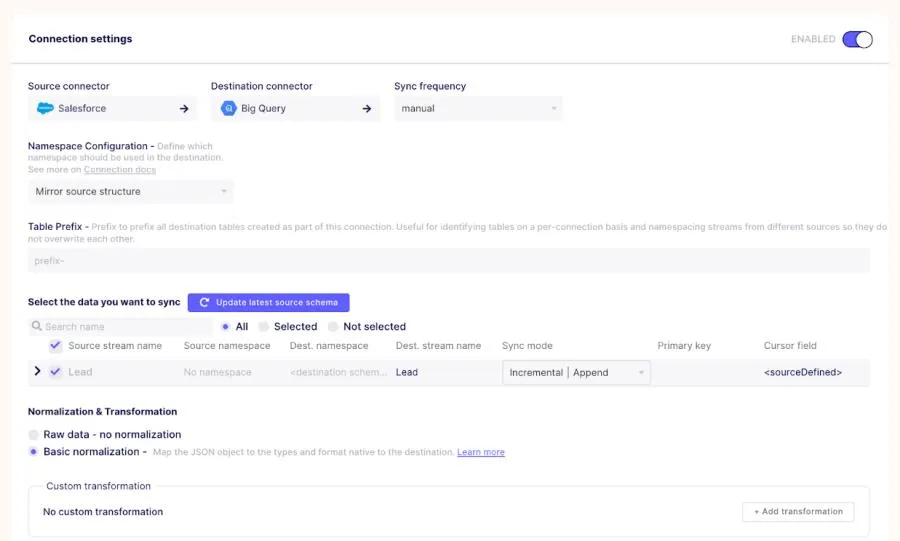

Airbyte is a data replication tool that moves data between a wide range of sources and destinations. It supports several replication modes, so data can be transferred in batches on a schedule, on demand, or in near real-time. Airbyte is available as a self-hosted or a cloud-managed deployment, so teams can choose the option that fits their requirements.

Suggested Read: An overview of Airbyte’s replication modes

2. Oracle GoldenGate

This all-inclusive tool for data integration and replication allows real-time data transfer between disparate systems. GoldenGate guarantees high availability, data consistency, and scalability by supporting a wide range of databases, platforms, and cloud environments.

3. AWS Database Migration Service (DMS)

AWS DMS helps migrate and replicate Oracle, MySQL, PostgreSQL, and other database engines securely. It provides continuous data replication, keeps downtime low during migrations, and integrates with other AWS services.

Using a data replication tool to solve challenges

Implementing a data integration solution doesn’t come without challenges. At Airbyte, we have interviewed hundreds of data teams and discovered that most of the issues they confront are universal. To simplify the work of data engineers, we have created an open-source data replication framework.

This section covers common challenges and how a data replication tool like Airbyte can help data engineers overcome them.

Using, maintaining, and creating new data sources

If your goal is to achieve seamless data integration from several sources, the solution is to use a tool with several connectors. Having ready-to-use connectors is essential because you don’t want to create and maintain several custom ETL scripts for extracting and loading your data.

The more connectors a solution provides, the better, as you may be adding sources and destinations over time. Apart from providing a large number of ready-to-use connectors, Airbyte is an open-source data replication tool. Using an open-source tool is essential because you have access to the code, so if a connector’s script breaks, you can fix it yourself.

If you need special connectors that don’t currently exist on Airbyte, you can create them using Airbyte’s CDK, making it easy to address the long tail of integrations.

Reducing data latency

Depending on how up-to-date the data needs to be, extraction can run at a lower or higher frequency. The higher the frequency, the more processing resources and the more optimized scripts you need to keep replication latency low.

Airbyte allows you to replicate data in batches on a schedule, as often as every 5 minutes, and it also supports log-based CDC for several sources like Postgres, MySQL, and MSSQL. As we have seen before, log-based CDC is a method to achieve near real-time data replication.
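
As a rough illustration of what the consuming side of log-based CDC does, the sketch below applies an ordered stream of change events to an in-memory replica. The event shape (`op`, `key`, `row`, `position`) is a hypothetical format invented for this example; real transaction logs and CDC tools each define their own formats.

```python
from typing import Any, Dict, List


def apply_change_events(
    replica: Dict[int, Dict[str, Any]],
    events: List[Dict[str, Any]],
) -> int:
    """Apply ordered change events to the replica.

    Returns the log position of the last applied event, or -1 if there
    were no events, so the next sync can resume after that position.
    """
    last_position = -1
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Inserts and updates both carry the full new row in this sketch.
            replica[key] = event["row"]
        elif op == "delete":
            replica.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
        last_position = event["position"]
    return last_position
```

Because the events are applied in log order and include deletes, the replica converges to the source state without re-reading whole tables. Persisting the returned position is what lets a sync resume where it left off.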

Increasing data volume

The amount of data extracted has an impact on system design. As the amount of data rises, the solutions for low-volume data do not scale effectively. With vast volumes of data, you may require parallel extraction techniques, which are sophisticated and challenging to maintain from an engineering standpoint.

Airbyte is fully scalable. You can run it locally using Docker or deploy it to any cloud provider if you need to scale it vertically. It can also be scaled horizontally by using Kubernetes.

Handling schema changes

When the data schema changes in the source, data replication may fail if the schema on the destination has not been updated. As your company grows, the schema is frequently modified to reflect changes in business processes, and each revision can cost engineering hours.

Dealing with schema changes coming from internal databases and external APIs is one of the most difficult challenges to overcome. Data engineers commonly have to drop the data in the destination to do a full data replication after updating the schema.

The most effective way to address this problem is to sync with the source data producers and advise them not to make unnecessary structural updates. Still, this is not always possible, and changes are unavoidable.
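
When changes are unavoidable, one common mitigation is to detect schema drift before a sync and apply additive changes automatically. The following is a minimal sketch of that idea using `sqlite3`; the function names are hypothetical, and it only handles new columns. Dropped, renamed, or retyped columns would still need manual handling.

```python
import sqlite3


def table_columns(conn, table):
    """Return {column_name: declared_type} for a table.

    The table name is interpolated into SQL, so it must come from
    trusted configuration, never from user input.
    """
    # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
    return {row[1]: row[2] for row in conn.execute(f"PRAGMA table_info({table})")}


def add_missing_columns(src, dst, table):
    """Add columns that exist in the source but not in the destination."""
    src_cols = table_columns(src, table)
    dst_cols = table_columns(dst, table)
    added = []
    for name, col_type in src_cols.items():
        if name not in dst_cols:
            dst.execute(f"ALTER TABLE {table} ADD COLUMN {name} {col_type}")
            added.append(name)
    dst.commit()
    return added
```

Running `add_missing_columns` before each sync lets new source columns flow through without dropping and reloading the destination table; existing destination rows simply hold NULL for the new column until they are next updated.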

Normalizing and transforming data

A fundamental principle of ELT philosophy is that raw data should always be available. If the destination has an unmodified version of the data, it can be normalized and transformed without syncing data again. But this implies a transformation step needs to be done to have the data in your desired format.

Airbyte integrates with dbt to perform transformation steps using SQL. Airbyte also automatically normalizes your data by creating a schema and tables and converting it to the format of your destination using dbt.
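
The ELT idea of keeping raw data and deriving normalized tables from it can be sketched in a few lines. This example is a stand-in written in plain Python with `sqlite3`, not dbt and not Airbyte's normalization; the `raw_users` and `users` tables and the record fields are assumptions made for illustration.

```python
import json
import sqlite3


def load_raw(conn, records):
    """Load step: store each record unmodified as a JSON string."""
    conn.execute("CREATE TABLE IF NOT EXISTS raw_users (payload TEXT NOT NULL)")
    conn.executemany(
        "INSERT INTO raw_users VALUES (?)",
        [(json.dumps(record),) for record in records],
    )
    conn.commit()


def normalize(conn):
    """Transform step: rebuild the typed users table from the raw payloads."""
    conn.execute("DROP TABLE IF EXISTS users")
    conn.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, signup_year INTEGER)"
    )
    payloads = conn.execute("SELECT payload FROM raw_users").fetchall()
    for (payload,) in payloads:
        record = json.loads(payload)
        conn.execute(
            "INSERT INTO users VALUES (?, ?, ?)",
            (record["id"], record["email"].lower(), int(record["signed_up"][:4])),
        )
    conn.commit()
```

Because `raw_users` is never modified, `normalize` can be changed and rerun at any time without extracting the data from the source again.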

Monitoring and observability

Working with several data sources produces overhead and administrative issues. As your number of data sources increases, the data management needs also expand, increasing the demand for monitoring, orchestration, and error handling.

You must monitor your extraction system on multiple levels, including resource consumption, errors, and reliability (has your script run?).

Airbyte’s monitoring provides detailed logs of any errors during data replication, so you can easily debug them or report an issue to the community, where other contributors can help you solve it. If you need more advanced orchestration, you can integrate Airbyte with open-source orchestration tools like Airflow, Prefect, and Dagster.

Depending on data engineers

Building custom data replication strategies requires experts. But being a bottleneck for stakeholders is one of the most unpleasant situations a data engineer can experience. On the other hand, stakeholders may feel frustrated by relying on data engineers to set up a data replication infrastructure.

Using a data replication tool can solve most of the challenges described above. A key benefit of employing such tools is that data analysts and BI engineers can become more independent and begin working with data seamlessly and as soon as possible, in many cases, without depending on data engineers.

Airbyte is trying to democratize data replication and make it accessible for data professionals of all technical levels. When working with Airbyte, you can use the user-friendly data replication UI.

How to validate the data replication process?

The following steps can help verify the data replication process:

- Compare Source and Target Data: After replication, ensure that the data in the target system corresponds accurately to the source.

- Check for Consistency: Verify the integrity and consistency of data across all systems involved in the replication process.

- Review Error Logs: Thoroughly examine replication error logs to identify and address any issues or inconsistencies.

- Perform Reconciliation: Regularly reconcile data to identify and resolve any discrepancies between source and target systems.

- Conduct End-to-End Testing: Validate the accuracy and reliability of the replication process by performing comprehensive testing across various scenarios.
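
The comparison and reconciliation steps above can be sketched as a row count plus checksum check, followed by a key-level diff when the check fails. This is a minimal illustration using `sqlite3` and `hashlib`; the function names are hypothetical, table and key names are assumed to come from trusted configuration, and both connections are assumed to return values as the same Python types.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key):
    """Return (row_count, sha256 hex digest) over all rows ordered by key."""
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def find_mismatched_keys(src, dst, table, key):
    """Return sorted keys that are missing on one side or whose rows differ."""
    query = f"SELECT {key}, * FROM {table}"
    src_rows = {row[0]: row[1:] for row in src.execute(query)}
    dst_rows = {row[0]: row[1:] for row in dst.execute(query)}
    all_keys = set(src_rows) | set(dst_rows)
    return sorted(k for k in all_keys if src_rows.get(k) != dst_rows.get(k))
```

Comparing fingerprints is cheap and tells you whether the tables match; `find_mismatched_keys` loads both tables into memory, so on large tables it is better applied to a sample or a key range rather than the whole table.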

Suggested Read: Validate data replication pipelines with data-diff

Conclusion

In this article, we learned what data replication is, some common examples of replication, and the most popular data replication techniques. We also reviewed the challenges that many data engineers face and, most importantly, how a data replication tool can help data teams better leverage their time and resources.

The benefits of data replication are clear. But as the number of sources and destinations continues to grow, companies need to be prepared for the challenges that come with it. That’s why it’s essential to have a reliable and scalable data replication strategy in place.

FAQ

When to use data replication?

A business should consider data replication when it needs to ensure high availability, disaster recovery, or distributed computing. It's beneficial for organizations that cannot afford downtime and require continuous access to critical data. Data replication helps mitigate the risk of data loss by creating redundant copies of data across multiple systems or locations, ensuring data availability in the event of hardware failures, natural disasters, or other unforeseen disruptions.

Can we replicate data without CDC?

Yes, it is possible to replicate data between systems without Change Data Capture (CDC). CDC provides near real-time replication by capturing and propagating only the modifications made to the source data, but it is not the only method available. Traditional replication techniques periodically copy entire datasets from a source to a target system. Although full replication is less efficient in terms of processing and bandwidth, it can still be used in situations where real-time data synchronization is not necessary.
