What is OLTP Database: A Guide to Online Transaction Processing

Businesses need a database to store and manage their daily transactions. Payments, inventory updates, and customer records are classic examples of this transactional data.

To centralize and process this data, they use Online Transaction Processing (OLTP) systems: databases optimized for storing and processing high volumes of simple online transactions in real time. OLTP systems support many concurrent users and maintain data integrity while minimizing response time for database changes.

In this article, we explain what OLTP is, how it serves as a foundational system for modern companies, and the key differences between OLTP and OLAP solutions. We examine common OLTP use cases and list best practices for organizations to implement effective OLTP systems.

TL;DR: OLTP Systems at a Glance

- OLTP databases process high volumes of short, real-time transactions like e-commerce orders, banking payments, and inventory updates with millisecond-level performance

- Key characteristics include ACID compliance and high concurrency support. Three-tier architecture is a common implementation pattern rather than a defining feature of OLTP systems

- Differs from OLAP by focusing on rapid transaction execution rather than complex analytics, though modern HTAP systems now combine both capabilities

- Popular implementations include MySQL, PostgreSQL, Oracle, and cloud-native solutions with serverless scaling and advanced security features

- Modern trends emphasize AI integration, autonomous operations, and seamless data integration with analytics platforms through CDC and stream processing

What Is OLTP and How Does It Power Modern Business Operations?

Online Transaction Processing (OLTP) databases are designed to process a large number of short, interactive transactions in real time.

Some common examples include:

- E-commerce websites processing customer orders

- Banking systems handling financial transactions

- Airline reservation systems managing flight bookings

- POS systems in retail stores facilitating sales and inventory updates

Businesses rely on OLTP systems to handle large volumes of concurrent database transactions without sacrificing consistency and accuracy. A database transaction is a unit of work made up of one or more operations, such as inserting, updating, or deleting rows, that either all take effect or all fail together.

Most OLTP databases are relational. They organize data in tables consisting of rows and columns, and applications issue SQL statements to read and modify that data inside transactions.
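
To make the idea of a transaction concrete, here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for a production OLTP database. The `inventory` and `orders` tables and the `place_order` and `make_demo_db` helpers are hypothetical names chosen for illustration, not part of any particular product.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with one product in stock (demo data)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE inventory (product_id INTEGER PRIMARY KEY, stock INTEGER NOT NULL)"
    )
    conn.execute(
        "CREATE TABLE orders ("
        " order_id INTEGER PRIMARY KEY,"
        " product_id INTEGER NOT NULL,"
        " qty INTEGER NOT NULL)"
    )
    conn.execute("INSERT INTO inventory VALUES (1, 10)")
    conn.commit()
    return conn


def place_order(conn: sqlite3.Connection, product_id: int, qty: int) -> int:
    """Decrement stock and record the order as one transaction."""
    # "with conn" commits if the block succeeds and rolls back if it raises,
    # so the two statements take effect together or not at all.
    with conn:
        conn.execute(
            "UPDATE inventory SET stock = stock - ? WHERE product_id = ?",
            (qty, product_id),
        )
        cur = conn.execute(
            "INSERT INTO orders (product_id, qty) VALUES (?, ?)",
            (product_id, qty),
        )
    return cur.lastrowid
```

Calling `place_order(make_demo_db(), 1, 3)` updates the stock row and inserts the order row in a single commit, which is the short, write-oriented pattern that OLTP systems are built around.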

How Does OLTP Architecture Enable High-Performance Transaction Processing?

An OLTP system typically follows a three-tier architecture:

- Presentation layer – the front-end or user interface where transactions are generated

- Logic layer – the business logic or application layer that processes data based on predefined rules

- Data layer – the data store (DBMS + database server) where each transaction and related data are stored and indexed

Because each tier runs on its own infrastructure and release cycle, a tier can usually be updated or scaled without changing the others.

How do OLTP databases work behind the scenes?

At its core, an OLTP system accepts a transaction, validates and applies it in the back-end database, and makes the committed result visible to subsequent reads.

Modern OLTP systems increasingly leverage cloud-native architectures that separate compute from storage. Systems like Amazon Aurora and Google Spanner decouple transaction processing from persistent storage, which removes monolithic bottlenecks and lets each layer scale independently. Oracle's sharding implementation takes a shared-nothing approach, distributing data across many independent shards so that capacity grows roughly linearly as shards are added while transactions remain ACID-compliant.

What Are the Key Characteristics That Define OLTP Systems?

- Fast query processing – low-latency responses, typically measured in milliseconds; in-memory implementations and storage-class (persistent) memory that bridges the DRAM-flash gap can push individual operations toward microsecond-level latency

- High concurrency – techniques such as row-level locking, multiversion concurrency control (MVCC), and optimistic concurrency control allow many users to transact simultaneously; lock-free designs avoid lock-based deadlocks but must instead detect and retry conflicting transactions

- ACID properties – Atomicity, Consistency, Isolation, Durability ensure reliable operations through write-ahead logging and distributed consensus mechanisms

- Support for simple transactions – optimized for order entry, inventory updates, customer service, etc. (not complex analytics), while maintaining transactional integrity across distributed environments
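
Atomicity, the "A" in ACID, is easiest to see when a transaction fails halfway. The sketch below again uses Python's `sqlite3` as a stand-in; the `accounts` table, its `CHECK` constraint, and the `open_bank` and `transfer` helpers are assumptions made for this example.

```python
import sqlite3


def open_bank() -> sqlite3.Connection:
    """Create two demo accounts; balances may never go negative."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts ("
        " id INTEGER PRIMARY KEY,"
        " balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
    conn.commit()
    return conn


def transfer(conn: sqlite3.Connection, src: int, dst: int, amount: int) -> bool:
    """Move funds between accounts; return False if the transfer was rolled back."""
    try:
        with conn:  # commit on success, roll back on any exception
            # Credit first, so a failing debit has a completed write to undo.
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?",
                (amount, dst),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        # The CHECK constraint rejected an overdraft; the credit was undone too.
        return False
```

When the debit violates the constraint, the earlier credit is rolled back with it, so the table never shows money that was added to one account without being removed from the other.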

How Do OLTP and OLAP Systems Complement Each Other?

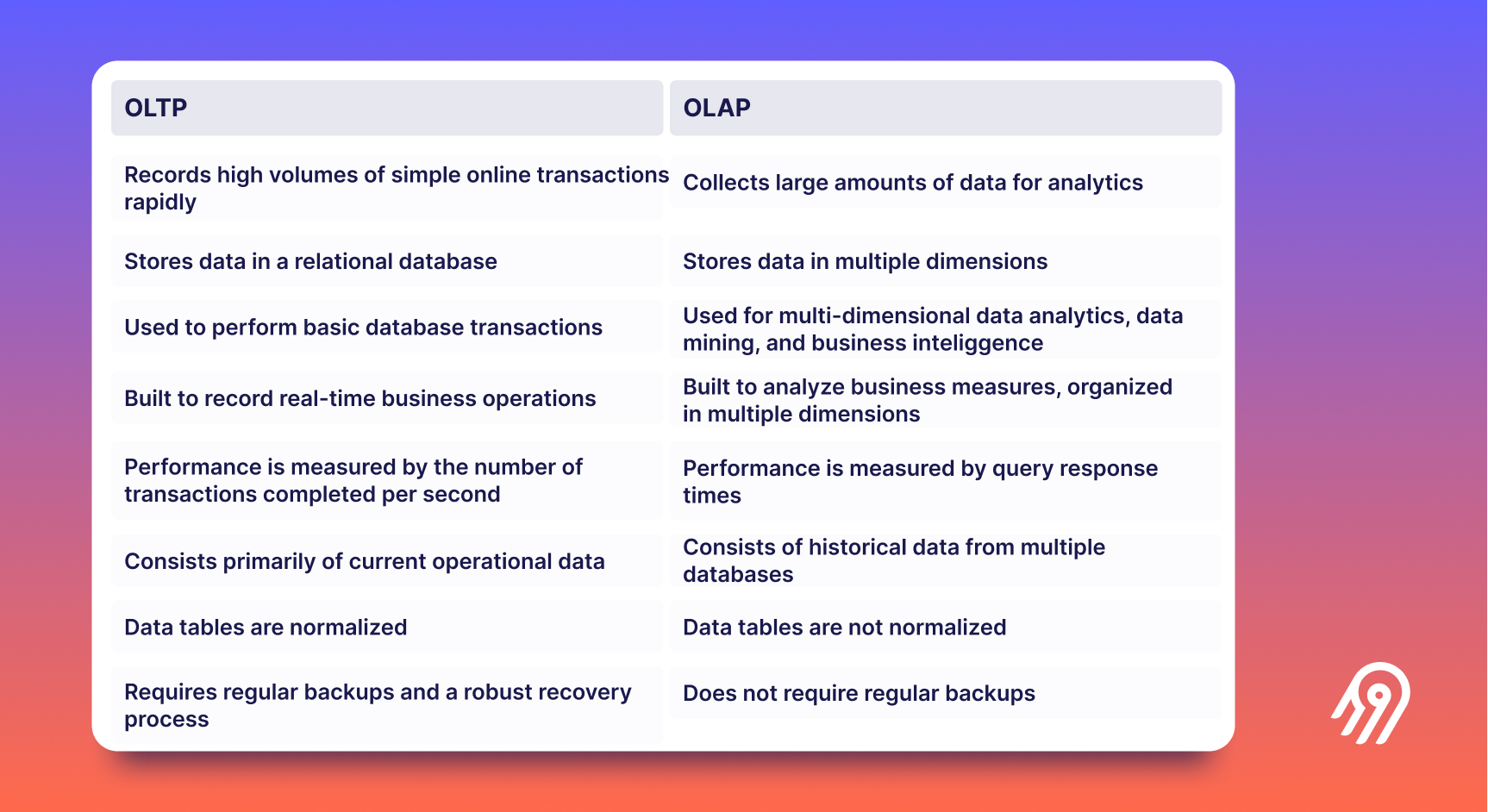

OLAP (Online Analytical Processing) databases enable data aggregation and complex analysis, whereas OLTP focuses on rapid transaction execution. OLAP systems often integrate data from OLTP databases into a centralized repository such as a cloud data warehouse. Modern data integration platforms use Change Data Capture (CDC) to stream changes from OLTP systems to analytical environments in near real time.
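
The difference in query shape can be sketched in a few lines. The example below runs both styles against the same small `sqlite3` table purely for illustration; in practice the analytical query would typically run on a separate warehouse. The `orders` schema, the sample rows, and the function names are hypothetical.

```python
import sqlite3


def make_orders_db() -> sqlite3.Connection:
    """Build a tiny demo table of orders."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders ("
        " order_id INTEGER PRIMARY KEY,"
        " customer TEXT, region TEXT, amount INTEGER)"
    )
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?, ?)",
        [(1, "ana", "EU", 120), (2, "bo", "US", 80),
         (3, "ana", "EU", 40), (4, "cy", "US", 200)],
    )
    conn.commit()
    return conn


def get_order(conn: sqlite3.Connection, order_id: int):
    """OLTP-style access: fetch one row by primary key."""
    return conn.execute(
        "SELECT order_id, customer, region, amount FROM orders WHERE order_id = ?",
        (order_id,),
    ).fetchone()


def revenue_by_region(conn: sqlite3.Connection):
    """OLAP-style access: scan and aggregate many rows."""
    return conn.execute(
        "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
    ).fetchall()
```

The first query touches a single row through the primary key, while the second reads every row to compute totals, which is why the two workloads are usually tuned and often hosted separately.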

What Database Systems Are Best Suited for OLTP Workloads?

OLTP databases fall into two main types:

- Relational databases – the most common choice, often delivered as cloud services

- NoSQL databases – useful for large volumes of unstructured or semi-structured data

Popular OLTP databases

- MySQL – supports query optimization, replication, automatic failover, and monitoring with enhanced JSON path indexing for document queries

- PostgreSQL – offers MVCC, indexing, partitioning, replication, and high availability with improved autovacuum algorithms for write-intensive workloads

- Microsoft SQL Server – includes in-memory OLTP with optimized locking mechanisms and Always On Availability Groups for enterprise deployments

- Oracle Database – provides Real Application Clusters and advanced scaling with autonomous optimization capabilities that reduce administrative overhead

- MongoDB – a NoSQL document database with flexible schema and improved transaction support across replica sets

NewSQL systems (CockroachDB, TiDB, Google Spanner) combine SQL familiarity with horizontal scaling and global consistency, incorporating advanced vector indexing for machine learning applications and distributed ACID guarantees across geo-partitioned data.

How Do Serverless Architectures Transform OLTP Performance and Scalability?

Serverless OLTP platforms have matured significantly. Systems like Aurora Serverless v2 adjust capacity in fine-grained increments between a configured minimum and maximum, typically without interrupting running transactions. These platforms remove manual server provisioning by allocating resources dynamically based on transaction load, which makes them well suited to variable workloads.

Dynamic resource allocation

Auto-scaling at sub-second granularity reduces idle costs and absorbs traffic spikes while maintaining availability through multi-zone deployment strategies. Modern implementations handle traffic variations up to 100x without manual intervention, automatically adjusting compute resources based on transactional demand patterns.

Technical innovations

Serverless integration has matured beyond experimental adoption, with cloud providers offering containerized OLTP instances that preserve transactional integrity within microservices architectures. Advanced consensus protocols maintain consistency across rapidly shifting compute nodes, while warm instance pools and predictive scaling algorithms mitigate cold-start latency to sub-100ms levels.

Market impact

Organizations adopting serverless OLTP report cost reductions while maintaining performance through per-second billing models and automated resource optimization that eliminates over-provisioning during low-demand periods.

What Are the Latest Security Innovations for Protecting OLTP Systems?

Modern OLTP security extends beyond traditional encryption to encompass multi-layer protection strategies and automated compliance frameworks that address evolving cyber threats.

- Multi-layer encryption – Advanced implementations include attribute-based encryption where sensitive data remains cryptographically segmented from operational data, reducing breach exposure through granular access controls and field-level protection

- Confidential computing integration – Secure enclaves isolate sensitive operations within trusted execution environments, preventing memory scraping attacks without compromising query performance through hardware-assisted security boundaries

- Automated compliance frameworks – Blockchain-audited trails create immutable records of every schema change and data access event, with cryptographically chained log entries that satisfy regulatory requirements while reducing audit preparation time

- Geo-distributed security – Modern systems implement geo-fenced data storage that physically isolates regulated data within regional boundaries while maintaining global accessibility through encrypted replication channels
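
The "cryptographically chained log entries" mentioned above can be illustrated with a simple hash chain, in which each entry's hash covers the previous entry's hash. This is a minimal sketch, not how any specific database or ledger product implements audit trails; the entry layout and the `append_entry` and `verify_chain` names are assumptions.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with this event's content."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> dict:
    """Append an audit event whose hash depends on everything before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev_hash": prev_hash, "hash": _digest(prev_hash, event)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Because each hash depends on the one before it, changing an old entry invalidates every later entry, which is what makes after-the-fact tampering detectable.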

What Are the Most Common OLTP Use Cases Across Industries?

Real-world implementations demonstrate OLTP's versatility across sectors. Capital One's migration to Amazon Aurora enabled real-time fraud detection within 50ms of card transactions, processing machine learning models on live transactional data to reduce false positives. Walmart's adoption of Google Cloud Spanner handles traffic spikes during peak shopping periods, processing over one million transactions per minute while maintaining inventory accuracy across thousands of stores.

- E-commerce systems – orders, payments, inventory with real-time pricing optimization based on demand patterns

- Banking and financial services – secure transaction processing supporting fraud detection algorithms that analyze spending patterns instantaneously

- Reservation systems – travel and hospitality bookings with dynamic pricing and availability management

- CRM platforms – real-time customer data synchronization across multiple touchpoints for personalized service delivery

What Are the Main Advantages and Limitations of OLTP Databases?

Pros

- Sub-second response times with modern hardware acceleration achieving microsecond-level latency

- ACID-compliant data integrity through advanced consensus protocols and distributed transaction management

- Real-time processing capabilities that enable immediate business decision-making

- High concurrency support through optimized locking mechanisms and parallel processing architectures

- Cloud-native scalability with automatic resource allocation and multi-zone redundancy

Cons

- Limited analytical capabilities compared to specialized OLAP systems, though HTAP architectures increasingly address this gap

- Resource-intensive infrastructure requirements for high-performance implementations, particularly for in-memory processing

- Complex concurrency management requiring specialized expertise for optimization and troubleshooting

- Storage costs can escalate with high-volume transactional data retention requirements

How Do Hybrid Transactional/Analytical Processing (HTAP) Systems Transform Real-Time Business Intelligence?

HTAP architectures represent a fundamental shift in database design, eliminating traditional trade-offs between transactional speed and analytical depth by converging OLTP and OLAP capabilities within unified platforms. This convergence enables real-time analytics on live transactional data without ETL pipelines, transforming how organizations derive insights from operational activities.

Unified Engine Architecture

Modern HTAP implementations like TiDB demonstrate dual-engine architectures where TiKV handles OLTP workloads while TiFlash manages analytical queries. This integration enables real-time analytics on live transactional data through automatic data replication using Raft consensus protocols. E-commerce platforms leverage this architecture for instant inventory optimization, analyzing sales trends as transactions occur to prevent stockouts and reduce overordering.

Financial institutions implement HTAP for real-time fraud detection by running predictive models directly on transaction streams, reducing analysis latency from hours to milliseconds. Supply chain systems use converged processing for dynamic route optimization, correlating shipment transactions with real-time weather and traffic analytics to minimize delivery delays.

Technical Implementation Advantages

HTAP systems resolve inherent conflicts between row-oriented OLTP operations and columnar OLAP processing through shared buffer architectures and cache invalidation protocols. Systems like YugabyteDB maintain transactional integrity during schema modifications while supporting distributed backups and online index builds. Performance benchmarks reveal HTAP systems achieve significantly faster mixed workloads than traditional partitioned setups, though resource allocation balance remains crucial during peak loads.

Business Impact and Use Cases

The architectural shift transforms decision-making from periodic batch processing to continuous intelligence. Organizations report improved deployment speed and reduced analytical latency, with technical teams regaining focus on business value creation rather than maintaining separate transactional and analytical data pipelines.

What Performance Optimization Techniques Maximize OLTP System Efficiency?

Performance optimization in OLTP environments requires systematic approaches spanning indexing strategies, concurrency control, memory management, and storage architecture. Modern optimization techniques leverage machine learning algorithms, hardware acceleration, and predictive scaling to achieve unprecedented throughput levels.

Advanced Indexing and Storage Optimization

Adaptive indexing techniques use multi-armed bandit algorithms to evaluate potential indexes against simulated query workloads, automatically creating and dropping indexes based on access patterns. Hash indexing provides average-case O(1) equality lookups, which suits session management applications, while B-tree structures keep keys in sorted order and therefore serve the range queries common in financial reporting systems.
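
The trade-off between hash and ordered indexes can be sketched with plain Python data structures. Here a `dict` stands in for a hash index and a sorted list searched with `bisect` stands in for a B-tree; real database indexes are page-based and far more elaborate, and the sample rows and function names are hypothetical.

```python
import bisect


def build_indexes(rows):
    """Return (hash_index, sorted_keys) for a list of (key, value) rows."""
    hash_index = dict(rows)          # key -> value, average O(1) equality lookup
    sorted_keys = sorted(hash_index)  # ordered keys, O(log n) search
    return hash_index, sorted_keys


def point_lookup(hash_index, key):
    """Equality lookup: the hash index finds the row directly."""
    return hash_index.get(key)


def range_lookup(hash_index, sorted_keys, low, high):
    """Range lookup: binary-search both bounds, then read the keys in between."""
    start = bisect.bisect_left(sorted_keys, low)
    stop = bisect.bisect_right(sorted_keys, high)
    return [(k, hash_index[k]) for k in sorted_keys[start:stop]]
```

A hash index alone cannot answer the range query without scanning every key, because hashing discards ordering; the ordered structure answers it by locating the two bounds and reading the keys between them.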

Storage tiering separates transaction logs from data files on high-performance devices, with modern implementations leveraging persistent memory technologies that bridge DRAM-flash latency gaps. NVMe-oF TCP implementations now rival traditional RDMA performance while requiring significantly less infrastructure investment, making high-performance storage accessible to mid-market organizations.

Memory and Connection Management

In-memory OLTP implementations eliminate disk bottlenecks through memory-optimized tables and natively compiled stored procedures, achieving throughput improvements in payment processing and inventory management systems. Buffer pool optimization targets high hit rates through predictive algorithms that analyze access patterns, reducing physical I/O operations while maintaining data consistency.

Connection pooling optimizations reduce overhead through dynamic sizing algorithms that maintain optimal utilization rates. Modern implementations automatically adjust pool sizes based on workload patterns, preventing resource exhaustion during traffic spikes while minimizing idle connection overhead during low-demand periods.
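
As a rough illustration of connection pooling, the sketch below keeps a fixed number of open connections in a queue and lends them out on demand. It is deliberately simpler than the dynamically sized pools described above, and the `ConnectionPool` class is a hypothetical example rather than the API of any real driver. Note that each `:memory:` connection in SQLite is its own separate database, which is acceptable only because this is a demo.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """Fixed-size pool: connections are opened once and reused."""

    def __init__(self, size: int, database: str = ":memory:"):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            # check_same_thread=False lets a connection be handed between threads.
            self._idle.put(sqlite3.connect(database, check_same_thread=False))

    @contextmanager
    def connection(self, timeout: float = 1.0):
        """Borrow a connection; raises queue.Empty if none frees up in time."""
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # always return the connection to the pool

    def idle_count(self) -> int:
        """Number of connections currently available to borrow."""
        return self._idle.qsize()
```

A caller writes `with pool.connection() as conn:` and runs its statements inside the block. Bounding the pool size caps the load placed on the database, and reusing connections avoids paying the connection setup cost on every request.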

Benchmarking and Monitoring Standards

TPC-C benchmarks provide standardized performance measurement for OLTP workloads, simulating wholesale supplier operations with mixed transaction types including order processing, payment verification, and inventory updates. Organizations use these benchmarks to evaluate system performance across different hardware configurations and software optimizations.

Monitoring implementations track key performance indicators including transaction throughput, lock wait times, buffer pool hit ratios, and query execution plans. Automated alerting systems detect performance degradation patterns, triggering optimization procedures before user experience impacts occur.
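
Two of these indicators reduce to simple arithmetic. The sketch below shows one common way to compute them from raw counters; the function names, the counter names, and the 0.90 alert threshold are illustrative assumptions, and each database exposes its own counters under different names.

```python
def transactions_per_second(committed: int, elapsed_seconds: float) -> float:
    """Throughput: committed transactions divided by the measurement window."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return committed / elapsed_seconds


def buffer_hit_ratio(logical_reads: int, physical_reads: int) -> float:
    """Share of page reads served from memory rather than from disk."""
    if logical_reads <= 0:
        raise ValueError("logical_reads must be positive")
    return (logical_reads - physical_reads) / logical_reads


def needs_alert(hit_ratio: float, threshold: float = 0.90) -> bool:
    """Flag a hit ratio below the (hypothetical) alerting threshold."""
    return hit_ratio < threshold
```

For example, 12,000 commits in a 60-second window is 200 transactions per second, and 50 physical reads out of 1,000 logical reads is a 95% hit ratio.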

How Can Organizations Design Effective OLTP Systems?

Best practices for schema design

- Normalize data to reduce duplication and maintain referential integrity across related tables

- Create appropriate indexes on frequently queried columns while monitoring index usage patterns to prevent over-indexing

- Use correct keys (primary, composite, foreign) with consideration for distributed environments and sharding strategies

- Continuously monitor and optimize query performance using execution plan analysis and automated tuning recommendations

Scalability considerations

- Horizontal scaling through intelligent sharding strategies that distribute data based on access patterns and geographic requirements

- Vertical scaling optimizations for CPU, memory, and storage resources with automatic scaling triggers based on performance metrics

- Cloud-based services integration (Amazon RDS, Azure SQL, Google Cloud SQL) with multi-zone deployment for high availability

- Replication, caching, and load balancing architectures that maintain consistency while distributing read workloads
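
Horizontal scaling depends on a routing rule that maps each row to a shard. The sketch below shows the simplest such rule, hashing the shard key and taking it modulo the shard count; the `shard_for` function and the key format are hypothetical, and production systems often use range-based or consistent-hashing schemes instead.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a shard key to a shard number in [0, num_shards)."""
    if num_shards <= 0:
        raise ValueError("num_shards must be positive")
    # A cryptographic hash gives a stable result across processes and machines,
    # unlike Python's built-in hash(), which is randomized per process for strings.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards
```

One limitation of the modulo approach is that changing `num_shards` remaps most keys, so adding a shard forces a large data migration. That cost is the usual motivation for consistent hashing or directory-based routing.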

Transaction and concurrency management

- Advanced locking mechanisms balancing shared and exclusive locks with deadlock prevention algorithms

- Isolation level optimization from Read Uncommitted to Serializable based on application consistency requirements

- Deadlock handling through timeout configurations and transaction prioritization schemes

- Optimistic concurrency control implementation for high-throughput workloads with low contention scenarios
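
Optimistic concurrency control is often implemented with a version column: a writer updates the row only if the version it read is still current. The following is a minimal sketch with `sqlite3`; the `inventory` schema and the `open_inventory`, `read_stock`, and `write_stock` helpers are assumptions made for illustration.

```python
import sqlite3


def open_inventory() -> sqlite3.Connection:
    """Create a demo table with a version counter on each row."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE inventory ("
        " product_id INTEGER PRIMARY KEY,"
        " stock INTEGER NOT NULL,"
        " version INTEGER NOT NULL)"
    )
    conn.execute("INSERT INTO inventory VALUES (1, 10, 0)")
    conn.commit()
    return conn


def read_stock(conn: sqlite3.Connection, product_id: int):
    """Return (stock, version) without taking any lock."""
    return conn.execute(
        "SELECT stock, version FROM inventory WHERE product_id = ?",
        (product_id,),
    ).fetchone()


def write_stock(conn: sqlite3.Connection, product_id: int,
                new_stock: int, expected_version: int) -> bool:
    """Write only if nobody changed the row since it was read."""
    with conn:
        cur = conn.execute(
            "UPDATE inventory SET stock = ?, version = version + 1"
            " WHERE product_id = ? AND version = ?",
            (new_stock, product_id, expected_version),
        )
    # Zero affected rows means another writer got there first: re-read and retry.
    return cur.rowcount == 1
```

If two clients read the same version and both try to write, the first succeeds and the second sees zero affected rows. No lock is held between the read and the write, which is why this approach suits workloads where conflicts are rare.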

How Can Data Integration Bridge OLTP and Analytical Systems?

CDC-based data integration streams transactional changes from OLTP databases to analytical platforms with sub-minute latency, supporting both ETL and ELT workflows while preserving operational system performance. Modern integration approaches leverage advanced replication techniques and automated schema management to maintain data consistency across distributed environments.

Platforms like Airbyte offer over 600 connectors with incremental replication capabilities and containerized extraction that avoids disrupting operational workloads. These connectors support real-time data movement from diverse OLTP sources including MySQL, PostgreSQL, SQL Server, and Oracle databases to cloud data warehouses and analytics platforms. Advanced Change Data Capture implementations monitor transaction logs continuously, capturing schema changes and data modifications with minimal impact on source system performance.

Integration architectures increasingly support both batch and streaming patterns, enabling organizations to balance real-time analytics requirements with infrastructure costs. Automated schema evolution handling ensures analytical systems adapt to operational database changes without manual intervention, while data lineage tracking provides audit trails for compliance and governance requirements.
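
The core of log-based CDC is replaying an ordered stream of change events against a downstream copy. The sketch below applies such events to an in-memory dictionary standing in for the analytical store. The event format (`lsn`, `op`, `key`, `row`) and the `apply_changes` function are hypothetical and do not reflect the wire format of any particular tool.

```python
def apply_changes(replica: dict, events: list, last_applied: int = 0) -> int:
    """Apply change events in log order and return the new log position.

    Events at or below last_applied are skipped, so replaying a batch
    after a restart does not apply the same change twice.
    """
    for event in sorted(events, key=lambda e: e["lsn"]):
        if event["lsn"] <= last_applied:
            continue  # already applied in an earlier run
        if event["op"] in ("insert", "update"):
            replica[event["key"]] = event["row"]
        elif event["op"] == "delete":
            replica.pop(event["key"], None)
        else:
            raise ValueError(f"unknown operation: {event['op']}")
        last_applied = event["lsn"]
    return last_applied
```

Tracking the last applied log position is what lets a consumer resume after a failure without re-reading the source tables, which is how CDC keeps its load on the operational database low.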

How Do You Query an OLTP Database Effectively?

SQL remains the primary language for OLTP database interactions, though modern implementations incorporate performance optimizations and security enhancements that improve both efficiency and safety.

Effective querying relies on a few techniques. Parameterized queries (prepared statements) keep user input separate from the SQL text, which prevents SQL injection and lets the database reuse query plans. Connection pooling improves resource efficiency, and query hints, where the database supports them, can steer execution plan selection.
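
The security benefit of parameterized queries is easy to demonstrate. The sketch below compares string concatenation with a bound parameter using Python's `sqlite3`; the `users` table and both lookup functions are hypothetical, and the unsafe version is included only to show the failure mode.

```python
import sqlite3


def make_users_db() -> sqlite3.Connection:
    """Create a small demo table of users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [(1, "ana"), (2, "bo")])
    conn.commit()
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str):
    """DO NOT USE: input is pasted into the SQL text and can change the query."""
    query = f"SELECT id FROM users WHERE name = '{name}' ORDER BY id"
    return [row[0] for row in conn.execute(query)]


def find_user(conn: sqlite3.Connection, name: str):
    """Safe: the driver passes the value separately from the SQL text."""
    rows = conn.execute(
        "SELECT id FROM users WHERE name = ? ORDER BY id", (name,)
    )
    return [row[0] for row in rows]
```

With the input `x' OR '1'='1`, the concatenated query matches every row, while the parameterized query treats the whole string as a literal name and matches nothing.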

What Is the Future of OLTP Database Systems?

The future of OLTP systems centers on autonomous operations, hardware acceleration, and deeper integration with machine learning workflows. Emerging trends include quantum-resistant encryption standards for long-term security, WebAssembly stored procedures for safety-isolated business logic execution, and neuromorphic hardware prototypes demonstrating energy efficiency improvements for inference workloads.

Cloud-native architectures continue evolving toward fully serverless deployments with sub-second elasticity and pay-per-operation billing models. Integration with edge computing enables distributed OLTP deployments that process transactions closer to data sources while maintaining global consistency through advanced consensus protocols.

Machine learning integration transforms database administration through continuous optimization algorithms that automatically tune configurations, predict failure scenarios, and scale resources based on workload patterns. These autonomous systems reduce operational overhead while improving performance through real-time adaptation to changing business requirements.


Frequently Asked Questions About OLTP

What is the main purpose of an OLTP system?

OLTP systems are designed to process large numbers of small, quick transactions in real time—such as payments, reservations, or inventory updates—while maintaining data accuracy and integrity.

How does OLTP differ from OLAP?

OLTP handles day-to-day operational transactions, while OLAP is built for analyzing aggregated data. OLTP focuses on speed and concurrency, whereas OLAP supports complex queries and reporting.

Can OLTP databases handle analytics?

Traditional OLTP databases are not optimized for complex analytics. However, hybrid transactional/analytical processing (HTAP) systems now combine OLTP and OLAP capabilities, enabling real-time insights on live data.

Which industries use OLTP most?

OLTP is essential across sectors like e-commerce, banking, travel, retail, and customer service—anywhere businesses need to process high volumes of transactions quickly and reliably.
