10 Best Data Lake Tools For Engineers in 2026

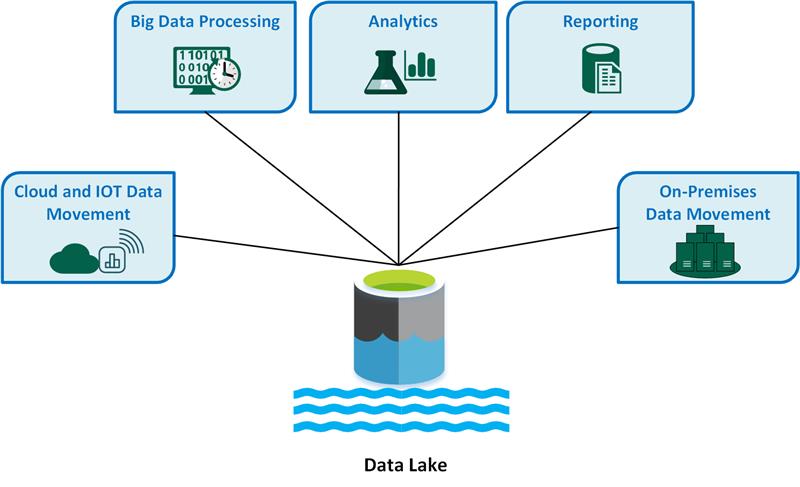

Your business acquires and generates vast amounts of data from many sources. To harness the value of this data, you need a robust and scalable data management solution. This is where a data lake becomes crucial. It is a centralized repository that lets you store massive amounts of data in its raw form. Data lakes offer flexibility, scalability, and cost-effectiveness because they can accommodate diverse data types and handle massive data volumes without requiring upfront transformation.

In this article, you will explore the top data lake tools that can help your business manage its data efficiently, along with the key features of each.

Top 10 Data Lake Tools

Let’s explore the best data lake tools to consider in 2026:

1. AWS S3

Amazon Simple Storage Service (S3) is AWS's object storage service for structured and unstructured data. It allows you to collect data from various sources in real time or in batches and store it in its original format. It also integrates with AWS services like Athena, Redshift Spectrum, AWS Glue, and Lambda, so you can query, process, and analyze your data efficiently.

Here are some important features of Amazon S3:

- AWS S3 makes it simple to create a multi-tenant environment that allows multiple users to run various analytical tools on the same data copy. This reduces costs and enhances data consistency compared to traditional solutions, which require distributing multiple data copies across several processing platforms.

- It offers multiple storage classes, each optimized for specific use cases. This allows you to optimize costs by storing data based on its access patterns.

- Amazon S3 prioritizes security by default and offers robust user authentication features. It provides access control mechanisms like bucket policies and access-control lists to allow fine-grained access to data stored in S3 buckets.

- S3 Cross-Region Replication enables you to copy your objects to S3 buckets in other AWS Regions, even across different accounts. This minimizes latency by storing the objects closer to the user's location.
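To make the storage-class point concrete, here is a minimal sketch of a lifecycle rule that moves aging objects to cheaper storage classes. The prefix `raw/events/`, the rule ID, and the 30-day and 90-day thresholds are illustrative assumptions, not recommendations. The code only builds the configuration dictionary; with boto3 installed, a dictionary of this shape could be passed to the S3 client's `put_bucket_lifecycle_configuration` call.

```python
def build_lifecycle_rule(prefix, transitions, rule_id="tier-by-age"):
    """Build one lifecycle rule that moves objects under `prefix`
    to cheaper storage classes as they age.

    `transitions` is a list of (days, storage_class) pairs.
    """
    # Sort by age so the rule reads from "youngest" to "oldest" tier.
    ordered = sorted(transitions, key=lambda pair: pair[0])
    return {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": days, "StorageClass": storage_class}
            for days, storage_class in ordered
        ],
    }


# Hypothetical policy: infrequent access after 30 days, archive after 90.
lifecycle_config = {
    "Rules": [
        build_lifecycle_rule(
            "raw/events/",
            [(90, "GLACIER"), (30, "STANDARD_IA")],
        )
    ]
}

# With boto3 available, this could be applied roughly as follows
# (the bucket name is a placeholder):
#   s3 = boto3.client("s3")
#   s3.put_bucket_lifecycle_configuration(
#       Bucket="my-data-lake-bucket",
#       LifecycleConfiguration=lifecycle_config,
#   )
```

The right thresholds depend on how often each dataset is actually read, so treat the numbers above as a starting point to adjust against your own access patterns.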

2. Cloudera

Cloudera provides a comprehensive Data Lake Service built on open-source technologies like Hadoop, Hive, and Spark. It differentiates itself by prioritizing enterprise-grade security, governance, and compliance features. Cloudera empowers you to set up and manage data lakes, ensuring the safety of your data wherever it’s stored, from object stores to Hadoop Distributed File System (HDFS).

Here is an overview of the Data Lake Service's key features:

- Data Lake storage resides in external locations independent of the hosts running the Data Lake Services. This ensures that workloads are protected from data loss in the event of a failure of the Data Lake nodes.

- It automatically captures and stores metadata definitions as they're discovered and created during platform workloads. This transforms metadata into valuable information assets, enhancing their usability and overall value.

- A Data Lake cluster utilizes Apache Knox to offer a secure gateway to access Data Lake component UIs.

- Data Lake Service enforces granular role-based and attribute-based security policies. It encrypts data at rest and in motion and efficiently manages encryption keys.

3. Apache Hudi

Apache Hudi is an efficient open-source data lake platform that offers data ingestion, storage, and querying capabilities. It includes DeltaStreamer, a dedicated tool designed for ingesting real-time data. This allows you to capture and process data continuously as it arrives from streaming sources like Apache Kafka, Apache Pulsar, or other messaging systems.

Here are the key features of Apache Hudi:

- Apache Hudi ensures the ACID (Atomicity, Consistency, Isolation, and Durability) properties for data operations within the data lake. This makes it well-suited for use cases where maintaining data integrity and consistency is crucial.

- It supports various cloud storage systems, including Amazon S3, Microsoft Azure, and Google Cloud Storage (GCS), allowing for deployment in cloud-based data lake environments.

- Hudi maintains a timeline of all activities performed on the table at different instants of time. This facilitates quick access to historical data and enables efficient querying.

- It ensures data integrity and consistency through atomic file commits and write-ahead logs. This guarantees that data changes are not lost in case of failures.

- Hudi's data compaction feature consolidates small data files into larger ones, reducing storage overhead and improving query performance.

4. Snowflake

Snowflake’s cloud-built architecture provides a flexible solution to support your Data Lake needs. It allows you to store all your data, regardless of the format (unstructured, semi-structured, and structured), within Snowflake’s optimized, managed storage. Furthermore, it secures your data lake with detailed, granular, and consistent access controls, ensuring data remains protected.

Here are some of the key features of Snowflake:

- Snowflake's cloud architecture allows for independent scaling of storage and compute. This separation enables you to optimize costs by scaling resources based on your needs.

- It also supports a schema-on-read approach for data storage. You can store data in its original format and define the schema only when querying the data.

- A data lake on Snowflake is open to all data types and keeps data in its original raw state. Data is transformed only when a query requires it for analysis.

- Snowflake allows you to use pre-built views that are readily available for querying to comply with regulatory auditing requirements. These views provide insights into data lineage, usage patterns, and relationships.

- It enforces column-level security through dynamic data masking. This allows you to protect sensitive data by dynamically masking specific columns based on the privileges and access rights.
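The schema-on-read idea mentioned above can be sketched in a few lines of plain Python. This is not Snowflake's API; it is a toy illustration in which raw JSON lines are stored untouched and a schema (the hypothetical `SCHEMA` mapping below) is applied only when the data is read.

```python
import json

# Raw records are kept exactly as they arrived: strings, extra fields and all.
RAW_EVENTS = [
    '{"user_id": "42", "amount": "19.90", "country": "DE"}',
    '{"user_id": "7", "amount": "5", "extra_field": true}',
]

# The schema is defined by the reader, not enforced at write time.
SCHEMA = {"user_id": int, "amount": float, "country": str}


def read_with_schema(raw_lines, schema):
    """Parse raw JSON lines and project them onto `schema` at read time.

    Missing columns become None; fields outside the schema are ignored.
    """
    for line in raw_lines:
        record = json.loads(line)
        row = {}
        for column, cast in schema.items():
            value = record.get(column)
            row[column] = cast(value) if value is not None else None
        yield row


rows = list(read_with_schema(RAW_EVENTS, SCHEMA))
```

A different consumer could read the same raw lines with a different schema, which is the main flexibility that schema-on-read buys you.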

5. Infor Data Lake

Infor Data Lake is a scalable and flexible platform that offers a unified repository for storing your enterprise data. It supports data ingestion from multiple sources through connectors and functions like ION Messaging Service (IMS), AnySQL, and File Connector. This facilitates the loading of data from various systems and databases into Data Lake, ensuring a seamless flow of information.

Here are some of the known features of Infor Data Lake:

- The Infor Data Catalog offers various services to help you analyze and track changes in your captured data. This helps you understand your data by providing information about its origin, format, and usage patterns.

- Infor Data Lake prioritizes data security and governance. Data objects stored in Data Lake are encrypted with 256-bit AES encryption.

- It supports a schema-on-read approach and a fast, flexible data consumption framework for making informed decisions based on captured data.

- Infor Data Lake provides indexing capabilities to make data easily accessible. Using the indexing functionality, you can efficiently search and retrieve specific data objects or information.

- It seamlessly integrates with tools like Birst for advanced data analytics and visualization.

6. Azure Data Lake Storage

Azure Data Lake Storage is Microsoft's cloud-based data lake solution, designed for scalable and secure data storage and analytics. It integrates seamlessly with Azure services and supports a wide range of big data processing frameworks.

Three key features of Azure Data Lake Storage:

1. Hierarchical namespace: Enables efficient organization and navigation of data, improving performance for big data analytics workloads.

2. Limitless scalability: Supports petabyte-scale storage with no fixed limits on file size or number of objects, allowing for massive data growth.

3. Multi-protocol access: Provides compatibility with both Blob and Data Lake Storage APIs, offering flexibility in data access and integration with various analytics tools.
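One way to see why a hierarchical namespace helps analytics workloads is to compare a directory rename in the two models. The sketch below is a toy model in plain Python, not the Azure SDK: in a flat namespace a "directory" is only a key prefix, so every object under it must be rewritten, while in a hierarchical namespace the rename is a single entry update in the parent directory. The function names and paths are hypothetical.

```python
def rename_flat(objects, old_prefix, new_prefix):
    """Flat namespace: rewrite every key under `old_prefix`.

    Returns the number of objects that had to be touched.
    """
    touched = 0
    for key in [k for k in objects if k.startswith(old_prefix)]:
        objects[new_prefix + key[len(old_prefix):]] = objects.pop(key)
        touched += 1
    return touched


def rename_hierarchical(tree, parent_path, old_name, new_name):
    """Hierarchical namespace: update one entry in the parent directory.

    `tree` is a nested dict of directories; returns the number of
    entries touched, which is always 1.
    """
    parent = tree
    for part in parent_path:
        parent = parent[part]
    parent[new_name] = parent.pop(old_name)
    return 1


flat_store = {
    "raw/2025/a.parquet": b"",
    "raw/2025/b.parquet": b"",
    "curated/c.parquet": b"",
}
tree_store = {"raw": {"2025": {"a.parquet": b"", "b.parquet": b""}}}

flat_cost = rename_flat(flat_store, "raw/2025/", "raw/2026/")
tree_cost = rename_hierarchical(tree_store, ["raw"], "2025", "2026")
```

With two files the difference is trivial, but the flat cost grows with the number of objects under the prefix while the hierarchical cost stays constant.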

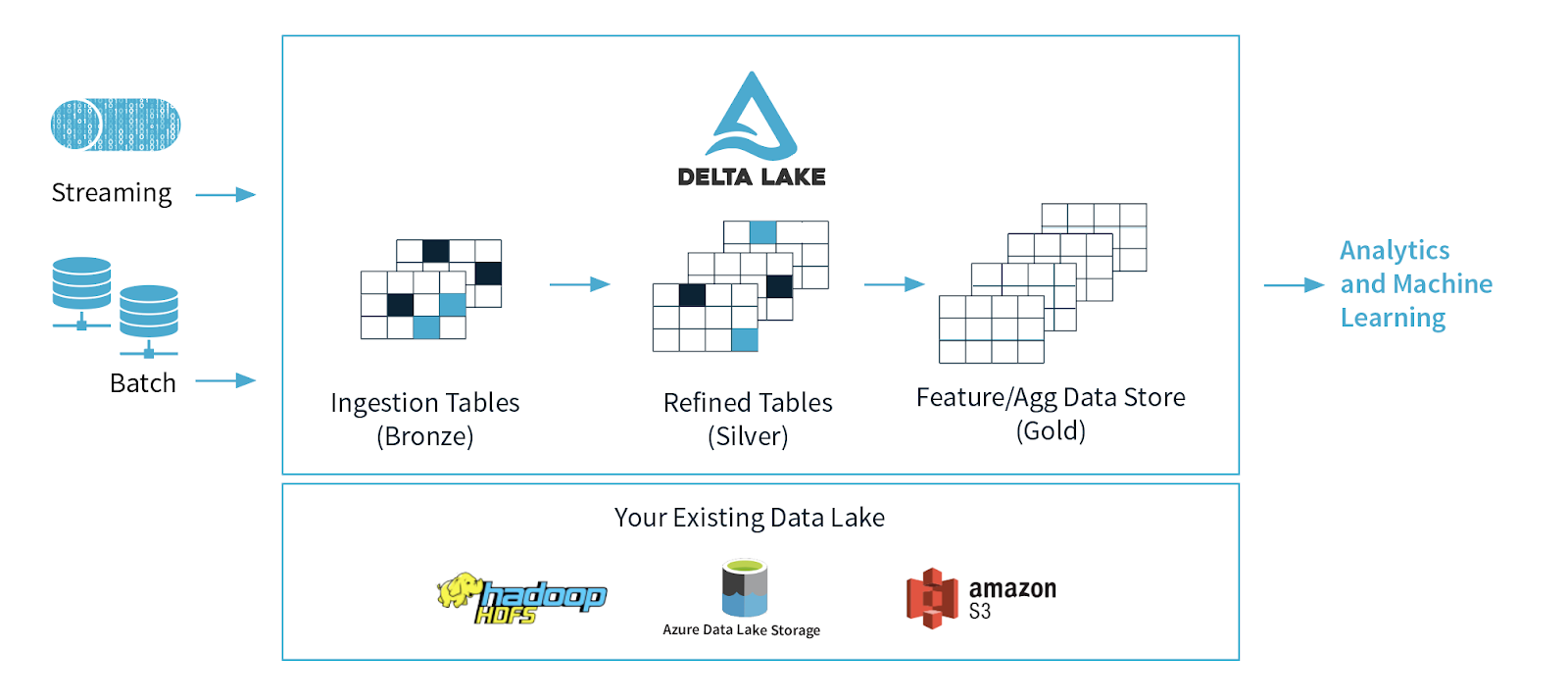

7. Databricks Delta Lake

Databricks Delta Lake is an open-source storage layer that brings ACID transactions to Apache Spark and big data workloads. It's designed to work with cloud object stores and provides reliability and performance optimizations for data lakes.

Three key features of Databricks Delta Lake:

1. ACID transactions: Ensures data consistency and reliability, even with concurrent reads and writes, reducing data corruption risks.

2. Data versioning: Allows access to previous versions of data for audits, rollbacks, or reproducing experiments.

3. Schema enforcement and evolution: Automatically handles schema variations and prevents data corruption from schema mismatches, while allowing schema to change as data evolves.
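The versioning and schema-enforcement features above can be illustrated with a toy in-memory table. This is a simplified sketch, not Delta Lake's implementation or API: the hypothetical `VersionedTable` class keeps an append-only list of commits, validates a whole batch before committing it, and lets a reader ask for any earlier version.

```python
class VersionedTable:
    """Toy append-only table with schema checks and version reads."""

    def __init__(self, schema):
        self.schema = dict(schema)  # column name -> Python type
        self._commits = []          # one list of rows per committed batch

    def append(self, rows):
        """Validate every row first, then commit the batch as a new version."""
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"columns {sorted(row)} do not match schema")
            for column, expected in self.schema.items():
                if not isinstance(row[column], expected):
                    raise TypeError(f"{column!r} must be {expected.__name__}")
        # Nothing is written unless the whole batch passed validation.
        self._commits.append([dict(row) for row in rows])
        return len(self._commits) - 1  # version number of this commit

    def read(self, version=None):
        """Return all rows visible at `version` (latest when omitted)."""
        if not self._commits:
            return []
        latest = len(self._commits) - 1
        if version is None:
            version = latest
        if not 0 <= version <= latest:
            raise IndexError(f"version {version} does not exist")
        return [row for commit in self._commits[: version + 1] for row in commit]


table = VersionedTable({"id": int, "name": str})
v0 = table.append([{"id": 1, "name": "alice"}])
v1 = table.append([{"id": 2, "name": "bob"}])
```

Reading with `table.read(version=v0)` returns the table as it looked after the first commit, which is the behavior that makes audits and rollbacks possible.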

8. Google BigLake

Google BigLake is a unified analytics platform that extends BigQuery's capabilities to data lakes. It allows organizations to analyze data across multiple storage systems, including Google Cloud Storage, without data movement or duplication.

Three key features of Google BigLake:

1. Multi-engine support: Enables data processing using BigQuery, Spark, or other analytics engines, providing flexibility in tool choice.

2. Fine-grained security: Offers row-level and column-level security controls that are consistently applied across different processing engines.

3. Open format support: Works with open data file formats like Parquet and ORC, facilitating interoperability and avoiding vendor lock-in.

9. MinIO

MinIO is an open-source object storage system optimized for high-performance workloads and S3 compatibility. It's designed for hybrid cloud, on-premise, and Kubernetes environments, providing data lake scalability and flexibility.

Three key features of MinIO:

- S3 API Compatibility: MinIO offers a drop-in replacement for AWS S3, making it easy to integrate with existing applications and tools without rewriting code.

- High-Performance Architecture: Built for speed, MinIO delivers high throughput for read/write operations, supporting big data analytics and machine learning workloads.

- Erasure Coding and Data Protection: MinIO uses advanced erasure coding techniques to ensure data integrity, availability, and self-healing storage clusters.
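The idea behind erasure coding can be shown with the simplest possible scheme: split an object into data shards and add one XOR parity shard, so any single lost shard can be rebuilt from the others. MinIO itself uses Reed-Solomon codes, which tolerate multiple lost shards; the single-parity version below is a deliberately simplified stand-in, and the function names are hypothetical.

```python
from functools import reduce


def xor_bytes(a, b):
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data, k):
    """Split `data` into k equal-size shards (zero-padded) plus one parity shard."""
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\0")
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    parity = reduce(xor_bytes, shards)
    return shards + [parity]


def recover(shards):
    """Rebuild the single missing shard (marked as None) from the survivors."""
    missing = [i for i, shard in enumerate(shards) if shard is None]
    if len(missing) != 1:
        raise ValueError("single-parity XOR can rebuild exactly one lost shard")
    survivors = [shard for shard in shards if shard is not None]
    rebuilt = list(shards)
    # XOR of all shards (data + parity) is zero, so the missing shard
    # equals the XOR of everything that survived.
    rebuilt[missing[0]] = reduce(xor_bytes, survivors)
    return rebuilt


original = encode(b"data lake object", k=4)
damaged = list(original)
damaged[2] = None  # simulate a failed drive holding shard 2
healed = recover(damaged)
```

Here `healed` matches `original` again, which is the "self-healing" behavior in miniature.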

10. Wasabi

Wasabi is a cloud object storage service designed for simplicity and cost-efficiency. It provides enterprise-grade durability and performance for storing large volumes of data, ideal for data lake use cases.

Three key features of Wasabi:

- Predictable Pricing Model: Wasabi eliminates egress fees and API request charges, offering flat-rate pricing that simplifies cost management for growing data lakes.

- S3 Compatibility: Full support for AWS S3 APIs ensures seamless integration with popular data lake and analytics tools.

- Data Immutability: Wasabi’s built-in immutability prevents data from being deleted or modified.

Tips to choose the best Data Lake tool

Here are 5 tips for data engineers to choose the best data lake:

1. Assess scalability and performance

Consider your current data volume and projected growth. Choose a solution that can handle your expected data scale without compromising on query performance. Test the data lake's ability to handle concurrent users and complex analytics workloads.

2. Evaluate integration capabilities

Look for a data lake that integrates well with your existing tech stack and tools. Consider compatibility with your preferred analytics engines, ETL tools, and data visualization platforms. Good integration can significantly reduce development time and complexity.

3. Prioritize security and compliance

Ensure the data lake offers robust security features like encryption at rest and in transit, fine-grained access controls, and audit logging. If you're in a regulated industry, verify that the solution can help you meet relevant compliance requirements (e.g., GDPR, HIPAA).

4. Consider total cost of ownership

Look beyond just storage costs. Factor in computing costs, data transfer fees, and potential licensing fees. Also consider the operational costs, including the expertise required to manage and maintain the system.

5. Assess data governance features

Choose a data lake that provides strong data governance capabilities. Look for features like data cataloging, metadata management, and data lineage tracking. These features can help maintain data quality, improve discoverability, and ensure proper data usage across your organization.
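The total-cost-of-ownership tip above comes down to simple arithmetic once you list the cost components. The sketch below adds up storage, data transfer, compute, licensing, and operational effort for one month. Every price in it is a made-up placeholder, so substitute figures from your own vendor quotes and staffing costs.

```python
from dataclasses import dataclass


@dataclass
class MonthlyUsage:
    stored_tb: float       # average data stored during the month
    egress_tb: float       # data transferred out
    compute_hours: float   # query and processing time
    engineer_hours: float  # time spent operating the platform


@dataclass
class PriceSheet:
    # All prices are hypothetical placeholders in one currency unit.
    storage_per_tb: float
    egress_per_tb: float
    compute_per_hour: float
    engineer_per_hour: float
    license_flat: float = 0.0


def monthly_tco(usage, prices):
    """Sum every cost component, not just storage."""
    return (
        usage.stored_tb * prices.storage_per_tb
        + usage.egress_tb * prices.egress_per_tb
        + usage.compute_hours * prices.compute_per_hour
        + usage.engineer_hours * prices.engineer_per_hour
        + prices.license_flat
    )


usage = MonthlyUsage(stored_tb=100, egress_tb=10, compute_hours=500, engineer_hours=40)
prices = PriceSheet(
    storage_per_tb=20,
    egress_per_tb=50,
    compute_per_hour=2,
    engineer_per_hour=80,
    license_flat=1000,
)
total = monthly_tco(usage, prices)  # 2000 + 500 + 1000 + 3200 + 1000 = 7700
```

In this made-up scenario storage is only about a quarter of the bill, which is why comparing tools on storage price alone can be misleading.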

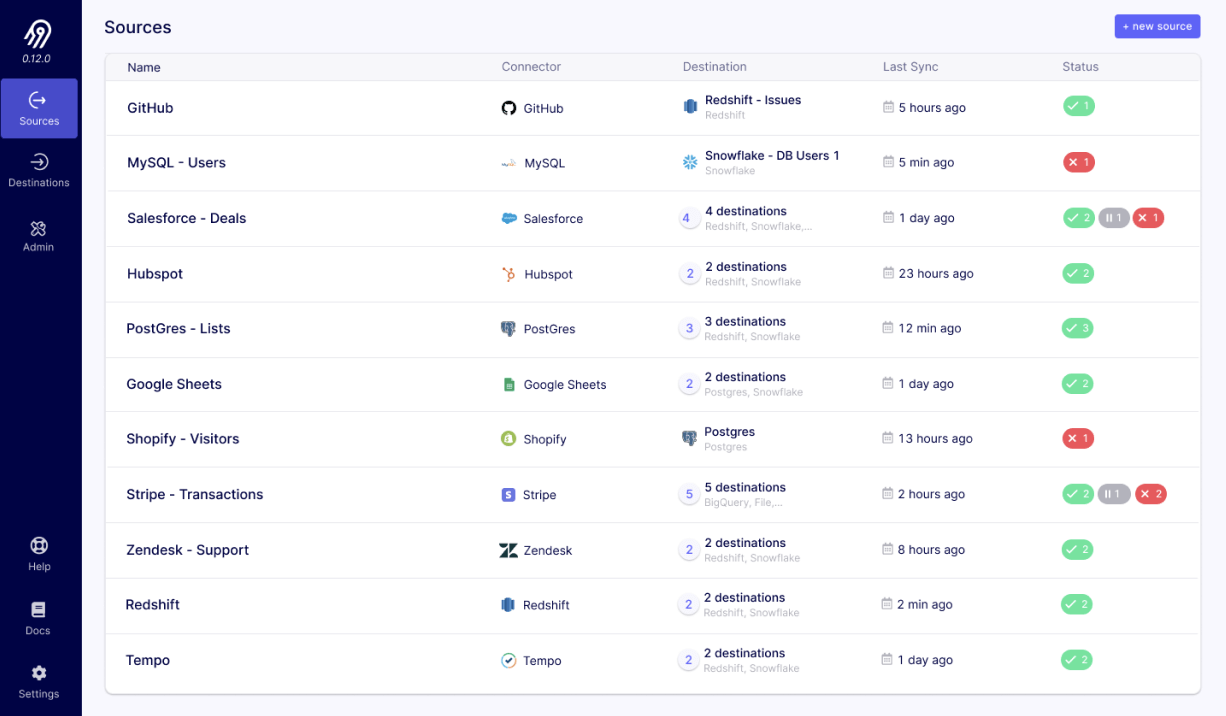

Seamlessly Move Your Data into Data Lake Using Airbyte

Data lakes have become essential for storing vast amounts of raw data from various sources for analytics and insights. This data may reside in diverse sources such as APIs, databases, files, and data warehouses, so you need a streamlined way to move it into the data lake. While this data holds immense value, gathering and managing all of it can be a challenge. That's where platforms like Airbyte can help!

Airbyte is a cloud-based data integration and replication platform that can expedite the process of extracting data from multiple data sources and loading it to your target system. It offers a catalog of more than 600 connectors, including AWS S3 and Azure Blob Storage.

Here are the key features of Airbyte:

- Ease of Use: Airbyte prioritizes ease of use, offering a user-friendly interface for configuration, monitoring, and management. You can choose among multiple options, including the UI, API, Terraform Provider, and PyAirbyte, to design and manage data pipelines.

- Connector Customization: If the required connector is unavailable, Airbyte lets you build custom connectors using the AI-enabled Connector Builder or the Connector Development Kit (CDK). This gives you the flexibility to create connectors tailored to your specific requirements and data sources.

- Simplifying AI Workflow: With Airbyte, you can directly store semi-structured and unstructured data in prominent vector stores like Pinecone, Milvus, and Qdrant. Moving data into such databases helps you streamline GenAI workflows.

- RAG Transformations: Integrating Airbyte with LLM frameworks like LangChain or LlamaIndex allows you to perform RAG (retrieval-augmented generation) transformations, such as chunking, embedding, and indexing. These operations convert raw data into vector embeddings that LLM applications can retrieve at query time.

- Enterprise General Availability: The Airbyte Self-Managed Enterprise Edition lets you centralize data access while ensuring data security. This version offers features like multitenancy and role-based access control (RBAC) that can help you manage multiple teams within a single deployment.

- Custom Transformations: Airbyte adopts the ELT (Extract, Load, Transform) approach, where data is loaded into the target system before it is transformed. For customized transformations, Airbyte lets you integrate with dbt (data build tool) and use its capabilities to perform advanced data transformations.

- Data Security: Airbyte incorporates security measures such as access control, audit logging, encryption, and authentication mechanisms. These help protect data integrity and confidentiality throughout the migration process. Airbyte also aligns with regulations and standards including GDPR, ISO 27001, SOC 2, and HIPAA.

- Flexible Pricing: Airbyte provides flexible pricing options to accommodate diverse business needs. It offers four distinct plans: Airbyte Open Source, Cloud, Team, and Enterprise. The open-source version is free to use, while the Cloud plan operates on a pay-as-you-go model. The Team and Enterprise versions offer pricing based on specific syncing frequency requirements. To learn more about the pricing plans, contact the Airbyte sales team.

Wrapping Up

The data lake is becoming increasingly important in managing large volumes of data, and your business needs to leverage the right data lake tools to ensure effective data management. Whether you're dealing with structured or unstructured data, these tools offer the scalability, flexibility, and security required to manage your data lake environment effectively. Investing in the right data lake tool can transform the way you handle data, leading to enhanced productivity.

1. What is the difference between a data lake and a data warehouse?

A data lake stores raw, unstructured, semi-structured, and structured data, enabling flexible schema-on-read access. In contrast, a data warehouse stores structured and pre-processed data optimized for analytics using a schema-on-write approach. Data lakes are more suitable for big data, machine learning, and real-time analytics, while data warehouses are ideal for traditional BI reporting.

2. Can I use multiple data lake tools together?

Yes, many organizations adopt a multi-tool architecture. For example, you can use AWS S3 or Azure Data Lake Storage as the storage layer, Delta Lake or Apache Hudi for ACID-compliant transactions, and a platform like Airbyte for data ingestion. The key is to ensure compatibility and smooth integration between tools.

3. Which data lake tools are best for on-premise or hybrid environments?

Tools like Cloudera, MinIO, and IBM Data Lake are well-suited for hybrid and on-premise deployments. MinIO, in particular, offers S3 compatibility for private clouds, while Cloudera supports Hadoop-based data lakes across cloud and on-prem environments.

4. How do data lake tools handle data security and compliance?

Modern data lake tools implement security through features like encryption at rest and in transit, role-based access control (RBAC), audit logging, and data immutability. Tools like Snowflake, Azure Data Lake, and Airbyte also support compliance with regulations such as GDPR, HIPAA, and SOC 2.

5. How does Airbyte help in moving data into a data lake?

Airbyte simplifies data movement by offering 600+ pre-built connectors to sources like APIs, databases, and SaaS apps. It supports cloud storage destinations like AWS S3 and Azure Blob. With custom connector creation, ELT support, dbt integration, and AI/LLM-ready workflows, Airbyte accelerates and automates the data ingestion process into your data lake environment.
