Data Integrity vs Data Quality: What is the Difference?


✨ AI Generated Summary

Data integrity ensures data accuracy, consistency, and security throughout its lifecycle, focusing on preventing unauthorized changes and maintaining trustworthiness. Data quality emphasizes the fitness of data for its intended use, including completeness, relevance, timeliness, and usability. Both require distinct strategies and collaborative governance, with emerging technologies like AI, blockchain, and real-time processing enhancing their management.

- Data integrity involves security measures, validation, and structural consistency to protect data from corruption.

- Data quality focuses on data completeness, relevance, timeliness, and usability for effective decision-making.

- Misconceptions include confusing the two concepts, treating quality as a one-time fix, and assigning responsibility solely to IT.

- Best practices include robust governance frameworks, continuous audits, security enforcement, and leveraging platforms like Airbyte for integration and monitoring.

The terms data integrity and data quality are often used interchangeably, which blurs their distinct meanings and implications. Understanding how the two concepts differ is essential if you want to use your data effectively. Data integrity refers to the accuracy, consistency, and reliability of data throughout its lifecycle, ensuring that it remains unaltered and trustworthy. Data quality, on the other hand, refers to the fitness of data for its intended use, encompassing factors such as precision, completeness, and relevance.

While both concepts are essential for maintaining the veracity and usability of data, they address distinct aspects of data management and require tailored strategies for implementation.

This article highlights the difference between data integrity and data quality, outlining their respective roles and implications in data management and decision-making processes.

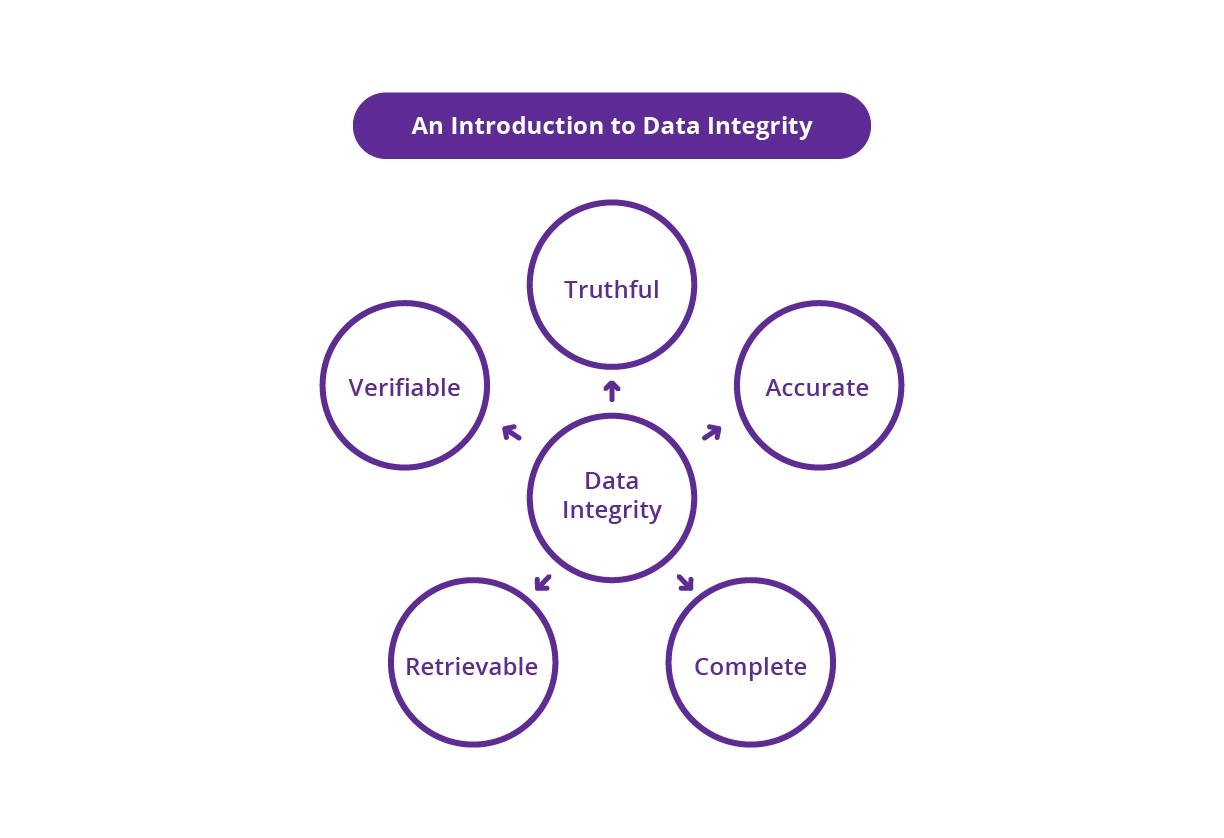

What Is Data Integrity and Why Does It Matter?

Data integrity is a fundamental aspect of data management, focusing on maintaining the accuracy and consistency of data. It involves measures that keep data unchanged and reliable throughout its lifecycle, from creation through storage and use. These measures include validation checks, encryption, and access controls that prevent errors, corruption, or unauthorized alterations to the data.

Data integrity serves as the guardian of trustworthiness in your data ecosystem, ensuring that information maintains its original form and meaning across all systems and processes. Unlike data quality, which focuses on fitness for purpose, data integrity specifically addresses the structural soundness and security of data assets.

Key characteristics of data integrity:

- Consistency Across Systems: Maintaining consistency across various data instances is a core aspect of data integrity. You can rely on consistent datasets to make informed decisions and conduct reliable analyses by ensuring coherence and uniformity in data representation. This consistency extends to referential integrity in databases, where relationships between data entities remain valid and enforceable.

- Security and Protection Measures: Robust security measures like encryption, access controls, and authentication protocols are integral to data integrity. These efforts help protect your data from unauthorized access, tampering, or corruption, thus ensuring the integrity and confidentiality of sensitive information. Modern approaches include role-based access controls and audit trails that track every data modification.

- Structural Validation: Data integrity ensures that data adheres to predefined constraints and rules, such as primary key uniqueness, foreign key relationships, and data type validations. These structural safeguards prevent data corruption at the database level and maintain logical consistency across your data architecture.
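The structural safeguards listed above can be illustrated with Python's built-in `sqlite3` module. This is a minimal sketch assuming a hypothetical `customers`/`orders` schema: primary keys enforce uniqueness, a `REFERENCES` clause enforces referential integrity, and a `CHECK` constraint rejects values of the wrong type or range. Other databases express the same constraints with slightly different syntax.

```python
import sqlite3


def build_schema() -> sqlite3.Connection:
    """Create an in-memory database whose schema enforces integrity rules."""
    conn = sqlite3.connect(":memory:")
    # SQLite only enforces foreign keys when this pragma is on for the connection.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute(
        """
        CREATE TABLE customers (
            id    INTEGER PRIMARY KEY,   -- primary key uniqueness
            email TEXT NOT NULL UNIQUE   -- no missing or duplicate emails
        )
        """
    )
    conn.execute(
        """
        CREATE TABLE orders (
            id          INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(id),  -- referential integrity
            -- type check: SQLite stores non-numeric text as TEXT, so typeof() catches it
            amount      REAL NOT NULL CHECK (typeof(amount) IN ('real', 'integer') AND amount >= 0)
        )
        """
    )
    return conn


def try_insert(conn: sqlite3.Connection, sql: str, params: tuple) -> bool:
    """Return True if the row was accepted, False if a constraint rejected it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return True
    except sqlite3.IntegrityError:
        return False
```

For example, inserting a second customer with `id` 1, an order whose `customer_id` does not exist, or an order with the amount `"abc"` makes `try_insert` return `False`, because the database itself refuses the row.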

What Is Data Quality and How Does It Impact Business Operations?

Data quality describes how well data fits its intended purpose. It encompasses dimensions such as precision, completeness, and relevance. High-quality data is reliable and can be trusted for informed decisions and meaningful insights.

Data quality directly impacts business operations by determining how effectively organizations can leverage their data assets for strategic decision-making, operational efficiency, and competitive advantage. Poor data quality can cascade through business processes, leading to flawed analytics, misguided strategies, and decreased customer satisfaction.

Key characteristics of data quality:

- Completeness and Coverage: Data quality ensures that all necessary data is present and available, without any missing or incomplete information, to support accurate analysis and decision-making. Complete datasets enable comprehensive analytics and prevent gaps that could lead to biased or inaccurate conclusions.

- Relevance and Context: Data quality requires that the information collected and maintained is pertinent to its planned purpose or use case. Relevant data aligns with business objectives and provides actionable insights that drive meaningful outcomes.

- Consistency Across Sources: Data quality maintains consistency across different datasets and sources, ensuring uniformity and coherence in data interpretation and analysis. This consistency enables organizations to create unified views of customers, products, and operations across multiple systems and departments.

- Timeliness and Currency: Timeliness is critical to data quality, ensuring that data is up-to-date and reflects the most current information available. Fresh data enables real-time decision-making and prevents organizations from acting on outdated information that could lead to missed opportunities or competitive disadvantages.

- Usability and Accessibility: Data quality focuses on the usability of data, ensuring that it is structured, formatted, and organized in a way that facilitates easy access, analysis, and interpretation. Well-structured data reduces the time and effort required for analysis while improving the accuracy of insights derived from the data.
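Some of these characteristics can be turned into simple ratios. The sketch below measures completeness (share of required values that are filled) and timeliness (share of records updated within an allowed age). `REQUIRED_FIELDS`, the `updated_at` field, and the record layout are assumptions for illustration; real thresholds and fields come from the business use case.

```python
from datetime import datetime, timedelta

# Hypothetical set of fields every customer record should carry.
REQUIRED_FIELDS = ("customer_id", "email", "country")


def completeness(records: list, fields: tuple = REQUIRED_FIELDS) -> float:
    """Share of required field values that are present and non-empty (1.0 = complete)."""
    total = len(records) * len(fields)
    if total == 0:
        return 1.0  # nothing is missing from an empty dataset
    filled = sum(1 for r in records for f in fields if r.get(f) not in (None, ""))
    return filled / total


def timeliness(records: list, max_age: timedelta, now: datetime) -> float:
    """Share of records whose updated_at lies within max_age of now."""
    if not records:
        return 1.0
    fresh = sum(1 for r in records if now - r["updated_at"] <= max_age)
    return fresh / len(records)
```

Tracking these ratios over time, rather than computing them once, is what turns them into a quality signal.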

What Are the Key Differences Between Data Integrity vs Data Quality?

With a solid understanding of the overarching concepts of data integrity and data quality, let's now explore the key differences between these two critical aspects of data management.

The main difference between data integrity and data quality is that data integrity ensures the accuracy, consistency, and reliability of data throughout its lifecycle, while data quality focuses on the overall fitness of data for its intended use, including completeness, accuracy, and timeliness.

Data Quality vs Data Integrity: Objective

- Data integrity: Safeguard the security and trustworthiness of data by preventing unauthorized modifications, intentional manipulation, or accidental degradation. The primary goal is to maintain data's original state and ensure it remains uncompromised throughout its lifecycle.

- Data quality: Optimize the usability and usefulness of data, ensuring it is fit for analysis, decision-making, and operational use within the organization. The focus is on maximizing the business value derived from data assets.

Data Quality vs Data Integrity: Implementation

- Data integrity: Implemented through security measures such as encryption, access controls, and verification mechanisms. Technical implementations include checksums, digital signatures, database constraints, and immutable audit logs that track all data modifications.

- Data quality: Requires processes such as data profiling, cleansing, and governance to address errors, inconsistencies, and inaccuracies. Implementation involves data validation rules, standardization processes, and continuous monitoring to maintain quality standards.
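On the quality side, standardization and validation rules often work as a pair: first normalize values, then check them against rules. This is a minimal sketch; the email pattern, the `COUNTRY_ALIASES` mapping, and the two-letter country rule are hypothetical business rules, not a complete standard.

```python
import re

# Hypothetical rules; real rules are defined with the data owners.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
COUNTRY_ALIASES = {
    "usa": "US", "united states": "US", "u.s.": "US",
    "germany": "DE", "deutschland": "DE",
}


def standardize(record: dict) -> dict:
    """Return a copy with trimmed, lower-cased email and country mapped to a 2-letter code."""
    out = dict(record)
    out["email"] = (record.get("email") or "").strip().lower()
    country = (record.get("country") or "").strip()
    out["country"] = COUNTRY_ALIASES.get(country.lower(), country.upper())
    return out


def validate(record: dict) -> list:
    """Return a list of rule violations; an empty list means the record passes."""
    errors = []
    if not EMAIL_RE.match(record["email"]):
        errors.append("email: invalid format")
    if len(record["country"]) != 2:
        errors.append("country: expected ISO 3166-1 alpha-2 code")
    return errors
```

Running `validate` after `standardize` means formatting variants such as `"  Ann@Example.COM "` or `"Germany"` are fixed rather than rejected, while genuinely bad values still surface as errors.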

Data Quality vs Data Integrity: Assessment

- Data integrity: Assessed through verification mechanisms such as checksums or digital signatures. Evaluation focuses on whether data has been altered, corrupted, or compromised during storage, transmission, or processing.

- Data quality: Evaluated based on completeness, accuracy, relevance, consistency, and timeliness using data profiling and quality metrics. Assessment involves measuring against business-defined quality dimensions and establishing quality scorecards.
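The integrity assessment described above (has the data changed since it was recorded?) can be sketched with Python's standard library. A SHA-256 checksum detects accidental corruption, but anyone can recompute it. To detect deliberate tampering, this sketch uses an HMAC with a secret key as a stand-in for a digital signature. True digital signatures use asymmetric key pairs (for example Ed25519) and usually need a third-party library. The key and payload here are placeholders.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Checksum that detects accidental corruption (anyone can recompute it)."""
    return hashlib.sha256(data).hexdigest()


def sign(data: bytes, key: bytes) -> str:
    """Keyed MAC: only holders of `key` can produce a valid tag for `data`."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify(data: bytes, key: bytes, tag: str) -> bool:
    """True if `data` still matches the tag computed when it was stored."""
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(sign(data, key), tag)
```

In practice the checksum or tag is stored separately from the data (for example in a manifest or audit log) and recomputed after transfer or on a schedule.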

Data Quality vs Data Integrity: Impact

- Data integrity: Builds trust in data assets, safeguarding against data breaches, fraud, and regulatory non-compliance. The impact extends to legal and regulatory compliance, with integrity violations potentially resulting in severe penalties and reputational damage.

- Data quality: Enhances decision-making processes, operational efficiency, and customer satisfaction, leading to improved business performance and competitiveness. High-quality data enables organizations to identify opportunities, optimize operations, and deliver superior customer experiences.

Data Quality vs Data Integrity: Timeframe

- Data integrity: Focuses on maintaining the accuracy of data over its entire lifecycle. Integrity measures must be sustained from data creation through storage, processing, and eventual archival or deletion.

- Data quality: Prioritizes timeliness, ensuring that data is current and relevant at the moment of use. Quality considerations may vary based on the specific use case and the acceptable age of data for different business processes.

Data Quality vs Data Integrity: Responsibility

- Data integrity: Typically a responsibility of IT and security teams who implement technical controls and monitor for violations. These teams focus on infrastructure, access controls, and technical safeguards that protect data from compromise.

- Data quality: A shared responsibility across analysts, managers, and business users who understand the context and requirements for data usage. Business stakeholders define quality standards while technical teams implement the processes to achieve them.

Data Quality vs Data Integrity: Risk Management

- Data integrity: Minimizes the risks of deliberate or accidental alterations, distortions, or unauthorized use. Risk management focuses on preventing data breaches, maintaining audit trails, and ensuring compliance with regulatory requirements.

- Data quality: Reduces the likelihood of decisions based on inaccurate or incomplete data. Risk management involves preventing business errors, missed opportunities, and strategic missteps that result from poor-quality information.

Data Quality vs Data Integrity: Regulatory Compliance

- Data integrity: Often driven by regulatory requirements and industry standards that mandate protection of sensitive data. Compliance frameworks such as GDPR, HIPAA, and SOX specifically require maintaining data integrity through technical and administrative controls.

- Data quality: Aligns with regulatory compliance efforts and broader organizational objectives. While not always explicitly mandated, high data quality supports accurate reporting and decision-making required for regulatory compliance.

What Emerging Technologies and Methodologies Are Transforming Data Integrity and Quality Management?

Modern data environments demand sophisticated approaches to maintaining both integrity and quality. Emerging technologies are revolutionizing how organizations protect, validate, and optimize their data assets, creating new opportunities for automated governance and enhanced reliability.

AI-Driven Automation and Machine Learning Integration

Artificial Intelligence has become a transformative force in data management, enabling proactive identification and resolution of both integrity and quality issues. Machine learning algorithms now perform predictive quality scoring, automatically flagging potential problems before they impact business operations. These systems learn from historical patterns to identify anomalies, such as unusual data distributions or unexpected schema changes, that could indicate integrity breaches or quality degradation.
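As a simple illustration of flagging unusual distributions, the sketch below uses a z-score on daily row counts. This is a basic statistical baseline standing in for the learned models described above, not a machine learning model; the threshold of 3 standard deviations and the sample history are assumptions.

```python
from statistics import mean, stdev


def row_count_anomaly(history: list, today: float, z_threshold: float = 3.0) -> bool:
    """Flag today's row count if it lies more than z_threshold standard deviations from history."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu  # any deviation from a perfectly flat history is unusual
    return abs(today - mu) / sigma > z_threshold
```

With a history of roughly 1,000 rows per day, a load of 1,005 rows passes while a load of 400 rows is flagged, which might indicate a broken upstream extract.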

AI-powered tools excel at automating data cleansing processes by recognizing patterns in errors and inconsistencies. For example, machine learning models can identify when customer addresses follow non-standard formats and automatically standardize them according to business rules. This automation reduces manual intervention while maintaining consistency across large datasets.

Federated learning is a particularly relevant approach where privacy matters. It allows organizations to train machine learning models on distributed data without centralizing sensitive information, so raw records stay in their source systems, reducing the number of copies that could be altered or leaked, while multiple parties still gain collaborative insights.

Blockchain and Immutable Data Validation

Blockchain technology supports data integrity through append-only ledgers and distributed validation. Organizations use blockchain-based systems to create highly tamper-evident records of data modifications, enhancing auditability and significantly reducing the risk of unauthorized alterations.
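The tamper evidence comes largely from hash chaining: each entry stores the hash of the previous one, so editing any past entry breaks every link after it. The sketch below shows only that idea in a single process; it has no distributed consensus, so it is not a blockchain, and the payload fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    # Canonical JSON (sorted keys, fixed separators) so equal payloads hash identically.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append(chain: list, payload: dict) -> None:
    """Add an entry linked to the hash of the previous entry."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"payload": payload, "prev": prev, "hash": entry_hash(prev, payload)})


def verify_chain(chain: list) -> int:
    """Return the index of the first tampered entry, or -1 if the chain is intact."""
    prev = GENESIS
    for i, entry in enumerate(chain):
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["payload"]):
            return i
        prev = entry["hash"]
    return -1
```

Someone who can rewrite the whole chain could recompute every hash, which is why real systems anchor the latest hash somewhere the writer does not control, such as other ledger participants or an external timestamping service.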

This technology proves particularly valuable for supply chain management, where data integrity across multiple organizations is critical. Blockchain enables automatic reconciliation between different systems while maintaining an immutable record of all transactions and data changes.

Smart contracts on blockchain platforms can automatically enforce data quality rules on data submitted to the chain, triggering corrective actions when quality thresholds are not met. This automated governance helps apply consistent quality standards across distributed data ecosystems.

Real-Time Data Processing and Event-Driven Architectures

Event-driven architectures enable organizations to maintain data integrity and quality through continuous monitoring and immediate response to data changes. These systems process data as it's generated, allowing for instant validation and correction of issues before they propagate through downstream systems.

Real-time processing capabilities reduce the latency between data creation and quality assessment, enabling organizations to maintain higher standards of data freshness and accuracy. Event sourcing patterns create immutable logs of all data changes, supporting both integrity requirements and quality auditing needs.
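Validation at ingestion and event sourcing fit together naturally: each event is checked as it arrives, accepted events go into an append-only log, and current state is rebuilt by replaying that log. A minimal in-memory sketch; `Event`, `EventStore`, the deposit/withdrawal event types, and the `rejected` list (standing in for a dead-letter queue) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: an event cannot be modified once created
class Event:
    account_id: str
    kind: str    # "deposit" or "withdrawal" (hypothetical event types)
    amount: int  # minor units, e.g. cents, to avoid float rounding


class EventStore:
    """Append-only log: events are validated on arrival and never modified afterwards."""

    def __init__(self):
        self._log = []
        self.rejected = []  # stand-in for a dead-letter queue

    def append(self, event: Event) -> bool:
        """Validate the event before it can reach the log; return True if accepted."""
        if event.kind not in ("deposit", "withdrawal") or event.amount <= 0:
            self.rejected.append(event)
            return False
        self._log.append(event)
        return True

    def replay(self) -> dict:
        """Rebuild current balances by folding over the full event history."""
        balances = {}
        for e in self._log:
            sign = 1 if e.kind == "deposit" else -1
            balances[e.account_id] = balances.get(e.account_id, 0) + sign * e.amount
        return balances
```

Because state is derived from the log rather than overwritten in place, the same log serves integrity needs (a full history of changes) and quality auditing (invalid events are captured for review instead of silently dropped).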

Edge computing paired with AI acceleration hardware addresses latency challenges while maintaining data quality standards. Local processing reduces dependence on centralized systems while ensuring that quality validations occur as close to the data source as possible.

Privacy-Enhancing Technologies for Secure Data Quality

Differential privacy enables organizations to perform data quality assessments while protecting individual privacy. It adds carefully calibrated random noise to query results or datasets, so aggregate statistics remain useful for quality analysis while the contribution of any single individual is masked.
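A common building block is the Laplace mechanism. The sketch below releases a noisy count, such as the number of records with a missing email, under epsilon-differential privacy. It assumes each person contributes at most one record, so the count has sensitivity 1 and the noise scale is 1 / epsilon. The choice of epsilon is a policy decision, and production systems should use a vetted library rather than this sketch.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponential variables with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    A counting query changes by at most 1 when one person's record is added or
    removed (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)
```

Smaller epsilon values add more noise and give stronger privacy; a quality dashboard built on such counts trades some precision for protection of individual records.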

Homomorphic encryption allows processing of encrypted data without decryption, enabling quality validations and transformations while maintaining data confidentiality. This capability is particularly valuable for organizations handling sensitive data that must remain encrypted throughout processing.
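To make the idea concrete, the sketch below implements a toy version of the Paillier cryptosystem, which is additively (partially) homomorphic: multiplying two ciphertexts yields an encryption of the sum of the plaintexts. It is a narrower case than fully homomorphic encryption, the tiny primes make it completely insecure, and it is shown only to illustrate computing on encrypted values; real deployments rely on audited cryptographic libraries.

```python
import math
import random


def keygen(p: int, q: int):
    """Toy Paillier key generation from two primes (far too small to be secure)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    g = n + 1
    # With g = n + 1, L(g^lam mod n^2) equals lam mod n, so mu is lam's inverse mod n.
    mu = pow(lam, -1, n)
    return (n, g), (lam, mu, n)


def encrypt(pub, m: int, rng: random.Random) -> int:
    n, g = pub
    if not 0 <= m < n:
        raise ValueError("plaintext must be in [0, n)")
    n2 = n * n
    while True:  # pick a random r coprime to n
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(priv, c: int) -> int:
    lam, mu, n = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n * mu) % n  # L(x) = (x - 1) / n, then multiply by mu


def add_encrypted(pub, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    n, _ = pub
    return (c1 * c2) % (n * n)
```

For example, two parties could submit encrypted totals, and a third party could sum them for a reconciliation check without ever seeing the individual values; only the key holder decrypts the result.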

These privacy-enhancing technologies enable organizations to maintain high data quality standards while meeting strict regulatory requirements for data protection and privacy.

What Are the Most Common Misconceptions About Data Integrity vs Data Quality?

Despite their importance in modern data management, professionals frequently misunderstand the distinct roles and requirements of data integrity and data quality. These misconceptions can lead to inadequate governance, compliance risks, and suboptimal data management strategies.

The Interchangeability Misconception

Many professionals incorrectly assume that data integrity and data quality refer to the same set of practices and can be used interchangeably. This fundamental misunderstanding leads organizations to focus on one aspect while neglecting the other, creating significant gaps in their data management approach.

Data integrity functions like a fortress guard, preventing breaches and maintaining the original state of data, while data quality acts like a refinery, polishing raw data for maximum utility and business value. Organizations need both protective measures and optimization processes to achieve comprehensive data management.

This misconception often results in inadequate governance frameworks where teams implement quality measures without proper integrity controls, or establish security measures without addressing underlying quality issues that could compromise data utility.

The One-Time Fix Fallacy

A prevalent misconception suggests that data cleansing is a one-time activity that permanently resolves quality issues. In reality, data quality degrades continuously due to system updates, human errors, integration of new sources, and changing business requirements.

Effective data quality management requires continuous monitoring and iterative improvement processes. Organizations must implement ongoing profiling, validation, and cleansing cycles that adapt to evolving data landscapes and business needs.

The consequences of this misconception include recurring quality problems, decreased trust in data assets, and missed opportunities for data-driven insights when quality issues reappear after initial cleansing efforts.

The Prevention-Only Integrity Perspective

Some professionals view data integrity narrowly as a prevention-focused discipline, overlooking its role in ensuring consistency during data transformations and processing. Modern data workflows require integrity checks throughout the entire data lifecycle, not just at rest.

Data integrity must extend beyond preventing corruption to include validation during ETL processes, cross-system data synchronization, and real-time data streaming. Organizations need integrity controls that function across all stages of data processing and transformation.
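One practical integrity control for ETL is post-load reconciliation: compare source and target by primary key and by a hash of each row's contents. This sketch assumes both sides share an `id` key and that the pipeline is not supposed to change values; if it transforms data, apply the same transformation to the source rows before comparing.

```python
import hashlib
import json


def fingerprint(row: dict) -> str:
    """Stable hash of a row's contents (canonical JSON with sorted keys)."""
    body = json.dumps(row, sort_keys=True, separators=(",", ":"), default=str)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def reconcile(source_rows: list, target_rows: list, key: str = "id") -> dict:
    """Report keys missing from the target, unexpected in the target, or altered in transit."""
    src = {r[key]: fingerprint(r) for r in source_rows}
    tgt = {r[key]: fingerprint(r) for r in target_rows}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "altered": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }
```

Any non-empty list in the result points to a row that was dropped, duplicated from elsewhere, or changed during processing, which is exactly the kind of breach that otherwise goes undetected.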

This limited perspective can result in integrity breaches during data processing that go undetected, leading to downstream quality issues and potential compliance violations.

The Volume Equals Value Assumption

Organizations often prioritize data quantity over quality, assuming that more data automatically translates to better insights and business outcomes. This misconception leads to data hoarding without proper quality controls, creating storage costs and analytical complexity without corresponding value.

High-quality, relevant data typically provides more actionable insights than large volumes of poor-quality information. Organizations achieve better results by focusing on data that meets specific quality criteria and aligns with business objectives.

The volume-first approach can overwhelm analytical systems, increase storage costs, and reduce the signal-to-noise ratio in business intelligence and machine learning applications.

The IT-Only Responsibility Myth

Many stakeholders believe that data quality is solely an IT responsibility, excluding business users from quality management processes. This misconception ignores the critical role that domain experts play in defining quality requirements and identifying quality issues that technical teams might overlook.

Effective data quality management requires collaboration between technical experts who understand systems and constraints, and business stakeholders who understand context and requirements. Cross-functional teams achieve better outcomes by combining technical capabilities with business knowledge.

Organizations that treat data quality as purely technical often struggle with quality definitions that don't align with business needs and miss quality issues that require domain expertise to identify and resolve.

What Are the Best Practices for Maintaining Data Integrity and Data Quality?

Ensuring data integrity and quality requires a proactive approach and adherence to best practices throughout the data lifecycle. Organizations must implement comprehensive strategies that address both technical and governance aspects of data management.

1. Establish Data Governance Frameworks

Implement robust data governance frameworks that define policies, procedures, and responsibilities for managing and protecting data. Effective governance frameworks establish clear ownership models, define quality standards, and create accountability mechanisms for maintaining both integrity and quality.

Data governance should include cross-functional teams that represent both technical and business perspectives, ensuring that policies address real-world requirements while maintaining technical feasibility. Regular governance reviews and updates ensure that frameworks evolve with changing business needs and regulatory requirements.

2. Conduct Regular Audits and Assessments

Conduct regular audits and assessments of data to identify potential issues or discrepancies, using techniques such as data profiling and validation checks. Automated monitoring systems can continuously assess data against predefined quality rules while generating alerts for integrity violations.

These assessments should cover multiple dimensions including completeness, accuracy, consistency, timeliness, and validity. Regular reporting on quality metrics enables organizations to track improvements and identify areas requiring additional attention.
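Regular reporting often takes the form of a scorecard that weights each dimension and raises alerts when one falls below its threshold. The weights and thresholds below are hypothetical placeholders; in practice business stakeholders set them, and the per-dimension scores (each between 0 and 1) come from profiling jobs.

```python
# Hypothetical weights (summing to 1) and minimum acceptable scores per dimension.
WEIGHTS = {"completeness": 0.3, "accuracy": 0.3, "consistency": 0.2, "timeliness": 0.2}
THRESHOLDS = {"completeness": 0.95, "accuracy": 0.98, "consistency": 0.9, "timeliness": 0.9}


def scorecard(scores: dict) -> dict:
    """Combine per-dimension scores into a weighted total and list dimensions needing attention."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing dimensions: {sorted(missing)}")
    total = sum(WEIGHTS[d] * scores[d] for d in WEIGHTS)
    failing = sorted(d for d in THRESHOLDS if scores[d] < THRESHOLDS[d])
    return {"overall": round(total, 4), "failing": failing}
```

Reporting the failing dimensions alongside the overall score matters: a dataset can have a respectable weighted total while one dimension, such as timeliness, is far below what a downstream process needs.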

3. Implement Security Measures

Enforce encryption, access controls, authentication mechanisms, and data masking or tokenization to safeguard sensitive information and prevent unauthorized disclosure. Modern security approaches include zero-trust architectures that verify every access request and maintain detailed audit logs.
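Masking and tokenization serve different needs. The sketch below shows a one-way display mask for emails and a deterministic keyed token (HMAC-SHA256) that lets tables still be joined on the tokenized column. This keyed-hash variant cannot be reversed; vault-based tokenization instead stores a token-to-value mapping so authorized systems can detokenize. The key handling and the `tok_` prefix are assumptions for illustration.

```python
import hashlib
import hmac


def mask_email(email: str) -> str:
    """Irreversible display mask: keep the first character and the domain."""
    local, _, domain = email.partition("@")
    if not local or not domain:
        return "***"
    return f"{local[0]}***@{domain}"


def tokenize(value: str, key: bytes) -> str:
    """Deterministic keyed token: the same value and key always give the same token,
    so joins across tables still work, but the value is not recoverable from the token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:32]
```

The secret key should live in a key management system, not alongside the data, since anyone holding it can test guesses against the tokens.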

Role-based access controls ensure that users only access data necessary for their specific functions, reducing the risk of accidental modifications or unauthorized use. Multi-factor authentication and regular access reviews further strengthen security postures.
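A deny-by-default role check with an audit trail can be sketched in a few lines. The roles, resources, and the in-memory `audit_log` list are hypothetical; real systems load role assignments from an identity provider and write audit records to durable, append-only storage.

```python
# Hypothetical role definitions: each role grants a set of (resource, action) pairs.
ROLE_PERMISSIONS = {
    "analyst": {("orders", "read")},
    "engineer": {("orders", "read"), ("orders", "write")},
    "auditor": {("orders", "read"), ("audit_log", "read")},
}

audit_log = []  # stand-in for durable audit storage


def is_allowed(user_roles: list, resource: str, action: str) -> bool:
    """Deny by default; allow only if some role grants (resource, action)."""
    return any((resource, action) in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


def access(user: str, user_roles: list, resource: str, action: str) -> bool:
    """Check permission and record every attempt, allowed or not."""
    allowed = is_allowed(user_roles, resource, action)
    audit_log.append({"user": user, "resource": resource, "action": action, "allowed": allowed})
    return allowed
```

Logging denied attempts as well as granted ones is what makes the periodic access reviews mentioned above possible.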

4. Invest in Quality Data Integration Solutions

A data integration platform such as Airbyte can reduce manual effort and help keep data consistent and reliable as it moves between systems.

Airbyte's approach to data integration directly supports both integrity and quality objectives through several key features:

Schema Change Management: Airbyte automatically detects structural changes in source data and provides adaptive schema management capabilities. The platform checks for schema changes regularly and allows organizations to propagate field changes, add new streams, or pause pipelines until issues are resolved. This proactive approach prevents data integrity violations that could occur from schema drift.
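The general idea of schema drift handling can be sketched as a diff between two schema snapshots plus a policy for each kind of change. This is a hypothetical policy loosely modeled on the options described above, not Airbyte's implementation or API: additive changes propagate, removed or retyped columns require approval, and anything touching protected columns (for example a primary key or cursor field) pauses the pipeline.

```python
def diff_schema(old: dict, new: dict) -> dict:
    """Compare {column: type} mappings from two schema snapshots."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "retyped": sorted(c for c in old.keys() & new.keys() if old[c] != new[c]),
    }


def decide(diff: dict, protected: frozenset = frozenset()) -> str:
    """Hypothetical policy for reacting to a schema diff."""
    if not any(diff.values()):
        return "no_change"
    touched = set(diff["removed"]) | set(diff["retyped"])
    if touched & protected:
        return "pause"  # e.g. the primary key or cursor changed: stop before bad data lands
    if touched:
        return "require_approval"  # data could be lost or reinterpreted
    return "propagate"  # purely additive changes are safe to apply automatically
```

The design choice is to fail safe: the riskier the change, the more the pipeline relies on a human decision rather than silently adapting.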

Change Data Capture (CDC) Capabilities: Airbyte's CDC functionality enables incremental data synchronization, capturing only changes to source data. This approach maintains data consistency while reducing latency and computational overhead. CDC ensures that data warehouses and lakes remain current without full-table refreshes that could introduce quality issues.
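Conceptually, CDC-based incremental sync reads only the changes recorded since the last checkpoint and applies them to the destination by primary key. The sketch below uses a simplified, hypothetical event format (`op`, `id`, `row`) and a list index as the checkpoint; real CDC tools read database logs and emit richer change records with log positions.

```python
def apply_changes(target: dict, events: list) -> dict:
    """Apply ordered change events to a target table keyed by primary key."""
    for e in events:
        if e["op"] in ("insert", "update"):
            target[e["id"]] = e["row"]  # upsert: keep the latest version of the row
        elif e["op"] == "delete":
            target.pop(e["id"], None)   # idempotent: deleting a missing key is a no-op
        else:
            raise ValueError(f"unknown op: {e['op']}")
    return target


def sync_incrementally(log: list, state: dict, target: dict) -> int:
    """Apply only events after the saved checkpoint, then advance it; return events applied."""
    start = state.get("position", 0)
    new_events = log[start:]
    apply_changes(target, new_events)
    state["position"] = len(log)  # checkpoint so a restart resumes from here
    return len(new_events)
```

The checkpoint is what avoids full-table refreshes: each run touches only the rows that changed, and deletes in the source are reflected in the destination instead of leaving stale rows behind.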

Built-in Validation and Monitoring: The platform includes extensive monitoring and validation features that track pipeline performance and data accuracy. Integration with observability tools like Datadog and OpenTelemetry provides real-time insights into pipeline health, enabling rapid response to integrity or quality issues.

Security and Compliance Features: Airbyte's enterprise edition provides capabilities for end-to-end encryption, role-based access controls, and comprehensive audit logging, which can help protect data integrity and support compliance requirements for GDPR, HIPAA, and SOC 2 when properly configured.

Other notable features of Airbyte include:

- Open-source and cloud deployment options that reduce vendor lock-in while maintaining enterprise-grade capabilities.

- A centralized platform for connecting to diverse data sources with over 600 pre-built connectors that reduce integration complexity.

- Deduplication strategies and dbt integration that refine data quality through automated cleansing processes.

- PII masking and column-level filtering capabilities that enhance compliance while maintaining data utility.
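Deduplication in this context usually means keeping one record per primary key, chosen by a cursor field such as a last-updated timestamp. This sketch illustrates that general idea; it is not Airbyte's implementation, and the `id` and `updated_at` field names are assumptions. ISO 8601 date strings compare correctly as plain strings.

```python
def dedupe_latest(records: list, key: str = "id", cursor: str = "updated_at") -> list:
    """Keep one record per primary key: the one with the greatest cursor value.

    Ties keep the record that appears later in the input.
    """
    latest = {}
    for r in records:
        k = r[key]
        if k not in latest or r[cursor] >= latest[k][cursor]:
            latest[k] = r
    return list(latest.values())
```

Choosing a reliable cursor is the key design decision: if the cursor is not updated on every change, the "latest" record may not be the current one.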

Frequently Asked Questions

What happens when data integrity is compromised?

When data integrity is compromised, organizations face serious consequences including corrupted databases, unreliable analytics, regulatory compliance violations, and loss of stakeholder trust. Compromised data integrity can lead to incorrect business decisions and potential security breaches that affect the entire organization.

Can you have high data quality without data integrity?

Not reliably. Data integrity is the foundation that keeps data accurate and unaltered, which genuine data quality depends on. Without integrity, even data that scores well on quality checks cannot be trusted for critical business decisions, because it may have been altered or corrupted after it was assessed.

How do you measure data integrity vs data quality?

Data integrity is measured through verification mechanisms like checksums, digital signatures, and audit trails that confirm data remains unchanged. Data quality is measured using criteria such as completeness, accuracy, consistency, timeliness, and relevance to business needs.

Who is responsible for maintaining data integrity and data quality?

Data integrity typically falls under the responsibility of IT, data engineering, and security teams who implement technical safeguards. Data quality responsibility is shared across departments, involving analysts, managers, and business users who understand data requirements and usage patterns.

What tools help maintain both data integrity and data quality?

Modern data platforms like Airbyte provide comprehensive solutions that address both areas through secure data integration, validation checks, monitoring capabilities, and governance features. These tools combine technical security measures with data quality assessment and improvement capabilities.

Conclusion

Distinguishing data integrity from data quality is crucial for modern data management and organizational success. Data integrity safeguards data accuracy, consistency, and reliability, protecting it against unauthorized alterations and maintaining trust throughout the data lifecycle.

Data quality ensures data is complete, relevant, timely, and usable for analytics and decision-making purposes. Both concepts require tailored strategies and continuous effort to meet their unique challenges and unlock the full value of organizational data assets. Organizations that successfully implement both data integrity and data quality practices position themselves for better decision-making and competitive advantage.
