10 Best Snowflake ETL Tools in 2026

Efficient data management and integration are top priorities for organizations that want to make informed business decisions. Snowflake, known for its versatility and scalability, sits at the center of many of these efforts. To get the most out of Snowflake, organizations use a range of ETL tools that streamline data extraction, orchestrate complex transformations, and load the results, which in turn improves their analysis capabilities.

As you read along, you will gain insights into the ETL process, factors for choosing ETL tools, and leading ETL tools for Snowflake available in the market.

Top 10 Snowflake ETL Tools

Here are the top Snowflake ETL tools determined by their popularity.

1. Airbyte

Airbyte is an AI-powered data integration platform that helps you move data from APIs and databases to destinations such as data warehouses, lakes, or vector databases. With more than 600 pre-built connectors, Airbyte is designed to simplify data transfer and synchronization. A distinctive factor of Airbyte is that it supports both structured and unstructured data, allowing you to work with different data types and formats. This also simplifies GenAI workflows, as the platform allows you to transform raw, unstructured data and load it directly into vector databases such as Milvus, Chroma, and Qdrant.

Some of the key features of Airbyte are:

- Custom Connector Development: You can build custom connectors quickly using Airbyte’s no-code Connector Builder, low-code Connector Development Kit (CDK), or other language-specific CDKs. To speed up the data pipeline development, you can leverage the AI assistant in Connector Builder. It automatically reads the API documentation, pre-fills configuration fields, and provides suggestions for fine-tuning the configuration process.

- PyAirbyte: Airbyte offers an open-source Python library, PyAirbyte, that allows you to build ETL pipelines using Airbyte connectors in the Python environment. You can use PyAirbyte to extract data from multiple sources and load it into various SQL caches, like Snowflake, DuckDB, Postgres, and BigQuery. This cached data is compatible with Python libraries, like Pandas and SQL-based tools, enabling you to perform advanced analytics.

- Schema Change Management: Airbyte allows you to define, for each connection, how changes in the source schema should be handled. This keeps data migration robust when the source schema changes.

- Flexible Deployment: You have four deployment options: Airbyte Open Source, Airbyte Cloud, Airbyte Team, or a self-managed enterprise solution in your infrastructure. This lets you choose the deployment method that best fits your requirements.
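To make the PyAirbyte feature above more concrete, here is a minimal sketch of an extract step. It assumes the `airbyte` package is installed (`pip install airbyte`) and uses Airbyte's sample `source-faker` connector with its `users` stream purely for illustration; the helper names `build_faker_config` and `extract_users` are hypothetical and not part of PyAirbyte.

```python
from typing import Any


def build_faker_config(row_count: int = 100) -> dict[str, Any]:
    """Build a config for the sample `source-faker` connector (illustrative)."""
    if row_count <= 0:
        raise ValueError("row_count must be positive")
    return {"count": row_count}


def extract_users(row_count: int = 100):
    """Read the `users` stream into a pandas DataFrame using PyAirbyte."""
    # Imported lazily so the rest of this module works without PyAirbyte installed.
    import airbyte as ab

    source = ab.get_source(
        "source-faker",
        config=build_faker_config(row_count),
        install_if_missing=True,
    )
    source.check()                      # verify the connector accepts the config
    source.select_streams(["users"])    # sync only the stream we need
    result = source.read()              # with no cache given, a local DuckDB cache is used
    return result["users"].to_pandas()


if __name__ == "__main__":
    print(extract_users(50).head())
```

In this sketch the data lands in PyAirbyte's default local cache. To target Snowflake instead, you would pass a Snowflake cache to `source.read()`; consult the PyAirbyte documentation for the exact cache configuration.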

2. StreamSets

StreamSets is a cloud-native data integration platform that helps you build, run, and monitor data pipelines. It connects to various external systems, such as cloud data lakes, warehouses, and on-premises storage systems like relational databases. While a pipeline executes, you can observe real-time statistics and error information as data moves from source to destination systems, which keeps the data flow transparent.

Some of the key features of StreamSets are:

- With the StreamSets Transformer component, you can perform complex transformations in Snowflake with a no-code approach, surpassing SQL limitations.

- You can use the StreamSets Python SDK to template and scale data pipelines with just a few lines of code. It also integrates with the UI-based tool, so you can create and manage data flows and jobs programmatically.

3. Azure Data Factory

Azure Data Factory (ADF) is a fully managed, serverless data integration platform. It connects data sources to various destinations through 90+ built-in connectors, including Snowflake. With Azure Data Factory's visual designer, you can create and manage data workflows through an intuitive drag-and-drop interface. This user-friendly approach makes it easier to design complex data pipelines.

Some of the amazing features of Azure Data Factory are:

- With ADF, you can track data lineage, gaining insight into the origin and flow of data throughout the integration pipeline. This helps you evaluate the potential effects of changes on downstream processes.

- Azure Data Factory enables you to automate the scheduling and triggering of data pipeline processes based on specific time intervals or events, so pipelines run without manual intervention.

4. AWS Glue

AWS Glue is a serverless data integration platform that simplifies and speeds up data preparation. It allows you to explore and connect to over 70 data sources, monitor ETL pipelines, and manage a centralized data catalog for your data. The data catalog lets you explore and search AWS datasets without moving the data, and the cataloged data can then be queried through Amazon Athena, Amazon EMR, and Amazon Redshift Spectrum.

Some of the amazing features of AWS Glue are:

- AWS Glue’s auto-scaling helps you dynamically adjust resources in response to workload fluctuations, assigning jobs as needed. You can add or remove resources based on task distribution or idle resource costs as your job advances.

- Integrating AWS Glue with AWS DataBrew, a user-friendly, point-and-click visual interface, lets you clean and normalize data effortlessly without requiring you to write code.

5. Fivetran

Fivetran is a fully managed ELT platform designed to simplify and automate data integration into Snowflake and other cloud warehouses. It enables seamless data replication through 300+ pre-built connectors, reducing the need for manual engineering work. With its schema-mirroring capabilities, Fivetran automatically adapts to changes in source systems—making it ideal for fast-growing teams that want to focus on analytics rather than pipeline maintenance.

Some of the amazing features of Fivetran are:

- Fivetran automates schema evolution by detecting changes in source systems and updating the destination schema accordingly, eliminating the need for manual mapping.

- The platform provides built-in scheduling and monitoring tools to ensure data freshness and quickly detect pipeline failures or sync issues.

- Fivetran’s pre-engineered connectors ensure fast setup and reliable sync performance, allowing you to get data into Snowflake within minutes.

6. Talend (Qlik Data Integration)

Talend, now part of Qlik, offers a comprehensive suite for data integration, transformation, and governance. It’s especially powerful for businesses seeking to enforce data quality and compliance across complex environments. With support for batch and real-time integrations into Snowflake, Talend makes it easy to design pipelines that ensure reliable, clean, and trustworthy data.

Some of the amazing features of Talend are:

- Talend includes built-in tools for data profiling, cleansing, and deduplication, ensuring high data quality before it enters Snowflake.

- The platform supports advanced metadata management and data lineage visualization, helping you trace and audit every data movement.

- Talend allows both low-code and code-based development, enabling data teams of all skill levels to build and manage integration pipelines effectively.

7. Hevo Data

Hevo Data is a no-code data pipeline platform that enables real-time data movement into Snowflake and other modern data warehouses. With over 150 connectors and a fully managed infrastructure, Hevo ensures that your data is always current, complete, and usable—without needing to write a single line of code.

Some of the amazing features of Hevo Data are:

- Hevo automatically detects and maps schemas from source systems to Snowflake, making it easy to onboard new datasets.

- The platform offers real-time data sync capabilities, ensuring your dashboards and reports reflect the most current data.

- Hevo’s intuitive UI and built-in monitoring tools simplify troubleshooting and give you full control over data pipeline health.

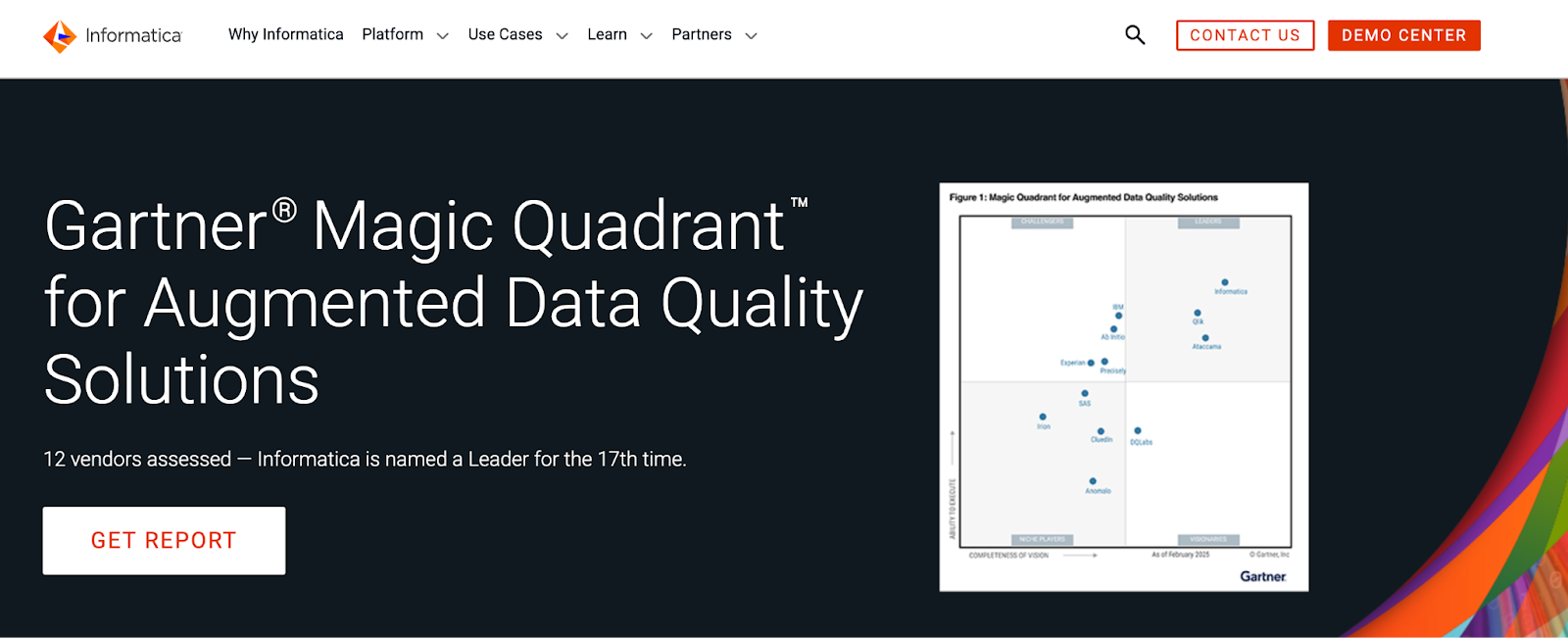

8. Informatica Cloud Data Integration

Informatica’s Cloud Data Integration platform provides enterprise-grade data management capabilities for organizations working with Snowflake. It supports thousands of connectors and emphasizes robust governance, scalability, and AI-powered data mapping. Informatica is widely used in regulated industries where compliance, reliability, and control are paramount.

Some of the amazing features of Informatica Cloud Data Integration are:

- With AI-powered transformation logic, Informatica simplifies complex data mapping tasks, saving time and reducing human error.

- The platform offers a centralized metadata manager that supports data lineage tracking, impact analysis, and auditing.

- Its elastic and scalable cloud architecture enables smooth handling of high-volume data pipelines across hybrid and multi-cloud environments.

9. Matillion

Matillion is a purpose-built cloud-native ETL/ELT tool optimized for Snowflake. It offers a drag-and-drop visual interface to help users design and orchestrate transformation workflows directly within Snowflake’s compute engine. This tight integration ensures performance efficiency and seamless scalability as your data grows.

Some of the amazing features of Matillion are:

- Matillion enables you to visually build complex transformation workflows that run natively inside Snowflake, minimizing data movement.

- The platform includes built-in components for data enrichment, orchestration, and error handling, reducing dependency on external tools.

- Matillion supports scheduling and versioning, so you can manage multiple pipelines and environments with confidence.

10. Stitch

Stitch is a simple, cloud-first ETL tool designed for rapid data replication to cloud warehouses like Snowflake. It provides a straightforward setup experience and offers over 130 data source connectors to get your pipeline running quickly. Stitch is especially suitable for small to mid-sized teams looking for minimal management overhead.

Some of the amazing features of Stitch are:

- Stitch allows you to define custom sync schedules to align with your business needs, whether hourly or daily.

- The platform provides automatic schema detection and maps data fields without needing manual configuration.

- Stitch's lightweight, no-frills interface makes it easy to monitor and manage your pipelines without complex setup or engineering.

Snowflake Overview

Snowflake is a cloud-based data warehousing platform that provides data storage, processing, and analytics. It employs a collaborative architecture in its storage system, enabling the seamless storage and real-time management of extensive databases.

The Snowflake architecture is a hybrid of shared-disk and shared-nothing database architectures. In a shared-disk design, multiple compute nodes are connected to a single shared storage layer, so every node can access all of the data. In a shared-nothing design, independent nodes each process a portion of the data in parallel, which improves data warehouse performance and SQL query processing.

Key features of Snowflake are:

Cloning:

Snowflake’s cloning capability lets you duplicate databases, schemas, and tables by copying metadata rather than replicating the stored data. This makes it quick to create clones, for example to test against a copy of an entire database.

Time-travel:

The time-travel feature enables you to access historical data within a defined retention period, even if it has since been altered or deleted. It helps you restore deleted data, create backups, and examine changes over time.

Snowsight:

Snowsight is Snowflake’s web interface, which replaces the older Classic Console and its SQL worksheets. It allows you to create and share charts and dashboards, supporting data validation and ad-hoc analysis.
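The cloning and time-travel features described above map to short SQL statements. The following sketch builds those statements as strings in Python so they can be passed to whichever Snowflake client you use. The helper names and the table names `orders` and `orders_dev` are hypothetical; the `CLONE`, `AT(OFFSET => ...)`, and `UNDROP` clauses are standard Snowflake SQL.

```python
import re

# Accept simple unquoted identifiers, optionally qualified as db.schema.table.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*(\.[A-Za-z_][A-Za-z0-9_$]*){0,2}")


def _checked(name: str) -> str:
    """Reject anything that is not a plain identifier to avoid SQL injection."""
    if not _IDENTIFIER.fullmatch(name):
        raise ValueError(f"unsupported identifier: {name!r}")
    return name


def clone_table_sql(source: str, clone: str) -> str:
    """Zero-copy clone: only metadata is copied, not the stored data."""
    return f"CREATE TABLE {_checked(clone)} CLONE {_checked(source)};"


def time_travel_sql(table: str, seconds_ago: int) -> str:
    """Query the table as it existed `seconds_ago` seconds in the past."""
    if seconds_ago <= 0:
        raise ValueError("seconds_ago must be positive")
    return f"SELECT * FROM {_checked(table)} AT(OFFSET => -{seconds_ago});"


def undrop_table_sql(table: str) -> str:
    """Restore a dropped table that is still within its retention period."""
    return f"UNDROP TABLE {_checked(table)};"


if __name__ == "__main__":
    print(clone_table_sql("orders", "orders_dev"))
    print(time_travel_sql("orders", 300))
    print(undrop_table_sql("orders"))
```

How far back a time-travel query can reach depends on the retention period configured for the object, so an offset that works in one account may fail in another.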

Criteria to Choose the Right Snowflake ETL Solution

For efficient data extraction from diverse sources, avoid employing distinct data migration tools for each source. Instead, adopt a clear integration strategy and specific criteria to choose your Snowflake ETL tool.

Here are some of the factors to consider when choosing the right Snowflake ETL tool:

Ease of Use:

Prioritize tools that offer a user-friendly interface and intuitive functionality. These make the ETL process more efficient and shorten the learning curve for your team.

Extending Connectors:

Check whether you can modify connectors to add new endpoints or fix connector issues. Also confirm that the tool lets you create custom connectors, so you can adapt to new technologies and keep integrations running smoothly.

Flexibility:

Look for tools that offer flexibility in handling different data formats, sources, and transformation requirements to accommodate diverse business needs.

Integration Capabilities:

Check whether the ETL tool can integrate seamlessly with other tools and systems within your data ecosystem. This promotes a cohesive and interoperable infrastructure.

Cost-effectiveness:

Evaluate the cost of maintenance, licensing, and potential scaling to ensure the tool aligns with your budget constraints.

How to Import Data into Snowflake in Minutes

Using Snowflake for business objectives such as analytics, compliance, and performance optimization starts with implementing the ETL process.

That means extracting data from your chosen sources and loading it into Snowflake. For this purpose, we recommend Airbyte, as it makes data replication straightforward. The process takes just a few clicks and follows the three steps below.

Step 1: Configure a Source Connector

Log in to your Airbyte account and, using the user-friendly interface, set up a source connector for the system from which you want to extract data.

Step 2: Configure Snowflake as a Destination Connector

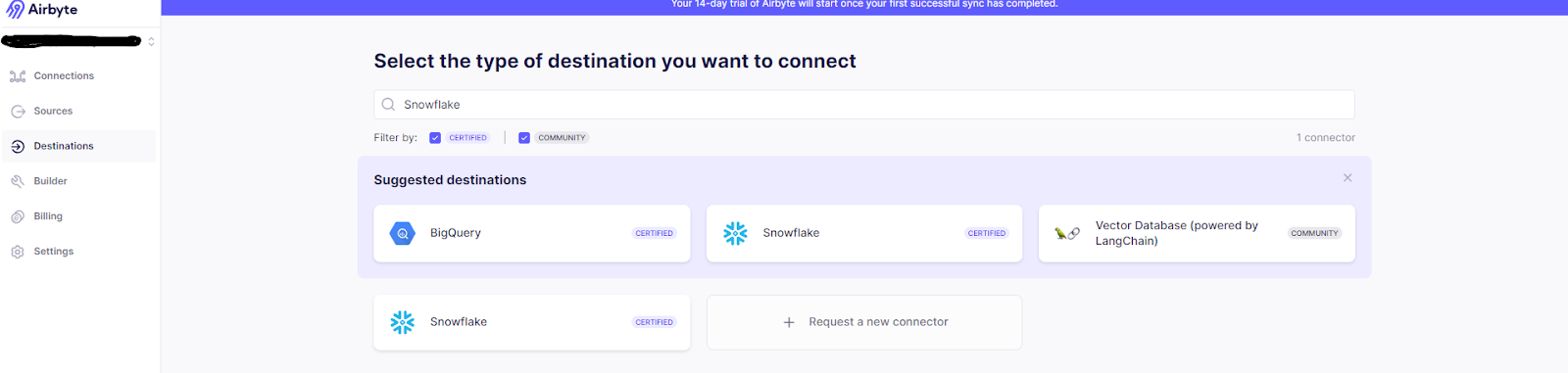

- Navigate to the dashboard and click on the Destinations option.

- Type Snowflake in the Search box of the destination page and click on the connector.

- On the Snowflake destination page, fill in the required details such as Host, Role, Warehouse, Database, Default Schema, and Username, along with optional fields like JDBC URL Params and Raw Table Schema Name. Then click on Set up destination.
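Before filling in the form, it can help to collect the required values in one place and confirm that none are missing. The sketch below does that with a plain dictionary. The key names mirror the form fields listed above but are assumptions rather than Airbyte's exact configuration schema, and every value is a placeholder.

```python
# Required fields from the Snowflake destination form (key names are illustrative).
REQUIRED_FIELDS = ("host", "role", "warehouse", "database", "schema", "username")


def missing_fields(config: dict[str, str]) -> list[str]:
    """Return the required fields that are absent or blank, in form order."""
    return [
        field
        for field in REQUIRED_FIELDS
        if not str(config.get(field, "")).strip()
    ]


if __name__ == "__main__":
    # Placeholder values only; substitute the details of your own account.
    destination = {
        "host": "example-account.snowflakecomputing.com",
        "role": "AIRBYTE_ROLE",
        "warehouse": "AIRBYTE_WAREHOUSE",
        "database": "AIRBYTE_DATABASE",
        "schema": "",  # left blank on purpose to show the check
        "username": "AIRBYTE_USER",
    }
    print(missing_fields(destination))
```

A check like this only confirms that values are present. Whether they are valid is determined when Airbyte tests the destination after you click Set up destination.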

Step 3: Configure the Snowflake Data Pipeline in Airbyte

After you set both the source and destination, proceed to configure the connection. This step includes choosing the source data (step 1), defining the sync frequency, and specifying the destination as your Snowflake table.

Completing these three steps will help you finalize the data integration process in Airbyte, enabling you to migrate data from your chosen sources to Snowflake. Additionally, with Airbyte, you can seamlessly configure Snowflake as your preferred source.

Conclusion

This article presents the top ten ETL tools for Snowflake. With user-friendly interfaces and robust features, these tools help you streamline data workflows and replicate data into Snowflake. Choose the Snowflake ETL tool that matches your requirements to enhance analytics and derive actionable insights, ultimately driving efficiency and informed decision-making.

Consider leveraging the convenience of Airbyte, a user-friendly tool equipped with a diverse range of connectors and robust security features. Simplify your workflows effortlessly by giving Airbyte a try today!
