10 Best Change Data Capture (CDC) Tools in 2026

Does your organization's data flow like a river, where every insert, update, and deletion sends a ripple through the current? Capturing those ripples is essential for real-time, informed decision-making. Change data capture tools let you track each change as it happens and extract valuable insights from the ever-changing stream.

What is Change Data Capture?

Change Data Capture (CDC) is the process of identifying and capturing changes made to data in a database. It tracks modifications such as inserts, updates, and deletes so that downstream systems stay synchronized with changes as they happen. CDC is commonly used in data integration, replication, and warehousing scenarios, where it enables efficient, timely updates across applications and helps keep data consistent.

CDC typically involves:

- Assigning timestamps or sequence numbers to changes to maintain an order and track them accurately.

- Detecting the changes, either by reading the database transaction log (the log-based approach) or by placing triggers on tables that record changes as they occur (the trigger-based approach).

- Propagating the captured changes to other systems or data repositories to ensure consistent and up-to-date information flow across different distributed system components.
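The three steps above can be sketched with the trigger-based approach, using Python's built-in sqlite3 module. This is a minimal illustration under stated assumptions, not how any tool in this list works internally: the `customers` and `change_log` tables, the trigger names, and the `apply_changes` helper are all hypothetical, and the "target system" is just a dictionary.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);

-- Hypothetical change table; seq gives every change a strict order.
CREATE TABLE change_log (
    seq  INTEGER PRIMARY KEY AUTOINCREMENT,
    op   TEXT NOT NULL,
    id   INTEGER NOT NULL,
    name TEXT
);

CREATE TRIGGER customers_ins AFTER INSERT ON customers BEGIN
    INSERT INTO change_log (op, id, name) VALUES ('INSERT', NEW.id, NEW.name);
END;
CREATE TRIGGER customers_upd AFTER UPDATE ON customers BEGIN
    INSERT INTO change_log (op, id, name) VALUES ('UPDATE', NEW.id, NEW.name);
END;
CREATE TRIGGER customers_del AFTER DELETE ON customers BEGIN
    INSERT INTO change_log (op, id, name) VALUES ('DELETE', OLD.id, NULL);
END;
""")

# Ordinary writes against the source table; the triggers record each one.
conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Ada')")
conn.execute("INSERT INTO customers (id, name) VALUES (2, 'Grace')")
conn.execute("UPDATE customers SET name = 'Ada L.' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 2")


def apply_changes(connection, replica, after_seq=0):
    """Replay changes newer than after_seq onto replica, in seq order."""
    rows = connection.execute(
        "SELECT seq, op, id, name FROM change_log WHERE seq > ? ORDER BY seq",
        (after_seq,),
    )
    last_seq = after_seq
    for seq, op, row_id, name in rows:
        if op == "DELETE":
            replica.pop(row_id, None)
        else:  # INSERT and UPDATE both become an upsert on the replica
            replica[row_id] = name
        last_seq = seq
    return last_seq


replica = {}
last_seq = apply_changes(conn, replica)
print(replica, last_seq)
```

Keeping the last applied sequence number lets a later call pick up only the changes made since the previous run. Log-based tools achieve a similar effect by remembering a position in the transaction log instead of adding triggers to the source tables.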

Comparison Table: Top 10 CDC Tools

Here are the top CDC tools you can use to replicate your data in real time:

1. Airbyte

Airbyte is an AI-powered data integration platform that focuses on replicating data from various sources to data warehouses, lakes, and databases. It supports log-based CDC, which reads the database logs that record every change. For log-based CDC, Airbyte uses Debezium as an embedded library to continuously capture and monitor changes in your databases, including INSERT, UPDATE, and DELETE operations.

Key features of Airbyte include:

- Availability of Connectors: Airbyte provides you with 600+ pre-built connectors. If these pre-built connectors do not support your sources, Airbyte’s Connector Development Kit (CDK) and Connector Builder allow you to build custom connectors.

- AI-powered Connector Creation: The Connector Builder supports an AI-assist functionality that reads through your preferred platform’s API documentation. Based on the API docs, it auto-fills most configuration fields, simplifying your custom connector development journey.

- Simplify GenAI Workflows: With Airbyte, you can simplify your AI workflows by loading semi-structured and unstructured data into popular vector databases, including Milvus, Pinecone, and Qdrant. Once the data is in a vector database, AI applications can search and retrieve it quickly.

- Homogeneous and Heterogeneous Migrations: Airbyte supports both homogeneous and heterogeneous migrations. You can replicate data between identical database engines (e.g., MySQL to MySQL) and between different ones (e.g., MySQL to PostgreSQL).

- Developer-friendly Data Pipeline: Airbyte’s PyAirbyte is an open-source Python library that allows you to utilize the Airbyte connectors. This is effective for custom data integration and transformation requirements using Python programming.

- Self-Managed Enterprise Features: The self-managed enterprise edition of Airbyte gives you full control over your sensitive information with features like role-based access control (RBAC), PII masking, column hashing, and more.

Pricing

Airbyte offers four plans: Airbyte Open Source, Cloud, Team, and Enterprise. The open-source version is free for everyone. The Cloud, Team, and Enterprise plans have flexible pricing that depends on your data replication requirements. For more information, you can contact the Airbyte sales team.

2. Debezium

Debezium is an open-source distributed platform designed to capture changes in data. It is built on top of Apache Kafka, a popular streaming platform. Debezium is developed to monitor the transaction logs and capture events representing the modifications to the data. It provides connectors for various database management systems (DBMS) like PostgreSQL, MySQL, and MongoDB. These connectors allow you to capture database changes in real time and stream them to Kafka topics for further processing.

Here are some key aspects of Debezium:

- If a Debezium source connector generates a change event for a table without an existing target topic, the topic is created during runtime. The change events are subsequently ingested into Kafka.

- Debezium lets you mask the values of specific columns in a schema. This feature is especially useful when your dataset contains sensitive data.
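To make the masking idea concrete, here is a minimal sketch in plain Python. It is not Debezium's API (in Debezium, masking is configured on the connector rather than coded by hand). The event shape is loosely modelled on a change event with `op`, `before`, and `after` fields, and the `mask_columns` helper and the salt value are hypothetical.

```python
import copy
import hashlib


def mask_columns(event, columns, salt="example-salt"):
    """Return a copy of event with the named columns replaced by salted SHA-256 digests."""
    masked = copy.deepcopy(event)
    for image in ("before", "after"):
        row = masked.get(image)
        if not row:
            continue  # inserts have no "before" image, deletes have no "after"
        for column in columns:
            if row.get(column) is not None:
                digest = hashlib.sha256((salt + str(row[column])).encode("utf-8"))
                row[column] = digest.hexdigest()
    return masked


# Hypothetical update event for a customers table.
event = {
    "op": "u",
    "before": {"id": 7, "email": "old@example.com"},
    "after": {"id": 7, "email": "new@example.com"},
}
masked = mask_columns(event, ["email"])
print(masked["after"])
```

Hashing instead of blanking the value keeps equal inputs equal after masking, so downstream joins on the masked column still work while the raw value never leaves the source.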

Pricing

As Debezium is an open-source platform, it is free to use.

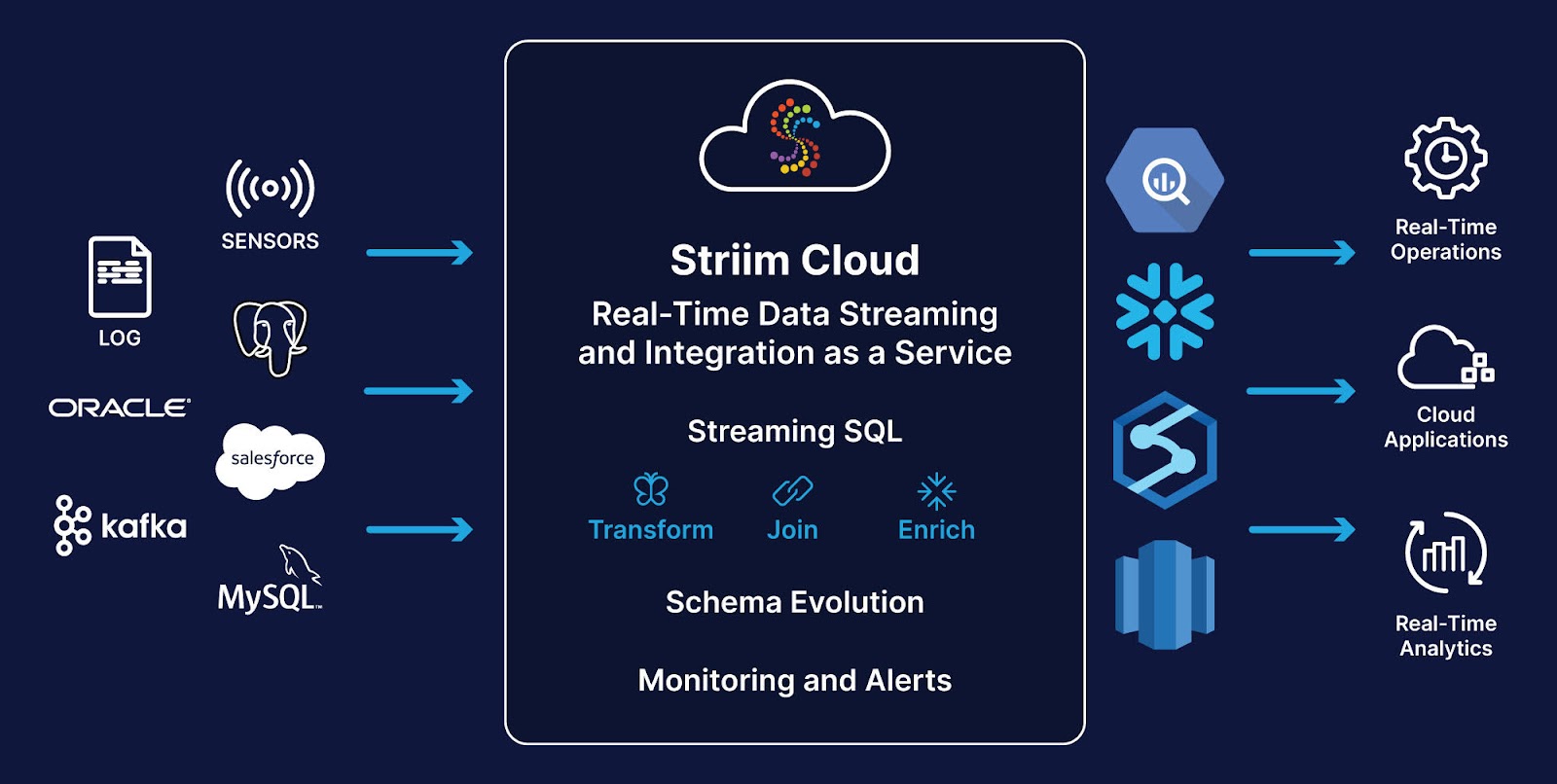

3. Striim

Striim is a software platform designed for real-time data integration and streaming analytics. It allows your organization to continuously collect, process, and deliver data from various sources, including databases, applications, and sensors. Striim also facilitates data migration from on-premises databases to cloud environments without downtime and keeps them up to date using CDC.

Here are some features of Striim:

- Multiple stream sources, windows, and caches can be combined in a single query and chained together in directed graphs, known as data flows. These data flows can be built through the UI or the TQL scripting language. You can easily deploy and scale across a Striim cluster without writing additional code.

- You can use Striim for OpenAI to parse data from any of Striim’s 100+ streaming sources into the JSONL format, which can then be uploaded to OpenAI to build AI models.
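JSONL (JSON Lines) stores one JSON object per line. The sketch below shows the format in plain Python. It is not Striim's TQL or its OpenAI integration, and the `to_jsonl` helper and the sample records are hypothetical.

```python
import json


def to_jsonl(records):
    """Serialize an iterable of dictionaries as JSON Lines: one object per line."""
    return "".join(json.dumps(record, ensure_ascii=False) + "\n" for record in records)


# Hypothetical records, as they might arrive from a streaming source.
records = [
    {"order_id": 1, "status": "created"},
    {"order_id": 1, "status": "shipped"},
]
jsonl_text = to_jsonl(records)
print(jsonl_text, end="")
```

Because every line is a complete JSON document, a consumer can process the file line by line without loading it all into memory.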

Pricing

Striim offers four plans: Striim Developer, Automated Data Streams, Striim Cloud Enterprise, and Striim Cloud Mission Critical. Striim Developer is free. Automated Data Streams starts from $1000 per month. Striim Cloud Enterprise costs $2000 per month and provides more advanced functionality. Lastly, Striim Cloud Mission Critical is a customized plan, and you can contact the Striim sales team for more details.

4. AWS Database Migration Service

Managed by AWS, Database Migration Service (DMS) helps you replicate your databases. You can set up CDC to capture changes while you are migrating your data from the source database to the target database. Additionally, you can create a task to capture ongoing changes from the source. This ensures that any modifications that occur during the migration process are also replicated in the target system.

Here are the features of AWS DMS:

- DMS supports a variety of popular database engines, including Oracle, SQL Server, PostgreSQL, MySQL, MongoDB, MariaDB, and others. This allows you to migrate your databases regardless of the platform they are currently on.

- AWS offers a serverless option with AWS DMS Serverless. This option automatically provisions, monitors, and scales resources, simplifying the migration process and eliminating the need for manual configuration. It is particularly beneficial for scenarios where diverse database engines are involved.
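As a rough sketch of how a migration that also captures ongoing changes is described to DMS, the snippet below assembles the parameters a replication task takes. It only builds the dictionary and makes no AWS call. The task identifier and the ARNs are placeholders, the `replication_task_params` helper is hypothetical, and the selection rule simply includes every table.

```python
import json

# Selection rule that includes every table in every schema.
table_mappings = {
    "rules": [
        {
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": "include-all",
            "object-locator": {"schema-name": "%", "table-name": "%"},
            "rule-action": "include",
        }
    ]
}

MIGRATION_TYPES = ("full-load", "cdc", "full-load-and-cdc")


def replication_task_params(migration_type):
    """Build the keyword arguments for a DMS replication task (placeholders only)."""
    if migration_type not in MIGRATION_TYPES:
        raise ValueError(f"unknown migration type: {migration_type}")
    return {
        "ReplicationTaskIdentifier": "example-task",
        "SourceEndpointArn": "arn:aws:dms:REGION:ACCOUNT:endpoint:SOURCE",
        "TargetEndpointArn": "arn:aws:dms:REGION:ACCOUNT:endpoint:TARGET",
        "ReplicationInstanceArn": "arn:aws:dms:REGION:ACCOUNT:rep:INSTANCE",
        "MigrationType": migration_type,
        "TableMappings": json.dumps(table_mappings),
    }


# Copy the existing data first, then keep applying changes as they occur.
params = replication_task_params("full-load-and-cdc")
print(params["MigrationType"])
```

With boto3 installed and real ARNs in place, these parameters could be passed to the DMS client's `create_replication_task` call. Choosing `cdc` alone would replicate only ongoing changes without the initial copy.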

Pricing

AWS DMS offers you multiple hourly pricing options. You can contact the AWS team for their pricing details.

5. GoldenGate (Oracle)

Oracle GoldenGate is a real-time data integration and replication platform from Oracle Corporation. It moves data between different types of databases and platforms in real time without impacting the performance of the source system. It captures data changes as they occur and promptly replicates them to the target system.

The features of Oracle GoldenGate include:

- GoldenGate offers Stream Analytics, which gives you access to features such as time series, machine learning, geospatial, and real-time analytics.

- Along with Oracle repositories, GoldenGate allows you to connect with many non-Oracle databases and data services for data integration. The databases supported on OCI include Microsoft SQL Server, IBM DB2, Teradata, MongoDB, MySQL, and PostgreSQL.

Pricing

OCI offers different pricing versions for different needs, and you can contact their sales team for more details.

5. Qlik Replicate

Qlik Replicate is a real-time data replication and ingestion platform. It enables organizations to implement CDC by continuously capturing and delivering changes from various data sources to target systems with minimal latency. Qlik Replicate supports a wide range of databases, data warehouses, and cloud platforms.

Two key features of Qlik Replicate:

- Zero-footprint architecture: Qlik Replicate can capture changes from source systems without installing agents or performing intrusive operations on production servers.

- Wide platform support: It offers connectivity to a vast array of databases, data warehouses, and cloud platforms, allowing for flexible data movement across diverse environments.

Pricing

Qlik Replicate's pricing is not publicly available as it typically follows an enterprise pricing model. To get accurate pricing information, organizations need to contact Qlik's sales team for a custom quote tailored to their specific needs and usage scenarios.

6. IBM InfoSphere

IBM InfoSphere Data Replication is a comprehensive data replication solution that supports CDC across various data sources and targets. It enables real-time data integration, replication, and synchronization, allowing organizations to maintain consistent and up-to-date data across multiple systems.

Two key features:

- Q Replication: A high-performance, low-latency replication technology that uses IBM MQ to queue changes for efficient data movement.

- SQL Replication: Provides flexible, SQL-based replication capabilities for various databases and platforms.

Pricing

To obtain accurate pricing information, you would need to contact IBM's sales team.

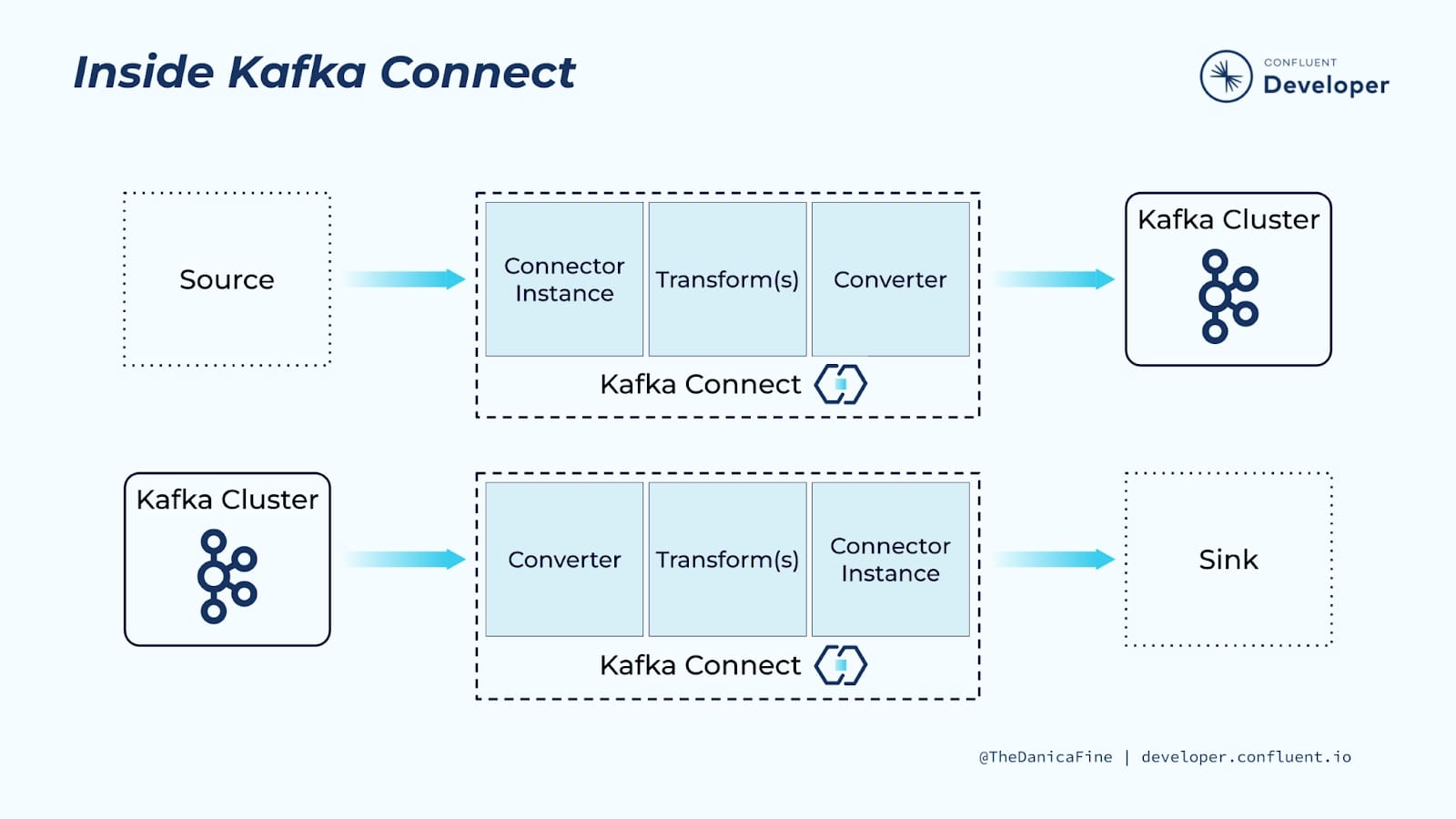

7. Kafka Connect

Kafka Connect is an open-source component of Apache Kafka, designed to facilitate data integration between Kafka and other systems. It provides a framework for building and running reusable connectors that move large collections of data into and out of Kafka. Confluent's version enhances this with additional features and support.

Two key features of Kafka Connect are:

- Distributed mode: Allows scaling of data integration tasks across multiple workers for improved performance and fault tolerance.

- Connector ecosystem: Offers a wide range of pre-built connectors for various data sources and sinks, simplifying integration with different systems.
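In distributed mode, connectors are typically created by sending JSON to the Connect REST API. The sketch below builds such a request with Python's standard library but does not send it. It assumes a worker listening on `http://localhost:8083` and assumes the FileStream example connector is available on the worker's plugin path. The connector name, file path, topic, and the `build_create_connector_request` helper are hypothetical.

```python
import json
import urllib.request


def build_create_connector_request(base_url, name, config):
    """Build (but do not send) a POST /connectors request for a Connect worker."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/connectors",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_create_connector_request(
    "http://localhost:8083",
    "example-file-source",
    {
        "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
        "tasks.max": "1",
        "file": "/tmp/example.txt",
        "topic": "example-topic",
    },
)
print(request.get_method(), request.full_url)
# urllib.request.urlopen(request) would submit it to a running worker.
```

A CDC source connector is registered the same way. Only the `connector.class` and its connector-specific settings change.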

Pricing

Kafka Connect itself is open-source and free to use. However, Confluent offers enterprise features and support through Confluent Platform, which follows a tiered pricing model.

8. Azure Data Factory

Azure Data Factory is Microsoft's cloud-based data integration service that enables the creation, scheduling, and management of data pipelines. It supports CDC through its Copy Activity and Change Data Capture (CDC) features, allowing users to efficiently replicate changes from various data sources to target systems in Azure or other environments.

Key features of Data Factory are:

- Mapping Data Flows: A visually designed data transformation tool that enables code-free ETL/ELT processes, including CDC operations.

- Integration Runtime: Provides a flexible compute infrastructure for executing data movement and transformation activities across different network environments.

Pricing

Azure Data Factory follows a consumption-based pricing model. Costs are primarily based on the number of pipeline runs, data flow execution time, and data movement operations.

9. Hevo Data

Hevo Data is a no-code data pipeline platform that enables real-time replication using Change Data Capture (CDC). It is designed to simplify data integration from multiple sources into cloud data warehouses without requiring manual intervention or complex coding. Hevo supports a wide variety of databases and SaaS applications and automates the detection and application of changes.

Two key features of Hevo Data are:

- Auto Schema Mapping: Hevo automatically maps source schema to the destination schema, ensuring seamless ingestion even when the schema evolves.

- Minimal Setup: With a fully managed architecture and intuitive UI, you can set up CDC pipelines in minutes without coding or managing infrastructure.

Pricing

Hevo Data offers tiered paid plans starting with a 14-day free trial. You can contact their sales team for enterprise pricing and additional features.

10. Google Cloud Dataflow

Google Cloud Dataflow is a fully managed stream and batch data processing service that supports real-time analytics and ETL. While not a CDC tool in the traditional sense, it can implement log-based or event-driven CDC workflows when integrated with Pub/Sub, Cloud SQL, or BigQuery. It supports the Apache Beam SDK for custom data processing pipelines.

Two key features of Cloud Dataflow are:

- Unified Stream and Batch Processing: Write your CDC pipeline once and execute it as batch or streaming jobs without changing the code.

- Autoscaling and Serverless Architecture: Cloud Dataflow automatically scales resources to meet throughput demands, reducing operational overhead.

Pricing

Google Cloud Dataflow follows a consumption-based pricing model, charging based on CPU, memory, and data processing time. You can estimate costs using the Google Cloud Pricing Calculator.

Benefits of Change Data Capture

CDC offers several advantages over traditional data transfer methods, making it valuable for various data management tasks. Here are some key benefits of using CDC:

- Real-time Data: Unlike batch processing, which transfers data periodically, CDC captures the modifications as they happen, enabling real-time data movement and analysis. This is crucial for applications that require up-to-date information, such as fraud detection, stock market analysis, and personalized recommendations. However, you can also use CDC to send data in batches.

- Reduced Resource Consumption: CDC only transfers the changed data, minimizing the amount of data transfer and processing compared to full data transfers. This translates to lower bandwidth usage, less strain on system resources, and improved overall efficiency.

- Faster and more Efficient Data Migration: With the CDC technique, you can experience smoother and faster data migration with minimal downtime by continuously capturing changes. Since the target system is constantly updated with the latest changes, it minimizes the disruption to ongoing operations.

- Simplified Application Integration: It allows for easier integration between applications that use different database systems. By capturing changes in a standardized format, CDC enables seamless understanding and utilization of data from other systems.
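The resource savings can be illustrated with a rough comparison. The sketch below uses made-up data (10,000 small rows, three of which change) and measures how many bytes a full reload would move versus the change events alone. The `payload_bytes` helper and the row shape are hypothetical, and real ratios depend entirely on your workload.

```python
import json


def payload_bytes(rows):
    """Approximate transfer size as the total length of the JSON-encoded rows."""
    return sum(len(json.dumps(row).encode("utf-8")) for row in rows)


# Hypothetical source table with 10,000 rows.
table = {i: {"id": i, "status": "new"} for i in range(10_000)}

# Three rows change; CDC would emit one event per change.
changes = []
for row_id in (3, 42, 977):
    table[row_id]["status"] = "shipped"
    changes.append({"op": "UPDATE", **table[row_id]})

full_reload = payload_bytes(table.values())
incremental = payload_bytes(changes)
print(f"full reload: {full_reload} bytes, change events: {incremental} bytes")
```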

Wrapping Up

The CDC tools listed above help your organization manage and analyze data effectively and support data-driven decision-making. Choosing the right CDC tool depends on your specific needs and priorities. Consider the features mentioned above and evaluate the options to find the best fit for your data integration needs.

FAQs

1. What is Change Data Capture (CDC), and how is it different from traditional ETL?

CDC identifies and tracks changes (inserts, updates, deletes) in databases. Unlike traditional ETL that processes entire datasets on a schedule, CDC captures only changes in near real time, reducing load and latency.

2. Which CDC tools are best suited for organizations looking for open-source solutions?

Top open-source CDC tools include Airbyte, Debezium, and Kafka Connect. Airbyte offers extensive connectors and customization, Debezium provides native Kafka integration, and Kafka Connect is scalable but requires manual setup.

3. Can CDC tools be used for real-time analytics and machine learning workflows?

Yes. Tools like Google Cloud Dataflow, Striim, and Airbyte support real-time streaming into destinations such as BigQuery or vector databases, making them suitable for analytics dashboards or AI/ML pipelines.

4. Are there CDC tools that don’t require writing code to get started?

Yes. Hevo Data, Striim, Azure Data Factory, and Qlik Replicate offer no-code interfaces with visual builders, ideal for users with limited engineering resources.

5. How should I choose the right CDC tool for my organization?

Consider deployment model (cloud, hybrid, on-prem), pricing (open-source vs. enterprise), supported connectors, technical complexity, and whether you need features like real-time sync, AI integration, or governance tools.
