10 Best Data Extraction Tools in 2026


Businesses need a centralized pool of data for in-depth analysis and informed decision-making. Data extraction helps them pull information from various sources into that pool, which in turn supports better analytics in today's data-driven landscape.

In this article, we’ll explain what data extraction is, describe its main types, and review the top 10 tools to consider for the job.

What is Data Extraction?

Data extraction is the process of retrieving data from one or more sources so that it can be aggregated, processed, and refined, then stored in a centralized location for analytics or backup. That storage can be on-premises, in the cloud, or a combination of both.

What are Data Extraction Tools?

Data extraction tools are designed to extract raw data from different sources such as databases, websites, documents, or APIs, and transfer it to another location. The extracted data can be replicated or copied to a destination, like a data warehouse or storage system. These tools can handle various types of data, whether structured, unstructured, or poorly organized. Once the data is collected, it can be processed and refined for analysis or storage.

These tools play a vital role in data management and analytics by simplifying the gathering and organizing of large volumes of data from diverse sources. They help businesses and organizations streamline their data collection processes and make informed decisions based on accurate and up-to-date information.

Top 10 Data Extraction Tools

The tools you choose directly affect the quality of your output and the efficiency of the extraction process. Here are some of the best data extraction tools to consider.

1. Airbyte

Airbyte is a prominent data integration platform that enables you to migrate data from various sources to a destination of your choice. It offers an extensive library of pre-built connectors for centralizing data in a single data store, such as data warehouses, data lakes, and databases.

Some key features of Airbyte are:

- Data Extraction Methods: Airbyte supports both incremental and full extraction methods.

- Pre-Built Connectors: With over 600 pre-built connectors, Airbyte allows you to extract data from multiple sources into a destination, facilitating seamless data movement.

- Connector Builder: If the connector you seek is unavailable in the pre-built options, you can develop custom connectors using Connector Builder or Connector Development Kits (CDKs). The Connector Builder offers an AI-assist functionality to automatically fill out most UI fields by scanning your preferred platform’s API documentation.

- Vector Database Support: Airbyte supports prominent vector databases, including Pinecone, Milvus, and Weaviate. You can automatically apply RAG techniques such as chunking, embedding, and indexing to raw data, turning it into vector embeddings that can be stored in the vector database of your choice.

- Enterprise-Level Solution: Airbyte offers an Enterprise Edition, enabling you to handle large-scale data with features like role-based access control, multitenancy, PII masking, and enterprise support with SLAs.

- Change Data Capture (CDC): CDC allows you to automatically identify incremental changes made to the source data store and replicate them in the destination. This helps track data updates and maintain data consistency.

- Robust API: Airbyte offers an API for simplified integrations with numerous customer-facing applications.

- Flexible Plans: Airbyte offers four plans to match different data replication and hosting needs: Open Source, Cloud, Team, and Enterprise. The Open Source and Enterprise editions are self-hosted, while the Cloud and Team editions are cloud-hosted.
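To make the Change Data Capture idea concrete, here is a minimal, tool-agnostic sketch of replaying a stream of change events into a destination keyed by primary key. The event shape (`op`, `key`, `row`) and the function name `apply_changes` are hypothetical assumptions for illustration; this is not Airbyte's internal format. Production CDC usually reads these events from the database's change log (for example, PostgreSQL logical replication or the MySQL binlog).

```python
from typing import Any, Dict, List

# Hypothetical change-event shape: {"op": "insert" | "update" | "delete", "key": ..., "row": {...}}
ChangeEvent = Dict[str, Any]


def apply_changes(
    destination: Dict[Any, Dict[str, Any]], events: List[ChangeEvent]
) -> Dict[Any, Dict[str, Any]]:
    """Replay change events, in order, against a destination keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Upsert: inserts and updates both overwrite the row stored for this key.
            destination[key] = dict(event["row"])
        elif op == "delete":
            # Remove the row if present; replaying the same delete twice is harmless.
            destination.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return destination


if __name__ == "__main__":
    dest = {1: {"id": 1, "name": "Ada"}}
    events = [
        {"op": "update", "key": 1, "row": {"id": 1, "name": "Ada Lovelace"}},
        {"op": "insert", "key": 2, "row": {"id": 2, "name": "Grace"}},
        {"op": "delete", "key": 1},
    ]
    print(apply_changes(dest, events))  # {2: {'id': 2, 'name': 'Grace'}}
```

Applying events strictly in order matters: swapping the update and the delete above would leave a different final state.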

2. Talend

Talend Data Fabric unifies data integration, data quality, and data governance in a single, low-code platform that works with a wide variety of data sources and architectures. With Talend, you can save engineering time by extracting data from 140+ popular sources into your warehouse or database in minutes.

Some of the key features of Talend are:

- Addresses end-to-end data management requirements across the organization, from integration to delivery.

- Offers versatile deployment options, including on-premises, cloud, multi-cloud, and hybrid-cloud configurations.

- Prioritizes security and compliance to deliver consistent, predictable results.

- Centralizes business data in your cloud data warehouse so it is fresh and analysis-ready.

- Keeps pipelines built with Stitch, Talend's cloud data pipeline service, updating automatically and continuously, so you can focus on insights instead of IT maintenance.

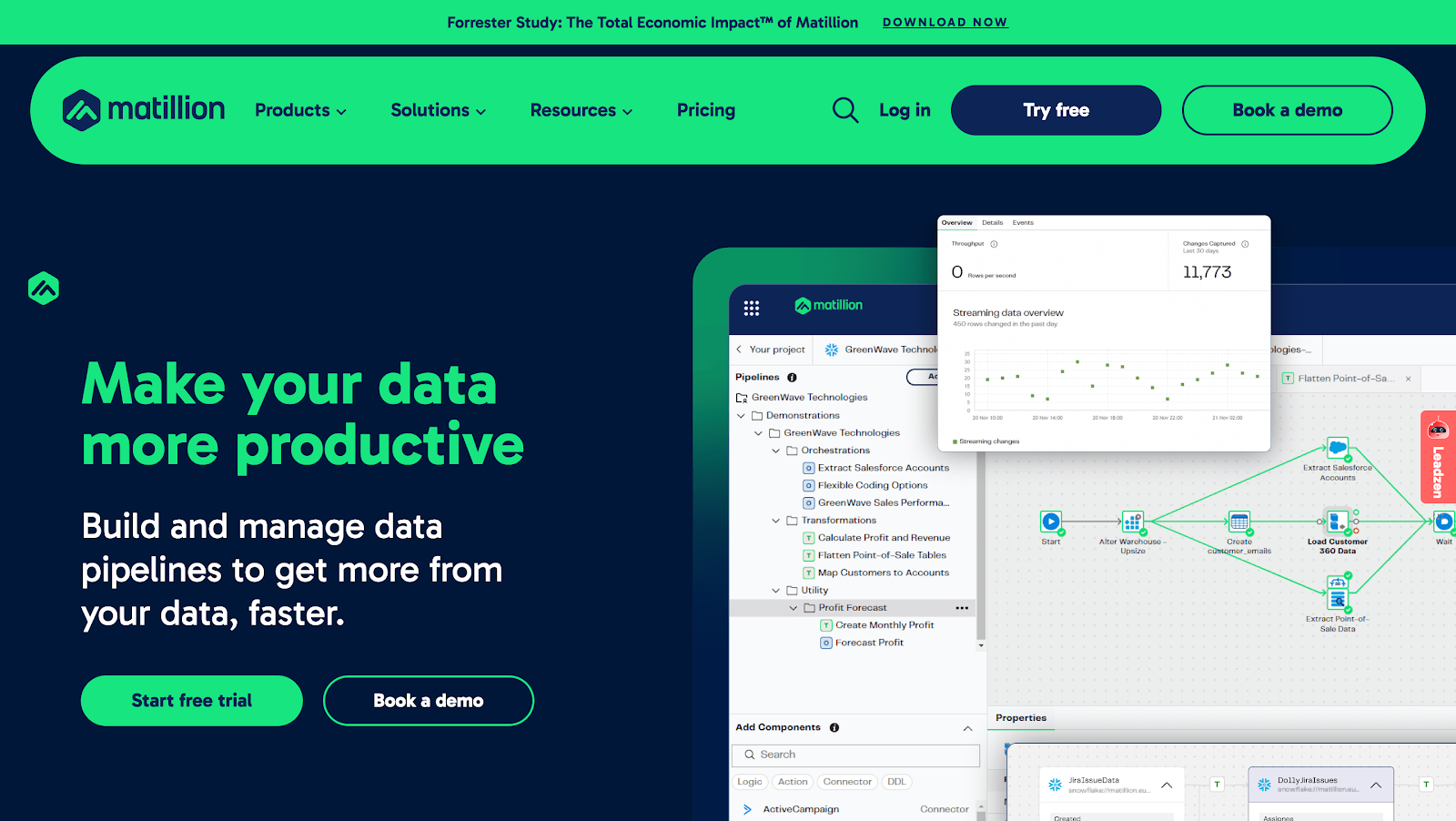

3. Matillion

Matillion’s cloud-based ETL software integrates with a wide range of data sources and ingests data into leading cloud data platforms, where analytics and business intelligence tools can use it. Beyond moving data quickly, it also supports transforming data and orchestrating data pipelines. Matillion is a popular data extraction platform for data teams because it serves both coders and non-coders.

Some of the key features of Matillion are:

- Establish connections to virtually any data source with a broad array of pre-built connectors.

- Construct advanced data pipelines or workflows using an intuitive low-code/no-code GUI.

- Develop your own connectors effortlessly in minutes or access customer-created ones from the Matillion community.

- Shorten the time to see results with quicker deployment.

4. Integrate.io

Integrate.io offers a comprehensive set of tools that enable businesses to consolidate all their data for a unified source of insights. What sets this tool apart is its exceptional user-friendliness. Even non-technical users can easily build data pipelines with a drag-and-drop editor and many built-in connectors. Businesses can also use Integrate.io to extract data from in-house tools, utilizing its robust expression language, advanced API, and webhooks.

Some of the key features of Integrate.io are:

- Low-code data transformation for fast integration with warehouses or databases.

- Reverse ETL to push warehouse data back into in-house tools, giving marketing and sales teams better insight into the customer journey.

- Observability features that let you set up alerts so you learn about data issues immediately.

- More than 220 low-code transformation options for your data.

5. Hevo Data

Hevo Data is a no-code, bi-directional data pipeline platform designed for modern ETL, ELT, and Reverse ETL requirements. Built for data teams, it automates organization-wide data flows, saving significant engineering time each week and enabling faster reporting, analytics, and decision-making.

Some of the key features of Hevo are:

- Hevo automatically synchronizes data from over 150 sources, including SQL, NoSQL, and SaaS applications.

- Hevo streamlines data operations by automatically detecting and mapping data schemas to the destination. This eliminates the tedious task of schema management.

- Hevo’s simple, interactive UI keeps the learning curve gentle and makes navigation and day-to-day operations straightforward.

- Hevo uses bandwidth efficiently by transferring only modified data in real time.

6. Stitch

Stitch is a fully managed, lightweight ETL tool focused on extracting data from over 130 sources. Although it lacks some advanced transformation features, it excels in simplicity and accessibility, making it a good fit for small and medium-sized businesses. Its security measures, including SOC 2 and HIPAA compliance, help protect data throughout the extraction process.

Key Features:

- ETL functionality for data extraction.

- Offers 130+ pre-built integrations.

- Provides enterprise-grade security measures.

7. Fivetran

Fivetran is an ELT platform offering over 300 built-in connectors for rapid data extraction from diverse sources. It supports real-time data replication and lets you write custom cloud functions for advanced extraction needs. Because it follows the ELT pattern, data is transformed after loading, while its automation streamlines the extraction itself.

Key Features:

- ELT functionality with 300+ built-in connectors.

- Automated schema drift handling and customization.
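Schema drift handling boils down to noticing when source records carry fields the destination table does not have yet, and adding them. The sketch below uses Python's built-in `sqlite3` as a stand-in destination. The function name `sync_schema` and the choice to create every new column as `TEXT` are simplifying assumptions for illustration; this is not how Fivetran implements the feature.

```python
import sqlite3
from typing import Any, Dict, Iterable, List


def sync_schema(conn: sqlite3.Connection, table: str, records: Iterable[Dict[str, Any]]) -> List[str]:
    """Add columns present in `records` but missing from `table`; return the names added."""
    # PRAGMA table_info yields one row per column; index 1 holds the column name.
    existing = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    added: List[str] = []
    for record in records:
        for column in record:
            if column not in existing:
                # Identifiers are interpolated directly, so they must come from a trusted source.
                # Simplification: every new column is created as TEXT.
                conn.execute(f'ALTER TABLE {table} ADD COLUMN "{column}" TEXT')
                existing.add(column)
                added.append(column)
    return added


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER, name TEXT)")
    print(sync_schema(conn, "users", [{"id": 1, "name": "Ada", "email": "ada@example.com"}]))  # ['email']
```

A real tool would also infer column types and handle renamed or removed fields, which this sketch deliberately ignores.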

8. ScraperAPI

ScraperAPI is a specialized web scraping platform designed to simplify large-scale web data extraction. Acting as a robust proxy API, it manages IP rotation, CAPTCHA solving, and JavaScript rendering to bypass anti-scraping measures. Its ability to deliver structured data in JSON format makes it a reliable solution for businesses and developers that need to gather public web data without maintaining complex infrastructure.

Key Features:

- Automatic proxy rotation and IP management for higher extraction success rates.

- Built-in CAPTCHA solving and retries for uninterrupted data collection.

- Geo-targeting options for localized data extraction.

- JavaScript rendering to capture dynamic content from modern websites.

- Auto-parsing of HTML into JSON for easier integration with analytics tools.

- Scalable infrastructure designed to handle millions of requests.
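Managed services like ScraperAPI handle retries for you, but the underlying idea is easy to sketch. Below is a generic retry-with-exponential-backoff wrapper. `fetch` is a hypothetical stand-in for any HTTP request, the delay values are illustrative assumptions, and none of this is ScraperAPI's client code.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    fetch: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `fetch` until it succeeds, waiting exponentially longer after each failure."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fetch()
        except Exception:
            # A real scraper would retry only transient errors (timeouts, HTTP 429/5xx).
            if attempt == max_attempts - 1:
                raise  # Out of attempts: re-raise the last error.
            delay = base_delay * (2 ** attempt)  # 0.5s, 1s, 2s, ...
            sleep(delay + random.uniform(0, delay * 0.1))  # add up to 10% jitter
    raise AssertionError("unreachable")


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_fetch() -> str:
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("temporary failure")
        return "<html>ok</html>"

    # Passing a no-op sleep keeps the demo instant.
    print(retry_with_backoff(flaky_fetch, sleep=lambda s: None))  # <html>ok</html>
```

The `sleep` parameter is injectable so the wrapper can be tested without real waits; the jitter spreads out retries from many concurrent workers.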

9. Informatica

Informatica caters mainly to large enterprises with its robust ETL/ELT capabilities and thousands of connectors. It provides comprehensive tools for data integration and transformation, supported by a user-friendly interface and extensive documentation.

Key Features:

- Offers both ETL and ELT functionality.

- Thousands of connectors for extensive data integration.

- Enterprise-grade extraction capabilities.

10. SAS Data Management

SAS Data Management is a comprehensive solution for managing and integrating data from various sources. It allows for seamless access, extraction, transformation, and loading of data into unified environments. Its key strength lies in reusable data management rules, ensuring consistent data quality and governance.

Key Features:

- Provides ETL/ELT capabilities.

- Offers out-of-the-box SQL-based transformations.

- Integrates with a wide range of data sources, including cloud and legacy systems.

Types of Data Extraction

Data extraction uses automation to replace manual data entry, and it falls into three main types:

Full Extraction

When transferring data from one system to another, a full extraction pulls all available data from the source and sends it to the destination. This method is ideal for populating a system for the first time, because it produces a comprehensive, one-time copy of all necessary data. Repeating it on large datasets adds overhead, but it is simple to implement and gives the destination a complete snapshot of the source, which supports accurate analysis of critical data.
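A minimal sketch of full extraction, using two in-memory `sqlite3` databases as stand-ins for a source and a destination. The `customers` table and its columns are hypothetical; the point is that every run wipes the destination table and reloads all rows.

```python
import sqlite3


def full_extract(source: sqlite3.Connection, destination: sqlite3.Connection, table: str) -> int:
    """Copy every row of `table` from source to destination, replacing existing rows."""
    rows = source.execute(f"SELECT id, name, updated_at FROM {table}").fetchall()
    # Full extraction: clear the destination table, then reload it from scratch.
    destination.execute(f"DELETE FROM {table}")
    destination.executemany(f"INSERT INTO {table} (id, name, updated_at) VALUES (?, ?, ?)", rows)
    destination.commit()
    return len(rows)


if __name__ == "__main__":
    schema = "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
    src, dst = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
    src.execute(schema)
    dst.execute(schema)
    src.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [(1, "Ada", "2026-01-01"), (2, "Grace", "2026-01-02")],
    )
    print(full_extract(src, dst, "customers"))  # 2
```

Because each run replaces the table, running it twice leaves the same result, which is what makes full extraction simple to reason about.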

Incremental Stream Extraction

Incremental stream extraction captures only the data that has changed since the last extraction. Because unchanged records are not transferred again on every run, this approach saves time, bandwidth, computing resources, and storage space. The advantage is that systems stay continuously up to date with minimal effort, which keeps shared data accurate.
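One common way to capture "only what changed" is a cursor (watermark) column such as `updated_at`: each run asks for rows newer than the last value it saw. The sketch below is this polling variant, not log-based CDC, and the `customers` table and ISO-8601 timestamp strings are assumptions for illustration.

```python
import sqlite3
from typing import List, Tuple


def incremental_extract(source: sqlite3.Connection, last_watermark: str) -> Tuple[List[tuple], str]:
    """Return rows changed after `last_watermark` plus the watermark to store for the next run."""
    rows = source.execute(
        "SELECT id, name, updated_at FROM customers WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # The new watermark is the latest updated_at seen; keep the old one if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


if __name__ == "__main__":
    src = sqlite3.connect(":memory:")
    src.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
    src.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [(1, "Ada", "2026-01-01T10:00:00"), (2, "Grace", "2026-01-02T09:30:00")],
    )
    rows, mark = incremental_extract(src, "2026-01-01T12:00:00")
    print(rows, mark)  # [(2, 'Grace', '2026-01-02T09:30:00')] 2026-01-02T09:30:00
```

ISO-8601 strings compare correctly as text, which is why `>` works here. The strict comparison can miss a row committed later with exactly the watermark timestamp, so real pipelines often re-read a small overlap window and deduplicate.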

Incremental Batch Extraction

Incremental batch extraction is employed when dealing with large datasets that cannot be processed in one go. This method entails breaking the datasets into smaller chunks and extracting data from them separately, allowing greater efficiency in handling extensive information. Instead of waiting for a single large file to complete processing, multiple smaller files can be processed quickly. This approach minimizes resource strain and facilitates easier batch management.
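Breaking a large dataset into smaller chunks can be sketched with keyset pagination: each query resumes after the last primary key seen, so memory use stays bounded regardless of table size. The `customers` table, the positive integer `id` column, and the default batch size are illustrative assumptions.

```python
import sqlite3
from typing import Iterator, List


def extract_in_batches(source: sqlite3.Connection, batch_size: int = 1000) -> Iterator[List[tuple]]:
    """Yield rows from `customers` in id order, at most `batch_size` rows at a time."""
    last_id = 0  # Assumes ids are positive integers.
    while True:
        # Keyset pagination: filter on id > last_id instead of using OFFSET,
        # so later batches are as cheap to fetch as the first one.
        batch = source.execute(
            "SELECT id, name FROM customers WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not batch:
            return
        yield batch
        last_id = batch[-1][0]


if __name__ == "__main__":
    src = sqlite3.connect(":memory:")
    src.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    src.executemany("INSERT INTO customers VALUES (?, ?)", [(i, f"user{i}") for i in range(1, 6)])
    print([len(b) for b in extract_in_batches(src, batch_size=2)])  # [2, 2, 1]
```

Since it is a generator, each batch can be loaded or written out before the next one is fetched.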

Why Do You Need Data Extraction Tools?

Efficient Data Retrieval

Data extraction tools streamline data retrieval from various sources, automating repetitive tasks and conserving time and resources.

Improved Data Accuracy

These tools improve accuracy by minimizing manual errors and standardizing extraction processes to reduce discrepancies.

Real-time Insights

Data extraction tools facilitate real-time data retrieval, empowering timely decision-making. Businesses gain access to current information for enhanced analysis and planning.

Data Integration

These tools seamlessly integrate data from diverse sources, ensuring consistency across systems and databases.

Scalability

Data extraction tools scale to large data volumes and adapt to evolving business needs.

Web Scraping

Beyond databases and other digital platforms, data extraction tools also streamline collecting data from websites. This use case, web scraping, lets users extract relevant data from web pages for analysis, research, or monitoring.
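At its core, web scraping means parsing HTML and pulling out the fields you care about. This minimal sketch uses only Python's standard-library `html.parser` to collect link targets and link text; the class name `LinkExtractor` is hypothetical, and fetching the page is omitted so the example stays self-contained.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class LinkExtractor(HTMLParser):
    """Collect (href, link text) pairs from <a> tags in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[Tuple[str, str]] = []
        self._href: Optional[str] = None  # href of the <a> tag currently open, if any
        self._text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs is a list of (name, value) pairs; anchors without href are skipped.
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


if __name__ == "__main__":
    html = '<ul><li><a href="/pricing">Pricing</a></li><li><a href="/docs"> Docs </a></li></ul>'
    parser = LinkExtractor()
    parser.feed(html)
    print(parser.links)  # [('/pricing', 'Pricing'), ('/docs', 'Docs')]
```

Dedicated scraping tools add what this sketch leaves out: fetching, JavaScript rendering, proxy rotation, and converting results into structured formats such as JSON.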

Conclusion

Without the right extraction tools, data collection can fall short of the quality that analysis requires. The 10 tools above can be game changers for your business. They help tackle data overload and extract material from various sources, simplifying the complex task of extracting valuable information.

In addition to simplifying data extraction, these top tools offer customizable features tailored to specific business needs. These tools can streamline the extraction process, ensuring that the data gathered empowers businesses to make informed decisions swiftly and efficiently.

Data Extraction FAQs

What are the benefits of using data extraction tools?

Data extraction tools streamline the process of retrieving data from various sources, saving time and effort. They improve data accuracy, facilitate real-time insights, and enhance decision-making capabilities.

How do I choose the right data extraction tool for my business needs?

Consider factors like data source compatibility, scalability, ease of use, security features, and pricing when selecting a data extraction tool. Evaluate trial versions or demos to ensure they meet your specific business requirements.

Can data extraction tools handle large volumes of data?

Top data extraction tools are equipped to handle large volumes of data efficiently. They often offer features like parallel processing, data partitioning, and optimized algorithms to ensure fast and reliable extraction.
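Partitioning and parallel processing often go together: split the key range into partitions, then extract each partition concurrently. The sketch below uses `concurrent.futures.ThreadPoolExecutor`, which suits I/O-bound extraction where workers mostly wait on a network or database. The function names, the integer id range, and the in-memory list standing in for a real source are all hypothetical assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

Partition = Tuple[int, int]  # half-open id range [start, end)


def make_partitions(min_id: int, max_id: int, num_partitions: int) -> List[Partition]:
    """Split the inclusive id range [min_id, max_id] into contiguous half-open ranges."""
    total = max_id - min_id + 1  # Assumes max_id >= min_id and num_partitions >= 1.
    size = -(-total // num_partitions)  # ceiling division
    return [(start, min(start + size, max_id + 1)) for start in range(min_id, max_id + 1, size)]


def parallel_extract(
    extract_range: Callable[[Partition], List[dict]], partitions: List[Partition], workers: int = 4
) -> List[dict]:
    """Extract each partition concurrently and concatenate results in partition order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, so output follows `partitions`.
        results = pool.map(extract_range, partitions)
        return [row for chunk in results for row in chunk]


if __name__ == "__main__":
    # Hypothetical source: a list of rows standing in for a database table.
    source = [{"id": i, "value": i * i} for i in range(1, 11)]

    def extract_range(part: Partition) -> List[dict]:
        start, end = part
        return [row for row in source if start <= row["id"] < end]

    parts = make_partitions(1, 10, 3)
    print(parts)  # [(1, 5), (5, 9), (9, 11)]
    print(len(parallel_extract(extract_range, parts)))  # 10
```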

Is it possible to schedule automated data extraction processes?

Many data extraction tools support automated scheduling, allowing users to set up regular extraction jobs without manual intervention. This feature enhances productivity and ensures data is always up-to-date.

How secure is the data extraction process, especially when dealing with sensitive information?

Data extraction tools prioritize data security, offering encryption, access controls, and compliance with regulations like GDPR. Choose tools with robust security measures to safeguard sensitive information throughout the extraction process.
