10 Best Data Movement Tools in 2026


✨ AI Generated Summary

Data movement is essential for transferring and integrating data across systems to enable unified insights, operational efficiency, and informed decision-making. Key strategies include batch processing, real-time streaming, incremental, and full data transfers, supported by various tools like Airbyte, Fivetran, Talend, and Apache NiFi.

- Data movement technologies: ETL, ELT, CDC, replication, and streaming address different needs for data processing and synchronization.

- Choosing the right tool depends on data volume, integration needs, transformation requirements, scalability, budget, security, and ease of use.

- Common challenges include data compatibility, loss, lack of expertise, standardization, and security compliance.

- Airbyte stands out for its open-source flexibility, extensive connectors (600+), CDC support, and schema management, enabling efficient, customizable data pipelines.

Organizations accumulate information from multiple sources, such as sensor devices, web analytics tools, and social media platforms. This data underpins key initiatives such as gathering customer insights for marketing campaigns, financial analysis, and improving operational efficiency. To perform such advanced analysis, departments across the organization must work together, with data moving seamlessly between their systems.

Effective data workflows ensure all your employees can access the latest information and get a unified view of all operations. This helps eliminate discrepancies and inconsistencies during the decision-making process. This article will delve deeper into practical examples and tools available to simplify and optimize data movement within your organization.

What Is Data Movement?

Data movement refers to transferring information between different locations within your system or organization. This can involve moving data from on-premises storage to cloud environments, between databases, or from data lakes to analytics platforms.

Data movement is crucial during data warehousing, system upgrades, data synchronization, and integration. It allows you to populate warehouses, migrate data to new environments during upgrades, sync the latest information between systems, and consolidate data for a consistent view. Various tools and techniques, such as managed file transfer services, cloud-based data integration platforms, and pipelines, allow for secure and efficient data movement.

Top 10 Data Movement Tools

Many data movement tools have entered the market, offering various functionalities to help you simplify and automate the process. You should choose the one that fits your business needs.

1. Airbyte

Airbyte is a data integration and replication platform that helps you quickly build and manage data pipelines. Its no-code, user-friendly interface allows you to set up data movement without extensive technical expertise.

Airbyte supports an ELT approach that reduces latency when extracting and loading high-volume, high-velocity data. Here are some features of Airbyte for you to explore:

- Extensive Library of Connectors: Airbyte automates data movement by offering you a library of over 600 pre-built connectors. These connectors help you connect multiple data sources to a destination system. Using Airbyte’s low-code Connector Development Kit (CDK), you can also build custom connectors within minutes.

- Change Data Capture: You can leverage Airbyte’s Change Data Capture (CDC) functionality to capture the incremental changes occurring at the source system. This feature helps you efficiently utilize resources while dealing with constantly evolving large datasets.

- Schema Change Management: The schema change management feature allows you to configure settings to detect and propagate schema changes at the source. Based on these settings, Airbyte automatically syncs or ignores those changes.
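To make the idea behind schema change management concrete, here is a minimal Python sketch that compares a previously recorded source schema with the current one and then either ignores or propagates the differences. It is not Airbyte's implementation: the column-name-to-type dictionaries, the function names, and the policy names `ignore`, `additive_only`, and `propagate_all` are hypothetical.

```python
from typing import Dict, List

Schema = Dict[str, str]  # hypothetical representation: column name -> column type


def diff_schemas(old: Schema, new: Schema) -> Dict[str, List]:
    """Classify columns as added, removed, or retyped between two schema snapshots."""
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    retyped = sorted(
        (col, old[col], new[col])
        for col in set(old) & set(new)
        if old[col] != new[col]
    )
    return {"added": added, "removed": removed, "retyped": retyped}


def apply_policy(dest: Schema, new: Schema, changes: Dict[str, List], policy: str) -> Schema:
    """Return the destination schema after applying the detected changes under a policy."""
    if policy == "ignore":
        return dict(dest)  # destination keeps its current schema
    if policy not in ("additive_only", "propagate_all"):
        raise ValueError(f"unknown policy: {policy}")
    updated = dict(dest)
    for col in changes["added"]:
        updated[col] = new[col]  # new columns are non-breaking, so always add them
    if policy == "propagate_all":
        for col in changes["removed"]:
            updated.pop(col, None)  # drop columns that no longer exist at the source
        for col, _old_type, new_type in changes["retyped"]:
            updated[col] = new_type  # adopt the source's new column type
    return updated
```

Separating detection from the policy mirrors the idea in the text: the same set of detected changes can be synced or ignored depending on how you configure the connection.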

2. Skyvia

Skyvia is a versatile data movement tool that can help you streamline your workflows in various scenarios, including data warehousing and CRM or ERP integration. It is a no-code platform that allows you to handle multiple data integration needs, including ETL, ELT, reverse ETL, and one-way and bidirectional data sync.

3. Matillion

Matillion is a cloud-based data integration and transformation tool that can help your organization with data movement processes. It supports various data integration requirements, such as ETL, ELT, reverse ETL, and data replication, and is best suited for cloud data warehousing and analytics.

4. Talend

Talend is a robust data integration and transformation tool that offers both open-source and enterprise solutions. It supports a wide range of integration patterns including ETL, ELT, and data governance workflows, making it ideal for companies looking to scale their data operations securely.

5. Fivetran

Fivetran is a fully managed data pipeline tool focused on automated ELT. It is widely known for its ease of use, quick setup, and extensive connector library, making it a top choice for analytics teams looking to centralize data without heavy engineering involvement.

6. Hevo Data

Hevo Data is a no-code ELT platform that helps companies quickly integrate data from multiple sources into data warehouses. It supports real-time data pipelines and automatic schema mapping, reducing the manual effort typically involved in data integration.

7. Stitch

Stitch is a simple, developer-focused ELT tool designed to move data quickly from multiple sources to data warehouses. It offers a straightforward setup and transparent pricing model, which makes it attractive for small and medium-sized teams.

8. Informatica

Informatica is a comprehensive enterprise-grade data integration platform that supports ETL, ELT, data quality, and governance. It is best known for its stability and scalability, especially for large-scale enterprises with complex data management requirements.

9. Apache NiFi

Apache NiFi is an open-source data flow automation tool that enables the design of complex workflows through a visual user interface. It supports real-time streaming and batch data movement, offering a flexible solution for routing and transforming data.

10. IBM DataStage

IBM DataStage is a powerful ETL tool that helps organizations build and manage large-scale data integration solutions. It’s designed for high-performance processing of structured and unstructured data across various sources.

What Is the Purpose of Data Movement?

Data movement streamlines most of your organization’s processes and allows you to implement an effective data management strategy. Below are several reasons why you should have a robust data movement infrastructure.


1. Connecting Data from Disparate Sources

Data often resides in various databases, applications, and cloud storage locations. Data movement helps break down silos and consolidate this information, offering deeper insights into customer behavior, operational trends, and market dynamics.

2. Moving Data for Processing and Insights

With data movement, you can facilitate the transfer of raw information to dedicated systems for further processing, such as sales forecasting or marketing optimization.

3. Handling Growing Data Volumes

You can strategically distribute data based on access frequency by moving infrequently accessed data to cost-effective storage and keeping critical data readily accessible.

4. Keeping Data Consistent across Systems

Techniques like data replication, ELT/ETL processes, and synchronization help ensure that your systems are working with the latest, most accurate data.

Data Movement Technologies

With the purpose of data movement established, this section delves into the technologies that make it all happen. These tools ensure efficient, secure, and reliable transfer of information across your data infrastructure.


ETL

ETL, which stands for extract, transform, load, is a data movement technology better suited for structured data with a well-defined schema. It allows you to extract data from various sources, cleanse and transform it into a usable format, and load it into your preferred destination.

ELT

ELT (extract, load, transform) loads raw data directly into the target system, such as a data warehouse or data lake, and performs the transformations within that environment. Because transformation is deferred until after loading, data becomes available in the destination sooner than with ETL.
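The difference between ETL and ELT is mostly about where the transformation runs. The following minimal sketch uses Python's built-in `sqlite3` module as a stand-in destination; the sample orders, table names, and cleanup rules (trimming and lowercasing emails, converting cents to dollars) are illustrative assumptions. Both functions end with the same cleaned table, but ETL transforms in the pipeline while ELT keeps the raw table and transforms it with SQL inside the destination.

```python
import sqlite3

# Hypothetical rows extracted from a source system: (order_id, email, amount_cents)
RAW_ORDERS = [
    (1, " Alice@Example.com", 1250),
    (2, "bob@example.com ", 800),
]


def etl(conn: sqlite3.Connection) -> None:
    """ETL: clean the rows in the pipeline, then load only the transformed data."""
    cleaned = [
        (order_id, email.strip().lower(), cents / 100)
        for order_id, email, cents in RAW_ORDERS
    ]
    conn.execute("CREATE TABLE orders_etl (order_id INTEGER, email TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", cleaned)


def elt(conn: sqlite3.Connection) -> None:
    """ELT: load the raw rows as-is, then transform them with SQL in the destination."""
    conn.execute("CREATE TABLE orders_raw (order_id INTEGER, email TEXT, amount_cents INTEGER)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", RAW_ORDERS)
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT order_id, LOWER(TRIM(email)) AS email, amount_cents / 100.0 AS amount
        FROM orders_raw
        """
    )
```

Calling both functions on the same `sqlite3.connect(":memory:")` connection yields identical `orders_etl` and `orders_elt` tables; only the ELT variant also keeps `orders_raw`, which lets you re-run or change transformations later without extracting again.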

CDC

CDC, or Change Data Capture, is a technique used in data integration to identify and track changes made to data in a source system. It captures only the changes made to data since the last transfer. There are two main approaches: log-based CDC, which reads changes from the database's transaction log, and trigger-based CDC, which uses database triggers to record each change as it happens.
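On the consuming side, CDC boils down to replaying an ordered list of change events against a copy of the data. The sketch below shows that step under simplifying assumptions: the `ChangeEvent` fields (`lsn`, `op`, `key`, `row`) and the in-memory dictionary acting as the replica are hypothetical, whereas real log-based tools read these events from the database's transaction log (for example, PostgreSQL's write-ahead log).

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class ChangeEvent:
    lsn: int                      # position in the source's change log (hypothetical field)
    op: str                       # "insert", "update", or "delete"
    key: int                      # primary key of the affected row
    row: Optional[dict] = None    # new row image; None for deletes


def apply_changes(replica: Dict[int, dict], events: Iterable[ChangeEvent], last_lsn: int) -> int:
    """Apply events newer than last_lsn to the replica and return the new checkpoint."""
    for event in sorted(events, key=lambda e: e.lsn):
        if event.lsn <= last_lsn:
            continue  # already applied during a previous sync
        if event.op in ("insert", "update"):
            replica[event.key] = dict(event.row)
        elif event.op == "delete":
            replica.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")
        last_lsn = event.lsn  # advance the checkpoint only after a successful apply
    return last_lsn
```

Storing the returned checkpoint and passing it to the next run is what limits each sync to "only the changes since the last transfer"; replaying the same events again is harmless because they are skipped.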

Replication

Data replication refers to creating and maintaining multiple copies of the same data in different locations. A replicated copy can keep applications running and minimize downtime if your primary source system becomes unavailable due to hardware failure or maintenance.

Streaming

Data streaming refers to the continuous flow of data generated in real-time by various sources. This is ideal for high-velocity data like sensor feeds, where you need to identify trends, patterns, or anomalies in the data as it arrives.

Data Movement Vs. Data Migration Vs. Data Synchronization

Data movement, data migration, and data synchronization are all essential processes for managing data within your organization. While they all involve transferring data, their purposes and functionalities differ significantly. The table below explores the key differences between them:

Things to Consider Before Choosing a Data Movement Tool

Choosing the right tool for your business involves evaluating several key factors:

1. Volume and Velocity of Data

Evaluate whether your data is batch-based or real-time. Streaming tools are essential for high-velocity environments, while batch tools work for periodic data movement.

2. Integration Needs

Check for out-of-the-box connectors to your existing systems (databases, SaaS apps, file systems). Tools like Airbyte and Fivetran have broad connector ecosystems.

3. Transformation Requirements

Some tools are better suited to ETL, others to ELT. Choose a tool based on whether you prefer to transform data before or after loading it.

4. Scalability & Performance

Ensure the tool can scale with your growing data. Cloud-native tools typically offer better horizontal scaling.

5. Budget Constraints

Understand pricing models: subscription, usage-based, or custom enterprise pricing. For example, Airbyte offers a free open-source edition, while Fivetran uses usage-based pricing calculated on monthly active rows (MAR).

6. Compliance & Security

Your tool should comply with data protection laws (e.g., GDPR, HIPAA) and offer features like data encryption, access control, and audit logs.

7. Ease of Use

Look for tools that align with your team’s expertise. No-code tools are good for business users; code-based tools work better for data engineers.

Data Movement Strategies

Effective data movement strategies are essential for effortlessly transferring data within your organization. Here are some key considerations to optimize your data movement approach.

Batch Processing

Batch processing is a data movement strategy in which you accumulate data and then process and transfer it in large chunks at scheduled intervals. Handling data in bulk is efficient and helps minimize network strain.

Batch processing is advantageous for tasks requiring significant computational resources, such as end-of-day financial transactions and bulk data imports. By executing operations in batches, you can optimize performance and resource utilization, reducing the load on primary systems during peak hours. Apache Spark and AWS Batch are tools you can leverage to execute batch processing.
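The core of a batch job is grouping records into chunks and writing each chunk with a single bulk operation. The following minimal Python sketch shows that pattern; `load_batch` is a hypothetical callback standing in for a bulk write to your destination, and in practice a scheduler (cron, AWS Batch, or similar) would trigger the job at the chosen interval.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(records: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most `size` records."""
    if size < 1:
        raise ValueError("size must be >= 1")
    it = iter(records)
    while batch := list(islice(it, size)):
        yield batch


def run_batch_job(records: Iterable[T], size: int, load_batch: Callable[[List[T]], None]) -> int:
    """Send records to `load_batch` in chunks and return the number of batches written."""
    count = 0
    for batch in batched(records, size):
        load_batch(batch)  # one bulk write per chunk instead of one write per record
        count += 1
    return count
```

For example, `run_batch_job(range(10), 4, loaded.append)` writes three batches of sizes 4, 4, and 2. Python 3.12 ships a similar `itertools.batched`; the local helper keeps the sketch compatible with Python 3.8 and later.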

Real-Time Streaming

In contrast to batch processing, real-time streaming continuously transmits data sequentially as it is generated at the source. This method offers minimal latency and high throughput, making it ideal for real-time decision-making scenarios such as Internet banking, stock market platforms, and online gaming.

However, real-time streaming can be resource-intensive and requires robust infrastructure to handle the constant data flow. Some popular tools for real-time data streaming include Apache Kafka, Apache Flink, and Amazon Kinesis.
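Unlike a batch job, a streaming consumer handles each event the moment it arrives and keeps only a small amount of state. The minimal sketch below flags readings that deviate sharply from the rolling mean of the previous few readings. In production, the events would come from a platform such as Apache Kafka or Amazon Kinesis; here an ordinary Python iterable stands in for that stream, and the function name, window size, and deviation threshold are illustrative assumptions.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple


def detect_anomalies(
    readings: Iterable[float], window: int = 5, max_deviation: float = 10.0
) -> Iterator[Tuple[int, float]]:
    """Lazily yield (position, value) for readings far from the recent rolling mean."""
    recent: Deque[float] = deque(maxlen=window)  # bounded state: only the last `window` values
    for i, value in enumerate(readings):
        if len(recent) == window:
            mean = sum(recent) / window
            if abs(value - mean) > max_deviation:
                yield i, value  # emit immediately instead of waiting for the stream to end
        recent.append(value)
```

Because the function is a generator and its memory use is bounded by `window`, it works the same way on a finite list or an unbounded stream.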

Incremental Transfer

This data movement strategy focuses on transferring only the data that is new or modified since the last successful transfer. Incremental transfers are efficient for frequently changing, high-volume datasets because they minimize redundant transmissions and bandwidth consumption. Applying incremental transfer to database replication, backup, and synchronization tasks ensures that only updated information is written to the destination.

By transferring only the modified data, your organization can maintain up-to-date datasets with minimal overhead. Airbyte, Talend, IBM Informix, and Hevo are well-known tools for incremental data transfer.
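A common way to implement incremental transfer is a cursor column, such as an `updated_at` timestamp: each run copies only rows whose cursor is greater than the value saved by the previous run. The sketch below illustrates this with two in-memory `sqlite3` databases; the `customers` table, its columns, and the ISO 8601 text timestamps are assumptions made for the example.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical customers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
    return conn


def incremental_sync(src: sqlite3.Connection, dst: sqlite3.Connection, cursor: str) -> str:
    """Copy rows modified after `cursor` from src to dst and return the new cursor value."""
    rows = src.execute(
        "SELECT id, name, updated_at FROM customers WHERE updated_at > ? ORDER BY updated_at",
        (cursor,),
    ).fetchall()
    # Upsert by primary key so modified rows overwrite their older copies.
    dst.executemany("INSERT OR REPLACE INTO customers (id, name, updated_at) VALUES (?, ?, ?)", rows)
    dst.commit()
    return rows[-1][2] if rows else cursor  # latest cursor seen, or unchanged if nothing new
```

Two caveats of this design: rows that are deleted at the source are not detected (CDC covers that case), and rows written later with a timestamp equal to the saved cursor would be skipped, so production tools often combine the cursor with a primary key or a small overlap window.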

Full Data Transfer

Full data transfer involves transferring the entire dataset from the source to the destination system at a single point in time. You can use it for initial data migrations, replicating static datasets, and complete backups.

While full data transfer produces a complete copy of the source at the destination, it can be resource-intensive and time-consuming, especially for large datasets. It is usually used when data integrity and completeness are top priorities. Some data transfer tools that can help you are IRI NextForm, Matillion, and Stitch.
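Because a full transfer replaces the destination's contents, it is worth doing the swap in a single transaction so that a failure halfway through does not leave a half-empty table. The minimal sketch below uses `sqlite3` as both source and destination; the `products` table and its columns are hypothetical, and the row-count comparison is a simple defensive completeness check rather than a full integrity audit.

```python
import sqlite3


def full_refresh(src: sqlite3.Connection, dst: sqlite3.Connection) -> int:
    """Replace every row in dst.products with the current contents of src.products."""
    rows = src.execute("SELECT id, name, price FROM products").fetchall()
    with dst:  # commits on success, rolls back if anything inside raises
        dst.execute("DELETE FROM products")  # discard the previous copy entirely
        dst.executemany("INSERT INTO products (id, name, price) VALUES (?, ?, ?)", rows)
        loaded = dst.execute("SELECT COUNT(*) FROM products").fetchone()[0]
        if loaded != len(rows):
            raise RuntimeError(f"expected {len(rows)} rows, loaded {loaded}")
    return loaded
```

If the insert or the check fails, the rollback restores the old contents, so other connections see either the previous copy or the complete new one.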

Challenges in Data Movement

While data movement is seemingly straightforward, it can present several hurdles that can significantly impact the progress, efficiency, and success of your data initiatives.

Here are some of the most common challenges you can encounter during data movement:

- Data Compatibility: Your data can reside in various formats and structures, making it difficult to integrate and move data from source to destination.

- Data Loss or Corruption: Data errors or disruptions during transfer can lead to data loss or corruption, compromising the integrity of your data at its destination.

- Lack of Experienced Resources: Performing data movement without proper expertise can lead to errors, causing delays and impacting the overall efficiency.

- Lack of Standardization: Without standardized processes and tools for data movement, your overall data management strategy can be inconsistent and inefficient.

- Data Security and Compliance: Ensuring data security and adhering to regulations can be complex, with multiple touchpoints representing opportunities for unauthorized access or data breaches.
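One practical defense against undetected data loss or corruption is to reconcile source and destination after each transfer. The sketch below hashes every row and compares the results by primary key, reporting rows that are missing, unexpected, or altered. The function names and the `id` key are hypothetical, and rows are represented as plain dictionaries for simplicity.

```python
import hashlib
import json
from typing import Dict, Iterable, List


def fingerprint(row: dict) -> str:
    """Stable SHA-256 hash of a row; keys are sorted so column order does not matter."""
    payload = json.dumps(row, sort_keys=True, default=str).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def reconcile(source_rows: Iterable[dict], dest_rows: Iterable[dict], key: str = "id") -> Dict[str, List]:
    """Compare two row sets by primary key and classify any discrepancies."""
    src = {row[key]: fingerprint(row) for row in source_rows}
    dst = {row[key]: fingerprint(row) for row in dest_rows}
    return {
        "missing": sorted(set(src) - set(dst)),     # lost during transfer
        "unexpected": sorted(set(dst) - set(src)),  # present only at the destination
        "corrupted": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }
```

Values are compared after JSON serialization, so a type change such as `10` becoming `10.0` also counts as a mismatch; you may want to normalize types first if your destination converts them.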

How to Use Airbyte to Move Data Efficiently?

With Airbyte, you can seamlessly transfer data between various sources and destinations. Here's a step-by-step guide explaining how to move your data using Airbyte.

Step 1: Set up Your Data Source

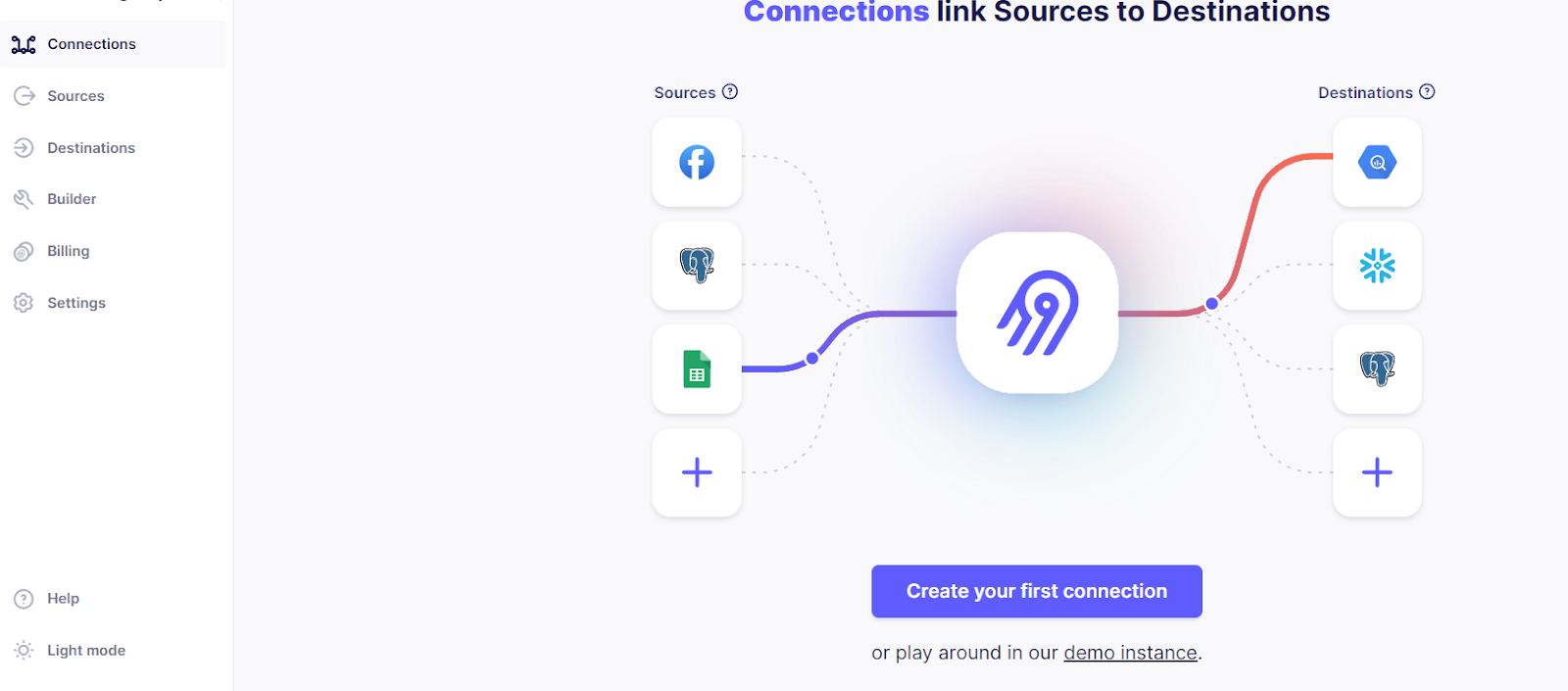

- Airbyte is available as a managed service (Airbyte Cloud) and as a self-managed deployment. Once you have set up either option, log in to your account, and you will see the screen below.

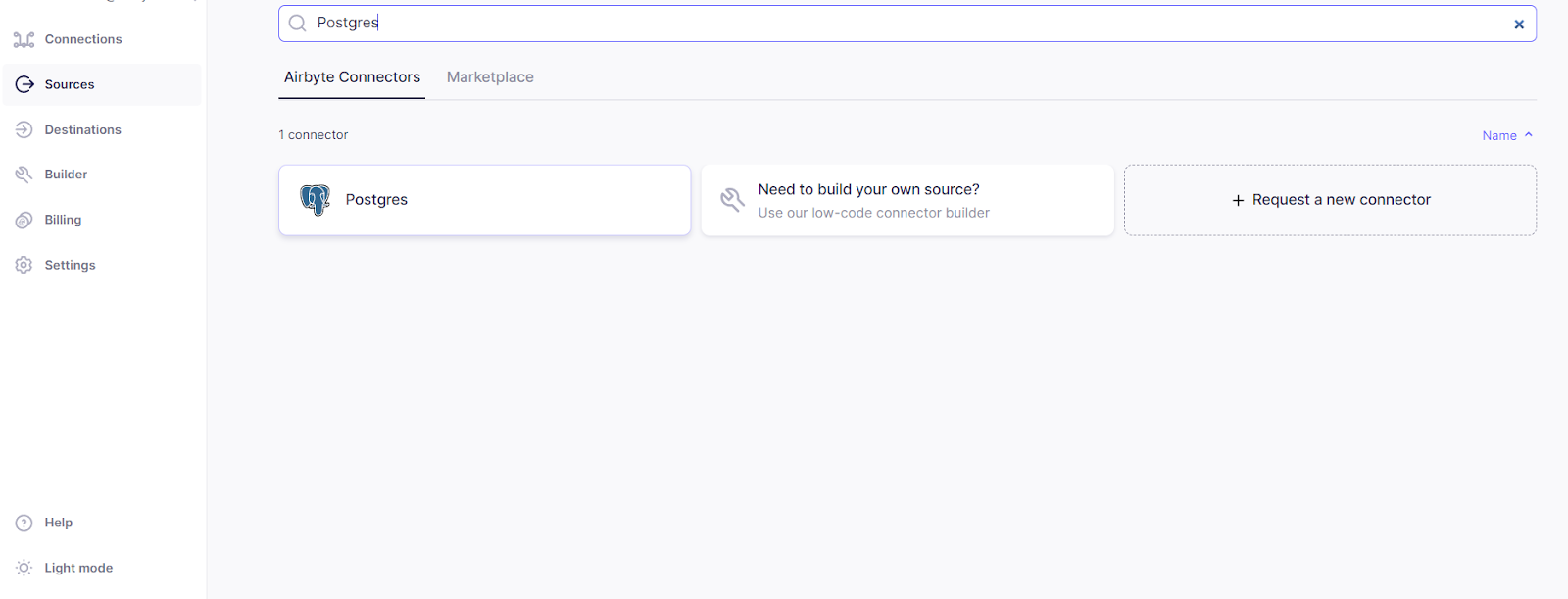

- Select the Sources tab on the left side of the screen, enter your data source (e.g., Postgres) in the Search field, and select the corresponding connector as shown below.

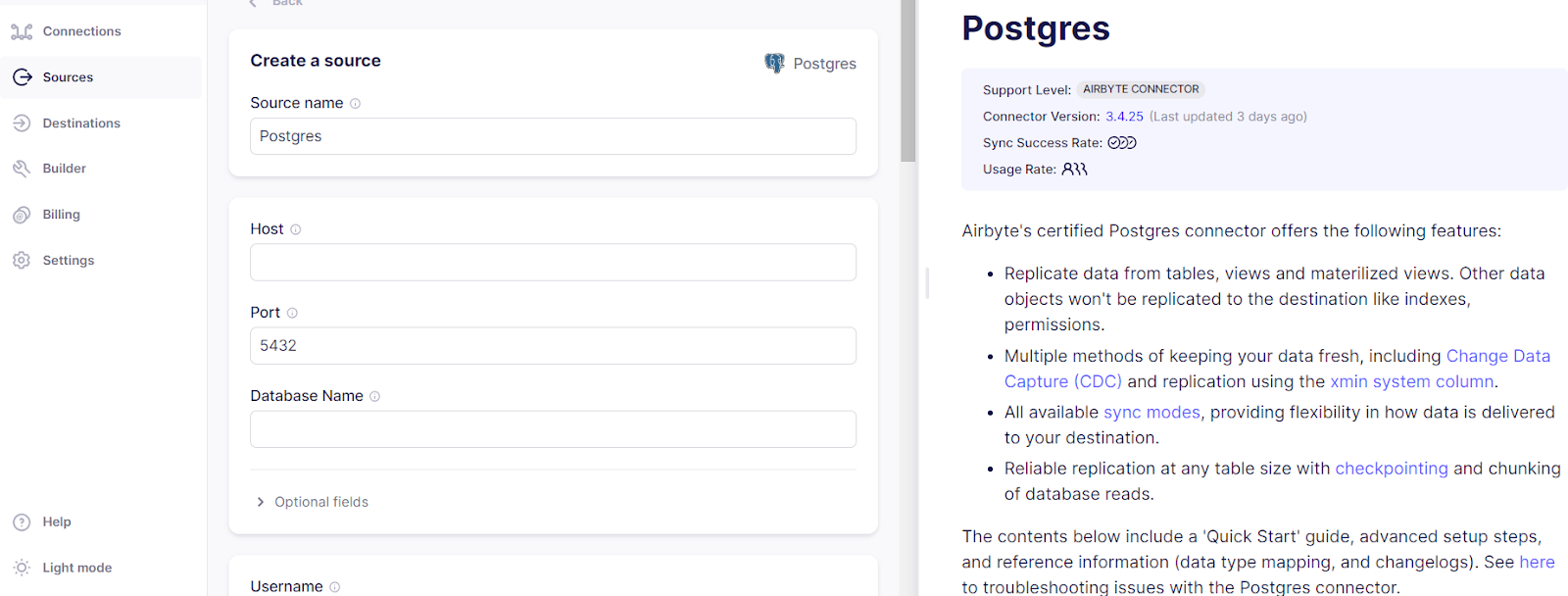

- Enter all the specific configuration details required for your chosen data source, including credentials such as Host, Database Name, Username, and Password.

- After filling in all the details, scroll down and click the Set up Source button. Airbyte will run a quick check to verify the connection.

Step 2: Set up Your Destination

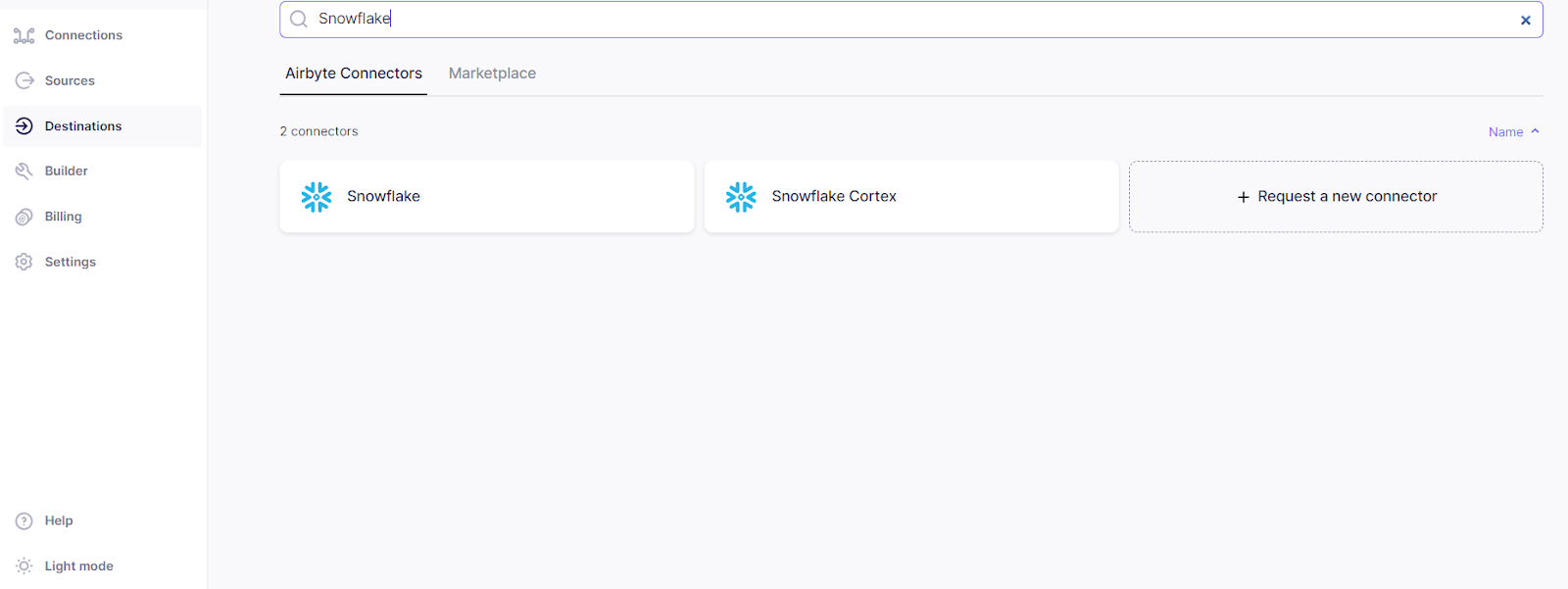

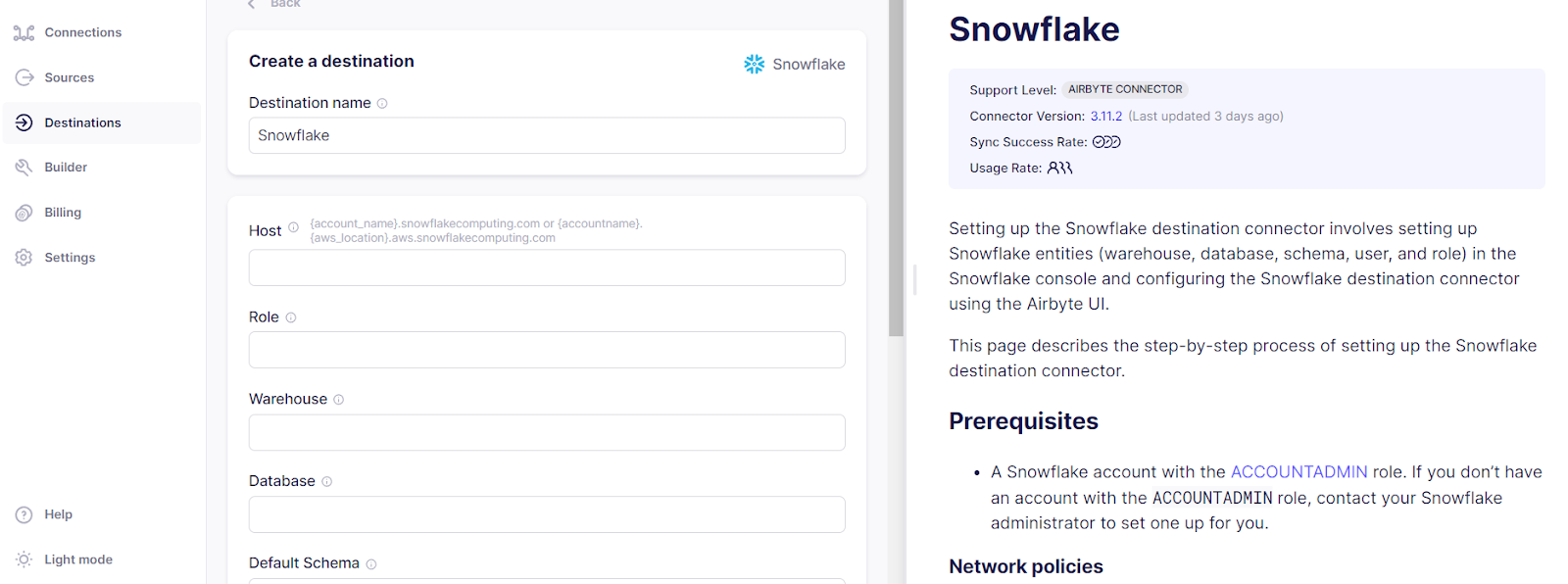

- To set up your destination, click the Destinations tab on the left side of the Airbyte homepage and search for your preferred data warehouse or cloud storage solution. Select the corresponding connector (e.g., Snowflake).

- Enter all the credentials, such as Host, Warehouse, Database, and Default Schema. Scroll down and click on the Set up Destination button. Airbyte will run a verification check to ensure a successful connection.

Step 3: Set up Your Connection

- Click the Connections tab on the left side of the screen.

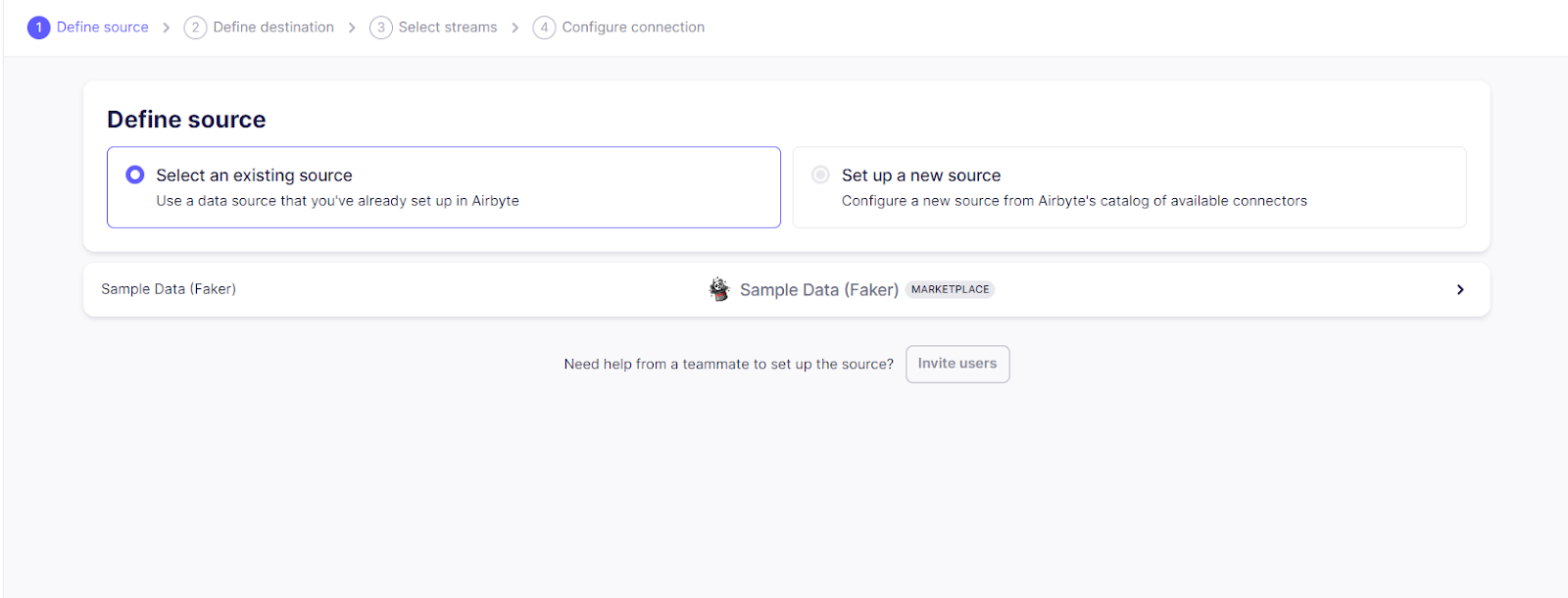

- You will see a screen indicating four steps to complete the connection. The first and second steps involve selecting your previously configured source and destination.

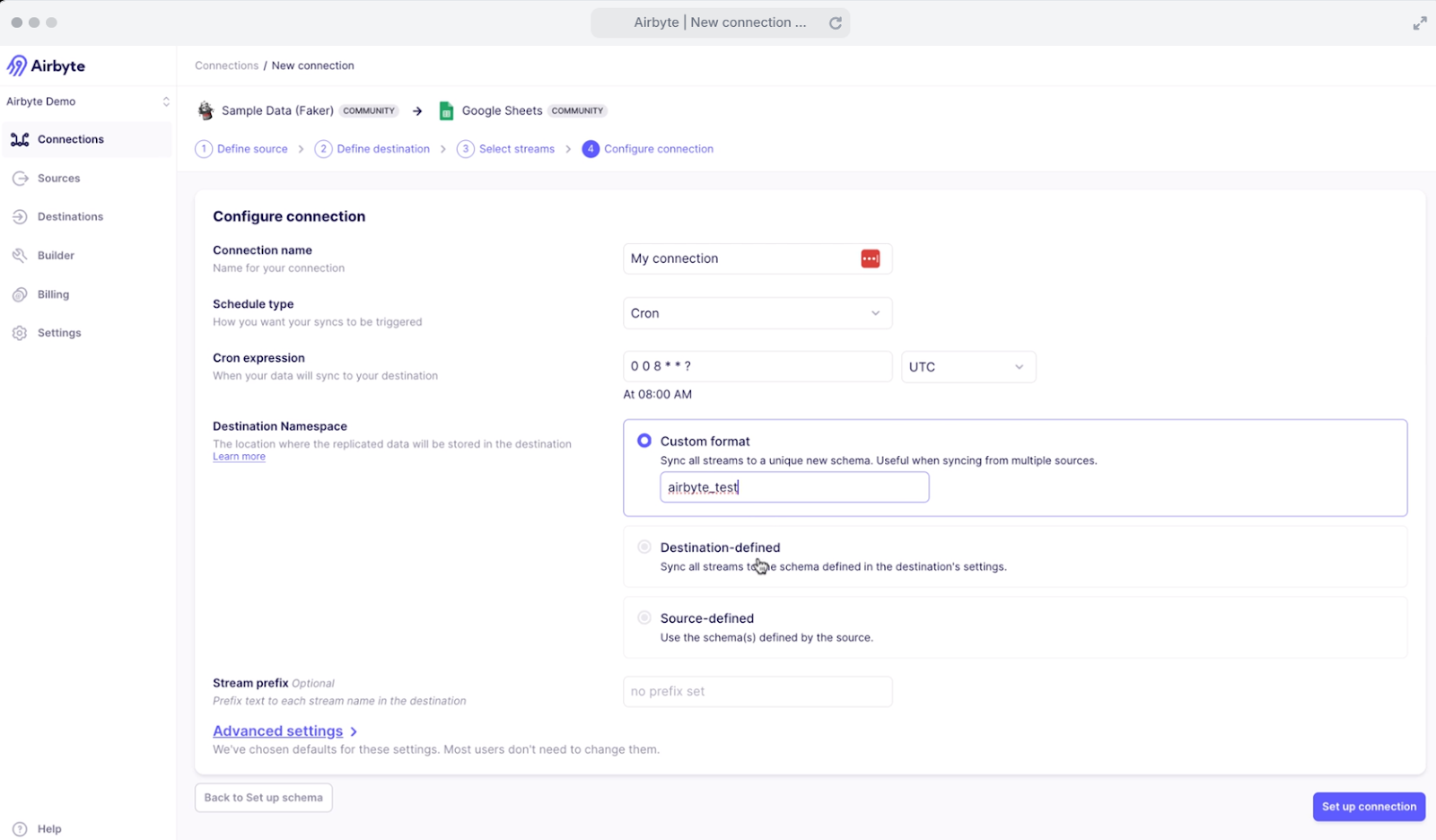

- In the third and fourth steps, you configure settings such as replication frequency and sync mode, which determine when and how your data syncs. These steps also include setting your destination namespace and choosing how Airbyte should handle schema changes.

- Once you fill in all the required details, click on Set up Connection. With this, you have successfully built your Airbyte pipeline for data movement.
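Once the connection exists, you may also want to trigger syncs programmatically, for example from an orchestrator. The sketch below builds a request against Airbyte's public API, assuming the `POST /v1/jobs` endpoint with a `connectionId` and `jobType` body and bearer-token authentication as we understand them; please verify the details against the current Airbyte API reference. The connection ID and token are placeholders, and self-managed deployments use a different base URL.

```python
import json
import urllib.request

# Airbyte Cloud base URL (assumption to verify); self-managed deployments expose the API elsewhere.
AIRBYTE_API_URL = "https://api.airbyte.com/v1"


def build_sync_request(connection_id: str, token: str, base_url: str = AIRBYTE_API_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request that asks Airbyte to run a sync job."""
    body = json.dumps({"connectionId": connection_id, "jobType": "sync"}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/jobs",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
    )


def trigger_sync(connection_id: str, token: str, base_url: str = AIRBYTE_API_URL) -> dict:
    """Send the request and return the parsed JSON response (performs a network call)."""
    request = build_sync_request(connection_id, token, base_url)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Keeping request construction separate from sending makes the payload easy to inspect or test without touching a live workspace.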

Conclusion

Data movement is fundamental to your data strategy. It helps you easily transfer the required data to the location where it is needed. This seamless data movement within the organization enables you to extract valuable insights, improve decision-making, and gain a competitive edge.

The article explores various data movement strategies, tools, and challenges that can help your organization establish efficient and secure data pipelines to leverage your information assets. By gaining a holistic view of your data, you can increase operational efficiency and make smarter business decisions crucial to sustainable growth.
