8 Best Open Source ETL Tools for Data Integration

Effective data integration between a source and a destination requires tools that can Extract, Transform, and Load (ETL) data across systems. ETL platforms differ in their extraction mechanisms, transformation abilities, and loading behavior, and each plays a part in orchestrating the flow of data. With these solutions, you can streamline the migration process and improve data quality, accessibility, and analysis capabilities.

Let's dive into the fundamentals of data integration and explore the popular open-source ETL tools that contribute to process enhancement and workflow simplification.

What is ETL?

ETL (Extract, Transform, Load) is a data integration process that merges, cleans, and organizes data from different sources into one consistent data set. The unified data is then stored in a data warehouse, data lake, or another target system. ETL pipelines are the foundation for data analytics and machine learning workstreams. Here’s how it works:

Extract: Data is taken from different sources, for instance, databases, APIs, or files.

Transform: The extracted data is then cleaned, enriched, and restructured. Business rules are applied to meet specific business intelligence requirements.

Load: The altered data is loaded into a target database or storage system.
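The three stages above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not the approach of any tool in this list; the sample CSV, the `orders` table, and the rule of skipping rows without an amount are all assumptions made for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw input standing in for a source file or API export.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,
"""

def extract(text):
    """Extract: read rows from the CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize names, parse amounts, drop incomplete rows."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # assumed business rule: skip orders with no amount
        cleaned.append(
            (int(row["order_id"]), row["customer"].strip().title(), float(amount))
        )
    return cleaned

def load(rows, conn):
    """Load: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

def run_pipeline(text, conn):
    """Run extract, transform, and load in order, then return the loaded rows."""
    load(transform(extract(text)), conn)
    return conn.execute(
        "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
    ).fetchall()
```

Calling `run_pipeline(RAW_CSV, sqlite3.connect(":memory:"))` loads the two complete orders into an in-memory SQLite database and leaves out the row with the missing amount.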

8 Best Open Source ETL Tools

Here are the top eight open-source ETL tools with various features and capabilities for seamless data integration.

1. Airbyte

Airbyte is a data integration and replication tool that facilitates swift data migration through its pre-built and customizable connectors. With 600+ connectors, Airbyte enables seamless data transfer to a wide range of destinations, including popular databases and warehouses. Its uniqueness lies in its ability to manage structured and unstructured data from diverse sources. This feature facilitates smooth operations across analytics and machine learning workflows, distinguishing Airbyte as a highly adaptable platform.

To enhance ETL workflows with Airbyte, you can use PyAirbyte, a Python-based library. PyAirbyte enables you to utilize Airbyte connectors directly within your developer environment. This setup allows you to extract data from various sources and load them in SQL caches, which can then be converted into Pandas DataFrame objects for transformation using Python’s robust capabilities.

Once the data is transformed into an analysis-ready format, you can load it into your preferred destination using Python’s extensive libraries. For example, to load data into Google BigQuery, you can install the client library with pip install google-cloud-bigquery, establish a connection, and then load the data. This method gives you flexibility in the transformations you perform before loading the data into a destination.

Some of the key features of Airbyte are:

- Streamline GenAI Workflows: You can use Airbyte to simplify AI workflows by directly loading semi-structured or unstructured data in prominent vector databases like Pinecone. The automatic chunking, embedding, and indexing features enable you to work with LLMs to build robust applications.

- AI-powered Connector Development: If you do not find a particular connector for synchronization, leverage Airbyte’s intuitive Connector Builder or Connector Developer Kit (CDK) to craft customized connectors. The Connector Builder’s AI-assist functionality scans through your preferred connector’s API documentation and pre-fills the fields, allowing you to fine-tune the configuration process.

- Custom Transformation: You can integrate dbt with Airbyte to execute advanced transformations. This enables you to tailor data processing workflow with dbt models.

- Robust Data Security: Airbyte guarantees the security of data movement by implementing measures, including strong encryption, audit logs, role-based access control, and ensuring the secure transmission of data. By adhering to popular industry-specific regulations, including GDPR, ISO 27001, HIPAA, and SOC 2, Airbyte secures your data from cyber-attacks.

- Active Community: Airbyte has an open-source community. With 25,000+ members on Airbyte Community Slack and active discussions on Airbyte Forum, the community serves as a cornerstone of Airbyte’s development.

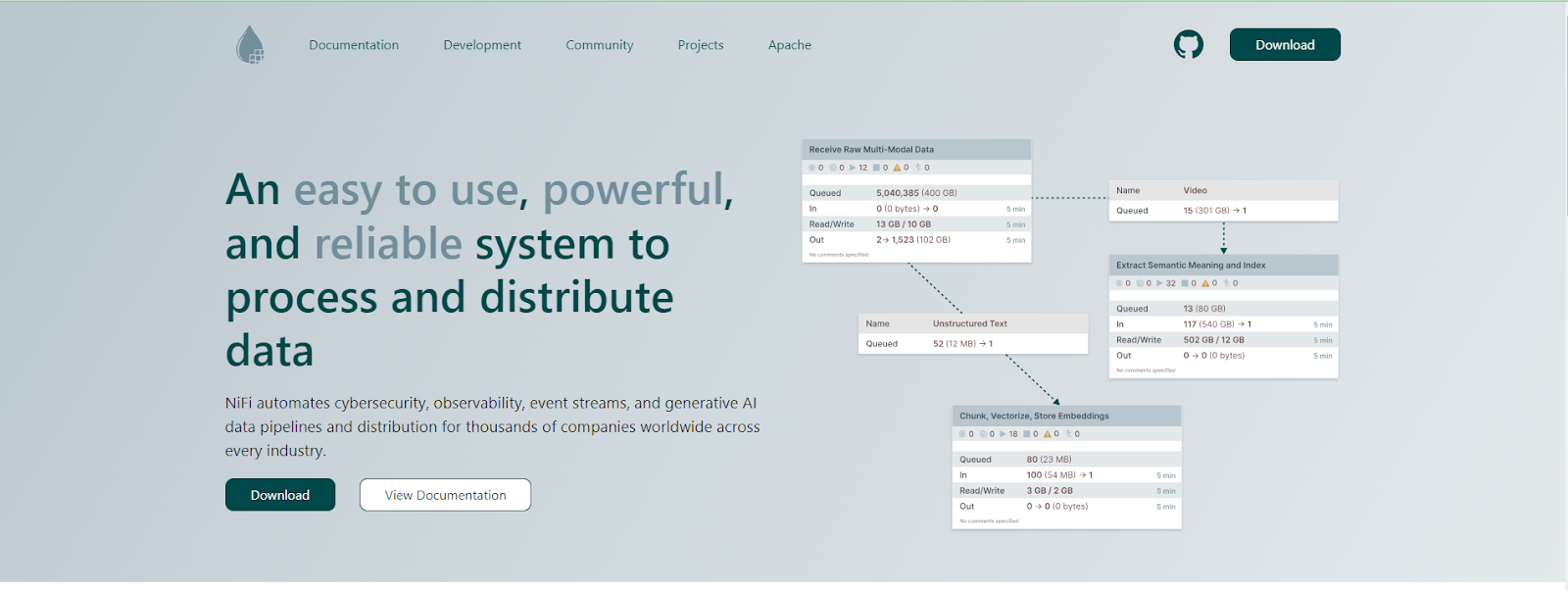

2. Apache NiFi

Apache NiFi is an open-source data integration tool that automates the flow of data between systems. It offers a graphical user interface (GUI) for designing, monitoring, and managing data flows in real time. NiFi supports a wide range of data sources and destinations, such as databases, cloud applications, and more.

Some of the significant features of Apache NiFi are:

- NiFi allows you to set prioritization schemas for data retrieval from a queue. By default, data is fetched in the order of oldest first, but other options include newest first, largest first, or custom schemas.

- In Apache NiFi, you configure processors to transform data by specifying parameters like formats, routing logic, and more. Data is then routed through these processors, which perform the required transformations.

- NiFi helps you with a secure data exchange using encryption protocols like 2-way Secure Sockets Layer (SSL) at every stage of the data flow. It also enables content encryption and decryption with shared keys or other mechanisms for senders and recipients.
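The queue prioritization idea from the first feature above can be illustrated with a small Python sketch. This is not NiFi's API or implementation; `FlowItem`, `PRIORITIZERS`, and `fetch_order` are hypothetical names used only to show how oldest-first, newest-first, and largest-first policies change the order in which queued data is fetched.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FlowItem:
    """Hypothetical stand-in for a queued piece of data; not a NiFi class."""
    name: str
    enqueued_at: float  # seconds since some reference point
    size_bytes: int

# Each policy maps an item to a sort key; the smallest key is fetched first.
PRIORITIZERS = {
    "oldest_first": lambda item: item.enqueued_at,
    "newest_first": lambda item: -item.enqueued_at,
    "largest_first": lambda item: -item.size_bytes,
}

def fetch_order(queue, policy="oldest_first"):
    """Return item names in the order the given policy would fetch them."""
    return [item.name for item in sorted(queue, key=PRIORITIZERS[policy])]

queue = [
    FlowItem("b.json", enqueued_at=20.0, size_bytes=900),
    FlowItem("a.csv", enqueued_at=10.0, size_bytes=300),
    FlowItem("c.log", enqueued_at=30.0, size_bytes=100),
]
```

A custom policy would simply be another entry in the dictionary, for example a key function that sorts on an attribute of the data.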

3. Pentaho

Pentaho Data Integration (PDI), also known as Pentaho Kettle, is an open-source ETL tool for data integration. It simplifies the task of capturing, cleansing, and storing data in a standardized format that is accessible and applicable to both end users and IoT technologies.

Some of the amazing features of Pentaho Data Integration are:

- You can create ETL tasks using a user-friendly drag-and-drop interface. This allows for the seamless creation of workflows involving steps like data extraction, validation, integration, and loading without the need for coding.

- It allows you to connect to a diverse range of relational databases, big data stores, and enterprise applications.

- PDI uses a workflow metaphor to transform data and execute tasks, with workflows composed of steps or entries. Transformations and jobs are created within these tasks, enabling you to define data movement and transformation processes visually.

4. Talend

Talend Open Studio is an open-source ETL tool for data integration, enabling you to connect and manage all your data, regardless of your location. It offers several pre-built connectors and components to link virtually any data source with any data environment, whether in the cloud or on-premises. You can effortlessly develop and deploy reusable data pipelines using a drag-and-drop interface that operates faster than manual coding.

Some of the key features of Talend Open Studio are:

- Talend provides a complete solution for addressing your organization’s end-to-end data management requirements. It encompasses a robust platform that covers data integration, governance, and quality control.

- Using advanced data mapping transformation tools, Talend allows you to streamline complex JSON, XML, and B2B integrations. Its built-in visual data mapper enables you to manipulate intricate data formats smoothly.

- Talend data inventory helps you identify data silos and create reusable assets. It facilitates a shared inventory between the pipeline designer and data preparation, streamlining internal processes with swift dataset documentation.

5. CloverDX

CloverDX is an open-source ETL tool for data integration that helps you automate and manage data pipelines efficiently, empowering you with real-time, high-quality data. Whether you run on-premises, in a hybrid setup, or in the cloud, you can have complete control over your data infrastructure.

Some of the key features of CloverDX are:

- CloverDX’s data catalog contains custom data sources and target connectors shared within your company. These connectors, set up by your CloverDX server administrators, are designed to read from or write to external interfaces according to your business needs.

- CloverDX offers an intuitive and comprehensive platform. It combines highly visual design with robust coding features and assistance, enabling you to code complex data solutions swiftly.

6. Keboola

Keboola is a data integration platform that automates ETL, ELT, and reverse ETL data pipelines. It integrates open-source and freemium vendor technology to allow you to seamlessly extract and load data from various endpoints. Additionally, for complex transformations, you can integrate with dbt.

Some of the key features of Keboola are:

- It offers more than 400 connectors to link your sources and destinations, so you can move data quickly without writing code.

- Keboola offers a wide variety of transformation capabilities. You can use no-code for basic transformations or perform complex tasks with Python, SQL, and dbt.

- Using the data streams API, you can rapidly ingest data from any system directly into Keboola storage without the need to set up any component.

7. Singer

Singer is an open-source ETL tool for data integration. It defines the communication protocols for data extraction scripts, known as taps, and data loading scripts, known as targets. This standardized communication allows taps and targets to work interchangeably, enabling data movement from any source to the destination. You can transfer data between databases, web APIs, and more.

Some of the key features of Singer are:

- Singer taps and targets follow a Unix-inspired design: they are simple applications connected through pipes and do not require complex plugins to function.

- In Singer, applications use JavaScript Object Notation (JSON) for communication, enabling seamless integration and implementation across various programming languages.

- It supports incremental extraction by helping you keep track of the state between invocations (executing a tap or target). This process involves storing a timestamp in a JSON file between instances to note the last occurrence when the target consumed data.
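The tap, target, and state ideas above can be sketched as follows. This is a simplified illustration in the spirit of the Singer message format (one JSON object per line, with SCHEMA, RECORD, and STATE message types), not a production tap. The `users` stream, the `users_updated_at` bookmark key, and the in-memory stream standing in for a Unix pipe are assumptions made for the example.

```python
import io
import json

# Hypothetical source rows; a real tap would query an API or database.
SOURCE_ROWS = [
    {"id": 1, "updated_at": "2024-01-01T00:00:00Z"},
    {"id": 2, "updated_at": "2024-01-02T00:00:00Z"},
    {"id": 3, "updated_at": "2024-01-03T00:00:00Z"},
]

def run_tap(out, state):
    """Tap: emit SCHEMA, RECORD, and STATE messages, one JSON object per line."""
    bookmark = state.get("users_updated_at", "")
    schema = {
        "type": "object",
        "properties": {
            "id": {"type": "integer"},
            "updated_at": {"type": "string", "format": "date-time"},
        },
    }
    out.write(json.dumps({"type": "SCHEMA", "stream": "users",
                          "schema": schema, "key_properties": ["id"]}) + "\n")
    for row in SOURCE_ROWS:
        # Same-format ISO 8601 UTC timestamps compare correctly as strings.
        if row["updated_at"] > bookmark:
            out.write(json.dumps({"type": "RECORD", "stream": "users",
                                  "record": row}) + "\n")
            bookmark = row["updated_at"]
    out.write(json.dumps({"type": "STATE",
                          "value": {"users_updated_at": bookmark}}) + "\n")

def run_target(inp):
    """Target: consume messages, collect records, and return the last state seen."""
    records, state = [], {}
    for line in inp:
        message = json.loads(line)
        if message["type"] == "RECORD":
            records.append(message["record"])
        elif message["type"] == "STATE":
            state = message["value"]
    return records, state

def sync(state):
    """Connect tap to target through an in-memory stream standing in for a pipe."""
    pipe = io.StringIO()
    run_tap(pipe, state)
    pipe.seek(0)
    return run_target(pipe)
```

Running `sync({})` transfers all three rows and returns a state holding the latest timestamp. Passing that state back into `sync` transfers nothing, which is the incremental behavior that a saved state file provides between invocations.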

8. Hevo Data

Hevo Data is an open-source, cloud-native ETL tool for data integration. It serves as a no-code solution accessible to both technical and non-technical users, facilitating the smooth transfer of data to multiple destinations like databases and warehouses. With 150+ pre-built connectors, Hevo helps you simplify the complex task of connecting various sources to destinations in near real time. After extracting the data from the source, you can perform transformations based on your requirements before loading data into the destination. This can be achieved using a drag-and-drop feature or a Python-based script.

Some of the key features of Hevo Data are:

- Using the schema mapper, you can define the storage structure for data extracted from source applications and how it will be organized in the destination. This enables automated mapping between source event types and destination tables.

- Hevo provides flexible data replication options, allowing you to sync databases, tables, or even individual columns between sources and destinations.

- It enables real-time data pipeline monitoring, helping you swiftly identify and resolve errors. This ensures uninterrupted data flow and minimizes downtime.

5 Benefits of using open-source ETL tools

Here are the benefits of using open-source ETL tools:

1. Cost-Effective: Open-source tools are free to use, which keeps licensing costs low for organizations.

2. Customization: You can alter the open-source ETL tools to match your particular needs.

3. Community Support: Active communities provide help, documentation, and troubleshooting services.

4. Transparency: You can inspect the codebase, which lets you verify the tool's security for yourself and build trust in it.

5. Innovation: Community-driven development is the key to constant enhancement.

6 Things to consider when choosing an open source tool for ETL

1. Functionality: Check if the tool is suitable for your data integration requirements.

2. Ease of Use: Go for the tools with interfaces that are easy to use and clear documentation.

3. Scalability: Think about the tool's capacity to cope with huge data volumes.

4. Community Support: A community of active members is a source of valuable resources and troubleshooting help.

5. Security: The tool should be designed with the best practices for data security in mind.

6. Integration Flexibility: Verify if the tool is capable of dealing with different data sources and destinations.

Conclusion

This article presents the eight best open-source ETL tools for data integration needs. These solutions offer diverse functionalities and capabilities, ranging from comprehensive data management to streamlined, affordable workflows. As data complexity and volume increase, these platforms give you the resources necessary to orchestrate your data sets and harness their potential efficiently. Choose among these top ETL tools for migrating your data based on your requirements, so that your business can optimize the process, foster innovation, and drive better decision-making.

We recommend trying Airbyte, a user-friendly tool that offers an extensive set of connectors and robust security features to simplify your workflows. Give Airbyte a try today!

FAQs

Which is the best open source no code ETL tool?

The most suitable open-source no-code ETL tool depends on your particular needs and preferences. Airbyte can be the best option since it allows users to create data pipelines without writing code. Furthermore, users can connect to various data sources, transform data, and load it to their preferred destination.

What’s the difference between open source and paid ETL tools?

Open-source ETL tools are free for anyone to use, modify, and distribute, while paid ETL tools usually have more features, support, and services for a fee. Paid tools usually have more sophisticated functions, customer support, and security features that are suitable for enterprises.

Are open source ETL tools safe to use for critical data sources?

Open-source ETL tools can be safe for critical data sources, but it's crucial to carefully evaluate the specific tool, its community support, its security practices, and your organization's risk tolerance and mitigation strategies. For instance, Airbyte has 15,000+ active community members, 800+ open-source contributors, and 500+ integrations. Thus, you can trust Airbyte for your critical data pipelines.

Do open source ETL tools offer fewer data integrations?

Not necessarily. A lot of open-source ETL tools support a vast array of data integrations such as databases, cloud services, APIs, and file formats. For instance, Airbyte offers 500+ off-the-shelf connectors for databases, data lakes, data warehouses, and APIs. The user-friendly UI and API of these connectors make them usable right out of the box. Community developers actively maintain and monitor the reliability of these connectors.
