Top 11 AI ETL Tools for Data Teams

✨ AI Generated Summary

AI ETL tools revolutionize data integration by automatically adapting to schema changes, detecting anomalies, and optimizing workflows, overcoming limitations of traditional hard-coded ETL systems. They offer user-friendly interfaces, pre-built connectors, and advanced security features like automated compliance, dynamic access control, and continuous monitoring, enabling business users to manage pipelines with minimal developer involvement.

Key benefits include:

- Adaptive orchestration and self-healing pipelines for increased reliability

- Predictive optimization to reduce costs and avoid bottlenecks

- Comprehensive governance with audit trails and regulatory compliance automation

- Scalable integration with diverse data sources and cloud ecosystems

Leading platforms such as Airbyte, Fivetran, Hevo Data, and Informatica cater to a range of team needs, from fast analytics to enterprise-grade security.

Traditional ETL processes have long been reliable, but they face challenges as data environments evolve. Hard-coded pipelines often break when schemas change, and batch processing misses real-time insights.

AI ETL tools address these issues by adapting to schema drift, spotting anomalies, and suggesting data transformations automatically, which means fewer sync failures and faster insights. They feature pre-built connectors and user-friendly interfaces, making data management more accessible.

With increasing data volumes, integrating AI into your data stack is crucial for maintaining data quality, integrity, and security. Incorporating AI into ETL processes enhances data workflows, allowing seamless integration from diverse sources.

These tools empower business users to maintain data pipelines without relying heavily on developers. This enables data teams to extract valuable insights from massive datasets while ensuring data accuracy and protection.

What Are AI ETL Tools and How Do They Revolutionize Data Processing?

AI ETL tools are advanced data integration platforms using AI and machine learning to enhance ETL (Extract, Transform, Load) processes. Unlike traditional ETL tools relying on static logic and manual intervention, they continuously adapt to changing data environments.

These tools automatically infer schemas, detect anomalies, optimize job execution, and recommend transformations. They extract data from multiple sources, transform it into usable formats, and load it into target systems while learning from errors to improve future performance.

Machine-learning algorithms monitor data patterns and system performance to detect deviations that indicate quality issues, security threats, or operational problems. This enables intelligent automation throughout the data integration lifecycle.
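
To make the idea of detecting deviations concrete, here is a minimal sketch that flags an unusual daily row count with a z-score. It is a simple statistical stand-in, not the ML model any particular vendor uses; the function name `is_anomalous`, the threshold, and the sample counts are all assumptions made for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Return True if `latest` is more than `threshold` standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a constant series is unusual
    return abs(latest - mu) / sigma > threshold


# Hypothetical row counts from five previous daily syncs.
daily_row_counts = [10_120, 9_980, 10_050, 10_210, 9_940]

print(is_anomalous(daily_row_counts, 10_100))  # a typical day
print(is_anomalous(daily_row_counts, 2_300))   # a sudden drop in volume
```

A production system would likely account for seasonality and trends, but the principle is the same: learn what normal looks like, then alert on departures from it.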

AI ETL tools are user-friendly, allowing business users to manage workflows without heavy developer reliance. For enterprises handling high-volume, fast-changing data, they reduce repetitive tasks, minimize failures, and ensure accurate delivery with unprecedented scalability and adaptability.

Why Do Traditional ETL Systems Struggle with Modern Data Challenges?

Legacy ETL tools were designed for a bygone era. In the past, data sources were limited, pipelines were mostly static, and updates occurred on predictable schedules.

Today's data landscape is dynamic, messy, real-time, and constantly evolving. Traditional tools struggle to meet the demands of modern data processing and the complexities involved in maintaining robust data pipelines.

Hard-coded logic becomes fragile when schemas change. Manual mapping introduces bottlenecks. Often, failures go unnoticed until a dashboard goes blank or an executive questions missing metrics.

The fundamental architecture of traditional ETL systems assumes stable data structures and predictable processing requirements. This makes them poorly suited for environments where data sources frequently evolve and business requirements change rapidly.

Efficient loading matters as much as extraction and transformation in any complete integration solution. Traditional systems often struggle with real-time processing requirements, forcing organizations to choose between data freshness and system stability.

For data engineers, this means dealing with reactive workflows and endless patching. For IT managers, it raises concerns about compliance and auditability, because traditional tools often lack the governance and monitoring capabilities that compliance depends on.

For BI teams, it delays the insights they need to make informed decisions.

Modern data teams require tools that can adapt. AI ETL tools go beyond task automation: they anticipate changes, monitor performance, and reduce failure points, making pipelines more robust rather than just faster.

How Do AI-Powered Automation Capabilities Transform Modern Data Pipeline Management?

AI-powered automation represents a fundamental paradigm shift in data pipeline management. It moves from reactive, manually intensive processes to proactive, intelligent systems that can learn, adapt, and optimize autonomously.

This transformation enables organizations to build resilient data infrastructure that adapts to changing business requirements. The automation capabilities extend beyond simple task execution to include predictive optimization and intelligent decision-making.

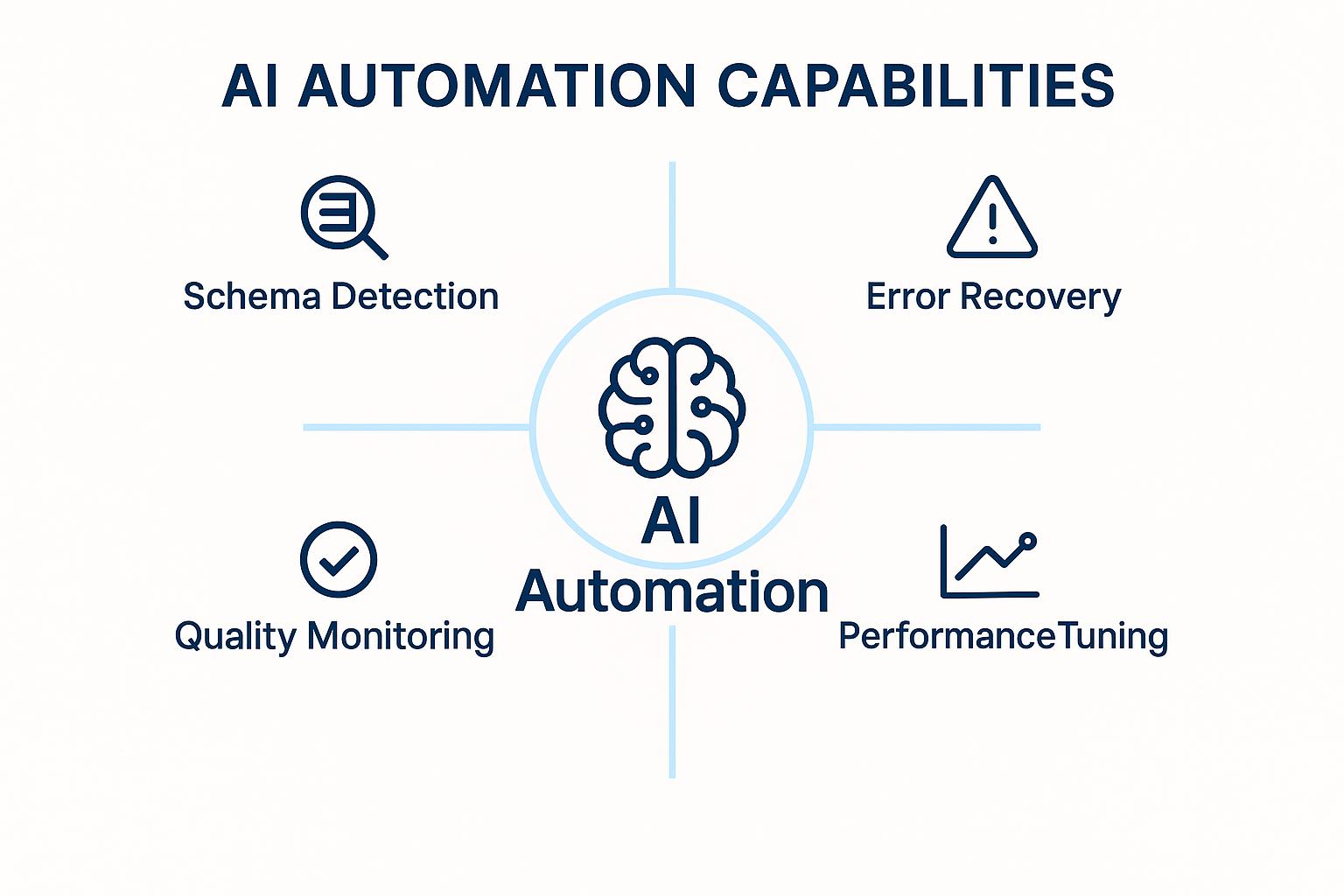

Key AI Automation Capabilities

- Adaptive orchestration dynamically adjusts workflow execution based on real-time conditions.

- Self-healing pipelines detect failures, diagnose root causes, and recover automatically without human intervention.

- Predictive optimization anticipates resource needs to avoid bottlenecks while minimizing costs.

- Intelligent error handling and anomaly detection identify issues before they disrupt operations.

- Automated schema management detects drift and adjusts mappings without breaking pipelines.

These capabilities work together to create data infrastructure that becomes more reliable and efficient over time.
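
As a rough illustration of the recovery step in a self-healing pipeline, the sketch below retries a failing task with exponential backoff. It covers only automatic retry, not root-cause diagnosis, and the names `run_with_recovery` and `flaky_sync` are hypothetical rather than taken from any platform.

```python
import time


def run_with_recovery(task, max_attempts=3, base_delay=0.01, sleep=time.sleep):
    """Run `task`, retrying with exponential backoff on failure.

    Returns a (result, attempts) tuple, or raises RuntimeError once
    every attempt has failed.
    """
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return task(), attempt
        except Exception as exc:  # a real pipeline would catch narrower errors
            last_error = exc
            if attempt < max_attempts:
                # Wait base_delay, then 2x, then 4x, ... between attempts.
                sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError(f"task failed after {max_attempts} attempts") from last_error


# Hypothetical sync job that fails twice with a transient error, then succeeds.
calls = {"count": 0}


def flaky_sync():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient source outage")
    return "synced"


result, attempts = run_with_recovery(flaky_sync)
print(result, attempts)
```

Passing `sleep` as a parameter keeps the function easy to test, since a caller can substitute a no-op instead of actually waiting.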

What Should Modern Data Teams Consider When Evaluating AI ETL Tools?

Understanding Your Team's Needs

Start with a deep understanding of workflows, data volumes, compliance obligations, and technical expertise. Align tool capabilities with both current operations and future growth requirements.

Consider the skill levels of your team members and the complexity of your data environment. Evaluate how different tools will integrate with your existing technology stack and business processes.

Key Features and Adaptability

Look for automatic schema-change handling, ML-driven transformation suggestions, and predictive optimization capabilities. Non-developers should be able to manage pipelines via low-code or no-code interfaces.

The platform should adapt to changing business requirements without requiring extensive reconfiguration. Flexibility in deployment options and integration capabilities is essential for long-term success.

Observability and Data Security

Choose platforms that provide comprehensive visibility into data flows and proactive alerting systems. Compliance certifications are non-negotiable for enterprise environments, while field-level encryption is strongly recommended for protecting sensitive data.

Real-time monitoring capabilities enable quick identification and resolution of issues before they impact business operations. Transparent audit trails support compliance requirements and troubleshooting efforts.

Governance and Flexibility

Ensure support for fine-grained access controls, detailed audit logs, and open APIs for customization. Engineers need flexibility for complex workflows while BI teams require trusted data delivery.

The governance framework should scale with your organization and adapt to evolving regulatory requirements. Integration with existing security and compliance tools streamlines operations.

Which AI ETL Tools Are Leading the Market for Data Teams?

1. Airbyte

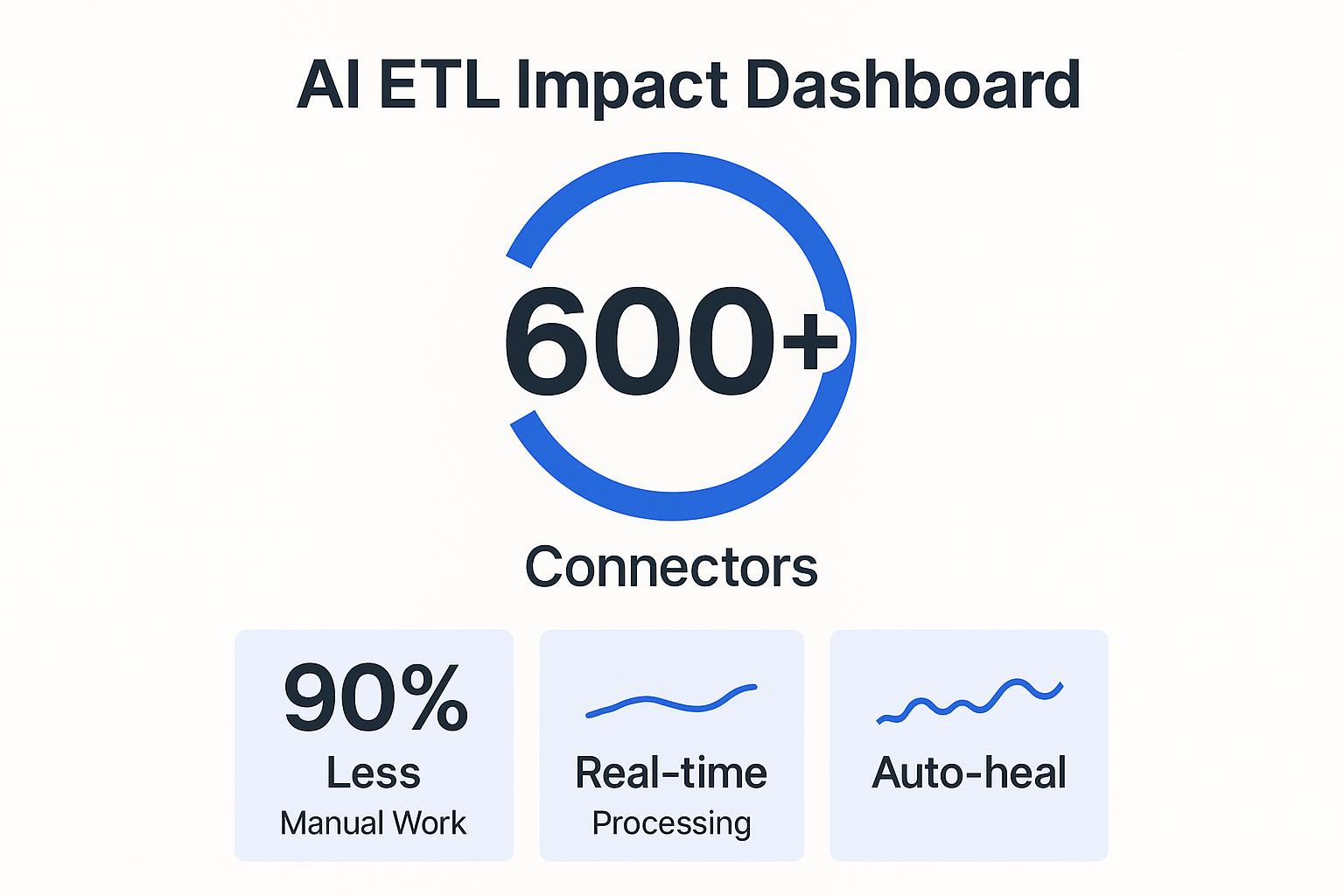

Airbyte combines AI-powered schema detection and adaptation with enterprise-grade reliability. The platform offers 600+ pre-built connectors plus AI-assisted connector generation for custom data sources.

Available in open-source, cloud, and self-managed options, Airbyte provides deployment flexibility without vendor lock-in. Native dbt integration enables complex transformations while maintaining pipeline simplicity.

2. Fivetran

Fivetran delivers fully managed syncs with automatic schema updates and minimal maintenance requirements. The platform focuses on high reliability and operational simplicity.

Their rich library of business-application connectors covers most enterprise data sources. The platform handles schema changes automatically without disrupting data flows.

3. Hevo Data

Hevo Data features a no-code, drag-and-drop interface that makes data integration accessible to business users. Real-time data syncing combines with ML-based error detection for reliable operations.

The platform supports diverse sources through its library of connectors. Built-in transformation capabilities handle most common data preparation requirements without coding.

4. Informatica

Informatica's CLAIRE engine provides intelligent metadata management across complex enterprise environments. Deep governance, lineage, and security capabilities support regulatory compliance.

The platform scales for large, complex enterprises with sophisticated data governance requirements. Advanced AI capabilities automate data discovery and classification processes.

5. Talend

Talend integrates ML-based anomaly detection with Trust Score capabilities for data quality assurance. The platform supports both cloud and hybrid deployments for flexible infrastructure options.

A broad connector ecosystem covers diverse data sources and target systems. Built-in data quality tools help maintain accuracy throughout the integration process.

6. Matillion

Matillion offers AI-guided transformation flows designed for cloud data warehouses. Native integration with Snowflake, Redshift, and BigQuery optimizes performance.

Built specifically for large-scale cloud workloads, the platform handles complex transformations efficiently. The visual interface simplifies pipeline development and maintenance.

7. Keboola

Keboola provides a low-code platform with AI recommendations for optimal data processing. The system handles both structured and unstructured data efficiently.

Collaboration features make it ideal for mid-sized teams working on shared data projects. Built-in analytics capabilities enable rapid insights from integrated data.

8. IBM DataStage

IBM DataStage features a graphical interface for complex data integration scenarios. Enterprise-grade security and quality controls meet stringent compliance requirements.

The platform excels in finance, healthcare, and other regulated sectors requiring robust governance. Advanced parallel processing capabilities handle high-volume workloads efficiently.

9. AWS Glue

AWS Glue provides ML-based schema inference and automatic code generation within the AWS ecosystem. The fully serverless architecture eliminates infrastructure management overhead.

Scalable, automated data management integrates seamlessly with other AWS services. Built-in optimization features reduce costs while maintaining performance.

10. Azure Data Factory

Azure Data Factory provides a low-code UI for intuitive pipeline development. Deep Azure service integration streamlines cloud-native architectures.

Intelligent orchestration capabilities optimize hybrid data workflows across cloud and on-premises systems. Built-in monitoring and alerting ensure reliable operations.

11. Google Cloud Dataflow

Built on Apache Beam, Google Cloud Dataflow handles both real-time and batch processing. Embedded ML capabilities enable complex transformations and analytics.

The platform excels for predictive analytics and event-driven architectures requiring low-latency processing. Auto-scaling features optimize resource utilization and costs.

Which AI ETL Tool Fits Your Team?

Identify Your Team's Needs

Match platform capabilities to team size, skills, budget, and compliance requirements. Consider total cost of ownership beyond initial licensing fees.

Evaluate vendor roadmaps to ensure alignment with your long-term technology strategy. Factor in training requirements and change management implications for your team.

For Fast-Moving Analytics Teams

Tools like Hevo Data or Fivetran deliver clean, real-time data with minimal engineering effort. These platforms prioritize ease of use and rapid deployment over extensive customization options.

The focus on automation and pre-built connectors enables analytics teams to concentrate on insights rather than infrastructure management. Quick setup and minimal maintenance reduce time-to-value, especially for teams balancing multiple data priorities or managing tight deadlines.

For Enterprise IT Managers

Informatica and IBM DataStage provide deep governance, lineage, and security capabilities ideal for regulated industries. These platforms excel in complex enterprise environments requiring comprehensive audit trails.

Advanced security features and compliance certifications meet stringent regulatory requirements. Extensive customization options support unique business requirements and legacy system integration.

Cloud-Ecosystem-Specific Solutions

AWS Glue integrates seamlessly with Amazon-centric technology stacks and provides native optimization for AWS services. Azure Data Factory offers deep integration with Microsoft environments and Office productivity tools.

Google Cloud Dataflow excels for GCP-based architectures requiring real-time ML pipelines and advanced analytics capabilities. Each platform leverages cloud-specific optimizations and services.

How Can Airbyte Streamline Intelligence in Your Data Operations?

Airbyte merges open-source flexibility with AI-powered automation to deliver enterprise-grade data integration without vendor lock-in. The platform supports more than 600 connectors while providing AI-assisted connector generation for custom data sources.

Automated schema handling eliminates pipeline breaks from source system changes. Predictive pipeline optimization ensures reliable performance while minimizing resource consumption and operational overhead.

Real-time data-quality monitoring combines with self-recovery capabilities to maintain pipeline integrity. Deployment freedom across open source, cloud, and enterprise options provides flexibility without compromising functionality.

The platform generates open-standard code and supports deployment across multiple cloud providers and on-premises environments. This approach ensures that organizations maintain control over their data integration investments while benefiting from continuous innovation and community contributions.

Conclusion

AI ETL tools represent a fundamental shift from reactive data integration to proactive, intelligent automation that adapts to changing business requirements. The choice of platform depends on specific organizational needs, existing technology infrastructure, and long-term strategic objectives.

Organizations that embrace these technologies now will gain significant competitive advantages as data volumes and complexity continue to grow. With proper evaluation and implementation, AI ETL tools transform data integration from a cost center into a strategic business capability that drives innovation and competitive differentiation.

Frequently Asked Questions

What Makes AI ETL Tools Different From Traditional ETL Solutions?

AI ETL tools use machine learning to automatically detect schema changes, optimize pipeline performance, and recommend transformations with minimal manual setup. Traditional ETL tools rely on fixed rules and explicit coding for every data source and transformation.

How Do AI ETL Tools Handle Schema Changes Automatically?

AI ETL tools continuously monitor data sources for changes such as new columns, renamed fields, or data type updates. When changes are detected, mappings and transformation logic are updated automatically to keep pipelines stable and operational.
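
The detection half of this process can be sketched as a comparison between the schema a pipeline expects and the schema it currently observes at the source. The example is a simplified assumption about how such a check might work, not a description of any vendor's implementation; `diff_schema` and the column names are hypothetical.

```python
def diff_schema(expected, observed):
    """Compare two {column_name: type_name} mappings and report drift."""
    added = {col: typ for col, typ in observed.items() if col not in expected}
    removed = {col: typ for col, typ in expected.items() if col not in observed}
    retyped = {
        col: (expected[col], observed[col])
        for col in expected
        if col in observed and expected[col] != observed[col]
    }
    return {"added": added, "removed": removed, "retyped": retyped}


# Hypothetical schemas: what the pipeline was built for vs. what the source sends now.
expected_schema = {"id": "int", "email": "str", "amount": "int"}
observed_schema = {"id": "int", "email": "str", "amount": "float", "signup_source": "str"}

drift = diff_schema(expected_schema, observed_schema)
print(drift)
```

Note that a plain diff like this sees a renamed field as one removed column plus one added column. Recognizing it as a rename requires extra signals, such as matching data types and value distributions.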

What Security Features Should You Prioritize in AI ETL Tools?

Essential security features include encryption for data in transit and at rest, role-based access control, automated data masking, and PII protection. Detailed audit logs are also important for compliance, monitoring, and traceability.
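
For a sense of what automated data masking involves, the sketch below masks an email address and replaces a customer identifier with a keyed hash before a record is loaded. The field names, helper functions, and key are assumptions for illustration only; a real deployment would pull the key from a secrets manager and follow the platform's own masking policies.

```python
import hashlib
import hmac


def mask_email(email):
    """Keep the first character and the domain; hide the rest."""
    local, sep, domain = email.partition("@")
    if not sep or not local or not domain:
        return "***"  # not a recognizable address, so hide it entirely
    return f"{local[0]}***@{domain}"


def pseudonymize(value, secret_key):
    """Replace a value with a stable keyed hash so joins still work."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def mask_record(record, secret_key):
    """Return a copy of `record` with the hypothetical PII fields protected."""
    masked = dict(record)
    if "email" in masked:
        masked["email"] = mask_email(masked["email"])
    if "customer_id" in masked:
        masked["customer_id"] = pseudonymize(str(masked["customer_id"]), secret_key)
    return masked


# Hypothetical record and demo key (never hard-code a real key).
row = {"customer_id": 4821, "email": "jane.doe@example.com", "plan": "pro"}
masked_row = mask_record(row, b"demo-key-not-for-production")
print(masked_row["email"])
```

Using a keyed hash rather than a plain one makes it harder to reverse the pseudonym by hashing guessed values, as long as the key stays secret.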

How Do You Measure ROI From AI ETL Tool Implementation?

ROI can be measured by reduced manual maintenance, faster integration of new data sources, lower infrastructure and licensing costs, improved data quality, and quicker access to insights that support better decisions.
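
A back-of-the-envelope version of that calculation might look like the following. Every figure is a hypothetical placeholder, not a benchmark, and the formula covers only labor and infrastructure savings; harder-to-quantify gains such as better data quality are left out.

```python
def annual_roi(hours_saved_per_month, hourly_rate,
               infra_savings_per_year, platform_cost_per_year):
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if platform_cost_per_year <= 0:
        raise ValueError("platform_cost_per_year must be positive")
    labor_savings = hours_saved_per_month * 12 * hourly_rate
    total_benefit = labor_savings + infra_savings_per_year
    return (total_benefit - platform_cost_per_year) / platform_cost_per_year


# Hypothetical inputs: replace them with your own measurements.
roi = annual_roi(
    hours_saved_per_month=40,       # engineering hours no longer spent on fixes
    hourly_rate=75.0,               # fully loaded cost per engineering hour
    infra_savings_per_year=6_000.0,
    platform_cost_per_year=24_000.0,
)
print(f"Estimated first-year ROI: {roi:.0%}")
```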

What Training Requirements Come With AI ETL Tool Adoption?

Most AI ETL platforms offer intuitive, visual interfaces that require limited training for business users. Data engineers typically need additional platform-specific training to fully leverage automation and advanced optimization features.
