Data Accuracy in 2026: What It Is & How to Ensure It


Organizations face significant data-accuracy challenges despite heavy investments, requiring advanced validation frameworks and AI-driven quality management to ensure precise data for critical decisions. Key issues include incomplete, duplicated, outdated, and inaccurate data, compounded by human errors and integration problems. Modern solutions leverage AI for anomaly detection, predictive quality assurance, and intelligent validation, while real-time data environments demand continuous, low-latency monitoring and adaptive controls. Effective accuracy management involves comprehensive governance, regular audits, data profiling, and secure, automated ETL processes, with tools like Airbyte facilitating reliable data integration and validation.

Leading organizations struggle with fundamental data-accuracy challenges that undermine their most critical business decisions. Despite massive investments in data infrastructure and analytics capabilities, many data professionals spend significant time preparing datasets for analysis due to accuracy issues that could have been prevented through proper validation frameworks and quality-management practices.

The challenge of maintaining data accuracy has evolved far beyond simple correctness verification to encompass sophisticated approaches, including AI-powered validation, real-time streaming-accuracy management, and predictive quality assurance that can identify potential issues before they impact business operations.

Modern data accuracy therefore requires a working understanding of validation methodologies, automated quality-management systems, and proactive monitoring, so that every data consumer receives the most precise information possible at every stage of processing and consumption.

What Is Data Accuracy?

Data accuracy measures how precisely your data reflects the real-world entities and events it describes. It is one dimension of data quality and integrity, capturing the level of correctness in the information you collect, use, and store.

Consider a scenario where your IT team has built a new navigation app. During testing, you search for a highly recommended restaurant. The app guides you to where it thinks the restaurant is, but the location turns out to be wrong. A single incorrect record like this shows why accurate data matters for applications or websites with a large user base.

What Are the Most Common Examples of Data Inaccuracies?

Incomplete Data

Incomplete data occurs when required fields in datasets are missing. System errors, human errors, and incomplete user registration forms can lead to missing data.

Example: Some entries in the customer dataset are missing email addresses. When sending promotional emails, these customers are left out of the campaign.

Duplicated Data

Duplicated data occurs when the same information is repeated across multiple datasets. This can result in increased storage and operational costs.

Example: If the same product information is entered twice, you may misinterpret distribution data, leading to incorrect analysis.

Outdated Data

Outdated data arises when your database is not consistently updated to reflect changes, causing errors in analysis and decision-making.

Example: Out-of-date phone numbers in a customer dataset lead to unsuccessful outreach and wasted time.

Inaccurate Data Sources

Unverified social media accounts, online forums, and websites can provide incorrect or incomplete information.

Example: Missing responses or biased samples in online surveys skew customer-satisfaction insights and impact profitability.

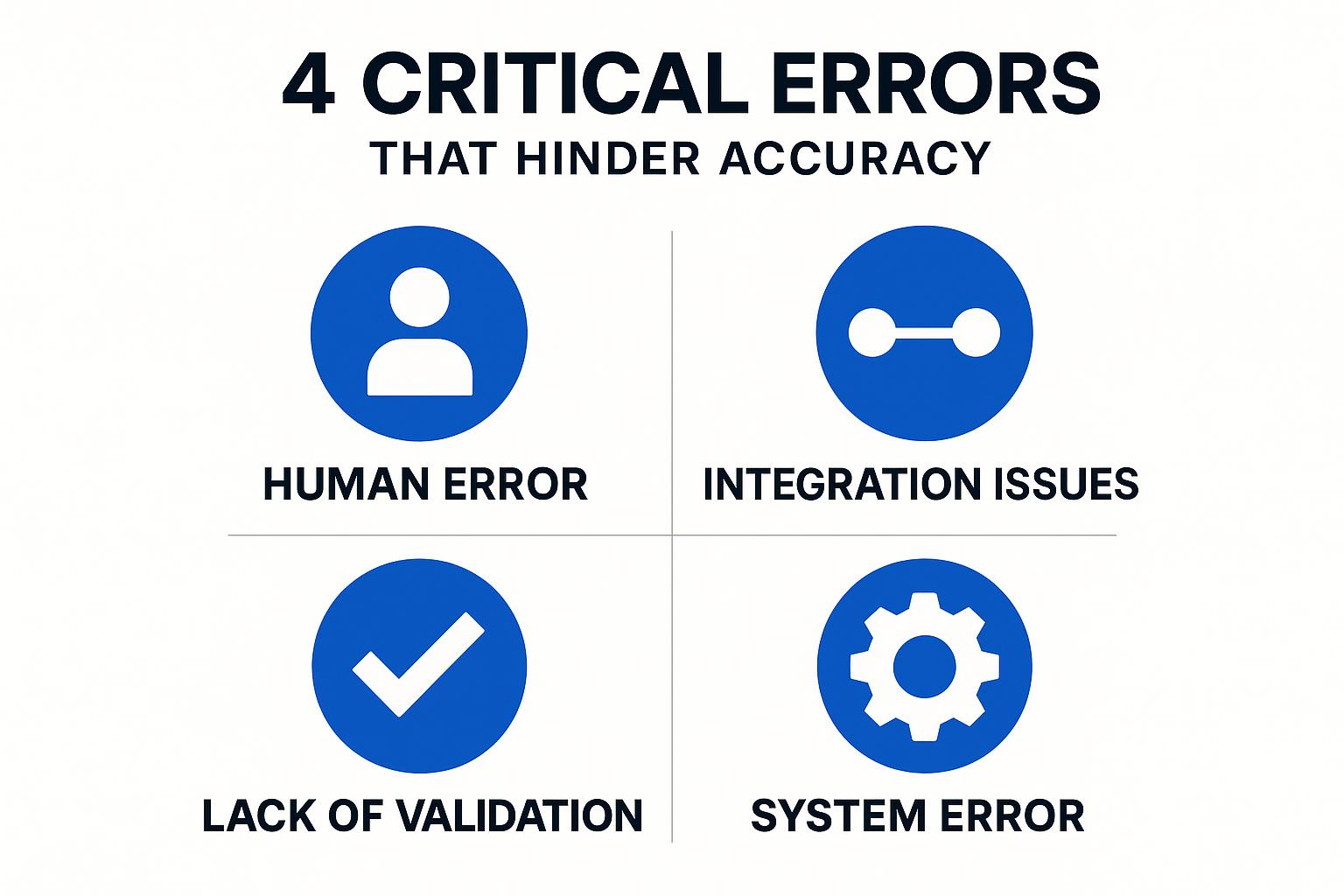

What Are the Common Errors That Hinder Data Accuracy?

- Human Error: Typographical mistakes, omitted fields, or misinterpretations during manual data entry.

- Integration Issues: Varying data structures, date formats, or naming conventions introduce misalignment or data loss during integration.

- Lack of Data Validation: Without proper data validation, incorrect or incomplete data can go undetected.

- System Error: Database crashes, hardware failures, or software bugs can lead to data loss or corruption.
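
As an illustration of the integration issue listed above, the following minimal sketch normalizes dates that arrive in different formats from different source systems. The format list and the function name `normalize_date` are assumptions made for this example, not part of any particular tool.

```python
from datetime import date, datetime
from typing import Optional

# Formats assumed for this sketch; a real integration would list the
# formats its own source systems actually emit. Day-first is assumed for
# slash-separated dates, which is itself a decision to document.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def normalize_date(raw: str) -> Optional[date]:
    """Return the date parsed with the first matching format, else None."""
    text = raw.strip()
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue  # try the next known format
    return None  # unparseable: the caller should reject or quarantine it


if __name__ == "__main__":
    for raw in ["2024-03-05", "05/03/2024", "20240305", "next Tuesday"]:
        print(repr(raw), "->", normalize_date(raw))
```

Returning `None` instead of guessing keeps unparseable values visible, so they can be reviewed rather than silently loaded as wrong dates.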

How Can AI and Machine Learning Enhance Data-Accuracy Management?

Automated Anomaly Detection and Pattern Recognition

ML algorithms establish baseline patterns of normal data behavior and automatically identify deviations such as gradual drift or complex multi-dimensional anomalies that traditional rules might miss. These systems continuously learn from data patterns to improve detection accuracy over time.

Advanced pattern recognition capabilities enable organizations to identify subtle quality issues that manual processes would overlook, providing comprehensive coverage across large datasets and complex data relationships.
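
The baseline-and-deviation idea can be sketched with a simple statistical stand-in. This is not a machine learning model: it assumes a single numeric metric (for example, daily row counts) and flags values whose z-score against a historical baseline exceeds a threshold. The function name and the threshold of 3.0 are illustrative choices.

```python
from statistics import mean, stdev


def find_anomalies(baseline, new_values, z_threshold=3.0):
    """Return the new values that deviate strongly from the baseline.

    baseline needs at least two observations of normal behavior.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly constant baseline: any different value is a deviation.
        return [v for v in new_values if v != mu]
    return [v for v in new_values if abs(v - mu) / sigma > z_threshold]


if __name__ == "__main__":
    history = [100, 102, 98, 101, 99, 100, 103, 97]  # made-up daily counts
    print(find_anomalies(history, [101, 150, 99, 93]))
```

A production system would typically learn seasonality and relationships between several columns; the sketch only shows the core comparison against a learned baseline.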

Predictive Quality Assurance and Proactive Management

AI systems analyze historical patterns of quality issues to predict when and where problems may occur, enabling preventative measures that maintain accuracy with minimal disruption. Predictive approaches shift data quality from reactive to proactive management.

Machine learning models can forecast quality degradation based on system changes, data volume fluctuations, and historical issue patterns, allowing organizations to address problems before they impact business operations.
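
A very small version of this forecasting idea is to fit a straight line to a recent history of error rates and estimate when the trend would cross an acceptable limit. The sketch below uses ordinary least squares in place of a trained model; the function names and the sample figures are hypothetical.

```python
def fit_trend(values):
    """Least-squares slope and intercept for values observed at x = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def periods_until_breach(values, threshold):
    """Periods after the last observation until the trend reaches threshold.

    Returns None when the trend is flat or improving.
    """
    slope, intercept = fit_trend(values)
    if slope <= 0:
        return None
    crossing_x = (threshold - intercept) / slope
    return max(0.0, crossing_x - (len(values) - 1))


if __name__ == "__main__":
    weekly_error_pct = [1.0, 2.0, 3.0, 4.0]  # made-up error rates
    print(periods_until_breach(weekly_error_pct, threshold=6.0))
```

A linear trend is a strong simplification, but it shows how a forecast turns quality monitoring into an early warning rather than an after-the-fact report.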

Intelligent Data Validation and Correction

Advanced AI can interpret semantic meaning and apply context-appropriate rules, perform cross-reference checks, and even auto-correct common issues while logging confidence scores and audit trails. Intelligent validation goes beyond rule-based checking to understand data context and meaning.

These systems provide automated correction suggestions and can implement approved changes while maintaining detailed logs for audit and compliance purposes.
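
The auto-correction, confidence score, and audit trail described above can be approximated without any AI at all. The sketch below uses string similarity from Python's standard `difflib` module as the confidence score; the reference list, the 0.8 cutoff, and the function name are assumptions for illustration only.

```python
from difflib import SequenceMatcher

CANONICAL_COUNTRIES = ["Germany", "France", "Spain"]  # hypothetical reference list


def validate_value(value, canonical, audit_log, min_confidence=0.8):
    """Return value, or its closest canonical form if similarity is high enough.

    Every suggestion is appended to audit_log, whether or not it is applied.
    """
    if value in canonical:
        return value  # already valid, nothing to log
    best, best_score = None, 0.0
    for candidate in canonical:
        score = SequenceMatcher(None, value.lower(), candidate.lower()).ratio()
        if score > best_score:
            best, best_score = candidate, score
    applied = best_score >= min_confidence
    audit_log.append({
        "original": value,
        "suggestion": best,
        "confidence": round(best_score, 3),
        "applied": applied,
    })
    return best if applied else value


if __name__ == "__main__":
    log = []
    for raw in ["Germny", "France", "Xyz"]:
        print(raw, "->", validate_value(raw, CANONICAL_COUNTRIES, log))
    print(log)
```

Low-confidence suggestions are logged but not applied, which leaves them for human review instead of risking a wrong automatic fix.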

What Are the Key Challenges in Maintaining Accuracy in Real-Time Data Environments?

Streaming Data Validation Complexities

Validation must run continuously within strict latency constraints, often across interdependent streams, requiring distributed architectures that scale dynamically. Real-time systems need validation processes that operate at stream processing speeds without creating bottlenecks.

Traditional batch validation approaches cannot meet the performance requirements of streaming data environments, necessitating specialized validation architectures designed for continuous operation.

Event-Driven Architecture Accuracy Management

Event-driven systems introduce temporal dependencies, late-arriving data, and out-of-order events, necessitating sophisticated handling to preserve accuracy. Managing data accuracy in event-driven architectures requires understanding complex timing relationships and data dependencies.

Organizations must implement validation logic that accounts for event sequencing, handles late-arriving information gracefully, and maintains consistency across distributed event processing systems.
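
One common way to handle out-of-order and late-arriving events is a watermark: events are buffered and released in timestamp order once the stream has advanced far enough past them. The following is a minimal single-process sketch of that idea. The class name `WatermarkBuffer` and the `allowed_lateness` parameter are hypothetical, and stream-processing frameworks implement this far more thoroughly.

```python
import heapq


class WatermarkBuffer:
    """Reorders events by event time, tolerating a bounded amount of lateness."""

    def __init__(self, allowed_lateness):
        self.allowed_lateness = allowed_lateness
        self.max_event_time = None
        self.too_late = []   # events that arrived after their window closed
        self._heap = []      # (event_time, sequence, payload)
        self._seq = 0        # tie-breaker so payloads are never compared

    def add(self, event_time, payload):
        """Buffer one event and return the events that are now safe to emit."""
        if (self.max_event_time is not None
                and event_time < self.max_event_time - self.allowed_lateness):
            # Older than the current watermark: set aside for separate handling.
            self.too_late.append((event_time, payload))
            return []
        heapq.heappush(self._heap, (event_time, self._seq, payload))
        self._seq += 1
        if self.max_event_time is None or event_time > self.max_event_time:
            self.max_event_time = event_time
        watermark = self.max_event_time - self.allowed_lateness
        ready = []
        while self._heap and self._heap[0][0] <= watermark:
            t, _, p = heapq.heappop(self._heap)
            ready.append((t, p))
        return ready


if __name__ == "__main__":
    buf = WatermarkBuffer(allowed_lateness=5)
    for t, name in [(10, "a"), (8, "b"), (16, "c"), (3, "d"), (30, "e")]:
        print(f"arrived {t}:", buf.add(t, name))
    print("too late:", buf.too_late)
```

The trade-off is latency versus completeness: a larger `allowed_lateness` catches more stragglers but delays every result by that amount.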

Continuous Monitoring and Adaptive Quality Control

Real-time environments need monitoring that detects accuracy degradation instantly and adapts to evolving patterns without interrupting processing. Continuous monitoring systems must balance accuracy requirements with performance constraints.

Adaptive quality control mechanisms adjust validation rules and thresholds based on changing data patterns while maintaining consistent accuracy standards across varying operational conditions.
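
A simple way to picture an adaptive threshold is an exponentially weighted mean and variance that follow the data, with each new value checked against a band around the current mean. The sketch below is one possible formulation under assumed parameters (`alpha`, `tolerance`, `warmup`); it is not a description of how any specific monitoring product works.

```python
class AdaptiveThreshold:
    """Accepts values inside a band that tracks a slowly moving baseline."""

    def __init__(self, alpha=0.2, tolerance=4.0, warmup=5):
        self.alpha = alpha          # how quickly the baseline adapts
        self.tolerance = tolerance  # band half-width in standard deviations
        self.warmup = warmup        # observations accepted before enforcing
        self.mean = None
        self.var = 0.0
        self.count = 0

    def check(self, value):
        """Return True if value is acceptable; learn only from accepted values."""
        if self.mean is None:
            self.mean = float(value)
            self.count = 1
            return True
        deviation = value - self.mean
        band = self.tolerance * self.var ** 0.5
        in_band = self.count < self.warmup or abs(deviation) <= band
        if in_band:
            # Exponentially weighted updates of mean and variance.
            self.mean += self.alpha * deviation
            self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
            self.count += 1
        return in_band


if __name__ == "__main__":
    monitor = AdaptiveThreshold()
    for v in [100, 101, 99, 100, 102, 98, 500, 100]:
        print(v, "ok" if monitor.check(v) else "flagged")
```

Because rejected values do not update the baseline, a single spike cannot widen the band. The flip side is that a genuine, abrupt level shift keeps being flagged until someone resets the monitor, which is a design choice worth making deliberately.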

How Do You Ensure Data Accuracy Through Comprehensive Quality Management?

Ensuring accuracy involves continuous strategic planning and consistent effort.

- Validate Data Upon Ingestion: Automated checks prevent bad data from entering your system.

- Establish a Data Governance Team: Define and enforce data-quality standards.

- Conduct Regular Data Audits: Periodic reviews catch inaccuracies early.

- Use Data Quality Tools: Identify and resolve incorrect, incomplete, or duplicate values.

- Train Your Staff: Educate teams on best practices and error detection.
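
The first practice in the list, validating data upon ingestion, might look like the following minimal sketch. The required fields, the deliberately loose email pattern, and the function names are assumptions chosen for the example; real rules would come from your governance standards.

```python
import re

# A loose shape check, not a full email validator.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
REQUIRED_FIELDS = ("customer_id", "email", "signup_date")  # hypothetical schema


def validate_record(record):
    """Return a list of human-readable problems; empty means the record passes."""
    errors = []
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            errors.append(f"missing {field}")
    email = record.get("email")
    if email and not EMAIL_PATTERN.match(email):
        errors.append("malformed email")
    return errors


def ingest(records):
    """Split records into accepted rows and quarantined (row, errors) pairs."""
    accepted, quarantined = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            quarantined.append((record, errors))
        else:
            accepted.append(record)
    return accepted, quarantined


if __name__ == "__main__":
    rows = [
        {"customer_id": 1, "email": "a@example.com", "signup_date": "2024-01-01"},
        {"customer_id": 2, "email": "not-an-email", "signup_date": ""},
    ]
    ok, bad = ingest(rows)
    print(len(ok), "accepted;", bad)
```

Quarantining failed rows with their error messages, rather than discarding them, gives audits and staff training concrete examples to work from.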

How Can You Measure and Validate Data Accuracy Effectively?

- Check for Missing Values, Fields, or Records: Cleanse or complete data.

- Verify That Data Is Consistent: Standardize formats (e.g., "St." vs. "Street").

- Identify Duplicate Records: Deduplicate using quality tools.

- Ensure That Data Is Up-to-date: Refresh data on a regular schedule.

- Compare Data with Trusted Sources: Cross-reference against authoritative datasets.

- Verify That Data Conforms to the Established Quality Standard: Track KPIs such as percentage of validated records.

- Data Profiling: Use data profiling tools to uncover anomalies.

- Check for Any Data Corruption or Unauthorized Modifications: Monitor logs and system integrity.

- Compare with Historical Data: Identify unexpected deviations or trends.
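
Several of the checks above (missing values, duplicates, and freshness) can be expressed as simple percentages and tracked as KPIs. The sketch below assumes records are dictionaries with a date-valued "last updated" field; the field names, the 30-day freshness window, and the sample rows are all made up for illustration.

```python
from datetime import date


def accuracy_metrics(records, key_fields, required_fields,
                     updated_field, today, max_age_days):
    """Return completeness, duplicate, and freshness percentages for records."""
    total = len(records)
    if total == 0:
        return {"completeness_pct": 0.0, "duplicate_pct": 0.0, "freshness_pct": 0.0}

    complete = sum(
        all(r.get(f) not in (None, "") for f in required_fields) for r in records
    )

    # Duplicates are matched on normalized key fields (trimmed, lowercased).
    seen, duplicates = set(), 0
    for r in records:
        key = tuple(str(r.get(f, "")).strip().lower() for f in key_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)

    fresh = sum((today - r[updated_field]).days <= max_age_days for r in records)

    return {
        "completeness_pct": 100 * complete / total,
        "duplicate_pct": 100 * duplicates / total,
        "freshness_pct": 100 * fresh / total,
    }


if __name__ == "__main__":
    customers = [
        {"email": "a@x.com", "name": "Ann", "updated": date(2024, 6, 1)},
        {"email": "A@X.com ", "name": "Ann", "updated": date(2024, 6, 10)},
        {"email": "", "name": "Bob", "updated": date(2023, 1, 1)},
        {"email": "c@x.com", "name": "Cy", "updated": date(2024, 5, 30)},
    ]
    print(accuracy_metrics(customers, ("email",), ("email", "name"),
                           "updated", date(2024, 6, 15), max_age_days=30))
```

Recording these numbers on a schedule also provides the historical series needed for the last check in the list, comparing against past data.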

Data Accuracy vs. Data Integrity

Understanding the distinction between accuracy and integrity helps organizations develop comprehensive data quality strategies. While accuracy focuses on correctness, integrity emphasizes protection from unauthorized changes and corruption.

Both concepts work together to ensure data remains reliable and trustworthy throughout its lifecycle. Organizations need strategies that address both accuracy and integrity requirements to maintain high-quality information assets.

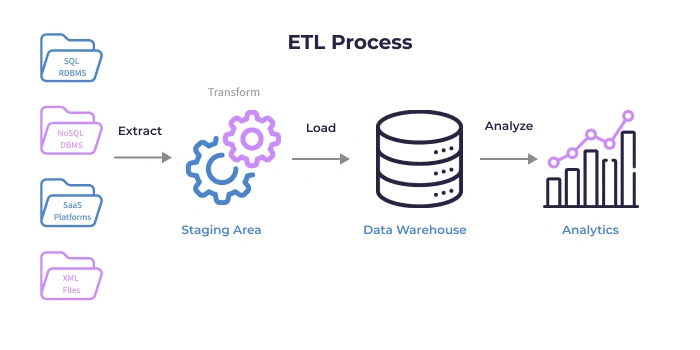

How Does Data Accuracy Apply in ETL Processing?

1. Extract

Extraction captures data from multiple sources and addresses inaccuracies early in the process. This phase provides the first opportunity to identify and resolve quality issues before they propagate through downstream processing.

2. Transform

Transformation converts and restructures data to match the destination schema while applying validation rules and quality checks. This provides opportunities to standardize formats and resolve inconsistencies between source systems.

3. Load

Loading involves moving transformed data into the destination system and verifying for errors through automated checking processes. Loading validation provides final confirmation that data meets quality standards before becoming available for business use.
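
The three stages can be sketched end to end with an accuracy check at each step. This is an in-memory illustration under assumed names and a made-up schema (`id`, `name`, `amount`), with a dictionary standing in for the destination system; it does not represent how any particular ETL tool is implemented.

```python
def extract(source_rows):
    """Separate usable rows from rows without an id, keeping both visible."""
    good = [r for r in source_rows if r.get("id") is not None]
    rejected = [r for r in source_rows if r.get("id") is None]
    return good, rejected


def transform(rows):
    """Standardize to the destination schema: clean names, amounts in cents."""
    return [
        {
            "id": r["id"],
            "name": r["name"].strip().title(),
            "amount_cents": round(float(r["amount"]) * 100),
        }
        for r in rows
    ]


def load(rows, destination):
    """Write rows keyed by id, then read back to confirm each one landed intact."""
    for row in rows:
        destination[row["id"]] = row
    missing = [row["id"] for row in rows if destination.get(row["id"]) != row]
    if missing:
        raise RuntimeError(f"load verification failed for ids: {missing}")
    return len(rows)


def run_pipeline(source_rows, destination):
    extracted, rejected = extract(source_rows)
    loaded = load(transform(extracted), destination)
    # Reconciliation: every source row is either loaded or explicitly rejected.
    if loaded + len(rejected) != len(source_rows):
        raise RuntimeError("row counts do not reconcile")
    return {"extracted": len(extracted), "rejected": len(rejected), "loaded": loaded}


if __name__ == "__main__":
    source = [
        {"id": 1, "name": " alice ", "amount": "19.99"},
        {"id": None, "name": "bob", "amount": "5"},
        {"id": 2, "name": "CAROL", "amount": "5"},
    ]
    target = {}
    print(run_pipeline(source, target))
    print(target)
```

The read-back check in `load` also catches duplicate ids within a batch, since the earlier row would have been overwritten by the later one.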

How Does Airbyte Help Ensure Accuracy for Efficient Data Integration?

Airbyte automates data movement, helping you extract data from numerous sources, apply transformations with dbt, and load into destinations without loss.

Key features:

- 600+ Built-in Connectors: Seamless integration with diverse data sources through reliable, pre-validated connections, so downstream data consumers receive accurate information.

- Connector Development Kit (CDK): Build custom connectors for unique needs while maintaining accuracy standards throughout development processes.

- Change Data Capture (CDC): Keep targets synchronized in real time with immediate accuracy validation and error detection capabilities.

- Developer-Friendly Pipelines: Use PyAirbyte within Python workflows for enhanced accuracy control and validation logic implementation.

- Data Security: SSL/TLS/HTTPS encryption, SSH tunneling, and SOC 2 Type II & ISO 27001 compliance, which help protect data from tampering or corruption in transit.

Conclusion

Prioritizing data accuracy maximizes value and drives strategic objectives. By understanding common errors and implementing robust validation processes enhanced with AI, real-time monitoring, and proactive quality assurance, organizations mitigate risk, boost efficiency, and strengthen stakeholder trust. Modern data accuracy requires comprehensive approaches that address technical, process, and organizational factors to ensure sustainable quality improvements.

Frequently Asked Questions

Are there any tools to perform data-accuracy checks?

Yes. Platforms such as Monte Carlo and Bigeye can automate data-accuracy monitoring.

What is the most effective way to ensure data accuracy during ETL?

Thorough testing throughout extraction, transformation, and loading ensures the processed data is accurate and reliable.

How can AI improve data accuracy management?

AI enables automated anomaly detection, predictive quality assurance, and intelligent validation that resolve issues faster than traditional rule-based methods.

What makes real-time data accuracy challenging?

Validation must occur within tight latency constraints while maintaining standards, requiring specialized approaches beyond batch processing.

How do you measure the business impact of data-accuracy improvements?

Track metrics like reduced investigation time, decreased error rates, improved user satisfaction, and heightened confidence in data-driven decisions.
