15 Best Data Pipeline Tools in 2026


Your organization likely handles large volumes of data every day. Valuable insights that could drive business growth remain hidden within this operational data, and uncovering them can feel like searching for a single grain of sand on a beach. That is where data pipeline tools come in.

Data pipeline tools automate the movement and preparation of data so that you can focus on gaining valuable insights and making smart decisions for your business.

However, with so many data pipeline tools available, choosing the right one takes time and effort. This article reviews 15 of the best data pipeline tools in 2026 for efficient business workflows. Explore their key features, pricing plans, and pros and cons to find the best fit for your business requirements.

Ready to turn your data into valuable insights? Let’s get started!

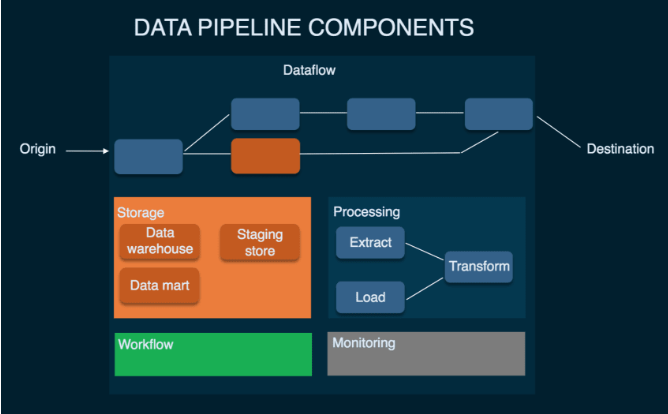

What is a Data Pipeline?

A data pipeline is a process designed to ingest data from different sources, transform it, and load it into a destination. The destination can be a data warehouse, backend database, analytics platform, or business application.

The goal of a data pipeline is to execute data collection, preprocessing, transformation, and loading tasks in a structured manner. This ensures that timely, consistent data arrives at its destination, ready for analysis, decision-making, reporting, and other business needs.
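To make the ingest, transform, and load stages concrete, here is a minimal sketch in Python using only the standard library. The sample CSV data, the `orders` table, and the function names are assumptions made for illustration; a production pipeline would read from real sources and write to a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw input: note the stray whitespace and the missing amount.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,
"""


def extract(text):
    """Ingest: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Clean: normalize customer names, cast types, drop rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append(
            (int(row["order_id"]), row["customer"].strip().lower(), float(amount))
        )
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(text, conn):
    """Run the three stages in order and return (row count, total amount)."""
    load(transform(extract(text)), conn)
    return conn.execute(
        "SELECT COUNT(*), ROUND(SUM(amount), 2) FROM orders"
    ).fetchone()


if __name__ == "__main__":
    print(run_pipeline(RAW_CSV, sqlite3.connect(":memory:")))
```

The tools reviewed below handle these same stages at scale, adding connectors, scheduling, monitoring, and error handling on top.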

Top 15 Data Pipeline Tools

Comparison Table of Top 15 Data Pipeline Tools

1. Airbyte

Airbyte is a robust AI-powered data pipeline tool designed for seamless data integration and replication. Its user-friendly no-code/low-code interface empowers you to streamline data movement between various sources and destinations.

With a vast catalog of 600+ pre-built connectors, you can easily build data pipelines. This helps you focus on extracting meaningful insights from your data, avoiding the complexities of traditional coding requirements.

Key Features of Airbyte

- Customized Connectors: If you can’t find the connector you need from the existing catalog, Airbyte lets you create a custom connector using the Connector Development Kit (CDK).

- AI-enabled Connector Development: You can utilize Airbyte’s AI Assistant in Connector Builder to speed up the development process. This AI assistant automatically pre-fills and configures key fields and offers intelligent suggestions to fine-tune your connector configuration process.

- Change Data Capture: With Airbyte’s Change Data Capture (CDC) approach, you can easily capture and synchronize data changes from the source, keeping the destination system updated with the latest modifications.

- Self-Managed Enterprise Edition: Airbyte has announced the general availability of its Self-Managed Enterprise version. This version combines centralized control of user access with self-serve data ingestion capabilities. This feature makes it easier to create secure, scalable data architectures, like multi-tenant data mesh and data fabric environments. You can operate the Enterprise edition in air-gapped environments.
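The idea behind Change Data Capture can be sketched as replaying a stream of change events against a copy of the data. The event format below (`op`, `key`, `row`) and the `apply_changes` function are hypothetical and are not Airbyte's internal format; the sketch only illustrates why applying changes is cheaper than re-copying a whole table.

```python
def apply_changes(destination, events):
    """Apply insert/update/delete events to a destination keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            destination[key] = event["row"]  # upsert the latest row version
        elif op == "delete":
            destination.pop(key, None)  # tolerate deletes for unknown keys
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return destination


if __name__ == "__main__":
    replica = {1: {"name": "Ada"}, 2: {"name": "Bob"}}
    changes = [
        {"op": "update", "key": 1, "row": {"name": "Ada L."}},
        {"op": "delete", "key": 2},
        {"op": "insert", "key": 3, "row": {"name": "Cy"}},
    ]
    print(apply_changes(replica, changes))
```

Only the three changed rows are touched, regardless of how large the source table is.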

2. Stitch Data

Stitch Data is a fully managed data integration platform with a no-code interface. It lets you swiftly move data from its 140+ data sources into a cloud-based data lake or warehouse. This simplifies the process of consolidating data from various sources, empowering you to drive valuable decisions.

Key Features of Stitch Data

- Automatic Scaling: Stitch Data’s high availability infrastructure enables you to manage billions of records daily. With automatic scaling, you can adjust to rapidly growing data volumes without concern about hardware provisioning or workload management.

- Pipeline Scheduling: You can schedule your data pipeline to run at specific intervals or in response to triggers. This ensures timely access to the most relevant data whenever required.

3. Fivetran

Fivetran is a data pipeline tool designed to automate ELT processes with a low-code interface. With its 500+ pre-built connectors, you can migrate data from SaaS applications, databases, ERPs, and files to data warehouses or data lakes. You can also create custom connectors with its function connector feature as an extension to Fivetran.

Key Features of Fivetran

- Auto Schema Mapping: When you add a new column or modify an existing one in your source data schema, Fivetran automatically recognizes the change and replicates it to the destination schema.

- Multiple Deployment Models: Fivetran provides cloud, hybrid, or on-premise deployment options to meet every business's requirements.

- Secure and Reliable: With Fivetran’s automated column hashing, column blocking, and SSH tunnels, you can protect your organization's data during migration.
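Column hashing and column blocking can be illustrated with a short sketch. This is not Fivetran's implementation: the `protect_row` function, the salted SHA-256 scheme, and the column names are assumptions chosen only to show the general technique of masking or dropping sensitive fields before they reach the destination.

```python
import hashlib


def protect_row(row, hashed_columns, blocked_columns, salt):
    """Return a copy of row with blocked columns dropped and hashed columns masked."""
    protected = {}
    for column, value in row.items():
        if column in blocked_columns:
            continue  # blocked columns never leave the source
        if column in hashed_columns:
            # A salted hash hides the raw value; equal inputs still hash
            # to equal outputs, so the column can still be used in joins.
            payload = (salt + str(value)).encode("utf-8")
            protected[column] = hashlib.sha256(payload).hexdigest()
        else:
            protected[column] = value
    return protected


if __name__ == "__main__":
    source_row = {"id": 7, "email": "ada@example.com", "ssn": "000-00-0000"}
    print(protect_row(source_row, {"email"}, {"ssn"}, salt="demo-salt"))
```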

4. Apache Airflow

Apache Airflow is an open-source platform that lets you automate and monitor the execution of data pipelines. It helps streamline the process of building and scheduling complex workflows. Airflow offers a wide range of operators for performing common integration tasks. With these operators, you can interact with various data sources and destination platforms, including Google Cloud Platform (GCP), Amazon Web Services (AWS), Microsoft Azure, and many more.

Key Features of Apache Airflow

- Directed Acyclic Graph (DAG): DAG is the core component of Airflow workflows. Defined in a Python script, DAGs allow you to visualize and define your data pipelines as a series of tasks. These tasks can be arranged with dependencies, specifying the order of execution.

- Dynamic Pipeline Generation: Because Airflow pipelines are defined in Python code, you can generate workflows dynamically, for example by looping over a list of tables or configuration entries.

- Extensibility: You can define custom operators that suit your processing requirements. Operators are Python classes that contain the logic for each data processing step.
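The way a DAG turns task dependencies into an execution order can be sketched without Airflow itself. The example below uses Python's standard `graphlib` module (Python 3.9+), not the Airflow API; `run_dag`, the task names, and the dependency mapping are hypothetical.

```python
from graphlib import TopologicalSorter


def run_dag(tasks, upstream):
    """Run each task after all of its upstream tasks; return the order used.

    tasks:    mapping of task name -> zero-argument callable
    upstream: mapping of task name -> set of task names it depends on
    """
    order = list(TopologicalSorter(upstream).static_order())
    for name in order:
        tasks[name]()
    return order


if __name__ == "__main__":
    log = []
    tasks = {
        "extract": lambda: log.append("pulled rows"),
        "transform": lambda: log.append("cleaned rows"),
        "load": lambda: log.append("wrote rows"),
    }
    # transform waits for extract; load waits for transform.
    upstream = {"transform": {"extract"}, "load": {"transform"}}
    print(run_dag(tasks, upstream), log)
```

If the dependencies contain a cycle, `static_order()` raises `graphlib.CycleError`, which is why such workflows must be acyclic. Airflow adds scheduling, retries, and monitoring on top of this ordering idea.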

5. Hevo Data

Hevo Data is a data integration and replication solution that helps you move data from various sources to a target system. It enables you to collect data from 150+ data sources, such as SaaS applications or databases, and load it to over 15 destinations utilizing its library of pre-built connectors. Hevo Data also offers a no-code interface, making it user-friendly for those without extensive coding skills.

Key Features of Hevo Data

- Real-time Replication: With Hevo’s incremental data load technique, you can keep your target systems up-to-date, ensuring quick analysis.

- Data Transformation: Hevo Data offers analyst-friendly data transformation approaches, such as Python-based scripts or drag-and-drop transformation blocks. These approaches help you clean, prepare, and transform data before loading it into the destination.

- Automatic Schema Mapping: Its auto-mapping feature eliminates the tedious task of manual schema management, automatically recognizing and replicating the source schema to the destination schema.
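An incremental load, as mentioned under Real-time Replication, copies only the rows that changed since the previous run. The sketch below assumes each source row carries a numeric `updated_at` field used as a cursor; `incremental_load` and the field names are hypothetical and do not reflect Hevo's internals.

```python
def incremental_load(source_rows, destination, cursor):
    """Copy rows newer than cursor into destination; return the new cursor."""
    new_cursor = cursor
    for row in source_rows:
        if row["updated_at"] > cursor:
            destination[row["id"]] = row  # upsert by primary key
            new_cursor = max(new_cursor, row["updated_at"])
    return new_cursor


if __name__ == "__main__":
    source = [
        {"id": 1, "updated_at": 100, "status": "new"},
        {"id": 2, "updated_at": 205, "status": "paid"},
    ]
    target = {}
    cursor = incremental_load(source, target, cursor=0)    # first run copies both rows
    source[0] = {"id": 1, "updated_at": 310, "status": "shipped"}
    cursor = incremental_load(source, target, cursor)       # second run copies only row 1
    print(cursor, target)
```

Persisting the returned cursor between runs is what lets each sync skip unchanged rows.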

6. Apache Kafka

Apache Kafka is an open-source distributed event streaming platform. Its central component is the publish-subscribe model, which moves data from publishers to subscribers in real time. Apache Kafka allows you to build high-performance real-time data pipelines within your organization. It also helps you conduct streaming analytics, perform data integration, and support mission-critical applications.

Key Features of Apache Kafka

- High Scalability: You can easily scale the Kafka cluster horizontally by adding more brokers to the cluster. This allows you to manage petabytes of data and handle trillions of messages daily. Additionally, you can scale up or down by scaling storage and processing resources as needed.

- High Throughput: With Apache Kafka, you can deliver messages at network-limited throughput across a cluster of machines, with latencies as low as two milliseconds. This efficiency makes Kafka suitable for real-time data pipelines.

- Permanent Storage: You can securely store data streams in a distributed, fault-tolerant, and durable cluster. This keeps data highly available, even during hardware failures or network issues.
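The publish-subscribe model and retained storage described above can be sketched with a tiny in-memory broker. `MiniBroker`, `publish`, and `poll` are hypothetical names, not the Kafka client API; the sketch only shows that messages are appended to a log per topic and that each consumer group tracks its own read position.

```python
from collections import defaultdict


class MiniBroker:
    """In-memory stand-in for a broker with append-only topics."""

    def __init__(self):
        self._topics = defaultdict(list)  # topic -> append-only message log
        self._offsets = defaultdict(int)  # (group, topic) -> next offset to read

    def publish(self, topic, message):
        """Append a message to the topic log and return its offset."""
        self._topics[topic].append(message)
        return len(self._topics[topic]) - 1

    def poll(self, group, topic, max_messages=10):
        """Return unread messages for this group and advance its offset."""
        start = self._offsets[(group, topic)]
        batch = self._topics[topic][start:start + max_messages]
        self._offsets[(group, topic)] = start + len(batch)
        return batch


if __name__ == "__main__":
    broker = MiniBroker()
    for event in ("order-1", "order-2", "order-3"):
        broker.publish("orders", event)
    print(broker.poll("billing", "orders"))    # billing reads all three
    print(broker.poll("billing", "orders"))    # nothing new for billing
    print(broker.poll("analytics", "orders"))  # analytics still sees all three
```

Because messages stay in the log after being read, a new consumer group can replay the full history independently of other groups.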

7. AWS Glue

AWS Glue is a cloud-based integration service that streamlines data preparation for faster analysis. It supports 70 different data sources, from which you can extract data and consolidate it into a centralized system with a manageable catalog. With AWS Glue, you can build, monitor, and execute ETL pipelines visually or through code.

Key Features of AWS Glue

- Data Catalog: AWS Glue Data Catalog serves as a central repository that allows you to store metadata in tables for your organization’s datasets. You can use it as an index to the location and schema of your data sources.

- Data Quality: AWS Glue utilizes ML-powered anomaly detection algorithms to help you identify inconsistencies, errors, or unexpected patterns within your data. By proactively resolving complex data quality anomalies, you can ensure accurate business decisions.

- Interactive Sessions: AWS Glue provides interactive sessions that allow you to work with data directly within the AWS environment. You can integrate, explore, and prepare data with your preferred tools like Jupyter Notebook.

8. Matillion

Matillion is a cloud platform designed to streamline data integration needs. It caters to various data processing needs, such as ETL, ELT, reverse ETL, and many more. With its extensive library of 100+ connectors, Matillion allows you to effortlessly extract, transform, and load data to your destination. For performing basic to complex transformations, you can either integrate with dbt or use SQL or Python.

Key Features of Matillion

- Intuitive Interface: Matillion provides a no-code/low-code interface to create powerful data pipelines within minutes. You can smoothly integrate data into a cloud data warehouse using its user-friendly drag-and-drop feature.

- Data Lineage Tracking: In Matillion, you can improve your understanding of data flow by tracing data lineage back to its source. This enables you to identify and resolve issues quickly.

9. Google Cloud Dataflow

Dataflow is a fully managed and serverless service for stream and batch data processing tasks. It can help train, deploy, and manage complete machine learning pipelines. In addition, Dataflow simplifies data processing by allowing you to easily share your data processing workflows with team members and across your organization using Dataflow templates.

Key Features of Google Cloud Dataflow

- Horizontal Autoscaling: The Dataflow service can automatically select the required number of worker instances for your job. The service can also dynamically scale up or down during runtime based on the job’s characteristics.

- Dataflow Shuffle Service: The shuffle operation, which groups and joins data, normally runs on worker virtual machines. For batch pipelines, it can move to the Dataflow service back end, allowing scaling to hundreds of terabytes without tuning.

- Dataflow SQL: You can apply your SQL skills by writing SQL queries directly within the BigQuery web UI to create simpler processing operations on batch and streaming data.

10. Azure Data Factory

Azure Data Factory is a serverless data integration service offered by Microsoft. It allows you to integrate all your data sources using over 90 pre-built connectors at no additional maintenance cost. You can then load the integrated data into Azure Synapse Analytics, a robust data analytics platform, to drive business insights.

Key Features of Azure Data Factory

- Code-free Data Flows: ADF utilizes a drag-and-drop interface and a fully managed Apache Spark service to handle data transformation requirements without writing code.

- Monitoring Pipelines: With Azure Data Factory, you can visually track all pipeline activity and improve operational efficiency. You can also configure alerts so that you are notified promptly when a pipeline needs attention.

11. Talend

Talend is a powerful data integration and management platform that supports both cloud and on-premise deployment. It offers strong data governance, quality, and cleansing tools, making it ideal for enterprise environments. Talend provides open-source and commercial editions and supports both batch and real-time processing.

Key Features of Talend

- Unified platform for data integration, quality, and governance.

- Drag-and-drop interface and native support for Java code.

- Pre-built components and connectors for databases, APIs, and cloud systems.

- Built-in data profiling, masking, and lineage capabilities.

12. Segment

Segment is a customer data platform (CDP) designed to collect, clean, and route first-party customer data to analytics and marketing tools. It simplifies event tracking and makes customer data easily accessible for marketing and product teams.

Key Features of Segment

- Unified API for cross-platform customer event tracking.

- Real-time data collection and routing.

- Over 300 built-in integrations with analytics, CRM, and data warehouses.

- GDPR and CCPA compliance, with strong data governance controls.

13. IBM DataStage

IBM DataStage is an enterprise ETL tool designed for building and managing data pipelines across large-scale systems. It's built for high-performance workloads and complex data transformations, particularly in regulated industries.

Key Features of IBM DataStage

- Parallel processing architecture for scalability.

- Visual job designer for ETL orchestration.

- Supports real-time, batch, and hybrid workloads.

- Deep integration with IBM Cloud Pak for Data and governance modules.

14. Meltano

Meltano is an open-source data pipeline tool that uses the Singer specification (taps and targets) to extract and load data. It is geared toward developers who want pipeline version control, testing, and CI/CD integration.

Key Features of Meltano

- CLI-based workflows with Git-native version control.

- Based on the Singer open-source ecosystem for connectors.

- Ideal for developer-first data teams.

- Supports dbt, Airflow, Great Expectations, and other tools.
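The tap-and-target pattern from the Singer specification can be sketched in a few lines. A tap emits JSON messages of type `SCHEMA`, `RECORD`, and `STATE`, and a target consumes them. In practice taps and targets are separate programs connected through standard output and input; here they are plain Python functions, and `tap_users`, `target_memory`, and the `users` stream are hypothetical names.

```python
import json


def tap_users(rows):
    """Yield Singer-style JSON lines describing and carrying the rows."""
    yield json.dumps({
        "type": "SCHEMA",
        "stream": "users",
        "schema": {
            "type": "object",
            "properties": {"id": {"type": "integer"}, "email": {"type": "string"}},
        },
        "key_properties": ["id"],
    })
    for row in rows:
        yield json.dumps({"type": "RECORD", "stream": "users", "record": row})
    if rows:
        # STATE lets the next run resume from where this one stopped.
        yield json.dumps({"type": "STATE", "value": {"users": {"last_id": rows[-1]["id"]}}})


def target_memory(lines):
    """Consume Singer-style lines; return (tables, last state)."""
    tables, keys, state = {}, {}, None
    for line in lines:
        message = json.loads(line)
        if message["type"] == "SCHEMA":
            keys[message["stream"]] = message["key_properties"]
            tables.setdefault(message["stream"], {})
        elif message["type"] == "RECORD":
            stream, record = message["stream"], message["record"]
            key = tuple(record[name] for name in keys[stream])
            tables[stream][key] = record  # upsert by primary key
        elif message["type"] == "STATE":
            state = message["value"]
    return tables, state


if __name__ == "__main__":
    users = [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": "b@example.com"}]
    print(target_memory(tap_users(users)))
```

Because every tap and target speaks the same message format, any tap can be paired with any target, which is what makes the connector ecosystem composable.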

15. Informatica PowerCenter

Informatica PowerCenter is a comprehensive enterprise data integration platform. It provides a suite of services for data warehousing, data governance, data quality, and big data management. It's widely used in enterprises for mission-critical workloads.

Key Features of Informatica PowerCenter

- Advanced metadata management and data lineage.

- Robust scheduler and job monitoring features.

- Built-in connectors for a wide range of sources.

- Drag-and-drop mapping designer with transformation library.

Why Does Your Company Need a Data Pipeline Tool?

- Maximize Efficiency: Automates manual tasks so teams can focus on strategic goals rather than data movement.

- High Data Quality and Consistency: Cleanses and standardizes data during transfer to improve decision-making.

- High Scalability: Automatically scales with your data volume as your business grows.

- Centralized Access: Consolidates disparate data into a centralized platform for easier access and control.

Types of Data Pipeline Tools

Final Thoughts

You have now explored the top 15 data pipeline tools. These tools cater to various integration and replication requirements, offering functionalities for different processing needs, data volumes, and deployment preferences.

Choosing the right data pipeline tool is important for simplifying data integration according to your business needs. Consider factors like ease of use, required technical expertise, scalability, security features, and cost when making your choice.

FAQs

1. What is the difference between data pipeline tools and ETL tools?

While both serve similar purposes, data pipeline tools support a broader range of tasks including real-time streaming, data replication, and reverse ETL. ETL tools focus more narrowly on Extract, Transform, and Load operations in batch mode.

2. Which data pipeline tool is best for real-time streaming?

If your use case demands real-time capabilities, Apache Kafka, Airbyte (with CDC), and Hevo Data are excellent choices. Kafka, in particular, is optimized for high-throughput, low-latency streaming data.

3. Are open-source data pipeline tools reliable for enterprise use?

Yes, tools like Airbyte, Apache Airflow, and Meltano are open-source yet powerful. Many enterprises use them due to their flexibility, customizability, and large community support. However, they may require more technical setup and infrastructure management.

4. What should I consider when choosing a data pipeline tool?

When selecting a data pipeline tool, it’s important to evaluate several aspects based on your business needs. Consider the availability of pre-built connectors for your data sources and destinations, the deployment model that suits your infrastructure (cloud, on-premise, or hybrid), and the ease of use—whether it offers a drag-and-drop UI or requires coding. Also, factor in support for real-time vs. batch processing, scalability to handle increasing data volumes, and the level of support or documentation provided. These elements together will help you find a solution that aligns with your technical resources and integration goals.

5. Do any of these tools support low-code or no-code interfaces?

Yes. Tools like Airbyte, Hevo Data, Stitch Data, Matillion, and Azure Data Factory offer no-code/low-code interfaces, making them ideal for teams without extensive engineering resources.
