12 Best Open-Source Data Orchestration Tools in 2026


Data orchestration coordinates the data workflows of your organization to ensure seamless integration, transformation, and movement of data across disparate systems or environments. It reduces your workload through automation, eliminates data silos, enhances scalability, and enables efficient error management. Thus, data orchestration is crucial in data management for improved operational efficiency and insights.

Additionally, data orchestration provides complete visibility into data workflows, enhancing oversight and control.

In this guide, you will learn about 12 of the best free, open-source data orchestration tools you can use in 2026 to streamline your data workflows.

What is Data Orchestration?

Data orchestration is the automated process of gathering, transforming, and consolidating siloed data from multiple sources for effective data analytics. It allows you to streamline data workflows, eliminate any discrepancies in your data, and implement an efficient data governance framework. This ensures the availability of reliable data for making informed business decisions.

Various data orchestration tools can help automate your data workflows effortlessly. Such tools can reduce your workload by coordinating the repetitive tasks performed during data movement, optimizing the data flow according to your requirements, and managing workflow orchestration to ensure efficient process execution. These tools facilitate data transformation, ensuring data is in a usable format for analysis.

Definition and Purpose

Data orchestration is the practice of managing and coordinating data flow across various systems and activities. It involves integrating, transforming, and coordinating data from multiple sources to provide a unified view.

Data orchestration primarily organizes complex data in a cloud environment, ensuring data is consistent, accessible, and ready for analysis. By orchestrating data, organizations can streamline their data processes, reduce manual intervention, and ensure that data is available in a usable format for downstream tools and applications. This enhances data quality and enables more efficient data management and utilization.

12 Open Source Data Orchestration Tools

Here is a list of the best open-source tools you can use to orchestrate your data. These tools help create workflows that automate and manage end-to-end processes efficiently.

1. Apache Airflow

Apache Airflow is a popular open-source data orchestration tool written in Python. It schedules and automates data pipelines using DAGs (Directed Acyclic Graphs). A DAG is a collection of tasks that Airflow executes in a specific order.

Airflow’s simple user interface makes it easy for you to visualize your pipelines in production, monitor their progress as they run, and troubleshoot any issues that may arise. It also supports scripting, enabling you to incorporate complex logic required to orchestrate data across various systems and environments. Developers can initiate workflows using a single command, enhancing productivity. Furthermore, Airflow allows for parallel scaling of your data orchestration workflows using executors like Kubernetes or Celery. Airflow can also integrate shell scripts as part of its job management framework.
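To make the DAG idea concrete, here is a minimal sketch that uses only Python's standard library rather than Airflow's own API. The task names and the `execution_order` helper are hypothetical; the point is simply that a DAG fixes a valid run order and rejects cycles.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}


def execution_order(deps):
    """Return one valid run order; raises CycleError if deps contain a cycle."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)  # "extract" comes first and "load" comes last

# A cyclic graph is not a DAG, so no run order exists.
try:
    execution_order({"a": {"b"}, "b": {"a"}})
except CycleError:
    print("cycle detected")
```

A real orchestrator adds scheduling, retries, and distributed execution on top of this ordering step.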

2. Dagster

Dagster is a robust open-source data orchestration tool inspired by Apache Airflow. It is designed to facilitate the development and management of data pipelines. Dagster offers functionalities for monitoring tasks, debugging runs, inspecting data assets, and launching backfills.

The dependencies and execution parameters in Dagster allow you to handle complex workflows. To simplify orchestration further, Dagster introduced the concept of software-defined assets. It is a declarative approach where assets are defined in code, detailing their expected functionalities and typically referencing objects or files in persistent storage.

Dagster's software-defined assets turn a DAG of tasks into a graph of interconnected assets; you define the relationships between assets, which makes the orchestration easier to follow. You can define and run these pipelines from the Dagster command line or from the Dagster web UI (formerly called Dagit).
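The asset-centric idea can be sketched in plain Python. This is not Dagster's API: the `asset` decorator, `ASSETS` registry, and `materialize` function below are hypothetical stand-ins, written under the assumption that an asset's upstream dependencies are named by its function parameters.

```python
import inspect

ASSETS = {}  # asset name -> function that computes it


def asset(fn):
    """Register a function as an asset; its parameter names are its upstream assets."""
    ASSETS[fn.__name__] = fn
    return fn


@asset
def raw_orders():
    # Hypothetical source data.
    return [{"id": 1, "amount": 40}, {"id": 2, "amount": 60}]


@asset
def order_total(raw_orders):
    return sum(row["amount"] for row in raw_orders)


def materialize(name, cache=None):
    """Compute an asset after recursively computing the assets it depends on."""
    cache = {} if cache is None else cache
    if name not in cache:
        fn = ASSETS[name]
        upstream = inspect.signature(fn).parameters
        cache[name] = fn(**{dep: materialize(dep, cache) for dep in upstream})
    return cache[name]


print(materialize("order_total"))  # 100
```

Because the dependency graph is derived from the asset definitions themselves, there is no separate wiring step to keep in sync with the code.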

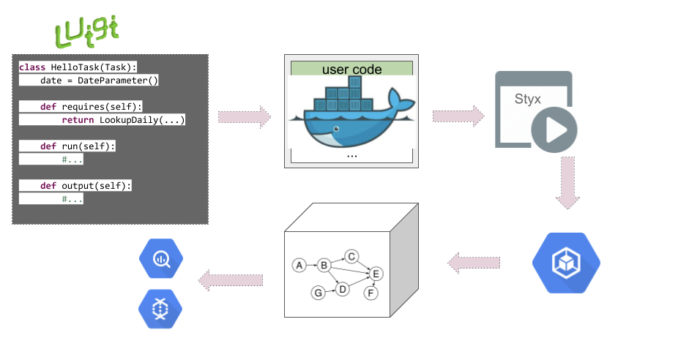

3. Luigi

Spotify developed Luigi, a Python-based data orchestration tool. It helps you build data pipelines by managing task dependencies, offering visualization, and ensuring robust workflow execution and failure handling. Luigi also supports command-line integration and provides a web interface to visualize your data pipelines effectively.

With Luigi, you can seamlessly integrate various tasks, like Hadoop jobs, Hive queries, or even local data processing tasks. Luigi utilizes dependency graphs to ensure that tasks are executed in dependency order, which can include recursive task references. Additionally, Luigi's jobs API facilitates the scheduling, creation, and monitoring of tasks within data workflows. At Spotify, Luigi has powered workloads such as A/B test analysis, internal dashboards, recommendations, and external reports.
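The dependency-resolution pattern described above can be sketched without Luigi itself. The `Task` base class and `build` function here are hypothetical stand-ins that borrow the general shape of a file-based task (declare upstream tasks, declare an output, run), on the assumption that a task counts as done once its output file exists.

```python
import os
import tempfile


class Task:
    """Tiny stand-in for a file-based task: done when its output file exists."""

    def __init__(self, workdir):
        self.workdir = workdir

    def requires(self):
        return []

    def output(self):
        raise NotImplementedError

    def run(self):
        raise NotImplementedError

    def complete(self):
        return os.path.exists(self.output())


class Extract(Task):
    def output(self):
        return os.path.join(self.workdir, "raw.txt")

    def run(self):
        with open(self.output(), "w") as f:
            f.write("3\n4\n5\n")


class Summarize(Task):
    def requires(self):
        return [Extract(self.workdir)]

    def output(self):
        return os.path.join(self.workdir, "sum.txt")

    def run(self):
        with open(self.requires()[0].output()) as f:
            total = sum(int(line) for line in f)
        with open(self.output(), "w") as f:
            f.write(str(total))


def build(task, ran=None):
    """Run upstream tasks first; skip any task whose output already exists."""
    ran = [] if ran is None else ran
    if task.complete():
        return ran
    for dep in task.requires():
        build(dep, ran)
    task.run()
    ran.append(type(task).__name__)
    return ran


workdir = tempfile.mkdtemp()
first = build(Summarize(workdir))   # runs Extract, then Summarize
second = build(Summarize(workdir))  # nothing to do: outputs already exist
print(first, second)
```

Treating "output exists" as "task complete" is what makes reruns cheap: after a failure, only the missing pieces are rebuilt.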

4. Prefect

Prefect is a popular open-source data pipeline orchestration tool that uses Python to automate data pipeline construction, monitoring, and management. It supports complex data workflows with scheduling, caching, retries, logging, event-based orchestration, and observability.

Prefect’s architecture consists of two components: the execution layer and the orchestration layer. The execution layer runs tasks using agents and flows. Agents are polling services that check for scheduled work and execute flow runs accordingly.

Flows are defined functions that set up the tasks for execution, allowing you to specify task dependencies and triggers. The orchestration layer, on the other hand, manages and monitors data workflows. It uses the Orion API server, REST API services, and Prefect’s UI to execute operations efficiently. Workflows built with Prefect can support use cases such as providing real-time access to customer data, flagging risky transactions, and supporting compliance checks.
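Retries are one of the features mentioned above that are easy to picture in code. The sketch below is plain Python, not Prefect's API: `with_retries` and `flaky_fetch` are hypothetical names, and the decorator only illustrates the general retry-on-failure behavior an orchestrator provides.

```python
import functools
import time


def with_retries(retries=3, delay_seconds=0.0):
    """Re-run a failing function up to `retries` extra times before giving up."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            for attempt in range(retries + 1):
                try:
                    return fn(*args, **kwargs)
                except Exception:
                    if attempt == retries:
                        raise  # out of attempts: surface the last error
                    time.sleep(delay_seconds)
        return wrapper
    return decorator


calls = {"count": 0}


@with_retries(retries=2)
def flaky_fetch():
    """Hypothetical task that fails twice, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "payload"


result = flaky_fetch()
print(result, calls["count"])  # payload 3
```

In practice you would usually add a non-zero delay or backoff so that a struggling upstream system has time to recover between attempts.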

5. Kestra

Kestra is an open-source data orchestration platform for creating and managing complex data flows. Its user-friendly interface lets you build tailored workflows without extensive programming. Kestra allows users to create flexible workflows that adapt to varying complexities and needs.

Kestra uses YAML, a declarative, human-readable format, to outline the sequence of tasks and their dependencies. This approach keeps workflows easy to understand and maintain.

6. Argo

Argo is an open-source container-native data orchestration tool. It runs on Kubernetes, a container orchestration system for automating software deployment, scaling, and management.

Argo is implemented as a Kubernetes Custom Resource Definition (CRD), and each step in a workflow runs in its own container. It also enables you to model workflows as DAGs. Using DAGs, you can capture dependencies between multiple tasks and define execution sequences.

7. Flyte

Flyte is an open-source data orchestration tool for building robust and reusable data pipelines. It supports built-in multitenancy, which allows decentralized pipeline development without affecting the rest of the platform. This feature enhances scalability, collaboration, and efficient pipeline management.

Flyte enables automation across multiple data environments, providing a comprehensive solution for data management in data-driven corporations.

One of Flyte’s key features is its versioning capability, which allows you to experiment with data pipelines within a centralized infrastructure. This eliminates the need for complex workarounds typically associated with version control in pipeline development. It also provides user-defined parameters, caching, data lineage tracking, and ML orchestration capabilities to enhance workflow development.

8. Mage

Mage is an open-source data orchestration tool known for its hybrid framework, which simplifies building data pipelines for data integration and transformation tasks. Mage allows you to integrate and synchronize data from third-party sources, and you can build real-time and batch pipelines using Python, SQL, and R.

Mage also integrates with data analysis tools to make transformed data accessible for analysis, enabling effective data collection and the derivation of business insights.

The interactive notebook UI of Mage provides instant feedback on the output of your code. The files containing code in Mage are called blocks, which can be executed independently or in the pipeline. Each block of code produces a “data product” when executed. Blocks combine to form DAGs. A pipeline in Mage is a collection of these blocks of code and charts for visualization.

9. Shipyard

Shipyard is a data orchestration tool known for its user-friendly features that simplify data sharing. It offers functionalities like on-demand triggers, automatic scheduling, and built-in notifications to automate data workflow execution. The platform has a visual interface that allows you to build data workflows, eliminating the need to write extensive code for basic workflows. Shipyard also gives data practitioners a common platform on which to collaborate, which streamlines processes and improves productivity.

Shipyard has built-in observability for monitoring your workflows, retry functionalities for failed tasks, and alerting features for quick issue notification. Additionally, Shipyard offers automated scaling to handle fluctuating workloads and end-to-end encryption capabilities for secure data processing.

10. Apache NiFi

Apache NiFi is an open-source data orchestration tool known for its user-friendly interface. This interface simplifies designing data flows, making it particularly suitable for scenarios involving diverse data sources. NiFi simplifies organizing data from various sources, creating a unified inventory that facilitates better insights and data-driven decision-making.

NiFi enables data routing, transformation, and system mediation logic within workflows. These functionalities allow you to develop and deploy data pipelines that automate data movement between different systems. NiFi also supports a variety of data protocols, allowing you to work with many kinds of data sources. As a result, Apache NiFi has become a go-to choice for data orchestration tasks.

11. MLRun

MLRun is an open-source data orchestration tool for managing machine learning-based data pipelines or workflows. Built on a Python-based framework, it enables complete workflow management and allows you to orchestrate a massive amount of data through its elastic scaling features.

It also enables you to track, automate, and deploy pipelines to access and integrate data stored across various repositories. Additionally, MLRun can manage and process real-time data effectively, making it highly adaptable for dynamic data scenarios. Thus, MLRun significantly reduces the time, resources, and workload of deploying machine learning-based pipelines.

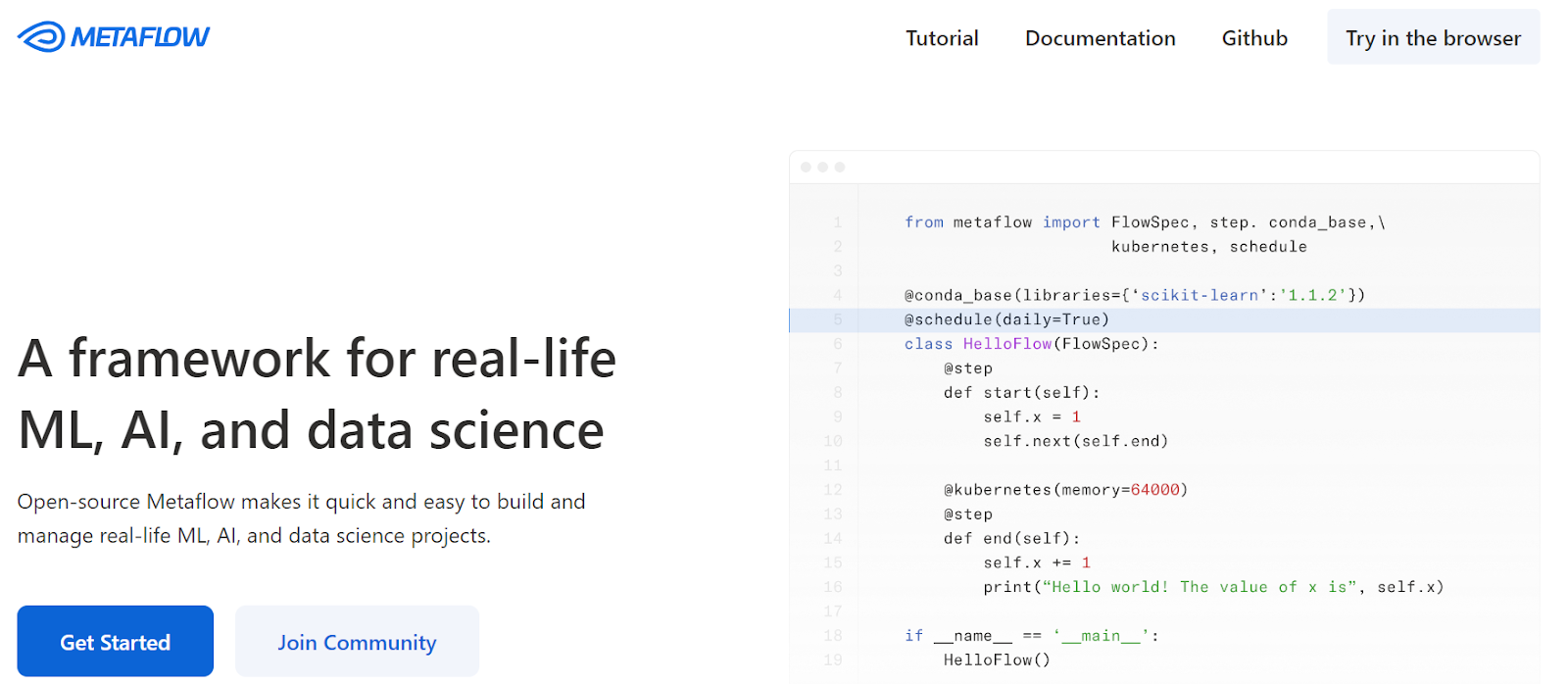

12. Metaflow

Metaflow is an open-source data orchestration tool developed at Netflix. It is designed to build and manage complex data workflows. Metaflow uses a dataflow programming paradigm and represents programs as directed graphs termed “flows.” These flows consist of operations or steps, which can be organized in multiple ways, including linear sequences, branches, and dynamic iterations.

A key feature of Metaflow is its handling of “artifacts,” or data objects created during the execution of a flow. Artifacts simplify data management, ensuring automatic persistence and availability of data across the different steps of the workflow.
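A rough way to picture artifacts is as snapshots of a flow's state taken after each step. The sketch below is plain Python, not Metaflow's API: `MiniFlow` and `run_flow` are hypothetical, and pickling to an in-memory dictionary stands in for writing to a real datastore.

```python
import pickle


class MiniFlow:
    """Hypothetical flow: attributes set in a step are saved as artifacts."""

    def start(self):
        self.numbers = [1, 2, 3]

    def double(self):
        self.doubled = [n * 2 for n in self.numbers]

    def end(self):
        self.total = sum(self.doubled)


def run_flow(flow, step_names):
    """Run steps in order and snapshot the flow's attributes after each one."""
    snapshots = {}
    for name in step_names:
        getattr(flow, name)()
        # Pickling stands in for persisting artifacts to a datastore.
        snapshots[name] = pickle.dumps(dict(vars(flow)))
    return snapshots


snapshots = run_flow(MiniFlow(), ["start", "double", "end"])
after_double = pickle.loads(snapshots["double"])
print(after_double)  # {'numbers': [1, 2, 3], 'doubled': [2, 4, 6]}
```

Keeping a snapshot per step means you can inspect what any step produced after the run has finished, without rerunning the flow.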

Streamline Data Workflows With Airbyte

Data orchestration becomes more efficient when data resides in a centralized location. Consolidating data from multiple sources, or data integration, plays a crucial role in achieving this. Consider using Airbyte, an AI-powered cloud-based data integration platform, for effortless data integration.

Airbyte offers a rich library of 600+ pre-built connectors to automate your data pipeline creation. And, if you cannot find a connector of your choice, you can build customized ones using AI-enabled Connector Builder or the connector development kit (CDK). In Connector Builder, the AI-assist feature scans through the API documentation of your preferred platform, simplifying the development of custom connectors. These connectors play an important role in data integration by establishing a seamless connection between your data sources and your target system.

Some of the ways in which Airbyte can aid data orchestration include:

- Seamless Data Ingestion: Airbyte provides an easy-to-use interface for building data pipelines. This simplifies data ingestion from various sources without requiring extensive coding expertise.

- Change Data Capture: Airbyte's CDC feature identifies and captures incremental changes in your source data and replicates them in the target system, so you do not have to reload entire datasets.

- PyAirbyte: With Airbyte, you can take advantage of its open-source Python library, PyAirbyte. It allows you to programmatically extract data from Airbyte-supported connectors into SQL caches, which can then be transformed using Python libraries. Once the data is analysis-ready, you can use a supported destination connector to move your datasets.

- GenAI Workflow Support: Integrating Airbyte with LLM frameworks like LangChain facilitates automated RAG transformations, such as chunking and indexing. These transformations convert raw data into vector embeddings that can be stored in popular vector databases, including Qdrant, Pinecone, and Milvus. Migrating data into a vector database allows you to streamline GenAI workflows.

- Vast Data and AI Community: Airbyte supports a large community of data practitioners and developers. Over 20,000 individuals actively engage and collaborate with others within the community to build and extend Airbyte features.
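To illustrate the change data capture idea from the list above, here is a minimal sketch in plain Python. It does not use Airbyte or PyAirbyte; the event format (`op`, `id`, `row`) and the `apply_changes` helper are assumptions made for the example.

```python
def apply_changes(target, events):
    """Apply ordered change events to a target table keyed by primary key."""
    for event in events:
        key = event["id"]
        if event["op"] == "delete":
            target.pop(key, None)
        else:  # "insert" and "update" both upsert the latest row
            target[key] = event["row"]
    return target


# Hypothetical target table and captured changes from the source.
target = {1: {"name": "Ada"}, 2: {"name": "Bob"}}
events = [
    {"op": "update", "id": 2, "row": {"name": "Robert"}},
    {"op": "insert", "id": 3, "row": {"name": "Cy"}},
    {"op": "delete", "id": 1},
]

print(apply_changes(target, events))
# {2: {'name': 'Robert'}, 3: {'name': 'Cy'}}
```

Only the three changed rows are processed; the rest of the table is never re-read, which is the main appeal of incremental replication.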

Benefits of Using Data Orchestration Tools

Using data orchestration tools can greatly optimize your data workflows. Some of their benefits include:

- Workflow Automation: Data orchestration automates various tasks within data workflows. This can save significant time and reduce the workload of resources, enabling you to focus on high-value tasks to optimize your organization’s output. Implementing code best practices can lead to dependable processes and seamless integration.

- Eliminates Data Silos: Data orchestration aids in data integration and transformation. This results in the consolidation and standardization of your data, thereby eliminating data silos.

- Monitoring: Data orchestration tools can help you monitor the status and progress of your data workflows. By tracking workflows, you can identify and resolve issues quickly. These tools provide more control over data workflows, allowing for efficient error management and scalability.

Improved Data Quality and Efficiency

Data orchestration significantly improves data quality by automating the cleansing and transformation processes. This automation ensures that data is consistent and standardized across the organization, reducing the risk of human error and data quality issues.

By streamlining data workflows, data orchestration tools save time and resources, allowing data teams to focus on high-value tasks such as data analysis and strategic decision-making. The automated process of managing data pipelines also enhances operational efficiency, enabling organizations to process large volumes of data quickly and accurately. This leads to more reliable data assets that can be leveraged for various business intelligence and analytics purposes.

Better Decision-Making and Business Processes

Data orchestration enables better decision-making by providing accurate and valuable data-driven insights. By automating data workflows and reducing manual errors, data orchestration improves the efficiency of business processes.

It ensures that data is readily available and in a standard format, allowing organizations to make informed decisions based on reliable data. This unified view of data helps identify trends, patterns, and opportunities, driving business growth and innovation. By leveraging data orchestration, organizations can enhance their decision-making capabilities, optimize their operations, and stay competitive in a data-driven world.

Common Data Orchestration Challenges

While data orchestration is essential for coordinating modern data pipelines, it’s not without its complexities. Here are some of the most common challenges data teams face when orchestrating workflows across systems and stages:

1. Pipeline Complexity and Sprawl

As organizations scale, so do their pipelines. With dozens—or even hundreds—of data sources, dependencies, and transformation steps, orchestration logic can quickly become tangled and difficult to manage. Without proper structure, teams risk creating brittle systems that are hard to debug or extend.

2. Managing Dependencies Across Tools

Modern data stacks often involve a patchwork of tools: ingestion platforms, transformation engines like dbt, cloud storage layers, and data warehouses. Coordinating tasks across this ecosystem requires precise dependency management. A delay or failure in one step can cause cascading issues downstream if not properly accounted for.
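One common way to contain a cascading failure is to skip everything downstream of a failed step while letting unrelated branches continue. The sketch below shows that behavior in plain Python; the task names, the `run_pipeline` helper, and the status labels are hypothetical and not taken from any specific orchestrator.

```python
from graphlib import TopologicalSorter


def run_pipeline(deps, tasks):
    """Run tasks in dependency order; skip anything downstream of a failure."""
    status = {}
    for name in TopologicalSorter(deps).static_order():
        if any(status[up] != "success" for up in deps.get(name, ())):
            status[name] = "upstream_failed"
            continue
        try:
            tasks[name]()
            status[name] = "success"
        except Exception:
            status[name] = "failed"
    return status


def fail():
    raise RuntimeError("warehouse unavailable")


deps = {
    "ingest": set(),
    "transform": {"ingest"},
    "report": {"transform"},
    "archive": {"ingest"},
}
tasks = {
    "ingest": lambda: None,
    "transform": fail,
    "report": lambda: None,
    "archive": lambda: None,
}

status = run_pipeline(deps, tasks)
print(status)
```

Here `report` is skipped because `transform` failed, while `archive` still runs because it depends only on `ingest`.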

3. Lack of Visibility and Monitoring

Data teams often struggle to monitor the real-time status of their pipelines. When something breaks, it’s not always obvious where or why. Issues can go undetected without detailed logging, alerting, and lineage tracking, leading to data quality problems or missed SLAs.

4. Handling Dynamic or Unpredictable Workloads

Not all data flows are predictable. Some sources update irregularly, while others generate massive volumes with little warning. Orchestrating pipelines that can flexibly respond to changing inputs, without over-provisioning resources or breaking, requires advanced logic and dynamic scheduling capabilities.

5. Data Freshness vs. Cost Tradeoffs

Balancing near-real-time updates with infrastructure cost is an ongoing challenge. High-frequency orchestration improves data freshness, but increases compute usage and complexity. Teams must carefully choose where real-time updates are necessary—and where batch processing suffices.
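The tradeoff is easy to estimate with back-of-the-envelope arithmetic. In the sketch below, every number (run duration, price per compute minute, schedule intervals) is a made-up placeholder, so substitute your own figures.

```python
def daily_cost(interval_minutes, run_minutes, price_per_compute_minute):
    """Estimate daily compute cost for a pipeline run on a fixed interval."""
    runs_per_day = (24 * 60) // interval_minutes
    return runs_per_day * run_minutes * price_per_compute_minute


# Hypothetical pipeline: each run takes 2 compute minutes at 0.01 per minute.
for interval in (5, 60, 1440):
    cost = daily_cost(interval, run_minutes=2, price_per_compute_minute=0.01)
    print(f"every {interval} min: {cost:.2f} per day")
```

Under these assumed numbers, moving from a daily batch to a five-minute schedule multiplies the number of runs, and therefore the cost, by 288.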

6. Orchestration Tool Limitations

Not all orchestrators are created equal. Some tools lack native support for critical integrations or have rigid execution models that don't align with your workflows. Choosing the wrong orchestrator—or outgrowing a legacy system—can create bottlenecks that hinder scalability.

How to Choose the Right Data Orchestration Tool

Selecting the right data orchestration tool depends on several factors that align with your business needs and technical infrastructure. Here are key considerations:

1. Integration Capabilities

Ensure the tool supports your current and future tech stack — connectors, APIs, and support for various data sources and destinations (e.g., warehouses, lakes, SaaS apps).

2. Ease of Use

Some tools require heavy engineering expertise (e.g., Airflow), while others offer low-code or no-code interfaces (e.g., Shipyard, Kestra). Choose based on your team’s skill level.

3. Scalability and Performance

Assess how well the tool handles increasing workloads, parallel task execution, and high-throughput data ingestion.

4. Monitoring and Debugging

Look for tools with strong observability features, such as real-time logging, alerting, and error tracking (e.g., Prefect, Dagster).

5. Community and Support

Open-source tools rely heavily on community contributions. Tools like Airflow, Dagster, and Prefect have robust communities that provide plugins, documentation, and frequent updates.

6. Special Requirements

If your workflows involve ML (e.g., MLRun, Metaflow) or Kubernetes-native orchestration (e.g., Argo, Flyte), pick a tool specialized in those areas.

Conclusion

Data orchestration is an essential approach that can assist you in managing and optimizing your data pipelines. This article provides you with a comprehensive list of the 12 best data orchestration tools for coordinating your data workflows. You can use these tools to build complex data pipelines that facilitate seamless data integration, transformation, and analysis. As open-source solutions, they offer the benefit of community-driven innovation, ensuring you benefit from continuous updates and various customization options.
