What Is Data Architecture: Best Practices, Strategy, & Diagram

Data teams face a paradox: data volumes keep climbing (global data creation was projected to reach roughly 181 zettabytes in 2025) and Apache Iceberg adoption has reportedly grown 340% in 2025, yet traditional centralized architectures can still consume an estimated 30-50% of engineering resources on maintenance rather than innovation. Domain-oriented data ownership through Data Mesh principles, combined with AI-driven automation and semantic intelligence, is reshaping how enterprises approach data infrastructure.

This guide explores how contemporary data architecture turns operational bottlenecks into strategic advantages. You'll find frameworks that organizations credit with cutting integration costs by up to 70%, implementation strategies built on federated governance models, and architectural patterns that enable real-time edge computing while maintaining enterprise-grade security. You'll also see how industry leaders use semantic data mesh and data contracts to achieve operational excellence and a sustainable competitive advantage.

TL;DR: Data Architecture at a Glance

- Modern data architecture is a comprehensive framework that transforms how organizations collect, store, process, and govern data across business functions, moving beyond traditional centralized approaches to domain-oriented ownership patterns.

- Key benefits include eliminating data silos through federated approaches, enabling real-time business responsiveness, and reducing infrastructure costs through intelligent automation and open table formats like Apache Iceberg.

- Contemporary frameworks emphasize semantic data mesh, data contracts for quality assurance, and AI-driven optimization that balances domain autonomy with enterprise-wide governance and security.

- Implementation success requires aligning architecture with business objectives, establishing federated governance models, and leveraging platforms like Airbyte for vendor-neutral data integration.

- Strategic outcomes include faster decision-making cycles, stronger analytical capabilities, and a sustainable competitive advantage gained by treating data as a strategic asset.

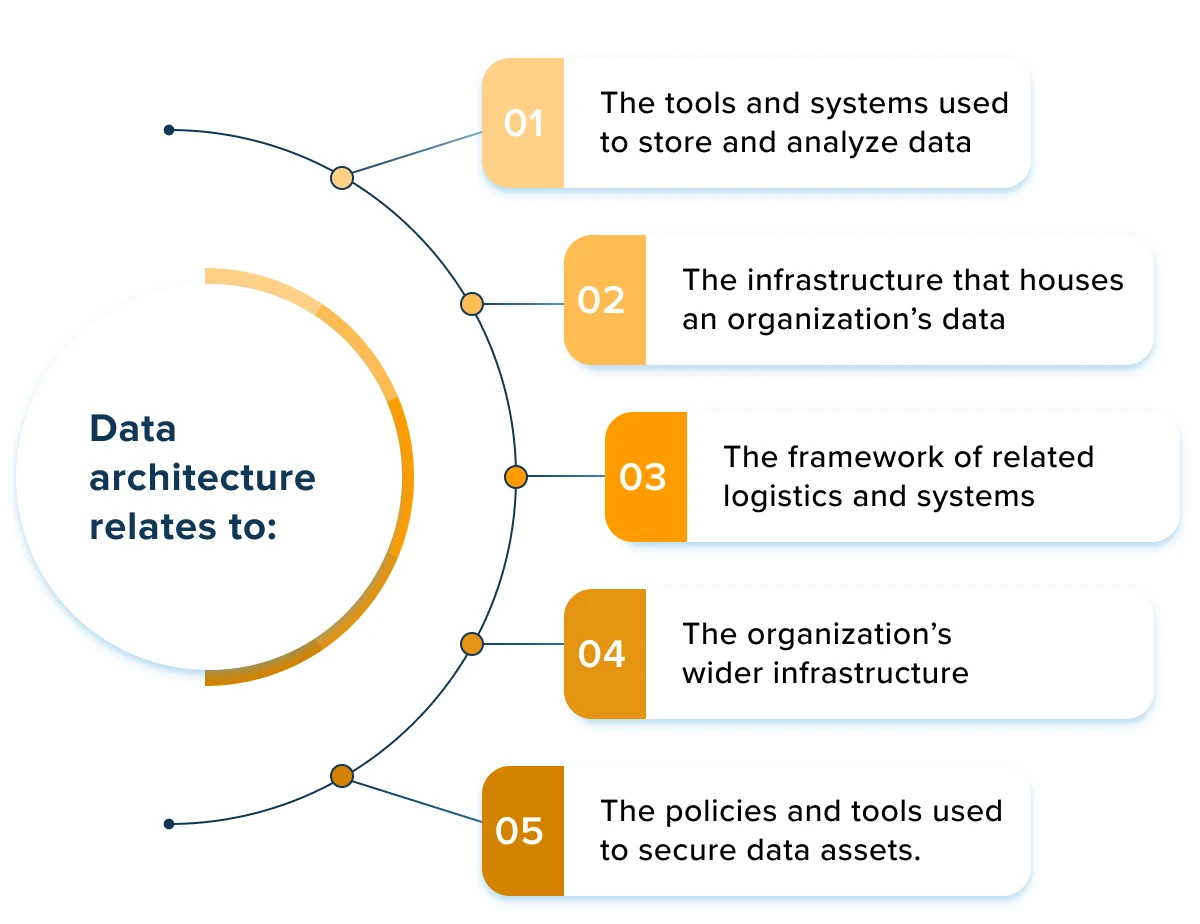

What Is Data Architecture and Why Does It Matter?

Data architecture is a comprehensive framework that defines how your organization collects, stores, processes, governs, and utilizes data across all business functions. Unlike simple database design, data architecture encompasses the entire data ecosystem, establishing policies, standards, and models that align data management with strategic business objectives.

Modern data architecture has evolved significantly beyond traditional centralized approaches, now incorporating domain-oriented ownership patterns, semantic intelligence layers, and AI-native capabilities. Contemporary frameworks prioritize decentralized governance, real-time processing, and contextual data products that serve as the foundation for advanced analytics, machine learning initiatives, and business intelligence while ensuring quality, security, and compliance across distributed hybrid environments.

The primary goal of data architecture is to create a unified, scalable, and adaptable data environment that turns raw information into actionable business insights through intelligent automation. This lets organizations make data-driven decisions in real time, respond quickly to market changes, and maintain a competitive advantage by using contextual data well, balancing domain autonomy with enterprise-wide interoperability standards.

Why Is Data Architecture Critical for Modern Organizations?

Contemporary data architecture addresses fundamental business challenges that traditional monolithic approaches cannot solve, delivering measurable improvements in operational efficiency, cost management, and strategic agility through decentralized yet governed approaches.

Eliminates Data Silos Through Domain-Oriented Products

Modern architectures break down departmental barriers by creating unified data platforms where domain teams own and curate their data as products with clear service-level agreements. This federated approach enables cross-functional collaboration while maintaining local expertise, reduces data duplication through semantic interoperability, and provides comprehensive views of business operations that support informed decision-making across all organizational levels without central bottlenecks.

Enables Real-Time Edge-to-Cloud Business Responsiveness

Advanced architectures support streaming data processing, edge computing integration, and real-time analytics pipelines that process events within milliseconds rather than hours. Organizations can now respond to customer behavior, operational anomalies, and market changes through automated decision-making systems that operate at the speed of business rather than the pace of traditional batch processing cycles.

Reduces Infrastructure Costs Through Intelligent Automation

Well-designed architectures eliminate redundant systems through semantic data fabrics, optimize resource utilization via AI-driven workload management, and use open table formats like Apache Iceberg to avoid vendor lock-in while reducing storage costs. Some organizations report cost reductions of 40-70%, along with better performance and reliability, after modernizing around sustainability and computational efficiency.

Ensures Regulatory Compliance Through Federated Governance

Modern frameworks embed governance controls, audit capabilities, and automated policy enforcement across decentralized domains while maintaining enterprise-wide compliance standards. This approach reduces regulatory risks through real-time monitoring, prevents data breaches via embedded security controls, and maintains stakeholder trust while enabling data democratization through domain-specific governance that scales with organizational growth.

Supports AI and Machine Learning Through Contextual Intelligence

Contemporary architectures provide the semantic foundation for artificial intelligence applications by ensuring data quality through automated contracts, enabling feature engineering via knowledge graphs, and supporting model training and deployment pipelines with embedded lineage tracking. This positions organizations to leverage AI for competitive advantage through contextually-aware systems that understand business meaning rather than just technical structure.

Adapts to Evolving Business Requirements Through Composable Design

Flexible architectures accommodate new data sources, emerging technologies (including longer-term ones such as quantum computing), and evolving business models through modular components that integrate without complete infrastructure overhauls. This protects existing investments and enables rapid innovation in response to market opportunities, because the architecture treats change as a constant rather than an exception.

What Are the Core Principles of Effective Data Architecture?

Successful data architecture implementation relies on fundamental principles that ensure long-term sustainability, scalability, and business value creation while balancing domain autonomy with enterprise-wide consistency and governance.

Clarity and Transparency in Data Management

Maintain comprehensive documentation through active metadata systems, standardized naming conventions enforced via automated governance, and well-defined semantic data models that enable all stakeholders to understand data structure, lineage, and usage context without technical mediation.

Data Quality and Integrity Through Contractual Agreements

Establish rigorous validation processes via data contracts, automated cleansing routines embedded in ingestion pipelines, and quality monitoring systems that ensure data accuracy, completeness, and consistency across all domains while enabling producer accountability for data product quality.

Compliance and Governance Integration Through Federated Models

Embed regulatory requirements, industry standards, and organizational policies directly into architectural design through computational governance that operates across decentralized domains while maintaining enterprise-wide compliance standards and audit capabilities.

Security and Access Control by Design Across Hybrid Environments

Implement comprehensive security measures including end-to-end encryption, zero-trust authentication, fine-grained authorization controls, and continuous monitoring systems that protect data throughout its lifecycle across cloud, edge, and on-premises deployments.

Scalability and Performance Optimization Through Edge-to-Cloud Integration

Design architectures that accommodate exponential growth in data volume, distributed processing requirements, and edge computing scenarios without degrading performance while optimizing for sustainability and computational efficiency across hybrid infrastructure environments.

Which Data Architecture Frameworks Should You Consider?

Established frameworks provide structured approaches for designing and implementing enterprise data architectures, each offering unique advantages for different organizational contexts while incorporating modern principles of decentralization, semantic intelligence, and federated governance.

Zachman Framework for Enterprise Architecture

The Zachman Framework provides a matrix for organizing and categorizing enterprise architecture artifacts, crossing six interrogatives (What, How, Where, Who, When, Why) with six stakeholder perspectives. It can be adapted to decentralized architectures by mapping mesh domains to the "Who" column, data products to the "What" column, and streaming flows to the "When" column.

TOGAF Architecture Development Method

The Open Group Architecture Framework (TOGAF) offers a systematic approach to enterprise architecture development through its Architecture Development Method (ADM). Current implementations integrate data strategy alignment with business architecture, decentralized storage blueprints, and contract-driven design patterns that support domain-oriented data ownership while maintaining enterprise coherence.

DAMA-DMBOK Data Management Framework

The Data Management Body of Knowledge (DAMA-DMBOK) provides comprehensive guidance on data governance, quality management, metadata management, and data architecture. Its Data Integration and Interoperability knowledge area addresses how data moves between systems, and its governance guidance can be extended to data product lifecycle management and federated governance models that balance domain autonomy with enterprise standards.

Federal Enterprise Architecture Framework (FEAF)

Originally designed for U.S. government agencies, FEAF emphasizes interoperability, reusability, and standardization across distributed systems. Contemporary implementations focus on semantic architecture principles that enable cross-agency data sharing while maintaining domain sovereignty and regulatory compliance across multiple jurisdictions.

Modern Cloud-Native and Data-Centric Frameworks

Cloud-specific frameworks (AWS Well-Architected Framework, Microsoft Cloud Adoption Framework, Google Cloud Architecture Framework) now provide guidance for designing scalable, secure, and cost-effective data architectures that incorporate edge computing, AI/ML optimization, and sustainability considerations while supporting multi-cloud deployment strategies that avoid vendor lock-in.

What Are the Essential Components of Data Architecture?

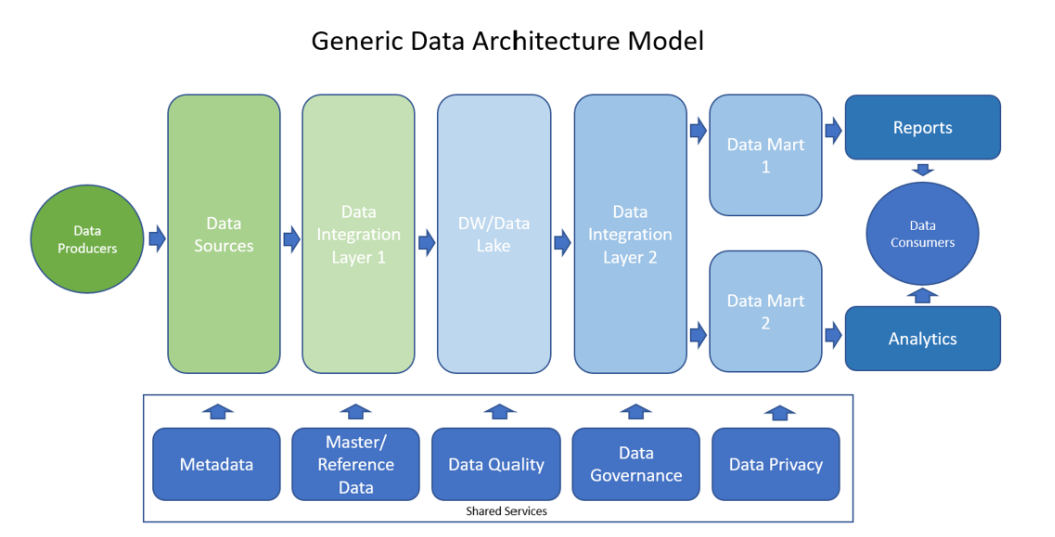

Data Source Integration and Management

Data sources represent the origin points where information is created, collected, or stored in its native format across diverse systems including IoT sensors, edge devices, SaaS applications, and legacy enterprise systems. Modern integration approaches emphasize real-time streaming capabilities, automated schema detection, and semantic enrichment that preserves business context while enabling cross-domain interoperability.

Data Ingestion and Pipeline Management

Data ingestion encompasses the processes and technologies used to collect, validate, and import data from various sources into centralized or distributed repositories. Contemporary pipelines incorporate change data capture for real-time synchronization, data contracts for quality assurance, and event-driven architectures that enable sub-second processing latencies while maintaining data integrity across hybrid environments.
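Change data capture can be pictured as replaying an ordered stream of insert, update, and delete events against a replica keyed by primary key. The sketch below is a minimal in-memory illustration, not how any particular CDC tool works; the event shape (`lsn`, `op`, `key`, `row`) is a hypothetical simplification of what log-based CDC emits. Sorting by log sequence number and skipping already-applied positions keeps the replica correct when events arrive late or are redelivered.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class ChangeEvent:
    lsn: int                    # position in the source's change log (hypothetical field name)
    op: str                     # "insert", "update", or "delete"
    key: Any                    # primary key of the affected row
    row: Optional[dict] = None  # full row image after the change; None for deletes


@dataclass
class ReplicaTable:
    rows: dict = field(default_factory=dict)
    last_lsn: int = 0           # highest LSN applied so far

    def apply(self, events):
        # Apply in log order so a late-arriving older event cannot overwrite newer state.
        for ev in sorted(events, key=lambda e: e.lsn):
            if ev.lsn <= self.last_lsn:
                continue        # duplicate or already-applied event: skipping keeps replays idempotent
            if ev.op in ("insert", "update"):
                self.rows[ev.key] = dict(ev.row)
            elif ev.op == "delete":
                self.rows.pop(ev.key, None)
            else:
                raise ValueError(f"unknown op: {ev.op}")
            self.last_lsn = ev.lsn


table = ReplicaTable()
table.apply([
    ChangeEvent(2, "update", 1, {"id": 1, "status": "shipped"}),
    ChangeEvent(1, "insert", 1, {"id": 1, "status": "pending"}),  # arrives late
    ChangeEvent(3, "insert", 2, {"id": 2, "status": "pending"}),
    ChangeEvent(4, "delete", 2),
])
print(table.rows)  # {1: {'id': 1, 'status': 'shipped'}}
```

Tracking the last applied position is what lets a pipeline resume, or re-read part of the log, without double-applying changes.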

Data Storage and Management Systems

Storage systems must accommodate diverse data types, access patterns, and performance requirements while optimizing for cost and scalability across cloud, edge, and on-premises environments. Modern approaches leverage open table formats like Apache Iceberg for vendor-neutral storage, implement tiered architectures for cost optimization, and support both transactional and analytical workloads through lakehouse patterns that eliminate data duplication.

Data Processing and Transformation Engines

Processing components transform raw data into meaningful information through operations like filtering, aggregation, enrichment, and normalization using both batch and stream processing paradigms. Advanced engines now incorporate AI-driven optimization, semantic transformation capabilities, and edge computing integration that enables localized processing while maintaining global consistency and governance standards.

Data Security and Privacy Controls

Security components protect data throughout its lifecycle using end-to-end encryption, zero-trust access controls, automated audit logging, and privacy-preserving techniques like differential privacy and federated learning. Modern implementations embed security policies within data contracts, enable fine-grained access controls through semantic layers, and support compliance automation that adapts to evolving regulatory requirements across multiple jurisdictions.

How Do Different Types of Data Architecture Address Business Needs?

Data Warehouse Architecture for Structured Analytics

Centralizes structured data from multiple sources into repositories optimized for analytical queries and business intelligence, typically using dimensional modeling and ETL processes. Modern implementations incorporate columnar storage, in-memory processing, and cloud-native scaling while maintaining ACID compliance for transactional consistency and regulatory reporting requirements.
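Dimensional modeling is easiest to see as a star schema: a fact table of measurable events surrounded by descriptive dimension tables. The sketch below uses Python's built-in `sqlite3` as a stand-in for a warehouse engine; the table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes.
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
-- The fact table holds measures plus foreign keys into each dimension.
CREATE TABLE fact_sales (
    date_key INTEGER REFERENCES dim_date(date_key),
    product_key INTEGER REFERENCES dim_product(product_key),
    quantity INTEGER,
    revenue REAL
);
INSERT INTO dim_product VALUES (1, 'Laptop', 'Hardware'), (2, 'License', 'Software');
INSERT INTO dim_date VALUES (20250101, 2025, 1), (20250201, 2025, 2);
INSERT INTO fact_sales VALUES
    (20250101, 1, 2, 2400.0),
    (20250101, 2, 5, 500.0),
    (20250201, 1, 1, 1200.0);
""")

# A typical analytical query: join facts to dimensions, then aggregate.
rows = conn.execute("""
SELECT d.month, p.category, SUM(f.revenue) AS revenue
FROM fact_sales f
JOIN dim_date d ON f.date_key = d.date_key
JOIN dim_product p ON f.product_key = p.product_key
GROUP BY d.month, p.category
ORDER BY d.month, p.category
""").fetchall()
print(rows)  # [(1, 'Hardware', 2400.0), (1, 'Software', 500.0), (2, 'Hardware', 1200.0)]
```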

Data Lake Architecture for Diverse Data Types

Data lake architecture stores vast amounts of raw data in its native format using object storage, supporting structured, semi-structured, and unstructured data without predefined schemas. Contemporary implementations leverage metadata catalogs for discoverability, implement data governance controls for quality assurance, and use open formats to prevent vendor lock-in while enabling multi-engine access patterns.

Lakehouse Architecture for Unified Analytics

Combines the flexibility of data lakes with the performance and governance of data warehouses using open table formats like Apache Iceberg, Delta Lake, and Apache Hudi. This architecture enables ACID transactions over object storage, supports both batch and streaming workloads, provides schema enforcement with evolution capabilities, and allows direct BI access without ETL duplication while maintaining cost-effectiveness and vendor neutrality.
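The key trick behind ACID tables on object storage is that data files are never modified in place: each commit writes new files plus new metadata, then atomically swaps a pointer to the latest snapshot. The toy model below illustrates only that idea, with optimistic concurrency and time travel. `ToyTable`, `Snapshot`, and `CommitConflict` are hypothetical names, not the API of Iceberg, Delta Lake, or Hudi, and a thread lock stands in for the catalog's atomic operation. Real formats differ in the details; Iceberg, for example, swaps the table's current metadata-file location in its catalog, while Delta Lake commits by adding numbered entries to a transaction log.

```python
import threading
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    parent_id: Optional[int]
    data_files: tuple  # immutable tuple of data-file paths visible in this snapshot


class CommitConflict(Exception):
    """Raised when a writer tries to commit on top of a stale snapshot."""


class ToyTable:
    """Hypothetical toy model of snapshot-based table metadata."""

    def __init__(self):
        self._lock = threading.Lock()  # stands in for the catalog's atomic compare-and-swap
        self.snapshots = {0: Snapshot(0, None, ())}
        self.current_id = 0

    def current(self):
        return self.snapshots[self.current_id]

    def commit(self, base, added, removed=()):
        removed = set(removed)
        # Data files are immutable; a new snapshot simply lists a different set of them.
        files = tuple(f for f in base.data_files if f not in removed) + tuple(added)
        with self._lock:
            # Optimistic concurrency: reject the commit if someone else committed after `base` was read.
            if self.current_id != base.snapshot_id:
                raise CommitConflict(f"base {base.snapshot_id} is stale; current is {self.current_id}")
            new = Snapshot(max(self.snapshots) + 1, base.snapshot_id, files)
            self.snapshots[new.snapshot_id] = new
            self.current_id = new.snapshot_id  # the pointer swap is the single commit point
            return new

    def files_as_of(self, snapshot_id):
        # Time travel: older snapshots stay readable (real formats eventually expire them).
        return self.snapshots[snapshot_id].data_files


table = ToyTable()
s1 = table.commit(table.current(), ["part-0001.parquet"])
s2 = table.commit(s1, ["part-0002.parquet"])

conflict_detected = False
try:
    table.commit(s1, ["part-0003.parquet"])  # a second writer still holding the stale base s1
except CommitConflict:
    conflict_detected = True

print(table.current().data_files)         # ('part-0001.parquet', 'part-0002.parquet')
print(table.files_as_of(s1.snapshot_id))  # ('part-0001.parquet',)
print(conflict_detected)                  # True
```

A writer that hits a conflict would normally re-read the current snapshot, re-apply its change, and retry the commit.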

Lambda Architecture for Hybrid Processing

Implements separate batch and speed layers that merge results in a serving layer to provide both historical accuracy and low-latency insights for real-time applications. Modern variations incorporate Apache Kafka for event streaming, Apache Spark for batch processing, and specialized systems for real-time computation while maintaining data consistency across processing paradigms.
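A minimal sketch of the Lambda pattern, assuming a simple page-view count: the batch layer recomputes from the full master dataset up to a cutoff, the speed layer counts only events newer than that cutoff, and the serving layer adds the two. All names are hypothetical; in practice the batch layer might run on Spark and the speed layer on a stream processor fed by Kafka.

```python
from collections import Counter


def batch_view(master_events, cutoff_ts):
    """Batch layer: recompute counts from the immutable master dataset up to the cutoff."""
    return Counter(e["page"] for e in master_events if e["ts"] <= cutoff_ts)


class SpeedLayer:
    """Speed layer: incrementally count only events newer than the last batch cutoff."""

    def __init__(self, cutoff_ts):
        self.cutoff_ts = cutoff_ts
        self.counts = Counter()

    def on_event(self, event):
        if event["ts"] > self.cutoff_ts:
            self.counts[event["page"]] += 1


def serving_query(batch, speed, page):
    """Serving layer: merge the complete-but-stale batch view with the fresh speed view."""
    return batch[page] + speed.counts[page]


events = [
    {"ts": 1, "page": "home"},
    {"ts": 2, "page": "cart"},
    {"ts": 3, "page": "home"},
    {"ts": 4, "page": "home"},
]
batch = batch_view(events, cutoff_ts=2)  # the last batch run covered ts <= 2
speed = SpeedLayer(cutoff_ts=2)
for e in events:                         # every event also flows through the stream
    speed.on_event(e)

print(serving_query(batch, speed, "home"))  # 3
print(serving_query(batch, speed, "cart"))  # 1
```

Each new batch run advances the cutoff, after which the speed layer can be reset. Keeping the batch and streaming code paths in agreement is the main maintenance cost of this pattern.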

Data Mesh Architecture for Decentralized Ownership

Treats data as products owned by domain-specific teams, enabling organizational scaling and improving data quality through domain expertise while maintaining interoperability through federated governance. This approach implements domain-oriented data products with clear APIs, establishes self-serve infrastructure platforms, and uses computational governance to enforce enterprise policies across decentralized domains without creating bottlenecks.
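Computational governance means enterprise-wide rules are evaluated automatically against each domain's data product metadata rather than reviewed by a central team. The sketch below assumes a hypothetical descriptor (`DataProduct`) and three illustrative policies: every product needs an owner, a freshness SLA, and masking on columns tagged as PII. A real platform would read descriptors from a catalog and run such checks in CI or at publish time.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DataProduct:
    name: str
    domain: str
    owner: str
    freshness_sla_hours: Optional[int]
    pii_columns: set = field(default_factory=set)
    masked_columns: set = field(default_factory=set)


def check_policies(product):
    """Evaluate hypothetical enterprise-wide policies; domains decide everything else."""
    violations = []
    if not product.owner:
        violations.append("missing owner")
    if product.freshness_sla_hours is None:
        violations.append("missing freshness SLA")
    unmasked = product.pii_columns - product.masked_columns
    if unmasked:
        violations.append(f"unmasked PII: {sorted(unmasked)}")
    return violations


products = [
    DataProduct("orders", "sales", "sales-data-team", 24, {"email"}, {"email"}),
    DataProduct("shipments", "logistics", "", None, {"address"}),
]
report = {p.name: check_policies(p) for p in products}
print(report)
```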

Microservices Architecture for Application Integration

Decomposes applications into small, independent services that communicate via APIs, enabling independent scaling and technology choice while supporting rapid development and deployment cycles. Data-oriented microservices manage domain-specific data stores, implement event-driven communication patterns, and maintain loose coupling through well-defined interfaces and data contracts.

Kappa Architecture for Stream-First Processing

Uses a single stream-processing pipeline for both real-time and batch processing, simplifying architectural complexity while maintaining event-driven consistency. This approach treats all data as continuous streams, implements event sourcing for state management, and enables reprocessing of historical events when business logic changes or corrections are needed.
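Reprocessing in a Kappa architecture amounts to replaying the retained log through new logic and swapping in the result. In the minimal sketch below, a Python list stands in for a retained, append-only topic, and `revenue_v1` and `revenue_v2` are hypothetical handlers; the second fixes a bug in how refunds are treated.

```python
def rebuild_state(log, handler, offset=0):
    """Replay the retained event log from `offset`, folding each event into fresh state."""
    state = {}
    for event in log[offset:]:
        handler(state, event)
    return state


def revenue_v1(state, event):
    # Original logic: every event adds to the customer's total.
    state[event["customer"]] = state.get(event["customer"], 0) + event["amount"]


def revenue_v2(state, event):
    # Corrected logic: refunds subtract instead of add.
    sign = -1 if event["type"] == "refund" else 1
    state[event["customer"]] = state.get(event["customer"], 0) + sign * event["amount"]


# Stands in for a retained, append-only topic (e.g., a Kafka topic with long retention).
log = [
    {"customer": "a", "type": "purchase", "amount": 100},
    {"customer": "a", "type": "refund", "amount": 30},
    {"customer": "b", "type": "purchase", "amount": 50},
]

print(rebuild_state(log, revenue_v1))  # {'a': 130, 'b': 50}
print(rebuild_state(log, revenue_v2))  # {'a': 70, 'b': 50}
```

Because there is only one processing path, fixing the logic and replaying is the whole correction procedure; no separate batch job has to be updated in parallel.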

Hybrid Data Architecture for Enterprise Flexibility

Combines multiple patterns including warehouses, lakes, streaming platforms, and edge computing to address diverse business requirements while maintaining architectural coherence. This approach enables gradual modernization of legacy systems, supports multi-cloud deployment strategies, and provides flexibility to adopt new technologies without disrupting existing operations while ensuring consistent governance across all components.

What Are the Best Practices for Implementing Data Architecture?

- Align architecture with strategic business objectives through domain-oriented product thinking and value-driven metrics.

- Establish comprehensive data governance policies via federated models that balance autonomy with enterprise consistency.

- Implement layered security and privacy controls using zero-trust principles and automated policy enforcement.

- Design for scalability and performance through edge-to-cloud integration and AI-driven optimization.

- Enable seamless data integration and interoperability using open standards and platforms like Airbyte for vendor-neutral connectivity.

- Ensure continuous data quality and monitoring through automated contracts and real-time observability systems.

- Plan for future technology evolution via composable architectures and quantum-ready design principles.

How Does Semantic Data Mesh Transform Decentralized Data Management?

Semantic Data Mesh represents the convergence of domain-oriented data ownership with contextual intelligence through knowledge graphs and semantic technologies. This architectural pattern enables self-describing data infrastructure where business meaning is structurally embedded, allowing both human and machine interpretation without extensive transformation pipelines while maintaining decentralized ownership and federated governance.

Foundations in Contextual Intelligence

Semantic enrichment augments domain-specific data products with machine-interpretable context using ontologies, taxonomies, and knowledge graphs; proponents report reductions in data preparation time of up to 60% and higher analytic accuracy because business context is preserved. Healthcare providers implementing semantic data mesh can dynamically link patient records to medical ontologies, letting clinicians query complex relationships without understanding the underlying technical schemas, while domain teams keep their expertise and ownership.

Knowledge Graph Integration Across Domains

The architecture has three interconnected layers: domain-oriented data products with embedded semantic metadata, cross-domain knowledge graphs that map entity relationships using the RDF and OWL standards, and federated governance through machine-readable policies. In one reported manufacturing implementation, semantic relationships between production data, supplier databases, and IoT sensors cut supply chain anomaly detection from 48 hours to 15 minutes without centralizing the data.
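Cross-domain traversal is what makes the knowledge graph useful here: an anomaly on a sensor can be followed through machines and parts to suppliers without first copying every domain's data into one store. The sketch below uses a handful of hypothetical triples held in a Python set; a production system would more likely use an RDF store and a SPARQL 1.1 property path such as `?sensor :monitors/:produces/:suppliedBy ?supplier`.

```python
# Hypothetical (subject, predicate, object) triples linking IoT, production, and supplier domains.
TRIPLES = {
    ("sensor:S1", "monitors", "machine:M1"),
    ("machine:M1", "produces", "part:P7"),
    ("part:P7", "suppliedBy", "supplier:Acme"),
    ("sensor:S2", "monitors", "machine:M2"),
    ("machine:M2", "produces", "part:P9"),
    ("part:P9", "suppliedBy", "supplier:Globex"),
}


def objects(subject, predicate, triples=TRIPLES):
    """All objects o such that (subject, predicate, o) is in the graph."""
    return {o for s, p, o in triples if s == subject and p == predicate}


def follow(start, path, triples=TRIPLES):
    """Follow a sequence of predicates, similar to a SPARQL property path like :a/:b/:c."""
    frontier = {start}
    for predicate in path:
        frontier = {o for node in frontier for o in objects(node, predicate, triples)}
    return frontier


# Which supplier is upstream of the sensor that raised an anomaly?
print(follow("sensor:S1", ["monitors", "produces", "suppliedBy"]))  # {'supplier:Acme'}
```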

AI-Enhanced Cognitive Capabilities

Integration with generative AI creates cognitive data meshes where natural language queries are parsed through knowledge graphs for enterprise-reliable responses. Advanced implementations automatically classify pipeline anomalies through semantic context while maintaining domain autonomy, positioning semantic data mesh as foundational infrastructure for AI operationalization that requires contextual understanding rather than just technical data access.

What Role Do Data Contracts Play in Modern Data Architecture?

Data Contracts establish formal specifications governing data exchange between producers and consumers, functioning as binding agreements that guarantee structure, quality, and semantics similar to API contracts. This design-first approach shifts responsibility upstream, with quality issues resolved during development rather than production while enabling automated validation and compliance enforcement across decentralized architectures.

Machine-Readable Specifications and Standards

Contemporary implementations often use YAML-based specifications comprising core metadata (ownership, domain), schema definitions with constraints, data quality thresholds, and service-level agreements with automated breach protocols. These contracts enable automated validation at ingestion points; by building quality checks into development workflows, teams have reported up to 73% fewer data incidents than with informal agreements.
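A minimal sketch of contract validation at an ingestion point, assuming a hypothetical contract with a schema, per-column constraints, and a batch-level quality threshold. The contract is written as a Python dict mirroring what a YAML file might declare, so the example runs without a YAML parser; the field names do not follow any particular data contract standard.

```python
# Hypothetical contract; in practice this would be loaded from a versioned YAML file.
CONTRACT = {
    "name": "orders",
    "owner": "sales-data-team",
    "schema": {
        "order_id": {"type": int, "required": True},
        "amount": {"type": float, "required": True, "min": 0.0},
        "country": {"type": str, "required": False},
    },
    "quality": {"max_invalid_ratio": 0.01},
}


def validate_row(row, schema):
    """Return a list of contract violations for one record."""
    errors = []
    for col, rules in schema.items():
        value = row.get(col)
        if value is None:
            if rules.get("required"):
                errors.append(f"{col}: missing")
            continue
        if not isinstance(value, rules["type"]):
            errors.append(f"{col}: expected {rules['type'].__name__}")
            continue
        if "min" in rules and value < rules["min"]:
            errors.append(f"{col}: below min {rules['min']}")
    return errors


def validate_batch(rows, contract):
    """Check every row, then apply the batch-level quality threshold."""
    invalid = {}
    for i, row in enumerate(rows):
        errors = validate_row(row, contract["schema"])
        if errors:
            invalid[i] = errors
    ratio = len(invalid) / len(rows) if rows else 0.0
    return ratio <= contract["quality"]["max_invalid_ratio"], invalid


batch = [
    {"order_id": 1, "amount": 19.99, "country": "DE"},
    {"order_id": 2, "amount": -5.0},
    {"amount": 3.0},
]
passed, invalid = validate_batch(batch, CONTRACT)
print(passed)   # False
print(invalid)  # {1: ['amount: below min 0.0'], 2: ['order_id: missing']}
```

Rejecting the whole batch when the invalid ratio exceeds the threshold, while still reporting per-row errors, gives the producer both a hard gate and enough detail to fix the problem.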

Integration with Domain-Oriented Architectures

Data Contracts become critical in decentralized architectures because they establish clear interfaces between domain teams. They operationalize data-as-product principles: discoverability through self-documenting interfaces, trustworthiness through automated compliance checks, evolution management through versioned contracts, and observability integration, where contractual SLAs trigger automated alerts and remediation.

Synergies with Event-Driven Real-Time Processing

Advanced implementations integrate with event meshes for real-time validation, performing schema validation within milliseconds of event emission to prevent invalid data propagation throughout downstream systems. This supports "data contract-as-code" practices where CI/CD pipelines automatically test contract compliance before deployment, ensuring quality gates are maintained across rapid development cycles while supporting real-time business requirements.
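Contract-as-code usually includes a compatibility check that runs in CI whenever a producer proposes a new contract version. The sketch below assumes schemas are dicts mapping column names to `type` and `required`, and treats three changes as breaking: removing a column, changing a column's type, and adding a new required column. `breaking_changes` and `ci_gate` are hypothetical helpers; schema registries apply their own, more detailed compatibility rules.

```python
def breaking_changes(old_schema, new_schema):
    """List contract changes that are not backward compatible."""
    problems = []
    for col, old in old_schema.items():
        new = new_schema.get(col)
        if new is None:
            problems.append(f"removed column: {col}")  # consumers reading it would break
        elif new["type"] != old["type"]:
            problems.append(f"type change on {col}: {old['type']} -> {new['type']}")
    for col, new in new_schema.items():
        if col not in old_schema and new.get("required"):
            # Data produced under the previous version would fail validation.
            problems.append(f"new required column: {col}")
    return problems


def ci_gate(old_schema, new_schema):
    """Return a process exit code: nonzero fails the (hypothetical) CI step."""
    problems = breaking_changes(old_schema, new_schema)
    for p in problems:
        print("BREAKING:", p)
    return 1 if problems else 0


v1 = {"order_id": {"type": "int", "required": True}, "amount": {"type": "float", "required": True}}
v2 = {**v1, "coupon": {"type": "string", "required": False}}  # additive and optional
v3 = {"order_id": {"type": "string", "required": True}, "amount": v1["amount"],
      "currency": {"type": "string", "required": True}}       # breaking

print(ci_gate(v1, v2))  # 0
print(ci_gate(v1, v3))  # prints two BREAKING lines, then 1
```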

How Do AI-Driven Technologies Reshape Modern Data Architecture?

Semantic Layer Architecture for Business Context

Semantic layers translate technical data structures into business terminology through ontologies and metadata management, enabling self-service analytics without technical mediation. Modern implementations add natural language processing for conversational queries, with reported accuracy of up to 95% when queries are grounded in a semantic framework, and support real-time predictive capabilities through AI-enhanced data fabrics that merge historical patterns with live data streams.
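At its core, a semantic layer is a mapping from business vocabulary to physical columns and expressions, plus a compiler that turns a request like "Net Revenue by Region" into SQL. The sketch below assumes a hypothetical model (`SEMANTIC_MODEL`) and uses `sqlite3` as the query engine; commercial semantic layers add joins, access control, and caching on top of the same idea.

```python
import sqlite3

# Hypothetical semantic model: business terms mapped to physical columns and expressions.
SEMANTIC_MODEL = {
    "table": "fact_orders",
    "dimensions": {"Region": "region_cd", "Order Month": "substr(order_dt, 1, 7)"},
    "metrics": {"Net Revenue": "SUM(gross_amt - discount_amt)", "Order Count": "COUNT(*)"},
}


def compile_query(model, metrics, dimensions=()):
    """Translate business-level metric and dimension names into SQL."""
    dims = [f'{model["dimensions"][d]} AS "{d}"' for d in dimensions]
    mets = [f'{model["metrics"][m]} AS "{m}"' for m in metrics]
    sql = f'SELECT {", ".join(dims + mets)} FROM {model["table"]}'
    if dimensions:
        group_cols = ", ".join(model["dimensions"][d] for d in dimensions)
        sql += f" GROUP BY {group_cols} ORDER BY {group_cols}"
    return sql


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE fact_orders (region_cd TEXT, order_dt TEXT, gross_amt REAL, discount_amt REAL)")
conn.executemany(
    "INSERT INTO fact_orders VALUES (?, ?, ?, ?)",
    [("EU", "2025-01-15", 100.0, 10.0), ("EU", "2025-02-20", 50.0, 0.0), ("US", "2025-01-03", 80.0, 5.0)],
)

# Business users ask in business terms; the layer supplies the physical SQL.
rows = conn.execute(compile_query(SEMANTIC_MODEL, ["Net Revenue", "Order Count"], ["Region"])).fetchall()
monthly = conn.execute(compile_query(SEMANTIC_MODEL, ["Net Revenue"], ["Order Month"])).fetchall()
print(rows)     # [('EU', 140.0, 2), ('US', 75.0, 1)]
print(monthly)  # [('2025-01', 165.0), ('2025-02', 50.0)]
```

Because "Net Revenue" is defined once, every dashboard or conversational query that uses the term computes it the same way.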

Automated Data Quality and Observability

AI-driven systems monitor pipelines in real time, using machine learning for anomaly detection, schema evolution tracking, and quality issue identification; reported reductions in manual oversight are around 40%, alongside better reliability. Advanced platforms implement predictive governance, where models forecast infrastructure needs, optimize resource allocation, and drive self-healing workflows that adapt to usage patterns without human intervention.
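The text describes machine-learning anomaly detection; a simple statistical baseline shows the shape of the check. The sketch below flags a pipeline's latest daily row count when it falls more than three standard deviations from recent history. The z-score method and the threshold of 3 are assumptions chosen for illustration, not a specific platform's algorithm.

```python
import statistics


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` standard deviations from the historical mean."""
    if len(history) < 2:
        return False  # too little history to estimate spread
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation
    if stdev == 0:
        return latest != mean  # perfectly stable history: any change is unusual
    return abs(latest - mean) / stdev > threshold


# Hypothetical daily row counts for one pipeline over the past week.
daily_row_counts = [10_120, 9_980, 10_050, 10_200, 9_940, 10_075, 10_010]

print(is_anomalous(daily_row_counts, 10_030))  # False: within normal variation
print(is_anomalous(daily_row_counts, 4_200))   # True: likely a partial load
```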

GenAI Integration and Retrieval-Augmented Generation

Retrieval-augmented generation (RAG) combines large language models with enterprise data through semantic layers to deliver accurate, contextual responses while maintaining data governance and security controls. Implementation requires careful grounding in business context to avoid hallucinations, with conversational interfaces enabling business users to generate complex queries and insights while ensuring responses are traceable to authoritative data sources.
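The retrieval half of RAG can be sketched without any model: score documents against the question, keep the best matches, and put them in the prompt with their source identifiers so answers stay traceable. The sketch below uses bag-of-words cosine similarity as a stand-in for embedding-based vector search, the documents are hypothetical, and the actual LLM call is left out.

```python
import math
import re
from collections import Counter

# Hypothetical governed knowledge base: source id -> passage.
DOCS = {
    "policy/returns.md": "Customers may return hardware within 30 days of delivery.",
    "policy/shipping.md": "Standard shipping to EU countries takes 3 to 5 business days.",
    "metrics/net_revenue.md": "Net revenue is gross amount minus discounts and refunds.",
}


def tokenize(text):
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, docs, k=1):
    """Rank source ids by similarity to the question and keep the top k."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda doc_id: cosine(q, tokenize(docs[doc_id])), reverse=True)
    return ranked[:k]


def build_prompt(question, docs, k=1):
    """Ground the model in retrieved passages, tagged with their source ids for traceability."""
    sources = retrieve(question, docs, k)
    context = "\n".join(f"[{s}] {docs[s]}" for s in sources)
    prompt = f"Answer using only the sources below and cite the source id.\n{context}\n\nQuestion: {question}"
    return prompt, sources


prompt, sources = build_prompt("How is net revenue calculated?", DOCS)
print(sources)  # ['metrics/net_revenue.md']
print(prompt)
```

Returning the source ids alongside the prompt lets the application show users which authoritative documents an answer came from.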

What Are the Latest Approaches to Real-Time Data Governance and Compliance?

Dynamic Policy Enforcement in Streaming Environments

Real-time governance systems monitor data streams continuously, applying policies and controls as data flows through event-driven architectures rather than batch-oriented retrospective compliance. Modern implementations use AI-powered validation to flag non-compliant data within milliseconds of ingestion while maintaining audit trails across distributed processing environments, enabling business agility without compromising regulatory adherence.
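A minimal sketch of in-flight policy enforcement, assuming two hypothetical rules: EU events without a consent flag are dropped, and fields listed as PII are pseudonymized with a keyed hash before they leave ingestion. Every decision is written to an audit log. The rules are hard-coded for clarity; the AI-powered validation the text mentions would replace or augment them.

```python
import hashlib
import hmac

PII_FIELDS = {"email", "phone"}                   # hypothetical policy: never pass these through in clear text
PSEUDONYM_KEY = b"replace-with-a-managed-secret"  # hypothetical; keep real keys in a secrets manager


def pseudonymize(value):
    # Keyed hash (HMAC-SHA256): stable for joins, but not computable without the key.
    return hmac.new(PSEUDONYM_KEY, str(value).encode(), hashlib.sha256).hexdigest()[:16]


def enforce(stream, audit_log):
    """Apply policies to each event in flight and record every decision for auditors."""
    for event in stream:
        if event.get("region") == "EU" and not event.get("consent"):
            audit_log.append({"event_id": event["id"], "action": "dropped", "reason": "no consent"})
            continue
        pii_present = sorted(event.keys() & PII_FIELDS)
        if pii_present:
            event = {**event, **{f: pseudonymize(event[f]) for f in pii_present}}
            audit_log.append({"event_id": event["id"], "action": "masked", "fields": pii_present})
        yield event


events = [
    {"id": 1, "region": "US", "email": "a@example.com"},
    {"id": 2, "region": "EU", "consent": False, "email": "b@example.com"},
    {"id": 3, "region": "EU", "consent": True, "phone": "555-0100"},
]
audit = []
clean = list(enforce(events, audit))
print([e["id"] for e in clean])       # [1, 3]
print([a["action"] for a in audit])   # ['masked', 'dropped', 'masked']
```

Because `enforce` is a generator, it can sit directly in a streaming consumer loop and apply policies one event at a time.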

Automated Compliance Monitoring and Reporting

Machine-learning compliance systems analyze data usage patterns, access logs, and processing activities to identify potential regulatory violations through behavioral analysis and pattern recognition. These systems generate automated compliance reports, track data lineage for audit purposes, and provide real-time alerts when anomalous access patterns or data usage behaviors indicate potential policy violations or security breaches.

Ethical AI and Bias Detection Frameworks

AI governance frameworks monitor machine-learning models for bias, fairness, and ethical implications through continuous evaluation of model outputs, training data quality, and decision impact analysis. Implementation includes explainable AI requirements for regulatory compliance, automated bias detection in data pipelines, and fairness constraints embedded in model training processes to ensure responsible AI deployment across enterprise systems.

Privacy-Preserving Analytics and Federated Learning

Technologies like differential privacy, homomorphic encryption, and federated learning enable analytics while protecting individual privacy through mathematical privacy guarantees and distributed computation models. These approaches allow organizations to derive insights from sensitive data without exposing individual records, support cross-organizational collaboration without sharing raw data, and maintain compliance with privacy regulations while enabling data-driven innovation.
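Differential privacy's simplest building block is the Laplace mechanism: add noise drawn from a Laplace distribution whose scale equals the query's sensitivity divided by epsilon. A counting query has sensitivity 1, since adding or removing one person changes the count by at most 1, so the scale is 1/epsilon. The sketch below is illustrative only: Python's `random` module is not a cryptographically secure source, and production systems should rely on a vetted differential privacy library, which also handles floating-point pitfalls.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponential variables with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng):
    """Epsilon-differentially private count: sensitivity 1, so the noise scale is 1/epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


ages = [34, 71, 68, 45, 80, 29, 66]  # hypothetical sensitive records
rng = random.Random(7)               # seeded only to make the sketch reproducible
noisy = private_count(ages, lambda age: age >= 65, epsilon=0.5, rng=rng)
print(round(noisy, 2))               # true count is 4; the released value is 4 plus noise
```

Smaller epsilon means more noise and stronger privacy: the released count stays useful in aggregate while revealing little about any single record.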

How Does Data Architecture Compare to Data Modeling?

- Data Architecture constitutes the high-level, strategic framework that governs data management across the organization, establishing principles, patterns, and policies that guide technology decisions and business alignment while incorporating modern concepts like domain-oriented ownership, semantic intelligence, and federated governance models.

- Data Modeling involves detailed representation of data entities, relationships, and attributes within specific systems or domains, focusing on technical implementation details, schema design, and optimization for particular use cases while operating within the broader architectural framework and principles established at the enterprise level.

How Do Leading Companies Implement Data Architecture?

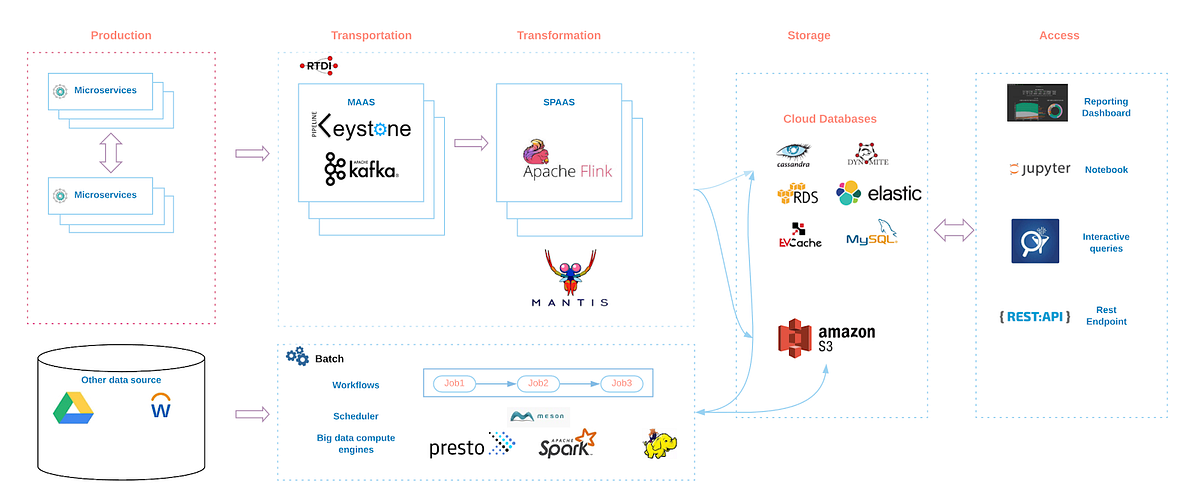

Netflix: Microservices and Streaming Architecture

Netflix processes billions of events daily using Apache Kafka for event streaming, Apache Flink and Spark for real-time processing, with Amazon S3 and Apache Iceberg powering its petabyte-scale data lake that supports personalization algorithms and content recommendation systems. The architecture enables real-time A/B testing, viewer behavior analysis, and content optimization across global markets while maintaining sub-second response times for streaming video delivery.

Uber: Lambda Architecture for Real-Time and Batch Processing

Uber employs Lambda architecture with Apache Hudi for incremental data processing, Kafka for event streaming, and Spark for both batch and real-time analytics to power dynamic pricing algorithms, driver matching systems, and route optimization that processes millions of rides daily. The architecture supports real-time marketplace balancing, fraud detection, and predictive analytics while maintaining data consistency across batch and streaming processing paradigms.

What Are the Key Takeaways for Modern Data Architecture?

Modern data architecture emphasizes decentralized ownership through Data Mesh principles, semantic intelligence integration, and AI-driven automation that transforms traditional bottlenecks into competitive advantages. Contemporary implementations leverage open table formats like Apache Iceberg for vendor neutrality, implement federated governance models that balance autonomy with enterprise standards, and incorporate edge-to-cloud processing capabilities that enable real-time decision-making at unprecedented scale.

Organizations that embrace domain-oriented data products, semantic layers for business context, and data contracts for quality assurance achieve faster decision-making cycles, lower operational costs through automation, and enhanced analytical capabilities that support AI/ML initiatives. The evolution toward composable, sustainable architectures that embed governance and security by design positions forward-thinking enterprises to leverage data as a strategic asset rather than a technical liability in an increasingly competitive marketplace.

FAQs

How does data architecture differ from data design?

Data architecture defines the overall strategic framework including governance models, technology patterns, and enterprise principles, while data design focuses on detailed structuring and organization within specific systems, including schema design, relationship modeling, and optimization for particular use cases and business requirements.

What data architecture patterns are most effective for modern organizations?

Lakehouse architectures combining lake flexibility with warehouse governance, and Data Mesh patterns enabling domain-oriented ownership are increasingly popular. Hybrid approaches that combine semantic layers, data contracts, and federated governance provide the most comprehensive solutions for diverse organizational requirements and scalability challenges.

What role does a data architect play in modern organizations?

Data architects design and oversee the strategic implementation of data management systems, ensuring alignment between business requirements and technical capabilities while incorporating modern principles like domain-oriented ownership, semantic intelligence, federated governance, and AI-driven automation that enable sustainable competitive advantage through data.

How should organizations document their data architecture?

Use architectural diagrams, data-flow documentation, semantic metadata catalogs, and automated tools that generate documentation from system metadata while incorporating data contracts for interface specifications, knowledge graphs for business context, and active metadata systems that maintain documentation currency through AI-driven updates.

What are the key considerations for cloud-based data architecture?

Data sovereignty and residency requirements, comprehensive security including zero-trust models, cost optimization through intelligent automation, multi-cloud flexibility to avoid vendor lock-in, federated governance frameworks that scale across cloud environments, and sustainability considerations for long-term operational efficiency and regulatory compliance.
