Demystifying Data Lake Architecture: A Beginner's Guide

✨ AI Generated Summary

Modern data lake architectures provide scalable, flexible repositories for storing raw, diverse data types, supporting advanced analytics, AI, and real-time processing. Key benefits include:

- Schema-on-read flexibility, extensive language support, and cost-effectiveness compared to traditional data warehouses.

- AI-powered observability for automated metadata management, real-time anomaly detection, and predictive analytics enhancing data quality and operational efficiency.

- Best practices involve strong governance, data cataloging, security, performance optimization, and use of open table formats like Delta Lake.

- Tools like Airbyte streamline data ingestion with extensive connectors, enterprise-grade security, and AI-assisted development.

- Choosing a data lake solution requires evaluating architecture flexibility, integration ecosystem, governance, scalability, and AI/ML support.

Enterprise data teams face significant challenges managing growing volumes of diverse data while ensuring quick access for analytics and AI workloads. Legacy data management approaches often create technical debt, operational bottlenecks, and security concerns that prevent organizations from fully leveraging their data assets. Modern data lake architectures offer a solution by providing scalable, flexible repositories that accommodate raw data in its native format while supporting advanced analytics capabilities.

This comprehensive guide explores how data lake architectures have evolved from simple storage solutions into sophisticated platforms with AI-powered observability, lakehouse integration, and data mesh frameworks.

What Is a Data Lake and Why Does It Matter?

A data lake is a centralized repository that stores raw, unprocessed data in its native format, with no transformation required before loading. It can accommodate diverse data types, including structured, semi-structured, and unstructured data. Data lakes are built on scalable, distributed storage systems, such as the Hadoop Distributed File System (HDFS) or cloud-based object storage. These technologies enable you to store and process massive amounts of data cost-effectively.

Modern data lake architecture has evolved significantly beyond simple storage repositories to become sophisticated platforms that support advanced analytics, machine learning, and real-time processing capabilities. Today's data lakes incorporate intelligent automation, AI-powered observability, and seamless integration with cloud-native services to address the growing complexity of enterprise data management requirements.

What Are the Key Benefits of Implementing a Data Lake?

Data lakes offer several benefits that make them a valuable component of modern data architectures. Here are some key advantages:

- Data Exploration and Discovery: Data lakes offer robust metadata management capabilities, making it easier for you to discover, explore, and understand available data assets. Advanced cataloging systems automatically discover and document data assets as they are added to the platform, maintaining up-to-date inventories that support both data discovery and compliance requirements.

- Flexibility in Data Storage: Unlike traditional databases that require structured data types, data lakes can store raw, semi-structured, and unstructured data without predefined schemas, offering flexibility in data storage and access. This eliminates the need for extensive preprocessing and enables you to preserve data in its original format for future analysis needs.

- Scalability: Data lakes are highly scalable and capable of efficiently expanding to accommodate growing data volumes without sacrificing performance or flexibility. Modern cloud-native architectures provide virtually unlimited storage capacity while automatically scaling processing resources based on workload demands.

- Extensive Language Support: Traditional data warehouses rely primarily on SQL, whereas data lakes let you work with data in several languages. You can use Python, R, Scala, and other programming languages for advanced analytics and machine learning workloads.

- Cost-Effectiveness: A data lake is generally more cost-effective than a traditional data warehouse. Low-cost storage options keep expenses down for large data volumes, and consumption-based pricing means you pay only for the resources you actually use.
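The storage flexibility described above is often called schema-on-read: data lands as-is, and the reader decides how to interpret it. Here is a minimal sketch of that idea. The record layout, the `ORDERS_SCHEMA` mapping, and the `read_with_schema` helper are hypothetical names chosen for illustration, not part of any particular platform.

```python
import json

# Raw events land in the lake as-is; no schema is enforced at write time.
RAW_LINES = [
    '{"user_id": "1", "amount": "19.99", "country": "DE"}',
    '{"user_id": "2", "amount": "5.00"}',
    '{"user_id": "3", "amount": "7.50", "coupon": "SPRING"}',
]

# Hypothetical reader-side schema: field name -> (type converter, default).
ORDERS_SCHEMA = {
    "user_id": (int, None),
    "amount": (float, 0.0),
    "country": (str, "unknown"),
}


def read_with_schema(lines, schema):
    """Parse raw JSON lines and project them onto the reader's schema."""
    rows = []
    for line in lines:
        record = json.loads(line)
        row = {}
        for field, (convert, default) in schema.items():
            value = record.get(field)
            row[field] = convert(value) if value is not None else default
        rows.append(row)
    return rows


orders = read_with_schema(RAW_LINES, ORDERS_SCHEMA)
for order in orders:
    print(order)
```

A different reader could apply a different schema to the same raw lines, for example one that keeps the `coupon` field, without rewriting anything in storage.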

How Do Data Lakes Differ From Data Warehouses?

Unlike data warehouses, which organize data with logical schemas such as star or snowflake models, data lakes utilize a flat architecture. In a data lake, each element is typically associated with metadata tags and unique identifiers, which facilitate flexible data retrieval.
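To make the flat architecture concrete, here is a toy sketch of a flat object namespace where every element gets a unique identifier and a set of metadata tags. The `FlatStore` class and its tag names are assumptions made for this example; real object stores expose their own APIs.

```python
import uuid


class FlatStore:
    """Toy in-memory stand-in for a flat object namespace (illustrative only)."""

    def __init__(self):
        self._objects = {}  # object_id -> (payload bytes, tags dict)

    def put(self, payload, **tags):
        """Store a payload under a fresh unique ID together with its tags."""
        object_id = str(uuid.uuid4())
        self._objects[object_id] = (payload, dict(tags))
        return object_id

    def get(self, object_id):
        """Fetch a payload directly by its unique ID."""
        return self._objects[object_id][0]

    def find(self, **wanted):
        """Return IDs of objects whose tags match every requested key/value."""
        return [
            object_id
            for object_id, (_, tags) in self._objects.items()
            if all(tags.get(key) == value for key, value in wanted.items())
        ]


store = FlatStore()
clip_id = store.put(b"...video bytes...", source="camera-7", kind="video")
log_id = store.put(b"GET /index.html 200", source="web", kind="log")
store.put(b"GET /cart 500", source="web", kind="log")

web_logs = store.find(source="web", kind="log")
print(len(web_logs), "web log objects found")
```

There is no table hierarchy here: retrieval goes either straight to an ID or through a tag filter, which is the kind of flexible lookup the flat model is meant to support.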

What Are the Core Components of Data Lake Architecture?

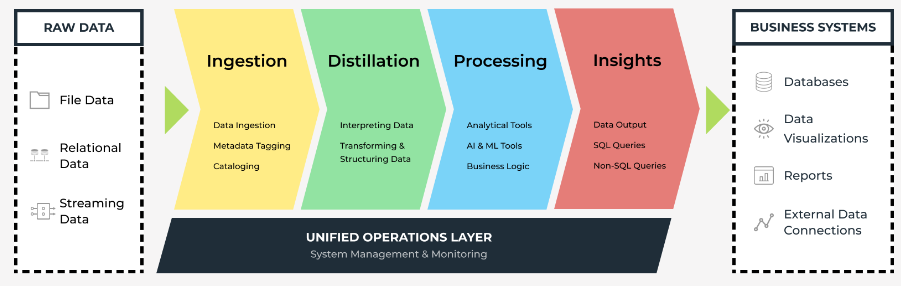

The above image represents a typical data lake architecture. Let's discuss each of the layers in detail:

Ingestion Layer

The data ingestion layer is primarily responsible for collecting and importing data from various sources. It acts as the entry point of the data lake, ensuring that data is efficiently ingested for subsequent processing. This layer can handle all data types, including unstructured formats such as video, audio files, and sensor data. It can ingest raw data in real time as well as in batch mode. Furthermore, with the use of effective metadata and cataloging techniques, you can quickly identify and access relevant datasets.

Distillation Layer

The distillation layer is crucial in data lake architecture as it bridges the gap between raw data ingestion and structured data processing. Raw data ingested from various sources often comes in different formats and structures. The distillation layer interprets this data and transforms it into structured data sets that can be stored in files and tables. This transformation involves tasks such as data cleansing, normalization, aggregation, and enrichment.
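The cleansing, normalization, and aggregation steps can be sketched as three small functions. This is a simplified illustration under assumed inputs: the sensor readings, field names, and function names are hypothetical, and a production pipeline would typically run on a distributed engine rather than plain Python lists.

```python
from collections import defaultdict

RAW_READINGS = [
    {"sensor": " A1 ", "temp": "21.5", "unit": "C"},
    {"sensor": "a1", "temp": "70.7", "unit": "F"},
    {"sensor": "B2", "temp": None, "unit": "C"},  # missing value
    {"sensor": "b2", "temp": "19.0", "unit": "C"},
]


def cleanse(rows):
    """Drop rows that have no temperature reading."""
    return [row for row in rows if row["temp"] is not None]


def normalize(rows):
    """Standardize sensor IDs and convert every temperature to Celsius."""
    out = []
    for row in rows:
        temp = float(row["temp"])
        if row["unit"] == "F":
            temp = (temp - 32) * 5 / 9
        out.append({"sensor": row["sensor"].strip().upper(), "temp_c": round(temp, 1)})
    return out


def aggregate(rows):
    """Compute the average temperature per sensor."""
    by_sensor = defaultdict(list)
    for row in rows:
        by_sensor[row["sensor"]].append(row["temp_c"])
    return {sensor: round(sum(vals) / len(vals), 2) for sensor, vals in by_sensor.items()}


avg_temp = aggregate(normalize(cleanse(RAW_READINGS)))
print(avg_temp)
```

Keeping each step as its own function makes it easy to test the stages independently and to add an enrichment step later without touching the others.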

Processing Layer

The processing layer is responsible for executing queries on the data stored in the data lake. It acts as the computational engine that allows you to handle data for different AI/ML and analytics requirements. This layer offers flexibility in terms of how the data is processed. It supports batch processing, real-time processing, and interactive querying, depending on the specific requirements and use cases.

Insights Layer

The insights layer acts as the query interface of the data lake, enabling you to retrieve data through SQL or NoSQL queries. It plays a key role in accessing and extracting valuable insights from the data stored within the data lake. This layer not only allows you to retrieve data from the data lake but also displays it in reports and dashboards for easy interpretation and analysis.
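As a rough illustration of the query-then-report flow, the sketch below uses Python's built-in `sqlite3` module as a stand-in for a lake query engine. That substitution is an assumption made so the example runs anywhere, and the `page_views` table and its columns are hypothetical.

```python
import sqlite3

# In-memory database standing in for curated data exposed to the insights layer.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE page_views (day TEXT, page TEXT, views INTEGER)")
conn.executemany(
    "INSERT INTO page_views VALUES (?, ?, ?)",
    [
        ("2024-01-01", "/home", 120),
        ("2024-01-01", "/pricing", 30),
        ("2024-01-02", "/home", 150),
        ("2024-01-02", "/pricing", 45),
    ],
)

# SQL query that a dashboard or report might issue.
page_totals = conn.execute(
    "SELECT page, SUM(views) AS total FROM page_views "
    "GROUP BY page ORDER BY total DESC"
).fetchall()

# Minimal text "report" built from the query result.
for page, total in page_totals:
    print(f"{page:<10} {total}")
```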

Unified Operations Layer

The unified operations layer is responsible for monitoring the data lake to ensure its efficient operations. It involves overseeing various aspects of the system, including performance, security, and data governance, to maintain optimal functionality. This layer handles workflow management within the data lake architecture, ensuring processes are executed smoothly.

What Are AI-Powered Data Lake Observability and Automated Management Capabilities?

The integration of artificial intelligence into data lake observability represents one of the most significant architectural innovations emerging in modern data management. Traditional observability approaches, which relied heavily on manual monitoring and reactive troubleshooting, are being transformed by AI-driven systems that provide proactive, intelligent data management capabilities.

AI-powered observability systems fundamentally reimagine how data lakes handle the five pillars of data observability: freshness, quality, volume, schema, and lineage. Unlike traditional systems that treat logs, metrics, and traces as separate entities requiring distinct storage and indexing mechanisms, AI-powered approaches leverage data lake architectures to correlate all observability data in a unified knowledge graph.

This integration enables AI systems to identify patterns across different data types automatically, providing engineers with meaningful responses to complex queries rather than requiring manual dashboard filtering and correlation.

Automated Metadata Management and Quality Assurance

Advanced AI-powered systems automatically extract metadata from data sources, track data lineage in real-time, and update metadata repositories while retaining multiple versions of each file's metadata. This automation extends beyond basic metadata collection to include intelligent data cataloging, quality assessments, and compliance checks, significantly enhancing both efficiency and effectiveness of data management processes.

Automated data quality monitoring systems continuously analyze incoming data streams to identify inconsistencies, missing values, or other quality issues that could impact analytical accuracy. These systems can automatically apply data cleansing rules, flag potential problems for human review, and maintain comprehensive data quality metrics that support governance and compliance requirements.
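A basic version of this kind of check can be sketched without any AI at all: count missing values per field, flag the affected rows for review, and keep a completeness metric. The `quality_report` function, the field names, and the sample batch are hypothetical; the systems described above layer learned rules and automated cleansing on top of checks like these.

```python
def quality_report(rows, required_fields):
    """Count missing values per field and flag rows that need human review."""
    missing = {field: 0 for field in required_fields}
    flagged = []
    for index, row in enumerate(rows):
        gaps = [field for field in required_fields if row.get(field) in (None, "")]
        for field in gaps:
            missing[field] += 1
        if gaps:
            flagged.append(index)
    total = len(rows)
    completeness = {
        field: (total - count) / total if total else 1.0
        for field, count in missing.items()
    }
    return {"missing": missing, "flagged_rows": flagged, "completeness": completeness}


batch = [
    {"order_id": 1, "email": "a@example.com", "total": 10.0},
    {"order_id": 2, "email": "", "total": 5.5},
    {"order_id": 3, "email": "c@example.com", "total": None},
    {"order_id": 4, "email": "d@example.com", "total": 8.0},
]

dq_report = quality_report(batch, ["order_id", "email", "total"])
print(dq_report)
```

Tracking completeness per field over time gives you a simple quality metric that can feed governance dashboards or trigger alerts when it drops.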

Real-Time Anomaly Detection and Predictive Analytics

Real-time anomaly detection and predictive analytics form core components of AI-powered data lake observability systems. These capabilities enable organizations to shift from reactive troubleshooting to proactive issue prevention by identifying patterns that indicate potential problems before they manifest as system failures. The integration of machine learning algorithms allows observability systems to learn from historical incidents and improve their predictive accuracy over time, creating increasingly sophisticated automated response mechanisms.
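One simple way to picture anomaly detection on a data lake metric is a z-score test against recent history. The sketch below is a deliberately basic statistical stand-in, not the machine learning approach a commercial observability product would use; the `find_anomalies` function, the threshold of 3.0, and the row counts are assumptions for illustration.

```python
from statistics import mean, stdev


def find_anomalies(history, new_values, threshold=3.0):
    """Flag values more than `threshold` sample standard deviations from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)
    anomalies = []
    for value in new_values:
        z = (value - mu) / sigma if sigma else 0.0
        if abs(z) > threshold:
            anomalies.append((value, round(z, 2)))
    return anomalies


# Hypothetical daily row counts for one ingested table.
daily_row_counts = [1000, 1020, 980, 1010, 990, 1005, 995]
todays_loads = [1003, 400, 1015]

alerts = find_anomalies(daily_row_counts, todays_loads)
for value, z in alerts:
    print(f"row count {value} looks anomalous (z-score {z})")
```

A sudden drop in volume like the one flagged here often points to an upstream failure, which is why volume is a common first signal to monitor.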

Intelligent data cataloging systems automatically discover and document data assets as they are added to the platform. These systems can analyze data structures, identify relationships between different datasets, and generate comprehensive metadata that makes data discovery significantly easier for business users. The automation of cataloging processes ensures that data documentation remains current and accurate even as data volumes and complexity continue to grow.

Advanced Query Optimization and Automated Insights

Advanced query optimization capabilities leverage machine learning algorithms to automatically improve query performance by analyzing usage patterns and optimizing data placement and indexing strategies. These systems can predict which data will be accessed together and pre-position it for optimal query performance, while also identifying opportunities to create summary tables or materialized views that can accelerate common analytical queries.

Automated insight generation represents the next frontier in AI-driven data lake capabilities, where systems can automatically identify interesting patterns or anomalies in data and generate natural language summaries that highlight key findings for business stakeholders. These capabilities democratize access to data insights by removing the technical barriers that traditionally required specialized analytical skills, enabling business users to benefit from advanced analytics without requiring extensive training or technical expertise.

What Are the Best Practices for Optimizing Data Lake Architecture?

The following practices help keep a data lake performant, well governed, and cost-efficient:

- Define Data Policies and Standards: Establish clear data policies and standards to ensure consistency, quality, and governance across the data lake environment. These guidelines act as a foundation for effective data management, enabling you to derive meaningful insights. Modern governance frameworks should include automated policy enforcement, comprehensive audit capabilities, and sophisticated access control mechanisms that are essential for regulatory compliance and risk management.

- Data Catalogs: Employ data catalogs to organize and manage metadata. This makes it easier to discover and utilize data assets within the data lake. An effective data catalog should enable you to search for data using keywords, tags, and other metadata. It should also provide insights into data quality, lineage, and usage, and keep its inventory current as new data assets are added.

- Implement a Retention Policy: Set up a retention policy to avoid storing unnecessary data that may result in a data swamp. Identifying and deleting obsolete data is crucial for compliance with regulations and cost-effectiveness. Modern retention policies should include automated lifecycle management capabilities that transition data between storage tiers based on access patterns and business requirements.

- Enhance Data Security: Implement strong security measures to protect the data. Encryption techniques, data masking, and access controls should be used at various levels so that only authorized users can manage the data. Contemporary security implementations should include fine-grained access controls, real-time threat detection, and comprehensive audit logging that provides complete visibility into data operations for compliance and security monitoring.

- Optimize for Performance: A significant challenge in data lakes is achieving fast query performance. To optimize your data lake's performance, you can utilize techniques like partitioning, indexing, and caching. Partitioning involves dividing data into smaller segments to reduce the amount of scanned data for more efficient querying. Indexing is the process of creating indexes on the data to accelerate search operations. Caching temporarily stores frequently accessed data in memory to reduce query runtimes. Modern optimization strategies should include liquid clustering, intelligent data placement, and automated query optimization capabilities that continuously improve performance based on usage patterns.

- Leverage Open Table Formats: Implement open table formats like Delta Lake, Apache Iceberg, or Apache Hudi that provide ACID transaction support, schema evolution capabilities, and improved query performance. These formats address traditional data lake challenges, including consistency issues, schema drift, and the inability to perform reliable updates and deletes while maintaining cost-effectiveness and scalability.

- Implement Comprehensive Monitoring: Deploy advanced monitoring and observability solutions that provide real-time visibility into data pipeline health, performance metrics, and data quality indicators. Modern monitoring should include automated anomaly detection, predictive analytics for failure prevention, and comprehensive dashboards that enable proactive management of complex data lake environments.
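To see why partitioning reduces scanned data, consider this toy sketch. The `PartitionedTable` class is a hypothetical in-memory model of date-based partitions; real engines apply the same idea to files and directories, usually with more sophisticated pruning.

```python
from collections import defaultdict


class PartitionedTable:
    """Toy table that groups rows by a partition key, similar to date-based folders."""

    def __init__(self, partition_key):
        self.partition_key = partition_key
        self.partitions = defaultdict(list)

    def write(self, row):
        """Place the row in the partition named by its partition key value."""
        self.partitions[row[self.partition_key]].append(row)

    def scan(self, partition_value=None):
        """Return (rows, rows_scanned); a partition filter skips all other partitions."""
        if partition_value is not None:
            rows = list(self.partitions.get(partition_value, []))
        else:
            rows = [row for part in self.partitions.values() for row in part]
        return rows, len(rows)


events = PartitionedTable("date")
for day in ("2024-01-01", "2024-01-02", "2024-01-03"):
    for i in range(1000):
        events.write({"date": day, "event_id": i})

_, full_scan = events.scan()
_, pruned_scan = events.scan("2024-01-02")
print(f"full scan touched {full_scan} rows, pruned scan touched {pruned_scan}")
```

The payoff depends on choosing a partition key that matches how the data is queried; partitioning by date helps little if most queries filter by customer.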

How Does Airbyte Enhance Modern Data Lake Architecture?

Data ingestion is a critical component of modern data lake architecture, and this is where Airbyte excels as a comprehensive data integration platform. Airbyte follows an ELT (Extract, Load, Transform) approach, which aligns perfectly with modern data lake architectures and their scalability requirements. This methodology enables you to load raw data directly into data lakes and defer transformation processes until analysis time, leveraging the computational power of modern cloud data platforms.

To simplify data integration processes, Airbyte offers multiple user-friendly interfaces and a library of more than 600 connectors. These connectors cover databases, APIs, SaaS applications, data warehouses, and specialized data sources, and the library continues to expand based on community contributions and market demand. With these connectors, you can efficiently extract data from multiple sources and load it into data lakes such as Amazon S3, Azure Blob Storage, Google Cloud Storage, and modern lakehouse platforms.

Enterprise Features and Governance

Here are the key enterprise features of Airbyte:

- Comprehensive Security Framework: Encrypts data in transit, integrates role-based access control with enterprise identity systems, provides comprehensive audit logging, and is SOC 2 and ISO 27001 certified with GDPR-aligned practices for regulated industries.

- AI-Powered Development: The AI Assistant functionality uses large language models to automatically pre-fill and configure key fields in the Connector Builder, offering intelligent suggestions and generating working connectors from API documentation URLs in seconds.

- Multi-Region Deployments: Self-Managed Enterprise customers can build data pipelines across multiple isolated regions while maintaining centralized governance from a single Airbyte deployment, addressing compliance requirements and reducing cross-region egress fees.

- Advanced Connector Ecosystem: The Connector Development Kit enables rapid custom connector creation for specialized requirements, while the platform's extensive library continues to expand toward 1,000 connectors by the end of 2025.

- PyAirbyte Integration: The PyAirbyte library provides unique capabilities for data scientists building AI applications by enabling direct integration of data pipelines into machine learning workflows, supporting popular frameworks like LangChain and LlamaIndex.

- Performance Optimization: Airbyte provides efficient log-based change data capture for near-real-time data synchronization, minimizing impact on source systems and maintaining transactional consistency.
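The general idea behind log-based change data capture is that a replica is kept in sync by replaying an ordered stream of change events rather than re-reading the whole source table. The sketch below illustrates that concept only. It is not Airbyte's implementation, and the event format, the `apply_change_log` function, and the sample data are all hypothetical.

```python
def apply_change_log(table, change_log):
    """Replay ordered insert/update/delete events onto a dict keyed by primary key."""
    for event in change_log:
        op, key = event["op"], event["id"]
        if op in ("insert", "update"):
            # Merge changed columns into the existing row, or create the row.
            table[key] = {**table.get(key, {}), **event["data"]}
        elif op == "delete":
            table.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return table


# Destination copy of a source table, keyed by primary key.
replica = {1: {"name": "Ada", "plan": "free"}}

# Hypothetical change events, in the order they were committed at the source.
change_log = [
    {"op": "update", "id": 1, "data": {"plan": "pro"}},
    {"op": "insert", "id": 2, "data": {"name": "Lin", "plan": "free"}},
    {"op": "delete", "id": 1},
    {"op": "insert", "id": 3, "data": {"name": "Sam", "plan": "team"}},
]

apply_change_log(replica, change_log)
print(replica)
```

Because events are applied in commit order, the replica ends in the same state as the source, and only the changed rows ever cross the wire.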

What Should You Consider When Choosing a Data Lake Solution?

When implementing a data lake solution, you should evaluate several critical factors that will determine the long-term success of your data architecture. The choice between different deployment models, governance frameworks, and technology stacks will significantly impact your organization's ability to scale data operations while maintaining security and compliance standards.

- Architecture Flexibility: Consider whether your chosen solution supports both traditional data lake and modern lakehouse patterns, enabling you to evolve your architecture as business requirements change. Solutions that provide native support for open table formats like Delta Lake and Apache Iceberg offer greater flexibility and prevent vendor lock-in while providing advanced capabilities like ACID transactions and schema evolution.

- Integration Ecosystem: Evaluate the breadth and depth of available connectors and integration capabilities, particularly for your specific data sources and destinations. Platforms with extensive connector libraries and active community development ensure that you can integrate with both current and future data sources without significant custom development overhead.

- Governance and Security: Assess the governance capabilities, including metadata management, data lineage tracking, access controls, and compliance features that are essential for enterprise deployments. Modern solutions should provide automated governance capabilities, comprehensive audit trails, and fine-grained security controls that scale with organizational growth.

- Performance and Scalability: Consider the platform's ability to handle your current and projected data volumes while maintaining query performance and operational reliability. Solutions that leverage cloud-native architectures with automatic scaling capabilities provide better long-term value than those requiring manual infrastructure management.

- AI and Machine Learning Support: Evaluate the platform's capabilities for supporting modern AI and machine learning workflows, including vector database integrations, real-time processing capabilities, and support for unstructured data processing that are increasingly important for competitive advantage.
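If you want to compare candidate platforms against these five factors, a weighted scoring matrix is one simple option. The weights, the 1 to 5 ratings, and the option names below are placeholders invented for this sketch; substitute your own priorities and assessments.

```python
# Hypothetical weights for the five evaluation factors; they sum to 1.0.
WEIGHTS = {
    "architecture_flexibility": 0.25,
    "integration_ecosystem": 0.20,
    "governance_security": 0.25,
    "performance_scalability": 0.20,
    "ai_ml_support": 0.10,
}


def weighted_score(ratings, weights=WEIGHTS):
    """Combine per-factor ratings (1 to 5) into a single weighted score."""
    if set(ratings) != set(weights):
        raise ValueError("ratings must cover exactly the weighted criteria")
    return round(sum(ratings[name] * weight for name, weight in weights.items()), 2)


# Placeholder ratings for two made-up options.
candidates = {
    "option_a": {
        "architecture_flexibility": 4,
        "integration_ecosystem": 5,
        "governance_security": 3,
        "performance_scalability": 4,
        "ai_ml_support": 3,
    },
    "option_b": {
        "architecture_flexibility": 3,
        "integration_ecosystem": 3,
        "governance_security": 5,
        "performance_scalability": 4,
        "ai_ml_support": 4,
    },
}

ranking = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
for name in ranking:
    print(name, weighted_score(candidates[name]))
```

The scores are only as good as the ratings behind them, so treat the result as a starting point for discussion rather than a final answer.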

Conclusion

Modern data lake architectures have evolved significantly beyond simple storage repositories into sophisticated platforms supporting advanced analytics and real-time processing. The integration of AI-powered observability, lakehouse architectures, and data mesh frameworks addresses traditional limitations while enhancing governance and performance. Organizations implementing data lakes should focus on architecture flexibility, robust integration capabilities, and comprehensive governance frameworks. With tools like Airbyte enhancing data ingestion processes, enterprises can build scalable data ecosystems that balance innovation with security and compliance requirements.

Frequently Asked Questions

1. What is the difference between a data lake and a traditional database?

A data lake stores raw, unprocessed data in native formats without predefined schemas, supporting structured, semi-structured, and unstructured data. Traditional databases require structured data with fixed schemas, offering less flexibility but optimized performance for predefined queries and transactional operations.

2. How do lakehouse architectures improve upon traditional data lakes?

Lakehouses merge data lake flexibility with warehouse governance, offering ACID transactions, schema enforcement, and advanced metadata management. They store diverse data in native formats, reduce duplication, simplify architecture, and support BI, analytics, and machine learning on a unified platform.

3. What role does AI play in modern data lake management?

AI transforms data lake management through automated observability, intelligent data cataloging, real-time anomaly detection, and predictive analytics for issue prevention. AI-powered systems can automatically extract metadata, track data lineage, optimize query performance, and generate insights, significantly reducing manual operational overhead while improving data quality and system reliability.

4. How does data mesh architecture change traditional data lake governance?

Data mesh architecture decentralizes data ownership from central teams to domain experts who understand their data best, while maintaining federated governance standards for consistency and interoperability. This approach reduces bottlenecks associated with centralized management while leveraging domain expertise to improve data quality and relevance for specific business contexts.

5. What are the key considerations for implementing a modern data lake architecture?

Key considerations include choosing solutions that support both traditional data lake and lakehouse patterns, evaluating integration ecosystems and connector availability, assessing governance and security capabilities, ensuring performance and scalability for projected data volumes, and confirming support for AI and machine learning workflows that are increasingly important for competitive advantage.
