Data Lake Vs Data Warehouse: Choosing the Right Data Storage Architecture

✨ AI Generated Summary

Organizations are shifting from traditional data lakes or warehouses to hybrid lakehouse architectures that combine flexibility, performance, and cost-efficiency. Key distinctions include:

- Data lakes store raw, multi-format data with schema-on-read, ideal for exploratory analytics, IoT data, and cost-effective archival.

- Data warehouses use schema-on-write for high-quality, optimized query performance suited for business intelligence and regulatory reporting.

- Lakehouse architectures unify these benefits with ACID transactions, open standards, and support for diverse analytics workloads.

- AI-driven automation enhances data management through intelligent pipeline orchestration, query optimization, and predictive governance.

- Hybrid approaches leverage multi-tier storage and unified platforms to balance cost, scalability, and accessibility across user types.

Organizations today face an unprecedented challenge: managing massive volumes of data from diverse sources while ensuring it remains accessible, secure, and actionable. Recent industry shifts reveal that enterprises are fundamentally rethinking their approach to data storage and processing, moving beyond traditional either-or decisions between data lakes and warehouses.

The choice between flexible data lakes, high-performance data warehouses, or hybrid lakehouse architectures directly impacts analytical capabilities, operational costs, and competitive advantage. As AI-driven automation transforms data management and new architectural patterns emerge, understanding these storage paradigms becomes crucial for building scalable, future-ready data infrastructure.

This comprehensive guide examines the technical foundations, use cases, and strategic considerations for each approach, while exploring how modern integration platforms enable organizations to leverage the best of all worlds without vendor lock-in or operational complexity.

What Is a Data Lake and How Does It Enable Flexible Data Storage?

A data lake serves as a centralized repository where organizations store raw data in its native format without requiring upfront schema definition or transformation. This architecture supports structured data from traditional databases, semi-structured data like JSON and XML, and unstructured data including images, videos, and log files from diverse sources such as IoT devices, mobile applications, and operational systems.

The schema-on-read approach distinguishes data lakes from traditional storage systems. Rather than enforcing rigid data structures at ingestion, lakes defer schema application until query time, enabling rapid data collection without preprocessing bottlenecks. This flexibility proves particularly valuable for data science teams conducting exploratory analysis, machine-learning model development, and experimental analytics where data requirements evolve continuously.
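As a minimal sketch of schema-on-read, the example below keeps raw JSON lines untouched and applies a schema only when the data is read. The sample events, the `TEMPERATURE_SCHEMA` mapping, and the `read_with_schema` helper are all hypothetical names invented for illustration. They do not belong to any particular lake engine.

```python
import json

# Raw events are stored exactly as produced; the records do not share
# a single shape (hypothetical sample data).
RAW_EVENTS = [
    '{"device_id": "a1", "temp_c": "21.5", "ts": "2024-01-01T00:00:00Z"}',
    '{"device_id": "b2", "temp_c": 19, "firmware": "1.4.2"}',
    '{"device_id": "c3", "humidity": 40}',
]

# The schema belongs to the reader, not the storage: field name -> caster.
TEMPERATURE_SCHEMA = {"device_id": str, "temp_c": float}


def read_with_schema(lines, schema):
    """Project each raw record onto `schema` at read time.

    Missing fields become None; extra fields are ignored, not rejected.
    """
    for line in lines:
        record = json.loads(line)
        yield {
            field: cast(record[field]) if field in record else None
            for field, cast in schema.items()
        }


rows = list(read_with_schema(RAW_EVENTS, TEMPERATURE_SCHEMA))
for row in rows:
    print(row)
```

A different team could read the same raw lines with a different schema (for example, one that keeps `humidity`) without re-ingesting anything, which is the flexibility the paragraph above describes.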

Modern data lakes build on distributed storage systems such as the Hadoop Distributed File System (HDFS) or cloud object-storage services including Amazon S3, Azure Data Lake Storage, and Google Cloud Storage. These platforms scale horizontally, allowing organizations to store large amounts of data cost-effectively while maintaining high availability and durability.

Core Capabilities of Data Lakes

- Schema Flexibility and Evolution: Data lakes accommodate changing data structures without requiring expensive schema migrations. New data sources integrate seamlessly, and evolving business requirements don't necessitate architectural overhauls.

- Multi-Format Data Support: Native support for diverse data formats including Parquet, ORC, Avro, JSON, CSV, and binary formats enables comprehensive data collection strategies without format conversion overhead.

- Cost-Effective Storage: Commodity storage pricing, particularly in cloud environments, makes data lakes economically viable for large-scale data retention and long-term archival requirements.

- Advanced Analytics Integration: Direct compatibility with big data processing frameworks like Apache Spark, distributed SQL engines like Presto, and machine learning platforms enables sophisticated analytics without data movement.

What Is a Data Warehouse and Why Is It Essential for Business Intelligence?

A data warehouse represents a purpose-built system designed for analytical processing, where data undergoes extraction, transformation, and loading processes before storage in predefined schemas. This architecture prioritizes data quality, query performance, and analytical consistency over storage flexibility, making it ideal for business intelligence, financial reporting, and operational dashboards.

The schema-on-write methodology ensures data validation, cleansing, and transformation occur before storage, guaranteeing high data quality and consistency across analytical workloads. Dimensional-modeling techniques, including star and snowflake schemas, optimize query performance while providing intuitive data relationships for business users.
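To make schema-on-write concrete, here is a small sketch that uses Python's built-in `sqlite3` module as a stand-in for a warehouse. The table names (`dim_product`, `fact_sales`), the constraints, and the `load_sale` helper are illustrative assumptions, and a production warehouse would enforce rules in its own way. The point is only that invalid rows are rejected before they are stored, and that a fact table joins to a dimension table in star-schema style.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
conn.executescript("""
    CREATE TABLE dim_product (
        product_id INTEGER PRIMARY KEY,
        name       TEXT NOT NULL
    );
    CREATE TABLE fact_sales (
        sale_id    INTEGER PRIMARY KEY,
        product_id INTEGER NOT NULL REFERENCES dim_product(product_id),
        quantity   INTEGER NOT NULL CHECK (quantity > 0),
        amount     REAL    NOT NULL CHECK (amount >= 0)
    );
""")
conn.execute("INSERT INTO dim_product VALUES (1, 'widget')")
conn.commit()


def load_sale(row):
    """Try to write one fact row; return False if the schema rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO fact_sales (product_id, quantity, amount) "
                "VALUES (?, ?, ?)",
                row,
            )
        return True
    except sqlite3.IntegrityError:
        return False


accepted = load_sale((1, 3, 29.97))               # valid row
rejected_negative = load_sale((1, -2, 10.0))      # violates CHECK (quantity > 0)
rejected_unknown_product = load_sale((99, 1, 5.0))  # no matching dimension row

# Star-schema style query: the fact table joined to its dimension.
revenue_by_product = conn.execute(
    "SELECT p.name, SUM(f.amount) "
    "FROM fact_sales AS f JOIN dim_product AS p ON p.product_id = f.product_id "
    "GROUP BY p.name"
).fetchall()
```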

Modern cloud data warehouses such as Snowflake, Amazon Redshift, Google BigQuery, and Azure Synapse Analytics offer elastic scaling, columnar-storage optimization, and sophisticated query-optimization engines. These platforms combine the performance advantages of traditional warehouses with cloud-native scalability and operational simplicity.

Core Capabilities of Data Warehouses

- Query Performance Optimization: Columnar storage, advanced indexing strategies, materialized views, and query result caching can significantly improve response times for complex analytical queries across large datasets, often enabling sub-second performance, though not universally guaranteeing it.

- Data Quality Assurance: Built-in validation rules, constraint enforcement, and transformation logic ensure analytical accuracy and consistency, reducing downstream data quality issues.

- Business Intelligence Integration: Native integration with BI platforms including Tableau, Power BI, Looker, and self-service analytics tools enables widespread data access without technical expertise requirements.

- Historical Data Management: Time-variant data storage capabilities support trend analysis, comparative reporting, and historical business intelligence requirements essential for strategic decision-making.

- Concurrent User Support: Multi-user architectures with workload isolation ensure consistent performance across diverse analytical workloads and user groups.

How Is AI-Driven Automation Transforming Data Management?

Artificial intelligence and machine learning are revolutionizing data management by automating traditionally manual processes, optimizing resource allocation, and enabling predictive data governance. These technologies transform static data infrastructure into adaptive, self-optimizing systems that reduce operational overhead while improving data quality and accessibility.

Autonomous Data-Pipeline Management

Modern AI systems automatically detect schema changes across source systems and adapt data pipelines accordingly. Predictive pipeline orchestration leverages historical execution patterns to optimize resource allocation, scheduling, and failure-recovery strategies.
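The schema-change detection mentioned above can be illustrated with a deliberately simple, rule-based sketch. It contains no machine learning: it only compares an expected column-to-type mapping against what an incoming record looks like. The functions `infer_schema` and `diff_schema` and the sample record are hypothetical.

```python
def infer_schema(record):
    """Derive a {column: type_name} mapping from one sample record."""
    return {key: type(value).__name__ for key, value in record.items()}


def diff_schema(expected, observed):
    """Compare two {column: type_name} mappings and report the drift."""
    shared = set(expected) & set(observed)
    return {
        "added": sorted(set(observed) - set(expected)),
        "removed": sorted(set(expected) - set(observed)),
        "retyped": sorted(c for c in shared if expected[c] != observed[c]),
    }


# What the pipeline was built for (assumed) versus what just arrived.
expected = {"order_id": "int", "total": "float", "coupon": "str"}
incoming = {"order_id": 1001, "total": "18.50", "channel": "web"}

drift = diff_schema(expected, infer_schema(incoming))
print(drift)
```

A pipeline could use such a report to decide whether to add a column automatically, quarantine the batch, or alert an engineer. Which action is appropriate is a policy choice this sketch does not make.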

AI-driven data-quality monitoring continuously analyzes incoming data streams to detect anomalies, inconsistencies, and quality degradation in real time. This automated approach reduces manual intervention while maintaining data integrity across complex data environments.
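As a rough illustration of automated quality monitoring, the sketch below flags values that deviate strongly from recent history using a z-score. This is a basic statistical stand-in chosen for brevity, not the method any specific product uses. The row counts, the `find_anomalies` name, and the threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev


def find_anomalies(history, incoming, threshold=3.0):
    """Return incoming values more than `threshold` std devs from the mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # No variation in history: anything different is suspicious.
        return [value for value in incoming if value != mu]
    return [value for value in incoming if abs(value - mu) / sigma > threshold]


# Hypothetical daily row counts for one ingested table.
history = [1020, 980, 1005, 995, 1010, 990]
flagged = find_anomalies(history, [1000, 15, 1012])
print(flagged)
```

Here a day that delivers only 15 rows stands out against a history of roughly 1,000 rows per day, while ordinary fluctuation passes unflagged.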

Intelligent Query Optimization and Resource Management

Machine-learning algorithms analyze query-execution patterns to automatically optimize database configurations, index strategies, and resource allocation. Automated cost-optimization features monitor resource utilization across cloud environments and recommend configuration changes to reduce expenses while maintaining performance requirements.

Natural-language interfaces powered by large language models enable business users to interact with data systems using conversational queries rather than SQL or technical interfaces. This democratization of data access reduces the burden on technical teams while expanding analytical capabilities across organizations.

Predictive Data Governance and Compliance

AI-enhanced governance systems automatically classify sensitive data, apply appropriate security policies, and monitor compliance with regulatory requirements such as GDPR and HIPAA. Automated lineage tracking maps data relationships across complex multi-system environments, while synthetic-data generation techniques enable privacy-preserving analytics.

These capabilities ensure that organizations maintain regulatory compliance while enabling data-driven innovation across their operations.

What Are the Key Architectural Differences Between Storage Systems?

The architectural distinctions between data lakes and warehouses reflect fundamental trade-offs between flexibility and performance optimization. Data lakes prioritize storage flexibility and cost efficiency, while warehouses emphasize query performance and data consistency.

How Do Data Lakes and Enterprise Data Warehouses Compare Across Key Dimensions?

The data lake vs EDW comparison reveals fundamental differences in approach, capabilities, and optimal use cases. Understanding these distinctions helps organizations make informed architectural decisions based on their specific requirements and constraints.

Data Storage and Organization Strategies

Data lake approaches implement flat object-storage architectures where data retains its original format and structure. This methodology enables rapid ingestion of diverse data types without preprocessing requirements.

Data warehouse approaches employ dimensional-modeling techniques that optimize query performance and enforce data relationships. These structured environments prioritize analytical performance and data consistency over storage flexibility.

Processing and Transformation Methodologies

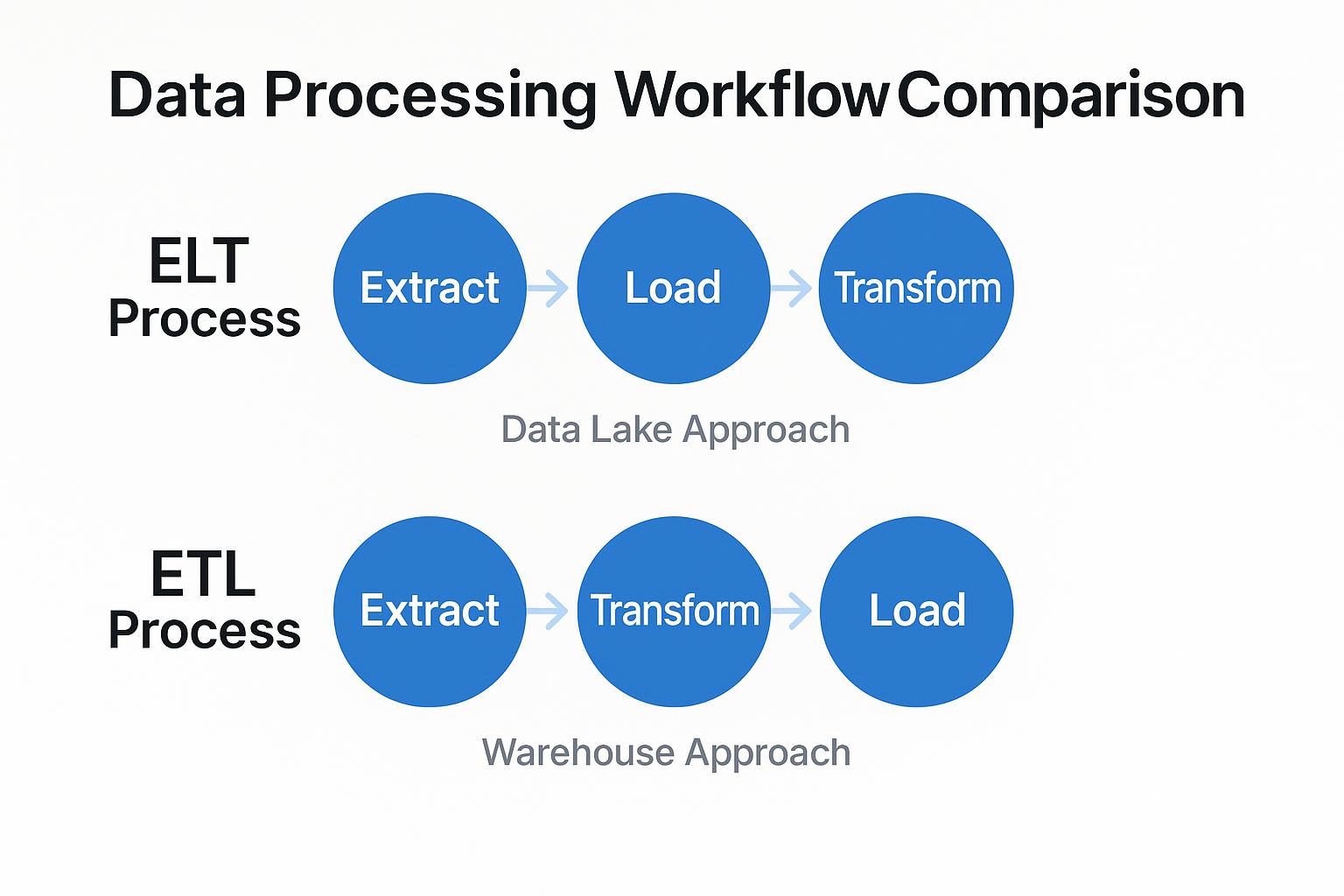

Data lake processing follows Extract, Load, Transform (ELT) patterns where raw data loads immediately, with transformations occurring during analysis. This approach enables faster data availability while deferring processing costs until analysis time.

Data warehouse processing implements Extract, Transform, Load (ETL) workflows where data undergoes cleansing and structuring before storage. This methodology ensures data quality and consistency but requires upfront processing investment.
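The difference between the two patterns is mostly a matter of where the transformation step sits. The following sketch runs the same cleaning function in both orders, again using `sqlite3` purely as a convenient stand-in for real storage. The sample orders, table names, and the `clean` function are hypothetical.

```python
import sqlite3

RAW_ORDERS = [
    ("1001", " 18.50 ", "web"),
    ("1002", "n/a", "store"),   # unparseable total
    ("1003", "42", "WEB"),
]


def clean(order_id, total, channel):
    """Shared transformation: cast types, normalize text; None if invalid."""
    try:
        return int(order_id), float(total), channel.strip().lower()
    except ValueError:
        return None


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE etl_orders (order_id INTEGER, total REAL, channel TEXT)")
db.execute("CREATE TABLE raw_orders (order_id TEXT, total TEXT, channel TEXT)")

# ETL: transform first, then load only the rows that passed.
cleaned = [row for row in (clean(*r) for r in RAW_ORDERS) if row is not None]
db.executemany("INSERT INTO etl_orders VALUES (?, ?, ?)", cleaned)

# ELT: load everything untouched, transform later when the data is queried.
db.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", RAW_ORDERS)
raw_rows = db.execute("SELECT order_id, total, channel FROM raw_orders").fetchall()
elt_rows = [row for row in (clean(*r) for r in raw_rows) if row is not None]

etl_count = db.execute("SELECT COUNT(*) FROM etl_orders").fetchone()[0]
raw_count = db.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
```

Both paths end with the same clean rows, but the ELT path still holds the rejected record in raw form, so a later, more forgiving transformation could recover it. The ETL path has already discarded it.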

Performance Optimization and Query Execution

Data lake performance optimization relies on partitioning strategies, columnar file formats, and distributed computing frameworks. These techniques enable scalable processing across large datasets while maintaining cost efficiency.
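Partitioning is easiest to see in the directory layout itself. The sketch below builds Hive-style `key=value` partition paths and then prunes them for a query that only needs one month. The table name `events` and both helper functions are assumptions made for illustration. Real query engines typically perform this pruning from catalog metadata rather than string matching.

```python
from datetime import date


def partition_path(table, event_date):
    """Build a Hive-style partition prefix for one day of data."""
    return (
        f"{table}/year={event_date.year}"
        f"/month={event_date.month:02d}/day={event_date.day:02d}/"
    )


def prune(paths, year, month):
    """Keep only partitions that can contain rows for the given month."""
    wanted = f"/year={year}/month={month:02d}/"
    return [path for path in paths if wanted in path]


days = [date(2024, 1, 31), date(2024, 2, 1), date(2024, 2, 2), date(2025, 2, 1)]
paths = [partition_path("events", day) for day in days]

# A query filtered to February 2024 only has to scan two of four partitions.
february_2024 = prune(paths, 2024, 2)
for path in february_2024:
    print(path)
```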

Data warehouses can often deliver sub-second responses through indexing, materialized views, and query-optimization engines, although actual latency depends on data volume, query complexity, and concurrency. These capabilities support interactive analytics and near-real-time decision-making.

Scalability and Economic Considerations

Data lake storage costs scale roughly linearly with volume as storage expands horizontally. Organizations can grow their data storage economically while maintaining performance through distributed processing capabilities.

Data warehouse scalability offers elastic scaling in cloud environments but generally involves higher per-unit storage costs. These platforms optimize for query performance rather than storage economics.

What Is Lakehouse Architecture and Why Does It Matter?

Lakehouse architecture represents a convergence of data-lake flexibility with data-warehouse performance, addressing fundamental limitations of traditional storage paradigms. The technical foundation relies on open-table formats such as Apache Iceberg, Delta Lake, and Apache Hudi that enable ACID transactions, schema evolution, and time-travel capabilities directly on data-lake storage.

This unified approach eliminates the complexity of maintaining separate systems for different analytical workloads. Organizations can store raw data economically while providing high-performance analytics capabilities across diverse use cases.

ACID Transactions and Data Reliability

Lakehouse platforms provide ACID guarantees through metadata management and transaction coordination mechanisms, keeping data consistent across concurrent read/write operations. Schema evolution and time-travel functionality, meaning the ability to query a table as it existed at an earlier version, further enhance adaptability and auditability.
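The idea behind versioned, transactional tables can be shown with a toy model. The `SnapshotTable` class below is entirely hypothetical and runs in memory only. It is not how Apache Iceberg, Delta Lake, or Apache Hudi are implemented, but it captures two behaviors in miniature: a commit either succeeds as a whole or is rejected when another writer got there first, and earlier versions stay readable.

```python
class SnapshotTable:
    """Toy table whose state is an append-only list of immutable snapshots."""

    def __init__(self):
        self._snapshots = [()]  # version 0 is the empty table

    @property
    def version(self):
        return len(self._snapshots) - 1

    def read(self, version=None):
        """Return rows as of `version` (latest if omitted)."""
        chosen = self.version if version is None else version
        return list(self._snapshots[chosen])

    def commit(self, base_version, new_rows):
        """Append rows as a new snapshot if nothing changed since base_version."""
        if base_version != self.version:
            raise RuntimeError("conflict: table changed since base_version")
        self._snapshots.append(self._snapshots[-1] + tuple(new_rows))
        return self.version


table = SnapshotTable()
v1 = table.commit(0, [{"id": 1}])
v2 = table.commit(v1, [{"id": 2}])

# A writer that still believes the table is at v1 is rejected, not merged.
try:
    table.commit(v1, [{"id": 3}])
    conflict_detected = False
except RuntimeError:
    conflict_detected = True

latest_rows = table.read()        # current state
rows_at_v1 = table.read(v1)       # "time travel" to an earlier version
```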

These capabilities bring enterprise-grade reliability to data lake environments while maintaining the flexibility that makes lakes attractive for diverse data types and analytical approaches.

Unified Analytics and Processing Capabilities

Lakehouses support batch processing, real-time analytics, machine learning, and business intelligence through unified interfaces and compatibility with tools like Apache Spark, Presto, and Trino. This consolidation reduces operational complexity while expanding analytical capabilities.

The unified approach eliminates data silos and reduces the need for complex data movement between systems, improving both performance and data governance.

Open Standards and Interoperability

Emphasis on open standards prevents vendor lock-in, supports multi-cloud deployments, and enables API-driven integration with existing data-management tools. This approach ensures that organizations retain control over their data architecture while benefiting from ecosystem innovation.

Open table formats facilitate interoperability between different processing engines and analytical tools, providing flexibility in technology choices without sacrificing functionality.

When Should You Choose Each Storage Solution?

Optimal Data-Lake Use Cases

- Exploratory Data Science and Machine Learning: Data lakes excel when organizations need flexible access to raw data for experimental analysis, model training, and algorithm development. The schema-on-read approach enables data scientists to iterate rapidly without predefined analytical requirements.

- IoT and Event Data Collection: High-volume, high-velocity data streams from IoT devices, application logs, and event systems benefit from immediate ingestion capabilities without processing bottlenecks. Data lakes accommodate irregular data patterns and evolving event schemas effectively.

- Long-Term Data Archival and Compliance: Regulatory requirements for data retention combined with infrequent access patterns make data lakes economically attractive for historical data preservation. Organizations can maintain compliance while minimizing storage costs.

- Multi-Format Data Integration: When organizations collect diverse data types, including images, videos, documents, and sensor data alongside traditional structured information, data lakes provide unified storage without format conversion requirements.

Optimal Data-Warehouse Use Cases

- Business Intelligence and Reporting: Organizations requiring consistent, reliable metrics for executive dashboards, financial reporting, and operational monitoring benefit from the data quality assurance and query performance optimization that warehouses provide.

- Regulatory and Financial Reporting: Industries with strict compliance requirements, including healthcare, finance, and government, benefit from the data validation and audit trail capabilities inherent in warehouse architectures.

- Self-Service Analytics for Business Users: When non-technical stakeholders need direct data access through familiar SQL interfaces and BI tools, warehouses provide the performance and usability characteristics required for widespread adoption.

- Transactional Analytics and Real-Time Decisions: Applications requiring sub-second query response times for customer-facing analytics, fraud detection, or operational automation benefit from warehouse optimization strategies.

What Are the Cost Considerations and Total Ownership Economics?

Cost optimization strategies vary significantly between architectures. Data lakes optimize through storage tiering, with frequently accessed data in high-performance tiers and archived data in low-cost cold storage. Compute costs scale with processing requirements, enabling organizations to minimize expenses during low-activity periods.

Data warehouses optimize through query performance improvements that reduce compute time requirements. While storage costs remain higher, efficient query execution can offset these expenses through reduced processing duration and improved user productivity.

Hybrid approaches often deliver optimal cost structures by leveraging each architecture's economic advantages. Organizations can store raw data in lakes for cost-effective retention and processed data in warehouses for high-performance analytics.
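A back-of-the-envelope calculation shows why placement matters. Every number below is a made-up placeholder, not a quote from any vendor, and the sketch covers storage only, ignoring compute, egress, and request charges. The tier names and the `monthly_cost` helper are likewise assumptions.

```python
# Hypothetical storage prices in USD per GB per month.
PRICE_PER_GB_MONTH = {
    "warehouse": 0.040,
    "lake_standard": 0.023,
    "lake_archive": 0.004,
}


def monthly_cost(placement_gb):
    """Sum storage cost for a {tier: gigabytes} placement."""
    return sum(PRICE_PER_GB_MONTH[tier] * gb for tier, gb in placement_gb.items())


# 100 TB kept entirely in warehouse storage...
all_in_warehouse = monthly_cost({"warehouse": 100_000})

# ...versus the same 100 TB split by how often it is used.
hybrid = monthly_cost(
    {"warehouse": 5_000, "lake_standard": 25_000, "lake_archive": 70_000}
)

print(f"all in warehouse: ${all_in_warehouse:,.2f} per month")
print(f"hybrid placement: ${hybrid:,.2f} per month")
```

Substituting real prices and adding compute costs would change the totals, and could change the conclusion for workloads where warehouse query efficiency dominates, as the preceding paragraphs note.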

Who Uses These Systems and What Skills Are Required?

The skill requirements reflect the different approaches each platform takes to data management and analysis. Data lakes require more technical expertise for setup and optimization, while warehouses prioritize accessibility for business users.


How Do Hybrid Approaches Combine the Best of All Worlds?

Modern data architectures increasingly adopt hybrid strategies through multi-tier storage, unified analytics platforms, and cross-system orchestration to balance cost, performance, and flexibility. These approaches enable organizations to leverage the economic advantages of data lakes while providing the performance capabilities of warehouses.

Multi-Tier Storage Strategies

Organizations implement sophisticated data lifecycle management policies that automatically move information between storage tiers based on access frequency, data age, and business value. Hot data requiring frequent access resides in high-performance warehouse storage, while warm data transitions to lakehouse platforms for occasional analytics, and cold data archives in cost-effective lake storage.

Automated tiering policies reduce manual data management overhead while optimizing costs across the entire data lifecycle. Machine learning algorithms analyze access patterns to predict optimal placement strategies and automatically execute data movement between tiers.
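A tiering policy can be as simple as a rule on last-access age. The sketch below is a fixed-threshold stand-in for the learned placement strategies described above. The 30-day and 180-day cutoffs, the dataset names, and the `choose_tier` function are all illustrative assumptions.

```python
from datetime import date


def choose_tier(last_accessed, today, hot_days=30, warm_days=180):
    """Classify a dataset as hot, warm, or cold by days since last access."""
    age_days = (today - last_accessed).days
    if age_days <= hot_days:
        return "hot"
    if age_days <= warm_days:
        return "warm"
    return "cold"


today = date(2024, 7, 1)
last_access = {
    "daily_sales": date(2024, 6, 20),      # 11 days ago
    "q1_campaigns": date(2024, 3, 1),      # 122 days ago
    "legacy_logs_2022": date(2023, 1, 1),  # well over 180 days ago
}

placement = {name: choose_tier(seen, today) for name, seen in last_access.items()}
print(placement)
```

A scheduler could run such a rule periodically and move datasets whose tier has changed. A predictive variant would replace the fixed thresholds with a model of expected future access.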

Unified Analytics Platforms

Leading cloud providers offer integrated platforms that combine lake and warehouse capabilities within unified management interfaces. Solutions like Azure Synapse Analytics, Google Cloud's BigQuery and Dataflow integration, and AWS's Lake Formation with Redshift Spectrum enable seamless analytics across diverse storage systems.

These platforms abstract the complexity of multi-system architectures while preserving the performance and cost advantages of specialized storage systems. Users access data through consistent interfaces regardless of underlying storage locations.

Cross-System Integration and Orchestration

Modern data orchestration platforms coordinate workflows across lakes, warehouses, and processing systems to deliver comprehensive analytical capabilities. These systems manage data movement, transformation scheduling, and dependency coordination across complex multi-platform environments.

API-driven integration strategies enable custom workflow development while maintaining loose coupling between system components. Organizations can evolve individual components without disrupting entire analytical ecosystems.

How Do You Choose the Right Data Storage Solution for Your Organization?

- Data Characteristics Analysis: Evaluate data volume, variety, velocity, and veracity requirements across current and projected workloads. Consider structured versus unstructured data ratios, real-time processing requirements, and data quality expectations.

- User Base and Access Patterns: Analyze who needs data access, their technical capabilities, and their specific use cases. Consider self-service requirements, concurrent user loads, and performance expectations for different user groups.

- Compliance and Governance Requirements: Assess regulatory requirements, data sovereignty constraints, audit capabilities, and security policies that influence architecture decisions. Consider data retention requirements and privacy protection needs.

- Technology Integration Requirements: Evaluate existing tool investments, preferred vendor ecosystems, and integration complexity tolerance. Consider API availability, standards compliance, and migration requirements.

Conclusion

The choice between data lakes, warehouses, and lakehouse architectures should align with specific organizational needs and data characteristics. Modern enterprises increasingly adopt hybrid approaches that leverage each system's strengths while mitigating limitations. Integration platforms enable seamless data movement across storage paradigms without sacrificing performance or governance. Ultimately, successful data architecture balances flexibility, performance, cost, and compliance requirements to create a sustainable competitive advantage.

Frequently Asked Questions

Can a data warehouse handle data from multiple sources and types?

Yes. Data warehouses can consolidate data from numerous sources once it has been processed, transformed, and validated into structured form. They are less flexible than data lakes, however, with unstructured formats (e.g., images, audio).

Can a data warehouse be used by a single department within an organization?

Absolutely. A department (marketing, finance, etc.) can deploy a data warehouse—or a departmental data mart—to store and analyze datasets specific to its needs, enabling reliable, consistent reporting.

How do data lakes support analytics on real-time data from multiple sources?

Data lakes ingest raw data—including real-time feeds—without waiting for transformation. This lets organizations analyze current information quickly, supporting faster, up-to-date decision-making.
