Can ETL Tools Run Inside Kubernetes?

Kubernetes has become the standard platform for running everything from applications to internal services, but ETL often sits outside that model. Many teams still run pipelines on separate VMs with their own scaling patterns, upgrade cycles, and monitoring workflows. This creates operational friction, higher overhead, and slow incident response.

Modern ETL platforms can run inside Kubernetes when they are built for containerized environments. Tools designed for distributed execution and stateless operation fit naturally into cluster scheduling, autoscaling, and failover. Older, VM-centric systems can run in containers but rarely benefit from Kubernetes and often require heavy workarounds.

This article explains how ETL behaves in Kubernetes, what challenges teams face, and what separates Kubernetes-native ETL tools from legacy platforms.

TL;DR: Can ETL Run Inside Kubernetes?

- Yes, but only modern, cloud-native ETL tools run well inside Kubernetes. Legacy ETL platforms struggle with scaling, state management, and deployment patterns.

- Teams want ETL on K8s for unified infrastructure, native autoscaling, shared observability, and automatic failover.

- Challenges include stateful workload management, resource contention, network/security complexities, and operational overhead.

- Kubernetes-native ETL tools offer Helm charts, stateless execution, distributed workers, and Prometheus/Loki integration.

- Legacy ETL containers usually provide no real horizontal scaling and require heavy operational work.

- Airbyte is designed for K8s with official Helm charts, distributed pods, Temporal orchestration, RBAC, external secrets, and 600+ connectors.

Why Do Teams Want ETL Running in Kubernetes?

Before diving into the technical details, it's worth understanding what drives teams to consolidate their data infrastructure onto Kubernetes in the first place. The motivations go beyond preference for a single tool.

1. Unified Infrastructure Operations

Running ETL on the same infrastructure as your applications means one control plane for all workloads. Your existing kubectl commands, GitOps workflows, and deployment pipelines extend to data integration with little or no modification.

Teams already have Prometheus, Grafana, and alerting configured for their Kubernetes clusters. Adding ETL to that same monitoring surface eliminates the need for separate observability tooling and reduces context-switching during incident response.

2. Native Scaling and Resource Management

Kubernetes supports horizontal pod autoscaling natively through the HorizontalPodAutoscaler, given a metrics source such as metrics-server. When sync workloads spike, the cluster can add worker pods without manual intervention. When demand drops, those resources return to the shared pool.

Resource quotas and limits let you control exactly how much CPU and memory your data pipelines can consume. This prevents runaway jobs from impacting other workloads and makes capacity planning predictable.
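
As a rough illustration of the quota idea above, the sketch below builds a `ResourceQuota` manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f -` accepts alongside YAML. The namespace `etl`, the object name `etl-quota`, and the specific limits are assumptions chosen for the example, not recommended values.

```python
import json


def etl_resource_quota(namespace: str, cpu_limit: str, memory_limit: str, max_pods: int) -> dict:
    """Build a ResourceQuota that caps the total CPU, memory, and pod count of one namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        # "etl-quota" is a hypothetical name used only for this example.
        "metadata": {"name": "etl-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "limits.cpu": cpu_limit,        # sum of CPU limits across all pods
                "limits.memory": memory_limit,  # sum of memory limits across all pods
                "pods": str(max_pods),          # maximum number of pods in the namespace
            }
        },
    }


if __name__ == "__main__":
    # Example values only; size these from your own sync volume.
    print(json.dumps(etl_resource_quota("etl", "16", "64Gi", 20), indent=2))
```

Once a quota on `limits.cpu` or `limits.memory` is active, pods in that namespace must declare limits (or inherit defaults from a `LimitRange`), otherwise the API server rejects them.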

3. High Availability and Disaster Recovery

Pods are rescheduled automatically when a node fails. If a node running your ETL workers goes down, Kubernetes recreates those pods on healthy nodes without operator intervention, provided a controller such as a Deployment manages them.

Multi-zone deployments use the same patterns your applications already follow. Rollback strategies work identically to application deployments. The operational knowledge your team has built transfers directly.

What Challenges Come With Running ETL in Kubernetes?

Running ETL in Kubernetes isn't trivial. Data workloads have characteristics that differ from typical web services, and ignoring those differences leads to reliability problems.

What Should You Look For in a Kubernetes-Native ETL Tool?

Not every tool that can run in a container is truly Kubernetes-native. When evaluating options, these criteria separate tools designed for Kubernetes from those that merely tolerate it.

1. First-Class Helm Chart Support

Official, maintained Helm charts signal that the vendor treats Kubernetes as a primary deployment target. Community-maintained charts often lag behind releases and may miss critical configuration options.

Look for configurable resource requests and limits, support for custom values files, and documentation covering environment-specific overrides. The chart should integrate with standard Kubernetes patterns, not work around them.

2. Stateless Architecture Where Possible

Connector execution that doesn't require persistent local state simplifies operations significantly. External state management using databases rather than local files means pods can be rescheduled freely.

Clean pod termination without data loss requires the tool to checkpoint state externally before shutdown. This allows Kubernetes to manage the pod lifecycle without special handling.
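
The checkpointing pattern described above can be sketched roughly as follows. This is a minimal illustration, not how any particular ETL tool implements it: SQLite stands in for the external state database, and the table name `checkpoints`, the function names, and the batch size are all assumptions made for the example.

```python
import signal
import sqlite3
import threading

# Set when the pod is asked to shut down; the sync loop checks it between batches.
stop_requested = threading.Event()


def install_sigterm_handler() -> None:
    """Kubernetes sends SIGTERM before killing a pod. Call this from the main thread."""
    signal.signal(signal.SIGTERM, lambda signum, frame: stop_requested.set())


def init_store(db: sqlite3.Connection) -> None:
    db.execute("CREATE TABLE IF NOT EXISTS checkpoints (stream TEXT PRIMARY KEY, pos INTEGER NOT NULL)")
    db.commit()


def load_cursor(db: sqlite3.Connection, stream: str) -> int:
    row = db.execute("SELECT pos FROM checkpoints WHERE stream = ?", (stream,)).fetchone()
    return row[0] if row else 0


def save_cursor(db: sqlite3.Connection, stream: str, pos: int) -> None:
    db.execute("INSERT OR REPLACE INTO checkpoints (stream, pos) VALUES (?, ?)", (stream, pos))
    db.commit()


def sync(db: sqlite3.Connection, stream: str, records: list, write, batch_size: int = 2) -> int:
    """Resume from the last saved position and checkpoint after every batch."""
    pos = load_cursor(db, stream)
    while pos < len(records) and not stop_requested.is_set():
        batch = records[pos:pos + batch_size]
        write(batch)                  # deliver the batch to the destination
        pos += len(batch)
        save_cursor(db, stream, pos)  # persist progress outside the pod
    return pos
```

Because the position is saved after each write, a pod that dies between the write and the save will resend that batch on restart. The sketch therefore gives at-least-once delivery, and the destination needs to tolerate duplicates.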

3. Horizontal Scaling Model

The ability to run multiple worker pods concurrently matters for throughput. Workload distribution across available nodes prevents single-pod bottlenecks from limiting sync performance.

Single-node architectures that can't distribute work don't benefit from Kubernetes scaling capabilities. You end up with a container that happens to run in Kubernetes rather than a truly distributed system.

4. Kubernetes-Native Observability

Prometheus metrics endpoints let you monitor ETL workloads alongside everything else in your cluster. Structured logging compatible with Fluentd or Loki integrates with your existing log aggregation.

Health check endpoints for liveness and readiness probes allow Kubernetes to make intelligent scheduling decisions. Without these, the cluster can't distinguish between a pod that's processing and one that's stuck.
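
One way a worker could expose the difference between "processing" and "stuck" is sketched below, using only the Python standard library. The paths `/healthz` and `/readyz`, port 8080, and the 60-second stall threshold are assumptions for illustration. Kubernetes treats any HTTP status from 200 to 399 as a passing probe, so the handler returns 200 for healthy and 503 otherwise.

```python
import time
from http.server import BaseHTTPRequestHandler, HTTPServer


class WorkerHealth:
    """Tracks whether the worker is ready for work and still making progress."""

    def __init__(self, stall_after_seconds: float = 60.0):
        self.stall_after_seconds = stall_after_seconds
        self.last_progress = time.monotonic()
        self.ready = False  # flip to True once connections and config are loaded

    def record_progress(self) -> None:
        # Call this from the sync loop each time a batch completes.
        self.last_progress = time.monotonic()

    def liveness_status(self, now=None) -> int:
        now = time.monotonic() if now is None else now
        stalled = now - self.last_progress >= self.stall_after_seconds
        return 503 if stalled else 200

    def readiness_status(self) -> int:
        return 200 if self.ready else 503


health = WorkerHealth()


class ProbeHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/healthz":
            status = health.liveness_status()
        elif self.path == "/readyz":
            status = health.readiness_status()
        else:
            status = 404
        self.send_response(status)
        self.end_headers()


if __name__ == "__main__":
    health.ready = True
    HTTPServer(("0.0.0.0", 8080), ProbeHandler).serve_forever()
```

A failing liveness probe causes the container to be restarted, while a failing readiness probe only removes the pod from Service endpoints, so the stall threshold should be longer than the slowest legitimate batch.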

How Do Legacy ETL Platforms Handle Kubernetes?

Legacy ETL platforms were not designed for containerized environments. Understanding their limitations helps explain why simply containerizing an existing tool does not deliver the benefits of a Kubernetes-native architecture.

These issues typically show up in three specific areas:

- Retrofitted containerization: Many legacy platforms wrap VM-based architectures in Docker images. This creates containers that behave like full virtual machines and run heavy application stacks for each connector instead of lightweight processes. Upgrade paths often require full cluster redeployments because the architecture does not support mixed versions or graceful transitions.

- Limited scaling flexibility: Single-node execution models prevent horizontal scaling because adding pods does not increase throughput when work can only run on one instance. Scaling typically requires infrastructure changes instead of configuration updates. This forces teams back into manual capacity planning rather than allowing Kubernetes to allocate resources dynamically.

- Operational overhead: Lifecycle management often depends on custom operators or controllers that teams must maintain themselves. Proprietary monitoring tools do not integrate with existing observability stacks, which increases context switching during troubleshooting. Backup and restore procedures often require special handling that does not align with standard Kubernetes patterns.

How Does Airbyte Deploy on Kubernetes?

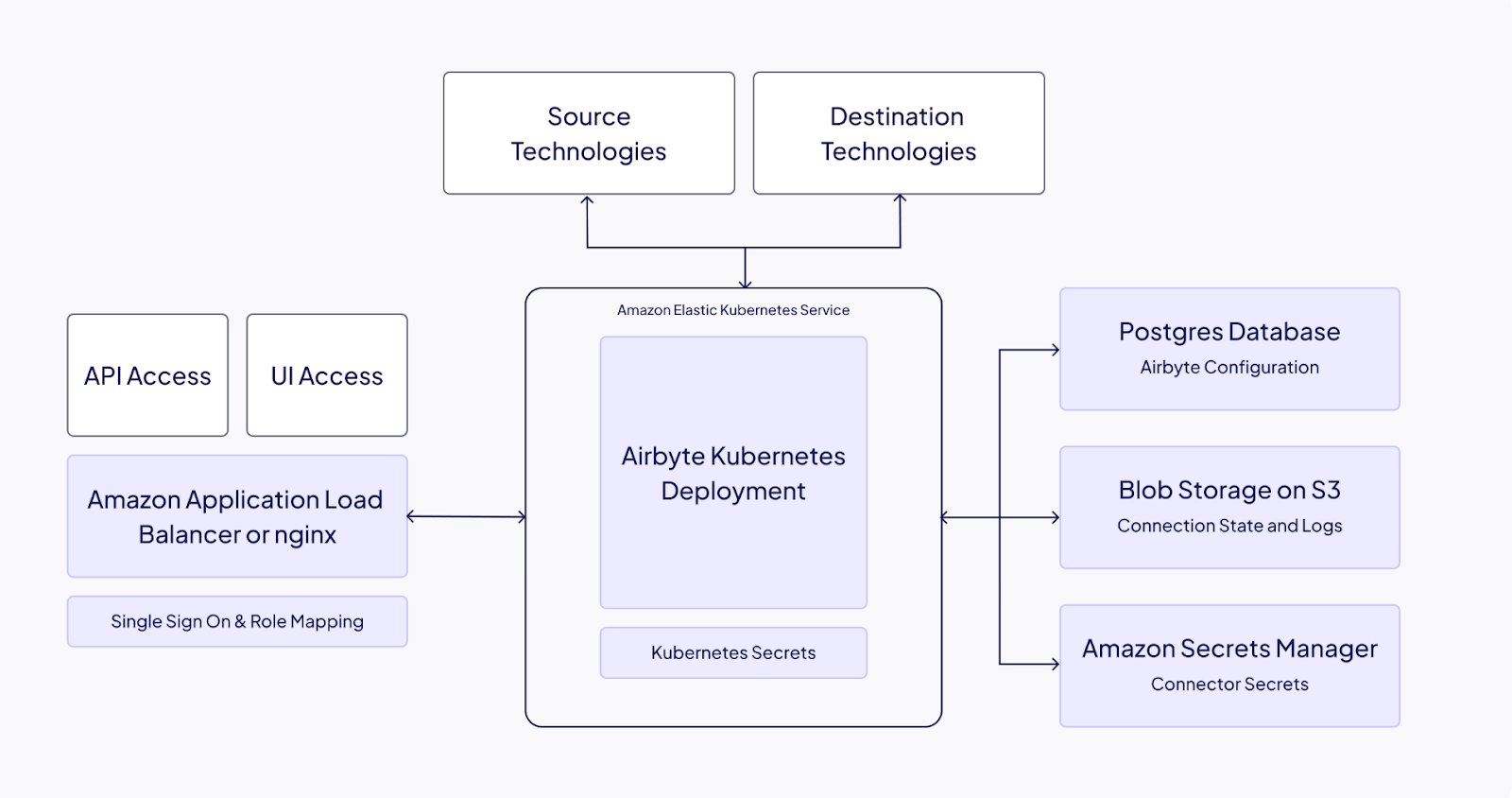

Airbyte's architecture was designed for containerized environments from the start. The platform distributes work across multiple components, each running in its own pod with appropriate resource allocation.

1. Official Helm Chart Deployment

Airbyte maintains production-ready Helm charts as a primary deployment method. The charts support configurations ranging from single-node development setups to multi-node production deployments.

Configuration options include custom namespaces, resource limits, node selectors, and tolerations. The charts integrate with standard Kubernetes patterns rather than requiring workarounds.

2. Distributed Architecture

Separate pods handle different responsibilities: scheduler, server, workers, and connectors. This separation allows independent scaling of each component based on actual workload demands.

Connector pods spin up on demand when syncs start and terminate after completion. The Temporal workflow engine orchestrates job execution, providing reliable handling of long-running operations and automatic retries on failure.

3. Flexible Deployment Options

Self-Managed Enterprise provides complete infrastructure control for organizations requiring data sovereignty. You run everything in your cluster, with data never leaving your infrastructure.

Hybrid architecture options combine a cloud control plane with customer-controlled data planes. This model provides managed upgrades and configuration while keeping actual data processing within your network boundaries. Air-gapped deployment support addresses regulated environments that permit no external connectivity.

4. Enterprise Governance in K8s Environments

RBAC integration works with existing Kubernetes authentication mechanisms. Audit logging tracks all activity for compliance requirements, with logs exportable to your existing log aggregation systems.

External secrets management integrates with HashiCorp Vault, AWS Secrets Manager, and other standard solutions. Credentials don't need to be stored in Kubernetes secrets if your security policy prohibits it.

The same 600+ connectors available in Airbyte Cloud work identically in self-managed Kubernetes deployments. There's no feature split between hosted and self-managed versions.

What Does a Production Kubernetes Deployment Look Like?

Moving from documentation to production requires attention to specific configuration details. These recommendations reflect what works in actual customer deployments.

1. Cluster Requirements

Production deployments typically require:

- Worker nodes: Minimum 4 CPU cores and 16GB RAM per node, with additional capacity for connector pods

- Storage: Persistent volumes for state database and log storage, typically 100GB+ depending on sync volume

- Network: Egress access to data sources with network policies permitting connector traffic
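
To make the network requirement concrete, here is a hedged sketch of an egress `NetworkPolicy` built as a Python dictionary. The policy name, the pod label, the CIDR, and the port are placeholders invented for the example. Substitute the labels your connector pods actually carry and the addresses of your real sources.

```python
import json


def connector_egress_policy(namespace: str, pod_labels: dict, source_cidr: str, port: int) -> dict:
    """Allow selected pods to reach one data source, plus DNS, and nothing else outbound."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "connector-egress", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": pod_labels},
            "policyTypes": ["Egress"],
            "egress": [
                # Traffic to the data source itself.
                {
                    "to": [{"ipBlock": {"cidr": source_cidr}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                },
                # DNS lookups; without this rule, hostname resolution is blocked too.
                {
                    "ports": [
                        {"protocol": "UDP", "port": 53},
                        {"protocol": "TCP", "port": 53},
                    ]
                },
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical label and address for a PostgreSQL source.
    policy = connector_egress_policy("etl", {"role": "connector"}, "10.20.0.0/16", 5432)
    print(json.dumps(policy, indent=2))
```

Network policies only take effect when the cluster's network plugin enforces them, so it is worth confirming that before relying on a policy like this.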

2. High Availability Configuration

For production reliability:

- Multi-replica deployments: Run multiple instances of the scheduler and server components

- External database: Replace the bundled PostgreSQL with a managed service such as Amazon RDS or Cloud SQL for better reliability

- Load balancer: Configure ingress for web UI access with SSL termination

- Pod disruption budgets: Prevent cluster operations from taking down all replicas simultaneously
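
The pod disruption budget item can be illustrated with a small sketch. The first function builds a `policy/v1` `PodDisruptionBudget` manifest; the second shows the arithmetic Kubernetes applies for an integer `minAvailable`. The name `server-pdb` and the label `app: server` are assumptions for the example, not names taken from any chart.

```python
import json


def pod_disruption_budget(name: str, namespace: str, match_labels: dict, min_available: int) -> dict:
    """Build a PodDisruptionBudget that keeps at least min_available matching pods running."""
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "minAvailable": min_available,
            "selector": {"matchLabels": match_labels},
        },
    }


def allowed_disruptions(healthy_pods: int, min_available: int) -> int:
    """Number of pods a voluntary disruption (such as a node drain) may evict right now."""
    return max(0, healthy_pods - min_available)


if __name__ == "__main__":
    pdb = pod_disruption_budget("server-pdb", "etl", {"app": "server"}, min_available=1)
    print(json.dumps(pdb, indent=2))
    print(allowed_disruptions(healthy_pods=2, min_available=1))
```

With two replicas and `minAvailable: 1`, a node drain can evict one pod at a time. With a single replica the same budget blocks the drain entirely, which is one reason the multi-replica recommendation and the budget belong together.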

3. Scaling Considerations

Worker pod scaling determines sync throughput:

- Concurrent syncs: Each worker handles approximately 3 concurrent syncs, so scale workers based on parallelism needs

- Resource quotas: Set limits to prevent individual syncs from consuming excessive cluster resources

- Autoscaling thresholds: Configure the Horizontal Pod Autoscaler based on queue depth or resource utilization
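
The sizing arithmetic behind these bullets can be sketched as follows. The first function applies the "approximately 3 concurrent syncs per worker" figure quoted above. The second reproduces the core formula the Horizontal Pod Autoscaler uses, leaving out its tolerance band and min/max replica bounds for brevity. The function names are made up for this example.

```python
import math

SYNCS_PER_WORKER = 3  # approximate figure quoted in this article; verify for your deployment


def workers_needed(concurrent_syncs: int, syncs_per_worker: int = SYNCS_PER_WORKER) -> int:
    """Workers required to run the given number of syncs in parallel (at least one)."""
    return max(1, math.ceil(concurrent_syncs / syncs_per_worker))


def hpa_desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Core HPA formula: ceil(currentReplicas * currentMetric / targetMetric)."""
    return math.ceil(current_replicas * current_metric / target_metric)


if __name__ == "__main__":
    print(workers_needed(10))               # 10 parallel syncs at 3 per worker
    print(hpa_desired_replicas(4, 90, 60))  # 4 workers at 90% CPU against a 60% target
```

For example, 10 parallel syncs round up to 4 workers, and 4 workers averaging 90% CPU against a 60% target scale to 6. Scaling on queue depth works the same way, but needs a custom or external metric exposed to the autoscaler.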

When Should You Self-Manage ETL vs. Use a Managed Service?

The decision between self-managed Kubernetes deployment and managed services depends on your operational context and compliance requirements.

When Self-Managed Kubernetes Makes Sense

- Data sovereignty requirements: Regulations mandate that data processing stays within your infrastructure

- Existing K8s expertise: Your team already operates Kubernetes clusters and has capacity for additional workloads

- Air-gapped environments: Network isolation requirements prohibit connections to external services

- Compliance frameworks: HIPAA, FedRAMP, ITAR, or similar requirements demand infrastructure control

- Cost optimization: High data volumes make capacity-based self-managed pricing more economical than per-row alternatives

When a Managed Service Makes Sense

- Limited ops capacity: Your team lacks dedicated Kubernetes operations expertise or bandwidth

- Speed priority: Rapid deployment matters more than infrastructure control

- Variable workloads: Unpredictable sync volumes benefit from managed autoscaling

- No data residency requirements: Your compliance framework permits cloud-based data processing

Is Kubernetes the Right Place for Your ETL Workloads?

Modern ETL tools run natively inside Kubernetes when they're architected for it rather than retrofitted into containers. For teams already operating Kubernetes clusters, deploying data integration alongside application workloads reduces infrastructure sprawl and builds on existing operational investment.

Ready to run data pipelines in your Kubernetes cluster?

Airbyte Self-Managed Enterprise deploys via Helm with the same 600+ connectors as our cloud platform. Complete infrastructure control, enterprise governance features, and no feature trade-offs between deployment models. Talk to Sales to discuss your Kubernetes deployment architecture.

Frequently Asked Questions

Can any ETL tool run inside Kubernetes?

Not effectively. While nearly any ETL tool can be containerized, only Kubernetes-native tools support proper horizontal scaling, externalized state, health checks, and operational patterns that align with K8s. Legacy ETL tools often break under real workloads.

Do ETL pipelines require stateful storage in Kubernetes?

Yes. Checkpoints, cursors, and job metadata must persist across pod restarts. Kubernetes-native ETL tools externalize this state to databases, while older tools store it locally and risk losing progress when pods reschedule.

Will running ETL in Kubernetes impact application workloads?

It can if not configured correctly. ETL workloads are CPU- and memory-heavy, and without resource limits, quotas, and scheduling rules, they may starve other pods. Kubernetes-native ETL platforms provide clear resource boundaries to prevent this.

Why use Airbyte instead of running a legacy ETL tool in Kubernetes?

Airbyte was architected for Kubernetes from day one: official Helm charts, distributed worker pods, Prometheus metrics, external secrets integration, and 600+ connectors. Legacy ETL tools typically lack native scaling, require complex workarounds, and don’t integrate with standard K8s operations.
