What are Redshift JSON Functions? 7 Essential Functions

Summarize this article with:

✨ AI Generated Summary

JSON buried in event logs, webhooks, and telemetry turns simple analyses into hours of string parsing. Without native JSON handling, you're stuck running Python preprocessors, maintaining Airflow DAGs, or accepting sluggish queries that burn cluster credits. Teams have measured 30-plus-minute slowdowns when queries repeatedly parse VARCHAR-stored JSON at scale.

Redshift ships with seven JSON functions that handle 90 percent of extraction, validation, and type-conversion tasks directly in SQL. They work on both legacy VARCHAR columns and the newer SUPER data type, which parses JSON once at load time for dramatically faster reads.

Master these functions now to skip the next late-night hotfix and focus on analysis instead of cleanup.

TL;DR: Redshift JSON Functions at a Glance

- Redshift provides seven native JSON functions that let you extract, validate, and convert JSON directly in SQL without external preprocessing.

- Classic JSON functions work on VARCHAR data but re-parse JSON on every query, making them best for lightweight or legacy use cases.

- The SUPER data type parses JSON once at load time and supports PartiQL dot notation for faster, cleaner queries on nested data.

- For high-volume or frequently queried JSON, converting to SUPER delivers 10x+ performance gains and simpler SQL.

What Are Redshift JSON Functions?

Redshift includes native SQL functions that parse, extract, and manipulate JSON directly in your queries without Python scrapers or regex required.

These functions fall into two camps:

- Classic JSON functions operate on VARCHAR or CHAR columns where JSON is stored as plain text

- SUPER functions work with the SUPER data type, which stores parsed JSON once at ingestion and enables PartiQL syntax for array and object navigation

Because SUPER values are parsed only once, queries run up to 31× faster than re-parsing text JSON on every scan.

Let's walk through the seven functions that cover extraction, validation, arrays, and type conversion.

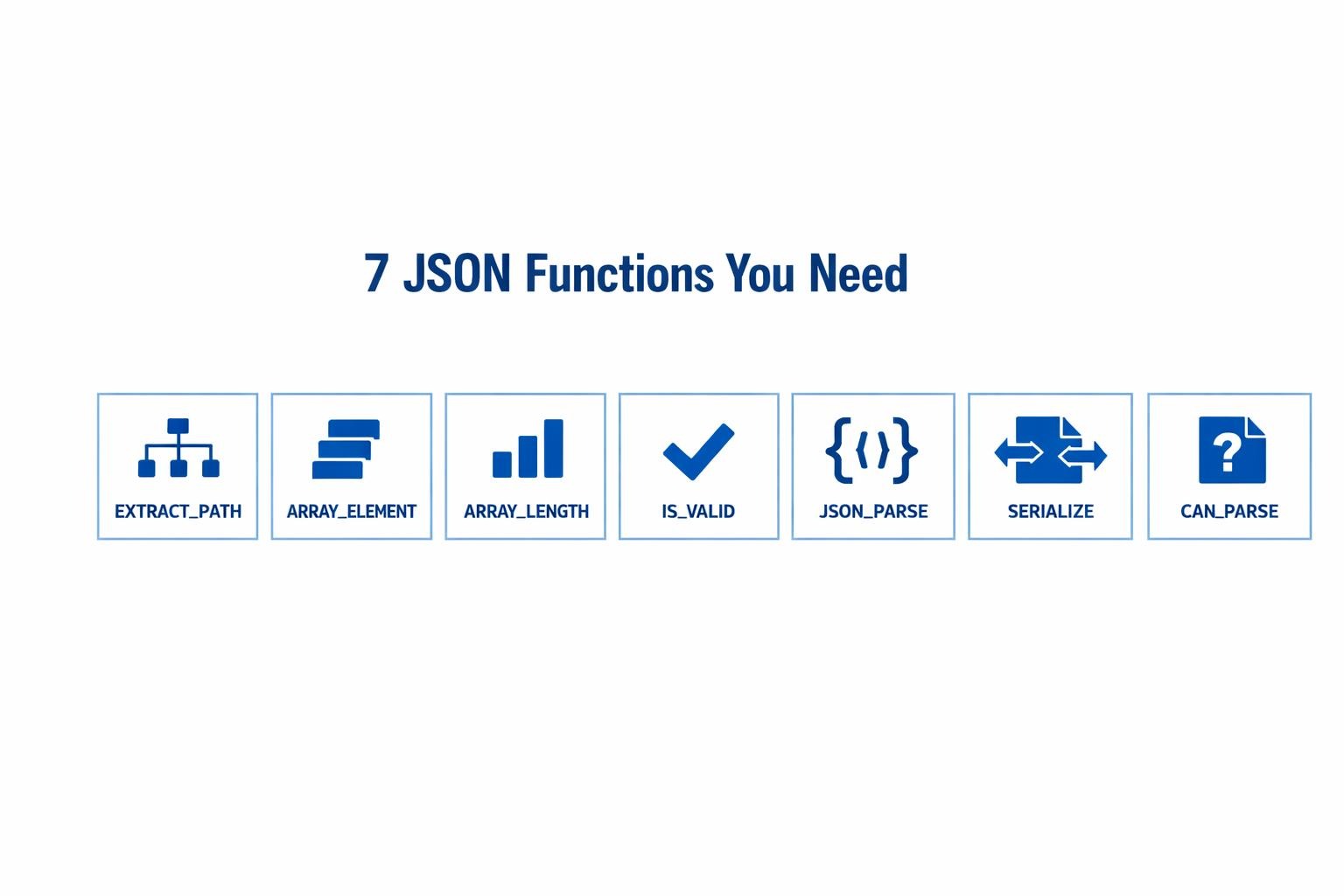

Which 7 JSON Functions Should You Know?

These seven functions eliminate manual string parsing for nested payloads, covering extraction, validation, array handling, and type conversion. The first four work on JSON stored as VARCHAR, while the last three unlock the higher-performance SUPER data type, which parses once at load time and then behaves like any other columnar value, delivering 10x+ faster repeat queries.

1. JSON_EXTRACT_PATH_TEXT

Pulls a scalar value from a JSON object or array given a path of up to five keys.

SELECT JSON_EXTRACT_PATH_TEXT(

'{"user": {"profile": {"name": "Alice", "id": 123}}}',

'user', 'profile', 'name'

) AS user_name; -- returns AliceWhen you only need a few fields from an API response or log line, this keeps your query short and predictable.

Missing keys or invalid JSON return NULL if you pass a final true flag, avoiding runtime errors.

2. JSON_EXTRACT_ARRAY_ELEMENT_TEXT

Returns one element from a JSON array by zero-based index.

SELECT JSON_EXTRACT_ARRAY_ELEMENT_TEXT(

'["postgres", "mysql", "mongodb"]',

0

); -- returns postgresUse it to grab the latest event in an activity feed or the first error in an errors array. If the index is out of bounds, you get NULL instead of an exception.

3. JSON_ARRAY_LENGTH

Counts the elements in a top-level JSON array so you can filter or aggregate without parsing the values themselves.

SELECT

session_id,

JSON_ARRAY_LENGTH(events) AS event_count

FROM clickstream

WHERE JSON_ARRAY_LENGTH(events) > 5;This works for quick quality checks ("did we capture at least one event per session?") or for computing metrics like average cart size.

4. IS_VALID_JSON

Returns true when a string is valid JSON, false otherwise. Pair it with conditional logic to keep bad payloads from crashing downstream jobs.

SELECT raw_payload

FROM ingestion_queue

WHERE IS_VALID_JSON(raw_payload) = false;Running this gate during ETL protects analytic queries from mysterious parsing errors later on.

5. JSON_PARSE

Converts a JSON string to the native SUPER type, enabling dot-notation queries that read like SQL on relational data.

CREATE TABLE events (id INT, data SUPER);

INSERT INTO events

VALUES (1, JSON_PARSE('{"connector":"salesforce","records":1500,"status":"synced"}'));

SELECT data.connector, data.records

FROM events; -- returns salesforce, 1500The parse happens once at insert time, so subsequent queries avoid the heavy CPU cost of re-parsing text.

6. JSON_SERIALIZE

Turns a SUPER value back into a JSON string. That's useful when you need to export data to a downstream system that expects plain text.

SELECT JSON_SERIALIZE(data) AS json_out

FROM events

WHERE id = 1;You can even mix paradigms: serialize the SUPER, then feed it into JSON_EXTRACT_PATH_TEXT if you're bridging old and new code paths.

7. CAN_JSON_PARSE

Checks whether a string can be parsed into SUPER without throwing an error, making it safer than attempting JSON_PARSE directly.

UPDATE raw_events

SET parsed = JSON_PARSE(payload)

WHERE CAN_JSON_PARSE(payload);Use it to filter out garbage rows before they hit your analytics tables. Unlike IS_VALID_JSON, this function focuses on the exact rules the SUPER parser enforces.

These functions let you extract just the fields you need, validate inputs early, and graduate to SUPER when performance matters, all without leaving SQL.

How Do You Handle Nested JSON in Redshift?

Quick answer: Combine JSON_EXTRACT_PATH_TEXT with multiple path arguments for VARCHAR JSON, or use the SUPER type with PartiQL dot notation for cleaner syntax on deeply nested structures.

Nested Extraction with Classic Functions

Text-based functions work with VARCHAR JSON columns up to five levels deep. JSON_EXTRACT_PATH_TEXT accepts each hierarchy level as a separate argument:

SELECT JSON_EXTRACT_PATH_TEXT(

'{"sync": {"source": {"type": "postgres", "schema": "public", "tables": ["users", "orders"]}}}',

'sync', 'source', 'type'

) AS source_type;

-- Returns: postgresThis approach works on any VARCHAR column without schema changes, making it ideal for legacy tables or simple extractions. The syntax becomes verbose beyond three levels, and every query re-parses the entire string, stretching CPU usage and query times on large tables.

Nested Access with SUPER Type

PartiQL dot notation provides more intuitive querying once you convert JSON to SUPER at ingest with JSON_PARSE:

-- table events(event_id INT, event_data SUPER)

SELECT

event_data.sync.source.type AS source_type,

event_data.sync.status AS sync_status

FROM events

WHERE event_data.sync.status = 'completed';Redshift parses the JSON once during load, then applies zone-map pruning and skips irrelevant blocks on disk during queries. This delivers 10x+ performance gains on repeated queries compared to VARCHAR parsing. Dot notation handles nesting depth up to the SUPER type's 100-level limit (versus the five-level limit) and produces cleaner, more maintainable SQL.

Migration tip: Convert existing VARCHAR JSON to SUPER for frequently accessed nested fields:

UPDATE table

SET super_col = JSON_PARSE(varchar_col)

WHERE CAN_JSON_PARSE(varchar_col);The SUPER type with PartiQL requires data loaded via JSON_PARSE or COPY with FORMAT JSON 'no shred', but the performance and readability gains justify the conversion for complex nesting.

How Does Airbyte Simplify JSON Data Pipelines to Redshift?

The Redshift destination connector stores incoming data in a _airbyte_data column as a JSON blob. You can configure it to use Redshift's SUPER type for faster, PartiQL-friendly queries without repeated string parsing.

When you enable SUPER, Redshift parses JSON once at load time. Subsequent queries use native dot notation instead of parsing text on every execution. If you need raw JSON, switch to JSONB. Either way, the full structure is preserved.

You get two post-load options: keep raw tables for schema-on-read analysis, or use the built-in normalization step to flatten nested objects into relational tables with dbt models. No brittle scripts that break when sources add new fields.

With 600+ connectors, you can pull data from SaaS APIs like Salesforce or Stripe and land it in Redshift without writing JSON handling code. Incremental sync modes track cursors and update only new records. Automatic schema evolution appends new attributes to JSON blobs so pipelines keep running when source formats drift.

This eliminates custom parser maintenance, speeds up queries through SUPER's columnar engine, and future-proofs pipelines by handling schema changes automatically. You configure a connection once and focus on analysis instead of data plumbing.

Start loading JSON to Redshift in minutes with Airbyte's free tier. Try Airbyte

Need help with enterprise deployments or custom integrations? Talk to Sales

Frequently Asked Questions

Can Redshift query JSON without converting it to SUPER?

Yes. Redshift’s classic JSON functions like JSON_EXTRACT_PATH_TEXT work directly on JSON stored as VARCHAR or CHAR. This is useful for legacy tables or one-off queries. However, these functions re-parse the JSON on every query, which can become expensive at scale. For production analytics and repeated access, converting JSON to the SUPER type is the recommended approach.

What’s the difference between SUPER and JSONB in Redshift?

SUPER is designed for semi-structured analytics and supports PartiQL dot notation, nested objects, and arrays with better performance for read-heavy workloads. JSONB stores raw JSON text in a binary format and is better suited for simple storage or export scenarios. If you plan to query nested fields frequently, SUPER is the better choice.

Does using SUPER require changing existing schemas?

Not immediately. You can keep existing VARCHAR JSON columns and gradually introduce SUPER columns for frequently accessed data. Many teams backfill SUPER columns using JSON_PARSE while leaving raw JSON intact, allowing a low-risk, incremental migration without breaking existing queries.

When should you avoid classic JSON functions?

Classic JSON functions become limiting when JSON is deeply nested, queried frequently, or stored in large volumes. They are capped at five levels of nesting, lack native array iteration, and incur repeated parsing costs. In these cases, loading data into SUPER or flattening hot paths into materialized views delivers significantly better performance and cleaner SQL.

.webp)