Retrieval-Augmented Generation (RAG) and Model Context Protocol (MCP) are two of the most common ways teams give AI agents access to data beyond a model’s training set. They address the same basic question of how an agent uses external information, but they rely on very different architectures, each with distinct tradeoffs around freshness, latency, cost, and control.

RAG focuses on grounding model responses in pre-indexed knowledge. It retrieves relevant documents at query time and injects them into the prompt, making it well suited for semantic search across large, mostly static corpora. MCP takes a different approach. It gives agents a standardized way to query and act on live systems during inference, pulling current data directly from APIs and services.

The choice between MCP and RAG affects how fresh your answers are, whether agents can take actions, how much infrastructure you need to maintain, and where failures are likely to occur. This guide breaks down how RAG and MCP work, where each approach fits best, and when production systems benefit from using both together.

TL;DR

- RAG retrieves pre-indexed documents at query time and injects them into the prompt. It works best for semantic search across large, mostly static knowledge bases where freshness matters less than relevance.

- MCP gives agents a standardized way to query and act on live systems during inference. It pulls current data directly from APIs and services, solving the N×M integration problem with a unified protocol.

- The core tradeoff is freshness versus efficiency. RAG keeps per-query token usage low by pre-filtering content down to the most relevant chunks; MCP returns current data from the source at query time but uses more tokens per query.

- Most production agents benefit from combining both architectures. Use RAG for historical context and semantic retrieval, MCP for live data access and system actions.

What Is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation is a framework that augments large language models with external knowledge by retrieving relevant information at query time and incorporating it into the generation context. Instead of relying solely on the model's training data, RAG pulls in documents from a knowledge base during inference.

On their own, LLMs lack current information, cannot access proprietary data, and tend to hallucinate when they lack relevant context. RAG sidesteps these limitations by keeping the model static while dynamically retrieving relevant pre-indexed information from external sources at query time. The system augments the prompt with that context before generation, which is far cheaper and faster than retraining models on new information.

This pattern works well for enterprise scenarios with large document repositories, such as technical documentation, support tickets, and knowledge bases, where semantic understanding matters more than keyword matching.

How Does RAG Work?

RAG operates through an upfront indexing phase, followed by a three-stage runtime pipeline: retrieval, augmentation, and generation.

Pre-Processing and Indexing

Before any queries can run, the system must pre-process documents. It splits them into manageable chunks, converts each chunk into a vector embedding using an embedding model, and stores the embeddings in a vector database such as Pinecone, Weaviate, or Chroma.
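
To make the indexing phase concrete, here is a minimal sketch in plain Python. It assumes a toy hashed bag-of-words function in place of a real embedding model and an in-memory list in place of a vector database; the names `embed`, `chunk`, and `index_document`, along with the chunk size and overlap values, are illustrative choices rather than any library's API.

```python
import math
import re
import zlib
from collections import Counter

DIM = 64  # size of the toy embedding vectors (assumption)

def embed(text: str) -> list[float]:
    """Toy stand-in for an embedding model: a hashed, L2-normalized bag of words."""
    vec = [0.0] * DIM
    for token, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        vec[zlib.crc32(token.encode("utf-8")) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def chunk(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    words = text.split()
    if not words:
        return []
    step = size - overlap  # must stay positive, so overlap < size
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]

# In-memory list standing in for a vector database (assumption).
index: list[tuple[str, str, list[float]]] = []

def index_document(doc_id: str, text: str) -> int:
    """Chunk, embed, and store one document; return the number of chunks stored."""
    pieces = chunk(text)
    for piece in pieces:
        index.append((doc_id, piece, embed(piece)))
    return len(pieces)

stored = index_document("install-guide", "Install the agent with pip. " * 30)
print(stored, len(index))
```

The overlap between windows is a common way to avoid cutting a relevant sentence in half at a chunk boundary; real pipelines often chunk by tokens or by document structure instead of by word count.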

Retrieval

At query time, the system converts the user's query into a vector using the same embedding model. The vector database performs a similarity search, comparing the query vector against the stored document vectors (usually through an approximate nearest-neighbor index rather than an exhaustive scan) and returning the top-K most semantically similar chunks.
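
The retrieval step boils down to ranking stored vectors by similarity to the query vector. The sketch below uses cosine similarity and an exact brute-force scan for readability; production vector databases typically rely on approximate indexes to avoid scanning every vector. The three-dimensional vectors and chunk IDs are made up for illustration.

```python
import heapq
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def top_k(query_vec: list[float], index: list[tuple[str, list[float]]], k: int = 3):
    """Exact top-K search: score every (chunk_id, vector) pair, keep the k best."""
    return heapq.nlargest(k, ((cosine(query_vec, vec), cid) for cid, vec in index))

# Hypothetical pre-computed chunk embeddings.
toy_index = [
    ("refunds", [0.9, 0.1, 0.0]),
    ("shipping", [0.1, 0.9, 0.1]),
    ("returns", [0.8, 0.2, 0.1]),
]
results = top_k([1.0, 0.0, 0.0], toy_index, k=2)
print([cid for _, cid in results])  # ['refunds', 'returns']
```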

Augmentation and Generation

The system combines the retrieved documents with the original user query to create an augmented prompt. This prompt now contains both the question and the relevant context the LLM needs to answer accurately. The LLM generates a response using both its training knowledge and the retrieved context, with an orchestration framework such as LangChain or LlamaIndex managing the complete workflow.
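
Augmentation is mostly string assembly. This sketch shows one plausible prompt layout; the instruction wording, the numbered-citation format, and the function name `build_augmented_prompt` are assumptions, and the actual LLM call is left out because it depends on the model provider.

```python
def build_augmented_prompt(question: str, chunks: list[str]) -> str:
    """Combine retrieved chunks and the user's question into one prompt."""
    # Number each chunk so the model can cite which source it used.
    context = "\n\n".join(f"[{i}] {text}" for i, text in enumerate(chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "Cite sources by their bracketed number.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )

# Hypothetical chunks returned by the retrieval step.
retrieved = [
    "Refunds are issued within 5 business days of receiving a return.",
    "Returns must be started within 30 days of delivery.",
]
prompt = build_augmented_prompt("How long do refunds take?", retrieved)
print(prompt)  # this string would then be sent to the LLM
```

Telling the model to rely only on the supplied context and to cite it is one common way to reduce answers that drift away from the retrieved documents.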

What Is Model Context Protocol (MCP)?

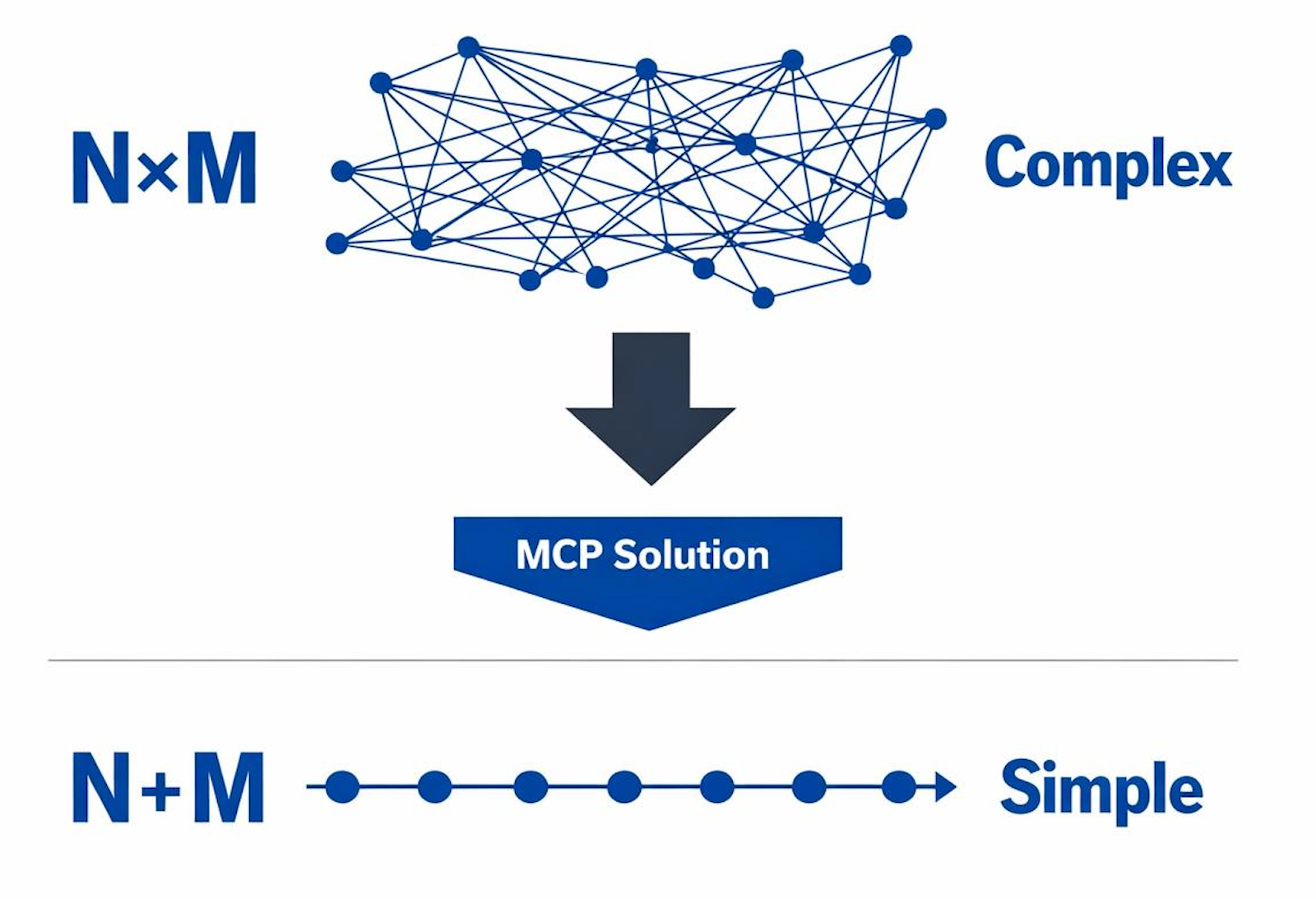

Model Context Protocol is an open-source protocol developed by Anthropic that provides a standardized interface for connecting AI applications to external data sources and tools. MCP addresses the N×M integration problem: the multiplicative growth in custom integrations that comes from connecting multiple AI applications to multiple data sources.

Without standardization, each AI application needs custom integration code for every data source. As you scale to N applications and M data sources, you need N×M custom integrations. MCP reduces this to linear complexity (N+M) by establishing a protocol that both sides implement once.
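
The arithmetic is easy to check. With a shared protocol, each application implements one client and each data source exposes one server, so the counts add instead of multiply. The function below is purely illustrative.

```python
def integrations_needed(n_apps: int, m_sources: int) -> dict[str, int]:
    """Count integrations with and without a shared protocol."""
    return {
        "point_to_point": n_apps * m_sources,   # one custom connector per app/source pair
        "shared_protocol": n_apps + m_sources,  # one client per app + one server per source
    }

print(integrations_needed(5, 20))  # {'point_to_point': 100, 'shared_protocol': 25}
```

The gap widens as either side grows: adding a 21st data source costs five new custom integrations in the point-to-point model, but only one new server with a shared protocol.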

MCP takes direct inspiration from the Language Server Protocol (LSP), which solved the same problem between code editors and programming language tools. Where LSP replaced fragmented integrations between editors and languages with a single standard, MCP applies the same principle to AI systems. It replaces custom integrations between LLMs and external data sources with a unified protocol.

How Does MCP Work?

MCP allows AI agents to access and act on live systems during inference. Agents discover available tools on an MCP server, invoke them as needed, and use the returned data directly in their reasoning.

Transport Options

The protocol supports local communication over stdio, for servers running on the same machine as the AI application, and remote communication over HTTP. Earlier versions of the specification used HTTP with Server-Sent Events (SSE); newer revisions replace this with a Streamable HTTP transport that can still use SSE to stream responses. This flexibility allows servers to run locally for low latency, remotely for shared resources, or in containers for scalability.
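
Over stdio, MCP messages are JSON-RPC 2.0 objects written one per line, so a message must not contain a raw newline. The sketch below shows only that framing; the helper names are hypothetical, and in practice an MCP SDK handles this for you while the host launches the server as a subprocess and talks to its stdin and stdout.

```python
import json

def encode_stdio_message(message: dict) -> bytes:
    """Serialize one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes newlines inside string values as "\\n",
    # so the serialized text itself contains no raw newline characters.
    line = json.dumps(message, separators=(",", ":"))
    return (line + "\n").encode("utf-8")

def decode_stdio_stream(data: bytes) -> list[dict]:
    """Split a byte stream on newlines and parse each non-empty line."""
    return [json.loads(line) for line in data.decode("utf-8").splitlines() if line.strip()]

msgs = [
    {"jsonrpc": "2.0", "id": 1, "method": "tools/list"},
    {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
     "params": {"name": "echo", "arguments": {"text": "line one\nline two"}}},  # hypothetical tool
]
stream = b"".join(encode_stdio_message(m) for m in msgs)
print(decode_stdio_stream(stream) == msgs)  # True
```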

Capability Discovery

When a client connects to a server, the two sides first exchange an initialize handshake in which each declares the capabilities it supports. The client can then send list requests (tools/list, resources/list, prompts/list) to see exactly what the server offers. Servers expose three types of capabilities: tools (executable functions the AI can invoke), resources (data the AI can access), and prompts (pre-defined interaction templates). This discovery step allows clients to understand what's available before attempting to use it.
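
Discovery starts with an `initialize` request; the server's reply lists which capability types it supports, which tells the client which list requests are worth sending. The sketch below builds that request and inspects a sample reply. The protocol version string, client and server names, and the sample reply itself are assumptions for illustration.

```python
PROTOCOL_VERSION = "2025-03-26"  # assumption: use the revision your client and server support

def initialize_request(request_id: int) -> dict:
    """Build the JSON-RPC initialize request a client sends first."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},  # client-side capabilities (none declared here)
            "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
        },
    }

def discovery_requests(initialize_result: dict) -> list[str]:
    """Return the list methods worth calling, based on what the server declared."""
    declared = initialize_result.get("capabilities", {})
    return [f"{kind}/list" for kind in ("tools", "resources", "prompts") if kind in declared]

# Hypothetical reply from a server offering tools and resources but no prompts.
sample_result = {
    "protocolVersion": PROTOCOL_VERSION,
    "capabilities": {"tools": {"listChanged": True}, "resources": {"subscribe": False}},
    "serverInfo": {"name": "example-server", "version": "1.0.0"},
}
print(discovery_requests(sample_result))  # ['tools/list', 'resources/list']
```

After receiving the initialize result, a client also sends a `notifications/initialized` notification before issuing normal requests; that step is omitted here to keep the sketch focused on capability discovery.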

Request-Response Flow

Here's the practical flow: An AI agent decides it needs current information from a database. It sends a tools/list request to discover available tools. The MCP server responds with a list of callable functions. The agent invokes a specific tool by sending a tools/call request with the tool name and parameters. The server executes the tool against the live data source and returns current results. The agent incorporates this data into its reasoning and generates a response.
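
The flow above can be simulated in a single process. In this sketch a `handle` function plays the server and answers `tools/list` and `tools/call` requests in the JSON-RPC shapes MCP uses; the `get_order_status` tool and the `ORDERS` dictionary are hypothetical stand-ins for a real tool and database, and transport, initialization, and input validation are skipped.

```python
# Hypothetical live data source standing in for a real database.
ORDERS = {"A-1001": "shipped", "A-1002": "processing"}

TOOLS = [{
    "name": "get_order_status",
    "description": "Look up the current status of an order by ID.",
    "inputSchema": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}]

def handle(request: dict) -> dict:
    """Answer a JSON-RPC request roughly the way an MCP server would (heavily simplified)."""
    req_id, method = request["id"], request["method"]
    if method == "tools/list":
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": TOOLS}}
    if method == "tools/call" and request["params"]["name"] == "get_order_status":
        order_id = request["params"]["arguments"]["order_id"]
        status = ORDERS.get(order_id)  # read at call time, so the answer reflects current data
        text = f"Order {order_id}: {status}" if status else f"Order {order_id} not found"
        return {"jsonrpc": "2.0", "id": req_id,
                "result": {"content": [{"type": "text", "text": text}], "isError": status is None}}
    if method == "tools/call":
        return {"jsonrpc": "2.0", "id": req_id, "error": {"code": -32602, "message": "Unknown tool"}}
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": -32601, "message": "Method not found"}}

# Client side: discover the available tools, then call one.
listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
tool_name = listing["result"]["tools"][0]["name"]
reply = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                "params": {"name": tool_name, "arguments": {"order_id": "A-1001"}}})
print(reply["result"]["content"][0]["text"])  # Order A-1001: shipped
```

In a real agent, the model picks the tool and fills in `arguments` based on each tool's `inputSchema`, and the text content it gets back is added to its context for the next reasoning step.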

Immediate Data Access

Unlike RAG, MCP performs no pre-indexing. Each query accesses current data directly from source systems. A database record updated at 10:00 AM can be queried at 10:00:01 AM through direct tool invocation during inference, without waiting for batch re-indexing.

MCP vs RAG: What Are the Key Differences?

The table below compares MCP and RAG across their architecture, data access patterns, and operational characteristics.

When to Use MCP vs RAG?

The choice comes down to what data your agent needs and how it will use it.

Choose RAG When Content Is Stable and Knowledge-Oriented

RAG is the right pattern when your agent needs to understand and retrieve information from large bodies of text that don’t change often.

Typical examples include technical documentation, internal knowledge bases, archived support tickets, and long-lived reference material. In these cases, semantic relevance matters more than real-time freshness.

Because content is embedded ahead of time, RAG keeps per-query token usage low by retrieving only the most relevant chunks. The tradeoff is upfront investment. You need to generate embeddings, maintain a vector database, and re-index when documents change. For high-volume querying against stable content, that cost pays off.
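
One common way to keep the re-indexing cost down is to re-embed only documents whose content actually changed. The sketch below compares SHA-256 content hashes from the previous indexing run against the current documents; the function name `plan_reindex` and the sample documents are hypothetical, and a real pipeline would then call its embedding model and vector database for the returned IDs.

```python
import hashlib

def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def plan_reindex(docs: dict[str, str], indexed_hashes: dict[str, str]) -> dict[str, list[str]]:
    """Decide which documents to embed, delete, or skip since the last indexing run."""
    current = {doc_id: content_hash(text) for doc_id, text in docs.items()}
    return {
        "embed": sorted(d for d, h in current.items() if indexed_hashes.get(d) != h),  # new or changed
        "delete": sorted(d for d in indexed_hashes if d not in current),               # removed at source
        "skip": sorted(d for d, h in current.items() if indexed_hashes.get(d) == h),   # unchanged
    }

# Hypothetical state: hashes recorded at the last run, and the documents as they are now.
previous = {
    "faq": content_hash("old faq"),
    "guide": content_hash("setup guide"),
    "legacy": content_hash("retired page"),
}
docs = {"faq": "new faq", "guide": "setup guide", "pricing": "pricing page"}
print(plan_reindex(docs, previous))
# {'embed': ['faq', 'pricing'], 'delete': ['legacy'], 'skip': ['guide']}
```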

Choose MCP When Data Must Be Fresh and Operational

MCP is the better choice when your agent needs live access to operational data that changes frequently.

Inventory levels, account balances, system metrics, workflow states, and event streams all require freshness guarantees that pre-indexed embeddings cannot provide. MCP connects agents directly to APIs and databases at inference time, so changes are immediately visible.

This approach uses more tokens per query, but the cost is justified when stale data creates real risk. Showing out-of-stock items as available, displaying incorrect balances, or missing recent system events can cost far more than higher token usage.

Quick Decision Guide:

Most production agents benefit from combining both architectures.

When Should You Use Both MCP and RAG?

Use both MCP and RAG when your architecture requires historical knowledge and current data simultaneously. RAG excels at grounding responses in static, unstructured knowledge while MCP allows secure access to structured, dynamic data. A customer support agent might use RAG to retrieve relevant product documentation and past support tickets, and use MCP to check current order status, update ticket systems, and execute actions in external systems.

This hybrid pattern treats RAG and MCP as complementary data layers. RAG handles semantic retrieval efficiently through pre-indexed vectors. MCP handles live queries and tool invocation through direct system access. Together, they combine low token overhead for historical context with freshness guarantees for operational data.
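
An orchestration layer for the hybrid pattern can be as simple as calling both layers and merging their output into one prompt. In this sketch the retriever and the live tool are injected as plain functions; the stub retriever, the `order_status(A-1001)` tool label, and the prompt headings are all hypothetical, and a real system would plug in a vector search and MCP tool calls chosen by the model or the orchestrator.

```python
from typing import Callable

def assemble_hybrid_prompt(
    question: str,
    retrieve: Callable[[str], list[str]],
    live_tools: dict[str, Callable[[], str]],
) -> str:
    """Merge RAG-retrieved chunks and live MCP-style tool results into one prompt."""
    docs = retrieve(question)                                   # historical knowledge (RAG layer)
    live = {name: call() for name, call in live_tools.items()}  # current data (MCP layer)
    doc_block = "\n".join(f"- {d}" for d in docs) or "- (none)"
    live_block = "\n".join(f"- {name}: {value}" for name, value in live.items()) or "- (none)"
    return (
        f"Reference documentation:\n{doc_block}\n\n"
        f"Live system data:\n{live_block}\n\n"
        f"Question: {question}"
    )

# Hypothetical stubs: a fixed retriever and a fake order-status tool.
KB = ["Refunds are issued within 5 business days of receiving a return."]
prompt = assemble_hybrid_prompt(
    "When will the refund for order A-1001 arrive?",
    retrieve=lambda q: KB,
    live_tools={"order_status(A-1001)": lambda: "return received"},
)
print(prompt)
```

Keeping the two sections separate and labeled lets the model distinguish documented policy from the current state of the system, which matters when the two disagree.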

Airbyte's Agent Engine provides governed data access supporting both architectures. Change Data Capture keeps the context in RAG pipelines fresh, while programmatic pipeline configuration enables MCP servers to access live data sources. PyAirbyte MCP enables AI agents to query and manage data pipelines through natural language, while Connector Builder MCP allows AI-assisted custom connector development.

Talk to us to see how Airbyte Embedded powers production AI agents with reliable, permission-aware data access.

Frequently Asked Questions

Can MCP and RAG Work Together?

Yes, they're highly complementary. RAG provides semantic search over pre-indexed knowledge bases while MCP enables direct access to live data sources and tool execution. Production systems often combine both, using RAG for historical context and MCP for current data access, with an orchestration layer assembling unified prompts.

Does MCP Replace RAG?

No. MCP provides standardized access to live data sources through direct API integration, while RAG excels at semantic search over large document repositories. They solve different problems: MCP for current structured data and actions, RAG for semantic retrieval from static knowledge bases.

What Is the Latency Difference Between MCP and RAG?

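Neither approach is inherently faster end to end; both exhibit similar task completion characteristics, and actual latency depends on the implementation. RAG adds a query-embedding and vector-search step before generation, while MCP adds one or more round trips to live source systems. The more important difference is data freshness: MCP accesses current data directly from sources, whereas RAG retrieves it from pre-indexed embeddings, which must be reprocessed when the underlying data changes.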

Which Uses More Tokens: MCP or RAG?

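MCP uses significantly more tokens than RAG when processing unstructured documents, because tool results are passed to the model as returned rather than pre-filtered down to the most relevant chunks. This higher token cost is offset by MCP's ability to access current data without pre-indexing overhead, which makes it worthwhile when freshness is critical.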

Do I Need a Vector Database for MCP?

No. MCP connects directly to live data sources through API integration without requiring embeddings or vector storage. RAG commonly uses vector databases such as Pinecone, Weaviate, or Chroma for semantic search over pre-indexed documents, but a dedicated vector database is not strictly required; other storage mechanisms can also be used.

