Top 10 Big Data Integration Tools in 2026

✨ AI Generated Summary

Modern big data integration combines diverse, large-scale data from multiple sources into unified, usable formats using advanced ETL/ELT pipelines, enabling real-time processing and improved decision-making. Key challenges include managing volume, variety, and velocity, and ensuring data quality, security, and compliance.

- Top tools vary by deployment, transformation capabilities, and target users, with Airbyte, Fivetran, Talend, and Informatica among the leaders.

- AI/ML enhance integration through automated schema mapping, anomaly detection, and data quality remediation.

- Edge computing supports low-latency processing near data sources, syncing with central systems for comprehensive analysis.

- Best practices emphasize clear objectives, thorough data understanding, embedded quality/security, continuous monitoring, and tool fit.

Modern data teams face a hard problem: your organization generates massive volumes of data from many sources (customer interactions, IoT sensors, social media, transactional systems, and operational logs), yet this information remains fragmented across disparate systems. Traditional data-integration approaches may have sufficed when data volumes were manageable, but today's workloads often require processing large volumes of data from hundreds of sources, frequently in near real time. The stakes are high: organizations that integrate their big data effectively gain faster decision-making, personalized customer experiences, and operational optimization, while those that struggle drown in data silos.

What Is Big Data Integration and Why Does It Matter?

Big data integration means combining large volumes of diverse data from multiple sources into a unified, consistent, and usable format. Sophisticated ETL (extract, transform, load) or ELT pipelines clean, standardize, and load data into warehouses, lakes, or real-time analytics platforms, ensuring quality, lineage, and accessibility for downstream consumption.

Understanding the Extraction Process

Data is acquired from relational databases, SaaS apps, social media feeds, IoT sensors, and streaming platforms, covering both structured and unstructured formats. The extraction phase requires careful consideration of source system limitations and data freshness requirements.

Modern extraction techniques support both full and incremental synchronization patterns. Change data capture (CDC), which reads inserts, updates, and deletes from a source database's transaction log, makes changes available downstream in near real time for time-sensitive business processes.
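
To make incremental synchronization concrete, here is a minimal sketch of cursor-based extraction, assuming every source row carries a monotonically increasing `updated_at` column. The function `extract_incremental` and the in-memory `SOURCE_ROWS` list are hypothetical stand-ins for a real source query. A cursor like this sees inserts and updates but not hard deletes, which is one reason log-based CDC exists.

```python
from datetime import datetime

# Hypothetical in-memory source table. In practice this would be a query such as
# SELECT ... FROM customers WHERE updated_at > :cursor ORDER BY updated_at
SOURCE_ROWS = [
    {"id": 1, "name": "alice", "updated_at": datetime(2026, 1, 1, 9, 0)},
    {"id": 2, "name": "bob", "updated_at": datetime(2026, 1, 2, 9, 0)},
    {"id": 3, "name": "carol", "updated_at": datetime(2026, 1, 3, 9, 0)},
]


def extract_incremental(rows, cursor):
    """Return rows changed after `cursor`, plus the cursor to save for next time.

    A cursor of None means there is no saved state, which amounts to a full sync.
    """
    changed = sorted(
        (r for r in rows if cursor is None or r["updated_at"] > cursor),
        key=lambda r: r["updated_at"],
    )
    # Advance the cursor to the newest change seen; keep the old one if nothing changed.
    new_cursor = changed[-1]["updated_at"] if changed else cursor
    return changed, new_cursor


# First run: no saved state, so all three rows are extracted.
batch, state = extract_incremental(SOURCE_ROWS, None)
print(len(batch), state)  # 3 2026-01-03 09:00:00

# One row changes at the source; the next run picks up only that row.
SOURCE_ROWS[0] = {**SOURCE_ROWS[0], "name": "alicia", "updated_at": datetime(2026, 1, 4, 9, 0)}
batch, state = extract_incremental(SOURCE_ROWS, state)
print([r["id"] for r in batch], state)  # [1] 2026-01-04 09:00:00
```

The strict `>` comparison is a simplification: rows that arrive late with a timestamp equal to the saved cursor would be skipped, so real pipelines often compare with `>=` and deduplicate on the primary key.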

Transformation Capabilities

Key steps include cleaning, standardization, mapping, enrichment, deduplication, and validation—performed either before or after loading, depending on the architecture. Transformation logic handles data quality issues while ensuring consistency across different source formats.
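
The cleaning, standardization, deduplication, and validation steps listed above can be sketched as a single pass over a batch of records. Everything here is hypothetical (the `COUNTRY_MAP` lookup, the deliberately loose email pattern, and the choice of `email` as the deduplication key); the point is the order of operations, and keeping rejected rows with a reason instead of silently dropping them.

```python
import re

RAW = [
    {"email": "  Alice@Example.com ", "country": "us", "amount": "19.90"},
    {"email": "alice@example.com", "country": "US", "amount": "19.90"},  # duplicate once cleaned
    {"email": "bob@example", "country": "United States", "amount": "5"},  # invalid email
    {"email": "carol@example.org", "country": "de", "amount": "n/a"},     # invalid amount
]

# Hypothetical lookup that standardizes free-text countries to ISO codes.
COUNTRY_MAP = {"us": "US", "united states": "US", "de": "DE", "germany": "DE"}
# Deliberately loose pattern: something@something.something
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def transform(records):
    """Clean, standardize, validate, and deduplicate; return (valid, rejected)."""
    seen, valid, rejected = set(), [], []
    for rec in records:
        # Cleaning and standardization: trim, lowercase, map to canonical codes.
        email = rec["email"].strip().lower()
        country = COUNTRY_MAP.get(rec["country"].strip().lower())
        try:
            amount = float(rec["amount"])
        except ValueError:
            amount = None
        # Validation: collect every rule violation for this record.
        errors = []
        if not EMAIL_RE.match(email):
            errors.append("invalid email")
        if country is None:
            errors.append("unknown country")
        if amount is None:
            errors.append("non-numeric amount")
        if errors:
            rejected.append((rec, errors))
            continue
        # Deduplication on the cleaned business key.
        if email in seen:
            continue
        seen.add(email)
        valid.append({"email": email, "country": country, "amount": amount})
    return valid, rejected


valid, rejected = transform(RAW)
print(valid)  # [{'email': 'alice@example.com', 'country': 'US', 'amount': 19.9}]
for rec, errors in rejected:
    print(rec["email"].strip(), errors)
```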

Advanced transformation features support complex business rules and data enrichment. Schema mapping features can often detect and adapt to simple source changes, such as newly added columns, automatically; complex or incompatible modifications, such as renamed columns or changed data types, usually still require manual input.
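
The detection half of schema adaptation can be illustrated by diffing a stored schema against the one observed on the latest sync. This sketch assumes schemas are available as `{column: type}` dictionaries and applies a hypothetical policy: additive changes are applied automatically, while removals and type changes are escalated for manual review.

```python
def diff_schema(old, new):
    """Compare two {column: type} dicts and classify the differences."""
    added = {c: t for c, t in new.items() if c not in old}
    removed = sorted(c for c in old if c not in new)
    retyped = {c: (old[c], new[c]) for c in old if c in new and old[c] != new[c]}
    return added, removed, retyped


def plan_schema_update(old, new):
    """Hypothetical policy: auto-apply additive changes, escalate everything else."""
    added, removed, retyped = diff_schema(old, new)
    auto = [f"ADD COLUMN {c} {t}" for c, t in added.items()]
    manual = [f"column {c} removed" for c in removed]
    manual += [f"column {c} changed {a} -> {b}" for c, (a, b) in retyped.items()]
    return auto, manual


stored = {"id": "INTEGER", "email": "TEXT", "signup": "DATE"}
observed = {"id": "INTEGER", "email": "TEXT", "signup": "TIMESTAMP", "plan": "TEXT"}
auto, manual = plan_schema_update(stored, observed)
print(auto)    # ['ADD COLUMN plan TEXT']
print(manual)  # ['column signup changed DATE -> TIMESTAMP']
```

A renamed column shows up here as one removal plus one addition, which is exactly the kind of change that automated tooling cannot safely resolve on its own.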

Loading Strategies

Cleansed data is delivered to warehouses, lakes, or specialized analytics engines in batch or streaming modes, balancing performance with freshness requirements. Loading patterns optimize for both query performance and storage efficiency.

Many tools can write to several destination types from the same pipeline. Automated error handling, such as retrying transient failures and quarantining rejected records, helps preserve data integrity during loading.
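
A minimal sketch of that error handling, assuming a destination callback (the hypothetical `write_batch`) that raises `TransientError` for retryable failures such as timeouts and `ValueError` for records it rejects. Transient failures are retried with exponential backoff; everything else goes to a dead-letter list for inspection.

```python
import time


class TransientError(Exception):
    """Raised by the destination for failures worth retrying (e.g. timeouts)."""


def load_batches(records, write_batch, batch_size=2, max_retries=3, backoff_s=0.5):
    """Write records in batches; retry transient failures, quarantine the rest.

    Returns the number of records loaded and a dead-letter list of (batch, error).
    """
    loaded, dead_letter = 0, []
    for start in range(0, len(records), batch_size):
        batch = records[start:start + batch_size]
        for attempt in range(1, max_retries + 1):
            try:
                write_batch(batch)
                loaded += len(batch)
                break
            except TransientError as exc:
                if attempt == max_retries:
                    dead_letter.append((batch, str(exc)))
                else:
                    time.sleep(backoff_s * 2 ** (attempt - 1))  # exponential backoff
            except ValueError as exc:
                # Non-retryable rejection: quarantine immediately.
                dead_letter.append((batch, str(exc)))
                break
    return loaded, dead_letter


calls = {"n": 0}


def flaky_destination(batch):
    """Hypothetical destination: times out once, rejects rows with a null key."""
    calls["n"] += 1
    if calls["n"] == 1:
        raise TransientError("timeout")
    if any(r["id"] is None for r in batch):
        raise ValueError("null primary key")


rows = [{"id": 1}, {"id": 2}, {"id": None}, {"id": 4}, {"id": 5}]
loaded, dlq = load_batches(rows, flaky_destination, backoff_s=0.0)
print(loaded, dlq)  # 3 [([{'id': None}, {'id': 4}], 'null primary key')]
```

Two caveats: this quarantines the whole batch, including the valid `{"id": 4}` row, whereas production loaders often split a failed batch to isolate the bad record; and retries are only safe if the destination write is idempotent (for example, an upsert on a primary key).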

What Are the Top Big Data Integration Tools Available Today?

1. Airbyte: Open-Source Flexibility with Enterprise Features

Open-source, highly customizable, with 600+ connectors and flexible deployment options including cloud, hybrid, and on-premises environments. Enterprise add-ons deliver SOC 2, GDPR, and HIPAA compliance capabilities.

Airbyte's approach eliminates vendor lock-in while providing enterprise-grade security and governance. The platform generates open-standard code that remains portable across different infrastructure environments.

Pros:

• Open-source foundation with no vendor lock-in

• 600+ connectors plus CDK for custom development

• Incremental sync and change data capture support

• Capacity-based pricing model

Cons:

• No reverse-ETL capabilities yet (coming soon)

2. Fivetran: Fully Managed ELT Automation

Fully automated, plug-and-play ELT pipelines designed for business teams seeking minimal setup and maintenance overhead.

Fivetran focuses on simplicity and reliability for standard data integration scenarios. The platform handles schema changes automatically and provides transparent monitoring capabilities.

Pros:

• Minimal setup and maintenance requirements

Cons:

• Limited transformation capabilities

• Costs rise quickly at scale

3. Talend: Enterprise-Grade ETL Platform

Enterprise-grade ETL solution with extensive governance capabilities and comprehensive transformation features for complex business requirements.

Talend provides visual development tools alongside code-based customization options. The platform supports both cloud and on-premises deployment models.

Pros:

• Powerful transformation capabilities

• Strong compliance and governance features

Cons:

• Steep learning curve for new users

• Resource-intensive infrastructure requirements

4. Informatica: Comprehensive Enterprise Suite

Comprehensive data integration suite designed for large, regulated enterprises requiring extensive governance and compliance capabilities.

Informatica offers AI-powered optimization features and enterprise-grade support services. The platform handles complex data integration scenarios across multiple industries.

Pros:

• Rich feature set with AI-powered optimization

• Excellent enterprise support services

Cons:

• High cost structure

• Complex onboarding process

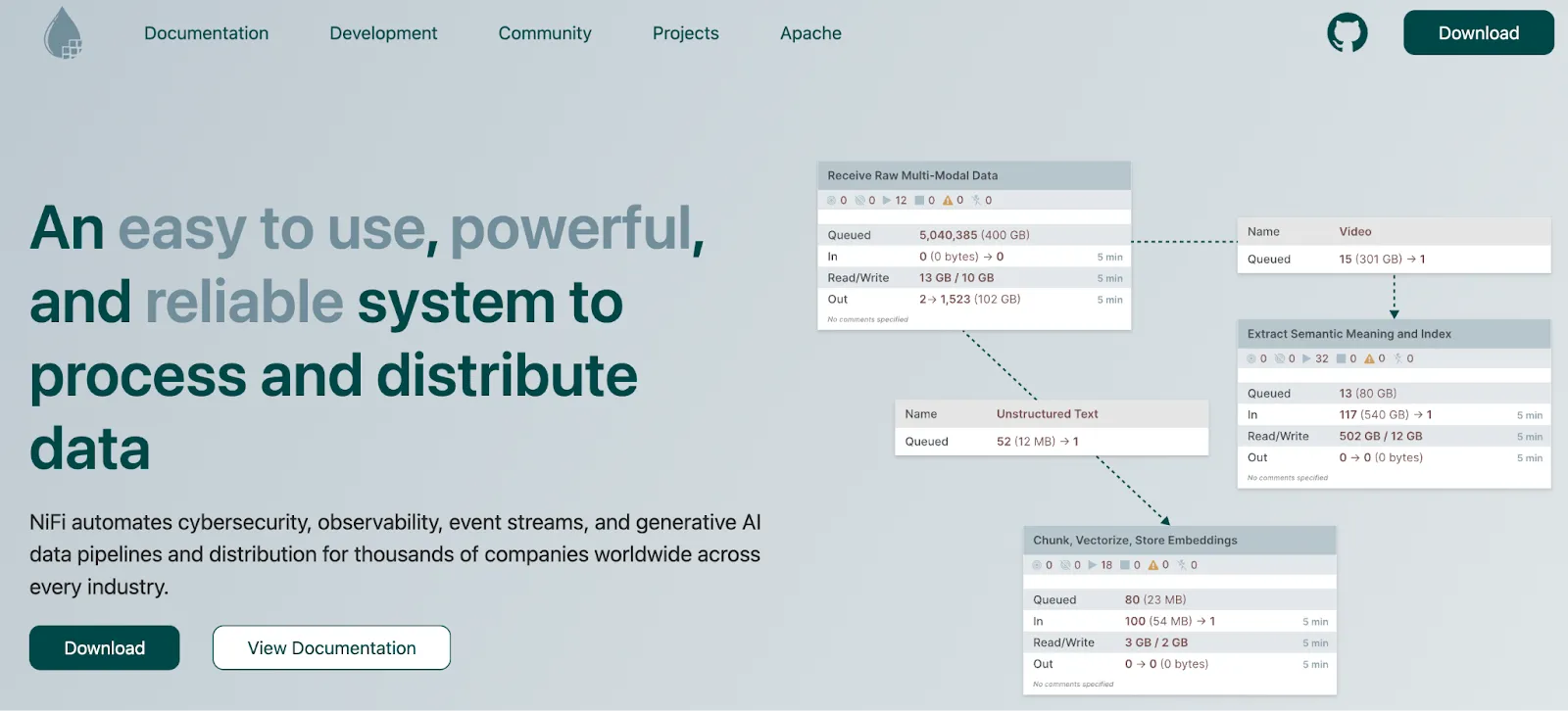

5. Apache NiFi: Visual Flow-Based Integration

Visual, flow-based, open-source integration platform particularly effective for real-time data streams and IoT scenarios.

NiFi provides a drag-and-drop interface for building data flows with built-in security and extensibility features. The platform excels at handling diverse data formats and sources.

Pros:

• Intuitive drag-and-drop user interface

• Secure and extensible architecture

Cons:

• Not optimized for large batch processing jobs

• User interface feels dated compared to modern alternatives

6. Stitch: Straightforward ELT Solution

Straightforward ELT platform with transparent pricing and simple setup process for organizations seeking quick deployment.

Stitch focuses on reliable data replication with minimal configuration overhead. The platform provides clear pricing models without hidden fees.

Pros:

• Fast setup and deployment process

• Simple, transparent pricing structure

Cons:

• Limited transformation capabilities

• Gaps in niche connector availability

7. Hevo Data: No-Code Integration Platform

No-code pipeline platform with real-time syncing capabilities designed for business users without technical backgrounds.

Hevo Data provides an intuitive interface with comprehensive connector library. The platform supports real-time data synchronization across multiple sources and destinations.

Pros:

• Intuitive, user-friendly interface

• Comprehensive connector library

Cons:

• Limited deep customization options

• Fewer enterprise-grade features

8. Microsoft Azure Data Factory: Cloud-Native Integration

Cloud-native data integration service deeply integrated with Azure ecosystem services and infrastructure.

Azure Data Factory offers both code-free and code-based development options. The platform provides serverless scaling capabilities for variable workloads.

Pros:

• Code-free and code-based development options

• Serverless scaling capabilities

Cons:

• Limited utility outside Azure ecosystem

• Learning curve for advanced use cases

9. AWS Glue: Serverless ETL Service

Serverless ETL service designed for AWS ecosystem with automatic scaling and built-in data catalog capabilities.

AWS Glue provides serverless compute resources that scale automatically based on workload demands. The platform includes comprehensive data cataloging and discovery features.

Pros:

• Automatic scaling capabilities

• Built-in data catalog and discovery

Cons:

• Debugging can be challenging

• Job startup latency issues

10. Google Cloud Dataflow: Beam-Based Processing

Beam-based stream and batch processing service on Google Cloud Platform with a unified programming model.

Google Cloud Dataflow supports both streaming and batch processing using the Apache Beam SDK. The platform provides automatic scaling and unified processing capabilities.

Pros:

• Automatic scaling infrastructure

• Unified streaming and batch processing

Cons:

• Requires Apache Beam expertise

• Complex pipeline authoring process

How Are AI and Machine Learning Transforming Big Data Integration?

AI and machine learning automate schema mapping, anomaly detection, data-quality remediation, and predictive workload scaling. Natural-language interfaces now let users describe desired data flows conversationally, while ML continuously optimizes transformations and routing rules.

Automated Schema Management

Machine learning algorithms detect schema changes automatically and suggest appropriate mapping strategies. These capabilities reduce manual intervention while maintaining data consistency across source system updates.

Predictive analytics help anticipate schema evolution patterns. Automated conflict resolution handles common data structure discrepancies without human intervention.
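
Production tools typically use trained models for mapping suggestions. As a much simpler stand-in, the sketch below scores candidate column pairs with Python's `difflib.SequenceMatcher` after normalizing names. The `threshold` of 0.6 is an arbitrary assumption, and anything below it is left for a human to map.

```python
from difflib import SequenceMatcher


def normalize(name):
    """Lowercase and drop separators so 'Cust_ID' and 'customer_id' compare fairly."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def suggest_mappings(source_cols, target_cols, threshold=0.6):
    """Suggest source -> target column mappings by name similarity.

    Heuristic stand-in for learned matching: low-scoring columns stay unmapped.
    """
    suggestions, unmapped = {}, []
    for src in source_cols:
        scored = [
            (SequenceMatcher(None, normalize(src), normalize(tgt)).ratio(), tgt)
            for tgt in target_cols
        ]
        score, best = max(scored)
        if score >= threshold:
            suggestions[src] = (best, round(score, 2))
        else:
            unmapped.append(src)
    return suggestions, unmapped


suggestions, unmapped = suggest_mappings(
    ["Cust_ID", "E-Mail", "zip"], ["customer_id", "email", "postal_code"]
)
print(suggestions)  # {'Cust_ID': ('customer_id', 0.75), 'E-Mail': ('email', 1.0)}
print(unmapped)     # ['zip']
```

Here `zip` stays unmapped: its semantic match, `postal_code`, shares almost no characters with it, which illustrates why name similarity alone is not enough and why learned or metadata-driven approaches help.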

Intelligent Data Quality

AI-powered data quality tools identify patterns and anomalies that traditional rule-based systems might miss. Machine learning models learn from historical data patterns to improve accuracy over time.

Automated remediation capabilities can fix common data quality issues in real time. Smart profiling provides insights into data characteristics and potential quality concerns.
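
As a simplified illustration of learning a baseline from history instead of hard-coding a rule, the sketch below flags anomalous daily row counts using the median absolute deviation (MAD). This is a basic robust statistic, not a machine learning model; the sample counts are hypothetical, and the 3.5 cutoff is a commonly cited threshold for the modified z-score rather than a universal rule.

```python
from statistics import median


def fit_baseline(history):
    """Learn a robust baseline (median and MAD) from historical values."""
    med = median(history)
    mad = median([abs(x - med) for x in history])
    return med, mad


def is_anomalous(value, baseline, threshold=3.5):
    """Flag values whose modified z-score, 0.6745 * (x - median) / MAD, exceeds threshold."""
    med, mad = baseline
    if mad == 0:
        return value != med  # no spread in history: any deviation is suspicious
    return abs(0.6745 * (value - med) / mad) > threshold


# Hypothetical daily row counts for one pipeline.
history = [10_120, 9_980, 10_050, 10_210, 9_900, 10_075, 10_130]
baseline = fit_baseline(history)
for count in (10_090, 4_300):
    print(count, is_anomalous(count, baseline))  # 10090 False, then 4300 True
```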

What Role Does Edge Computing Play in Big Data Integration?

Edge processing reduces latency for IoT and mobile scenarios by filtering and analyzing data near its source. Combined with modern connectivity technologies, it supports autonomous vehicles, smart cities, and industrial IoT applications, while still syncing curated data back to centralized warehouses and lakes.

Local Processing Benefits

Edge computing minimizes bandwidth requirements by processing data locally before transmission. This approach reduces costs while improving response times for time-sensitive applications.

Local processing capabilities enable autonomous decision-making in disconnected environments. Data filtering at the edge reduces storage and processing requirements in central systems.
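
The sketch below shows the filter-and-summarize pattern at the edge: a window of raw readings is reduced to one summary record, and only out-of-range readings are forwarded individually. The readings, the sensor name, and the alert thresholds are hypothetical.

```python
from statistics import mean


def summarize_window(readings, low, high):
    """Reduce a window of raw readings to one summary plus out-of-range alerts."""
    values = [r["value"] for r in readings]
    alerts = [r for r in readings if not low <= r["value"] <= high]
    summary = {
        "sensor": readings[0]["sensor"],
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": round(mean(values), 2),
    }
    return summary, alerts


# One minute of hypothetical 1 Hz temperature readings from a single sensor.
window = [{"sensor": "t-17", "ts": i, "value": 21.0 + (i % 5) * 0.1} for i in range(60)]
window[42]["value"] = 95.0  # a spike that should be escalated immediately

summary, alerts = summarize_window(window, low=-10.0, high=60.0)
sent = 1 + len(alerts)  # one summary message plus one message per alert
print(f"raw readings: {len(window)}, messages sent upstream: {sent}")
print(summary, [a["ts"] for a in alerts])
```

Sixty raw readings become two upstream messages here; the ratio in practice depends on the window size and how often alerts fire.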

Integration with Central Systems

Edge devices synchronize processed results with centralized data platforms for comprehensive analysis. Hybrid architectures balance local processing speed with central system capabilities.

Data orchestration tools manage the flow between edge devices and central systems. Automated synchronization ensures consistency across distributed processing environments.
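
One common way to keep edge and central data consistent across intermittent connectivity is store-and-forward with idempotent ingestion. This sketch assumes one outbox per device and per-device sequence numbers (both hypothetical design choices): the device buffers results until they are acknowledged, and the central store keys records on `(device, seq)` so a resend after a lost acknowledgment does not create duplicates.

```python
class EdgeOutbox:
    """Store-and-forward buffer on the device: keeps results until acknowledged."""

    def __init__(self, device_id):
        self.device_id = device_id
        self.next_seq = 0
        self.pending = []

    def record(self, payload):
        self.pending.append({"device": self.device_id, "seq": self.next_seq, "payload": payload})
        self.next_seq += 1

    def sync(self, central, online=True):
        if not online:
            return 0  # disconnected: keep everything buffered locally
        acked = central.ingest(self.pending)
        self.pending = [m for m in self.pending if m["seq"] not in acked]
        return len(acked)


class CentralStore:
    """Central side: ingest is idempotent on (device, seq), so resends are harmless."""

    def __init__(self):
        self.rows = {}

    def ingest(self, messages):
        for m in messages:
            self.rows.setdefault((m["device"], m["seq"]), m["payload"])
        return {m["seq"] for m in messages}


outbox, central = EdgeOutbox("gw-1"), CentralStore()
outbox.record({"avg_temp": 21.3})
outbox.record({"avg_temp": 21.6})
outbox.sync(central, online=False)  # link down: nothing leaves the device
central.ingest(outbox.pending)      # delivered, but suppose the ack is lost...
outbox.sync(central)                # ...so the device resends; no duplicates result
print(len(central.rows), len(outbox.pending))  # 2 0
```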

What Are the Real-World Applications of Big Data Integration?

1. E-commerce Personalization

Unified customer profiles enable real-time recommendations and dynamic pricing strategies. Integration of browsing behavior, purchase history, and external data sources creates comprehensive customer insights.

Personalization engines use integrated data to deliver targeted marketing campaigns. Real-time inventory integration ensures accurate product availability across all channels.

2. Healthcare and Medical Research

Integrated EMRs, imaging systems, wearables, and clinical data enable precision medicine approaches. Data integration supports population health management and clinical research initiatives.

Medical device integration provides comprehensive patient monitoring capabilities. Research platforms combine clinical trial data with real-world evidence sources.

3. Financial Services

Real-time fraud detection systems integrate transaction data with behavioral patterns and external risk sources. Alternative data integration supports enhanced credit scoring and risk assessment models.

Regulatory reporting requirements benefit from integrated compliance data management. Customer analytics platforms combine transaction history with external market data.

4. Retail and Supply Chain

Demand forecasting models integrate sales data with weather patterns, economic indicators, and social trends. Inventory optimization systems balance supply chain costs with customer satisfaction requirements.

In-store analytics combine point-of-sale data with customer behavior patterns. Supply chain visibility integrates data from multiple vendors and logistics providers.

What Are the Best Practices for Successful Big Data Integration?

1. Define Clear Objectives and Metrics

Establish specific business outcomes and success measurements before beginning integration projects. Clear objectives guide technology selection and implementation priorities. Regular measurement against defined metrics ensures projects deliver expected business value. Stakeholder alignment on objectives prevents scope creep and resource waste.

2. Understand Your Data Landscape

Comprehensive data cataloging identifies all relevant sources and their characteristics. Data profiling reveals quality issues and integration challenges before implementation begins. Data lineage mapping helps understand dependencies and impact of changes. Source system documentation ensures accurate integration design and testing.

3. Embed Data Quality and Security from Day One

Data quality frameworks prevent poor-quality data from entering downstream systems. Security controls protect sensitive information throughout the integration pipeline. Automated validation rules catch data quality issues in real time. Role-based access controls ensure appropriate data access permissions across all systems.

4. Test, Monitor, and Iterate Continuously

Comprehensive testing validates integration logic and data quality before production deployment. Continuous monitoring identifies performance issues and data anomalies. Regular iteration cycles improve integration performance and adapt to changing business requirements. Automated testing frameworks enable rapid deployment of updates and enhancements.

5. Choose Tools That Fit Your Needs

Proof-of-concept projects evaluate tools against specific requirements and constraints. Vendor evaluation should consider long-term scalability and flexibility requirements. Total cost of ownership includes licensing, implementation, and ongoing operational costs. Integration with existing infrastructure reduces deployment complexity and maintenance overhead.

What Are the Key Challenges in Big Data Integration?

Volume and Scalability Requirements

Large data volumes require infrastructure that scales efficiently without performance degradation. Storage and processing costs should track the business value the data delivers rather than grow in direct proportion to data volume.

Network bandwidth limitations affect data transfer speeds and integration frequency. Compression and optimization techniques help manage volume-related challenges.

Variety and Format Complexity

Different data formats require specialized parsing and transformation capabilities. Schema evolution across source systems creates ongoing integration maintenance requirements.

Unstructured data integration requires advanced processing capabilities. Metadata management becomes critical for handling diverse data types effectively.

Velocity and Real-Time Processing Demands

Real-time integration requirements demand low-latency processing capabilities. Streaming data architectures handle continuous data flows without batch processing delays.

Event-driven architectures enable immediate response to data changes. Buffer management prevents data loss during processing spikes and system maintenance.
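
A bounded in-memory buffer with backpressure is one simple way to absorb spikes without dropping events. In this sketch, built on Python's standard `queue.Queue`, the producer blocks when the buffer is full instead of discarding data; the buffer size, event count, and consumer delay are arbitrary assumptions.

```python
import queue
import threading
import time

BUFFER = queue.Queue(maxsize=100)  # bounded, so memory use stays predictable
SENTINEL = object()                # marks the end of the stream


def producer(n_events):
    for i in range(n_events):
        # put() blocks while the buffer is full, pushing back on the source
        # instead of silently dropping events during a spike.
        BUFFER.put({"event_id": i})
    BUFFER.put(SENTINEL)


def consumer(sink, delay_s=0.0001):
    while True:
        item = BUFFER.get()
        if item is SENTINEL:
            break
        time.sleep(delay_s)  # simulate a slower downstream system
        sink.append(item["event_id"])


received = []
threads = [
    threading.Thread(target=producer, args=(1_000,)),
    threading.Thread(target=consumer, args=(received,)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(len(received), received == list(range(1_000)))  # 1000 True
```

An in-memory buffer does not survive a process crash or a maintenance restart, so pipelines that must tolerate those typically rely on a durable, replayable log such as Apache Kafka.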

Security, Compliance, and Governance

Regulatory requirements vary across industries and geographic regions. Data protection regulations affect how data is collected, stored, and processed.

Audit trails document data access and modification activities for compliance reporting. Encryption protects sensitive data throughout the integration pipeline.

Latency and Performance Tuning

Network latency affects data freshness and system responsiveness. Processing optimization reduces computational overhead and improves throughput.

Caching strategies balance data freshness with query performance. Resource allocation optimization ensures efficient utilization of computational resources.

How Do You Ensure Data Quality in Big Data Integration?

Accuracy and Completeness Validation

Automated validation rules check data accuracy against business rules and external references. Completeness monitoring identifies missing data elements that affect downstream analysis.

Data profiling identifies patterns and anomalies that indicate quality issues. Statistical analysis detects data drift and distribution changes over time.
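
One widely used statistic for distribution drift is the Population Stability Index (PSI), which compares the share of values per bin in a baseline sample against a current sample. The sketch below is a minimal implementation; the bin edges, the sample values, and the small `eps` floor for empty bins are assumptions, and the often-quoted reading (below 0.1 stable, above 0.25 significant shift) is a rule of thumb rather than a standard.

```python
import bisect
import math


def bin_fractions(values, edges, eps=1e-4):
    """Share of values in each bin; values outside the edges go to the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), len(counts) - 1)] += 1
    # Floor empty bins at eps so the log term below stays finite.
    return [max(c / len(values), eps) for c in counts]


def psi(expected, actual, edges):
    """Population Stability Index: sum over bins of (a_i - e_i) * ln(a_i / e_i)."""
    e = bin_fractions(expected, edges)
    a = bin_fractions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical order amounts: last month's baseline vs. this week's sample.
edges = [0, 25, 50, 75, 100]
baseline = [10, 20, 30, 40, 60, 70, 80, 90]
current = [60, 65, 70, 80, 85, 90, 95, 99]

print(round(psi(baseline, baseline, edges), 3))  # 0.0
print(round(psi(baseline, current, edges), 3))   # well above 0.25: the distribution shifted
```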

Consistency and Standardization Processes

Standardization rules ensure consistent data formats across different sources. Reference data management maintains consistent lookup values and code mappings. Cross-system validation identifies discrepancies between related data sources. Master data management provides single sources of truth for critical business entities.

Timeliness Management for Data Freshness

Data freshness requirements vary by use case and business criticality. Monitoring systems track data age and alert when freshness thresholds are exceeded. Processing schedules balance data freshness with system resource utilization. Real-time processing capabilities support time-sensitive business requirements.
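
Freshness monitoring can be as simple as comparing each table's last successful load time with a per-table threshold. The table names and SLA values below are hypothetical, chosen to reflect that freshness requirements differ by business criticality; in a real deployment `last_loaded` would come from pipeline metadata.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical freshness SLAs per table, reflecting different business criticality.
FRESHNESS_SLA = {
    "payments": timedelta(minutes=15),
    "orders": timedelta(hours=1),
    "marketing_spend": timedelta(days=1),
}


def stale_tables(last_loaded, now=None):
    """Return (table, age) for every table whose last load exceeds its SLA."""
    if now is None:
        now = datetime.now(timezone.utc)
    stale = []
    for table, sla in FRESHNESS_SLA.items():
        loaded_at = last_loaded.get(table)
        if loaded_at is None:
            stale.append((table, None))  # never loaded counts as stale
            continue
        age = now - loaded_at
        if age > sla:
            stale.append((table, age))
    return stale


now = datetime(2026, 3, 1, 12, 0, tzinfo=timezone.utc)
last_loaded = {
    "payments": now - timedelta(minutes=40),
    "orders": now - timedelta(minutes=20),
}
for table, age in stale_tables(last_loaded, now):
    print(f"ALERT {table}: age={age}")
# ALERT payments: age=0:40:00
# ALERT marketing_spend: age=None
```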

Validity and Format Compliance

Schema validation ensures data conforms to expected formats and structures. Business rule validation checks logical consistency and value ranges. Data type validation prevents format mismatches that cause processing errors. Constraint checking enforces referential integrity across related data sources.
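
The four checks in this paragraph (schema, business rule, data type, and referential integrity) can be combined into one validator. This sketch assumes a hypothetical `orders` contract and a set of known customer IDs standing in for the related source; it collects every violation rather than stopping at the first, which makes rejected rows easier to triage.

```python
# Hypothetical contract for an "orders" feed: expected type and optional rule per field.
ORDER_SCHEMA = {
    "order_id": (int, None),
    "customer_id": (int, None),
    "quantity": (int, lambda v: v > 0),
    "status": (str, lambda v: v in {"pending", "shipped", "cancelled"}),
}


def validate_order(row, known_customers):
    """Return a list of violations for one row (empty means valid)."""
    errors = []
    # Schema validation: no missing or unexpected fields.
    errors += [f"missing field {f}" for f in sorted(ORDER_SCHEMA.keys() - row.keys())]
    errors += [f"unexpected field {f}" for f in sorted(row.keys() - ORDER_SCHEMA.keys())]
    for field, (ftype, rule) in ORDER_SCHEMA.items():
        if field not in row:
            continue
        value = row[field]
        # Data type validation (bool is excluded because it subclasses int).
        if not isinstance(value, ftype) or isinstance(value, bool):
            errors.append(f"{field}: expected {ftype.__name__}")
        # Business rule validation: value ranges and allowed values.
        elif rule is not None and not rule(value):
            errors.append(f"{field}: rule failed for {value!r}")
    # Referential integrity: the customer must exist in the related source.
    cid = row.get("customer_id")
    if isinstance(cid, int) and cid not in known_customers:
        errors.append(f"customer_id {cid} not found")
    return errors


customers = {101, 102}
good = {"order_id": 1, "customer_id": 101, "quantity": 2, "status": "shipped"}
bad = {"order_id": "2", "customer_id": 999, "quantity": 0, "status": "lost", "coupon": "X"}
print(validate_order(good, customers))  # []
for problem in validate_order(bad, customers):
    print(problem)
```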

Conclusion

Big data integration has evolved from a technical necessity into a strategic competitive advantage for organizations across all industries. Modern integration platforms like Airbyte provide the flexibility, scalability, and governance capabilities needed to handle today's complex data landscape while avoiding vendor lock-in.

The key to success lies in choosing tools that align with your organization's specific requirements, implementing robust data quality processes, and maintaining a focus on business outcomes rather than just technical capabilities. As data volumes continue to grow and business requirements become more demanding, organizations that invest in proper integration infrastructure will be best positioned to leverage their data assets for competitive advantage.

FAQ

What makes a tool suitable for big data integration?

A suitable tool handles the volume, variety, and velocity of your data, offers a rich connector ecosystem, and supports both batch and real-time processing. Robust transformation features and a scalable, secure architecture are equally important for enterprise deployments.

Are open-source tools reliable for enterprise use?

Yes, platforms like Airbyte deliver enterprise-grade security, governance, and support capabilities while avoiding vendor lock-in. Open-source tools often provide greater flexibility and customization options than proprietary alternatives.

What is the difference between ETL and ELT approaches?

ETL transforms data before loading it into the destination system, while ELT loads raw data first and performs transformations inside the destination. ELT approaches leverage modern cloud computing power for transformation processing.
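
The difference is easiest to see side by side. This sketch uses Python's built-in `sqlite3` module as a stand-in destination (an assumption; a cloud warehouse plays this role in practice): the ETL path cleans rows in application code before loading, while the ELT path loads raw text first and then transforms it with SQL inside the database.

```python
import sqlite3

RAW = [(" Alice@Example.com ", "19.90"), ("bob@example.com", "5")]


def etl(conn):
    # ETL: transform in the pipeline, then load only the cleaned rows.
    cleaned = [(email.strip().lower(), float(amount)) for email, amount in RAW]
    conn.execute("CREATE TABLE orders_etl (email TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?)", cleaned)


def elt(conn):
    # ELT: load raw text as-is, then transform with SQL inside the destination.
    conn.execute("CREATE TABLE raw_orders (email TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", RAW)
    conn.execute(
        "CREATE TABLE orders_elt AS "
        "SELECT LOWER(TRIM(email)) AS email, CAST(amount AS REAL) AS amount "
        "FROM raw_orders"
    )


conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)
q = "SELECT email, amount FROM {} ORDER BY email"
print(conn.execute(q.format("orders_etl")).fetchall())
print(conn.execute(q.format("orders_elt")).fetchall())
# both: [('alice@example.com', 19.9), ('bob@example.com', 5.0)]
```

Both paths produce the same table, but the ELT path also keeps `raw_orders`, so transformations can be revised and re-run later without re-extracting from the source.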

Can I integrate both structured and unstructured data?

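Yes. Modern integration tools can ingest relational databases, semi-structured formats such as JSON, social media feeds, IoT streams, and unstructured files such as images, although unstructured content usually needs additional processing before it is useful for analysis. Platforms like Apache NiFi, AWS Glue, and Airbyte provide comprehensive support for diverse data formats.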

How do I ensure data quality in big data integration?

Use platforms with built-in profiling, validation, and monitoring capabilities while implementing automated quality rules and continuous auditing processes. Regular data quality assessments help maintain high standards throughout the integration pipeline.
