CI/CD Pipeline: Key Steps for Seamless Integration

✨ AI Generated Summary

A CI/CD pipeline automates software development, testing, and deployment, enabling faster, reliable releases and improved collaboration. Integrating CI/CD into ETL workflows accelerates time-to-market, ensures data quality, enhances scalability, and reduces manual errors.

- Continuous Integration automates code merging and testing to detect bugs early.

- Continuous Delivery readies builds for deployment with manual release control.

- Continuous Deployment automates direct production releases after successful tests.

- Airbyte simplifies CI/CD for data pipelines with pre-built connectors, local testing via airbyte-ci CLI, and infrastructure-as-code support.

- Using Airbyte with GitLab and Snowflake enables automated syncing of CI/CD data for analysis and reporting.

Delivering high-quality applications is crucial for successful software development and deployment. A Continuous Integration and Continuous Deployment (CI/CD) pipeline enables you to optimize your development workflow for faster and more reliable software releases. By leveraging CI/CD pipelines, you can also collaborate more efficiently with your teams and secure a streamlined deployment cycle.

In this article, you’ll explore the CI/CD pipeline in detail, along with its benefits in ETL workflows.

What Is a CI/CD Pipeline?

A CI/CD pipeline is a structured process that automates the building, testing, and deployment of software. It continuously merges code contributions from multiple developers into a shared repository hosted on a platform like GitHub or GitLab. Each integration triggers an automated build and test sequence, which checks that the new code integrates cleanly with the existing codebase. This approach helps detect defects early and maintains a consistent, functional codebase.

After a successful integration, you can release the application’s code components to a production-like environment and run additional performance, regression, and user acceptance tests. These tests help confirm that the application is ready for deployment. Once the application is deployment-ready, the CI/CD pipeline automates software delivery to the live production environment, eliminating the need for manual intervention.

Benefits of a CI/CD Pipeline in ETL

Integrating a CI/CD pipeline into ETL workflows helps you automate the entire data lifecycle (extraction, transformation, and loading) while applying continuous integration and deployment practices. Here’s how the CI/CD pipeline benefits your ETL processes.

- Accelerated Time-to-Market: CI/CD pipelines enable you to automate deployment, reducing the time required to move from development to production. As a result, new data processing features, bug fixes, and updates in your ETL workflows are delivered quickly.

- Data Quality Assurance: Using CI/CD pipelines, you can implement automated validation through various tests. This helps detect inconsistencies in ETL processes early and prevents disruptions to downstream systems.

- Scalability: As data volumes increase, your ETL pipeline must scale efficiently. If you work with cloud-based solutions and container orchestration tools, you can integrate the CI/CD approach into your ETL processes. This gives you the flexibility to automate scaling based on demand and to verify that your infrastructure keeps pace with your data processing needs.

- Increased Efficiency: By minimizing manual intervention in the ETL process, you can process data faster and with fewer errors. CI/CD builds on this by automating testing and deployment, so that changes to ETL workflows are validated before they are applied.

- Improved Collaboration: A CI/CD-driven ETL workflow makes collaboration among data engineers, analysts, and developers easier. With version control and continuous integration in place, multiple team members can contribute to the data pipeline with fewer conflicts.
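To make the Data Quality Assurance point concrete, here is a minimal sketch of the kind of automated check a CI job could run against transformed rows. The schema, column names, and function names are hypothetical and chosen only for illustration; a real pipeline would validate its own tables, possibly with a dedicated data testing tool.

```python
from typing import Any

# Hypothetical schema for illustration: column name -> expected Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "country": str}


def validate_rows(rows: list[dict[str, Any]]) -> list[str]:
    """Return human-readable problems found in transformed rows."""
    problems = []
    for index, row in enumerate(rows):
        for column, expected_type in EXPECTED_SCHEMA.items():
            if column not in row or row[column] is None:
                # Completeness check: every expected column needs a value.
                problems.append(f"row {index}: missing value for '{column}'")
            elif not isinstance(row[column], expected_type):
                # Schema check: the value must have the expected type.
                problems.append(
                    f"row {index}: '{column}' should be {expected_type.__name__}"
                )
    return problems


def test_transformed_rows_are_valid():
    # A CI job running pytest would fail the build if this assertion fails.
    rows = [{"order_id": 1, "amount": 19.99, "country": "DE"}]
    assert validate_rows(rows) == []
```

Running a check like this on every commit means a schema or completeness regression stops the pipeline before it reaches downstream systems.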

What Is Continuous Integration?

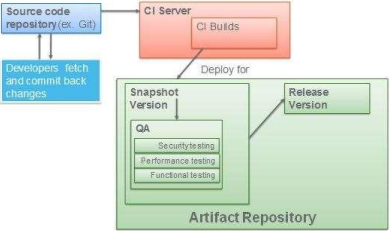

Continuous Integration (CI) is the practice of frequently merging your code changes into a shared repository on a version control platform like GitLab. When you push code changes to the shared repository, the Continuous Integration pipeline automatically builds the application by compiling the source code and packaging it into an artifact such as a JAR file or Docker image. Once the build completes, automated tests verify that the new changes work as expected and that no existing functionality is broken. With an automated CI pipeline, you can identify bugs early and get fast feedback on code quality.

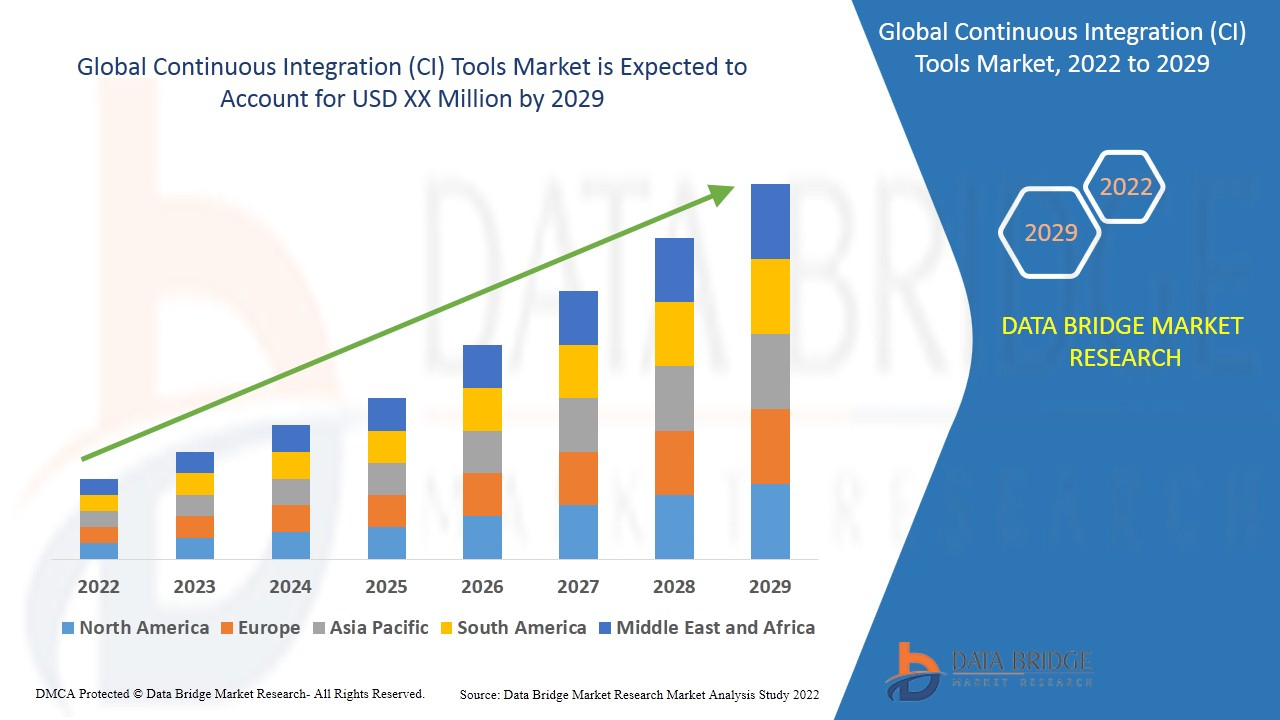

According to Data Bridge Market Research, the global market for CI tools is expected to grow substantially by 2029. This expansion reflects the increasing demand for automation in software development and for keeping applications stable and functional throughout the development cycle.

What Is Continuous Delivery?

Continuous Delivery is the practice of keeping your application in a deployable state so that it is always ready to be released to production. After new code passes automated tests, the Continuous Delivery pipeline creates the application’s build artifact (for example, a JAR file or Docker image). You can then store the artifact in an artifact repository for later deployment.

Finally, the pipeline deploys the application to a staging or pre-production environment, where it undergoes advanced tests such as performance or user acceptance testing. If all checks succeed in staging, the build is ready for release to production, which you trigger manually.

What Is Continuous Deployment?

Continuous Deployment is a strategy that automatically deploys every code change that passes the test phase directly to production. Once your code passes automated tests, it becomes available to your users without any manual intervention. This removes the need for a separate release step and speeds up the delivery of new features and bug fixes.

Continuous Deployment vs. Continuous Delivery: Is There a Difference?

Yes. With Continuous Delivery, you ensure that your code is ready to be deployed to production at any time. However, the actual deployment is manually triggered, giving you control of when to release new updates. When you have control over when to deploy code changes, you can confirm that only thoroughly validated, stable versions of the application go live.

On the other hand, Continuous Deployment involves automation of the deployment process. When a change passes a suite of unit, build, and security tests, it is automatically pushed to the production environment without needing manual intervention.
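The distinction can be reduced to a single decision: who or what opens the gate to production. The following is a toy sketch rather than the logic of any real CI/CD tool; the names `ReleaseMode` and `should_release` are hypothetical.

```python
from enum import Enum


class ReleaseMode(Enum):
    CONTINUOUS_DELIVERY = "delivery"      # production release needs a manual trigger
    CONTINUOUS_DEPLOYMENT = "deployment"  # production release is automatic


def should_release(
    mode: ReleaseMode, tests_passed: bool, manually_approved: bool = False
) -> bool:
    """Decide whether a build goes to production under the given mode."""
    if not tests_passed:
        return False  # neither mode ships a failing build
    if mode is ReleaseMode.CONTINUOUS_DEPLOYMENT:
        return True  # passing tests are the only gate
    return manually_approved  # Continuous Delivery waits for a person
```

Under this sketch, a passing build is released immediately in `CONTINUOUS_DEPLOYMENT` mode, while in `CONTINUOUS_DELIVERY` mode it stays deployable until someone approves it.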

How Can You Run CI/CD Pipelines with Airbyte?

Airbyte is a data movement platform that assists you in building data pipelines to streamline data integration workflows across various platforms. It offers 600+ pre-built connectors to help you gather data from diverse sources and load it into a preferred destination.

Some outstanding features that Airbyte offers:

- Tailored Connector Solutions: By providing various custom connector development tools, including no-code Connector Builder, low-code CDKs, and language-specific CDKs, Airbyte allows you to build personalized connectors. The AI assistant in the Connector Builder helps automate prefilling relevant configuration properties during connector development.

- Developer-Friendly Pipeline: Airbyte offers PyAirbyte, an open-source Python library that provides utilities for using Airbyte connectors in your Python workflows. Using PyAirbyte, you can extract data from varied sources and load it into a SQL cache such as Postgres, Snowflake, or BigQuery. You can then read the cached data into a Pandas DataFrame to apply transformations.

- Terraform Provider: Using Airbyte’s Terraform Provider, you can define and manage Airbyte configurations as code with Terraform, an Infrastructure-as-Code tool. This lets you create new connections or test configuration changes with your existing development tools, without affecting your production environment.
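As a rough illustration of the PyAirbyte workflow described in the list above, the sketch below reads a stream from Airbyte's sample-data connector into a Pandas DataFrame. It assumes the `airbyte` package is installed (`pip install airbyte`) and uses the `source-faker` connector with its `count` option and `users` stream; the function name is hypothetical, and PyAirbyte's API may differ between versions, so treat this as a starting point and check the current documentation.

```python
def load_stream_as_dataframe(stream_name: str = "users"):
    """Read one stream from a sample source into a Pandas DataFrame."""
    # Imported lazily so this module still loads where PyAirbyte is absent.
    import airbyte as ab

    # "source-faker" generates synthetic records; "count" sets how many.
    source = ab.get_source(
        "source-faker",
        config={"count": 100},
        install_if_missing=True,
    )
    source.check()               # verify the connector configuration
    source.select_all_streams()  # alternatively, select specific streams
    result = source.read()       # records land in PyAirbyte's default local cache
    return result[stream_name].to_pandas()
```

This sketch uses the default local cache for simplicity; pointing PyAirbyte at a Postgres, Snowflake, or BigQuery cache would require additional configuration not shown here.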

Besides these capabilities, Airbyte provides a developer-friendly command-line interface (CLI), airbyte-ci, to streamline CI/CD workflows. The tool uses Dagger, a CI/CD engine based on Docker BuildKit. As a result, the CLI lets you build, test, and deploy connector changes locally in the same way as in remote CI/CD. These changes can include modifying existing connectors, developing new connectors, and adjusting metadata or configuration settings.

Normally, when you make changes to a codebase, you need to:

- Commit the changes.

- Push them to a remote repository.

- Wait for the CI/CD pipeline to run and check if your changes work as expected.

This process can be time-consuming, especially if the CI/CD pipeline fails and you must repeat the cycle after fixing errors. With airbyte-ci, you can run the same pipelines on your local machine before committing and pushing changes. This reduces the number of unnecessary commit-push cycles and makes the development process more efficient.

Now, let’s explore how to run CI/CD pipelines with the airbyte-ci CLI:

Prerequisites:

- A running Docker engine with version 20.10.23 or above.

Steps:

- Open your terminal and clone the Airbyte repository.

- Navigate to the root of the Airbyte repository.

- Install or update airbyte-ci using the Makefile in the repository.

- Verify that the installation succeeded.

- Run a build pipeline to create a Docker image for the connector and export the image to the local Docker host.

- Execute a test pipeline to run automated tests, such as connector QA checks, unit tests, integration tests, and connector acceptance tests, on the built connector.

For Python-based connectors, you can use pytest to run unit and integration tests after installing the latest version of Poetry and Python.

- Publish the connector update to Airbyte’s DockerHub.

With this structured approach, you can confirm that all connector changes go through rigorous building, testing, and validation before deployment. By utilizing the airbyte-ci CLI, you can simplify the CI/CD workflow and maintain high-quality connectors.
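The steps above can be sketched as a small Python driver that lists the commands in order and, by default, only prints them. The Makefile target and the `airbyte-ci connectors` subcommands shown here follow the airbyte-ci README as best recalled and may differ in your version of the tool, so confirm them with `airbyte-ci --help`. The connector name `source-pokeapi` is only an example, and the script assumes you have already cloned the Airbyte repository and are running from its root.

```python
import shlex
import subprocess

# Example connector name; replace it with the connector you are changing.
CONNECTOR = "source-pokeapi"


def airbyte_ci_steps(connector: str = CONNECTOR) -> list[list[str]]:
    """Return the local pipeline commands in the order described above."""
    return [
        ["make", "tools.airbyte-ci.install"],  # install or update airbyte-ci
        ["airbyte-ci", "--help"],              # verify the installation
        ["airbyte-ci", "connectors", f"--name={connector}", "build"],
        ["airbyte-ci", "connectors", f"--name={connector}", "test"],
        # Publishing needs registry credentials, so it is usually left to CI.
        ["airbyte-ci", "connectors", f"--name={connector}", "publish"],
    ]


def run_steps(steps: list[list[str]], dry_run: bool = True) -> None:
    """Print each command and, unless dry_run is set, execute it."""
    for command in steps:
        print("$", shlex.join(command))
        if not dry_run:
            # check=True stops at the first failing step, as a CI run would.
            subprocess.run(command, check=True)
```

Calling `run_steps(airbyte_ci_steps())` prints the commands without running them; passing `dry_run=False` executes them and requires a running Docker engine.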

How Can Airbyte Help Once You Build a CI/CD Pipeline? A Real-World Use Case

Once your CI/CD pipeline is operational, collecting and analyzing data such as issues, branches, jobs, and commits is valuable for reporting and visualization. However, extracting and transforming this raw data usually requires a separate process.

Instead of creating an additional pipeline specifically for this, you can use Airbyte’s pre-built connectors for CI/CD tools like GitLab, Jenkins, or Harness. These connectors let you simplify the workflow by centralizing data from your chosen code repository into a destination of your choice. Such integration ensures that data is readily available for further business intelligence and analysis tasks.

As an example, let’s configure GitLab, a CI/CD tool, as the source and Snowflake as the destination using Airbyte.

Prerequisites:

- A GitLab account or a locally hosted GitLab instance.

- An Airbyte account.

- A Snowflake account with an ACCOUNTADMIN role.

1. Set Up a GitLab CI/CD Pipeline Job

- Create a public project for free in GitLab and make sure you hold the Maintainer or Owner role for the project.

- Define your CI/CD pipeline jobs, stages, and actions in the .gitlab-ci.yml file for automated code building, testing, and deployment.

- When you start the pipeline, the jobs will run automatically using a GitLab Runner.

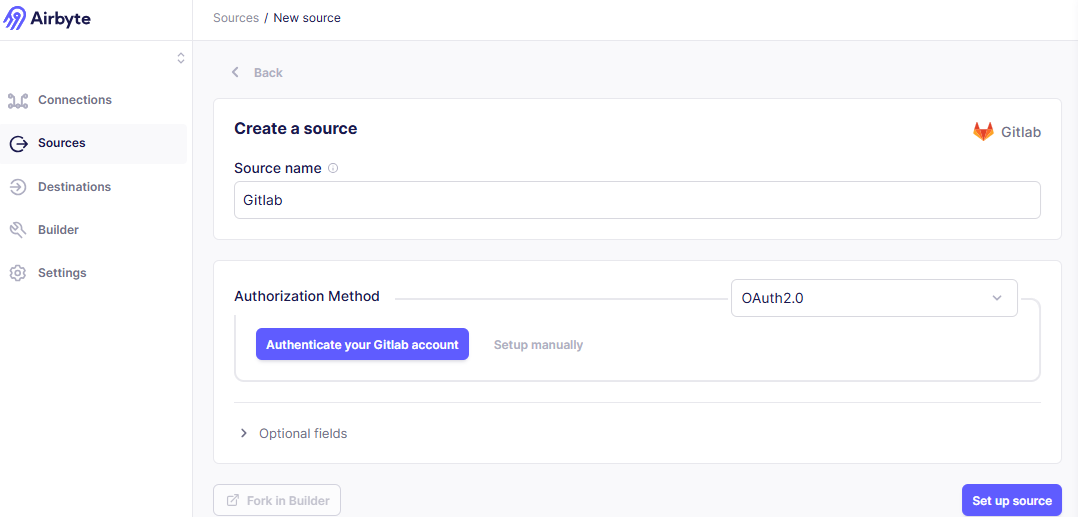

2. Configure GitLab as the Source

- Sign in to Airbyte Cloud.

- Navigate to the Sources section and search for the GitLab connector on the Set up a new source page.

- On the GitLab source configuration page, authenticate your GitLab account via OAuth2.0 or private token authentication.

- Click on the Set up source button to finish the source configuration.

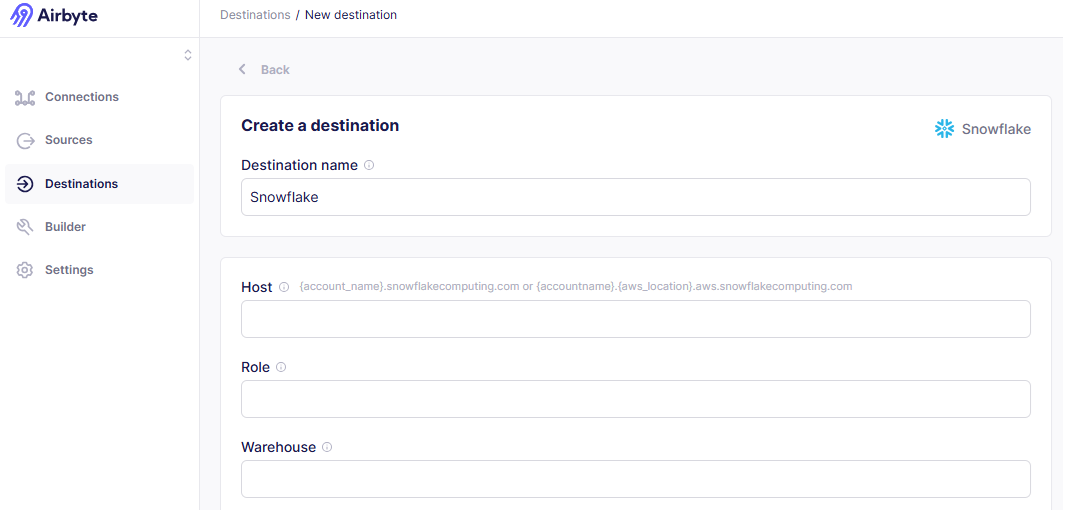

3. Configure Snowflake as the Destination

- Navigate to the Destinations section and search for Snowflake on the Set up a new destination page.

- Once you’re redirected to the Snowflake configuration page, fill in the necessary fields.

- After specifying the mandatory information, click the Set up destination button.

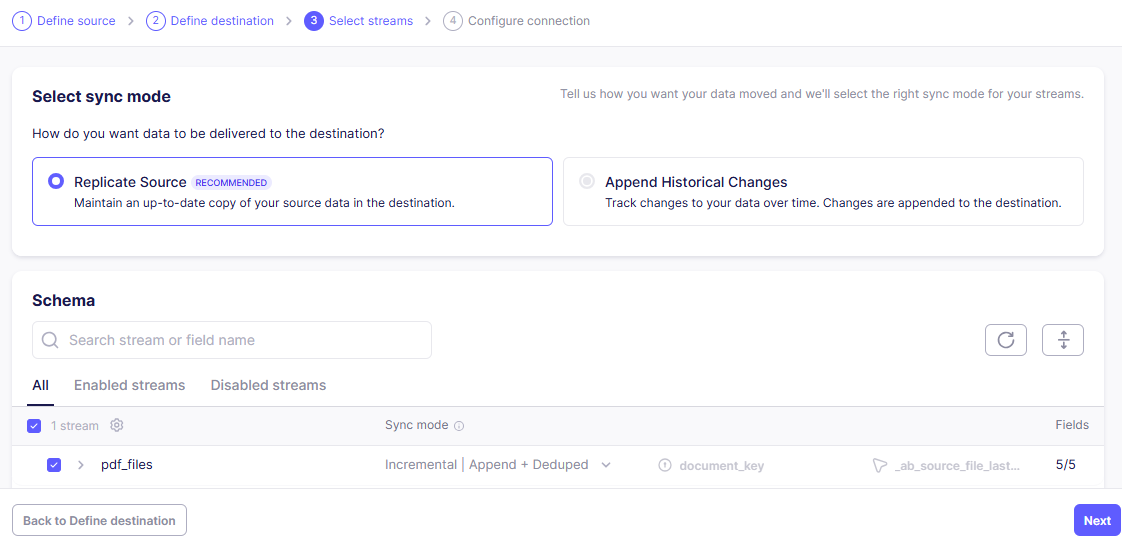

4. Establish a Connection Between GitLab and Snowflake

- Select the Connections tab on the left navigation bar of the dashboard.

- Choose the configured GitLab and Snowflake connectors for your connection setup.

- You’ll be taken to the Select streams page, where you can choose the data streams to sync and the sync mode for each.

- Click Next.

- On the Configure connection page, select a Replication Frequency between 15 minutes and 24 hours to schedule the data sync from GitLab to Snowflake.

- Click the Finish & Sync button to establish a successful connection.

5. Modify .gitlab-ci.yml to Trigger Airbyte Sync

- Launch GitLab UI and go to the GitLab project that you created to use CI/CD.

- Select Code > Repository > Find File and choose your file .gitlab-ci.yml.

- Append a cURL command to the existing GitLab CI job that calls the Airbyte API to trigger the sync. Then, add the necessary credentials and connection ID to the API request.

By adding this sync_data job to your .gitlab-ci.yml file, you’re automating the data sync process after each deployment. It ensures that the necessary GitLab data, such as comments, build logs, and releases, is consistently moved to your chosen destination for further analysis.
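The cURL command itself is not reproduced here. As a rough Python equivalent of the request such a job would send, the sketch below assumes the Airbyte public API's job-creation endpoint (`POST /v1/jobs` with a `connectionId` and a `jobType` of `sync`) and bearer-token authentication. The function names are hypothetical, and the endpoint and payload should be checked against the current Airbyte API reference before use.

```python
import json
import urllib.request

# Assumed endpoint: the Airbyte public API's job-creation route.
AIRBYTE_JOBS_URL = "https://api.airbyte.com/v1/jobs"


def build_sync_request(connection_id: str, token: str) -> urllib.request.Request:
    """Build the HTTP request that asks Airbyte to start a sync job."""
    body = json.dumps({"connectionId": connection_id, "jobType": "sync"})
    return urllib.request.Request(
        AIRBYTE_JOBS_URL,
        data=body.encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
    )


def trigger_sync(connection_id: str, token: str) -> dict:
    """Send the request and return the parsed JSON response."""
    request = build_sync_request(connection_id, token)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

In a CI job, the connection ID and token would typically come from masked CI/CD variables, for example `trigger_sync(os.environ["AIRBYTE_CONNECTION_ID"], os.environ["AIRBYTE_API_TOKEN"])`, where both variable names are placeholders.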

Conclusion

With a CI/CD pipeline, you can automate critical stages of your development and deployment process. This automation reduces human error, accelerates software delivery, and helps maintain consistent code quality. A data movement platform like Airbyte can simplify CI/CD workflows for data pipelines.

Airbyte enables you to streamline data sync between your version control system and the destination you prefer. To utilize Airbyte for your needs, connect with our experts.

Frequently Asked Questions

What is the difference between CI and CD in data pipelines?

Continuous Integration in data pipelines focuses on automatically testing and validating data transformations when code changes are committed. Continuous Deployment automatically deploys validated data pipeline changes to production environments without manual intervention.

How do you test data quality in CI/CD pipelines?

Data quality testing in CI/CD pipelines involves automated validation checks for data schema, completeness, accuracy, and consistency. These tests run automatically during the integration phase to catch data issues before they reach production systems.

Can CI/CD pipelines handle real-time data processing?

Yes, modern CI/CD pipelines can support real-time data processing by integrating with streaming platforms and event-driven architectures. The key is ensuring automated testing covers both batch and streaming data scenarios.

What are the best practices for version control in data CI/CD?

Best practices include storing all data pipeline code, configuration files, and schema definitions in version control systems. Use branching strategies that separate development, staging, and production environments while maintaining clear merge policies.

How do you monitor data CI/CD pipeline performance?

Monitor data CI/CD pipelines through automated alerts for pipeline failures, data quality metrics, processing times, and resource utilization. Implement logging and observability tools that provide visibility into each stage of the data pipeline lifecycle.