Data-Driven Insights: Turning Data into Actionable Results

✨ AI Generated Summary

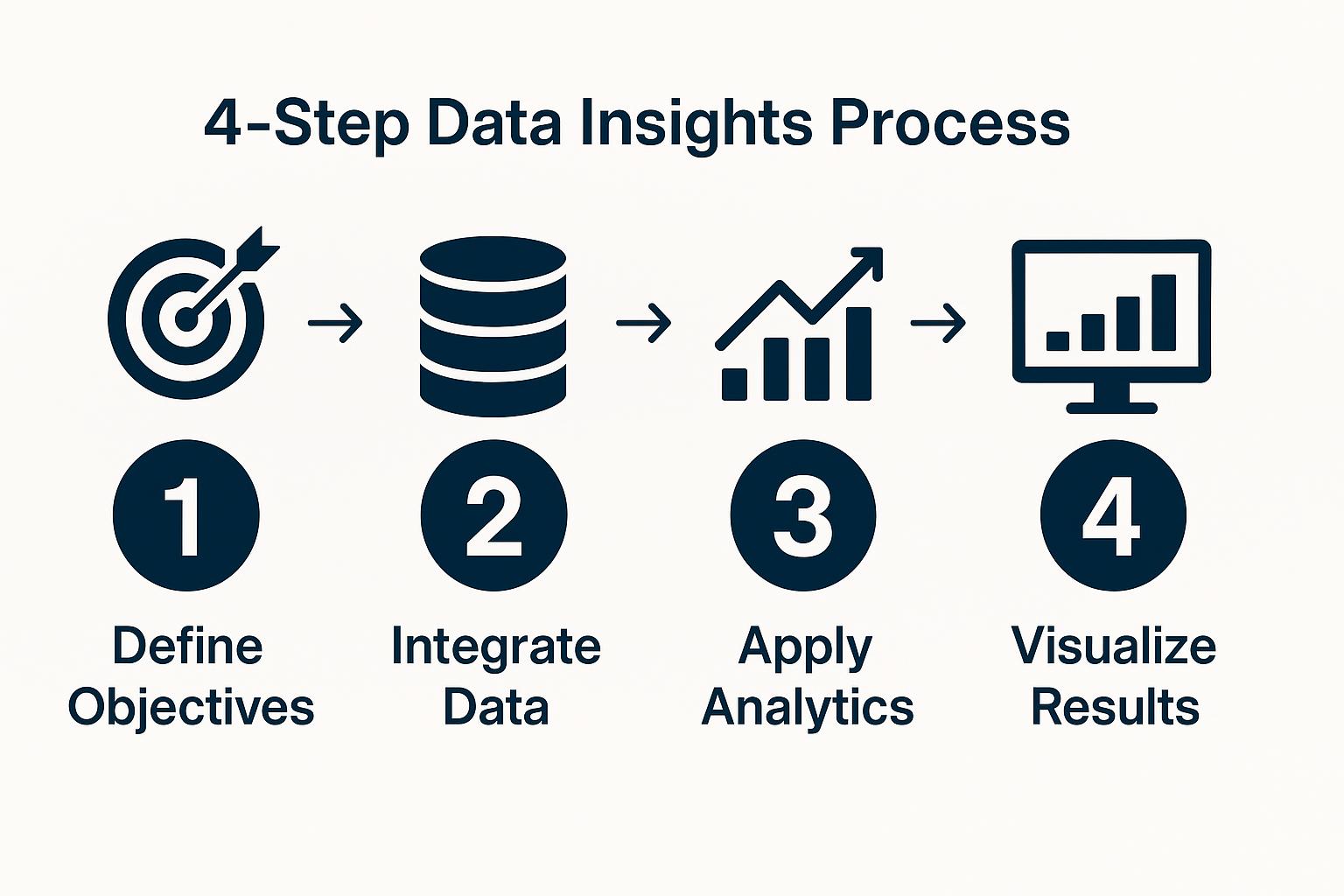

Organizations face challenges turning vast, diverse data into actionable business insights that drive strategic decisions and competitive advantage. Key steps include defining clear objectives, integrating and cleansing data, applying advanced analytics, and effectively visualizing results to support decision-making.

- Data-driven insights enhance customer relationships, product development, operational efficiency, risk mitigation, and competitive positioning.

- Educational frameworks and real-time data integration architectures improve reliability and responsiveness of insights.

- Platforms like Airbyte accelerate data consolidation with extensive connectors, real-time sync, and integration with modern analytics and AI tools.

- Future trends emphasize AI automation, democratized analytics, and continuous real-time processing for faster, more accessible insights.

In today's business environment, organizations generate unprecedented volumes of data from diverse sources, yet many struggle to transform this raw information into meaningful business value. The challenge isn't simply collecting data but developing the systematic approaches needed to extract actionable intelligence that drives strategic decisions.

Data-driven insights represent the culmination of sophisticated analytical processes that reveal hidden patterns, predict future trends, and illuminate opportunities for competitive advantage across every aspect of business operations. This comprehensive guide explores the fundamental concepts behind data-driven insights, examines proven methodologies for generating reliable intelligence, and demonstrates how modern integration platforms can accelerate your journey from raw data to strategic business value.

What Are Data-Driven Insights and Why Do They Matter?

Data-driven insights are actionable conclusions drawn from the systematic analysis of large datasets using structured methodologies and advanced analytical techniques. When data extraction and integration are streamlined, analysts spend less time preparing data and more time identifying the patterns, trends, and relationships that inform strategic decisions across business domains.

These insights emerge from the intersection of robust data infrastructure, sophisticated analytical capabilities, and structured decision-making frameworks that ensure findings translate into actionable business strategies.

The transformation of raw data into actionable intelligence involves multiple stages of refinement, from initial data collection and standardization through advanced analytical processing and visualization. Each stage requires specialized expertise and sophisticated tools that can handle the complexity and scale of modern enterprise data environments while maintaining the accuracy and timeliness essential for effective business decision-making.

Why Are Data-Driven Insights Essential for Modern Business Operations?

Data-driven insights have become fundamental to competitive business operations because they provide the evidence-based foundation necessary for strategic decision-making across all organizational departments. The systematic analysis of business data reveals opportunities for optimization, identifies potential risks before they impact operations, and enables proactive responses to market changes that can determine competitive success or failure.

Strengthen Customer Relationships

Insights from various customer touchpoints reveal pain points, preferences, and buying patterns that enable personalized engagement strategies. Organizations can craft targeted messages for loyal customers while developing sophisticated re-engagement campaigns for dormant segments.

Advanced customer analytics reveal lifetime value patterns, churn prediction indicators, and cross-selling opportunities that maximize revenue from existing relationships while identifying the most promising prospects for acquisition efforts.
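As a rough illustration of turning touchpoint data into the loyal and dormant segments described above, the sketch below classifies customers by how recently and how often they purchase. The `Customer` record shape and the thresholds (more than 90 days without a purchase means dormant, five or more orders means loyal) are hypothetical assumptions chosen for the example, not industry standards.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical thresholds; tune them to your own purchase cycle.
DORMANT_AFTER_DAYS = 90
LOYAL_MIN_ORDERS = 5


@dataclass
class Customer:
    customer_id: str
    order_dates: list[date] = field(default_factory=list)  # one entry per purchase


def segment(customer: Customer, today: date) -> str:
    """Classify a customer by purchase recency and frequency."""
    if not customer.order_dates:
        return "dormant"
    days_since_last = (today - max(customer.order_dates)).days
    if days_since_last > DORMANT_AFTER_DAYS:
        return "dormant"  # candidate for a re-engagement campaign
    if len(customer.order_dates) >= LOYAL_MIN_ORDERS:
        return "loyal"  # candidate for targeted loyalty messaging
    return "active"


today = date(2024, 6, 30)
customers = [
    Customer("c1", [date(2024, 6, d) for d in (1, 5, 10, 15, 20)]),
    Customer("c2", [date(2024, 1, 3)]),
    Customer("c3", [date(2024, 6, 25)]),
]
for c in customers:
    print(c.customer_id, segment(c, today))
```

Real churn models usually add monetary value and behavioral signals, but simple recency and frequency rules like these are a common starting point for deciding who receives which campaign.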

Drive Product Development

Customer data analysis provides a detailed understanding of product performance, feature utilization patterns, and unmet market needs that guide development priorities. Research and development teams can modify existing offerings or develop entirely new products based on empirical evidence of market demands, helping organizations tap into emerging niches and boost profitability.

Market intelligence derived from customer feedback, usage analytics, and competitive analysis enables product teams to anticipate future requirements and develop solutions that address evolving customer needs.

Enhance Operational Efficiency

Monitoring key performance indicators and operational benchmarks provides comprehensive visibility into departmental progress, process inefficiencies, and improvement opportunities. This analytical approach helps organizations reduce bottlenecks, optimize resource allocation, and enhance collaboration between departments through data-driven process improvements.

Advanced operational analytics can identify automation opportunities, predict maintenance requirements, and optimize workflow designs that significantly reduce operational costs while improving service quality.

Calculate and Mitigate Risks

Predictive analytics can help prevent costly mistakes such as over-hiring, poorly timed investments, or inventory errors that strain cash flow and operational efficiency. Basing decisions on thorough risk analysis reduces exposure to adverse outcomes while still leaving room for growth and innovation.

Risk modeling capabilities enable organizations to scenario-plan for various business conditions and develop contingency strategies that maintain operational resilience during market volatility.
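Scenario planning is often done with Monte Carlo simulation: generate many randomized versions of the future and count how often a bad outcome occurs. The sketch below estimates the chance that cash on hand drops below zero within 12 months under two staffing plans. The starting cash, costs, revenue figures, and the assumption that monthly revenue is normally distributed are all hypothetical.

```python
import random


def probability_of_shortfall(
    starting_cash: float,
    monthly_cost: float,
    revenue_mean: float,
    revenue_sd: float,
    months: int = 12,
    trials: int = 10_000,
    seed: int = 42,
) -> float:
    """Share of simulated scenarios in which cash falls below zero at any point."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    shortfalls = 0
    for _ in range(trials):
        cash = starting_cash
        went_negative = False
        # Always simulate every month so each trial uses the same number of draws.
        for _ in range(months):
            cash += rng.gauss(revenue_mean, revenue_sd) - monthly_cost
            went_negative = went_negative or cash < 0
        if went_negative:
            shortfalls += 1
    return shortfalls / trials


# Hypothetical figures: current plan vs. a plan that adds 20,000 per month in salaries.
baseline = probability_of_shortfall(200_000, 100_000, 105_000, 30_000)
with_hiring = probability_of_shortfall(200_000, 120_000, 105_000, 30_000)
print(f"current plan: {baseline:.1%}, with extra hires: {with_hiring:.1%}")
```

Because both plans use the same seed and the same number of draws, they see identical revenue paths, so the gap between the two probabilities reflects the plan change rather than sampling noise (a technique known as common random numbers).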

Gain Sustainable Competitive Advantage

Advanced analytical capabilities, coupled with real-time data processing, provide organizations with significant competitive advantages through faster response times and more informed strategic decisions. Real-time analytics empower swift decision-making that can capture first-mover advantages in rapidly changing markets.

Becoming a data-driven organization enables businesses to anticipate industry trends, address operational issues proactively, and optimize performance continuously based on empirical evidence rather than intuition or outdated information.

What Are the Essential Steps for Generating Reliable Data-Driven Insights?

Each day, modern businesses gather substantial volumes of raw data from customer transactions, marketing platforms, operational systems, and various internal databases. Successfully transforming this diverse data into actionable intelligence requires a systematic approach that ensures data quality, analytical rigor, and practical applicability.

Follow these comprehensive steps to streamline your data discovery and management processes while generating meaningful insights that drive business value.

1. Define Clear Business Objectives

Gather key stakeholders to assess business plans, strategic priorities, and expected outcomes from analytical initiatives. Set specific, measurable objectives that align with organizational goals, identify required data sources, and establish success metrics that enable progress measurement and outcome validation.

2. Integrate and Consolidate Data Sources

Implement comprehensive data integration processes that combine information from multiple sources into centralized repositories while applying governance frameworks that ensure data quality and consistency. Include data cleansing, standardization, and validation procedures that prepare information for reliable analysis.
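A minimal sketch of the cleansing, standardization, validation, and deduplication steps, using only the Python standard library. The field names, the accepted country spellings, and the rule that the most recently updated record wins during deduplication are illustrative assumptions; real pipelines usually express such rules in a transformation layer or a data-quality tool.

```python
from datetime import datetime

# Hypothetical mapping of inconsistent country spellings to ISO codes.
COUNTRY_ALIASES = {"us": "US", "usa": "US", "united states": "US", "de": "DE", "germany": "DE"}


def standardize(record: dict) -> dict:
    """Trim and normalize fields coming from different source systems."""
    return {
        "email": record["email"].strip().lower(),
        "country": COUNTRY_ALIASES.get(record["country"].strip().lower(), "UNKNOWN"),
        "updated_at": datetime.fromisoformat(record["updated_at"]),
    }


def is_valid(record: dict) -> bool:
    """Reject records that would distort downstream analysis."""
    return "@" in record["email"] and record["country"] != "UNKNOWN"


def consolidate(raw_records: list[dict]) -> list[dict]:
    """Standardize, validate, and deduplicate on email (latest update wins)."""
    latest: dict[str, dict] = {}
    for raw in raw_records:
        rec = standardize(raw)
        if not is_valid(rec):
            continue
        current = latest.get(rec["email"])
        if current is None or rec["updated_at"] > current["updated_at"]:
            latest[rec["email"]] = rec
    return list(latest.values())


crm = [{"email": " Ana@Example.com ", "country": "USA", "updated_at": "2024-03-01"}]
billing = [
    {"email": "ana@example.com", "country": "United States", "updated_at": "2024-05-10"},
    {"email": "not-an-email", "country": "DE", "updated_at": "2024-05-11"},
]
clean = consolidate(crm + billing)
print(clean)
```

The two "Ana" records from different systems collapse into one standardized row, and the malformed email is rejected before it reaches analysis.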

3. Apply Advanced Analytical Techniques

Use analytical tools such as machine-learning models, data-mining algorithms, and AI-powered platforms that can process large datasets efficiently and surface complex patterns and relationships. Choose the approach that matches each business question, and check that its assumptions hold so the results are statistically valid.
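As one small example of matching a technique to a question, the sketch below answers "which days had unusual order volume?" by flagging values whose z-score (distance from the mean in standard deviations) exceeds a threshold. The order counts and the threshold of 2 are hypothetical. Z-scores assume roughly normal data and are pulled toward outliers in small samples, which is exactly the kind of assumption worth checking before trusting the result.

```python
from statistics import mean, stdev


def flag_anomalies(values: list[float], threshold: float = 2.0) -> list[int]:
    """Return the indexes of values whose z-score exceeds the threshold."""
    if len(values) < 3:
        raise ValueError("need at least three observations for a meaningful stdev")
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical daily order counts for ten days.
daily_orders = [120, 118, 125, 122, 119, 121, 310, 117, 123, 120]
print(flag_anomalies(daily_orders))  # → [6]
```

For skewed data or very small samples, a median-based rule is often more robust than the mean and standard deviation used here.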

4. Visualize and Communicate Results

Present analytical findings in clear, accessible formats such as dashboards, reports, and visualizations that enable stakeholders across different functional areas to understand and act upon insights effectively.

How Do Educational Frameworks Enhance Data-Driven Decision Making?

Educational frameworks provide structured methodologies that transform ad-hoc analytical efforts into systematic, repeatable processes for generating reliable insights and implementing evidence-based decisions. These proven approaches address common challenges such as insufficient stakeholder alignment and difficulty translating analytical findings into actionable strategies.

Collaborative Inquiry and Systematic Improvement Models

The Data Wise Improvement Process offers an eight-step collaborative model guiding teams through systematic inquiry. It emphasizes establishing collaborative cultures, developing data literacy, and implementing governance structures that support responsible data analysis.

Data Transformation and Knowledge Development Processes

The Light Framework outlines six phases from data collection to synthesis that help organizations convert raw data into actionable knowledge, ensuring analytical findings are contextually relevant and practically applicable.

Continuous Improvement and Iterative Refinement Approaches

Plan-Do-Study-Act (PDSA) cycles provide a structured, iterative approach for testing improvements on a small scale, studying results, and refining strategies before organization-wide implementation.
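The "Study" step of a PDSA cycle typically compares what happened during the small-scale test with the baseline and with the prediction made during "Plan". The sketch below records one cycle and applies a decision rule for "Act". The metric values, the assumption that a higher metric is better, and the adopt/adapt/abandon rule are all hypothetical illustrations rather than part of the PDSA method itself.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PdsaCycle:
    change: str               # Plan: the change being tested
    baseline: float           # Plan: metric value before the change
    predicted_gain: float     # Plan: predicted relative improvement (0.10 = 10%)
    observed: Optional[float] = None  # Do: metric measured during the small-scale test

    def study(self) -> float:
        """Study: relative change versus baseline (assumes higher is better)."""
        if self.observed is None:
            raise ValueError("run the Do step before Study")
        return (self.observed - self.baseline) / self.baseline

    def act(self) -> str:
        """Act: hypothetical rule for adopting, adapting, or abandoning the change."""
        gain = self.study()
        if gain >= self.predicted_gain:
            return "adopt"    # scale up beyond the pilot group
        if gain > 0:
            return "adapt"    # refine the change and run another cycle
        return "abandon"


pilot = PdsaCycle("simplified onboarding email", baseline=0.20, predicted_gain=0.10, observed=0.23)
print(f"{pilot.study():+.0%} -> {pilot.act()}")
```

Writing the prediction down before the test is what turns the comparison into learning: a result that misses the prediction prompts a new cycle instead of an organization-wide rollout.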

What Are the Key Technical Considerations for Real-Time Data Integration?

Real-time data integration demands sophisticated architectural decisions and specialized expertise that address the unique challenges of processing continuous data streams while maintaining accuracy and performance.

Streaming Architecture Design and Performance Optimization

Event-driven architectures must address challenges such as event ordering, state management, exactly-once processing semantics, and backpressure. These technical considerations ensure that real-time systems can handle varying data volumes while maintaining data integrity and processing reliability.
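The sketch below shows three of these concerns in miniature using only the standard library. A bounded `queue.Queue` applies backpressure by blocking the producer when the consumer falls behind; a set of processed event IDs makes handling idempotent, so redelivered events are not double-counted (a common way to get effectively-once results on top of at-least-once delivery); and per-key sequence numbers detect out-of-order events. It is a single-process toy under those assumptions, with hypothetical event fields; production systems rely on brokers and stream processors for these guarantees.

```python
import queue
import threading
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    event_id: str   # unique per event; redeliveries reuse the same ID
    key: str        # e.g. an account ID; ordering is tracked per key
    sequence: int   # producer-assigned, increasing per key
    amount: float


class Consumer:
    def __init__(self) -> None:
        self.seen_ids: set[str] = set()      # dedup state for idempotent handling
        self.last_seq: dict[str, int] = {}   # per-key ordering state
        self.totals: dict[str, float] = {}   # the running aggregate
        self.out_of_order: list[str] = []    # late events set aside for review

    def handle(self, event: Event) -> None:
        if event.event_id in self.seen_ids:
            return  # duplicate delivery: skipping it keeps totals correct
        self.seen_ids.add(event.event_id)
        if event.sequence <= self.last_seq.get(event.key, -1):
            self.out_of_order.append(event.event_id)
            return
        self.last_seq[event.key] = event.sequence
        self.totals[event.key] = self.totals.get(event.key, 0.0) + event.amount


def produce(buffer: queue.Queue, batch: list[Event]) -> None:
    for event in batch:
        buffer.put(event)  # blocks while the buffer is full: backpressure
    buffer.put(None)       # sentinel: no more events


def consume(buffer: queue.Queue, consumer: Consumer) -> None:
    while (event := buffer.get()) is not None:
        consumer.handle(event)


buffer: queue.Queue = queue.Queue(maxsize=2)  # tiny bound to force backpressure
# Illustrative events, including a duplicate and a stale one.
batch = [
    Event("e1", "acct-1", 1, 10.0),
    Event("e2", "acct-1", 2, 5.0),
    Event("e2", "acct-1", 2, 5.0),   # redelivered duplicate
    Event("e3", "acct-2", 1, 7.5),
    Event("e4", "acct-1", 1, 99.0),  # stale sequence number for acct-1
]
consumer = Consumer()
worker = threading.Thread(target=consume, args=(buffer, consumer))
worker.start()
produce(buffer, batch)
worker.join()
print(consumer.totals, consumer.out_of_order)
```

In a real system the deduplication and sequence state would be persisted and bounded (for example with a time window), because in-memory sets are lost on restart and grow without limit.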

Change Data Capture and Real-Time Synchronization

Log-based change data capture (CDC) tools read database transaction logs and replicate inserts, updates, and deletes to target systems while preserving consistency and handling schema evolution. Because changes are read from the log rather than through repeated queries against source tables, organizations can keep multiple systems synchronized with minimal impact on source system performance.
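To make the replication step concrete, the sketch below applies a stream of change events to an in-memory target table. The event shape (an operation code, a primary key, a log sequence number, and a row image) is a simplified, hypothetical stand-in loosely modeled on tools such as Debezium, which use `c`, `u`, and `d` for create, update, and delete. Tracking the last applied log position makes replays harmless, and merging row images lets a newly added column flow through as a basic form of schema evolution.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class ChangeEvent:
    # Simplified, hypothetical event shape.
    op: str                                  # "c" = insert, "u" = update, "d" = delete
    key: int                                 # primary key of the changed row
    lsn: int                                 # position in the source transaction log
    after: Optional[dict[str, Any]] = None   # new row values (None for deletes)


@dataclass
class TargetTable:
    rows: dict[int, dict[str, Any]] = field(default_factory=dict)
    applied_lsn: int = 0  # highest log position already applied

    def apply(self, event: ChangeEvent) -> None:
        if event.lsn <= self.applied_lsn:
            return  # already applied: replaying the log is harmless
        if event.op == "d":
            self.rows.pop(event.key, None)
        elif event.op in ("c", "u"):
            # Merge so a column added at the source later is picked up without a rebuild.
            self.rows[event.key] = {**self.rows.get(event.key, {}), **(event.after or {})}
        else:
            raise ValueError(f"unknown op {event.op!r}")
        self.applied_lsn = event.lsn


log = [
    ChangeEvent("c", 1, 100, {"name": "Ana", "plan": "free"}),
    ChangeEvent("c", 2, 101, {"name": "Ben", "plan": "free"}),
    ChangeEvent("u", 1, 102, {"plan": "pro", "region": "EU"}),  # new column appears
    ChangeEvent("d", 2, 103),
]
target = TargetTable()
for e in log + log[:2]:  # the second pass simulates a replay after a restart
    target.apply(e)
print(target.rows)  # {1: {'name': 'Ana', 'plan': 'pro', 'region': 'EU'}}
```

Without the log-position check, the replayed events would resurrect the deleted row and reset Ana's plan, which is the kind of inconsistency CDC pipelines must guard against.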

Integration with Modern Data Stack Components

Seamless connectivity between streaming systems and existing warehouses, analytics platforms, and orchestration tools requires robust adapters, workflow management, and continuous observability. Modern integration platforms must support diverse data formats and processing requirements while maintaining enterprise-grade security and governance standards.

How Can Airbyte Accelerate Your Path to Data-Driven Insights?

A comprehensive data integration platform like Airbyte streamlines data consolidation and accelerates insight generation through enterprise-grade capabilities designed for modern data operations. Airbyte provides the foundation needed to transform disparate data sources into unified, analysis-ready datasets that power meaningful business insights.

Airbyte offers 600+ pre-built connectors for popular sources and destinations, reducing the development overhead typically required for custom integrations. Multiple sync modes, including incremental replication and change data capture (CDC), help organizations keep data current across systems while balancing freshness against performance and cost.
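Incremental syncs generally rely on a cursor: the pipeline remembers the largest value of a field such as `updated_at` that it has already delivered and asks the source only for newer records on the next run. The sketch below shows that idea in plain Python. It is not Airbyte's implementation or API; the `fetch_since` source function, the in-memory destination, and the record fields are hypothetical.

```python
from datetime import datetime
from typing import Callable, Iterable, Optional


def incremental_sync(
    fetch_since: Callable[[Optional[datetime]], Iterable[dict]],
    destination: dict[str, dict],
    state: dict[str, datetime],
) -> int:
    """Copy only records newer than the saved cursor; return how many were synced."""
    cursor = state.get("updated_at")  # None on the first run, which means a full load
    synced = 0
    for record in fetch_since(cursor):
        destination[record["id"]] = record  # upsert keyed by primary key
        synced += 1
        if cursor is None or record["updated_at"] > cursor:
            cursor = record["updated_at"]
    if cursor is not None:
        state["updated_at"] = cursor  # persist the cursor only after the batch is written
    return synced


# Hypothetical in-memory source standing in for an API or database table.
SOURCE = [
    {"id": "a", "updated_at": datetime(2024, 5, 1), "status": "open"},
    {"id": "b", "updated_at": datetime(2024, 5, 2), "status": "open"},
]


def fetch_since(cursor: Optional[datetime]) -> list[dict]:
    return [r for r in SOURCE if cursor is None or r["updated_at"] > cursor]


dest: dict[str, dict] = {}
state: dict[str, datetime] = {}
print(incremental_sync(fetch_since, dest, state))  # first run: 2 records
SOURCE.append({"id": "a", "updated_at": datetime(2024, 5, 3), "status": "closed"})
print(incremental_sync(fetch_since, dest, state))  # second run: 1 record
```

A strict `>` comparison can skip records that share the cursor's exact timestamp; production connectors typically handle this with an inclusive comparison plus deduplication on the primary key, or with a tie-breaking secondary cursor.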

The platform includes no-code and low-code connector builders that enable business users to create integrations without extensive technical expertise. Airbyte Cloud offers enterprise-grade security features, including SOC 2 Type II certification and support for GDPR-related data protection, to help keep sensitive data protected in cloud deployments. Some certifications and compliance capabilities, such as those related to HIPAA, may not apply to self-hosted or open-source versions.

Native integrations with dbt, Airflow, Prefect, and Python via PyAirbyte enable seamless incorporation into existing data workflows and analytics pipelines. Support for advanced analytics and LLM frameworks such as LangChain and LlamaIndex positions organizations to leverage cutting-edge AI capabilities for enhanced insight generation.

What Are the Future Trends Shaping Data-Driven Insights?

The landscape of data-driven insights continues evolving rapidly as new technologies and methodologies emerge to address growing data complexity and business requirements. Understanding these trends enables organizations to prepare for future analytical capabilities and competitive advantages.

These emerging trends represent fundamental shifts in analytical capabilities that will determine which organizations can leverage data as a competitive advantage:

- AI integration: Revolutionizes analytics by automating pattern recognition, predictive modeling, and insight generation that once required specialized expertise and significant manual effort.

- Analytics democratization: Low-code and no-code platforms enable business users to generate sophisticated insights without deep technical expertise or dependence on data science teams.

- Real-time processing: Continuous data analysis is becoming standard as organizations recognize that batch processing delays compromise competitive positioning in fast-changing markets.

Conclusion

Data-driven insights have evolved from competitive advantages to business necessities that determine organizational success in modern markets. Achieving meaningful insights requires sophisticated integration capabilities, proven analytical methodologies, and platforms capable of handling contemporary data scale and complexity.

Solutions like Airbyte, combined with structured decision-making frameworks, empower organizations to become truly data-driven and consistently outperform competitors through superior insights and faster responses to market opportunities.

Organizations that invest in comprehensive data integration and analytical capabilities position themselves to capture the strategic advantages that data-driven insights provide across all aspects of business operations.

Frequently Asked Questions

What Are the Most Common Challenges in Implementing Data-Driven Insights?

Organizations typically face three critical challenges when implementing data-driven insights: poor data quality, siloed information, and cultural resistance. Successful adoption requires executive sponsorship, demonstrable success stories, and targeted data literacy programs that build confidence across traditionally resistant teams.

How Long Does It Take to See Results from Data-Driven Initiatives?

Results from data-driven initiatives depend on data quality, infrastructure, and project scope. Smaller analytics projects can deliver insights in weeks, while larger transformations may take months. Mature data setups see faster outcomes, whereas foundational improvements take longer but yield lasting benefits.

What Skills Are Essential for Building Data-Driven Capabilities?

Essential skills include data integration, statistical analysis, and visualization. Equally important are business acumen, domain knowledge, and communication. Strong storytelling helps translate complex data into actionable insights, enabling stakeholders to make informed, strategic decisions across the organization.

How Do You Measure the Success of Data-Driven Insight Initiatives?

Measure success by business impact: faster decisions, greater operational efficiency, and measurable revenue gains or cost savings. Track baseline KPIs, stakeholder adoption, data quality, and workflow efficiency to evaluate how effectively data-driven insights improve performance and support business goals.
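Tracking baseline KPIs comes down to recording a "before" value for each metric and reporting the change against it, while remembering that for some metrics lower is better. A minimal sketch; the KPI names and values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    baseline: float          # value recorded before the initiative started
    current: float           # latest measured value
    higher_is_better: bool = True

    def relative_change(self) -> float:
        if self.baseline == 0:
            raise ValueError(f"{self.name}: a baseline of 0 makes relative change undefined")
        return (self.current - self.baseline) / self.baseline

    def improved(self) -> bool:
        change = self.relative_change()
        return change > 0 if self.higher_is_better else change < 0


# Hypothetical KPIs covering adoption, efficiency, and data quality.
kpis = [
    Kpi("weekly active dashboard users", baseline=40, current=52),
    Kpi("hours to produce the monthly report", baseline=16, current=6, higher_is_better=False),
    Kpi("share of records failing quality checks", baseline=0.04, current=0.05, higher_is_better=False),
]
for kpi in kpis:
    status = "improved" if kpi.improved() else "flat or worse"
    print(f"{kpi.name}: {kpi.relative_change():+.0%} ({status})")
```

Storing the direction alongside each metric keeps a drop in report-preparation time from being misread as a regression.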

What Are the Best Practices for Ensuring Data Privacy and Security?

Implementing comprehensive data governance frameworks that address access controls, encryption, and audit trails helps keep sensitive information protected throughout analytical processes. Regular security assessments and compliance monitoring help meet regulatory requirements, while data minimization (collecting and processing only the information needed for a specific analytical purpose) reduces privacy risk without sacrificing analytical effectiveness.
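Data minimization and pseudonymization can be applied before records reach the analytics layer. The sketch below keeps only an allow-list of fields needed for a given analysis and replaces the direct identifier with a keyed HMAC-SHA256 token, so analysts can still join and count by customer without seeing the email address. The field list is hypothetical, and in practice the key would come from a secrets manager rather than source code. Note that pseudonymized data can still count as personal data under regulations such as GDPR.

```python
import hashlib
import hmac

# Hypothetical allow-list for a churn analysis: every other field is dropped.
ALLOWED_FIELDS = {"plan", "signup_month", "tickets_last_90d"}


def pseudonymize(identifier: str, key: bytes) -> str:
    """Stable token for an identifier; it cannot be recomputed without the key."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, key: bytes) -> dict:
    """Keep only the fields the analysis needs, plus a pseudonymous join key."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["customer_token"] = pseudonymize(record["email"].strip().lower(), key)
    return reduced


key = b"replace-with-a-secret-from-your-vault"  # assumption: loaded from a secrets manager in practice
raw = {"email": "Ana@Example.com", "phone": "+1-555-0100", "plan": "pro",
       "signup_month": "2024-01", "tickets_last_90d": 3}
print(minimize(raw, key))
```

Normalizing the email before hashing keeps the token consistent across systems that store the same address with different casing or whitespace.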
