What is Data Obfuscation?


✨ AI Generated Summary

Data obfuscation is essential for protecting sensitive information while maintaining data utility, employing techniques like masking, encryption, tokenization, and differential privacy to meet regulatory requirements such as GDPR, HIPAA, and CCPA. Advanced methods, including AI-powered masking and homomorphic encryption, enhance privacy protection by enabling dynamic, context-aware data handling and secure computation on encrypted data. Implementation challenges involve balancing security with data usability, managing computational overhead, and navigating complex, multi-jurisdictional compliance landscapes.

Every time you think you've mastered data protection, a new breach makes headlines, and suddenly your organization's reputation hangs in the balance. At the same time, organizations already struggle with data quality, and poor-quality data is costly, so protection measures that make data harder to use carry a real price. Data professionals find themselves caught between the urgent need to protect sensitive information and the business demand for accessible, usable data.

The challenge isn't just technical—it's existential. One poorly protected dataset can destroy years of customer trust and trigger regulatory penalties that reshape entire business strategies.

Data obfuscation emerges as a critical solution to this fundamental tension, offering sophisticated techniques that protect sensitive information while preserving data utility for legitimate business purposes. This comprehensive guide explores the essential methodologies, implementation strategies, and emerging technologies that enable organizations to safeguard their most valuable asset while maintaining competitive advantage through data-driven insights.

What Is Data Obfuscation and Why Does It Matter?

Data obfuscation represents the systematic process of transforming sensitive information to make it difficult to interpret while maintaining its usability for authorized purposes. This practice serves as a fundamental privacy-preserving technique, protecting personally identifiable information during various stages of data handling, including storage, transfer, integration, and analysis.

The technique operates on the principle of hiding data in plain sight, where sensitive information becomes unrecognizable to unauthorized observers while remaining functionally useful for legitimate applications. Unlike simple data deletion or access restriction, obfuscation enables organizations to leverage data value while ensuring privacy protection and regulatory compliance.

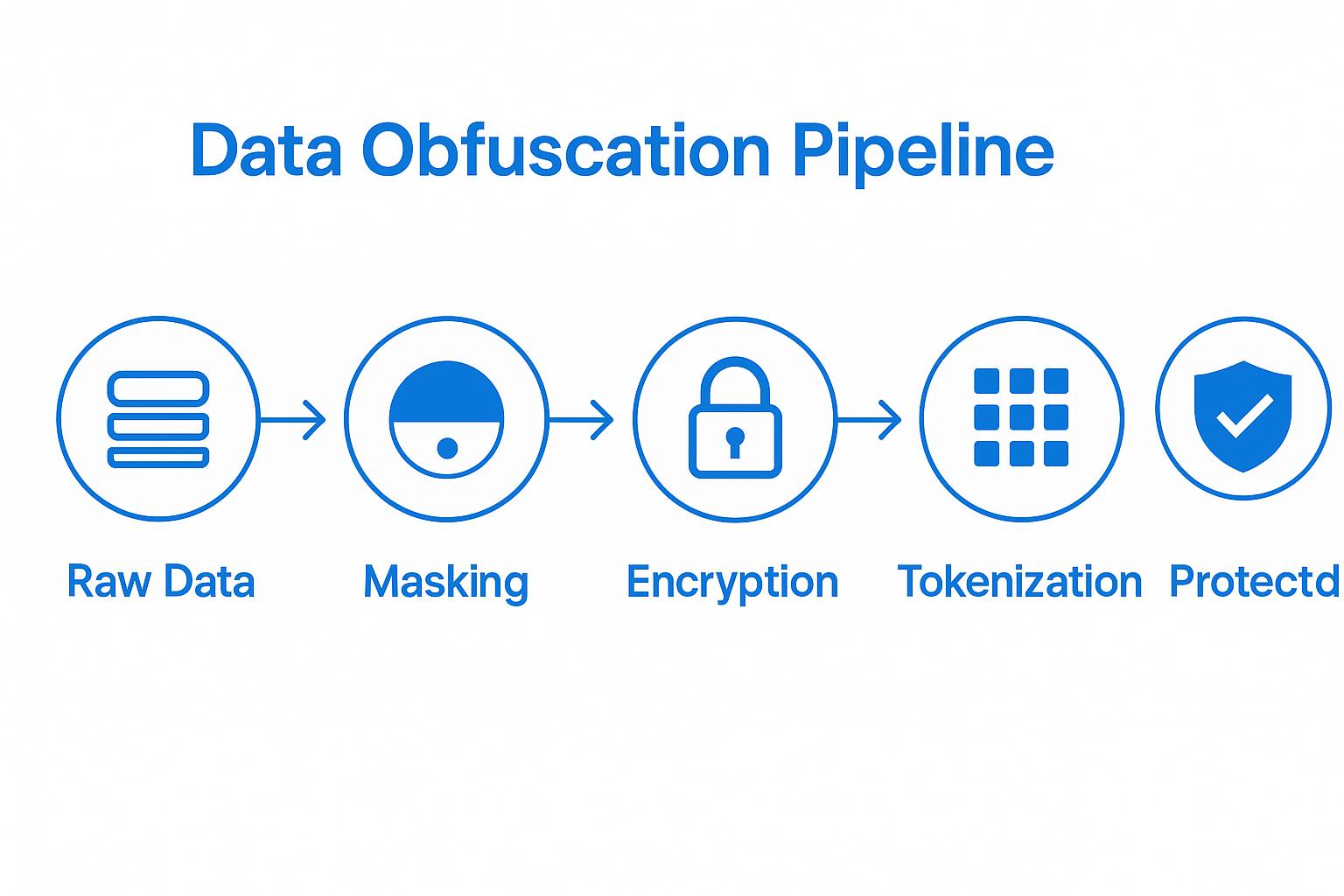

Modern data obfuscation encompasses multiple specialized approaches including data masking, encryption, tokenization, differential privacy, and synthetic data generation. Each technique addresses specific privacy challenges and use cases, with sophisticated implementations often combining multiple approaches to create comprehensive protection frameworks that balance security requirements with operational needs.

The reversibility property serves as a crucial characteristic for categorizing obfuscation techniques. Some methods, like tokenization, are designed to be irreversible without access to secure mapping systems, while others, like format-preserving encryption, maintain mathematical relationships that enable authorized decryption. This spectrum of reversibility allows organizations to select appropriate techniques based on their specific security requirements and data usage patterns.

How Do Current Data Protection Regulations Drive Obfuscation Requirements?

Data protection regulations worldwide increasingly expect specific technical measures for safeguarding personal information, and obfuscation techniques play a central role in compliance strategies. The General Data Protection Regulation (GDPR) defines pseudonymization in Article 4(5) and lists pseudonymization and encryption among appropriate security measures in Article 32, while the California Consumer Privacy Act (CCPA) requires businesses to implement reasonable security measures to protect personal information.

Healthcare organizations must comply with HIPAA, which requires safeguards for protected health information. HIPAA's de-identification standard, met through either the Safe Harbor or the Expert Determination method, lets organizations use obfuscated data for research and analytics while reducing the risk of patient identification. Any organization that stores, processes, or transmits payment card data must meet PCI DSS, an industry standard rather than a law, and typically relies on tokenization and format-preserving encryption to secure cardholder data throughout processing workflows.

The distinction between pseudonymization and anonymization becomes crucial for regulatory compliance, as different jurisdictions classify obfuscated data differently under their legal frameworks. GDPR treats pseudonymized data as personal data requiring continued protection, while properly anonymized data falls outside regulatory scope entirely. This classification significantly impacts data handling requirements, cross-border transfer restrictions, and individual rights obligations.

Emerging regulations continue to expand technical requirements for data protection, with some jurisdictions prescribing specific obfuscation standards and implementation guidelines. Organizations operating across multiple jurisdictions must implement flexible obfuscation frameworks capable of meeting varying regulatory requirements while maintaining operational efficiency and data utility for business purposes.

What Are the Traditional Data Obfuscation Techniques and Their Applications?

Data Masking and Value Substitution

Data masking creates realistic but fictitious alternatives to sensitive data while preserving underlying structure and format characteristics. This approach enables organizations to maintain functional datasets for development, testing, and analytics without exposing actual sensitive information to unauthorized personnel or systems.

Static data masking creates permanent copies of production databases with sensitive information replaced by masked alternatives, making it ideal for non-production environments where data remains relatively stable over time. Dynamic data masking applies obfuscation rules in real time as users query data, enabling role-based security where different users see different levels of data exposure based on their authorization levels.
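A minimal sketch of the dynamic, role-based behavior described above, assuming a hypothetical set of roles (`admin`, `support`, `analyst`) and masking rules. The key property is that masking happens at read time in `query_as`; the stored rows are never modified.

```python
# Hypothetical roles and per-column masking rules, for illustration only.
ROLE_RULES = {
    "admin": {},                                      # sees every column in clear
    "support": {"ssn": "last4"},                      # partial view of the SSN
    "analyst": {"ssn": "redact", "email": "domain"},  # no direct identifiers
}


def mask_value(value: str, rule: str) -> str:
    if rule == "redact":
        return "*" * len(value)
    if rule == "last4":
        return "*" * (len(value) - 4) + value[-4:]
    if rule == "domain":
        # Keep only the domain so analysis by email provider remains possible.
        return "***@" + value.split("@", 1)[1]
    raise ValueError(f"unknown rule: {rule}")


def query_as(role: str, rows: list[dict]) -> list[dict]:
    """Return masked copies of the rows; the underlying data is untouched."""
    rules = ROLE_RULES[role]  # unknown roles raise KeyError (deny by default)
    return [
        {col: mask_value(val, rules[col]) if col in rules else val
         for col, val in row.items()}
        for row in rows
    ]


rows = [{"name": "Ana", "email": "ana@example.com", "ssn": "123-45-6789"}]
```

In a database this logic usually lives in the engine's own masking policies or in views; the Python version only shows the idea of one stored dataset with several role-dependent views.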

Implementation approaches include substitution methods that replace sensitive values with alternatives from predefined datasets, shuffling techniques that reorder values within columns to maintain statistical properties, and variance application that adds controlled modifications to numerical data. Deterministic masking ensures identical input values consistently produce identical masked outputs, maintaining data relationships across systems and enabling consistent analytical results.
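The substitution, shuffling, variance, and deterministic techniques above can be sketched in a few functions. The fake-name list and `MASKING_KEY` are hypothetical placeholders. Determinism comes from keying the substitution on an HMAC of the input, so the same real value always maps to the same fake value.

```python
import hashlib
import hmac
import random

# Hypothetical substitution list and key. Real deployments use far larger lists
# and keep the key in a secrets manager, never in source code.
FAKE_NAMES = ["Alex Reed", "Sam Ortiz", "Jo Park", "Lee Novak", "Kim Silva"]
MASKING_KEY = b"example-key-not-for-production"


def substitute(value: str) -> str:
    """Deterministic substitution: identical inputs always yield the same fake name.
    With a small list, different inputs can collide on the same output."""
    digest = hmac.new(MASKING_KEY, value.encode(), hashlib.sha256).digest()
    return FAKE_NAMES[int.from_bytes(digest[:8], "big") % len(FAKE_NAMES)]


def shuffle_column(values: list, seed: int) -> list:
    """Shuffling: reorder a column so its distribution and totals are unchanged,
    but values are detached from the rows they belonged to."""
    shuffled = list(values)
    random.Random(seed).shuffle(shuffled)
    return shuffled


def apply_variance(amount: float, pct: float, rng: random.Random) -> float:
    """Variance: perturb a number by up to +/- pct percent."""
    return round(amount * (1 + rng.uniform(-pct, pct) / 100), 2)
```

Because collisions in a small substitution list would merge distinct people, joins on masked columns are only safe when the output space is large enough to make collisions negligible.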

Format-preserving masking maintains original data formats while obscuring actual values, enabling seamless integration with existing systems and applications without requiring extensive modifications. This approach proves particularly valuable for legacy system environments where changing data formats would create significant technical challenges or operational disruptions.
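As a rough illustration, the function below (a hypothetical helper, not a specific product's behavior) replaces digits with random digits and letters with random letters of the same case, leaving separators and the last few characters in place. It is irreversible, unlike format-preserving encryption, and the random digits will not generally satisfy checks such as a card number's Luhn digit.

```python
import secrets
import string


def mask_preserving_format(value: str, keep_last: int = 4) -> str:
    """Mask a value while keeping its length, layout, and last `keep_last` characters."""
    cutoff = len(value) - keep_last
    out = []
    for i, ch in enumerate(value):
        if i >= cutoff:
            out.append(ch)                                 # trailing characters kept
        elif ch.isdigit():
            out.append(str(secrets.randbelow(10)))         # digit -> random digit
        elif ch.isalpha():
            letter = secrets.choice(string.ascii_uppercase)
            out.append(letter if ch.isupper() else letter.lower())
        else:
            out.append(ch)                                 # keep '(', ')', '-', spaces
    return "".join(out)


print(mask_preserving_format("(555) 123-4567"))  # e.g. "(830) 261-4567"
```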

Encryption and Cryptographic Protection

Data encryption transforms plaintext into ciphertext using cryptographic algorithms, rendering data unreadable to anyone without the appropriate decryption key. Encryption provides strong protection, but with the exception of specialized schemes such as homomorphic encryption, data generally cannot be queried, joined, or analyzed while it remains encrypted.

Symmetric encryption utilizes identical keys for encryption and decryption processes, offering computational efficiency for bulk data protection scenarios. This approach requires secure key distribution and management protocols to maintain security integrity, with key compromise potentially exposing all encrypted data protected under that key.

Asymmetric encryption uses a key pair: data encrypted with the public key can be decrypted only with the matching private key. Because the public key can be shared openly, this separation solves the key-distribution problem of symmetric encryption and enables secure data sharing among parties who have never exchanged a secret. Asymmetric operations are much slower than symmetric ones, so in practice they typically protect a symmetric key, which in turn encrypts the bulk data.
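To make the public/private split concrete, here is textbook RSA with the tiny classroom parameters p = 61 and q = 53. This is a teaching sketch only: it uses no padding and a trivially breakable key size. Real systems use keys of 2048 bits or more, with padding such as OAEP, through a vetted cryptography library.

```python
# Textbook RSA with tiny primes. Insecure by design; for illustration only.
p, q = 61, 53
n = p * q                # 3233: the modulus, shared by both keys
phi = (p - 1) * (q - 1)  # 3120
e = 17                   # public exponent, coprime with phi
d = pow(e, -1, phi)      # private exponent: modular inverse of e (Python 3.8+)

public_key = (e, n)      # can be published
private_key = (d, n)     # must stay secret


def encrypt(m: int, key: tuple) -> int:
    exp, mod = key
    return pow(m, exp, mod)


def decrypt(c: int, key: tuple) -> int:
    exp, mod = key
    return pow(c, exp, mod)


ciphertext = encrypt(65, public_key)          # anyone with the public key can do this
plaintext = decrypt(ciphertext, private_key)  # only the private-key holder can undo it
print(d, ciphertext, plaintext)               # 2753 2790 65
```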

Format-preserving encryption (FPE) is a specialized cryptographic approach that maintains the original data structure while providing encryption protection. The encrypted output retains the same length, format, and character set as the original, so a 16-digit card number encrypts to another 16-digit number. This enables integration with existing databases and applications with few or no schema changes.
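The sketch below shows the core idea behind FPE on decimal strings: a keyed Feistel network whose halves are combined with addition modulo a power of ten, so every intermediate value stays a digit string of fixed length. Its round structure is loosely modeled on NIST SP 800-38G FF1, but it is **not** FF1 (no AES round function, no tweak, unanalyzed). The function names and the HMAC-SHA256 round function are assumptions made for illustration. Do not use it to protect real data.

```python
import hashlib
import hmac

ROUNDS = 10  # even, so the halves end up in their original sizes


def _round_value(key: bytes, rnd: int, half: str, modulus: int) -> int:
    # Pseudorandom round function: HMAC-SHA256 of the round number and one half,
    # reduced to the size of the half being updated.
    mac = hmac.new(key, f"{rnd}|{half}".encode(), hashlib.sha256).digest()
    return int.from_bytes(mac, "big") % modulus


def fpe_encrypt(key: bytes, digits: str) -> str:
    n = len(digits)
    if n < 2 or not digits.isdigit():
        raise ValueError("expects a string of at least two decimal digits")
    u, v = n // 2, n - n // 2
    a, b = digits[:u], digits[u:]
    for i in range(ROUNDS):
        m = u if i % 2 == 0 else v
        c = (int(a) + _round_value(key, i, b, 10 ** m)) % 10 ** m
        a, b = b, str(c).zfill(m)
    return a + b


def fpe_decrypt(key: bytes, digits: str) -> str:
    n = len(digits)
    u, v = n // 2, n - n // 2
    a, b = digits[:u], digits[u:]
    for i in reversed(range(ROUNDS)):
        m = u if i % 2 == 0 else v
        # Undo one round: subtract the same round value that encryption added.
        c = (int(b) - _round_value(key, i, a, 10 ** m)) % 10 ** m
        b, a = a, str(c).zfill(m)
    return a + b


token = fpe_encrypt(b"demo-key", "4111111111111111")  # another 16-digit string
```

Decryption runs the rounds backward with subtraction instead of addition, which is why the transformation is reversible by anyone holding the key and by no one else.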

Tokenization and Placeholder Systems

Tokenization substitutes sensitive data elements with placeholder values called tokens. In vault-based tokenization, tokens are generated randomly and have no mathematical relationship to the original values, so unlike ciphertext they cannot be reversed by cryptanalysis: the only path back to the original value is a lookup in the protected vault.

Vault-based tokenization systems store original data separately from the tokens, in a secured database known as the vault. A breach of systems that hold only tokens therefore does not expose sensitive values; an attacker would also need access to the vault. This separation adds a security layer while still allowing authorized detokenization when a legitimate business process requires the original value.
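A minimal in-memory sketch of vault-based tokenization, assuming digit-string inputs such as card numbers. `TokenVault` is a hypothetical class, and the two dictionaries stand in for what in practice is a separately secured, access-controlled, and audited datastore. Tokens are random digits of the same length, so they carry no information about the original value.

```python
import secrets


class TokenVault:
    """Illustrative token vault; a real vault is a hardened, audited service."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so a value always maps to the same token,
        # which keeps joins on tokenized columns working.
        if value in self._value_to_token:
            return self._value_to_token[value]
        while True:
            # Random digits of the same length: no mathematical link to the input.
            token = "".join(str(secrets.randbelow(10)) for _ in range(len(value)))
            if token != value and token not in self._token_to_value:
                break
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only callers with vault access can recover the original value.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111111111111111")
```

Downstream systems store and process `token`; only the service that owns the vault can call `detokenize`.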

Vaultless tokenization eliminates secure database requirements by using cryptographic algorithms to generate tokens directly from sensitive data, improving performance and reducing infrastructure complexity. This approach utilizes mathematical functions that cannot be reversed without specific cryptographic keys, providing security benefits while simplifying system architecture and reducing operational overhead.

The tokenization process enables organizations to maintain operational functionality with tokenized data while eliminating exposure risks associated with storing or processing actual sensitive information. Tokens preserve data format characteristics necessary for system operations while ensuring that compromise of tokenized systems cannot reveal underlying sensitive data.

How Do Mathematical Frameworks Enhance Data Obfuscation Effectiveness?

Differential Privacy and Statistical Protection

Differential privacy provides mathematically rigorous privacy guarantees by adding carefully calibrated noise to query results, so that the output of an analysis changes very little whether or not any single individual's record is included. This limits what anyone can infer about a specific person from the results. It enables organizations to publish statistics and support research while quantifying each data subject's privacy loss through a parameter, epsilon (ε).

The mathematical foundation involves privacy budget management that controls cumulative privacy loss across multiple queries, preventing gradual erosion of privacy protection through repeated data access. Advanced implementations utilize sophisticated noise distribution algorithms—including Laplace and Gaussian mechanisms—optimized for different query types and accuracy requirements.
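A minimal sketch of the Laplace mechanism for counting queries, together with a simple budget tracker. It assumes basic sequential composition, where the epsilons of successive queries add up. `PrivacyBudget`, `laplace_noise`, and `private_count` are hypothetical names. Python's `random` module and floating-point noise are fine for illustration but are not suitable for production differential privacy, which should use a vetted library.

```python
import random


class PrivacyBudget:
    """Tracks cumulative epsilon under basic sequential composition."""

    def __init__(self, total_epsilon: float) -> None:
        self.remaining = total_epsilon

    def spend(self, epsilon: float) -> None:
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if epsilon > self.remaining + 1e-12:
            raise RuntimeError("privacy budget exhausted")
        self.remaining -= epsilon


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, budget, rng):
    """Adding or removing one person changes a count by at most 1
    (sensitivity 1), so the Laplace noise scale is 1 / epsilon."""
    budget.spend(epsilon)
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(0)
budget = PrivacyBudget(total_epsilon=1.0)
ages = [23, 35, 41, 52, 67, 29, 38]
print(private_count(ages, lambda a: a > 30, 0.5, budget, rng))  # noisy value near 5
```

Smaller epsilon means more noise and stronger protection; once the total budget is spent, further queries are refused instead of silently eroding privacy.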

Local differential privacy applies noise addition at individual data points before collection, providing stronger individual protection by ensuring sensitive information never leaves individual control in unprotected form. Global differential privacy applies noise to aggregate query results, enabling more accurate analytical outputs while maintaining privacy guarantees at the population level.

Implementation frameworks incorporate randomized response mechanisms that enable truthful responses to sensitive survey questions while maintaining plausible deniability through structured randomization processes. These approaches have proven particularly effective for collecting honest responses about sensitive topics where traditional data collection methods might produce biased or incomplete results.
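The classic coin-flip form of randomized response shows how local differential privacy and plausible deniability work together. Each respondent randomizes their own answer before it is collected, yet the population rate can still be estimated. Because P(yes | true) = 0.75 and P(yes | false) = 0.25, this particular protocol satisfies local differential privacy with ε = ln 3. The function names are illustrative.

```python
import random


def randomized_response(truth: bool, rng: random.Random) -> bool:
    """Heads: answer truthfully. Tails: flip again and answer 'yes' on heads.
    Any single 'yes' is deniable because it may have come from the second coin."""
    if rng.random() < 0.5:
        return truth
    return rng.random() < 0.5


def estimate_true_rate(responses: list[bool]) -> float:
    # P(yes) = 0.5 * pi + 0.25, so the true rate is pi = 2 * P(yes) - 0.5.
    observed = sum(responses) / len(responses)
    return 2 * observed - 0.5


rng = random.Random(42)
truths = [rng.random() < 0.3 for _ in range(100_000)]  # simulated 30% "yes" rate
responses = [randomized_response(t, rng) for t in truths]
print(round(estimate_true_rate(responses), 3))         # close to 0.3
```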

K-Anonymity and Group-Based Protection Models

K-anonymity ensures that each individual record becomes indistinguishable from at least k – 1 other records when considered against identifying attributes, preventing singling out of specific individuals without additional information. This approach groups records with similar quasi-identifying characteristics, creating ambiguity that protects individual privacy while maintaining analytical utility.

Implementation typically involves generalization techniques that replace specific values with broader categories—such as converting exact ages to age ranges—and suppression methods that remove data elements potentially enabling identification. The balance between generalization and suppression determines both privacy protection levels and analytical utility for intended purposes.
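Generalization and suppression can be sketched on a toy table, assuming age and ZIP code are the quasi-identifiers and diagnosis is the sensitive attribute. The 10-year bands and 3-digit ZIP prefixes are a hypothetical generalization scheme; k is the size of the smallest group of records that share the same generalized quasi-identifiers.

```python
from collections import Counter


def generalize(record: dict) -> tuple:
    """Hypothetical scheme: 10-year age bands and 3-digit ZIP prefixes."""
    low = record["age"] // 10 * 10
    return (f"{low}-{low + 9}", record["zip"][:3] + "**")


def k_of(records: list[dict]) -> int:
    """Size of the smallest equivalence class after generalization."""
    return min(Counter(generalize(r) for r in records).values())


def suppress_small_groups(records: list[dict], k: int) -> list[dict]:
    """Suppression: drop records whose generalized group has fewer than k members."""
    groups = Counter(generalize(r) for r in records)
    return [r for r in records if groups[generalize(r)] >= k]


people = [
    {"age": 34, "zip": "02139", "diagnosis": "flu"},
    {"age": 37, "zip": "02142", "diagnosis": "asthma"},
    {"age": 31, "zip": "02141", "diagnosis": "flu"},
    {"age": 52, "zip": "94107", "diagnosis": "diabetes"},
    {"age": 58, "zip": "94110", "diagnosis": "flu"},
    {"age": 71, "zip": "10001", "diagnosis": "asthma"},
]
print(k_of(people))                            # 1: the 71-year-old is unique
print(k_of(suppress_small_groups(people, 2)))  # 2 after suppressing that record
```

Coarser bands would raise k without dropping rows, at the cost of less precise ages. That is the generalization-versus-suppression balance described above.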

L-diversity extends k-anonymity by ensuring sufficient diversity in sensitive attributes within each anonymity group, preventing attribute disclosure attacks where group membership might reveal sensitive information. This enhancement addresses fundamental limitations of basic k-anonymity that arise when anonymity groups contain homogeneous sensitive attributes.
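The homogeneity problem is easy to see in code. The sketch below checks *distinct* l-diversity, the simplest variant (stricter variants include entropy l-diversity), on records that are already generalized. It takes `(quasi_identifier_tuple, sensitive_value)` pairs, an input shape assumed here for brevity.

```python
from collections import defaultdict


def distinct_l(records: list[tuple[tuple, str]]) -> int:
    """Smallest number of distinct sensitive values in any quasi-identifier group."""
    groups = defaultdict(set)
    for quasi_ids, sensitive in records:
        groups[quasi_ids].add(sensitive)
    return min(len(values) for values in groups.values())


table = [
    (("30-39", "021**"), "flu"),
    (("30-39", "021**"), "flu"),
    (("30-39", "021**"), "flu"),
    (("50-59", "941**"), "diabetes"),
    (("50-59", "941**"), "flu"),
    (("50-59", "941**"), "asthma"),
]
print(distinct_l(table))  # 1
```

The table is 3-anonymous, yet l is 1: anyone known to be in the first group must have the flu. That is exactly the attribute disclosure that l-diversity is meant to catch.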

T-closeness goes further than l-diversity by requiring that the distribution of a sensitive attribute within each anonymity group be within a distance t of its distribution across the whole dataset. This prevents inference attacks that exploit differences between a group's distribution and the population's to deduce sensitive information about specific individuals.
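A sketch of measuring t for a categorical sensitive attribute. It assumes every pair of distinct values is equally far apart; under that assumption the Earth Mover's Distance used in the t-closeness definition reduces to half the L1 (total variation) distance, which is what the code computes. Numeric or hierarchical attributes need a different ground distance.

```python
from collections import Counter, defaultdict


def distribution(values: list[str]) -> dict[str, float]:
    counts = Counter(values)
    return {v: c / len(values) for v, c in counts.items()}


def t_closeness(records: list[tuple[tuple, str]]) -> float:
    """Largest distance between any group's sensitive-value distribution and the
    whole table's, using total variation distance (equal ground distance)."""
    overall = distribution([s for _, s in records])
    groups = defaultdict(list)
    for quasi_ids, sensitive in records:
        groups[quasi_ids].append(sensitive)
    worst = 0.0
    for values in groups.values():
        local = distribution(values)
        dist = 0.5 * sum(abs(local.get(v, 0.0) - p) for v, p in overall.items())
        worst = max(worst, dist)
    return worst


table = [
    (("30-39", "021**"), "flu"), (("30-39", "021**"), "flu"),
    (("30-39", "021**"), "flu"), (("50-59", "941**"), "diabetes"),
    (("50-59", "941**"), "flu"), (("50-59", "941**"), "asthma"),
]
print(round(t_closeness(table), 3))  # 0.333
```

A table satisfies t-closeness for a chosen threshold t when this value is at most t; a group whose mix of diagnoses mirrors the whole table scores 0.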

What Are AI-Powered Data Masking and Advanced Techniques?

Artificial intelligence is revolutionizing data obfuscation through intelligent, adaptive systems that understand context, recognize patterns, and make sophisticated decisions about privacy protection requirements. AI-powered data masking represents a fundamental shift from static, rule-based approaches toward dynamic, learning-enabled systems that evolve with changing data landscapes and emerging threats.

1. Intelligent Pattern Recognition and Detection

Advanced machine-learning algorithms excel at identifying and categorizing sensitive data across diverse datasets by recognizing complex patterns indicative of personal information. These systems adapt to emerging data types and formats while continuously refining detection accuracy through iterative learning processes, identifying subtle indicators of sensitive information that traditional pattern-matching approaches might miss.

2. Contextual Awareness and Smart Masking

Contextual awareness enables AI-driven systems to differentiate between sensitive and non-sensitive information based on data relationships and usage contexts. This intelligence allows systems to apply masking strategies that preserve data utility while enhancing privacy protection, minimizing risks associated with both over-masking that reduces analytical value and under-masking that leaves sensitive information exposed.

3. Adaptive Anonymization Systems

Adaptive anonymization capabilities provide dynamic, real-time data masking that evolves with changing conditions and requirements. These systems apply varying levels of anonymization based on user roles and access permissions, adjust masking intensity dynamically to balance utility and privacy requirements, and ensure robust protection as usage patterns and threat landscapes shift over time.

4. Unstructured Data Protection

The integration of natural language processing and computer vision technologies expands AI-powered masking capabilities to address unstructured data protection challenges. NLP algorithms identify and mask personal information within free-text fields, handling complex linguistic patterns that traditional approaches cannot address effectively, while computer vision algorithms enable detection and obscuring of sensitive data within images and documents.

5. Automated Compliance Management

Compliance management with AI-powered masking tools supports the identification, anonymization, and reporting of sensitive data as required by privacy regulations and standards such as GDPR, HIPAA, and PCI DSS. These systems can generate audit trails and compliance reports and flag potential compliance issues early. This is especially useful for organizations subject to multiple regulatory frameworks, although the systems still require human oversight.
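For contrast with the ML-based detection described in this list, here is the kind of traditional rule-based scanner it is meant to improve on. It uses regular expressions plus a Luhn checksum to avoid flagging arbitrary long numbers as card numbers. The patterns are simplified assumptions covering only a few US-centric formats; they miss names, addresses, and anything that needs context to recognize.

```python
import re

# Simplified, illustrative patterns. Real detectors need many more, plus context.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")  # 13-19 digits, optional separators


def luhn_valid(number: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact(text: str) -> str:
    text = EMAIL.sub("[EMAIL]", text)
    text = US_SSN.sub("[SSN]", text)
    # Only redact digit runs that pass the Luhn check, to cut false positives.
    return CARD.sub(lambda m: "[CARD]" if luhn_valid(m.group()) else m.group(), text)


sample = ("Contact ana@example.com, SSN 123-45-6789, "
          "card 4111 1111 1111 1111, order 1234 5678 9012 3456.")
print(redact(sample))
# Contact [EMAIL], SSN [SSN], card [CARD], order 1234 5678 9012 3456.
```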

What Privacy-Preserving Technologies and Mathematical Frameworks Are Emerging?

Homomorphic Encryption and Computation on Encrypted Data

Fully homomorphic encryption enables arbitrary computations on encrypted data without requiring decryption, fundamentally changing how organizations can protect sensitive information while maintaining analytical capabilities. This breakthrough technology allows mathematical operations, machine-learning model execution, and complex analytical processes to be performed on ciphertexts, with results matching those of operations performed on unencrypted data when finally decrypted.

The practical applications extend across healthcare diagnostics, financial risk analysis, and consumer behavior prediction, where models never access actual data while providing accurate analytical results. Libraries such as SEAL-Python, TenSEAL, and HElib support encrypted vector and matrix operations, enabling developers to embed encrypted computation directly into processing pipelines while maintaining complete data confidentiality.
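The FHE libraries named above have their own APIs, which are not reproduced here. To show what "computing on ciphertexts" means at the smallest scale, the sketch below implements toy Paillier encryption instead. Paillier is only *additively* homomorphic (a partially homomorphic scheme, not FHE), and these keys are built from two small, well-known primes (the 10,000th and 100,000th primes), so they offer no security.

```python
import math
import secrets

# Toy Paillier keys. Real keys use primes of 1024 bits or more each.
P, Q = 104_729, 1_299_709
N = P * Q
N2 = N * N
assert math.gcd(N, (P - 1) * (Q - 1)) == 1
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P - 1, Q - 1)
MU = pow(LAM, -1, N)  # valid because the generator used below is g = N + 1


def encrypt(m: int) -> int:
    """Encrypt 0 <= m < N with fresh randomness r coprime to N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m, N2) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    x = pow(c, LAM, N2)
    return ((x - 1) // N) * MU % N


def add_encrypted(c1: int, c2: int) -> int:
    # Multiplying ciphertexts adds the hidden plaintexts (mod N).
    return (c1 * c2) % N2


def scale_encrypted(c: int, k: int) -> int:
    # Raising a ciphertext to k multiplies the hidden plaintext by k (mod N).
    return pow(c, k, N2)


total = add_encrypted(encrypt(20), encrypt(22))  # computed without decrypting
print(decrypt(total))                            # 42
```

A server holding only ciphertexts could compute `total` without ever seeing 20 or 22; only the private-key holder can read the result. FHE schemes extend this to both addition and multiplication, which is what makes arbitrary computation possible.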

Homomorphic encryption also changes the trust model for cloud computation: encrypted data can be uploaded, processed, and returned to clients without ever being decrypted on cloud infrastructure. This removes the need to expose sensitive data to third-party service providers while still drawing on cloud-scale computational resources.

Performance has improved considerably through better algorithms, optimized implementations, and emerging hardware acceleration, bringing homomorphic encryption to practical deployment in some applications. It remains orders of magnitude slower than computation on plaintext, however, so it is best suited to workloads where confidentiality justifies the added cost.

Synthetic Data Generation and Advanced Anonymization

Synthetic data generation creates artificial datasets that approximate the statistical properties of the original data and are designed to minimize the inclusion of actual personal information, enabling safer data sharing and analysis with reduced risk to individual privacy when carefully validated. Advanced generative models—including generative adversarial networks and variational autoencoders—learn complex data patterns to generate realistic synthetic alternatives that preserve analytical utility.

Deep-learning-based synthetic data generation employs sophisticated neural-network architectures to capture complex relationships among multiple data features, enabling generation of high-quality synthetic datasets that maintain multi-dimensional statistical relationships. These systems learn distributions, correlations, and patterns within original datasets while generating new data points that exhibit similar characteristics without replicating actual records.
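Deep generative models are beyond a short example, but the underlying idea (learn the data's statistics, then sample new records from them) can be shown with a much simpler stand-in: fitting a bivariate normal distribution to two numeric columns. This preserves only means, standard deviations, and linear correlation, and by itself it provides no formal privacy guarantee. The function names and the simulated age/income data are assumptions for illustration.

```python
import math
import random
import statistics


def fit(xs: list[float], ys: list[float]) -> tuple:
    """Estimate means, standard deviations, and the correlation of two columns."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sx, sy = statistics.pstdev(xs), statistics.pstdev(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)
    return mx, my, sx, sy, cov / (sx * sy)


def sample(params: tuple, n: int, rng: random.Random) -> list[tuple]:
    """Draw synthetic (x, y) rows from a bivariate normal with the fitted parameters."""
    mx, my, sx, sy, rho = params
    rows = []
    for _ in range(n):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        x = mx + sx * z1
        y = my + sy * (rho * z1 + math.sqrt(max(0.0, 1 - rho * rho)) * z2)
        rows.append((x, y))
    return rows


rng = random.Random(3)
ages = [rng.uniform(20, 65) for _ in range(5000)]
incomes = [1000 * a + rng.gauss(0, 8000) for a in ages]  # simulated "real" data
params = fit(ages, incomes)
synthetic = sample(params, 5000, random.Random(4))
synth_params = fit([x for x, _ in synthetic], [y for _, y in synthetic])
print(round(params[4], 2), round(synth_params[4], 2))     # similar correlations
```

Comparing the fitted statistics of real and synthetic data, as in the last line, is the most basic form of the fidelity checks that quality-evaluation frameworks formalize.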

Integrating differential privacy with synthetic data generation provides mathematical privacy guarantees while maintaining data utility for analytical purposes. Differential-privacy mechanisms applied during generation bound how much any single individual's record can influence the synthetic output. This limits what that output reveals about whether a given person was in the training data, while preserving essential statistical characteristics.

Quality-evaluation frameworks assess both privacy protection and analytical utility characteristics of synthetic data through sophisticated metrics measuring statistical similarity, privacy protection levels, and preservation of analytical utility for intended use cases. Advanced evaluation methodologies utilize multiple independent metrics to provide comprehensive quality assessment across different dimensions of synthetic data effectiveness.

What Role Does Airbyte Play in Modern Data Protection Strategies?

Airbyte provides comprehensive data-protection solutions that integrate advanced obfuscation techniques into modern data-integration workflows, enabling organizations to maintain security and compliance while leveraging their data assets for competitive advantage. The platform combines robust encryption, role-based access controls, and automated privacy-protection features to address the complex security requirements of contemporary data environments.

The platform offers more than 600 pre-built connectors with built-in security features, including encryption in transit via TLS (HTTPS), so data remains protected while moving between systems. Industry-standard encryption protects data both in transit and at rest, while role-based access control provides granular security management tailored to organizational hierarchies and data-sensitivity levels.

Airbyte adheres to regulatory requirements such as GDPR and HIPAA and to security standards such as SOC 2 and ISO 27001, helping ensure that its data-handling practices meet compliance standards across multiple jurisdictions. The platform's compliance-first approach enables organizations to implement data-integration strategies that support business objectives while maintaining regulatory adherence and risk-management requirements.

What Are the Implementation Challenges and Best Practices?

Balancing Security and Utility Requirements

The fundamental challenge in data-obfuscation implementation lies in achieving optimal balance between privacy-protection strength and analytical-utility preservation, as these objectives often create conflicting requirements that must be carefully managed through systematic evaluation and optimization processes.

Quantifying the security-utility trade-off requires sophisticated evaluation frameworks that measure both privacy-protection effectiveness and analytical-utility preservation across diverse data types and usage scenarios. Organizations must develop custom evaluation criteria reflecting their specific security requirements, business needs, and stakeholder expectations while maintaining flexibility for evolving requirements.
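Even a very simple evaluation can put numbers on the trade-off. The sketch below uses a single quasi-identifier (age) and sweeps the width of the generalization bands. It records k, the smallest group size, as a privacy proxy and the mean distance between each true age and its band's midpoint as a utility-loss proxy. Both metrics and the simulated ages are assumptions chosen for illustration, not a complete evaluation framework.

```python
import random
from collections import Counter


def evaluate_width(ages: list[int], width: int) -> tuple[int, float]:
    """Return (k, information loss) for ages generalized into `width`-year bands."""
    bands = [a // width * width for a in ages]
    k = min(Counter(bands).values())
    # Utility proxy: mean distance between each true age and its band's midpoint.
    loss = sum(abs(a - (b + (width - 1) / 2)) for a, b in zip(ages, bands)) / len(ages)
    return k, loss


rng = random.Random(7)
ages = [rng.randint(18, 90) for _ in range(2000)]
for width in (5, 10, 20):
    k, loss = evaluate_width(ages, width)
    print(f"width={width:>2}  k={k:>4}  mean error={loss:.2f} years")
```

Wider bands raise k (more privacy) and raise the mean error (less utility). Choosing a point on that curve is the business decision stakeholders need to understand.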

Dynamic optimization approaches utilize machine-learning algorithms to monitor usage patterns and adjust protection levels automatically, but these approaches introduce additional complexity and potential vulnerabilities that must be carefully managed. The optimization process must consider multiple factors—including data-sensitivity levels, user-authorization requirements, and current threat intelligence—while maintaining system performance and reliability.

Stakeholder alignment requires comprehensive communication and education efforts ensuring that business users understand implications of different obfuscation choices and their impact on analytical capabilities. Technical teams must provide clear explanations of protection levels and utility impacts to enable informed decision-making by business stakeholders who depend on data for critical operations.

Computational and Operational Considerations

Large-scale obfuscation implementations create significant computational demands that impact system performance and operational costs, particularly for organizations processing massive datasets or requiring real-time obfuscation capabilities. Advanced algorithms require substantial computational resources that may strain existing infrastructure and necessitate careful resource planning and optimization.

Memory-utilization challenges arise when obfuscation systems must process entire datasets in memory to maintain referential integrity or apply complex transformation algorithms. Large datasets may exceed available system memory, requiring sophisticated streaming-processing architectures or distributed computing approaches that introduce additional complexity and resource requirements.
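One common way to avoid holding a dataset or a mapping table in memory is keyed, deterministic pseudonymization applied row by row. Because the pseudonym is computed from a secret key rather than looked up, the same identifier gets the same pseudonym across files and batches, which preserves referential integrity in a streaming pipeline. `KEY`, `pseudonym`, and `mask_stream` are hypothetical names. Note that output like this is pseudonymized, not anonymized: whoever holds the key can re-link records.

```python
import csv
import hashlib
import hmac
from typing import Iterable, Iterator

# Hypothetical key; in practice, load it from a secrets manager.
KEY = b"example-key-not-for-production"


def pseudonym(value: str) -> str:
    """Keyed, deterministic pseudonym: same input and key give the same output."""
    return hmac.new(KEY, value.encode(), hashlib.sha256).hexdigest()[:16]


def mask_stream(lines: Iterable[str], columns: set[str]) -> Iterator[dict]:
    """Yield masked rows one at a time; memory use does not grow with file size."""
    for row in csv.DictReader(lines):
        yield {k: pseudonym(v) if k in columns else v for k, v in row.items()}


orders = ["order_id,customer_id,amount", "1,C42,10.00", "2,C7,5.50"]
payments = ["payment_id,customer_id", "9,C42"]
masked_orders = list(mask_stream(orders, {"customer_id"}))
masked_payments = list(mask_stream(payments, {"customer_id"}))
# C42 maps to the same pseudonym in both files, so the tables still join.
```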

Storage overhead from obfuscation implementations can be substantial, particularly for techniques requiring multiple data versions, audit trails, or mapping information between original and obfuscated data. Organizations must consider long-term storage cost implications when evaluating obfuscation strategies, especially for high-volume data environments with extended retention requirements.

Processing latency introduced by obfuscation mechanisms can impact real-time applications and user experience, particularly for systems requiring immediate data access or high-throughput processing capabilities. Organizations must carefully evaluate latency requirements against obfuscation benefits when designing system architectures for performance-critical applications.

Regulatory Compliance and Legal Framework Navigation

Navigating complex regulatory requirements across multiple jurisdictions creates significant challenges for organizations implementing comprehensive obfuscation strategies, as different regulations may have conflicting requirements for data protection, retention, access, and cross-border transfer that must be simultaneously addressed.

Pseudonymization versus anonymization distinctions in various regulatory frameworks create complex compliance challenges, as different jurisdictions may classify identical obfuscation techniques differently. Organizations must carefully evaluate whether their techniques meet anonymization standards or merely provide pseudonymization protection, as this classification significantly impacts regulatory compliance requirements and legal obligations.

Cross-border data-transfer regulations add complexity for organizations operating in multiple jurisdictions, as obfuscation techniques satisfying requirements in one jurisdiction may be insufficient for another. Advanced obfuscation systems must provide configurable protection levels meeting varying regulatory requirements across different geographic regions while maintaining operational consistency.

Audit and compliance-verification requirements necessitate comprehensive documentation and testing capabilities demonstrating obfuscation effectiveness to regulatory authorities. Organizations must invest in compliance-management capabilities that can demonstrate regulatory adherence across all obfuscation implementations while providing detailed audit trails and verification mechanisms for regulatory inspection.

Conclusion

Data obfuscation is no longer just a technical safeguard—it’s a strategic necessity. As data volumes grow, regulations tighten, and breaches become costlier, organizations must adopt obfuscation frameworks that not only protect sensitive information but also preserve its value for innovation, analytics, and customer trust. By combining traditional methods with emerging technologies and AI-driven approaches, businesses can build a resilient data-protection strategy that balances compliance, security, and competitive advantage.

Frequently Asked Questions

What is the difference between data obfuscation and data encryption?

Encryption is one obfuscation technique among several. Obfuscation in the broader sense, through masking, tokenization, or anonymization, typically transforms data so that it stays usable for testing or analysis without exposing the original values. Encryption converts data into ciphertext that is unreadable without a key; it offers strong, reversible protection, but the data usually must be decrypted before it can be analyzed.

How does differential privacy differ from traditional anonymization methods?

Differential privacy adds mathematically calibrated noise to query results so that the output barely depends on whether any single individual's data is included, giving a formal, quantifiable guarantee through the privacy parameter ε. Traditional anonymization modifies or masks the data itself; it offers no comparable provable bound and can be vulnerable to re-identification through linkage with other datasets.

What are the main challenges in implementing data obfuscation at enterprise scale?

Enterprise-scale data obfuscation faces challenges like computational overhead, maintaining data usability, regulatory compliance, performance impacts on real-time systems, storage overhead, and consistently protecting diverse data types. Integration with existing systems and ongoing maintenance add further complexity.

How do AI-powered obfuscation systems improve upon traditional approaches?

AI-powered systems provide context-aware protection that automatically identifies sensitive information across diverse datasets and adapts to data characteristics. They can offer dynamic risk adjustment, predictive threat analysis, and automated compliance management. Unlike static rule-based approaches, AI systems can be retrained as data and threats change, reducing manual configuration overhead, although their output still needs human review.

What should organizations consider when selecting obfuscation techniques for their specific needs?

Organizations should evaluate data sensitivity levels, intended use cases, regulatory requirements, and performance constraints. Key factors include reversibility requirements, computational overhead, integration complexity, and stakeholder access needs. Consider trade-offs between privacy protection and analytical utility, long-term scalability, and alignment with organizational risk tolerance and compliance obligations.
