What Is Data Pipeline Automation: Techniques & Tools


✨ AI Generated Summary

Automated data pipelines streamline the extraction, transformation, and loading of large, diverse datasets, reducing processing time and errors while improving data quality and consistency. Key points include:

- Use of AI and machine learning for predictive maintenance, anomaly detection, and self-optimization.

- Support for various pipeline types (ETL, ELT, batch, real-time) and deployment models (on-premises, cloud-native).

- Enhanced data governance through schema enforcement, data lineage tracking, and automated remediation.

- Popular tools like Airbyte, Google Cloud Dataflow, and Apache Airflow facilitate scalable, reliable, and customizable pipeline automation.

Overall, automation transforms data infrastructure into a competitive advantage by enabling faster, more accurate decision-making and operational efficiency.

Data pipeline automation has revolutionized how organizations handle their growing data volumes, with many companies processing terabytes of information daily across multiple systems. The challenge lies not just in managing this data, but in transforming it from scattered, inconsistent formats into actionable business intelligence. Manual data processing creates bottlenecks that slow decision-making and introduce costly errors, making automation essential for competitive advantage.

Modern data pipeline automation leverages artificial intelligence, real-time processing capabilities, and cloud-native architectures to create self-optimizing systems that adapt to changing business needs. Companies that implement automated pipelines can cut data processing time from weeks to hours while improving data quality and consistency across their operations.

Various tools support building and automating different data pipelines, including batch, ELT, ETL, and streaming. Among these, ELT pipeline tools are projected to lead the market by 2031 due to their ability to handle large datasets.

By selecting a data pipeline tool that suits your use case, you can configure and automate your pipeline to simplify data processing. With automation in place, you can focus on using the data rather than managing complex data flows.

In this article, you'll learn different tools and techniques for data pipeline automation and explore the significance and benefits of automating data pipelines.

What Is an Automated Data Pipeline?

An automated data pipeline is a configured set of processes that moves data from various sources and prepares it for use. It handles the extraction, transformation, and loading (ETL/ELT) of data for detailed analysis or other use cases. By automating these steps, the pipeline helps maintain consistency and accuracy throughout the workflow, giving you high-quality data for accurate decision-making.

Besides these capabilities, you can streamline repetitive tasks, minimize errors, and reduce human effort with an automated data pipeline. This enables you to handle large volumes of data smoothly.

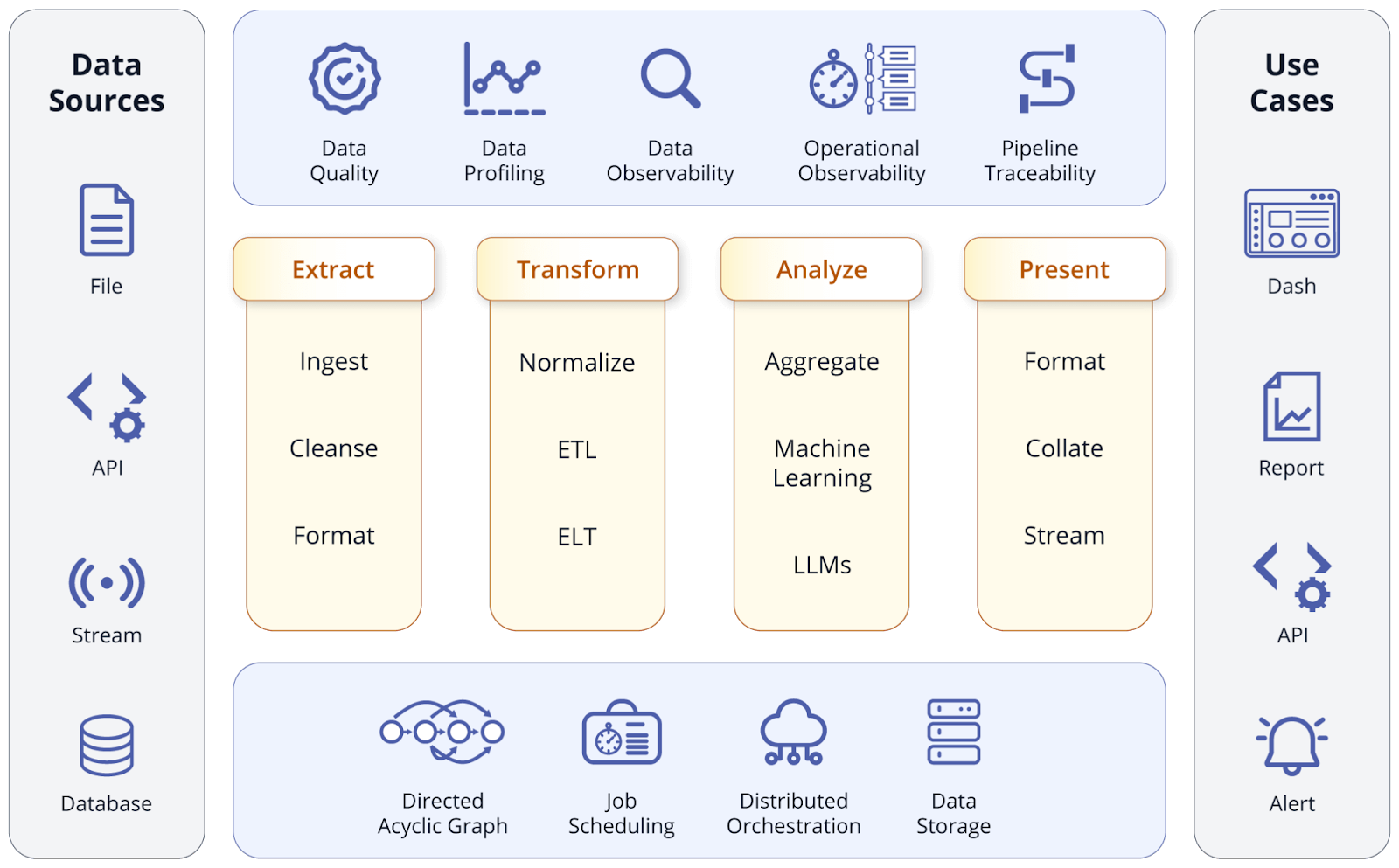

The key phases of data pipeline automation involve:

- Data Collection: Raw data is gathered from diverse sources, including databases, APIs, CRMs, or enterprise applications.

- Data Ingestion: The collected data is brought into the pipeline and loaded into a staging area for further processing.

- Data Transformation: The ingested data is cleansed, normalized, and structured for analysis. The transformation process involves tasks like removing duplicates, handling missing values, and standardizing formats. Any business logic required for analysis is applied during this phase.

- Orchestration and Workflow Automation: Data workflows are scheduled, monitored, and managed using automation tools like Prefect or Dagster. This step ensures that each task is executed in the correct order and on time.

- Data Storage: The processed data is stored in the preferred destination, such as a data warehouse, data lake, or any other database. You can further integrate data systems with analytics tools for detailed analysis.

- Data Presentation: Once analyzed, the insights are visualized through dashboards or reporting tools to make strategic business decisions.

- Monitoring and Logging: Monitoring and logging mechanisms are implemented to track data flow, identify errors, and maintain pipeline health.

By automating these phases, you can efficiently handle voluminous datasets, minimize errors, and enhance decision-making.
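To make the phases concrete, here is a minimal sketch in plain Python. It assumes hypothetical in-memory records in place of real connectors, a Python list as the staging area, and a dictionary standing in for the warehouse; the function names are illustrative, not any tool's API.

```python
import logging
from typing import Iterable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")  # monitoring and logging phase

# Hypothetical raw records from two sources (stand-ins for a CRM and an API).
RAW_SOURCES = {
    "crm": [{"id": "1", "email": " Ana@Example.com "}, {"id": "2", "email": None}],
    "api": [{"id": "1", "email": "ana@example.com"}, {"id": "3", "email": "bo@example.com"}],
}


def collect() -> dict[str, list[dict]]:
    """Data collection: gather raw records from every source."""
    return RAW_SOURCES


def ingest(sources: dict[str, list[dict]]) -> list[dict]:
    """Data ingestion: land all records in one staging list, tagged with their source."""
    staging = [{**r, "_source": name} for name, records in sources.items() for r in records]
    log.info("staged %d records", len(staging))
    return staging


def transform(staging: Iterable[dict]) -> list[dict]:
    """Data transformation: drop missing values, standardize formats, remove duplicates."""
    seen, clean = set(), []
    for r in staging:
        if not r.get("email"):
            continue  # missing value: skip the record
        email = r["email"].strip().lower()  # standardize format
        if (r["id"], email) in seen:
            continue  # duplicate across sources
        seen.add((r["id"], email))
        clean.append({"id": int(r["id"]), "email": email})
    return clean


def store(rows: list[dict], warehouse: dict[int, dict]) -> None:
    """Data storage: upsert rows into a dict standing in for a warehouse table."""
    for row in rows:
        warehouse[row["id"]] = row
    log.info("warehouse holds %d rows", len(warehouse))


def run_pipeline() -> dict[int, dict]:
    """Orchestration: execute the phases in order."""
    warehouse: dict[int, dict] = {}
    store(transform(ingest(collect())), warehouse)
    return warehouse
```

Calling `run_pipeline()` returns rows for IDs 1 and 3: the record with a missing email is dropped and the duplicate for ID 1 is removed. In practice, an orchestrator such as Prefect or Dagster would schedule a function like this and handle retries.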

How Are Automated Data Pipelines Classified?

To better understand the various approaches for data pipeline automation, it is important to explore their classification based on key factors like architecture, functionality, and integration capability. This will provide a clear perspective on which pipeline is best for specific needs.

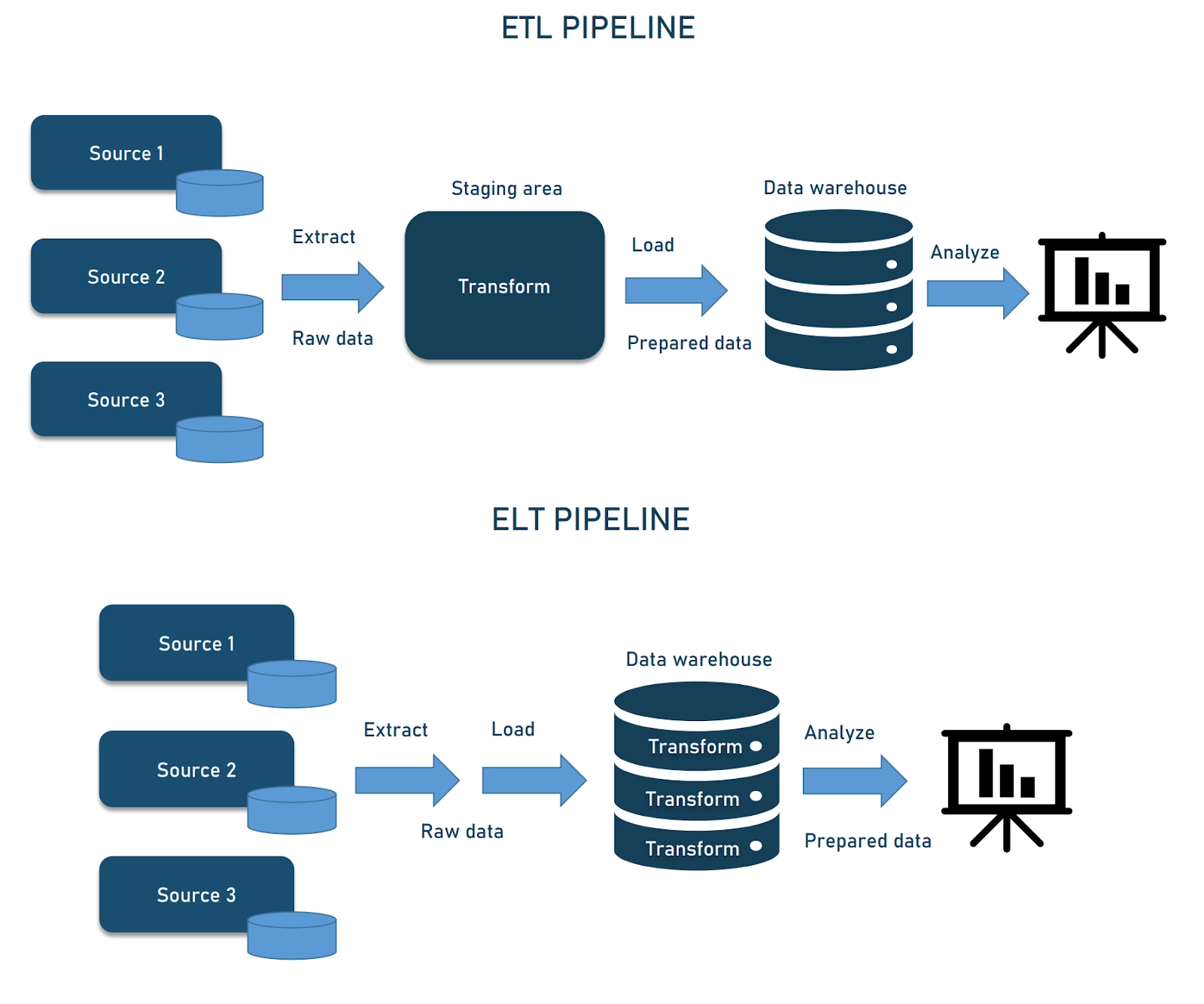

ETL vs. ELT Pipelines

ETL (Extract, Transform, Load) pipelines involve extracting data from diverse sources, transforming it into a standardized format, and loading it into a target system. These pipelines are commonly used when transformations are necessary before data storage.

In contrast, ELT (Extract, Load, Transform) pipelines allow you to collect data from the source, transfer it to a destination, and then perform transformations when required. This approach allows you to leverage modern data-warehouse capabilities, enabling faster transformations and real-time analytics while reducing the source-system load.
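The practical difference is where the transformation runs. In the sketch below, Python's built-in `sqlite3` module stands in for a data warehouse, and the order rows and table names are hypothetical. Both paths end with the same clean table, but the ELT path keeps the raw rows in the destination and transforms them there with SQL.

```python
import sqlite3

# Hypothetical source rows: (order_id, amount as text, currency)
SOURCE_ROWS = [(1, "10.50", "usd"), (2, "7.25", "USD"), (3, "", "usd")]


def etl(conn: sqlite3.Connection) -> None:
    """ETL: transform in the pipeline, then load only the cleaned rows."""
    cleaned = [
        (oid, float(amount), currency.upper())
        for oid, amount, currency in SOURCE_ROWS
        if amount  # drop rows with a missing amount before loading
    ]
    conn.execute("CREATE TABLE orders_etl (order_id INTEGER, amount REAL, currency TEXT)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", cleaned)


def elt(conn: sqlite3.Connection) -> None:
    """ELT: load raw rows as-is, then transform inside the destination with SQL."""
    conn.execute("CREATE TABLE orders_raw (order_id INTEGER, amount TEXT, currency TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", SOURCE_ROWS)
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT order_id, CAST(amount AS REAL) AS amount, UPPER(currency) AS currency
        FROM orders_raw
        WHERE amount <> ''
        """
    )


conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)
```

Because `orders_raw` survives in the ELT case, the transformation can be rerun or changed later without extracting from the source again.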

Batch vs. Real-Time Pipelines

Batch pipelines enable you to process data in large chunks at scheduled intervals. Such pipelines are suitable for historical data analysis and situations where you can afford delays in delivering insights.

Real-time or streaming data pipelines, on the other hand, help you handle data continuously as it is generated. If your use case requires immediate actions like monitoring financial markets, real-time pipelines are the right choice. Modern streaming architectures use platforms like Apache Kafka and AWS Kinesis to enable event-driven processing that responds to data changes within milliseconds, supporting edge computing scenarios where local analytics drive immediate operational decisions.
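The contrast comes down to when results are produced. In this sketch, a plain Python list stands in for incoming events; in a real deployment the iterable would be a consumer reading from a platform such as Kafka or Kinesis, which is not shown here. The trade amounts are hypothetical.

```python
from typing import Iterable, Iterator


def batch_total(events: list[float]) -> float:
    """Batch: wait until the whole chunk is available, then process it at once."""
    return sum(events)


def streaming_totals(events: Iterable[float]) -> Iterator[float]:
    """Streaming: update the result as each event arrives and emit it immediately."""
    running = 0.0
    for amount in events:
        running += amount
        yield running  # downstream consumers see a fresh value per event


# Hypothetical trade amounts arriving one by one.
trades = [100.0, -40.0, 25.0]
```

The batch function yields one answer after all three trades, while the generator emits an updated total after each trade, which is what lets a streaming pipeline react to an individual event.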

On-Premises vs. Cloud-Native Pipelines

With on-premises pipelines, your organization can store and process data within your physical data centers. This setup offers more control over the data but can be resource-intensive in terms of both time and cost.

Conversely, cloud-native pipelines are designed to run entirely in cloud platforms like AWS, Google Cloud, or Microsoft Azure. The main benefit is that cloud-native pipelines offer scalability and easy integration with other cloud-based tools. You do not have to worry about managing physical infrastructure, as the cloud provider handles that. Cloud-native pipelines are a great option if you are looking for cost-efficiency and want to minimize overhead. Modern serverless architectures within cloud-native pipelines automatically scale resources based on workload demands, eliminating the need for manual capacity planning.

How Can You Implement Automated Data Quality Enforcement and Proactive Governance?

Modern data pipeline automation extends beyond basic data movement to include comprehensive quality enforcement and governance frameworks that ensure data integrity throughout the entire workflow. This approach transforms traditional reactive data management into proactive systems that prevent quality issues before they impact business operations.

Schema Enforcement and Validation

Automated schema enforcement monitors data structure changes in real time, flagging unexpected modifications before they propagate through your pipeline. Some tools also integrate machine learning models that analyze historical data patterns to identify anomalies in data volume, distribution, or freshness that might indicate quality issues. When schema drift occurs, automated systems can quarantine affected data streams while triggering alerts to engineering teams, preventing downstream analytics from receiving corrupted information.

Modern validation systems extend beyond basic type checking to include business rule enforcement, such as ensuring customer IDs match existing records or verifying that financial transactions fall within expected ranges. These validations occur at multiple pipeline stages, creating checkpoints that maintain data integrity from source to destination.
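A validation checkpoint of this kind can be sketched as a schema check followed by business-rule checks, with failing records quarantined together with a reason. The expected schema, the set of known customer IDs, and the amount limit are hypothetical; a real system would look customers up in existing records and raise an alert on quarantine.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical contract for a transactions feed.
EXPECTED_SCHEMA = {"txn_id": str, "customer_id": int, "amount": float}
KNOWN_CUSTOMERS = {101, 102}  # stand-in for a lookup against existing records
MAX_AMOUNT = 10_000.0


@dataclass
class ValidationResult:
    valid: list = field(default_factory=list)
    quarantined: list = field(default_factory=list)  # (record, reason) pairs


def check_schema(record: dict) -> Optional[str]:
    """Return a reason if the record's columns or types drift from the contract."""
    drift = set(record) ^ set(EXPECTED_SCHEMA)
    if drift:
        return f"schema drift: columns {sorted(drift)}"
    for column, expected_type in EXPECTED_SCHEMA.items():
        if not isinstance(record[column], expected_type):
            return f"type mismatch on {column!r}"
    return None


def check_business_rules(record: dict) -> Optional[str]:
    """Return a reason if the record breaks a business rule."""
    if record["customer_id"] not in KNOWN_CUSTOMERS:
        return "unknown customer_id"
    if not 0 < record["amount"] <= MAX_AMOUNT:
        return "amount out of expected range"
    return None


def validate(records: list) -> ValidationResult:
    """Checkpoint: pass valid records on, quarantine the rest with a reason."""
    result = ValidationResult()
    for record in records:
        reason = check_schema(record) or check_business_rules(record)
        if reason:
            result.quarantined.append((record, reason))
        else:
            result.valid.append(record)
    return result
```

Running the same `validate` function at several stages gives the source-to-destination checkpoints described above.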

Proactive Anomaly Detection

Machine learning-powered anomaly detection systems continuously analyze data flows to identify patterns that deviate from established baselines. These systems learn from historical data to recognize normal operational patterns, automatically flagging outliers that might indicate data quality issues, system failures, or unusual business events requiring attention.

Advanced anomaly detection goes beyond simple statistical thresholds to understand contextual relationships within your data. For example, the system might recognize that customer order volumes typically spike during promotional periods but flag similar spikes occurring outside expected timeframes as potential data quality issues requiring investigation.
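The promotion example can be illustrated with a context-aware baseline. Note that this sketch swaps the machine learning models described above for a much simpler statistical technique, a z-score against per-context history; the order counts and the threshold of 3 standard deviations are hypothetical.

```python
from statistics import mean, stdev

# Hypothetical daily order counts, split by context so promotions get their own baseline.
HISTORY = {
    "normal": [980, 1010, 995, 1005, 1020, 990],
    "promotion": [2400, 2550, 2500, 2450],
}


def is_anomalous(value: float, context: str, threshold: float = 3.0) -> bool:
    """Flag a value whose z-score against its context's baseline exceeds the threshold."""
    baseline = HISTORY[context]
    mu, sigma = mean(baseline), stdev(baseline)
    return abs(value - mu) / sigma > threshold
```

With these numbers, 2,500 orders is unremarkable on a promotion day but is flagged on a normal day, which mirrors the contextual behavior described in the paragraph above.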

Automated Remediation and Error Handling

Self-healing capabilities enable pipelines to automatically respond to common data quality issues without manual intervention. When the system detects problems like missing values or format inconsistencies, automated remediation processes can apply predefined correction rules, reroute problematic data to quarantine areas, or trigger alternative processing workflows that maintain pipeline operations while issues are resolved.

These systems maintain detailed logs of all automated corrections, providing audit trails that support compliance requirements while enabling continuous improvement of data quality rules. Automated escalation procedures ensure that issues requiring human attention are promptly routed to appropriate team members with sufficient context for rapid resolution.
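A self-healing step can be sketched as a set of predefined correction rules that log every change to an audit trail and quarantine anything they cannot fix. The two rules here (normalizing DD/MM/YYYY dates to ISO 8601 and defaulting a missing currency to USD) and the record layout are hypothetical examples, not rules from any specific product.

```python
from datetime import datetime, timezone
from typing import Optional

audit_log: list = []   # every automated correction, for compliance review
quarantine: list = []  # records that need a human


def normalize_date(value: object) -> Optional[str]:
    """Correction rule: accept ISO or DD/MM/YYYY dates and return ISO 8601, else None."""
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):
        try:
            return datetime.strptime(str(value), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def record_fix(record_id: str, column: str, old: object, new: object) -> None:
    """Append an audit-trail entry describing one automated correction."""
    audit_log.append({
        "record": record_id, "column": column, "old": old, "new": new,
        "at": datetime.now(timezone.utc).isoformat(),
    })


def remediate(record: dict) -> Optional[dict]:
    """Apply predefined rules; quarantine records that cannot be fixed automatically."""
    iso = normalize_date(record.get("date"))
    if iso is None:
        # Escalation point: a real system would also notify the owning team here.
        quarantine.append({**record, "_reason": "unparseable date"})
        return None
    fixed = dict(record)
    if iso != record["date"]:
        record_fix(record["id"], "date", record["date"], iso)
        fixed["date"] = iso
    if not fixed.get("currency"):
        fixed["currency"] = "USD"  # hypothetical default for a missing value
        record_fix(record["id"], "currency", record.get("currency"), "USD")
    return fixed
```

Checking the unfixable condition first keeps the audit trail free of corrections applied to records that end up quarantined anyway.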

Data Lineage and Impact Analysis

Comprehensive data lineage tracking automatically documents the complete journey of data elements through your pipeline, creating detailed maps that show how source data transforms into final analytics outputs. This documentation proves invaluable for troubleshooting quality issues, supporting compliance audits, and understanding the potential impact of upstream changes on downstream business processes.

When quality issues are detected, lineage information enables rapid impact assessment, showing exactly which reports, dashboards, or automated processes might be affected. This visibility allows teams to prioritize remediation efforts and communicate potential impacts to business stakeholders before problems affect critical operations.
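Impact assessment on a lineage graph is essentially a graph traversal. The sketch below walks a hypothetical lineage map breadth-first to list every asset downstream of a problem source; the asset names are invented for illustration.

```python
from collections import deque

# Hypothetical lineage graph: each asset lists the assets built directly from it.
LINEAGE = {
    "crm.customers": ["staging.customers"],
    "shop.orders": ["staging.orders"],
    "staging.customers": ["mart.customer_ltv"],
    "staging.orders": ["mart.customer_ltv", "mart.daily_revenue"],
    "mart.customer_ltv": ["dashboard.retention"],
    "mart.daily_revenue": ["dashboard.finance", "report.weekly_kpis"],
}


def downstream_impact(asset: str) -> list:
    """Breadth-first walk of the lineage graph listing every asset affected by `asset`."""
    seen = set()
    queue = deque([asset])
    while queue:
        node = queue.popleft()
        for child in LINEAGE.get(node, []):
            if child not in seen:  # each asset is reported once, even with shared parents
                seen.add(child)
                queue.append(child)
    return sorted(seen)
```

For example, `downstream_impact("shop.orders")` reaches both dashboards and the weekly KPI report, which is the list a team would use to prioritize remediation and notify stakeholders.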

What Role Does AI-Driven Dynamic Pipeline Orchestration Play in Modern Automation?

Artificial intelligence is increasingly becoming a foundational component of data pipeline automation, enabling systems that self-optimize, predict failures, and adapt to changing business requirements with less manual intervention. This represents a shift from static, rule-based pipelines to intelligent systems that continuously improve their performance.

Predictive Maintenance and Failure Prevention

AI-powered predictive maintenance analyzes historical pipeline performance data, system metrics, and external factors to anticipate potential failures before they occur. Machine learning models identify patterns that precede common issues such as resource bottlenecks, connection timeouts, or data quality degradation, enabling proactive interventions that prevent pipeline disruptions.

These systems extend beyond simple threshold monitoring to understand complex relationships between system components, workload patterns, and external dependencies. For example, predictive models might recognize that certain API sources become unreliable during specific time periods and automatically adjust retry logic or switch to alternative data sources to maintain pipeline reliability.
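The sketch below shows only the actions such a system might take, not the prediction itself: retrying an unreliable source with exponential backoff and then switching to an alternative source. The retry count, base delay, source functions, and `SourceUnavailable` exception are hypothetical; in the scenario above, a predictive model would be the component tuning these values.

```python
import time
from typing import Callable, Sequence


class SourceUnavailable(Exception):
    """Raised by a (hypothetical) source when a fetch fails transiently."""


def fetch_with_fallback(
    sources: Sequence[Callable[[], list]],
    retries: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> list:
    """Try each source in order, retrying with exponential backoff before falling back."""
    for fetch in sources:
        for attempt in range(retries):
            try:
                return fetch()
            except SourceUnavailable:
                if attempt < retries - 1:
                    sleep(base_delay * 2 ** attempt)  # 0.1 s, 0.2 s, 0.4 s, ...
    raise SourceUnavailable("all sources failed")
```

The `sleep` parameter is injectable so the backoff schedule can be observed in tests without actually waiting.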

Advanced predictive maintenance also optimizes resource allocation by forecasting compute and storage requirements based on historical patterns and upcoming business events. This capability ensures adequate resources are available during peak processing periods while avoiding over-provisioning during low-demand intervals.
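As a rough illustration of forecast-driven provisioning, the sketch below uses a moving average as a deliberately simple stand-in for the forecasting models described above. The data volumes, per-worker capacity, headroom factor, worker bounds, and event multiplier are all hypothetical values.

```python
import math


def forecast_next(volumes: list, window: int = 3, event_multiplier: float = 1.0) -> float:
    """Naive forecast: mean of the last `window` periods, scaled for a known upcoming event."""
    recent = volumes[-window:]
    return sum(recent) / len(recent) * event_multiplier


def workers_needed(forecast_gb: float, gb_per_worker: float = 50.0, headroom: float = 1.2,
                   min_workers: int = 1, max_workers: int = 20) -> int:
    """Provision for the forecast plus headroom, clamped to fixed bounds."""
    raw = math.ceil(forecast_gb * headroom / gb_per_worker)
    return max(min_workers, min(max_workers, raw))
```

For daily volumes of 100, 200, 300, and 400 GB, the forecast is 300 GB and the sketch provisions 8 workers; the lower and upper bounds prevent both under-provisioning on quiet days and runaway costs.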

Generative AI for Pipeline Development

Generative AI tools can now automate portions of pipeline development, generating code for common ETL tasks and suggesting improvements to existing workflows. These systems can interpret business requirements expressed in natural language and generate candidate pipeline configurations, transformation logic, and error-handling procedures that would traditionally require extensive manual coding.

AI-driven code generation extends to connector development, where systems can analyze API documentation and sample data to automatically create integration logic for new data sources. This capability dramatically reduces the time required to onboard new data sources while ensuring consistent quality and error handling across all pipeline components.

Self-Optimizing Performance Management

Machine learning algorithms continuously monitor pipeline performance metrics to identify optimization opportunities and automatically implement improvements. These systems analyze factors such as data volume patterns, transformation complexity, and resource utilization to dynamically adjust processing parameters for optimal performance.

Self-optimizing systems can automatically repartition data processing tasks, adjust parallelization settings, and modify resource allocation based on real-time performance feedback. This continuous optimization ensures pipelines maintain peak efficiency as data volumes grow and business requirements evolve, reducing the need for manual performance tuning.

Intelligent Error Resolution and Root Cause Analysis

AI-powered error resolution systems analyze pipeline failures using natural language processing to interpret error messages, system logs, and operational context. These systems can automatically diagnose common issues and apply appropriate remediation strategies, such as adjusting timeout settings, retrying failed operations with modified parameters, or routing data through alternative processing paths.

Advanced root cause analysis combines multiple data sources to understand the underlying factors contributing to pipeline issues. The system might correlate pipeline failures with external events such as API changes, infrastructure updates, or unusual data patterns to provide comprehensive explanations that enable more effective long-term solutions.
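Production systems may use NLP models to interpret errors; this sketch substitutes simple regular-expression rules, a different and much cruder technique, to show the shape of the mapping from error text to a remediation action. The patterns and action names are hypothetical, and anything unrecognized is escalated to a person.

```python
import re

# Hypothetical mapping from error-message patterns to remediation actions (first match wins).
RULES = [
    (re.compile(r"timed? ?out", re.I), "retry_with_longer_timeout"),
    (re.compile(r"\b429\b|rate limit", re.I), "back_off_and_retry"),
    (re.compile(r"column .* (does not exist|not found)", re.I), "pause_and_alert_schema_change"),
    (re.compile(r"authentication|\b40[13]\b", re.I), "refresh_credentials"),
]


def diagnose(error_message: str) -> str:
    """Return the first matching remediation action, or escalate to a human."""
    for pattern, action in RULES:
        if pattern.search(error_message):
            return action
    return "escalate_to_on_call"
```

Keeping a default escalation path matters: an automated resolver should act only on failures it recognizes.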

How Do You Create an Automated Data Pipeline with Airbyte?

Automating data pipelines is crucial for seamless integration across an organization's systems. One way to accomplish this is with a no-code data-movement tool like Airbyte, which offers 600+ pre-built connectors and a user-friendly interface. Follow these steps to get started:

1. Sign Up or Log In to Airbyte Cloud

- Visit Airbyte Cloud.

- Create an account or log in if you already have one.

2. Add a Source Connector

- On the Airbyte dashboard, click Connections → Create your first connection.

- Search and select your data source from the available connectors.

- Fill in the connection details. For a database source, these typically include Host, Port, Database Name, Username, and Password.

- Click Set up source. After Airbyte tests the source connection, you will be directed to the destination page.

3. Add a Destination Connector

- Choose your destination.

- Provide the necessary credentials.

- Click Set up destination.

4. Configure the Data Sync

- Select the data streams and columns you want to replicate.

- Choose a sync mode (Full refresh or Incremental). See the sync-mode docs.

- Schedule the sync frequency.

- Click Save changes and then Sync now.

5. Set Up Transformation (Optional)

If you need advanced transformations, integrate Airbyte with dbt or use SQL queries for custom logic.

By following these steps, you can create a robust, automated data pipeline with Airbyte, ensuring reliable data integration.
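Airbyte manages sync state for you, so the following is not its implementation. It is a conceptual sketch of the two sync modes from step 4, using `sqlite3` as a stand-in destination; the `users` table, the `updated_at` cursor column, and the state dictionary are hypothetical.

```python
import sqlite3

dest = sqlite3.connect(":memory:")
dest.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")

# Insert new rows, or update existing ones that share a primary key.
UPSERT = (
    "INSERT INTO users VALUES (:id, :name, :updated_at) "
    "ON CONFLICT(id) DO UPDATE SET name = excluded.name, updated_at = excluded.updated_at"
)


def full_refresh(source: list, conn: sqlite3.Connection) -> int:
    """Full refresh: wipe the destination table and copy every source row again."""
    conn.execute("DELETE FROM users")
    conn.executemany("INSERT INTO users VALUES (:id, :name, :updated_at)", source)
    return len(source)


def incremental(source: list, conn: sqlite3.Connection, state: dict) -> int:
    """Incremental: copy only rows whose cursor is newer than the saved state, upserting by id."""
    cursor = state.get("updated_at", "")
    new_rows = [r for r in source if r["updated_at"] > cursor]
    conn.executemany(UPSERT, new_rows)
    if new_rows:
        state["updated_at"] = max(r["updated_at"] for r in new_rows)  # persist the new cursor
    return len(new_rows)
```

The incremental path moves only changed rows on each run, which is why it scales better than full refresh for large tables. A production implementation would also need to handle rows that share a cursor value and rows that arrive late, which this strict `>` comparison ignores.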

Why Should You Automate Data Pipelines?

Transferring data manually across systems is not only labor-intensive but also error-prone. As your business grows, so does the amount of data, and managing it becomes more challenging. Handling this process manually can lead to inconsistencies, delays, and errors in the data.

Automating your data pipelines simplifies the collection, cleaning, and movement of data from its source to its final destination. In many teams, release orchestration tooling aligns these automated data flows with application deployments and environment rollouts. By automating workflows, you reduce time spent on data-handling tasks and focus on more strategic activities. This improves operational efficiency and ensures data accuracy and reliability.

Modern automation leverages artificial intelligence and machine learning to create self-optimizing systems that adapt to changing data patterns and business requirements. These intelligent pipelines can predict potential issues, automatically scale resources based on demand, and implement corrective actions without human intervention, transforming data infrastructure from a maintenance burden into a competitive advantage.

What Are the Key Benefits of Automating Data Pipelines?

- Enhances Data Quality: Automation reduces the risk of human errors inherent in manual processing and ensures data is consistently cleaned, formatted, and validated. Advanced automated systems implement continuous monitoring and validation rules that catch data quality issues before they impact downstream analytics or business processes.

- Allows Faster Decision-Making: Automated pipelines move data effortlessly from source to downstream applications, enabling timely business decisions. Real-time processing capabilities ensure that business intelligence systems receive updated information within minutes or seconds of data generation, supporting rapid response to market changes or operational issues.

- Change Data Capture: Integrating CDC technology within an automated pipeline keeps data across multiple databases in sync. This capability ensures that updates, insertions, and deletions in source systems are reflected accurately in destination systems, maintaining data consistency across distributed architectures.

- Scalability: Automated pipelines adapt to growing workloads by scaling horizontally or vertically, optimizing resource usage. Modern cloud-native solutions can automatically provision additional computing resources during peak processing periods and scale down during low-demand intervals, ensuring cost-effective operations regardless of data volume fluctuations.

- Cost Reduction: Automation minimizes reliance on manual work, lowering labor costs and reducing the risk of expensive errors. By eliminating repetitive manual tasks and reducing the need for specialized technical maintenance, organizations can redirect human resources toward higher-value activities such as advanced analytics and strategic data initiatives.
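To illustrate the change data capture benefit, the sketch below replays insert, update, and delete events onto a destination table in log order. The event format (an `lsn` sequence number, an `op` field, and a `row` payload) is hypothetical; real CDC tools emit their own richer formats.

```python
from typing import Any


def apply_changes(table: dict, events: list[dict[str, Any]]) -> dict:
    """Replay CDC events (insert/update/delete) onto a destination table keyed by id."""
    for event in sorted(events, key=lambda e: e["lsn"]):  # apply in source-log order
        op, row = event["op"], event["row"]
        if op in ("insert", "update"):
            table[row["id"]] = row
        elif op == "delete":
            table.pop(row["id"], None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return table
```

Ordering by sequence number is the key design choice: applying a delete before the insert it follows would leave a row that no longer exists in the source.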

Which Tools Are Best for Data Pipeline Automation?

1. Airbyte

Airbyte stands out as a comprehensive data integration platform that combines open-source flexibility with enterprise-grade capabilities. The platform processes over 2 petabytes of data daily across customer deployments, supporting organizations from fast-growing startups to Fortune 500 enterprises in their infrastructure modernization initiatives.

Key features include:

- Build Custom Connectors: Use the no-code connector builder or low-code/language-specific CDKs to develop custom connectors, assisted by AI suggestions. The platform's Connector Development Kit enables rapid creation of custom integrations while maintaining enterprise-grade reliability and performance standards.

- Streamline AI Workflows: Move semi-structured or unstructured data into popular vector databases such as Chroma, Pinecone, or Qdrant with automatic chunking, embedding, and indexing. This capability supports modern AI and machine learning applications that require structured data processing alongside unstructured content analysis.

- Developer-Friendly Pipeline: PyAirbyte, an open-source Python library, lets you extract data with Airbyte connectors and load it into caches like Snowflake, DuckDB, or BigQuery. This tool is ideal for analytics and LLM applications, enabling developers to build data-enabled applications quickly while maintaining compatibility with enterprise data infrastructure.

- Multi-Region Deployment: Enterprise customers can deploy data planes across multiple regions while maintaining centralized governance through a single control plane, ensuring compliance with data sovereignty requirements while optimizing for performance and cost.

- Advanced File Handling: Support for transferring unstructured data up to 1 GB with automated metadata generation, enabling hybrid data pipelines that combine structured analytics with document and media processing workflows.

2. Google Cloud Dataflow

Google Cloud Dataflow provides serverless batch and stream processing capabilities that automatically scale based on workload demands. The platform excels in handling both real-time data streams and large-scale batch processing operations within Google Cloud's ecosystem.

- Portable: Built on Apache Beam, so pipelines developed for Dataflow can run on other runners like Apache Flink or Spark. This portability ensures that investments in pipeline development remain valuable even if underlying infrastructure requirements change over time.

- Exactly-Once Processing: Processes each record exactly once by default, ensuring accuracy; at-least-once semantics are also available for lower-latency, more cost-efficient needs. This reliability feature proves essential for financial transactions, inventory management, and other business-critical data processing scenarios.
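Dataflow implements exactly-once processing internally, and this sketch does not reproduce its mechanism. It only illustrates why the guarantee matters: with at-least-once delivery, a redelivered message is applied twice unless the consumer deduplicates. The message format and IDs are hypothetical.

```python
def process_at_least_once(deliveries: list, totals: dict) -> None:
    """Naive consumer: every delivery is applied, so redeliveries double-count."""
    for msg in deliveries:
        totals[msg["account"]] = totals.get(msg["account"], 0.0) + msg["amount"]


def process_effectively_once(deliveries: list, totals: dict, seen: set) -> None:
    """Idempotent consumer: skip message IDs already applied, so redeliveries are harmless."""
    for msg in deliveries:
        if msg["id"] in seen:
            continue
        seen.add(msg["id"])
        totals[msg["account"]] = totals.get(msg["account"], 0.0) + msg["amount"]


# Message m1 is delivered twice, as can happen under at-least-once semantics.
deliveries = [
    {"id": "m1", "account": "a", "amount": 10.0},
    {"id": "m2", "account": "a", "amount": 5.0},
    {"id": "m1", "account": "a", "amount": 10.0},
]
```

The naive consumer reports 25.0 for account `a`, while the deduplicating one reports the correct 15.0.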

3. Apache Airflow

Apache Airflow serves as a powerful workflow orchestration platform that enables complex data pipeline management through programmatic workflow definition and monitoring. The platform excels at coordinating dependencies between multiple data processing tasks and external systems.

- Directed Acyclic Graphs (DAGs): Define workflows as DAGs, enabling clear task dependencies and scheduling. This approach provides a visual representation of complex data workflows, ensures that processing steps execute in dependency order, and lets you configure retries so that task failures are handled gracefully.

- Object-Storage Abstraction: Unified support for S3, GCS, Azure Blob Storage, and more without requiring code changes for each service. This abstraction simplifies multi-cloud deployments and enables pipeline portability across different infrastructure environments.
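The core idea of a DAG scheduler can be shown with Python's standard-library `graphlib` module (Python 3.9+). This is not Airflow's API; in Airflow you would declare the same dependencies between operators (for example with `>>`). The task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks mapped to their upstream dependencies (task -> prerequisites).
DAG = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_join": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform_join"},
    "refresh_dashboard": {"load_warehouse"},
}


def run_order(dag: dict) -> list:
    """Group tasks into waves; tasks in the same wave have no dependency on each other."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)  # a scheduler could run these in parallel
        sorter.done(*ready)
    return waves
```

The two extract tasks form the first wave and can run in parallel; every later task waits for its upstream tasks, and a cyclic definition is rejected before anything runs.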

FAQ

How do automated data pipelines handle real-time data processing?

Automated data pipelines use streaming architectures and tools like Apache Kafka, Flink, or AWS Kinesis to ingest, transform, and deliver data in real time. This enables immediate analysis and response for use cases like fraud detection, dynamic pricing, and operational monitoring.

What are the main security considerations for automated data pipelines?

Secure automated pipelines with end-to-end encryption, role-based access, audit logging, and data masking. Implement network security, secure credential management, compliance with regulations like GDPR/HIPAA, automated security scans, and regular assessments to protect data throughout ingestion, processing, and storage.
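Data masking is one of the easier controls to automate inside a pipeline step. The sketch below pseudonymizes an identifier with a keyed hash (HMAC-SHA256), partially masks an email, and keeps only the last four digits of a card number. The field names and the hard-coded key are hypothetical; in practice the key would come from a secrets manager.

```python
import hashlib
import hmac
import re

SECRET_KEY = b"replace-with-a-managed-secret"  # hypothetical; load from a secrets manager


def pseudonymize(value: str) -> str:
    """Keyed hash: a stable token usable for joins, not reversible without the key."""
    return hmac.new(SECRET_KEY, value.encode(), hashlib.sha256).hexdigest()[:16]


def mask_email(email: str) -> str:
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def mask_record(record: dict) -> dict:
    """Return a copy of the record that is safe to load into analytics systems."""
    masked = dict(record)
    masked["customer_id"] = pseudonymize(str(record["customer_id"]))
    masked["email"] = mask_email(record["email"])
    # Replace every digit that is followed by at least four more digits.
    masked["card_number"] = re.sub(r"\d(?=\d{4})", "*", record["card_number"])
    return masked
```

A keyed hash rather than a plain hash is used so that someone without the key cannot rebuild the mapping by hashing guessed IDs.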

How do you measure the success of data pipeline automation?

Measure automated pipeline success using reliability, error rates, data quality, processing speed, throughput, and cost efficiency. Also track business impact, including faster decisions, improved data access, and team productivity. Regular monitoring and reviews ensure continuous optimization and alignment with goals.
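The technical metrics can be computed directly from run records; business-impact measures such as decision speed need separate tracking. This sketch assumes a hypothetical `RunStats` record per pipeline run.

```python
from dataclasses import dataclass


@dataclass
class RunStats:
    succeeded: bool
    rows_in: int
    rows_rejected: int  # rows that failed data-quality checks
    seconds: float
    cost_usd: float


def summarize(runs: list) -> dict:
    """Compute reliability, quality, throughput, and cost metrics over pipeline runs."""
    if not runs:
        raise ValueError("no runs to summarize")
    rows = sum(r.rows_in for r in runs)
    seconds = sum(r.seconds for r in runs)
    cost = sum(r.cost_usd for r in runs)
    return {
        "success_rate": sum(r.succeeded for r in runs) / len(runs),
        "rejection_rate": sum(r.rows_rejected for r in runs) / rows if rows else 0.0,
        "rows_per_second": rows / seconds if seconds else 0.0,
        "cost_per_million_rows": cost / rows * 1_000_000 if rows else 0.0,
    }
```

Tracking these values per week or per release makes regressions visible during the regular reviews mentioned above.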

What challenges should you expect when implementing automated data pipelines?

Automated pipeline challenges include data quality issues, schema changes, legacy system integration, scaling during peak loads, and skills gaps. Organizations must also manage change resistance and governance balance, addressing these through planning, stakeholder education, phased implementation, and robust monitoring.

How do automated data pipelines support compliance and governance requirements?

Automated pipelines support compliance with audit logging, data lineage tracking, and policy enforcement. They apply access controls, retention policies, and data masking automatically, while monitoring and reporting ensure adherence to regulations and provide auditable records of all data processing activities.

Conclusion

Data pipeline automation is crucial for businesses seeking to streamline data processing, enhance operational efficiency, and maintain data consistency. Automation reduces manual tasks, scales with growing data volumes, and ensures efficient data integration, transformation, and movement across systems. Modern automated pipelines leverage artificial intelligence for predictive maintenance, implement comprehensive data quality enforcement, and provide the flexibility needed to adapt to changing business requirements.

By selecting the right tools and configuring pipelines for specific needs, organizations can focus on extracting insights and driving value from their data. The combination of automated quality enforcement, AI-driven orchestration, and robust monitoring capabilities transforms data infrastructure from a maintenance burden into a competitive advantage that enables faster decision-making and improved business outcomes.
