Mastering Database Schema Design: Key Practices & Exemplary Designs


✨ AI Generated Summary

Effective database schema design is crucial for ensuring data integrity, scalability, and performance in modern enterprise environments. Contemporary practices emphasize:

- Balancing normalization with strategic denormalization for optimized query performance.

- Incorporating domain-driven design, automation, and schema evolution to support dynamic business needs.

- Applying the three main schema types (physical, logical, and view) to cloud-native, distributed, and event-driven architectures.

- Utilizing collaborative tools and automated testing to manage schema changes safely and efficiently.

- Addressing challenges like schema migration, distributed consistency, and compliance through advanced patterns and technologies.

- Airbyte simplifies schema management by automating schema evolution, harmonizing multi-database schemas, and providing enterprise-grade governance features.

Imagine a vast library whose shelves overflow with books but which has no catalog. Data professionals face the same challenge when they manage enterprise databases without well-designed schemas: poor schema design creates data integrity issues that consume development resources and limit analytical capabilities. Modern schema design continues to evolve alongside advances in distributed architectures, real-time processing, and AI-enabled platforms. The goal is a schema that supports these capabilities, not one that embeds them directly in its structure.

Database schema design serves as the architectural foundation that determines how your data infrastructure scales, performs, and adapts to changing business requirements. Contemporary approaches balance traditional normalization principles with performance-oriented denormalization, leverage automation for schema evolution, and incorporate specialized patterns for time-series, graph, and document data. Organizations that master these modern schema design practices achieve faster deployment cycles, reduced maintenance overhead, and improved data accessibility across teams.

This comprehensive guide explores essential database schema design practices, addresses common challenges with proven solutions, and examines emerging trends reshaping data architecture. You will discover how contemporary tools and methodologies transform schema design from a static planning exercise into a dynamic, collaborative process that enables rather than constrains business innovation.

What Is Database Schema?

A database schema represents the logical structure and organization of your entire database system. It defines how data elements relate to each other, establishes constraints for data integrity, and provides a blueprint for storage and retrieval operations. Think of it as the architectural plan that guides database implementation while abstracting complex storage details from application developers.

Modern database schemas extend beyond traditional table definitions to encompass metadata annotations, validation rules, and integration specifications. They serve as contracts between data producers and consumers, ensuring consistent data interpretation across distributed systems and microservices architectures. Schema registries now manage these contracts through versioning and compatibility checks, enabling backward-compatible evolution without breaking downstream applications.
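To make "backward-compatible evolution" concrete, here is a minimal sketch of the kind of check a registry runs before accepting a new schema version. It assumes a simplified, Avro-like rule (fields may be removed, every added field needs a default, and shared fields keep their type); real registries apply richer rules, and the `Field` type and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Field:
    type_name: str
    has_default: bool = False


def backward_compatible(old: dict[str, Field], new: dict[str, Field]) -> list[str]:
    """Return reasons why `new` cannot read data written with `old` (empty list = compatible).

    Simplified rule, assumed for illustration: removed fields are fine,
    added fields need a default, and shared fields must keep the same type.
    """
    problems = []
    for name, field in new.items():
        if name not in old and not field.has_default:
            problems.append(f"added field '{name}' has no default")
        elif name in old and old[name].type_name != field.type_name:
            problems.append(
                f"field '{name}' changed type {old[name].type_name} -> {field.type_name}"
            )
    return problems


v1 = {"order_id": Field("long"), "amount": Field("double")}
v2 = {"order_id": Field("long"), "amount": Field("double"),
      "currency": Field("string", has_default=True)}
v3 = {"order_id": Field("string"), "amount": Field("double"), "channel": Field("string")}

print(backward_compatible(v1, v2))  # []
print(backward_compatible(v1, v3))
```

Version `v2` passes because its only new field has a default; `v3` is rejected for both the type change on `order_id` and the default-less `channel` field.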

Contemporary schema design incorporates domain-driven modeling principles where schemas align with business boundaries rather than purely technical considerations. This approach facilitates better collaboration between business stakeholders and technical teams while supporting modern architectural patterns like data mesh and event-driven systems. The schema becomes a living document that evolves with business requirements while maintaining data consistency and integrity.

What Are the Different Types of Database Schemas?

Different schema types serve distinct purposes in modern data architectures, each optimized for specific access patterns and operational requirements.

1. Physical Schema

Physical schemas specify how data is organized within storage systems, determining performance characteristics and resource utilization. Modern physical schema design leverages cloud-native storage patterns including columnar formats for analytical workloads, partitioning strategies for distributed processing, and tiered storage for cost optimization.

Contemporary implementations increasingly rely on storage layouts that adapt to how data is loaded and queried. For instance, Snowflake automatically divides tables into micro-partitions and keeps metadata about each one so queries can skip partitions that cannot match, while time-series databases employ specialized compression algorithms that reduce storage requirements without sacrificing query efficiency.

Physical schemas now incorporate advanced indexing strategies, including machine-learning-driven index selection, partial and covering indexes for read-heavy workloads, and indexes distributed across multiple nodes. These techniques improve query performance while keeping storage costs and maintenance overhead under control across diverse deployment environments.
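Partial and covering indexes are easy to observe with SQLite, which ships with Python. The sketch below is illustrative only: the `orders` table and index names are hypothetical, and other engines spell these features differently (for example, PostgreSQL uses `INCLUDE` columns for covering indexes). A partial index stores only rows matching its `WHERE` clause; a covering index contains every column a query needs, so the base table is never read.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL,
    region      TEXT    NOT NULL,
    status      TEXT    NOT NULL,
    total_cents INTEGER NOT NULL
);
-- Partial index: only open orders are indexed, so the index stays small.
CREATE INDEX idx_open_orders_customer ON orders (customer_id) WHERE status = 'open';
-- Covering index: holds every column the regional revenue query reads.
CREATE INDEX idx_orders_region_total ON orders (region, total_cents);
""")


def query_plan(sql: str, params: tuple = ()) -> str:
    """Return SQLite's EXPLAIN QUERY PLAN detail column as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


# The query repeats the index's WHERE condition, so the partial index is usable.
open_plan = query_plan(
    "SELECT id FROM orders WHERE customer_id = ? AND status = 'open'", (42,)
)
# Only region and total_cents are read, so the table itself is never touched.
revenue_plan = query_plan(
    "SELECT SUM(total_cents) FROM orders WHERE region = ?", ("EU",)
)
print(open_plan)
print(revenue_plan)
```

The second plan reports a `COVERING INDEX` lookup, which is the read-heavy win the paragraph describes; note that the partial index is only chosen when the query's filter implies the index's `WHERE` clause.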

2. Logical Schema

Logical schemas define the conceptual structure of your data including entities, attributes, relationships, and constraints. Modern logical design emphasizes domain-driven modeling where schemas reflect business concepts rather than technical implementation details. This approach improves collaboration between technical and business teams while supporting schema evolution as business requirements change.

Contemporary logical schemas support hybrid data models combining relational structures with document, graph, and key-value patterns. PostgreSQL implementations demonstrate this flexibility through JSONB columns that embed semi-structured data alongside normalized tables, enabling schema flexibility without sacrificing relational integrity.
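The hybrid relational-plus-document pattern can be sketched without a PostgreSQL server by using SQLite's JSON functions (`json_valid`, `json_extract`) as a stand-in for JSONB. This assumes an SQLite build with JSON support (built in by default since SQLite 3.38); the `products` table and its attributes are hypothetical.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE products (
    id          INTEGER PRIMARY KEY,
    sku         TEXT    NOT NULL UNIQUE,   -- structured, relational attributes
    price_cents INTEGER NOT NULL CHECK (price_cents >= 0),
    attributes  TEXT    NOT NULL DEFAULT '{}'
                CHECK (json_valid(attributes))  -- semi-structured, per-category fields
);
""")

conn.execute(
    "INSERT INTO products (sku, price_cents, attributes) VALUES (?, ?, ?)",
    ("TSHIRT-RED-M", 1999, json.dumps({"color": "red", "size": "M"})),
)
conn.execute(
    "INSERT INTO products (sku, price_cents, attributes) VALUES (?, ?, ?)",
    ("MUG-001", 899, json.dumps({"capacity_ml": 350})),
)

# A relational filter and a JSON path lookup in the same query.
red_items = conn.execute(
    "SELECT sku FROM products "
    "WHERE json_extract(attributes, '$.color') = ? AND price_cents < ?",
    ("red", 2500),
).fetchall()
print(red_items)  # [('TSHIRT-RED-M',)]

# Malformed JSON is rejected by the CHECK constraint, so the column stays queryable.
try:
    conn.execute(
        "INSERT INTO products (sku, price_cents, attributes) VALUES ('BAD', 1, '{oops')"
    )
except sqlite3.IntegrityError as exc:
    print("rejected:", exc)
```

Core, always-present fields stay as typed columns with constraints, while category-specific fields live in the validated JSON column.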

Event-driven architectures require logical schemas that capture temporal aspects of data including state changes, business events, and processing timestamps. These schemas prioritize immutability and traceability, supporting advanced analytics and compliance requirements through built-in audit trails and data lineage tracking.
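One way to enforce the immutability these schemas rely on is to let the database itself reject edits to history. The sketch below uses SQLite triggers on a hypothetical `order_events` table; it records both the business time (`occurred_at`) and the storage time (`recorded_at`), and corrections are made by appending new events rather than editing old ones.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE order_events (
    event_id    INTEGER PRIMARY KEY,
    order_id    INTEGER NOT NULL,
    event_type  TEXT    NOT NULL,   -- e.g. 'created', 'paid', 'shipped'
    occurred_at TEXT    NOT NULL,   -- when it happened in the business
    recorded_at TEXT    NOT NULL DEFAULT (strftime('%Y-%m-%dT%H:%M:%fZ', 'now'))
);
-- Immutability: block in-place edits and deletions of recorded events.
CREATE TRIGGER order_events_no_update BEFORE UPDATE ON order_events
BEGIN SELECT RAISE(ABORT, 'order_events is append-only'); END;
CREATE TRIGGER order_events_no_delete BEFORE DELETE ON order_events
BEGIN SELECT RAISE(ABORT, 'order_events is append-only'); END;
""")

conn.executemany(
    "INSERT INTO order_events (order_id, event_type, occurred_at) VALUES (?, ?, ?)",
    [(1, "created", "2024-05-01T09:00:00Z"), (1, "paid", "2024-05-01T09:05:00Z")],
)

# Current state is derived from the latest event for the order.
latest = conn.execute(
    "SELECT event_type FROM order_events WHERE order_id = ? "
    "ORDER BY occurred_at DESC LIMIT 1",
    (1,),
).fetchone()[0]
print(latest)  # paid

update_blocked = False
try:
    conn.execute("UPDATE order_events SET event_type = 'refunded' WHERE event_id = 1")
except sqlite3.DatabaseError:
    update_blocked = True
print(update_blocked)  # True
```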

3. View Schema

View schemas provide abstraction layers that simplify data access for specific use cases while hiding underlying complexity. Modern view implementations leverage materialized views for performance optimization, parameterized views for dynamic filtering, and federated views that span multiple data sources.

Cloud-native view schemas enable cross-database queries through virtual data layers, allowing business users to access integrated data without understanding source system complexities. Some platforms can refresh these views automatically in response to data change events, so analytical workloads see near-current data without manual refreshes.

Contemporary view schemas incorporate security and governance policies directly within view definitions, automatically applying data masking, row-level security, and column-level permissions based on user contexts. This approach centralizes security enforcement while maintaining performance and usability for authorized users.
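A small sketch of policy-in-the-view, using SQLite and hypothetical table names: the view masks the email column and exposes only one region's rows. SQLite has no user accounts, so the row filter here is a fixed predicate; in a server database you would typically pair such a view with privileges on the base table or with native row-level security policies (as in PostgreSQL) so the filter depends on the session's user.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (
    id     INTEGER PRIMARY KEY,
    name   TEXT NOT NULL,
    email  TEXT NOT NULL,
    region TEXT NOT NULL
);
INSERT INTO customers (name, email, region) VALUES
    ('Alice', 'alice@example.com', 'EU'),
    ('Bob',   'bob@example.org',   'US');

-- Analysts query this view instead of the base table:
-- the email column is masked and only EU rows are exposed.
CREATE VIEW customers_eu_masked AS
SELECT id,
       name,
       substr(email, 1, 1) || '***@' || substr(email, instr(email, '@') + 1) AS email
FROM customers
WHERE region = 'EU';
""")

rows = conn.execute("SELECT name, email FROM customers_eu_masked").fetchall()
print(rows)  # [('Alice', 'a***@example.com')]
```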

What Are the Essential Database Schema Design Best Practices?

Modern database schema design practices integrate traditional principles with contemporary requirements for scalability, flexibility, and automation.

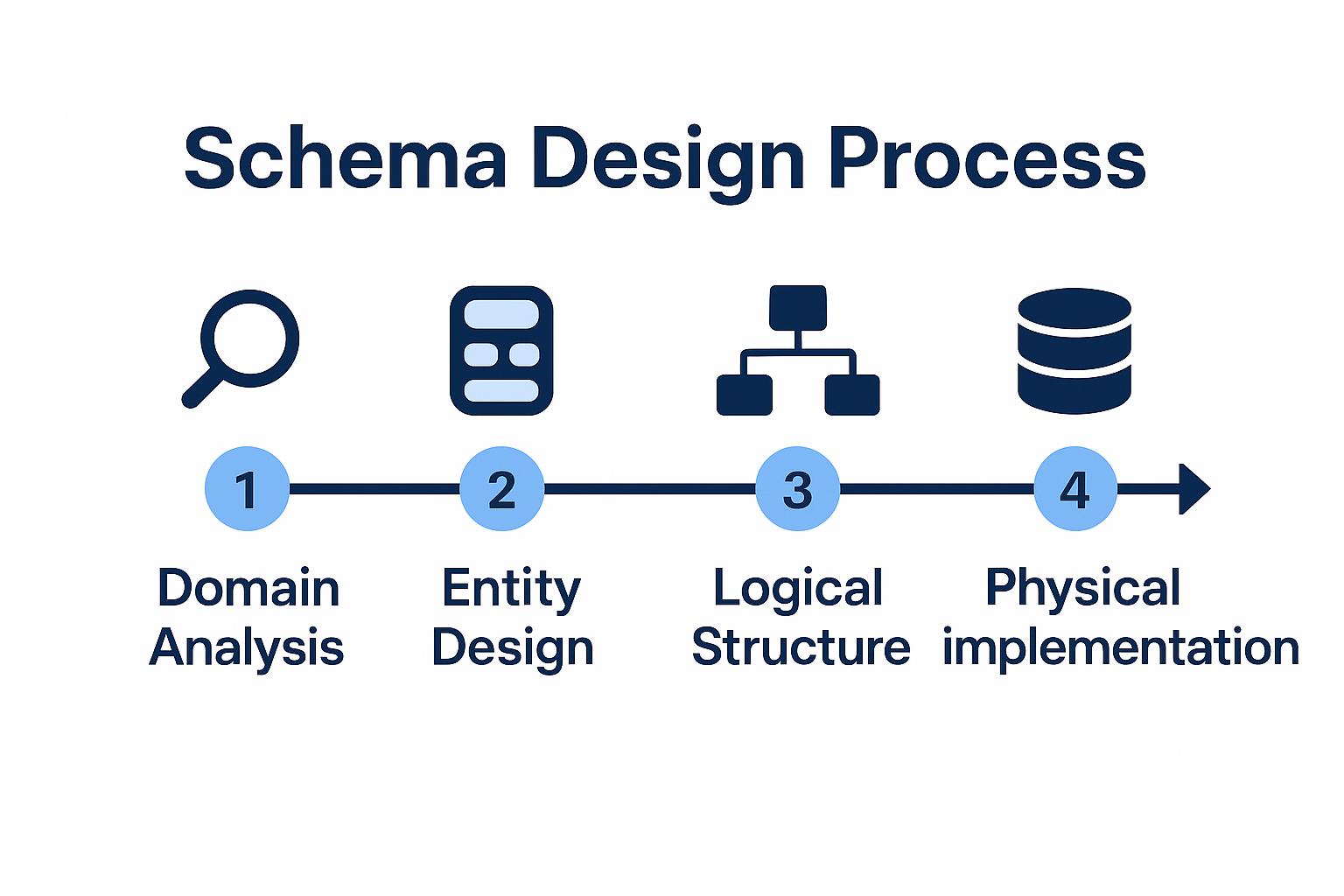

1. Define Your Purpose and Requirements Through Domain Modeling

Successful schema design begins with understanding business domains and data usage patterns rather than technical implementation details. Domain-driven design principles help identify bounded contexts where specific entities and relationships have clear business meaning, leading to schemas that align with organizational structure and operational processes.

Modern requirements gathering includes analyzing query patterns, data access frequency, and growth projections to inform structural decisions. Understanding whether your system prioritizes read or write operations, requires real-time or batch processing, and serves analytical or transactional workloads directly influences schema design choices including normalization levels, indexing strategies, and partitioning approaches.

Contemporary schema planning incorporates data governance requirements from the beginning, ensuring compliance with privacy regulations, audit trail needs, and data retention policies. This proactive approach prevents costly schema modifications later while establishing a foundation for automated governance enforcement through schema-embedded policies and constraints.

2. Identify Data Entities and Attributes Using Modern Techniques

Entity identification has evolved beyond simple table mapping to include event modeling, aggregate design, and temporal data patterns. Modern approaches recognize that data represents business events and state changes rather than static records, leading to schemas that capture data lineage and historical context.

Contemporary attribute design considers data types that support modern applications including JSON columns for flexible schemas, spatial data types for location-based features, and vector columns for machine-learning embeddings. These specialized types reduce application complexity while leveraging database-native optimization capabilities.

Advanced entity modeling incorporates polyglot persistence patterns where different entity types use optimal storage technologies. Customer profiles might use relational tables for structured attributes while storing preferences in document databases and relationship data in graph systems, with schemas coordinated through shared identifiers and data contracts.

3. Start with a Conceptual Model Using Collaborative Tools

Modern conceptual modeling leverages collaborative tools that enable real-time sharing between business stakeholders and technical teams. Visual modeling platforms allow domain experts to contribute directly to schema design through intuitive interfaces while automatically generating technical specifications for development teams.

Contemporary Entity-Relationship Diagrams incorporate temporal aspects, aggregate boundaries, and event flows rather than static entity relationships. These enhanced models capture business processes and data lifecycle requirements, providing clearer guidance for implementation decisions including table structures, constraint definitions, and integration patterns.

Schema modeling now includes impact analysis capabilities that identify downstream effects of proposed changes across applications, reports, and data pipelines. This analysis prevents breaking changes while enabling confident schema evolution that supports business agility without compromising system stability.
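At its core, impact analysis is a walk over a dependency graph. The sketch below assumes lineage metadata is already available as a mapping from each object to the objects that read from it; the object names are hypothetical, and real tools derive this graph from query logs, view definitions, or pipeline code.

```python
from collections import deque

# Hypothetical lineage metadata: each object maps to the objects that read from it.
DOWNSTREAM = {
    "orders": ["orders_daily_mv", "billing_service"],
    "orders_daily_mv": ["revenue_dashboard", "finance_export"],
    "customers": ["orders_daily_mv"],
    "billing_service": [],
    "revenue_dashboard": [],
    "finance_export": [],
}


def impacted_by(changed: str, graph: dict[str, list[str]]) -> list[str]:
    """Breadth-first walk returning every object downstream of `changed`, nearest first."""
    seen = {changed}
    impacted = []
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:  # each object is reported once, even via several paths
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted


print(impacted_by("orders", DOWNSTREAM))
# ['orders_daily_mv', 'billing_service', 'revenue_dashboard', 'finance_export']
```

Breadth-first order lists direct consumers before indirect ones, which is usually the order in which owners need to be notified.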

4. Create Logical Design with Performance Considerations

Logical design translates conceptual models into detailed specifications that balance normalization principles with performance requirements. Modern approaches use selective denormalization based on query pattern analysis, creating hybrid schemas that maintain data integrity while optimizing for specific access patterns.

Contemporary logical design incorporates distributed system considerations including data locality, cross-shard queries, and consistency requirements. Schemas designed for microservices architectures emphasize bounded contexts and minimize cross-service dependencies while maintaining referential integrity through eventual consistency patterns.

Advanced logical design includes schema flexibility mechanisms such as extension tables for custom attributes, configuration-driven field definitions, and schema evolution patterns that support backward compatibility. These techniques let schemas adapt with fewer application code changes and data migrations.

5. Create Physical Design Optimized for Cloud Environments

Physical schema design for cloud environments emphasizes elasticity, cost optimization, and managed-service integration. Modern implementations leverage cloud-native storage formats including columnar compression for analytical workloads, partitioning strategies for parallel processing, and tiered storage for cost-effective data lifecycle management.

Contemporary physical design incorporates automated optimization features available in cloud databases including adaptive query optimization, automatic index tuning, and workload-based resource scaling. These capabilities reduce administrative overhead while maintaining optimal performance as data volumes and access patterns evolve.

Cloud-optimized physical schemas consider multi-region deployment requirements including data residency compliance, cross-region replication strategies, and disaster recovery capabilities. Design decisions account for network latency, bandwidth costs, and regional service availability to ensure consistent performance across global deployments.

6. Normalize Schema with Strategic Denormalization

Schema normalization remains important for data integrity but modern approaches balance normalization with performance requirements through strategic denormalization. Third Normal Form provides the foundation while specific access patterns justify controlled redundancy that improves query performance without compromising data consistency.

Contemporary normalization considers aggregate design patterns where related entities are stored together to reduce join operations and improve transaction boundaries. Customer orders might denormalize product information to optimize order processing while maintaining normalized product catalogs for inventory management and reporting.
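The order/product example can be sketched in SQLite with hypothetical tables. The catalog stays normalized, while each order line copies the product name and price at purchase time. The redundancy is safe because the copies record a historical fact and are never updated, and rendering an order no longer needs a join.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE products (               -- normalized catalog: one row per product
    id          INTEGER PRIMARY KEY,
    name        TEXT    NOT NULL,
    price_cents INTEGER NOT NULL
);
CREATE TABLE order_items (            -- order aggregate with copied product fields
    order_id         INTEGER NOT NULL,
    product_id       INTEGER NOT NULL REFERENCES products(id),
    product_name     TEXT    NOT NULL,  -- snapshot at purchase time
    unit_price_cents INTEGER NOT NULL,  -- snapshot at purchase time
    quantity         INTEGER NOT NULL CHECK (quantity > 0),
    PRIMARY KEY (order_id, product_id)
);
INSERT INTO products VALUES (1, 'Espresso beans 1kg', 2400);
""")


def add_item(order_id: int, product_id: int, quantity: int) -> None:
    """Copy the product's current name and price into the order line in one statement."""
    conn.execute(
        """INSERT INTO order_items
           SELECT ?, id, name, price_cents, ? FROM products WHERE id = ?""",
        (order_id, quantity, product_id),
    )


add_item(order_id=100, product_id=1, quantity=2)
conn.execute("UPDATE products SET price_cents = 2600 WHERE id = 1")  # later price change

# The order keeps the price the customer actually paid.
total = conn.execute(
    "SELECT SUM(unit_price_cents * quantity) FROM order_items WHERE order_id = 100"
).fetchone()[0]
print(total)  # 4800
```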

Advanced normalization techniques include computed columns that materialize derived values, partial denormalization for read-heavy attributes, and temporal normalization that maintains historical versions without duplicating current state information. These approaches optimize for specific workload patterns while preserving data quality and consistency.

7. Implement Schema Evolution and Testing Strategies

Modern schema implementation emphasizes evolution capabilities from initial deployment, using version control systems to track changes and automated migration tools to manage deployments across environments. Schema-as-code approaches treat database structures as software artifacts with testing, peer review, and deployment automation.
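The core of schema-as-code is an ordered list of migrations plus a recorded version number in the database. The sketch below stores that version in SQLite's `PRAGMA user_version` and applies each pending migration in its own transaction; the migrations themselves are hypothetical, and dedicated tools such as Flyway, Liquibase, or Alembic add checksums, locking, and rollback support on top of this idea.

```python
import sqlite3

# Ordered, append-only list of migrations; in a real repository each would be a reviewed file.
MIGRATIONS = [
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL UNIQUE)",
    "ALTER TABLE customers ADD COLUMN created_at TEXT",
    "CREATE INDEX idx_customers_created_at ON customers (created_at)",
]


def migrate(conn: sqlite3.Connection) -> int:
    """Apply every migration newer than the database's recorded version; return the new version."""
    current = conn.execute("PRAGMA user_version").fetchone()[0]
    for version, statement in enumerate(MIGRATIONS[current:], start=current + 1):
        conn.execute("BEGIN")
        try:
            conn.execute(statement)
            # PRAGMA values cannot be bound parameters; `version` is always an int here.
            conn.execute(f"PRAGMA user_version = {version}")
            conn.execute("COMMIT")
        except Exception:
            conn.execute("ROLLBACK")  # schema change and version bump fail together
            raise
    return conn.execute("PRAGMA user_version").fetchone()[0]


conn = sqlite3.connect(":memory:", isolation_level=None)  # explicit transaction control
print(migrate(conn))  # 3
print(migrate(conn))  # 3 (nothing left to apply)
```

Because each migration and its version bump commit together, a failed step leaves the database at the last good version, and rerunning `migrate` is safe.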

Contemporary testing includes data quality validation, performance regression testing, and compatibility verification across application versions. Automated testing pipelines validate schema changes against representative datasets while measuring query performance impact and identifying potential breaking changes.

Production schema management incorporates blue-green deployment techniques, feature flag integration, and rollback capabilities that enable confident schema evolution with minimal business impact. These practices support continuous integration workflows while maintaining high availability and data consistency requirements.

How Can You Address Common Database Schema Design Challenges?

Modern database environments present complex challenges that require sophisticated solutions beyond traditional schema design approaches.

Resolving Schema Evolution and Migration Complexities

Schema evolution in production systems requires careful coordination between database changes and application deployments to prevent service disruptions. Online schema change techniques enable structural modifications without downtime through shadow table creation, incremental data migration, and atomic table swapping that maintains consistency throughout the process.
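The shadow-table technique can be sketched end to end in SQLite with a hypothetical `orders` table: create the new layout, copy rows in small committed batches, then swap the names in one transaction. This sketch assumes no writes arrive during the copy; production tools such as pt-online-schema-change (which uses triggers) and gh-ost (which reads the binary log) also replay those concurrent changes onto the shadow table.

```python
import sqlite3

conn = sqlite3.connect(":memory:", isolation_level=None)  # manage transactions explicitly
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total_cents INTEGER)")
conn.executemany(
    "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
    [(c, c * 100) for c in range(1, 8)],
)


def rebuild_with_shadow_table(conn: sqlite3.Connection, batch_size: int = 3) -> None:
    # 1. Shadow table with the new layout (here: a currency column with a default).
    conn.execute("""
        CREATE TABLE orders_shadow (
            id          INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL,
            total_cents INTEGER NOT NULL,
            currency    TEXT    NOT NULL DEFAULT 'USD'
        )""")
    # 2. Copy rows in small batches so no single transaction holds locks for long.
    last_id = 0
    while True:
        batch = conn.execute(
            "SELECT id, customer_id, total_cents FROM orders WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not batch:
            break
        conn.execute("BEGIN")
        conn.executemany(
            "INSERT INTO orders_shadow (id, customer_id, total_cents) VALUES (?, ?, ?)", batch
        )
        conn.execute("COMMIT")
        last_id = batch[-1][0]
    # 3. Swap names in one transaction so readers see either the old or the new table.
    conn.execute("BEGIN")
    conn.execute("ALTER TABLE orders RENAME TO orders_old")
    conn.execute("ALTER TABLE orders_shadow RENAME TO orders")
    conn.execute("COMMIT")
    conn.execute("DROP TABLE orders_old")


rebuild_with_shadow_table(conn)
print(conn.execute("SELECT COUNT(*), MIN(currency) FROM orders").fetchone())  # (7, 'USD')
```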

Modern migration strategies use expand-contract patterns where schema changes deploy in phases: first adding new structures alongside existing ones, then migrating applications to use new structures, and finally removing deprecated elements. This approach allows gradual transition while maintaining backward compatibility and enabling easy rollback if issues emerge.
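The expand and migrate phases can be illustrated with a hypothetical rename of `name` to `full_name` in SQLite. New code reads `full_name`, while triggers keep it populated for older application versions that still write only `name`. The contract phase (dropping the triggers and the old column in a later release, once no reader depends on it) is intentionally not shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute("INSERT INTO customers (name) VALUES ('Ada Lovelace')")

# Expand: add the new column alongside the old one; nothing is removed yet.
conn.execute("ALTER TABLE customers ADD COLUMN full_name TEXT")

# Transition: keep full_name in sync while older app versions still write only `name`.
conn.executescript("""
CREATE TRIGGER customers_sync_insert AFTER INSERT ON customers
WHEN NEW.full_name IS NULL
BEGIN
    UPDATE customers SET full_name = NEW.name WHERE id = NEW.id;
END;
CREATE TRIGGER customers_sync_update AFTER UPDATE OF name ON customers
BEGIN
    UPDATE customers SET full_name = NEW.name WHERE id = NEW.id;
END;
""")

# Migrate: backfill rows written before the triggers existed.
conn.execute("UPDATE customers SET full_name = name WHERE full_name IS NULL")

# An old application version inserts using only the legacy column...
conn.execute("INSERT INTO customers (name) VALUES ('Grace Hopper')")
# ...while a new version already reads full_name.
rows = conn.execute("SELECT full_name FROM customers ORDER BY id").fetchall()
print(rows)  # [('Ada Lovelace',), ('Grace Hopper',)]
```

Because both columns stay valid throughout, rolling back the application release requires no schema change.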

Automated migration tools increasingly incorporate dependency analysis that identifies potential conflicts before deployment and suggests change sequences that minimize risk. Some of these tools can also generate rollback scripts and provide impact analysis showing which applications and queries a proposed schema modification might affect.

Overcoming Performance and Scalability Limitations

Database performance challenges often stem from suboptimal schema designs that require expensive join operations or full table scans for common queries. Modern solutions include materialized view strategies that pre-compute complex aggregations, denormalization patterns that reduce join complexity, and partitioning schemes that enable parallel processing.

Scalability issues require schema designs that support horizontal partitioning across multiple nodes while maintaining query efficiency. Contemporary approaches include shard key selection based on access patterns, cross-shard query optimization through distributed indexes, and data locality optimization that minimizes network overhead.
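Shard key selection comes down to which queries should stay on one node. The sketch below routes by a hypothetical `customer_id` key using a stable hash (Python's built-in `hash()` is salted per process for strings, so it is unsuitable for routing). Simple modulo routing moves most keys when the shard count changes, which is why many systems use consistent hashing or range-based partitioning instead.

```python
import hashlib

NUM_SHARDS = 8  # hypothetical cluster size


def shard_for(customer_id: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a shard key to a shard index with a stable, process-independent hash."""
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Every order for a customer carries the same shard key, so "all orders for customer X"
# touches exactly one shard, while different customers spread across the cluster.
orders = [("cust-17", "order-1"), ("cust-17", "order-2"), ("cust-42", "order-3")]
placement = {order_id: shard_for(customer) for customer, order_id in orders}
assert placement["order-1"] == placement["order-2"]
print(placement)
```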

Performance optimization increasingly relies on machine-learning-assisted index selection that analyzes query patterns to recommend indexing strategies. Some of these systems continuously monitor query performance and adjust index configurations automatically as workload patterns evolve, reducing administrative overhead.

Managing Data Consistency Across Distributed Systems

Distributed architectures create consistency challenges where related data spans multiple databases or services, requiring careful coordination to maintain integrity constraints. Event-driven consistency patterns use message queues and event streams to propagate changes across systems while handling failures and ensuring eventual consistency.

Modern distributed schema designs implement saga patterns for complex transactions that span multiple services, breaking large operations into smaller, compensatable steps that can be rolled back if any component fails. This approach maintains data consistency while supporting the independence and scalability benefits of microservices architectures.
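The compensation logic of an orchestrated saga fits in a few lines. This in-process sketch uses hypothetical step functions that only mutate a shared dictionary; a real saga would call separate services, persist its progress so it can resume after a crash, and make each compensation idempotent.

```python
from typing import Callable

Step = tuple[str, Callable[[dict], None], Callable[[dict], None]]


def run_saga(steps: list[Step], ctx: dict) -> bool:
    """Run steps in order; on failure, compensate completed steps in reverse order."""
    completed: list[Step] = []
    for step in steps:
        name, action, _ = step
        try:
            action(ctx)
            completed.append(step)
        except Exception as exc:
            ctx["log"].append(f"{name} failed: {exc}")
            for done_name, _, compensate in reversed(completed):
                compensate(ctx)
                ctx["log"].append(f"compensated {done_name}")
            return False
    return True


# Hypothetical local stand-ins for calls to separate services.
def reserve_stock(ctx): ctx["reserved"] = True
def release_stock(ctx): ctx["reserved"] = False
def refund_card(ctx): ctx["charged"] = False
def create_shipment(ctx): ctx["shipped"] = True
def cancel_shipment(ctx): ctx["shipped"] = False


def charge_card(ctx):
    if ctx["card_declined"]:
        raise RuntimeError("card declined")
    ctx["charged"] = True


steps = [
    ("reserve_stock", reserve_stock, release_stock),
    ("charge_card", charge_card, refund_card),
    ("create_shipment", create_shipment, cancel_shipment),
]
ctx = {"card_declined": True, "log": []}
ok = run_saga(steps, ctx)
print(ok, ctx["reserved"], ctx["log"])
# False False ['charge_card failed: card declined', 'compensated reserve_stock']
```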

Cross-system referential integrity requires alternative approaches to traditional foreign keys, including distributed identifier schemes, eventual consistency verification processes, and reconciliation procedures that detect and resolve inconsistencies. These techniques maintain data quality while supporting the operational independence required for modern distributed systems.

Addressing Security and Compliance Requirements

Contemporary schema designs must incorporate security and privacy requirements directly into database structures rather than relying solely on application-layer controls. Column-level encryption, data masking, and tokenization become integral schema features that automatically protect sensitive information regardless of access method.

Compliance requirements such as GDPR's right to erasure (the "right to be forgotten") call for schema designs that support efficient deletion of personal data across related tables and systems. Modern approaches combine soft-deletion patterns, which preserve referential integrity, with anonymization of the personal fields themselves, embed data retention policies in schema definitions, and use automated cleanup processes that respect business and legal constraints.
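A minimal sketch of erasure that keeps referential integrity, using SQLite and hypothetical tables: the customer row survives so invoices still point at a valid key, but its personal fields are blanked and an `erased_at` marker is set. It only covers this one database; copies in replicas, backups, logs, and downstream systems need their own handling.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
PRAGMA foreign_keys = ON;
CREATE TABLE customers (
    id        INTEGER PRIMARY KEY,
    email     TEXT,             -- personal data
    full_name TEXT,             -- personal data
    erased_at TEXT              -- NULL while the customer is active
);
CREATE TABLE invoices (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    total_cents INTEGER NOT NULL  -- retained, e.g. for accounting obligations
);
INSERT INTO customers (id, email, full_name) VALUES (1, 'ana@example.com', 'Ana Silva');
INSERT INTO invoices (customer_id, total_cents) VALUES (1, 5000);
""")


def erase_customer(customer_id: int) -> None:
    """Blank personal fields but keep the row so foreign keys stay valid."""
    with conn:  # commit on success, roll back on error
        conn.execute(
            """UPDATE customers
               SET email = NULL, full_name = NULL,
                   erased_at = strftime('%Y-%m-%dT%H:%M:%SZ', 'now')
               WHERE id = ?""",
            (customer_id,),
        )


erase_customer(1)
rows = conn.execute(
    "SELECT c.email, c.full_name, i.total_cents "
    "FROM invoices i JOIN customers c ON c.id = i.customer_id"
).fetchall()
print(rows)  # [(None, None, 5000)]
```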

Audit trail requirements drive temporal schema designs that keep a complete change history with limited impact on operational performance. These schemas automatically capture who changed what data and when, supporting compliance reporting while also enabling point-in-time recovery and trend analysis that provide business value beyond compliance.
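A trigger-based audit trail is one common way to capture "who changed what and when". In this SQLite sketch with hypothetical tables, the database has no session user, so the application supplies `updated_by` on every write and the trigger copies it into the audit row along with the old and new values.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE accounts (
    id           INTEGER PRIMARY KEY,
    credit_limit INTEGER NOT NULL,
    updated_by   TEXT    NOT NULL   -- set by the application on every write
);
CREATE TABLE accounts_audit (
    audit_id   INTEGER PRIMARY KEY,
    account_id INTEGER NOT NULL,
    old_limit  INTEGER,
    new_limit  INTEGER,
    changed_by TEXT NOT NULL,
    changed_at TEXT NOT NULL DEFAULT (strftime('%Y-%m-%dT%H:%M:%fZ', 'now'))
);
-- Every change to credit_limit appends one audit row in the same transaction.
CREATE TRIGGER accounts_audit_update AFTER UPDATE OF credit_limit ON accounts
BEGIN
    INSERT INTO accounts_audit (account_id, old_limit, new_limit, changed_by)
    VALUES (OLD.id, OLD.credit_limit, NEW.credit_limit, NEW.updated_by);
END;
INSERT INTO accounts (id, credit_limit, updated_by) VALUES (7, 1000, 'onboarding');
""")

conn.execute("UPDATE accounts SET credit_limit = 2500, updated_by = 'j.doe' WHERE id = 7")
history = conn.execute(
    "SELECT old_limit, new_limit, changed_by FROM accounts_audit WHERE account_id = 7"
).fetchall()
print(history)  # [(1000, 2500, 'j.doe')]
```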

What Tools Can You Use to Design Your Database Schema?

Contemporary schema design tools emphasize collaboration, automation, and integration with modern development workflows while supporting diverse database technologies and deployment environments.

Open-Source Visual Design Platforms

Diagrams.net (formerly draw.io) is a versatile, free diagramming platform that stores diagrams in cloud storage services such as Google Drive and offers export formats compatible with development and documentation workflows. It has an extensible template library and supports many use cases, but it is a general-purpose tool: collaboration depends on the connected storage service, and it lacks built-in version control integration tailored for schema design.

DBML and dbdiagram.io represent the evolution toward code-first schema design where database structures are defined through markup language rather than visual editors. This approach enables version control, automated testing, and integration with continuous deployment pipelines while generating visual diagrams for communication with non-technical stakeholders.

Specialized Schema Modeling Tools

Anchor Modeler provides open-source tooling for temporal schema design that captures data history and evolution requirements. This browser-based tool generates schema structures optimized for data warehousing and analytics workloads where historical analysis and audit trails are essential.

erdantic and similar code-generation tools bridge the gap between application development and database design by generating entity-relationship diagrams from existing code; erdantic, for example, draws diagrams from Python data model classes such as Pydantic models and dataclasses. Because the diagram is derived from the code, the documentation stays synchronized with the actual implementation.

Enterprise Integration Platforms

Lucidchart provides enterprise-grade collaboration features including advanced sharing controls, integration with corporate identity systems, and administrative capabilities required for large organizations. Its template library includes modern schema patterns while supporting the governance and approval workflows common in enterprise environments.

DataVault4dbt and AutomateDV are open-source dbt packages that automate schema implementation by generating Data Vault 2.0 structures (hubs, links, and satellites) from metadata configuration. Because they run inside dbt projects, they fit continuous integration and deployment practices that align with software development best practices.

How Does Airbyte Simplify Database Schema Management?

Modern data integration challenges require sophisticated schema management capabilities that adapt to diverse source systems while maintaining consistency across destination databases. Airbyte addresses these challenges through comprehensive schema handling features that automate complex integration scenarios.

Airbyte provides flexible deployment for complete data sovereignty, allowing you to move data across cloud, on-premises, or hybrid environments with one convenient UI. With over 600 pre-built connectors plus an AI-assisted connector builder, Airbyte aims to cover the sources and destinations your database schema integration requires.

Automated Schema Evolution and Synchronization

Airbyte automatically detects schema changes in source systems before each synchronization cycle, ensuring data pipelines remain reliable as business requirements evolve. This proactive monitoring prevents pipeline failures while maintaining data quality through intelligent schema mapping and transformation capabilities that adapt to structural changes without manual intervention.
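The general idea behind pre-sync schema change detection can be sketched as a diff between the last-known and newly discovered column types, followed by a policy decision. This is a hypothetical illustration, not Airbyte's implementation or API; the `Policy` values only loosely mirror the kinds of choices described here (propagate everything, apply additive changes only, or pause for review).

```python
from enum import Enum


class Policy(Enum):
    PROPAGATE = "propagate"     # apply additive and destructive changes automatically
    ADDITIVE_ONLY = "additive"  # apply new columns, hold back removals and type changes
    PAUSE = "pause"             # apply nothing; stop and wait for review


def diff_schema(previous: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare column->type maps from the last sync and the current source discovery."""
    return {
        "added": sorted(set(current) - set(previous)),
        "removed": sorted(set(previous) - set(current)),
        "type_changed": sorted(
            c for c in set(previous) & set(current) if previous[c] != current[c]
        ),
    }


def plan_changes(diff: dict[str, list[str]], policy: Policy) -> list[str]:
    """Decide which detected changes to apply to the destination under a given policy."""
    if policy is Policy.PAUSE:
        return []
    actions = [f"add column {c}" for c in diff["added"]]
    if policy is Policy.PROPAGATE:
        actions += [f"drop column {c}" for c in diff["removed"]]
        actions += [f"alter type of {c}" for c in diff["type_changed"]]
    return actions


previous = {"id": "integer", "email": "string", "age": "integer"}
current = {"id": "integer", "email": "string", "age": "string", "country": "string"}
changes = diff_schema(previous, current)
print(changes)  # {'added': ['country'], 'removed': [], 'type_changed': ['age']}
print(plan_changes(changes, Policy.ADDITIVE_ONLY))  # ['add column country']
```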

The platform's schema evolution handling supports both additive and destructive changes through configurable policies that determine how new columns, deleted fields, and data type modifications are processed. This flexibility enables organizations to maintain data pipeline stability while supporting business agility requirements for rapid application development and deployment.

Airbyte delivers 99.9% uptime reliability with pipelines that "just work" so teams can focus on using data, not moving it. Built for modern data needs, Airbyte uses CDC methods and open data formats like Iceberg to preserve data context and enable AI-ready data movement of both structured and unstructured data.

Multi-Database Schema Harmonization

Organizations often need to integrate data from diverse database systems with different schema conventions, data types, and constraint patterns. Airbyte's extensive connector library includes sophisticated schema mapping capabilities that normalize these differences while preserving data integrity and business meaning across systems.

Airbyte's change data capture (CDC) capabilities make cross-database consistency more manageable by keeping destination systems synchronized with source changes, although enforcing referential integrity across multiple databases still requires additional architectural solutions. This supports architectures where master data spans multiple databases and replicas need to stay closely aligned.

The platform's open-source foundation lets you modify, extend, and customize it without vendor restrictions.

Enterprise-Grade Schema Governance

Airbyte incorporates schema governance features that support enterprise compliance and security requirements including field-level data masking, column-level encryption, and automated PII detection that protects sensitive information throughout the integration process. Audit trail capabilities track all schema modifications and data transformations, providing complete visibility into data pipeline evolution for compliance reporting and troubleshooting.

With developer-first experience through APIs, SDKs, and clear documentation, Airbyte handles the data plumbing so your team can focus on what matters: building products, not pipelines. Scale easily with capacity-based pricing that charges for performance and sync frequency, not data volume.

Conclusion

Database schema design has transformed from static structural planning into dynamic, intelligent architecture that enables business agility while ensuring data integrity and performance. Modern approaches integrate traditional normalization principles with contemporary requirements for scalability, flexibility, and automation, creating schemas that serve as strategic assets rather than technical constraints. The evolution toward AI-assisted design, cloud-native architectures, and event-driven patterns represents fundamental shifts in how organizations conceptualize and implement their data infrastructure. Organizations that master these modern practices achieve faster deployment cycles, reduced maintenance overhead, and improved data accessibility that drives competitive advantage through better decision-making capabilities.

FAQ

What Is the Most Important Factor in Database Schema Design?

Understanding your business domain and data usage patterns is the most critical factor in successful database schema design. This foundation ensures your schema aligns with organizational needs and supports anticipated growth while maintaining data integrity and performance requirements.

How Often Should You Update Your Database Schema?

Database schemas should evolve continuously through automated monitoring and planned migration cycles rather than infrequent major overhauls. Modern approaches use schema-as-code practices with version control and testing to support regular, low-risk updates that adapt to changing business requirements.

What Are the Key Differences Between Logical and Physical Schema Design?

Logical schema design focuses on business concepts, relationships, and data integrity rules independent of implementation details, while physical schema design addresses storage optimization, indexing strategies, and performance considerations specific to your database technology and deployment environment.

How Do You Handle Schema Changes in Production Databases?

Production schema changes require careful planning using techniques like expand-contract patterns, online schema migration tools, and automated rollback capabilities. Blue-green deployment strategies and feature flags enable gradual transition with minimal business impact while maintaining data consistency.

What Role Does Normalization Play in Modern Database Schema Design?

Normalization remains important for data integrity but modern approaches balance normal forms with performance requirements through strategic denormalization. The goal is maintaining data consistency while optimizing for specific access patterns and query performance needs.
