ETL vs Reverse ETL vs Data Activation

✨ AI Generated Summary

Modern data integration has evolved from traditional ETL, which consolidates and prepares data for analytics, to reverse ETL and data activation that enable real-time operational use of insights. Key distinctions include:

- ETL: Extracts, transforms, and loads raw data into warehouses for analytical readiness and compliance.

- Reverse ETL: Pushes refined insights from warehouses back into operational systems like CRMs for real-time personalization and decision-making.

- Data Activation: Integrates end-to-end data ingestion, advanced analytics, and operational execution to automate business outcomes.

Advancements in AI and streaming technologies enhance automation, real-time processing, and data quality, while privacy and governance frameworks ensure compliance across complex regulatory environments. Organizations benefit most from hybrid strategies aligned with their maturity, infrastructure, and business goals.

Data professionals face a fundamental challenge that has only intensified with the explosion of data sources and business applications: how to effectively move, transform, and activate data across increasingly complex technology ecosystems. While traditional ETL processes handle the foundational work of getting data into warehouses, the modern business reality demands more sophisticated approaches that can bridge the gap between analytical insights and operational execution.

This challenge has given rise to reverse ETL and data activation methodologies that transform static data repositories into dynamic engines of business value. Organizations today collect overwhelming amounts of data from customer interactions, operational systems, and external sources, yet many struggle to translate this information into meaningful business outcomes.

The traditional approach of storing data in warehouses for periodic analysis no longer meets the demands of real-time personalization, immediate customer response, and agile business operations. Understanding the distinctions between ETL, reverse ETL, and data activation becomes critical for organizations seeking to extract maximum value from their data investments while building scalable, efficient data operations.

The evolution from simple data movement to sophisticated data activation represents a fundamental shift in how organizations approach data strategy. Rather than viewing data integration as a one-way process from sources to warehouses, modern data architectures embrace bidirectional flows that ensure insights generated through analysis can immediately impact business operations. This transformation requires understanding not just the technical mechanisms of different integration approaches, but also their strategic implications for organizational agility and competitive advantage.

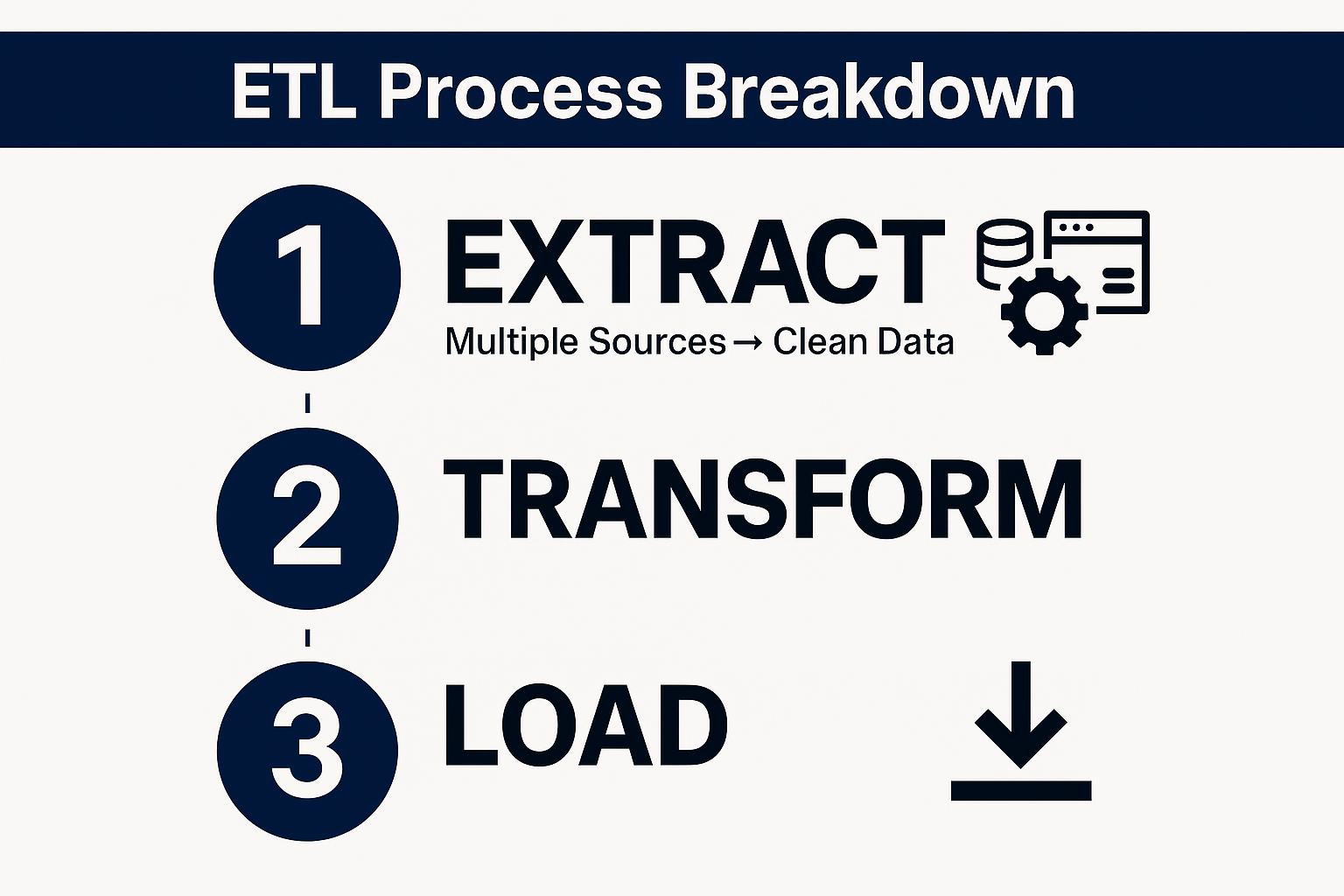

What Is ETL and How Does It Form the Foundation of Data Integration?

Extract, Transform, Load (ETL) is the foundational methodology for data integration. It has evolved from its origins in mainframe computing to become the backbone of modern data warehousing initiatives. This time-tested approach addresses the fundamental challenge of consolidating data from disparate sources into centralized repositories where it can be standardized, cleaned, and prepared for analytical workloads.

The ETL process provides the critical foundation upon which most organizational data strategies are built, establishing the single source of truth that enables consistent reporting and analysis across business functions.

1. Data Extraction

The extraction phase involves systematically pulling data from various source systems, including transactional databases, flat files, APIs, and streaming data sources. Modern extraction processes leverage sophisticated techniques such as change data capture (CDC) to identify and capture only the data that has changed since the last extraction.

Because only changed records move, this approach significantly reduces processing overhead and minimizes the load placed on operational systems. It also lets pipelines run more frequently, which keeps downstream data closer to real time.
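As a rough illustration of incremental extraction, the sketch below uses a timestamp watermark instead of log-based CDC, which reads the database's transaction log and is usually handled by dedicated tooling. The `customers` table, its `updated_at` column, and the function name are assumptions made for this example.

```python
import sqlite3

def extract_changed_rows(conn, last_watermark):
    """Return rows modified after last_watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, email, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark only if something changed
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

# Hypothetical source table used only for this sketch
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, updated_at TEXT)"
)
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "a@example.com", "2024-01-01T00:00:00"),
        (2, "b@example.com", "2024-01-02T00:00:00"),
        (3, "c@example.com", "2024-01-03T00:00:00"),
    ],
)
changed, watermark = extract_changed_rows(conn, "2024-01-01T12:00:00")
```

Persisting the returned watermark between runs is what makes each subsequent extraction pick up only new changes.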

2. Data Transformation

Transformation represents the most complex and business-critical phase of the ETL process, where raw data undergoes standardization, cleansing, validation, and enrichment to meet the requirements of target analytical systems. These transformations may include data type conversions, format standardization, business rule application, and data quality improvements.

The transformation phase ensures downstream analytical processes operate on reliable, consistent information. Business rules applied during transformation help maintain data integrity and support regulatory compliance requirements across different jurisdictions.
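The kinds of transformations described above can be sketched for a single record as follows. The field names, the accepted date format, and the validation rules are illustrative assumptions, not a prescribed standard.

```python
from datetime import datetime

def transform_order(raw):
    """Standardize, cleanse, and validate one raw order record."""
    # Cleansing: trim whitespace and normalize case
    email = raw["email"].strip().lower()
    if "@" not in email:
        raise ValueError(f"invalid email: {email!r}")
    # Type conversion: string amount to a float with two decimals
    amount = round(float(raw["amount"]), 2)
    # Business rule (assumed): order amounts cannot be negative
    if amount < 0:
        raise ValueError("amount must be non-negative")
    # Format standardization: accept DD/MM/YYYY, emit ISO 8601
    order_date = datetime.strptime(raw["order_date"], "%d/%m/%Y").date().isoformat()
    return {"email": email, "amount": amount, "order_date": order_date}
```

Rejecting bad records with an exception, rather than silently passing them through, keeps quality problems from reaching the warehouse.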

3. Data Loading

The loading phase completes the ETL cycle by efficiently writing transformed data to target systems such as data warehouses, data lakes, or analytical platforms. Modern loading processes must balance speed and reliability while handling large data volumes and maintaining referential integrity across complex data relationships.
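One common way to make loads both reliable and repeatable is a transactional upsert. The sketch below uses SQLite as a stand-in for a warehouse. The `dim_customer` table and its columns are hypothetical, and the `ON CONFLICT` clause assumes SQLite 3.24 or later.

```python
import sqlite3

def load_customers(conn, records):
    """Insert new rows and update existing ones in a single transaction."""
    with conn:  # commits on success, rolls back if any statement fails
        conn.executemany(
            "INSERT INTO dim_customer (id, email, lifetime_value) VALUES (?, ?, ?) "
            "ON CONFLICT(id) DO UPDATE SET "
            "email = excluded.email, lifetime_value = excluded.lifetime_value",
            records,
        )

# Hypothetical target table used only for this sketch
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE dim_customer (id INTEGER PRIMARY KEY, email TEXT, lifetime_value REAL)"
)
load_customers(warehouse, [(1, "a@example.com", 10.0), (2, "b@example.com", 20.0)])
load_customers(warehouse, [(2, "b@example.com", 25.0)])  # re-run updates, no duplicate
```

Because the load is idempotent, a failed batch can be retried without creating duplicate rows.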

Contemporary ETL implementations leverage cloud-native architectures that provide automatic scaling, improved reliability, and reduced operational overhead compared with traditional on-premises solutions. These platforms enable organizations to process growing data volumes without proportional increases in infrastructure management complexity.

What Is Reverse ETL and Why Has It Become Essential for Modern Data Operations?

Reverse ETL emerged as organizations recognized that their carefully curated data warehouses, while excellent for analysis, created a new form of data silo that prevented operational teams from accessing valuable insights for real-time decision-making.

This methodology addresses the critical gap between analytical insights and operational execution by systematically moving processed data from centralized repositories back to the operational systems where business teams interact with customers and manage daily operations.

1. Data Extraction From Warehouses

The extraction phase of reverse ETL begins by systematically retrieving transformed and enriched data from data warehouses or analytical platforms where it has already been processed and validated. Unlike traditional ETL that works with raw operational data, reverse ETL extracts refined data products representing the culmination of analytical processing, machine learning outputs, and business intelligence insights.

This approach leverages data that has already undergone rigorous quality control and enrichment processes, enabling organizations to activate high-value insights across operational systems without duplicating analytical workloads.

2. Operational Transformation

Transformation in reverse ETL involves adapting warehouse-optimized data structures to meet the specific requirements of diverse operational systems. This process must account for different data models, field requirements, and integration patterns across various platforms, including CRMs, marketing automation tools, customer service applications, and business dashboards.

The complexity often exceeds traditional ETL transformations because operational systems tend to be more rigid in their data requirements and less forgiving of format inconsistencies. Effective transformation ensures insights remain accurate and usable when deployed to frontline business applications.
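A minimal sketch of this reshaping step is shown below. The warehouse column names, the CRM field names, and the score thresholds are all hypothetical; a real CRM defines its own schema and constraints.

```python
# Hypothetical mapping from warehouse columns to CRM fields
FIELD_MAP = {
    "customer_email": "email",
    "ltv_usd": "lifetime_value",
    "churn_score": "churn_risk",
}

def to_crm_payload(row):
    """Keep only mapped, non-null fields and convert values to CRM-friendly forms."""
    payload = {
        crm_field: row[wh_col]
        for wh_col, crm_field in FIELD_MAP.items()
        if row.get(wh_col) is not None
    }
    # Assumed rule: the CRM stores churn risk as a label, not a raw score
    if "churn_risk" in payload:
        score = payload["churn_risk"]
        payload["churn_risk"] = (
            "High" if score >= 0.7 else "Medium" if score >= 0.3 else "Low"
        )
    return payload
```

Dropping unmapped columns and nulls up front avoids sending fields the target system would reject.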

3. Operational System Loading

The loading phase completes the reverse ETL cycle by synchronizing processed data with operational systems through various integration mechanisms, including APIs, file transfers, and database connections. This synchronization handles complex scenarios such as conflict resolution when data has been modified in both systems, incremental updates that maintain consistency without overwhelming target applications, and sophisticated error handling.

Modern reverse ETL platforms provide automated monitoring, intelligent retry mechanisms, and detailed logging that ensure operational systems remain functional even when synchronization challenges occur. This reliability is critical when customer-facing applications depend on timely data updates for personalization and decision support.
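The retry behavior mentioned above can be approximated with exponential backoff. In this sketch, `TransientSyncError`, the `send` callable, and the delay values are assumptions; real platforms typically add jitter, rate-limit awareness, and dead-letter handling.

```python
import time

class TransientSyncError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, rate limits)."""

def sync_with_retry(send, record, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call send(record), retrying transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return send(record)
        except TransientSyncError:
            if attempt == max_attempts:
                raise  # give up and surface the error to monitoring
            sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...
```

Passing `sleep` as a parameter keeps the function easy to test without real waiting.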

Reverse ETL eliminates bottlenecks associated with accessing insights locked inside analytics platforms. Sales teams can access enriched customer profiles directly in their CRM systems, while marketing teams receive behavioral insights in their automation platforms without requiring technical intervention.

What Is Data Activation and How Does It Transform Business Operations?

Data activation represents the strategic culmination of data management efforts, transforming processed information into actionable business outcomes through systematic integration with operational decision-making processes. Unlike pure data-movement approaches, data activation encompasses the entire journey from raw data collection through insight generation to operational implementation.

This comprehensive approach ensures organizations extract maximum value from their data investments through measurable business impact rather than simply moving data between systems.

1. Comprehensive Data Ingestion

Ingestion captures data from all relevant sources, including streaming and batch feeds, while maintaining lineage and governance. This phase establishes the foundation for downstream processing by ensuring data quality and completeness from the beginning of the pipeline.

Modern ingestion processes support both structured and unstructured data sources, enabling organizations to leverage diverse information types for comprehensive business insights.

2. Advanced Analytics and Insight Generation

Insight generation applies advanced analytics and machine-learning models to produce actionable insights, predictions, and enhanced data products. This phase transforms raw information into business intelligence that can drive automated decision-making processes.

Organizations leverage this phase to create predictive models, customer segmentation algorithms, and anomaly detection systems that support proactive business operations.
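To make the idea of a segmentation output concrete, the sketch below assigns a segment with simple hand-written rules. It is a rule-based stand-in, not a trained model, and the thresholds and segment names are invented for illustration.

```python
def segment_customer(days_since_last_order, orders_last_year, total_spend):
    """Assign a coarse segment from recency, frequency, and spend."""
    if days_since_last_order > 180:
        return "at_risk"
    if orders_last_year >= 12 and total_spend >= 1000:
        return "champion"
    if orders_last_year >= 3:
        return "loyal"
    return "new_or_occasional"
```

The resulting label is the kind of derived attribute that later phases push into operational systems.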

3. Operational Integration and Execution

Execution integrates those insights into operational systems for automated decision-making, real-time personalization, and predictive alerting. This phase bridges the gap between analytical insights and business actions, enabling organizations to respond immediately to changing conditions.

The execution phase supports various integration patterns, from simple data synchronization to complex workflow automation that spans multiple operational systems.

4. Continuous Optimization and Measurement

Measurement and optimization continuously evaluate business outcomes and refine workflows to maximize impact. This phase ensures data activation initiatives deliver measurable business value while identifying opportunities for improvement.

Data activation platforms differentiate themselves by offering end-to-end orchestration, automated ML deployment, and real-time decision engines with feedback loops that tie data activity directly to business KPIs.
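One simple way to tie an activation workflow to a KPI is relative conversion lift against a control group. The function below is a minimal sketch under that assumption. It does not test statistical significance, which a real evaluation would need.

```python
def conversion_lift(control_conversions, control_size, treated_conversions, treated_size):
    """Relative lift of the treated group's conversion rate over the control group's."""
    control_rate = control_conversions / control_size
    treated_rate = treated_conversions / treated_size
    if control_rate == 0:
        raise ValueError("control conversion rate is zero; relative lift is undefined")
    return (treated_rate - control_rate) / control_rate
```

For example, 50 conversions out of 1,000 in the control group versus 60 out of 1,000 in the treated group corresponds to a lift of roughly 20 percent.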

How Do ETL vs Reverse ETL Differ in Their Use Cases and Business Applications?

Understanding when to apply ETL versus reverse ETL requires examining the specific business outcomes each methodology supports and the organizational contexts where each approach delivers maximum value.

Traditional ETL Applications and Strategic Value

Data warehousing for consolidated analytics and reporting represents the primary use case for traditional ETL processes. Organizations leverage ETL to create comprehensive historical datasets that support complex analytical workloads and cross-functional reporting requirements.

Business intelligence requiring clean, historical data across functions benefits significantly from ETL's focus on data quality and standardization. This enables consistent metrics and reporting across different business units and time periods.

Regulatory compliance demanding detailed lineage and auditable processes makes ETL essential for organizations operating in highly regulated industries. The systematic approach to data transformation and loading provides the documentation and traceability required for compliance audits.

Reverse ETL Applications and Operational Impact

Operational analytics that feeds insights back to CRMs, MAPs, and support tools represents the core application for reverse ETL methodologies. This enables business teams to leverage analytical insights without requiring specialized analytics tools or technical expertise.

Customer-experience enhancement through enriched profiles and proactive service becomes possible when analytical insights reach customer-facing systems in real time. Sales and support teams can access complete customer context during interactions.

Real-time personalization for marketing, e-commerce, and product experiences leverages reverse ETL to deliver behavioral insights and predictive analytics directly to systems that interact with customers.

Strategic Considerations for Implementation

Mature analytics programs often expand into reverse ETL to operationalize insights after establishing a strong data foundation and analytical capabilities. This progression ensures organizations have reliable insights worth distributing before investing in operational integration.

Organizations still building foundations focus first on ETL to establish data quality and governance before attempting to distribute insights to operational systems. This approach prevents the propagation of data quality issues across operational processes.

What Key Differences Distinguish ETL, Reverse ETL, and Data Activation Approaches?

The fundamental distinction between these approaches lies in their primary objectives and target outcomes. ETL focuses on creating reliable analytical foundations, reverse ETL emphasizes operational insight delivery, and data activation encompasses the entire value chain from raw data to business impact.

Organizations often implement these approaches in combination rather than choosing a single methodology, with each serving different aspects of their overall data strategy.

How Are AI and Streaming Technologies Transforming Modern Data Integration?

Artificial intelligence and streaming technologies are fundamentally reshaping how organizations approach data integration, moving beyond traditional batch processing toward intelligent, continuous data flows that adapt automatically to changing business requirements.

AI-powered pipeline generation uses generative AI to create mappings and transformations from natural-language prompts. This can reduce the technical expertise required to implement complex data integration workflows and shorten development time, although generated logic still needs review before it runs in production.

Self-healing pipelines leverage ML models to detect schema changes and adapt transformations automatically. Organizations can maintain data flow continuity without manual intervention even when source systems undergo updates or modifications.
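The detection half of this idea can be sketched without any ML at all: compare the schema a pipeline expects with the one it observes. The function below is a deterministic stand-in for that step, with schemas represented as hypothetical column-to-type dictionaries.

```python
def diff_schema(expected, observed):
    """Report columns that were added, removed, or changed type."""
    added = {col: typ for col, typ in observed.items() if col not in expected}
    removed = sorted(col for col in expected if col not in observed)
    retyped = {
        col: (expected[col], observed[col])
        for col in expected
        if col in observed and expected[col] != observed[col]
    }
    return {"added": added, "removed": removed, "retyped": retyped}
```

A pipeline could, for instance, propagate added columns automatically while pausing for review on removed or retyped ones.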

Real-Time Processing Capabilities

Streaming ETL and CDC support continuous processing for real-time analytics and alerting. Modern data integration platforms process data as it arrives rather than waiting for scheduled batch jobs, enabling immediate response to business events and customer interactions.

Event-driven architectures treat each data change as a business event, enabling immediate action. This approach transforms data integration from a background process into an active component of business operations that can trigger automated responses and workflows.
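The pattern can be illustrated with a tiny in-process dispatcher. This is only a sketch: the event names and handler are hypothetical, and a production system would normally sit on a message broker or streaming platform.

```python
from collections import defaultdict

# Registry of handler functions keyed by event type
handlers = defaultdict(list)

def on(event_type):
    """Decorator that registers a handler for an event type."""
    def register(fn):
        handlers[event_type].append(fn)
        return fn
    return register

def publish(event):
    """Run every handler registered for the event's type and collect results."""
    return [fn(event) for fn in handlers.get(event["type"], [])]

@on("order.cancelled")
def open_retention_task(event):
    # Hypothetical business reaction to a data change
    return f"retention task for customer {event['customer_id']}"
```

Each data change becomes an event that can trigger one or more downstream actions as soon as it is published.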

Intelligent Quality Management

AI-based data-quality monitoring learns normal patterns and flags anomalies proactively. Machine learning algorithms can detect data quality issues before they impact downstream processes, reducing the need for manual data validation and improving overall pipeline reliability.
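A very simple statistical version of "learning normal patterns" is a z-score check against recent history. The sketch below applies it to a hypothetical metric such as daily row counts. The threshold of 3 standard deviations is an assumption, and real monitoring tools use richer models that account for seasonality and trend.

```python
from statistics import mean, stdev

def is_anomalous(history, value, threshold=3.0):
    """Flag value if it lies more than `threshold` standard deviations from the mean."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return value != mu  # any deviation from a constant history is unusual
    return abs(value - mu) / sigma > threshold

# Hypothetical daily row counts for one table
row_counts = [1000, 1020, 980, 1010, 990]
```

With this history, a day with 400 rows would be flagged, while a day with 1,015 rows would not.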

These technologies enable organizations to build more resilient and responsive data integration capabilities that adapt to changing business needs without requiring constant manual adjustment.

What Privacy and Governance Challenges Must Modern Data Integration Address?

Modern data integration faces increasingly complex privacy and governance requirements that span multiple jurisdictions and regulatory frameworks. Organizations must balance data accessibility for business operations with strict privacy protection and compliance requirements.

Data anonymization and differential privacy protect identities while preserving insight value. These techniques enable organizations to leverage data for analytical purposes while meeting privacy requirements and limiting the harm if data is exposed through a breach or unauthorized access.
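As one concrete instance of differential privacy, the Laplace mechanism adds calibrated noise to an aggregate before it is released. The sketch below applies it to a counting query, whose sensitivity is 1. The function names are assumptions for this example, and production use should rely on a vetted privacy library.

```python
import random

def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(true_count, epsilon, rng=random):
    """Release a count with epsilon-differential privacy via the Laplace mechanism."""
    # A counting query changes by at most 1 per individual, so scale = 1 / epsilon
    return true_count + laplace_noise(1 / epsilon, rng)
```

Smaller values of `epsilon` add more noise, which strengthens privacy at the cost of accuracy.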

Consent management enforces individual preferences across all workflows, ensuring organizations respect customer privacy choices throughout their data processing activities. This requires sophisticated tracking and enforcement mechanisms that span multiple systems and processes.
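At its simplest, enforcement means filtering records by the purposes each individual has agreed to before data leaves a pipeline. The sketch below assumes a hypothetical consent lookup keyed by customer ID and denies by default when no consent is recorded.

```python
def filter_by_consent(records, consents, purpose):
    """Keep only records whose owner has consented to the given purpose."""
    return [
        record
        for record in records
        # Default deny: customers with no consent entry are excluded
        if purpose in consents.get(record["customer_id"], set())
    ]
```

Applying the same check in every workflow, rather than in one system only, is what keeps preferences consistent end to end.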

Regulatory Compliance Framework

Regulatory compliance, including GDPR, CCPA, HIPAA, and AI-focused laws, requires lineage, auditability, and policy enforcement capabilities that extend across all data integration processes. Organizations must maintain detailed records of data movement and transformation while implementing automated policy enforcement.

Data sovereignty requirements keep data within the jurisdictions where that is mandated. Techniques like federated analytics help by enabling insights without centralizing sensitive data. This approach becomes increasingly important as organizations operate across multiple jurisdictions with different data localization requirements.

Advanced Access Control Systems

Attribute-based access control (ABAC) provides fine-grained, context-aware permissions that adapt to user roles, data sensitivity, and business context. This enables organizations to maintain security while enabling appropriate data access for business operations.
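An ABAC decision combines attributes of the user, the resource, and the request context. The policy below is a deliberately small sketch. The attribute names and rules are invented for illustration, and real deployments usually express policies in a dedicated policy engine.

```python
def is_allowed(user, resource, context):
    """Evaluate a small attribute-based policy and return True if access is granted."""
    if resource["sensitivity"] == "public":
        return True
    # Non-public data is limited to the owning department
    if user["department"] != resource["owner_department"]:
        return False
    # Restricted data additionally requires high clearance and a corporate network
    if resource["sensitivity"] == "restricted":
        return user["clearance"] == "high" and context["network"] == "corporate"
    return True
```

Because the decision depends on context, the same user can be allowed from the corporate network and denied from elsewhere.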

Modern governance frameworks must support dynamic policy enforcement that adapts to changing regulatory requirements and business needs without requiring manual policy updates across multiple systems.

How Should You Choose Between ETL, Reverse ETL, and Data Activation Approaches?

Selecting the appropriate data integration approach requires careful assessment of organizational capabilities, business requirements, and strategic objectives. Most organizations benefit from implementing multiple approaches that serve different aspects of their data strategy rather than choosing a single methodology.

1. Organizational Readiness Assessment

Assess organizational readiness, including data maturity, infrastructure, and skills. Organizations with limited data engineering capabilities may benefit from starting with managed ETL solutions before progressing to more complex reverse ETL or data activation implementations.

Technical infrastructure evaluation should consider existing systems, cloud readiness, and integration capabilities. Organizations with mature cloud infrastructure can leverage advanced streaming and real-time processing capabilities more effectively.

2. Business Outcome Alignment

Align with business outcomes by evaluating analytics depth versus operational immediacy requirements. Organizations focused on historical analysis and reporting may prioritize ETL, while those emphasizing customer experience and operational responsiveness may benefit more from reverse ETL or data activation.

Consider the balance between insight generation and insight operationalization in your data strategy. Some organizations require deep analytical capabilities before operational integration, while others benefit from immediate operational improvements even with basic analytics.

3. Implementation Strategy Development

Adopt a phased strategy that starts with high-value, low-risk use cases and expands based on success and learning. This approach reduces implementation risk while building organizational capability and confidence in data integration technologies.

Plan resources and change management to account for implementation, maintenance, and cultural impact. Successful data integration initiatives require not just technical implementation but also organizational change management and training.

4. Platform Evaluation Criteria

Evaluate platforms holistically, considering capabilities, ecosystem fit, scalability, and total cost of ownership. Consider how different approaches integrate with existing technology investments and support long-term strategic objectives.

Modern data integration platforms like Airbyte provide comprehensive capabilities spanning ETL, reverse ETL, and data activation use cases. With 600+ connectors and flexible deployment options including cloud, hybrid, and on-premises environments, Airbyte enables organizations to implement unified data integration strategies without vendor lock-in while maintaining enterprise-grade security and governance capabilities.

Conclusion

The choice between different data integration approaches ultimately depends on your organization's current capabilities, strategic objectives, and resource constraints. Most successful organizations implement hybrid approaches that leverage the strengths of multiple methodologies while building toward comprehensive data activation capabilities that drive measurable business outcomes.

Frequently Asked Questions

When should I choose ETL over reverse ETL for my data integration needs?

Choose ETL when your main goal is building a centralized, high-quality data foundation for analytics, BI, and regulatory reporting.

How can reverse ETL improve my customer experience and operational efficiency?

By pushing analytical insights into operational tools, reverse ETL enables personalized campaigns, enriched lead data, and proactive service.

What technical expertise is required to implement data activation successfully?

Successful data activation requires a mix of data engineering, business analysis, and systems integration skills, though modern platforms reduce the heavy lifting through automation.

How do I measure the ROI and business impact of different data integration approaches?

Track cost savings (manual effort, maintenance) and revenue gains (conversion lift, faster insights). Establish baselines and compare post-implementation metrics.

What security and compliance considerations are most important for modern data integration?

End-to-end encryption, automated data classification, granular access controls, comprehensive audit logging, and adherence to privacy regulations across jurisdictions.
