How to Build a Private LLM: A Complete Guide

✨ AI Generated Summary

Private large language models (LLMs) offer enterprises enhanced data control, security, and customization, addressing challenges like regulatory compliance and integration complexities. Key considerations include:

- Choosing appropriate architectures (autoregressive, autoencoding, encoder-decoder) and deployment models (open-source vs proprietary).

- Implementing robust security measures such as Trusted Execution Environments, encryption, and federated learning for privacy-preserving training.

- Leveraging tools like Hugging Face and Airbyte for streamlined development, data integration, and fine-tuning.

- Addressing infrastructure, operational complexity, and cost management to ensure sustainable, compliant, and high-performance private LLM deployments.

Data teams implementing private LLMs face a critical paradox: while these models promise unprecedented data control and security, organizations struggle with complex deployment challenges, regulatory compliance gaps, and integration barriers that can undermine their effectiveness. As enterprises increasingly demand AI solutions that maintain data sovereignty while delivering business value, understanding how to build and deploy private LLMs effectively has become essential for data professionals navigating this evolving landscape.

Private large language models represent a transformative approach to enterprise AI, enabling organizations to harness powerful language capabilities while maintaining complete control over their data and intellectual property. This comprehensive guide explores the technical foundations, implementation strategies, and advanced methodologies needed to successfully build, deploy, and maintain private LLMs in enterprise environments.

What Are Large Language Models and How Do They Function?

Large language models are advanced AI systems that analyze, understand, and generate text by processing billions of words to learn linguistic patterns and context. These sophisticated neural networks leverage transformer architectures to handle complex language tasks including question answering, summarization, translation, and conversation generation.

A crucial component in LLM functionality is tokenization, which breaks text into manageable units called tokens. These tokens can represent words, sub-words, or characters, allowing the model to process language efficiently while maintaining semantic understanding across diverse contexts.

LLMs utilize deep-learning neural networks trained on massive datasets to develop comprehensive language understanding. Popular architectures include GPT (Generative Pre-trained Transformer) models that excel at text generation and BERT (Bidirectional Encoder Representations from Transformers) models optimized for language comprehension tasks.

The power of LLMs lies in their ability to generate coherent, contextually appropriate responses by predicting likely word sequences based on learned patterns. This capability enables applications ranging from automated customer service to complex document analysis and content creation workflows.

What Are the Different Types of Large Language Models Available?

LLMs can be categorized across multiple dimensions including architectural design, availability models, and domain specialization. Understanding these distinctions helps organizations select appropriate models for their specific use cases and deployment requirements.

1. Architecture-Based Classification

Autoregressive LLMs predict the next word in a sequence, making them ideal for text generation tasks. Models like GPT excel at creating coherent, contextually appropriate content by leveraging previous tokens to inform subsequent predictions.
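
To make next-token prediction concrete, the sketch below uses a toy bigram counter in place of a neural network. It is only an illustration of the autoregressive loop (predict, append, repeat); the corpus and the function names `train_bigram` and `generate` are hypothetical and not taken from any library.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, max_new_tokens=5):
    """Greedy decoding: repeatedly append the most frequent follower."""
    out = [start]
    for _ in range(max_new_tokens):
        followers = counts.get(out[-1])
        if not followers:
            break  # no continuation was observed for this token
        out.append(followers.most_common(1)[0][0])
    return out

# Hypothetical toy corpus, split on whitespace
corpus = "the model reads the prompt and the model writes the answer".split()
counts = train_bigram(corpus)
print(generate(counts, "the", max_new_tokens=1))
```

A real autoregressive LLM replaces the count table with a transformer that conditions on the whole preceding context, but the generation loop has the same shape.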

Autoencoding LLMs learn by reconstructing masked or corrupted input tokens, developing a deep understanding of sentence structure and semantic relationships. BERT exemplifies this approach: it predicts masked tokens using context from both directions, which suits comprehension-focused applications such as classification and extraction.

Encoder-Decoder LLMs utilize separate components for input processing and output generation, making them particularly effective for sequence-to-sequence tasks. Models like T5 demonstrate strong performance in translation, summarization, and structured text transformation applications.

2. Availability and Access Models

Open-Source LLMs provide publicly available code and model weights, enabling organizations to modify, customize, and deploy models according to their specific requirements. Examples include GPT-2, BLOOM, and various community-developed models that prioritize transparency and customization.

Proprietary LLMs are maintained by companies and accessed through paid APIs or licensed deployments. These models, such as GPT-4 and Google's Gemini (formerly Bard), often incorporate the latest advancements but limit customization and control over deployment environments.

3. Domain-Specific Specialization

Domain-specific LLMs are trained on vertical-specific datasets to master specialized terminology, concepts, and contextual nuances. These models demonstrate superior performance in their target domains compared to general-purpose alternatives.

Medical LLMs trained on clinical literature, research papers, and healthcare documentation can assist with diagnostic support, treatment recommendations, and medical research applications. Legal LLMs develop expertise in contract analysis, regulatory compliance, and legal document generation. Financial LLMs specialize in market analysis, risk assessment, and regulatory reporting within financial services contexts.

Why Do Organizations Need Private Large Language Models?

Organizations across industries are recognizing that private LLMs address fundamental limitations of public AI services while providing strategic advantages that generic solutions cannot match. The shift toward private implementations reflects growing awareness of data sovereignty, competitive differentiation, and operational control requirements.

Data Security and Privacy Protection represents the primary driver for private LLM adoption. Organizations handling sensitive information require complete control over data processing, storage, and access patterns. Private LLMs keep proprietary data within organizational boundaries, which removes the exposure that comes with sending data to third-party processors and shared cloud services.

Regulatory Compliance Requirements demand that organizations in regulated industries maintain strict control over AI processing workflows. GDPR, HIPAA, SOX, and industry-specific regulations require documented data lineage, processing transparency, and audit trails that public LLM services cannot adequately provide. Private implementations enable organizations to embed compliance controls directly into AI workflows.

Customization and Performance Optimization allow organizations to fine-tune models on proprietary datasets, terminology, and business processes. This specialization yields significantly higher accuracy and relevance compared to generic models, particularly for domain-specific applications requiring deep understanding of organizational context and specialized knowledge.

Intellectual Property Protection becomes critical when AI applications process competitive intelligence, proprietary research, or strategic information. Private LLMs ensure that valuable insights, methodologies, and business intelligence remain within organizational control while preventing inadvertent disclosure through model training or inference processes.

Operational Independence reduces dependency on external services that may experience outages, pricing changes, or policy modifications that disrupt business operations. Private LLMs provide predictable costs, consistent availability, and long-term operational stability essential for mission-critical applications.

How Do You Build Your Own Private Large Language Model?

Building a private LLM requires systematic planning, technical expertise, and strategic decision-making across multiple dimensions. The process involves both technical implementation and organizational considerations that determine long-term success.

1. Define Clear Objectives and Success Criteria

Establish specific use cases that justify private LLM development, such as internal knowledge management, automated customer service, regulatory document analysis, or proprietary research assistance. Clear objectives guide architectural decisions, training data requirements, and performance evaluation criteria throughout the development process.

Document success metrics including accuracy thresholds, response latency requirements, compliance standards, and business impact measurements. These criteria inform resource allocation decisions and help stakeholders evaluate return on investment throughout the implementation process.

2. Select Appropriate Model Architecture

Choose a decoder-only (autoregressive), encoder-only (autoencoding), or encoder-decoder transformer architecture based on the target task, scalability requirements, and latency constraints. Consider computational resources, deployment environments, and maintenance capabilities when selecting a base model.

Evaluate trade-offs between model size and performance, balancing accuracy requirements against infrastructure costs and operational complexity. Smaller models may provide adequate performance for specific use cases while reducing deployment and maintenance overhead.
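
The size trade-off can be estimated with simple arithmetic before any hardware is purchased. The sketch below computes the memory needed to hold model weights alone at several numeric precisions. The 7-billion-parameter model is a hypothetical example, and the estimate deliberately excludes activations, the key-value cache, and optimizer state, all of which add to the real footprint.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for the weights only, in GB (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9

# Bytes per parameter for common numeric formats
PRECISIONS = {"fp32": 4, "fp16/bf16": 2, "int8": 1, "int4": 0.5}

NUM_PARAMS = 7e9  # hypothetical 7-billion-parameter model
for name, bytes_per_param in PRECISIONS.items():
    print(f"{name:>10}: {weight_memory_gb(NUM_PARAMS, bytes_per_param):.1f} GB")
```

Training typically needs several times the weight memory because gradients and optimizer state are stored alongside the weights, so treat these numbers as a lower bound for inference only.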

3. Implement Comprehensive Data Collection and Preprocessing

Gather domain-specific training data from internal sources, external repositories, and curated datasets relevant to organizational use cases. Ensure data quality through systematic cleaning, deduplication, and format standardization processes that eliminate noise and inconsistencies.
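
As a minimal sketch of the cleaning and deduplication step, the code below normalizes Unicode and whitespace and then drops exact duplicates by hashing. The function names are hypothetical, and exact-match hashing is an assumption made for brevity; production pipelines often add near-duplicate detection as well.

```python
import hashlib
import re
import unicodedata

def normalize(text: str) -> str:
    """Apply Unicode NFKC normalization and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()

def deduplicate(docs):
    """Return cleaned documents, dropping empties and case-insensitive exact duplicates."""
    seen = set()
    unique = []
    for doc in docs:
        cleaned = normalize(doc)
        if not cleaned:
            continue
        digest = hashlib.sha256(cleaned.lower().encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(cleaned)
    return unique

# Hypothetical sample records
raw = ["Hello   world", "hello world ", "", "Quarterly report\n2024"]
print(deduplicate(raw))
```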

Apply tokenization strategies appropriate for your data characteristics and model architecture. Techniques like Byte-Pair Encoding or SentencePiece enable efficient text processing while preserving semantic meaning across diverse content types and languages.
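
To show the idea behind Byte-Pair Encoding, here is a toy merge-learning loop: it starts from characters and repeatedly merges the most frequent adjacent pair. This is a simplified teaching sketch with hypothetical function names, not the implementation used by SentencePiece or any specific tokenizer library.

```python
from collections import Counter

def get_pair_counts(words):
    """words maps a tuple of symbols to its frequency in the corpus."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs

def merge_pair(words, pair):
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merges from a whitespace-split corpus."""
    words = dict(Counter(tuple(word) for word in corpus.split()))
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(words)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties resolve to the first pair seen
        merges.append(best)
        words = merge_pair(words, best)
    return merges, words

merges, vocab = learn_bpe("low low low lower", num_merges=2)
print(merges)
print(vocab)
```

Frequent character sequences become single tokens after enough merges, while rare words remain split into smaller pieces, which is how sub-word tokenizers handle unseen vocabulary.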

4. Execute Strategic Model Training Approaches

Fine-tuning adapts pre-trained models using organizational data, significantly reducing computational requirements compared to training from scratch. Training from scratch provides maximum customization but requires substantial computational resources and extensive datasets.

Implement advanced techniques including curriculum learning, weight decay, and distributed training strategies to optimize model performance and training efficiency. Monitor training progress through validation metrics and adjust hyperparameters to prevent overfitting and ensure generalization.
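
One common way to act on validation metrics is early stopping. The text above does not name it, so treat it as an assumed technique. The sketch below scans a list of per-epoch validation losses and reports the epoch at which training would halt; the `patience` and `min_delta` parameters and the loss values are illustrative.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return the epoch index at which training would stop, or None.

    Training stops once the validation loss has failed to improve on the
    best value by more than `min_delta` for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None

# Hypothetical validation losses, one per epoch
losses = [1.00, 0.80, 0.70, 0.75, 0.72, 0.71]
print(early_stopping_epoch(losses, patience=2))
```

In practice the checkpoint from the best epoch, not the last one, is the one kept for deployment.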

5. Establish Robust Security and Access Controls

Apply comprehensive security measures including encryption for data in transit and at rest, role-based access controls, and audit logging for all model interactions. Implement network segmentation and secure deployment practices that protect models and training data from unauthorized access.

Design authentication and authorization systems that integrate with organizational identity management while providing granular control over model access and usage patterns.
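
A minimal sketch of role-based access control with audit logging might look like the following. The role names, permission sets, and the in-memory `audit_log` list are all hypothetical; a real deployment would delegate identity to the organization's identity provider and write to tamper-resistant log storage.

```python
import json
import time

# Hypothetical role-to-permission mapping
ROLE_PERMISSIONS = {
    "analyst": {"query"},
    "ml_engineer": {"query", "fine_tune"},
    "admin": {"query", "fine_tune", "manage_access"},
}

audit_log = []  # stand-in for durable, append-only log storage

def authorize(user: str, role: str, action: str) -> bool:
    """Check the action against the role and record the decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append(json.dumps({
        "ts": time.time(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    }))
    return allowed

print(authorize("dana", "analyst", "query"))
print(authorize("dana", "analyst", "fine_tune"))
print(len(audit_log), "audit entries recorded")
```

Logging denied requests as well as allowed ones matters here, because repeated denials are often the first sign of misuse.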

6. Implement Continuous Monitoring and Governance

Deploy monitoring systems that track model performance, detect drift, and identify potential bias or security issues. Establish automated alerts for performance degradation, unusual usage patterns, or compliance violations that require immediate attention.
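
Drift detection can be sketched with the population stability index (PSI), which compares the distribution of a monitored score between a baseline window and a recent window. PSI is one of several possible drift statistics; the choice of metric, the bin edges, the sample scores, and the 0.2 alert threshold are all assumptions made for illustration.

```python
import math

def bin_shares(values, edges):
    """Fraction of values per bin. Bins are [edges[i], edges[i+1]), except
    the last bin, which also includes its right edge. Values outside the
    edges are ignored."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the bin edges")
    return [c / total for c in counts]

def psi(baseline, recent, edges, eps=1e-6):
    """Population stability index between two samples of a score."""
    expected = bin_shares(baseline, edges)
    actual = bin_shares(recent, edges)
    score = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        score += (a - e) * math.log(a / e)
    return score

EDGES = [0.0, 0.25, 0.5, 0.75, 1.0]
ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb threshold

# Hypothetical model confidence scores
baseline_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
recent_scores = [0.8, 0.85, 0.9, 0.95, 0.9, 0.7]

value = psi(baseline_scores, recent_scores, EDGES)
if value > ALERT_THRESHOLD:
    print(f"drift alert: PSI={value:.2f}")
```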

Conduct regular audits of model outputs, training data, and operational processes to ensure continued compliance with regulatory requirements and organizational policies.

7. Provide User Education and Ethical Usage Guidelines

Develop comprehensive documentation covering model capabilities, limitations, and appropriate usage patterns. Train users on ethical AI principles, bias awareness, and organizational policies governing AI tool usage.

What Are Advanced Security Architectures for Private LLM Inference?

Modern private LLM deployments require sophisticated security architectures that protect sensitive data throughout the entire inference pipeline. Advanced security frameworks combine hardware-based protection, cryptographic techniques, and architectural innovations to create comprehensive defense-in-depth strategies.

Trusted Execution Environments and Confidential Computing

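Trusted Execution Environments (TEEs) provide hardware-enforced security by isolating computation within secure enclaves or confidential virtual machines. Data and model weights stay encrypted in memory and are decrypted only inside the protected environment, so the host operating system, hypervisor, and cloud operator cannot read them in plaintext.

NVIDIA's H100 Confidential Computing, Intel TDX, and AMD SEV are examples of confidential-computing technologies for server-class LLM inference, while ARM TrustZone targets mobile and embedded devices. Performance overhead depends on the workload and configuration, so measure it on representative traffic before committing to a design.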

Hybrid Deployment Models for Optimal Security and Performance

Organizations increasingly adopt hybrid architectures including edge-to-cloud, split computation, federated learning hybrids, and multi-cloud strategies to balance security requirements with operational efficiency while complying with regulatory mandates.

How Can You Implement Federated Learning and Privacy-Preserving Training Methods?

Advanced training methodologies enable organizations to develop sophisticated private LLMs while addressing data privacy constraints and enabling collaborative learning across organizational boundaries.

Federated Fine-Tuning for Collaborative Model Development

Federated learning distributes model training across multiple devices or servers while keeping training data localized, addressing fundamental privacy challenges in centralized LLM development.

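Parameter-efficient fine-tuning techniques such as LoRA and adapters reduce the number of parameters each participant must exchange. Combined with gradient aggregation strategies and subset participation protocols, in which only some participants train in each round, they improve communication efficiency, convergence stability, and fairness.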
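
One widely used aggregation strategy is federated averaging (FedAvg), in which a coordinator averages client parameters weighted by each client's number of training examples. The text does not specify an aggregation scheme, so FedAvg is an assumption here, and the flat lists of floats stand in for real parameter tensors such as LoRA adapter weights.

```python
def fed_avg(client_updates):
    """Example-weighted average of client parameter vectors.

    client_updates: list of (weights, num_examples) pairs, where every
    `weights` list has the same length. Raw training data never appears
    here; only parameters and example counts are shared.
    """
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("clients must report at least one example in total")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must send vectors of equal length")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged

# Hypothetical round with two participants
updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
print(fed_avg(updates))
```

Weighting by example count keeps a participant with very little data from pulling the shared model as hard as one with a large dataset.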

Differential Privacy for Synthetic Data Generation

Differential privacy enables organizations to generate training datasets that preserve statistical properties of original data while providing mathematical guarantees about individual privacy protection.

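Techniques such as adaptive allocation of the privacy budget (epsilon), privacy-aware token aggregation, and quality-privacy optimization balance privacy guarantees with model utility, supporting regulated industries such as healthcare, finance, and legal services. A smaller epsilon gives a stronger privacy guarantee at the cost of noisier output.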
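
The role of epsilon is easiest to see in the Laplace mechanism, a basic building block of differential privacy. The sketch below applies it to a counting query rather than to full synthetic-data generation, which is considerably more involved. The function names and sample records are hypothetical, and the code is illustrative only: production systems should rely on a vetted differential-privacy library, since naive floating-point noise has known weaknesses.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials
    that each have mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of records matching `predicate`.

    Adding or removing one record changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical records: ages of 100 individuals
ages = list(range(100))
rng = random.Random(0)
for eps in (0.1, 1.0, 10.0):
    noisy = private_count(ages, lambda age: age >= 65, eps, rng)
    print(f"epsilon={eps}: noisy count = {noisy:.2f}")
```

Lower epsilon values produce wider noise, which is the privacy-utility trade-off the techniques above try to manage.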

How Can You Build a Private LLM Using Airbyte?

Airbyte's open-source data-integration platform simplifies collecting, transforming, and preparing diverse data sources for private LLM development while ensuring data security and governance.

600+ pre-built connectors, a no-code connector builder, and PyAirbyte integration streamline data ingestion into ML workflows. Built-in chunking, embedding, and indexing accelerate retrieval-augmented generation (RAG) and semantic-search use cases.
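
To illustrate what chunking does before embedding, the sketch below splits text into overlapping word windows. It is a generic illustration and not Airbyte's implementation; the function name, the word-based splitting, and the default sizes are assumptions, and real pipelines usually measure chunks in tokens rather than words.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into word windows of `chunk_size` that overlap by `overlap`."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the final window already reaches the end of the text
    return chunks

# Hypothetical document made of numbered words
sample = " ".join(str(i) for i in range(10))
for chunk in chunk_text(sample, chunk_size=4, overlap=2):
    print(chunk)
```

The overlap keeps a sentence that straddles a boundary retrievable from at least one chunk, at the cost of storing some text twice.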

Change Data Capture (CDC) is supported across all deployment types. Features such as encryption, RBAC, and comprehensive audit logging are natively available in Airbyte Cloud; on-prem and hybrid deployments require external setup and management of these features to meet enterprise-grade security and compliance needs.

Is Retrieval-Augmented Generation Different from a Private LLM?

Yes. The two address different concerns, and they can be combined.

Retrieval-Augmented Generation (RAG) couples a language model with external retrieval systems to provide up-to-date, factual information at inference time. A private LLM used without retrieval holds its knowledge in model parameters and requires retraining or fine-tuning to incorporate new information.

RAG offers fresher information and granular access controls over the documents it retrieves, while a standalone private LLM provides self-contained knowledge with simpler inference infrastructure.
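
The retrieval step can be sketched with a toy bag-of-words similarity search that selects context and builds a prompt. Real systems use learned embeddings and a vector index, so the word-count vectors here are a stand-in; the documents, the prompt template, and the function names are hypothetical.

```python
import math
from collections import Counter

def bow(text):
    """Lowercased bag-of-words vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = bow(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bow(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Place retrieved context ahead of the question for the model."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

# Hypothetical internal knowledge base
docs = [
    "Refund requests are processed within 14 days",
    "The VPN requires multi-factor authentication",
    "Quarterly reports are due in March",
]
print(build_prompt("how are refund requests processed", docs))
```

Updating the knowledge base only requires changing `docs`; the model itself stays untouched, which is why RAG can stay current without retraining.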

What Is the Significance of Private Large Language Models?

Private LLMs offer several strategic advantages for organizations seeking to maintain control over their AI capabilities.

Customization and deep domain expertise allow organizations to develop specialized knowledge that generic models cannot match. Intellectual property protection ensures that valuable organizational knowledge and competitive advantages remain secure.

Scalability and optimized performance enable organizations to fine-tune resources according to specific workload requirements. Reduced external dependencies and predictable costs provide operational stability essential for mission-critical applications.

Built-in regulatory compliance capabilities ensure that AI implementations meet industry-specific requirements while maintaining audit trails and governance controls.

What Are the Key Challenges and Considerations?

Technical Infrastructure requirements include GPU clusters, storage, networking, and data governance systems that support high-performance model training and inference operations.

Security and Privacy considerations encompass encryption, TEEs, differential privacy, and continuous monitoring to protect sensitive data throughout the model lifecycle.

Operational Complexity involves lifecycle management, bias detection, versioning, and updates that require specialized expertise and systematic processes.

Cost Management requires assessing total cost of ownership and resource optimization to ensure sustainable operations that deliver measurable business value.

Organizational Change encompasses skill development, user adoption, and change-management processes that enable successful implementation and long-term success.

What Role Does Hugging Face Play in Building Private LLMs?

Hugging Face accelerates private LLM projects through comprehensive tools and community resources.

An extensive model and dataset hub provides access to state-of-the-art pre-trained models and high-quality training datasets. The transformers library offers standardized implementations of cutting-edge architectures.

AutoTrain enables no-code fine-tuning for organizations without extensive machine learning expertise. Parameter-efficient tuning methods such as LoRA reduce computational requirements while maintaining model performance.
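
The saving from LoRA follows from simple arithmetic. For a frozen weight matrix of shape d_out × d_in, LoRA trains two low-rank matrices of shapes d_out × r and r × d_in, giving r × (d_out + d_in) trainable parameters instead of d_out × d_in. The sketch below computes the ratio; the 4096 × 4096 layer and rank 8 are illustrative assumptions, and the code does not call any Hugging Face API.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Parameters in a dense weight matrix of shape d_out x d_in."""
    return d_out * d_in

def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the two LoRA factors: (d_out x rank) and (rank x d_in)."""
    return rank * (d_out + d_in)

D_OUT, D_IN, RANK = 4096, 4096, 8  # hypothetical layer size and rank
full = full_params(D_OUT, D_IN)
lora = lora_trainable_params(D_OUT, D_IN, RANK)
print(f"full fine-tuning: {full:,} parameters")
print(f"LoRA (rank {RANK}): {lora:,} parameters ({100 * lora / full:.2f}% of full)")
```

Because only the small factors receive gradients, optimizer state shrinks by the same proportion, which accounts for much of the memory saving during fine-tuning.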

Enterprise deployment tools support on-prem, cloud, and hybrid environments while a vibrant community drives rapid innovation and knowledge sharing.

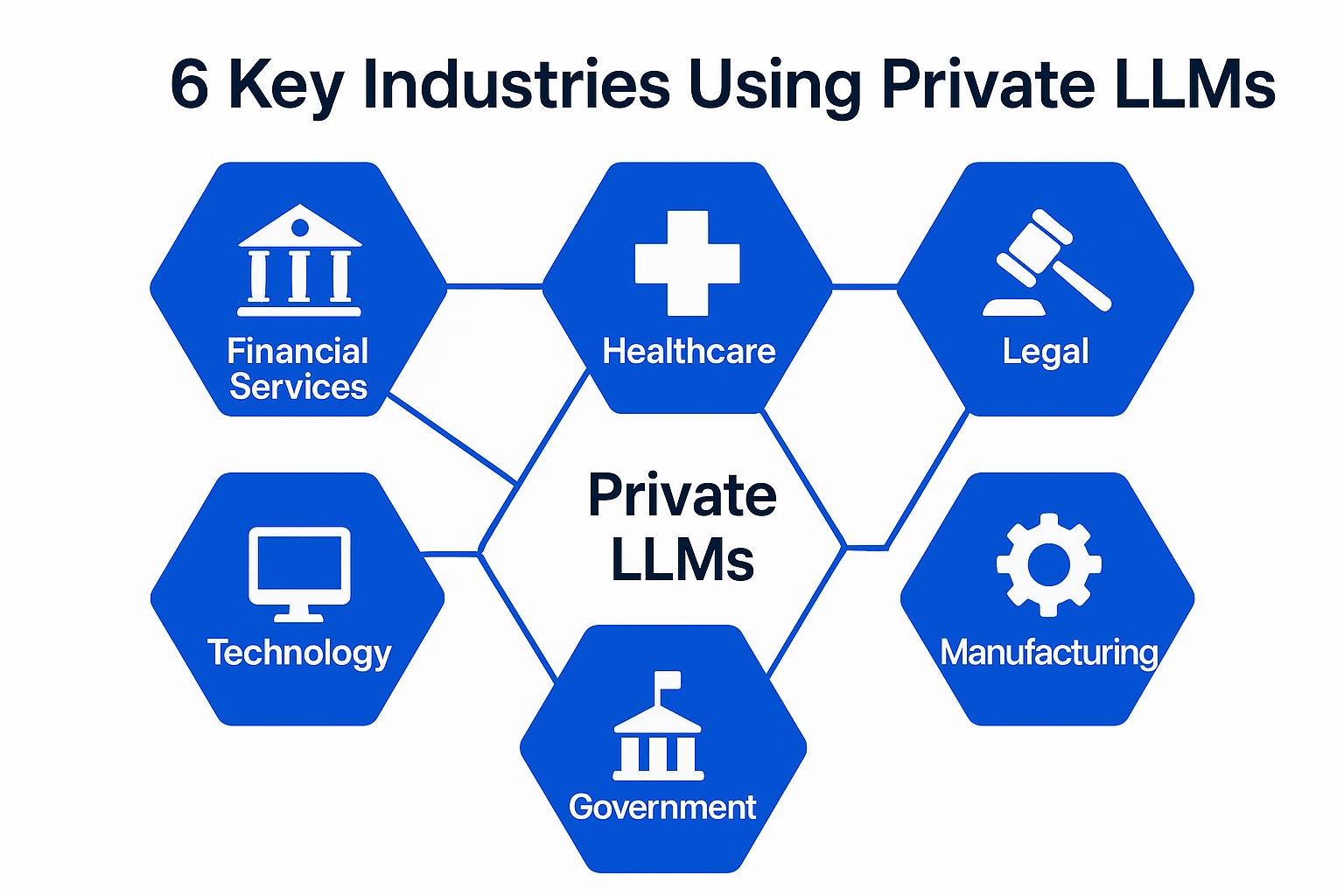

Which Industries Benefit Most from Private LLMs?

Financial Services leverage private LLMs for fraud detection, risk assessment, and compliance monitoring while maintaining strict data security requirements.

Healthcare and Life Sciences use private models for clinical decision support, drug discovery, and documentation while ensuring patient privacy and regulatory compliance.

Legal and Professional Services employ private LLMs for contract analysis, legal research, and regulatory monitoring while protecting client confidentiality.

Technology and Software companies utilize private models for code generation, knowledge management, and technical support while safeguarding proprietary information.

Manufacturing and Industrial organizations implement private LLMs for predictive maintenance and supply-chain optimization while protecting operational intelligence.

Government and Defense agencies deploy private models for intelligence analysis, cybersecurity, and operational planning while maintaining national security requirements.

FAQs

What is the main difference between private and public LLMs?

Private LLMs are built and run within an organization's infrastructure, providing complete control over data and processing. Public LLMs are accessed via third-party APIs, limiting customization and control over data handling.

How much does it cost to build a private LLM?

Costs vary significantly based on scale, data requirements, and infrastructure needs. Organizations should evaluate total cost of ownership including development, deployment, and ongoing operational expenses.

What infrastructure is required?

High-performance GPUs, large-capacity storage, and robust networking form the foundation for private LLM infrastructure. Deployments can be on-premises, in cloud environments, or hybrid configurations depending on organizational requirements.

How do you ensure security and compliance?

End-to-end encryption, role-based access controls, continuous monitoring, and comprehensive audit logging protect data and are critical components of compliance with regulations such as GDPR and HIPAA. These technical controls are not sufficient on their own: full regulatory compliance also depends on broader organizational and governance measures.

Can private LLMs be updated with new information?

Yes, private LLMs can incorporate new information through retraining, fine-tuning, or hybrid architectures that combine private models with retrieval-augmented generation components.

Conclusion

Private LLMs represent a strategic approach to enterprise AI that addresses fundamental concerns around data privacy, security, and competitive differentiation. By maintaining complete control over AI capabilities, organizations can develop specialized solutions that deliver superior performance for domain-specific applications while ensuring compliance with regulatory requirements. These implementations protect valuable intellectual property while providing the operational independence necessary for mission-critical business applications. The investment in private LLM infrastructure ultimately enables organizations to harness AI capabilities without compromising on security, compliance, or competitive advantage.
