How to Extract Data from PDF to Excel: A Comprehensive Guide

✨ AI Generated Summary

PDF-to-Excel data extraction is challenging because PDFs are structured for visual presentation, formats vary widely, and tables often span multiple pages, which makes manual methods error-prone and unscalable. Five key extraction methods include:

- Manual copy-paste: suitable only for small, simple tasks but time-consuming and error-prone.

- Online converters: easy for medium-volume, structured PDFs but limited by privacy, accuracy, and batch processing constraints.

- Power Query: integrates natively with Excel for repeatable workflows but struggles with complex layouts and non-Microsoft ecosystems.

- Python pipelines: offer maximum customization and ML integration but require developer expertise and maintenance.

- Airbyte: enterprise-grade, automated data integration platform with extensive connectors, OCR, and scalable workflows ideal for large, complex datasets.

AI-powered extraction platforms provide the highest accuracy through intelligent document understanding, zero-shot learning, and context-aware validation, transforming PDF data workflows beyond traditional OCR limitations.

PDF files dominate professional document sharing because they preserve formatting across devices, but this strength becomes a liability when you need structured data for analysis. Finance teams waste hours manually transcribing quarterly reports, researchers struggle to collate literature data into analyzable formats, and operations managers find themselves trapped between static PDF reports and the dynamic Excel spreadsheets required for decision-making. The fundamental mismatch between PDF's design for visual consistency and Excel's data-centric structure creates extraction challenges that manual copying simply cannot solve at scale.

This comprehensive guide presents five reliable methods to transform PDF data into Excel format, from quick manual solutions to fully automated, AI-powered pipelines that handle complex document structures.

What Are the Key Challenges in PDF Data Extraction?

Structural Complexity and Layout Inconsistencies

PDF documents prioritize visual fidelity over logical data organization, creating fundamental barriers to automated extraction. Unlike Excel's grid-based structure with defined cells and relationships, PDFs encode content as positioned text fragments, vector graphics, and images without inherent semantic meaning. A two-column academic paper might extract as alternating lines from each column, destroying contextual coherence. Financial reports with tables spanning multiple pages often lack consistent headers, forcing extraction tools to infer column relationships through whitespace patterns rather than structural metadata.
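
To make the whitespace heuristic concrete, here is a minimal Python sketch that groups word boxes into columns wherever a wide horizontal gap separates them. It assumes input shaped like the word dictionaries pdfplumber's Page.extract_words() returns (keys such as text, x0, x1, top); the helper name and the 15-point gap threshold are illustrative assumptions, not part of any library.

```python
from typing import Any, Dict, List

Word = Dict[str, Any]  # e.g. {"text": "Revenue", "x0": 50.0, "x1": 100.0, "top": 10.0}


def group_into_columns(words: List[Word], min_gap: float = 15.0) -> List[List[Word]]:
    """Split words into columns wherever a horizontal gap wider than min_gap
    (in PDF points) separates them, then order each column top to bottom."""
    if not words:
        return []
    # Sort by left edge so gaps can be measured from left to right.
    ordered = sorted(words, key=lambda w: w["x0"])
    columns: List[List[Word]] = [[ordered[0]]]
    right_edge = ordered[0]["x1"]  # furthest right edge seen so far
    for word in ordered[1:]:
        if word["x0"] - right_edge > min_gap:
            columns.append([word])  # wide whitespace gap: start a new column
        else:
            columns[-1].append(word)
        right_edge = max(right_edge, word["x1"])
    # Restore reading order within each column.
    return [sorted(column, key=lambda w: w["top"]) for column in columns]
```

Real layouts need more than this: columns whose entries overlap horizontally will merge into one, which is one reason dedicated table detectors exist.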

Multi-page documents compound these challenges when tables break across pages without clear continuation markers. A quarterly earnings report might segment revenue data across three pages, with subtotals appearing mid-table and footnotes interrupting data flows. Traditional extraction methods struggle to maintain row integrity across these breaks, often producing fragmented datasets that require extensive manual reconstruction.
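
Reconstructing a table that breaks across pages often comes down to two rules: drop header rows that repeat on continuation pages, and skip interim rows such as page subtotals. The sketch below applies both to per-page fragments (lists of string rows); the function name and the label-matching rule are assumptions for illustration.

```python
from typing import Iterable, List, Optional, Sequence

Row = List[str]


def stitch_page_fragments(
    fragments: Sequence[List[Row]],
    header: Optional[Row] = None,
    drop_labels: Iterable[str] = (),
) -> List[Row]:
    """Join per-page table fragments into one table.

    The first row of the first non-empty fragment is taken as the header
    unless one is supplied; later fragments may or may not repeat it.
    """
    labels = {label.strip().lower() for label in drop_labels}
    body: List[Row] = []
    for rows in fragments:
        if not rows:
            continue  # page where no table was detected
        if header is None:
            header, rows = rows[0], rows[1:]
        elif rows[0] == header:
            rows = rows[1:]  # header repeated on a continuation page
        for row in rows:
            # Skip interim rows such as page subtotals, matched on the first cell.
            if row and row[0].strip().lower() in labels:
                continue
            body.append(row)
    return ([header] if header is not None else []) + body
```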

Format Heterogeneity and Content Complexity

No universal template governs PDF creation, resulting in extreme format variability even within document batches from single sources. Scanned PDFs introduce additional complexity by converting structured data into pixel-based images that require Optical Character Recognition (OCR) to digitize text. When fonts degrade, scans skew, or backgrounds contain noise, character recognition errors cascade through the extraction process.
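
A common first step is deciding, page by page, whether a usable text layer exists before paying for OCR. This sketch treats a page as scanned when it yields almost no extractable text; the 25-character threshold is an arbitrary example. The OCR fallback assumes pdfplumber, pdf2image (which needs Poppler) and pytesseract (which needs the Tesseract binary) are installed, so those imports live inside the function.

```python
from typing import Optional


def needs_ocr(page_text: Optional[str], min_chars: int = 25) -> bool:
    """Heuristic: a page whose text layer is empty or nearly empty is
    probably a scanned image. min_chars is an illustrative threshold."""
    if page_text is None:
        return True
    return len("".join(page_text.split())) < min_chars


def extract_page_text(pdf_path: str, page_number: int) -> str:
    """Return text for one page (1-based), falling back to OCR when needed."""
    import pdfplumber
    import pytesseract
    from pdf2image import convert_from_path

    with pdfplumber.open(pdf_path) as pdf:
        text = pdf.pages[page_number - 1].extract_text()
    if not needs_ocr(text):
        return text
    # Rasterize only this page at 300 DPI and run Tesseract on the image.
    images = convert_from_path(
        pdf_path, dpi=300, first_page=page_number, last_page=page_number
    )
    return pytesseract.image_to_string(images[0])
```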

Complex invoices exemplify these challenges: unit prices may align vertically without delimiters, merged cells span multiple rows, and handwritten notes overlay printed text. Documents with mixed content types—such as technical manuals where diagrams interrupt tabular data—further complicate extraction. The absence of standardized markup means extraction tools must interpret spatial relationships through pattern recognition rather than explicit structural cues.
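
Merged cells are a typical case where spatial layout must be translated back into rows. Table extractors commonly emit a vertically merged cell once and leave the cells beneath it empty; the hypothetical helper below copies the last non-empty value down the chosen columns. Whether empty cells arrive as None or as empty strings depends on the extractor, so both are handled.

```python
from typing import Dict, List, Optional, Sequence

Cell = Optional[str]


def fill_down(rows: Sequence[Sequence[Cell]], columns: Sequence[int]) -> List[List[Cell]]:
    """Copy the last non-empty value downward in the given column indexes."""
    last_seen: Dict[int, str] = {}
    filled: List[List[Cell]] = []
    for row in rows:
        new_row = list(row)  # copy so the input rows are not modified
        for col in columns:
            if col >= len(new_row):
                continue  # short row: nothing to fill
            if new_row[col] not in (None, ""):
                last_seen[col] = new_row[col]
            elif col in last_seen:
                new_row[col] = last_seen[col]
        filled.append(new_row)
    return filled
```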

Scale and Accuracy Trade-offs

Enterprise workflows often require processing thousands of documents with varying layouts, creating scale challenges that manual methods cannot address. A financial services firm processing loan applications might encounter forms from dozens of different banks, each with unique formatting conventions. Traditional extraction approaches either fail with unfamiliar layouts or require extensive template configuration for each variant.

Quality assurance becomes critical when extracted data drives business decisions. A single misaligned column in expense reports can propagate through financial analysis, while incorrect invoice totals trigger payment discrepancies. The computational overhead required for high-accuracy extraction often conflicts with performance demands, forcing organizations to choose between speed and precision in their data processing workflows.

How Can You Extract Data From PDF to Excel Using Five Proven Methods?

To overcome these challenges and maintain accuracy in your analytical workflows, here are five proven methods for transferring data from PDF to Excel, each optimized for different use cases and technical requirements.

1. Why Is Manual Copy-Paste an Unproductive Method to Transform PDF to Excel?

- Open the PDF file in a viewer such as Adobe Acrobat Reader.

- Select the content you want to copy using click-and-drag or keyboard shortcuts.

- Use CTRL + C to copy the selected content to your clipboard.

- Open Microsoft Excel and navigate to the target worksheet.

- Use CTRL + V to paste the content into Excel cells.

- Manually adjust column widths, data types, and formatting to ensure data integrity.

- Clean up any formatting artifacts such as extra spaces or merged text.

- Save the file in your preferred Excel format.

Manual copying introduces human error through inconsistent selection boundaries and formatting interpretation. Table structures often collapse during clipboard transfer, with multi-column data flattening into single columns or cells merging unexpectedly. Headers frequently separate from data rows, and numerical values may convert to text format, breaking downstream calculations.
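
When pasted figures land as text, converting them back requires explicit rules for currency symbols, thousands separators and accounting-style negatives. The Python sketch below assumes comma thousands separators and a dot decimal point (European formats would need different rules) and returns None for cells it cannot read, so they can be reviewed rather than silently zeroed.

```python
import re
from decimal import Decimal, InvalidOperation
from typing import Optional

# Currency symbols, thousands separators and any whitespace (including
# non-breaking spaces) that often survive a copy from a PDF.
_NOISE = re.compile(r"[\s$€£,]")


def parse_amount(text: str) -> Optional[Decimal]:
    """Convert text such as "$1,234.50" or "(200.00)" into a Decimal.

    Assumes comma thousands separators and a dot decimal point; returns None
    for anything that is not a finite number.
    """
    cleaned = _NOISE.sub("", text)
    negative = cleaned.startswith("(") and cleaned.endswith(")")
    if negative:
        cleaned = cleaned[1:-1]  # accounting-style negative
    try:
        value = Decimal(cleaned)
    except InvalidOperation:
        return None
    if not value.is_finite():
        return None  # reject "NaN" and "Infinity"
    return -value if negative else value
```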

The time investment grows quickly with document complexity and volume. A single financial statement might require 30-45 minutes of careful copying and cleanup, while batch processing hundreds of similar documents becomes prohibitively time-consuming. Quality control becomes nearly impossible at scale, as subtle selection errors or formatting inconsistencies accumulate across large document sets.

When to use: Only for very small, one-off jobs with simple table structures. Copy-pasting hundreds of PDFs quickly becomes unmanageable and error-prone.

2. How Does Airbyte Provide an Automated Approach to Move Data From PDF to Excel?

Airbyte transforms PDF data extraction through its comprehensive data integration platform featuring 600+ pre-built connectors and advanced document processing capabilities. The platform's Document File Type Format leverages the Unstructured library to extract text while preserving hierarchical structure, including OCR processing for scanned documents. This approach enables automated workflows that handle document variability without manual template configuration.

Airbyte's strength lies in its ability to create end-to-end data pipelines that extend far beyond simple PDF conversion. The platform provides incremental sync capabilities for large document repositories, schema management for consistent data structure, and integration with modern data stacks including cloud warehouses and transformation tools. Enterprise-grade security features support compliance with GDPR, HIPAA, and SOC 2 requirements while maintaining data sovereignty across hybrid and on-premises deployments.

Step 1: Configure Your Source to Extract Data From PDFs

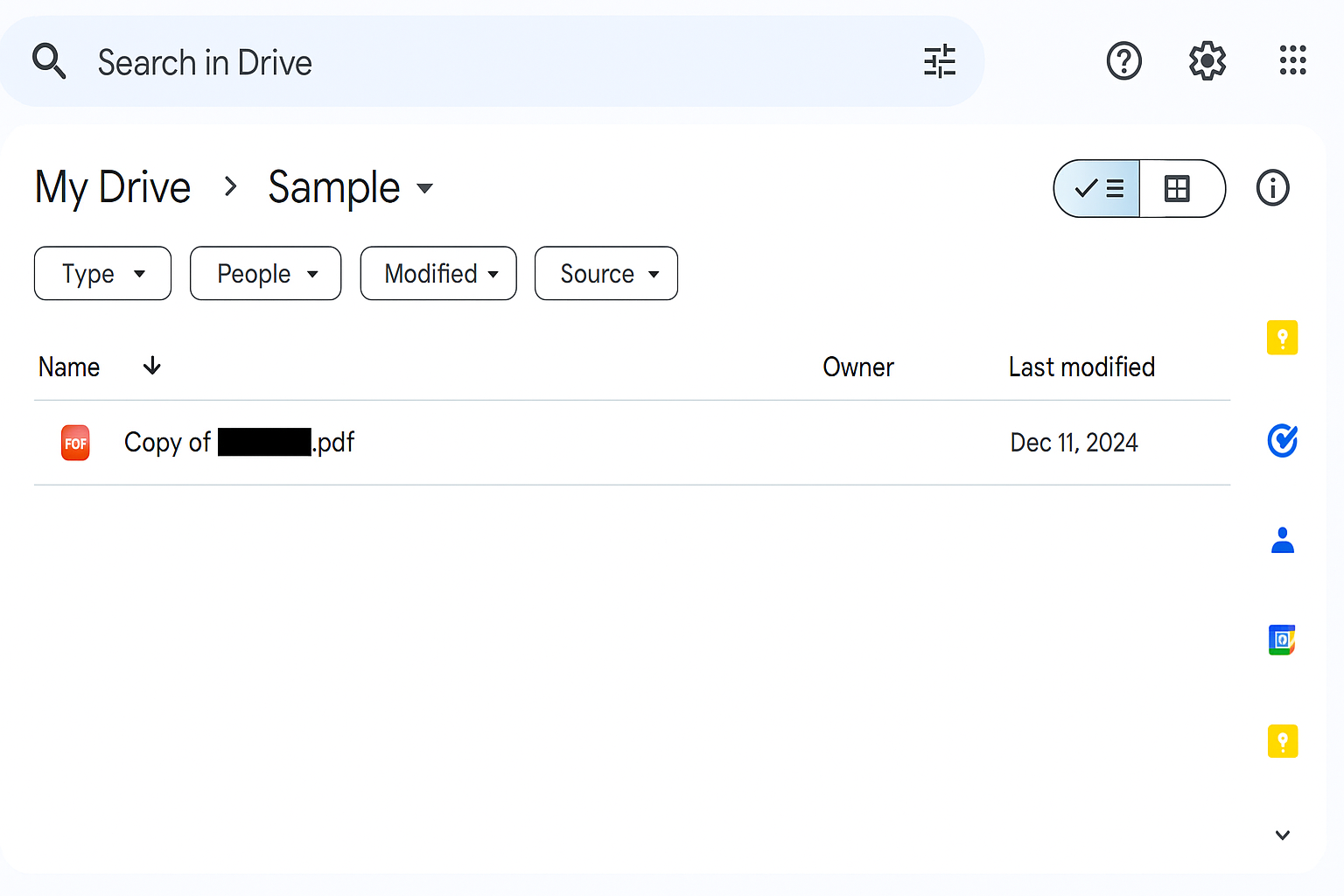

Prerequisite – create a Google Drive folder and upload the PDF files requiring extraction.

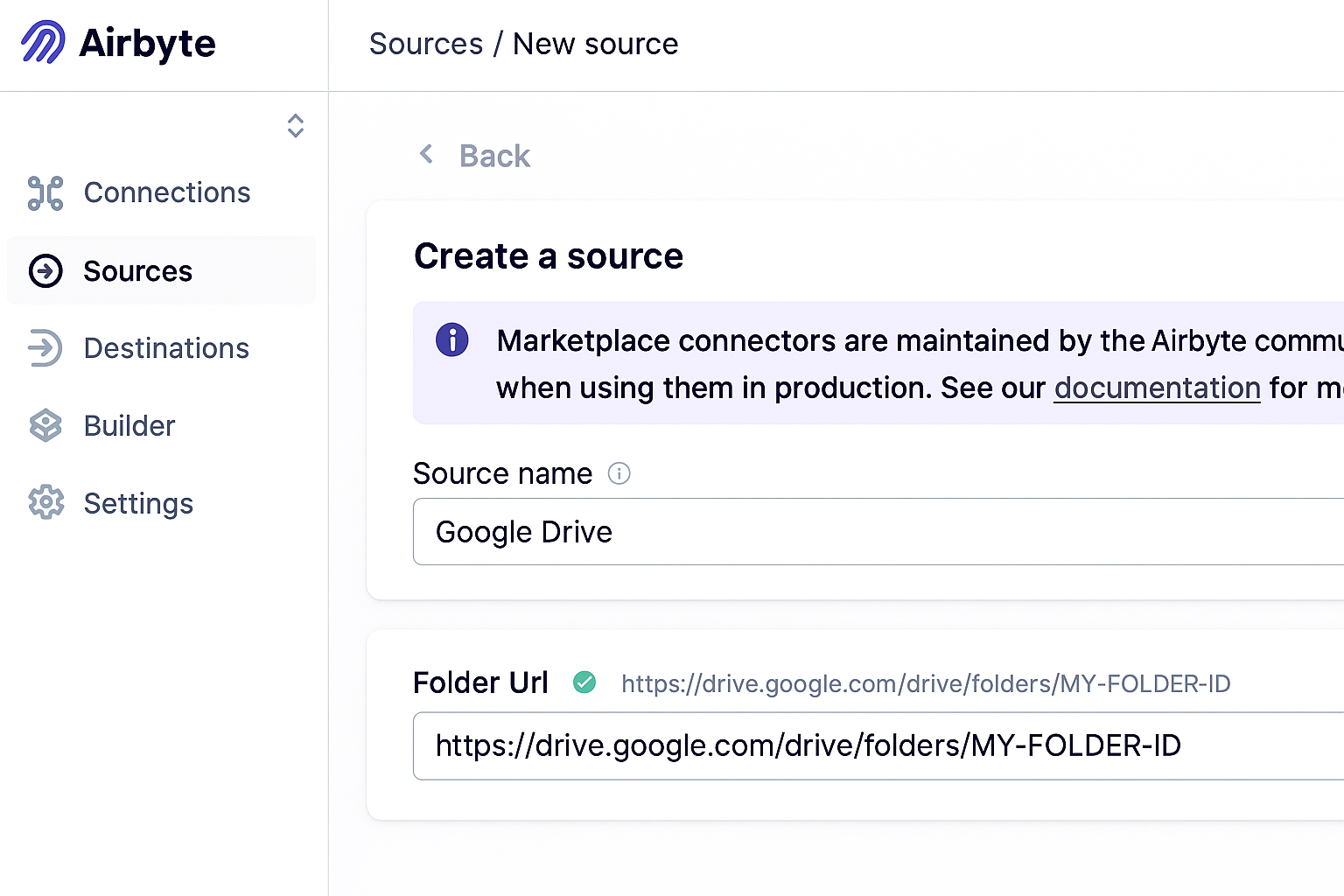

- Sign in to Airbyte Cloud or deploy using your preferred self-managed option.

- Create a Google Drive source connector from the connector catalog.

- Enter the Drive folder URL containing your target PDF documents.

- Configure authentication using Google OAuth for secure access.

- Add a new stream with the following configuration:

  - Format: Document File Type Format (Experimental)

  - Name: pdf_files

  - Globs: *.pdf to target all PDF files in the folder

  - Parse Options: Enable OCR for scanned documents and specify text extraction parameters

- Configure incremental sync mode to automatically process new PDF files as they're added to the folder.

- Test the connection to verify proper authentication and file access.

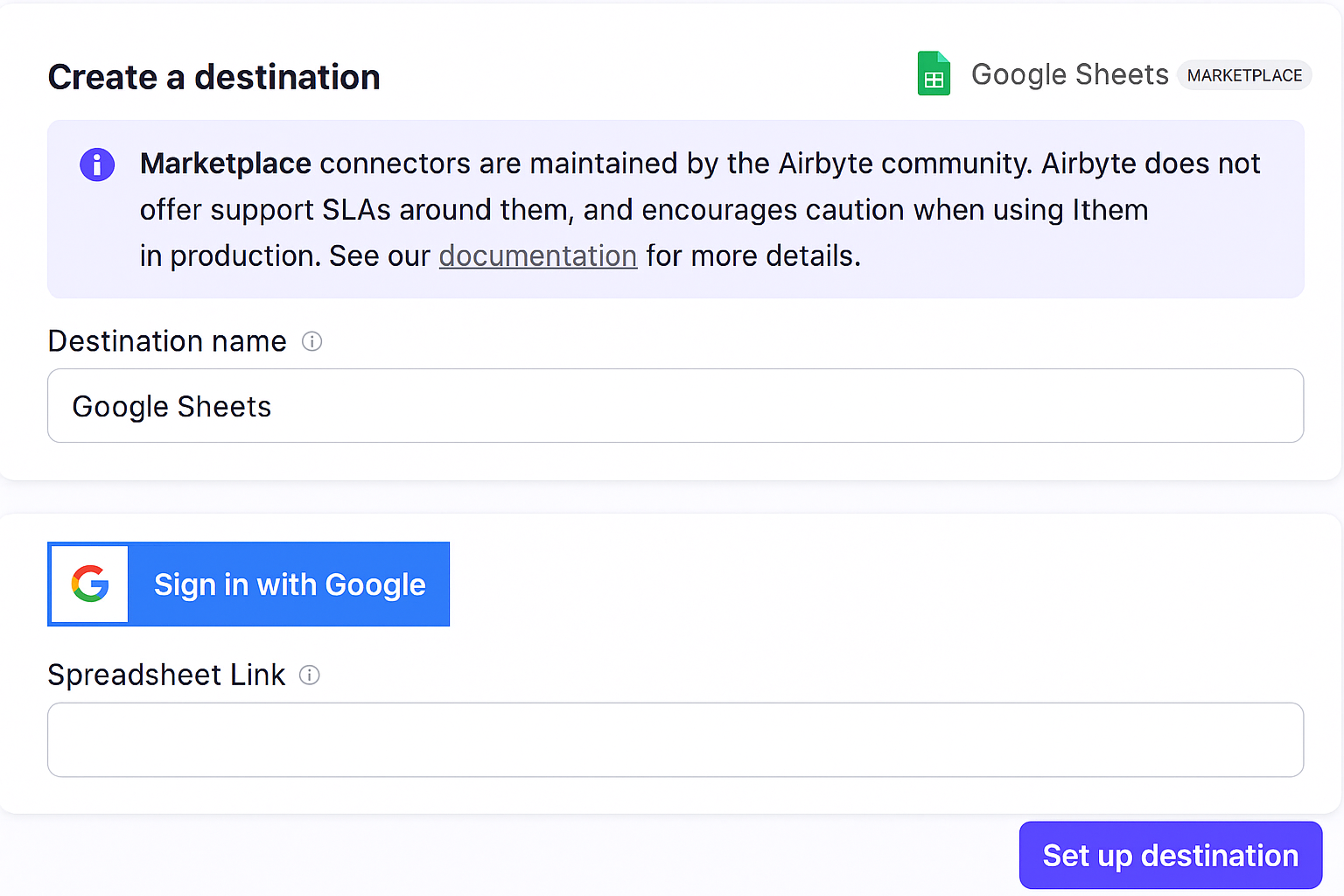

Step 2: Configure Your Destination to Load Extracted Data into Excel

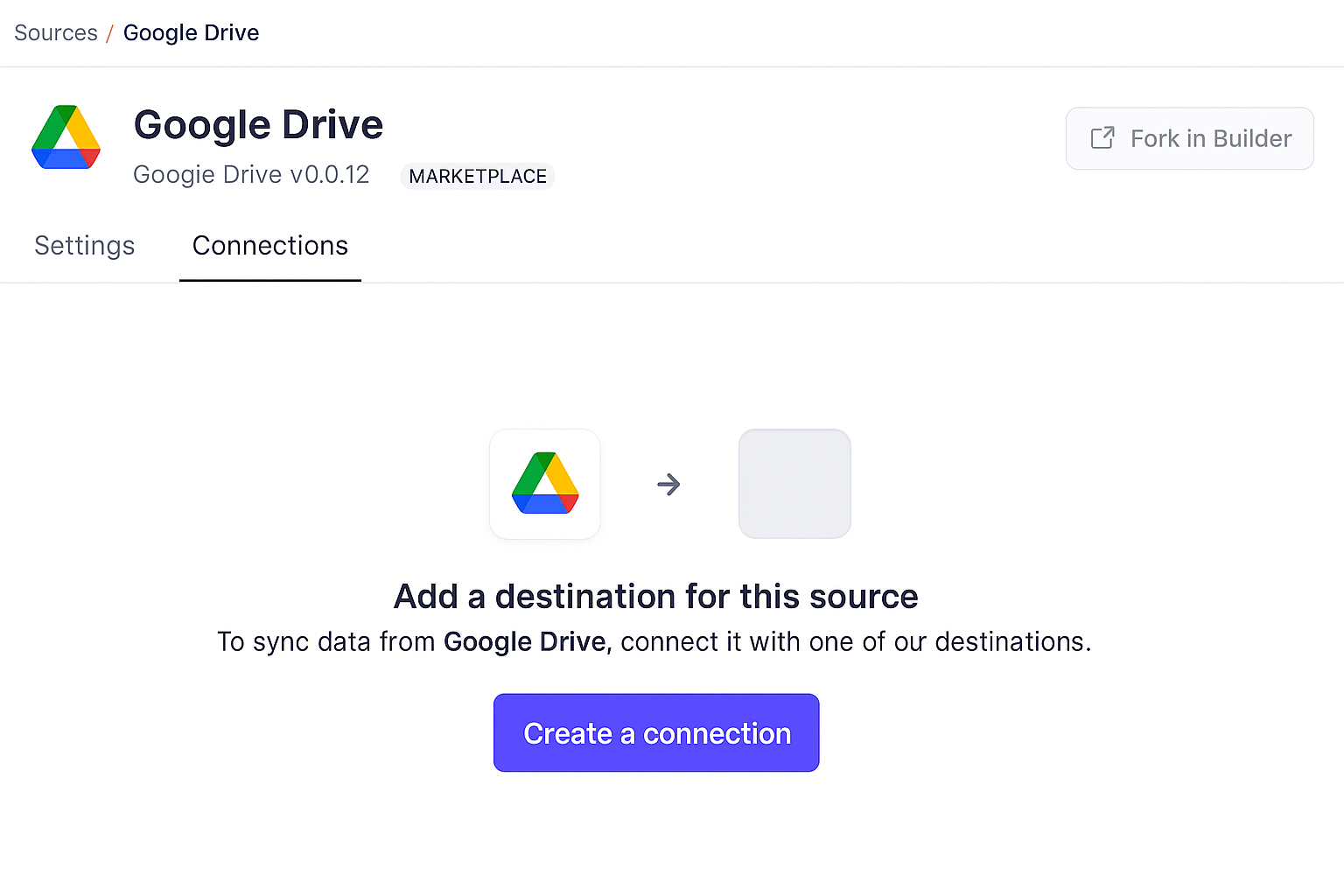

Airbyte's Google Sheets destination enables collaborative data access with seamless Excel export capabilities through native download options.

- Select Google Sheets as your destination connector.

- Authenticate using Google OAuth and provide the target spreadsheet URL.

- Configure write mode settings for data insertion and update behavior.

- Map the pdf_files stream to your target worksheet.

- Set replication frequency based on your data freshness requirements (hourly, daily, or triggered).

- Configure normalization settings to optimize data structure for Excel compatibility.

The extracted PDF content syncs automatically into a dedicated pdf_files worksheet. Use Google Sheets' built-in functions for data manipulation, or export directly to Excel format via File → Download → Microsoft Excel (.xlsx) for offline analysis. Advanced users can leverage Airbyte's dbt integration for custom transformations during the extraction process.

Airbyte's competitive advantage over single-purpose PDF converters: comprehensive connector ecosystem supporting 600+ data sources, automated incremental syncs for large document repositories, enterprise-grade security and compliance features, open-source extensibility through custom connector development, and seamless integration with modern data transformation and analytics workflows.

3. What Are PDF to Excel Converters and Their Limitations?

Online conversion tools such as Smallpdf, iLovePDF, and Docparser offer specialized PDF-to-Excel functionality through web-based interfaces. These platforms typically employ OCR engines combined with table detection algorithms to identify and extract structured data from PDF documents. Users upload files through browser interfaces and receive converted Excel files within minutes, making them accessible to non-technical users.

However, these tools face significant limitations in enterprise contexts. Free tiers often restrict file sizes to 2-5MB, add watermarks to outputs, or limit batch processing capabilities. Quality issues arise with complex layouts where table boundaries are ambiguous or when documents contain mixed content types. Privacy concerns emerge when processing sensitive documents through third-party servers, particularly for organizations with strict data governance requirements.

Subscription-based versions of these tools offer improved accuracy and higher processing limits but remain specialized for single-use conversions. Unlike comprehensive data platforms, they cannot integrate with existing data workflows, lack incremental processing for document repositories, and provide no transformation capabilities beyond basic format conversion. For organizations requiring ongoing PDF processing as part of larger analytical workflows, these tools create operational silos rather than integrated solutions.

The accuracy of these converters varies significantly with document complexity. Simple forms with clear table boundaries often convert successfully, while complex financial reports with nested tables, merged cells, and irregular spacing frequently produce fragmented outputs requiring manual cleanup. This inconsistency makes them unsuitable for automated workflows where data quality must remain predictable.

4. How Can Power Query Transform PDF Files to Excel Within Microsoft's Ecosystem?

Power Query provides a native PDF connector in Excel for Microsoft 365, eliminating the need for external tools in Microsoft-centric environments. This built-in ETL engine offers data transformation capabilities beyond simple format conversion, including column splitting, data type inference, and merging or appending multiple tables extracted from a single PDF.

Power Query automatically detects table structures and presents them in a Navigator interface, allowing users to select specific tables or pages for import. Each candidate table can be previewed and refined in the Power Query editor before the data is loaded.

Power Query itself is built into Excel 2016 and later (and was offered as an add-in for Excel 2010/2013), but the From PDF connector requires Excel for Microsoft 365.

- Navigate to Data → Get & Transform Data → Get Data → From File → From PDF in the Excel ribbon.

- Select the target PDF file and click Import to initiate the parsing process.

- In the Navigator dialog, preview available tables and select those requiring extraction.

- Choose Transform Data to open the Power Query editor for advanced data manipulation, or Load to import data directly into the current worksheet.

- Apply data transformations such as column renaming, type conversion, or filtering as needed.

- Configure refresh settings for dynamic updating when source PDFs change.

Power Query excels in scenarios involving regular PDF reports with consistent structures, such as monthly financial statements or standardized forms. The tool's strength lies in its ability to create repeatable extraction patterns that automatically apply to new versions of similar documents. Users can save query definitions and refresh data with updated PDF files without reconfiguring extraction parameters.

However, Power Query's effectiveness diminishes with highly variable document formats or complex multi-page tables that span different sections. The tool works best within Microsoft's ecosystem but offers limited integration with non-Microsoft data platforms or cloud-based analytics workflows.

5. What Makes Python Data Pipelines a Developer-Friendly Approach?

Python offers maximum flexibility for PDF data extraction through specialized libraries that handle document parsing, table detection, and Excel output generation. This programmatic approach enables custom logic for complex document structures, batch processing capabilities, and integration with machine learning models for intelligent content recognition. Developer teams can create sophisticated extraction pipelines that adapt to document variations and integrate seamlessly with existing data infrastructure.

Key libraries for PDF extraction include pdfplumber for coordinate-based text extraction, Camelot for table detection and extraction, pypdf (the maintained successor to PyPDF2) for metadata handling, and pandas for data manipulation and Excel output. Advanced implementations incorporate Tesseract OCR for scanned document processing and OpenCV for image-based table recognition in complex layouts.
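
As a minimal sketch of how these libraries fit together, the code below reads every table pdfplumber detects, tidies the cells, and writes each table to its own worksheet with pandas. It assumes pdfplumber, pandas and openpyxl are installed and that the first row of each table is its header; the function names and sheet-naming scheme are illustrative, not a standard API.

```python
from typing import List, Optional, Sequence

Cell = Optional[str]


def clean_table(table: Sequence[Sequence[Cell]]) -> List[List[str]]:
    """Tidy one table as returned by pdfplumber's extract_tables(): None
    becomes "", runs of whitespace (including line breaks inside cells)
    collapse to one space, ragged rows are padded and blank rows dropped."""
    rows = [[" ".join((cell or "").split()) for cell in row] for row in table]
    width = max((len(row) for row in rows), default=0)
    padded = [row + [""] * (width - len(row)) for row in rows]
    return [row for row in padded if any(row)]


def pdf_tables_to_excel(pdf_path: str, xlsx_path: str) -> int:
    """Write each detected table to its own worksheet and return the count.

    Requires pdfplumber, pandas and openpyxl; the first row of every table
    is assumed to be its header.
    """
    import pandas as pd
    import pdfplumber

    frames = []
    with pdfplumber.open(pdf_path) as pdf:
        for page_no, page in enumerate(pdf.pages, start=1):
            for table_no, table in enumerate(page.extract_tables(), start=1):
                rows = clean_table(table)
                if len(rows) < 2:
                    continue  # header only or empty: nothing to export
                df = pd.DataFrame(rows[1:], columns=rows[0])
                frames.append((f"p{page_no}_t{table_no}", df))
    # Only create a workbook when at least one table was found.
    if frames:
        with pd.ExcelWriter(xlsx_path) as writer:
            for sheet_name, df in frames:
                df.to_excel(writer, sheet_name=sheet_name, index=False)
    return len(frames)
```

A call would look like pdf_tables_to_excel("q3_report.pdf", "q3_report.xlsx"), with hypothetical file names. Camelot's read_pdf can replace the pdfplumber step when its lattice or stream table detection suits the layout better.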

Python pipelines excel in scenarios requiring custom logic, such as processing documents with irregular layouts, applying domain-specific data validation rules, or integrating extraction results with machine learning workflows. The approach provides complete control over data quality, transformation logic, and output formatting while enabling sophisticated error handling and logging capabilities.
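
The error-handling and validation pattern described here can be sketched as a batch loop that records failures instead of aborting. The extract and validate arguments are placeholders for your own pdfplumber- or Camelot-based extractor and domain rules; all names are hypothetical.

```python
import logging
from pathlib import Path
from typing import Callable, Dict, Iterable, List, Optional, Tuple

log = logging.getLogger("pdf_batch")

Records = List[dict]


def process_files(
    paths: Iterable[Path],
    extract: Callable[[Path], Records],
    validate: Optional[Callable[[Records], List[str]]] = None,
) -> Tuple[Dict[str, Records], Dict[str, List[str]]]:
    """Run extract() on each file, keeping going when one fails.

    Returns (accepted, rejected): accepted maps file names to records,
    rejected maps file names to the problems found.
    """
    accepted: Dict[str, Records] = {}
    rejected: Dict[str, List[str]] = {}
    for path in paths:
        try:
            records = extract(path)
        except Exception as exc:  # log with traceback, then move on
            log.exception("Extraction failed for %s", path.name)
            rejected[path.name] = [f"extraction error: {exc}"]
            continue
        problems = validate(records) if validate else []
        if problems:
            log.warning("%s failed validation: %s", path.name, "; ".join(problems))
            rejected[path.name] = problems
        else:
            log.info("Extracted %d records from %s", len(records), path.name)
            accepted[path.name] = records
    return accepted, rejected
```

A call might look like process_files(sorted(Path("reports").glob("*.pdf")), extract=my_extractor, validate=check_totals), where my_extractor and check_totals are functions you supply.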

The development overhead is significant, requiring Python expertise and ongoing maintenance as document formats evolve. However, the investment pays off for organizations processing large volumes of complex documents or requiring integration with existing Python-based data science workflows.

How Are AI and Machine Learning Transforming PDF Data Extraction?

Modern artificial intelligence has revolutionized PDF data extraction by moving beyond traditional OCR limitations to intelligent document understanding. AI-powered platforms now combine computer vision, natural language processing, and deep learning to interpret document contexts rather than simply digitizing text. This transformation addresses fundamental challenges in handling complex layouts, variable document structures, and semantic relationship understanding that traditional tools cannot resolve.

Intelligent Document Processing and Multimodal AI

Contemporary extraction platforms like Google's Gemini API and DocparserAI utilize large language models with native vision capabilities to process PDF documents holistically. These systems analyze text, images, tables, and diagrams within unified frameworks, interpreting contextual relationships between document elements. Unlike traditional OCR systems that process text sequentially, AI models understand semantic hierarchies such as associating invoice line items with corresponding totals or linking contract clauses with effective dates.

Multimodal deep learning architectures integrate multiple specialized processors within single pipelines. Advanced frameworks automatically route documents through optimal processing paths: digital PDFs utilize transformer-based table detection models, scanned documents employ cascaded neural networks for visual element segmentation, and hybrid documents leverage attention mechanisms to align text and visual features across different content types.

Zero-Shot Learning and Adaptive Extraction

Modern AI platforms adapt to new document types through zero-shot learning, which requires no labeled training examples for each new layout. Generative AI models can interpret extraction requirements through natural language descriptions, eliminating traditional template configuration overhead. For example, users can describe desired data fields in plain English, and AI systems adapt extraction logic without predefined rules or sample documents.
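
The plain-English approach can be illustrated without tying it to a vendor: field descriptions become part of the prompt, and the model's JSON reply is checked before use. In this sketch call_model is a hypothetical stand-in for whichever LLM client you use, and the prompt wording is only an example.

```python
import json
from typing import Callable, Dict


def build_prompt(fields: Dict[str, str], document_text: str) -> str:
    """Turn plain-English field descriptions into an extraction prompt."""
    field_lines = "\n".join(f"- {name}: {description}" for name, description in fields.items())
    return (
        "Extract the following fields from the document below. Reply with one "
        "JSON object using exactly these keys, with null for missing values.\n"
        f"{field_lines}\n\nDocument:\n{document_text}"
    )


def extract_fields(
    fields: Dict[str, str],
    document_text: str,
    call_model: Callable[[str], str],
) -> Dict[str, object]:
    """Ask the model for the fields and verify the reply has the expected keys.

    json.loads raises json.JSONDecodeError (a ValueError) for non-JSON replies.
    """
    reply = json.loads(call_model(build_prompt(fields, document_text)))
    if not isinstance(reply, dict):
        raise ValueError("model reply is not a JSON object")
    missing = set(fields) - set(reply)
    extra = set(reply) - set(fields)
    if missing or extra:
        raise ValueError(f"missing keys {sorted(missing)}, unexpected keys {sorted(extra)}")
    return reply
```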

This adaptability proves particularly valuable for organizations processing diverse document types from multiple sources. Financial services firms can extract data from loan applications across dozens of bank formats without creating individual templates for each variant. Healthcare organizations process clinical forms with varying layouts using AI models that recognize medical terminology and standard reporting structures regardless of specific formatting conventions.

Context-Aware Validation and Quality Assurance

AI-enhanced extraction platforms incorporate intelligent validation mechanisms that understand business logic and data relationships. These systems flag inconsistencies such as invoice totals that don't match line item sums, or expense categories that deviate from historical patterns. Machine learning models continuously improve accuracy through feedback loops, learning from correction patterns to prevent similar errors in future extractions.
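
Two of the checks mentioned here are easy to express as rules: comparing an invoice's stated total with the sum of its line items, and flagging amounts far outside historical values. The sketch uses Decimal for currency to avoid floating-point rounding and a simple z-score rule as a stand-in for the learned models these platforms use; the tolerance and the three-standard-deviation limit are example values.

```python
from decimal import Decimal
from statistics import mean, stdev
from typing import List, Sequence


def check_invoice_total(
    line_items: Sequence[Decimal],
    stated_total: Decimal,
    tolerance: Decimal = Decimal("0.01"),
) -> List[str]:
    """Return a problem description if the stated total disagrees with the line items."""
    computed = sum(line_items, Decimal("0"))
    if abs(computed - stated_total) > tolerance:
        return [f"total {stated_total} != sum of line items {computed}"]
    return []


def is_unusual(amount: float, history: Sequence[float], z_limit: float = 3.0) -> bool:
    """Flag an amount more than z_limit sample standard deviations from the historical mean."""
    if len(history) < 2:
        return False  # too little history to judge
    spread = stdev(history)
    if spread == 0:
        return amount != history[0]  # history is constant
    return abs(amount - mean(history)) / spread > z_limit
```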

Advanced platforms now offer collaborative human-AI workflows where AI suggests extraction results while flagging uncertain fields for human review. When confidence scores drop below specified thresholds, systems pause processing for validation while continuing with high-confidence extractions. This approach balances automation efficiency with accuracy requirements for critical business applications.
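
Confidence-based routing reduces to splitting fields by a threshold. This sketch assumes each extracted field arrives as a (value, confidence) pair with confidence between 0 and 1; the function name is hypothetical and the 0.85 threshold is an arbitrary example that should be tuned against your own review data.

```python
from typing import Dict, Tuple

Field = Tuple[object, float]  # (extracted value, confidence in [0, 1])


def split_by_confidence(
    fields: Dict[str, Field], threshold: float = 0.85
) -> Tuple[Dict[str, object], Dict[str, Field]]:
    """Accept high-confidence fields automatically and queue the rest for review."""
    accepted: Dict[str, object] = {}
    review: Dict[str, Field] = {}
    for name, (value, confidence) in fields.items():
        if confidence >= threshold:
            accepted[name] = value
        else:
            review[name] = (value, confidence)  # keep the score for the reviewer
    return accepted, review
```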

Which Method Should You Choose for Your PDF to Excel Extraction Needs?

Airbyte provides the optimal balance of automation capabilities, enterprise-grade security, and extensibility for most organizational needs. The platform's connector ecosystem enables PDF extraction as part of comprehensive data integration workflows rather than isolated conversion tasks. For organizations requiring ongoing PDF processing, Airbyte's infrastructure approach proves more cost-effective and maintainable than accumulating multiple specialized tools.

The choice often depends on organizational maturity in data operations. Early-stage companies might begin with online converters or Power Query before graduating to Airbyte as data volumes and complexity increase. Established enterprises with existing data infrastructure often find Airbyte's comprehensive approach immediately valuable for replacing multiple specialized tools with unified data integration workflows.

Conclusion

The evolution from manual PDF transcription to AI-powered intelligent extraction represents a fundamental shift in how organizations handle unstructured document data. This guide explored five methods for extracting data from PDF to Excel, plus the AI-powered platforms now extending them, each serving different organizational needs and technical capabilities:

- Manual copy-paste for immediate, small-scale needs

- Online PDF-to-Excel converters for medium-volume, structured documents

- Power Query for Microsoft-integrated workflows

- Python data pipelines for maximum customization and control

- Airbyte for enterprise-grade automation and scalable data integration

- AI-powered platforms for intelligent, context-aware extraction

Modern organizations face increasing document volumes while demanding higher accuracy and faster processing times. Traditional manual methods cannot scale to meet these requirements, while specialized conversion tools create operational silos that limit data integration capabilities.

Airbyte emerges as the strategic choice for most organizations because it addresses PDF extraction within the broader context of enterprise data integration. Rather than solving PDF conversion in isolation, Airbyte enables organizations to build comprehensive data pipelines that connect document repositories with analytical workflows, business intelligence platforms, and operational systems.

The platform's 600+ connector ecosystem, enterprise-grade security features, and flexible deployment options position it as infrastructure for long-term data operations rather than a point solution for document conversion. Organizations investing in Airbyte gain capabilities that extend far beyond PDF processing while maintaining the governance, scalability, and reliability required for mission-critical data workflows.

Ready to transform your PDF extraction into automated data pipelines? Talk to our experts to design a solution that scales with your organization's data ambitions. 🚀

Frequently Asked Questions

What Is the Most Accurate Method to Extract Data From PDF to Excel?

AI-powered platforms currently provide the highest accuracy for complex and variable document layouts, combining computer vision with large language models to understand document context. For structured documents with consistent formatting, Python-based solutions using libraries like Camelot and pdfplumber can achieve excellent results with proper configuration.

Can I Extract Data From Password-Protected PDFs?

Most extraction methods can handle password-protected PDFs if you provide the password during the extraction process. Python libraries like pypdf (formerly PyPDF2) and pdfplumber accept a password when opening a file. Support for encrypted files varies across automated platforms, including Airbyte, so check the connector documentation before relying on it.

How Do I Handle Scanned PDFs That Contain Only Images?

Scanned PDFs require Optical Character Recognition (OCR) technology to convert images back to text before extraction. Tools like Tesseract (free) or Adobe Acrobat Pro (paid) can perform OCR processing. Airbyte's document processing includes OCR capabilities for handling scanned documents automatically.

What Should I Do When PDF Tables Span Multiple Pages?

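Reassemble the table from its per-page pieces. Python libraries like Camelot accept a page range (for example, pages="1-3") and return the detected tables page by page; you can then concatenate them with pandas after removing header rows repeated on continuation pages. Power Query can handle multi-page scenarios through data transformation steps such as appending per-page tables, while AI-powered platforms typically handle page spanning automatically through context understanding.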

How Can I Automate PDF to Excel Conversion for Regular Reports?

For recurring PDF processing, set up automated workflows using platforms like Airbyte that support scheduled data synchronization. You can configure source connectors to monitor folders for new PDF files and automatically process them according to your defined schedule, eliminating manual intervention for regular reporting cycles.
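
If you script this yourself rather than relying on a platform's scheduler, the core idea is a cursor that remembers which files were already processed. The sketch below keeps file names and modification times in a local JSON state file (a hypothetical design, not how Airbyte stores its sync state) and records a file only after it has been handled, so failures are retried on the next run.

```python
import json
from pathlib import Path
from typing import Dict, List


def _load_state(state_file: Path) -> Dict[str, float]:
    """Read the file-name -> modification-time map saved by earlier runs."""
    return json.loads(state_file.read_text()) if state_file.exists() else {}


def find_new_pdfs(folder: Path, state_file: Path) -> List[Path]:
    """List PDFs that are new or have changed since they were last processed.

    The glob is case-sensitive on most filesystems, so ".PDF" files are skipped.
    """
    seen = _load_state(state_file)
    return [
        path
        for path in sorted(folder.glob("*.pdf"))
        if seen.get(path.name) != path.stat().st_mtime
    ]


def mark_processed(path: Path, state_file: Path) -> None:
    """Record a file only after it was handled successfully."""
    seen = _load_state(state_file)
    seen[path.name] = path.stat().st_mtime
    state_file.write_text(json.dumps(seen))
```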
