iPaaS vs ETL: A Deeper Look Into the Data Integration Methods

✨ AI Generated Summary

Modern data integration demands balancing real-time connectivity and complex data transformations, with iPaaS and ETL serving distinct but complementary roles. Key points include:

- ETL: Best for batch-oriented, large-scale data consolidation, complex transformations, and enterprise analytics with strong data-quality controls.

- iPaaS: Cloud-native, real-time integration platform ideal for workflow automation, event-driven architectures, and low-code accessibility.

- AI enhances both by automating mappings, enabling predictive maintenance, and supporting adaptive processing.

- Security and compliance require robust encryption, access controls, and auditability across both approaches.

- Choosing between iPaaS and ETL depends on use case, cost, infrastructure, and team expertise, with hybrid models common.

- Airbyte bridges the gap by combining open-source flexibility, extensive connectors, AI-driven tools, and enterprise-grade security.

The modern business landscape demands sophisticated data-integration strategies that can handle everything from real-time analytics to complex AI workloads. Two primary approaches have emerged as leaders in this space: Integration Platform as a Service (iPaaS) and Extract, Transform, Load (ETL) processes.

Understanding the distinctions between these methodologies is crucial for organizations seeking to optimize their data infrastructure and drive meaningful business outcomes. The choice between iPaaS and ETL is no longer a simple matter of picking one technology over another.

Today's enterprises require solutions that can adapt to evolving business requirements while maintaining security, performance, and scalability standards. This comprehensive analysis explores the fundamental differences, use cases, and strategic considerations that should guide your integration decisions in an increasingly complex data environment.

What Is ETL and How Does It Transform Your Data Strategy?

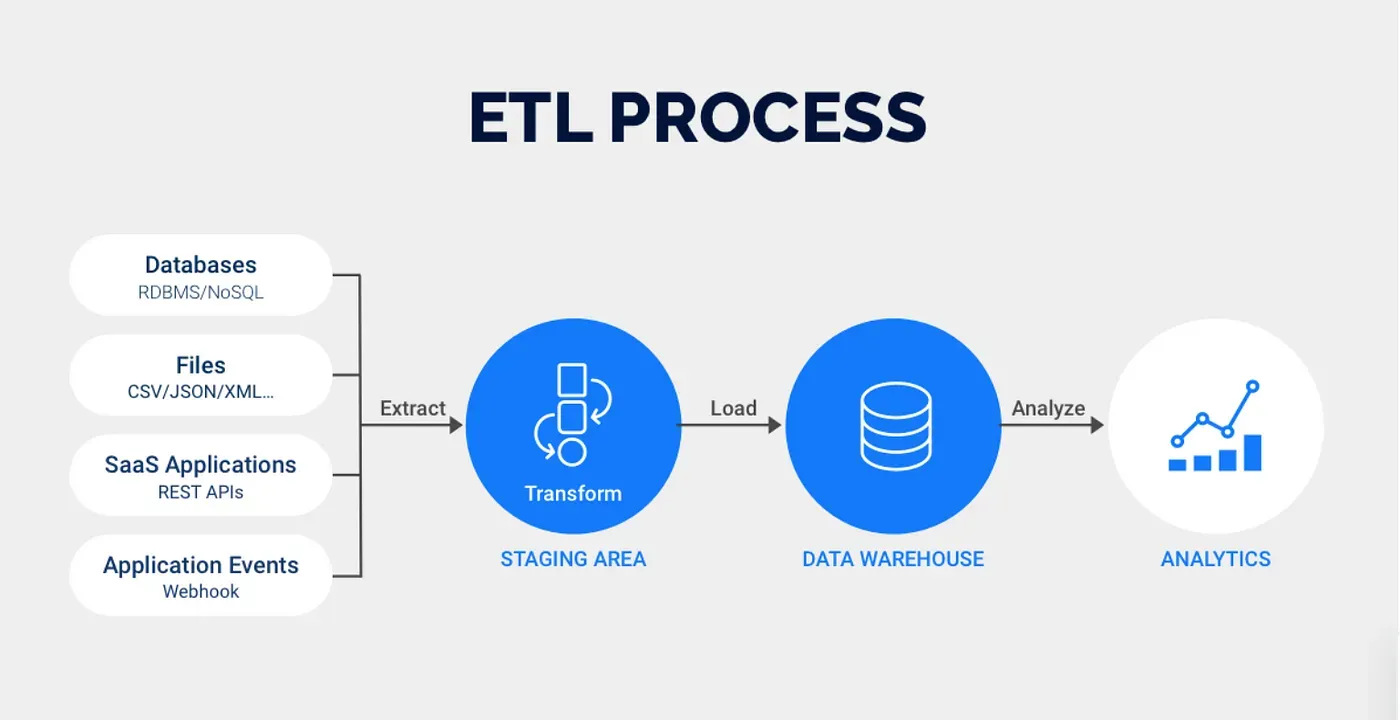

ETL (Extract, Transform, Load) represents a systematic approach to moving data from various sources into a centralized destination where it can be analyzed and utilized for business-intelligence purposes. This methodology has served as the backbone of enterprise data management for decades.

ETL gives organizations a reliable mechanism for consolidating diverse data sources into unified analytical environments. The process runs in three distinct phases, each contributing to data quality and consistency across the data ecosystem.

- Extract – Accesses data from multiple sources including databases, APIs, files, and external systems while ensuring data integrity. This phase handles the complexity of connecting to disparate systems and retrieving data in its native format.

- Transform – Cleanses, standardizes, and applies business rules to the data to ensure quality and consistency. This critical step converts raw data into meaningful information that supports business decision-making.

- Load – Moves the transformed data into target systems such as data warehouses, data marts, or analytical databases. Depending on the target, this phase may also build indexes or partitions so the data is optimized for analytical queries.
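
The three phases can be sketched end to end in a few lines of Python. This is a minimal illustration, assuming a hypothetical CSV export of orders as the source and an in-memory SQLite database standing in for the warehouse; the table and column names are invented for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (stands in for a database, API, or file).
RAW_CSV = """order_id,customer,amount,country
1, Alice ,19.99,us
2,Bob,5,US
3,  carol,42.50,de
"""

def extract(raw: str) -> list[dict]:
    """Extract: read records in the source's native format (CSV here)."""
    return list(csv.DictReader(io.StringIO(raw)))

def transform(rows: list[dict]) -> list[tuple]:
    """Transform: cleanse and standardize fields by applying simple business rules."""
    out = []
    for row in rows:
        out.append((
            int(row["order_id"]),
            row["customer"].strip().title(),   # trim whitespace, normalize name casing
            round(float(row["amount"]), 2),    # numeric type, two decimal places
            row["country"].strip().upper(),    # uppercase country code
        ))
    return out

def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Load: write into the target table and index it for analytical queries."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.execute("CREATE INDEX IF NOT EXISTS idx_orders_country ON orders(country)")
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
print(conn.execute(
    "SELECT country, SUM(amount) FROM orders GROUP BY country ORDER BY country"
).fetchall())
```

Keeping each phase in its own function mirrors the separation described above: sources or targets can change without rewriting the transformation rules.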

Why ETL Excels in Enterprise Environments

ETL approaches excel at handling complex transformation logic and data-quality controls that enterprise organizations require. The methodology enables comprehensive data validation and error handling throughout the integration process.
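
These controls are often implemented as explicit validation rules, with failing records routed to a reject (quarantine) list together with the reasons, instead of failing the whole batch or silently dropping rows. A minimal sketch, assuming hypothetical rules for an orders feed; a real pipeline would usually persist rejects to a table for review and reprocessing.

```python
from dataclasses import dataclass, field

@dataclass
class ValidationResult:
    valid: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (record, list of failed rule names)

# Hypothetical business rules: each maps a readable rule name to a predicate on a record.
RULES = {
    "order_id is a positive integer":
        lambda r: isinstance(r.get("order_id"), int) and r["order_id"] > 0,
    "amount is non-negative":
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    "country is a 2-letter code":
        lambda r: isinstance(r.get("country"), str) and len(r["country"]) == 2,
}

def validate(records):
    """Split a batch into valid records and quarantined records with reasons."""
    result = ValidationResult()
    for rec in records:
        failures = [name for name, check in RULES.items() if not check(rec)]
        if failures:
            result.rejected.append((rec, failures))
        else:
            result.valid.append(rec)
    return result

batch = [
    {"order_id": 1, "amount": 19.99, "country": "US"},
    {"order_id": -2, "amount": 5.0, "country": "US"},
    {"order_id": 3, "amount": -1, "country": "Germany"},
]
res = validate(batch)
print(len(res.valid), "valid,", len(res.rejected), "rejected")
for rec, reasons in res.rejected:
    print(rec["order_id"], reasons)
```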

Organizations benefit from ETL's ability to consolidate massive volumes of information into unified repositories. This centralized approach supports consistent reporting and analytics across business units.

Understanding ETL Limitations

Traditional ETL requires significant technical expertise to build and maintain effectively. Organizations often need specialized data engineering teams to manage ETL infrastructure and troubleshoot integration issues.

The batch-oriented nature of ETL can introduce latency for time-sensitive insights. Real-time analytics requirements may not be well-served by traditional ETL approaches.
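
The latency cost of batch scheduling can be made concrete. Assuming a job that captures its source snapshot at the start of each run and never overlaps or fails, a change that lands just after extraction waits a full scheduling interval for the next run, then the job's runtime before it is queryable, so worst-case staleness is roughly interval plus runtime. The schedules below are hypothetical.

```python
from datetime import timedelta

def worst_case_staleness(interval: timedelta, runtime: timedelta) -> timedelta:
    """Upper bound on data age for a scheduled batch job.

    A change made just after a run's extraction is missed by that run, picked up by
    the next run `interval` later, and becomes queryable `runtime` after that.
    """
    return interval + runtime

print(worst_case_staleness(timedelta(hours=24), timedelta(hours=2)))     # 1 day, 2:00:00
print(worst_case_staleness(timedelta(minutes=15), timedelta(minutes=5)))  # 0:20:00
```

Shortening the interval reduces the bound, but only down to the job's runtime, which is one reason continuous or event-driven pipelines are used when freshness matters.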

ETL may struggle with unstructured or semi-structured data without preprocessing. Modern data sources often require additional transformation steps to fit into traditional ETL frameworks.
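
A typical preprocessing step is flattening nested JSON, such as an API response, into columns that a tabular warehouse can store. A minimal sketch, assuming dotted column names and lists serialized as JSON strings; both are illustrative choices rather than a standard.

```python
import json

def flatten(obj: dict, parent_key: str = "", sep: str = ".") -> dict:
    """Flatten nested dicts into a single-level dict with dotted keys.

    Lists are stored as JSON strings here; a fuller pipeline might explode
    them into a child table instead.
    """
    flat = {}
    for key, value in obj.items():
        new_key = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, new_key, sep))   # recurse into nested objects
        elif isinstance(value, list):
            flat[new_key] = json.dumps(value)           # keep arrays as a JSON text column
        else:
            flat[new_key] = value
    return flat

event = json.loads(
    '{"id": 7, "user": {"name": "Ana", "address": {"city": "Lisbon"}}, "tags": ["vip", "beta"]}'
)
print(flatten(event))
```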

What Is iPaaS and Why Are Organizations Embracing This Approach?

iPaaS (Integration Platform as a Service) is a cloud-native approach to connecting applications, data sources, and business processes through standardized APIs and pre-built connectors. This platform-based methodology emphasizes real-time connectivity and workflow automation.

iPaaS enables organizations to create responsive integration architectures that adapt quickly to changing business requirements. The cloud-native foundation provides automatic scaling and reduces infrastructure maintenance overhead.

Strategic Benefits of iPaaS Implementation

Low-code and no-code environments democratize integration work across technical and business teams. This accessibility reduces dependency on specialized data engineering resources for routine integration tasks.

Event-driven, real-time data synchronization across systems enables immediate response to business events. Organizations can trigger automated workflows based on data changes or system events.
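
Triggering workflows from data changes can be sketched as comparing two snapshots of a record set, emitting created, updated, and deleted events, and dispatching each event to registered handlers. In practice, iPaaS platforms usually receive such events through webhooks or change data capture rather than by diffing snapshots; the handlers and SKU records below are hypothetical.

```python
from collections import defaultdict
from typing import Callable

handlers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

def on(event_type: str):
    """Decorator that registers a workflow step for one event type."""
    def register(fn):
        handlers[event_type].append(fn)
        return fn
    return register

def diff(old: dict, new: dict) -> list[dict]:
    """Compare two {id: record} snapshots and emit change events in a stable order."""
    events = []
    for key in sorted(new.keys() - old.keys()):
        events.append({"type": "created", "id": key, "record": new[key]})
    for key in sorted(old.keys() & new.keys()):
        if old[key] != new[key]:
            events.append({"type": "updated", "id": key, "record": new[key]})
    for key in sorted(old.keys() - new.keys()):
        events.append({"type": "deleted", "id": key, "record": old[key]})
    return events

def dispatch(events: list[dict]) -> None:
    for event in events:
        for fn in handlers[event["type"]]:
            fn(event)

log = []

@on("created")
def sync_new_sku(event):
    # Hypothetical workflow: push a newly created SKU to a downstream system.
    log.append(f"sync new sku: {event['id']}")

@on("updated")
def notify_low_stock(event):
    # Hypothetical workflow: alert when inventory drops below 5 units.
    if event["record"]["qty"] < 5:
        log.append(f"low stock: {event['id']}")

before = {"sku-1": {"qty": 10}, "sku-2": {"qty": 3}}
after = {"sku-1": {"qty": 4}, "sku-2": {"qty": 3}, "sku-3": {"qty": 8}}
dispatch(diff(before, after))
print(log)
```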

Automatic scaling, reduced infrastructure maintenance, and global accessibility make iPaaS attractive for distributed organizations. Cloud-native deployment eliminates many traditional infrastructure management challenges.

Potential Challenges with iPaaS Adoption

Platform dependence and potential vendor lock-in represent significant concerns for enterprise organizations. Proprietary integration logic may create switching costs and limit long-term flexibility.

Distributed architecture can complicate troubleshooting and monitoring across multiple systems. Organizations need robust observability tools to maintain visibility into integration performance.

How Do iPaaS vs ETL Approaches Differ in Their Core Philosophy?

The fundamental distinction between iPaaS and ETL lies in their architectural philosophy and primary use cases. iPaaS is a cloud-based platform designed for real-time application and data integration, whereas ETL is a process focused on extracting, transforming, and loading data into centralized repositories for analytics.

According to Google Trends, ETL continues to draw more search interest than iPaaS, reflecting its established position in enterprise data-management strategies. This pattern suggests that ETL remains relevant in modern data architectures.

What Are the Detailed Technical and Strategic Differences Between These Approaches?

Primary Objectives and Business Focus

iPaaS prioritizes application integration, device connectivity, and business-process automation. These platforms excel at connecting disparate business applications and enabling real-time workflow automation.

ETL focuses on data consolidation, quality assurance, and analytical data preparation. The methodology emphasizes creating clean, consistent datasets for business intelligence and reporting purposes.

Architectural Design Patterns

iPaaS employs distributed, event-driven architectures with API gateways and message brokers. This design pattern supports real-time connectivity and enables loose coupling between integrated systems.
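
The loose coupling comes from publishers and subscribers knowing only a topic name, not each other. A minimal in-memory sketch of the publish/subscribe pattern follows; it is a stand-in for a real message broker (no persistence, delivery guarantees, or network transport), and the topic and service names are hypothetical.

```python
from collections import defaultdict, deque

class Broker:
    """Toy message broker: one FIFO queue per (topic, subscriber) pair."""

    def __init__(self):
        self.queues = defaultdict(dict)  # topic -> {subscriber name: deque of messages}

    def subscribe(self, topic: str, subscriber: str) -> None:
        self.queues[topic][subscriber] = deque()

    def publish(self, topic: str, message) -> None:
        # Fan out: each subscriber gets its own copy; the publisher never sees who they are.
        for queue in self.queues[topic].values():
            queue.append(message)

    def poll(self, topic: str, subscriber: str):
        """Return the subscriber's next message, or None if its queue is empty."""
        queue = self.queues[topic][subscriber]
        return queue.popleft() if queue else None

broker = Broker()
broker.subscribe("order.created", "billing")
broker.subscribe("order.created", "shipping")
broker.publish("order.created", {"order_id": 42})

print(broker.poll("order.created", "billing"))   # {'order_id': 42}
print(broker.poll("order.created", "shipping"))  # {'order_id': 42}
print(broker.poll("order.created", "billing"))   # None
```

Adding a third consumer only requires another `subscribe` call; the publishing system does not change, which is the property that lets iPaaS integrations evolve independently.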

ETL utilizes centralized processing with staging areas for comprehensive transformation. The centralized approach provides better control over data quality and transformation logic.
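
A staging area lets a pipeline land raw data, transform and validate it in isolation, and only then publish it to the target in a single transaction, so a failed run does not leave the target half-updated. A minimal sketch using SQLite (the upsert syntax needs SQLite 3.24 or later); the table names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL);
    CREATE TABLE stg_customers (id INTEGER, email TEXT);  -- staging area
    INSERT INTO customers VALUES (1, 'old@example.com');
""")

# 1. Land raw extracted rows in staging exactly as received.
conn.executemany(
    "INSERT INTO stg_customers VALUES (?, ?)",
    [(1, " NEW@Example.com "), (2, "b@example.com"), (3, None)],
)

# 2. Transform and validate inside staging; the target table is untouched.
conn.execute("UPDATE stg_customers SET email = lower(trim(email))")
conn.execute("DELETE FROM stg_customers WHERE email IS NULL")  # reject invalid rows
conn.commit()

# 3. Publish: upsert into the target and clear staging in one transaction.
#    `with conn` commits on success and rolls back if any statement raises.
#    `WHERE 1` avoids SQLite's parsing ambiguity between a join's ON and ON CONFLICT.
with conn:
    conn.execute("""
        INSERT INTO customers (id, email)
        SELECT id, email FROM stg_customers WHERE 1
        ON CONFLICT(id) DO UPDATE SET email = excluded.email
    """)
    conn.execute("DELETE FROM stg_customers")

print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
```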

Big-Data and Scalability Considerations

Many iPaaS platforms integrate with streaming platforms such as Apache Kafka for high-velocity data processing. This capability supports real-time analytics and immediate response to business events.
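
Stream processing typically aggregates events over time windows rather than over a complete dataset. The sketch below computes a tumbling-window count in plain Python, assuming events arrive as (timestamp in seconds, key) pairs; a production system would use a stream processor that also emits windows as they close and handles late or out-of-order events.

```python
from collections import Counter, defaultdict
from typing import Iterable, Tuple

def tumbling_window_counts(events: Iterable[Tuple[float, str]], window_seconds: int) -> dict:
    """Count events per key in fixed, non-overlapping windows.

    Each event belongs to the window starting at floor(ts / window) * window.
    """
    windows = defaultdict(Counter)
    for ts, key in events:
        window_start = int(ts // window_seconds) * window_seconds
        windows[window_start][key] += 1
    return {start: dict(counts) for start, counts in sorted(windows.items())}

stream = [(0.5, "click"), (12.0, "view"), (59.9, "click"), (60.0, "click"), (75.2, "view")]
print(tumbling_window_counts(stream, window_seconds=60))
```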

ETL leverages parallel batch processing for large volumes of structured data. The batch-oriented approach optimizes resource utilization for massive data transformation workloads.
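
Parallel batch processing usually means splitting a large dataset into partitions, processing each independently, and merging the partial results. The sketch below shows that split, process, and merge shape with Python's `concurrent.futures`; it uses a thread pool for simplicity, whereas CPU-heavy transformations in CPython would normally run in a process pool or a distributed engine. The partition size and sample data are arbitrary assumptions.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(rows: list, size: int) -> list:
    """Split rows into consecutive chunks of at most `size` rows."""
    return [rows[i:i + size] for i in range(0, len(rows), size)]

def process_partition(rows: list) -> dict:
    """Transform one partition into a partial aggregate (total amount per region)."""
    totals = {}
    for region, amount in rows:
        totals[region] = totals.get(region, 0) + amount
    return totals

def merge(partials) -> dict:
    """Combine partial aggregates from all partitions."""
    combined = {}
    for part in partials:
        for region, amount in part.items():
            combined[region] = combined.get(region, 0) + amount
    return combined

rows = [("eu", 10), ("us", 5), ("eu", 7), ("apac", 3), ("us", 1)] * 1000
with ThreadPoolExecutor(max_workers=4) as pool:
    partials = list(pool.map(process_partition, partition(rows, 1000)))
print(merge(partials))
```

The pattern works because the aggregate is associative: summing per partition and then summing the partials gives the same answer as one pass over all rows.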

How Are AI and Automation Transforming Modern Integration Approaches?

AI and machine learning are driving both iPaaS and ETL toward intelligent, self-optimizing systems that fundamentally change how organizations approach data integration. These technologies enable predictive capabilities that anticipate issues before they impact business operations.

Modern AI-enabled integration platforms can predict failures and recommend remediation strategies based on historical patterns and real-time monitoring, which can substantially reduce downtime and maintenance overhead.

Auto-generation of data mappings and transformation logic can also reduce implementation effort substantially.

Intelligent Data Mapping and Transformation

AI analyzes source and target schemas, suggests field mappings, and can even generate transformation code. Machine-learning models learn from historical mapping decisions to improve accuracy over time.

These systems can identify semantic relationships between data elements across different systems. This capability enables more accurate data integration and reduces manual mapping errors.
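
Production tools use trained models and semantic metadata for this, but the basic loop, scoring each source field against each target field and proposing the best match above a threshold, can be sketched with a string-similarity heuristic from Python's standard library. This is a deliberately simple stand-in for an ML model; the field names and the 0.6 threshold are hypothetical.

```python
import re
from difflib import SequenceMatcher

def normalize(name: str) -> str:
    """Lowercase and strip separators so 'Cust_Email' and 'customerEmail' compare fairly."""
    return re.sub(r"[^a-z0-9]", "", name.lower())

def suggest_mappings(source_fields, target_fields, threshold=0.6):
    """Return {source: (best_target, score)} for matches at or above the threshold."""
    suggestions = {}
    for src in source_fields:
        scored = [
            (tgt, SequenceMatcher(None, normalize(src), normalize(tgt)).ratio())
            for tgt in target_fields
        ]
        best, score = max(scored, key=lambda pair: pair[1])
        if score >= threshold:
            suggestions[src] = (best, round(score, 2))
    return suggestions

source = ["Cust_Email", "first_nm", "PostalCode", "internal_flag"]
target = ["customer_email", "first_name", "zip_code", "last_name"]
for src, (tgt, score) in suggest_mappings(source, target).items():
    print(f"{src} -> {tgt} ({score})")
```

Note that `PostalCode` is not matched to `zip_code`: lexical similarity cannot recognize synonyms, which is exactly the kind of semantic relationship that model-based mapping aims to capture.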

Real-Time Decision Making and Adaptive Processing

AI-enabled platforms can reroute data, adjust processing strategies, and trigger downstream actions automatically based on live conditions. This adaptive capability helps maintain performance as data volumes and patterns change.

Dynamic resource allocation based on workload patterns optimizes cost and performance automatically. AI monitors system performance and adjusts resources to maintain service levels while minimizing costs.
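
A simple form of workload-based resource allocation is a rule that sizes the worker pool from the current backlog and observed per-worker throughput, clamped to configured limits. The sketch below is a plain heuristic, not an AI model; the target drain time and the limits are hypothetical parameters that a platform might expose or learn.

```python
import math

def desired_workers(backlog: int, per_worker_rate: float, target_drain_s: float,
                    min_workers: int = 1, max_workers: int = 20) -> int:
    """Workers needed to clear `backlog` records within `target_drain_s` seconds.

    per_worker_rate is the observed throughput of one worker in records per second.
    """
    if per_worker_rate <= 0:
        raise ValueError("per_worker_rate must be positive")
    needed = math.ceil(backlog / (per_worker_rate * target_drain_s))
    return max(min_workers, min(max_workers, needed))

print(desired_workers(backlog=0, per_worker_rate=50, target_drain_s=60))        # 1 (floor)
print(desired_workers(backlog=12_000, per_worker_rate=50, target_drain_s=60))   # 4
print(desired_workers(backlog=500_000, per_worker_rate=50, target_drain_s=60))  # 20 (capped)
```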

What Security and Compliance Considerations Should Guide Your Integration Choice?

Modern integration environments must address increasingly complex security and regulatory requirements that span multiple jurisdictions and data types. Organizations need comprehensive security frameworks that protect data throughout the integration lifecycle.

Data-sovereignty requirements differ by regulation. HIPAA requires safeguards for protected health information wherever it is processed or stored, while GDPR focuses on processing safeguards and restrictions on transferring personal data outside the European Economic Area rather than on explicit data-location mandates. Integration platforms should therefore provide visibility into and control over where data is processed and stored, both to support HIPAA compliance and to meet GDPR obligations for cross-border transfers.

Encryption in transit (for example, TLS) protects data as it moves between systems, and modern integration platforms also encrypt data at rest. Some increasingly protect data in use as well, through advanced techniques such as confidential computing.

Identity and access management with granular role-based access control (RBAC) and multi-factor authentication (MFA) ensures that only authorized users can access sensitive data and integration functions.
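
Role-based access control can be sketched as a mapping from roles to permissions, with every request checked against the union of the user's roles and denied by default. Real platforms add scoping to specific workspaces or connections, session handling, and audit logging; the role and permission names here are hypothetical.

```python
# Hypothetical role definitions: each role grants a set of permissions.
ROLE_PERMISSIONS = {
    "viewer": {"pipeline:read"},
    "editor": {"pipeline:read", "pipeline:edit", "pipeline:run"},
    "admin": {"pipeline:read", "pipeline:edit", "pipeline:run", "credentials:manage"},
}

def permissions_for(roles) -> set:
    """Union of permissions granted by all of a user's roles (unknown roles grant nothing)."""
    return set().union(*(ROLE_PERMISSIONS.get(role, set()) for role in roles))

def authorize(user_roles, permission: str, mfa_verified: bool) -> bool:
    """Deny by default: require a completed MFA check and an explicit grant."""
    return mfa_verified and permission in permissions_for(user_roles)

print(authorize(["editor"], "pipeline:run", mfa_verified=True))   # True
print(authorize(["viewer"], "pipeline:edit", mfa_verified=True))  # False
```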

Comprehensive logging, monitoring, and audit trails enable compliance reporting and security incident investigation. Integration platforms must provide detailed logs of all data access and transformation activities.
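
One way to make an audit trail tamper-evident is to chain entries with cryptographic hashes, so each entry records the hash of the one before it and any later edit breaks verification. This is one technique among several (write-once storage is another); the sketch uses SHA-256 from Python's standard library, hypothetical field names, and omits timestamps that a real log would include.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(entry: dict) -> str:
    # Canonical JSON (sorted keys) so identical entries always hash identically.
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()

def append(log: list, actor: str, action: str, resource: str) -> None:
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"actor": actor, "action": action, "resource": resource, "prev_hash": prev_hash}
    entry["hash"] = _digest(entry)  # hash covers every field except the hash itself
    log.append(entry)

def verify(log: list) -> bool:
    """Recompute each hash and check the chain links; any edit makes this False."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True

audit = []
append(audit, "alice", "read", "crm.contacts")
append(audit, "etl-job-7", "transform", "warehouse.orders")
print(verify(audit))           # True
audit[0]["actor"] = "mallory"  # tamper with history
print(verify(audit))           # False
```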

Vendor risk management and disaster-recovery planning ensure business continuity even in adverse scenarios. Organizations need integration platforms with robust backup and recovery capabilities.

Which Use Cases Favor iPaaS Implementation Over ETL?

Real-time workflows and event-driven architectures benefit significantly from iPaaS capabilities. Organizations with immediate response requirements find iPaaS platforms more suitable than batch-oriented ETL processes.

IoT data ingestion and automation scenarios require the real-time connectivity that iPaaS platforms provide. Manufacturing, logistics, and smart city applications often require immediate processing of sensor data.

SaaS-to-SaaS business-process automation eliminates manual data entry and reduces process delays. Organizations using multiple cloud applications benefit from seamless connectivity between systems.

E-commerce synchronization for inventory, orders, and customer data requires real-time updates across multiple systems. iPaaS platforms enable immediate synchronization that supports customer experience and operational efficiency.

Supply-chain coordination across suppliers, logistics, and internal systems requires real-time visibility and automated responses. iPaaS platforms support the complex workflows needed for modern supply chain management.

Which Scenarios Are Best Suited for ETL Approaches?

Large-scale data warehousing and business intelligence initiatives require the comprehensive data transformation capabilities that ETL provides. Organizations building enterprise data warehouses typically rely on ETL processes for data consolidation.

Legacy-system migrations requiring complex transformations benefit from ETL's structured approach to data processing. Mainframe migrations and system consolidation projects often require sophisticated transformation logic.

Regulatory or financial reporting with strict data-quality requirements demands the validation and error-handling capabilities that ETL provides. Banking, insurance, and healthcare organizations often require ETL for compliance reporting.

Customer 360 analytics aggregating data from many operational systems requires comprehensive data consolidation and transformation. ETL processes excel at creating unified customer views from disparate source systems.

How Should You Choose Between iPaaS vs ETL for Your Organization?

Start with your primary goal. Organizations prioritizing real-time operational workflows should consider iPaaS, while those focused on comprehensive analytics should evaluate ETL approaches.

Cost profile analysis includes both initial implementation and ongoing operational costs. iPaaS typically involves subscription and managed services costs, while ETL may offer better economics for high-volume batch processing.

Infrastructure preferences significantly impact platform selection. Cloud-first organizations often prefer iPaaS solutions, while organizations with hybrid or on-premises requirements may favor ETL approaches.

Team skills and organizational capabilities influence implementation success. Organizations with API expertise and low-code preferences may find iPaaS more accessible, while those with data engineering and SQL expertise might prefer ETL.

How Does Airbyte Bridge the Gap Between Modern iPaaS and ETL Requirements?

Airbyte positions itself as "the open data movement platform," delivering comprehensive integration capabilities that combine the best aspects of both iPaaS and ETL approaches. The platform addresses the fundamental trade-offs that have historically forced organizations to choose between flexibility and functionality.

Airbyte provides 600+ pre-built connectors, reducing the development overhead associated with custom integration work. This connector library covers databases, APIs, files, and SaaS applications across major business domains.

The platform's AI-driven connector builder enables rapid configuration of custom integrations without traditional development cycles. Organizations can create specialized connectors for proprietary systems and industry-specific applications through automated code generation.

Native vector-database destinations support RAG and other AI use cases that are becoming increasingly important for modern enterprises. This capability positions Airbyte at the forefront of AI-driven data integration requirements.

Enterprise-Grade Security and Compliance

Compliance with SOC 2 Type II, ISO 27001, GDPR, and HIPAA helps ensure that Airbyte meets enterprise security standards across multiple regulatory frameworks. Multi-region deployments provide data-sovereignty control for organizations operating across international boundaries.

The platform's open-source foundation, combined with enterprise security features, reduces the vendor lock-in concerns that often constrain integration platform selection. Organizations maintain control over their integration logic while benefiting from enterprise-grade security and governance.

Developer-Friendly Integration Tools

Developer-friendly tools like PyAirbyte and a flexible Connector Development Kit enable technical teams to customize and extend integration capabilities. These tools bridge the gap between no-code accessibility and developer flexibility.

The platform generates open-source code that remains portable across different deployment environments. This approach ensures that organizations can evolve their integration architecture without being constrained by proprietary formats or vendor-specific requirements.

Frequently Asked Questions

What is the main difference between iPaaS and ETL?

iPaaS is a cloud-based integration platform for real-time application and data connectivity, whereas ETL is a process for extracting, transforming, and loading data into centralized repositories for analytics.

Can iPaaS replace traditional ETL processes?

iPaaS can handle many ETL-like tasks—especially real-time synchronization—but complex analytical transformations often still require ETL. Many organizations run hybrid models.

Which approach is more cost-effective for growing organizations?

It depends on volume and use case: iPaaS offers predictable subscription costs and low infrastructure overhead; ETL can be more economical at scale for heavy analytical workloads.

How do security requirements impact the choice?

Both can meet enterprise-grade security. iPaaS provides managed, built-in controls; ETL offers more direct control in on-prem or hybrid deployments. Evaluate certifications and compliance needs.

What skills are needed?

iPaaS leans on low-code tools, API familiarity, and workflow design. ETL requires data-engineering know-how, SQL expertise, and experience with complex transformations.
