How to Build a Data Orchestration Pipeline Using Luigi in Python?


✨ AI Generated Summary

Luigi, developed by Spotify in 2011, is a Python-based framework designed for building and orchestrating batch data pipelines with a target-based dependency resolution system. It remains useful for simple, reliable batch workflows such as machine learning training, BI reporting, and ad-performance analytics but lacks advanced scheduling, cloud-native features, and robust monitoring found in modern platforms like Apache Airflow and Prefect.

- Core features include task dependency management, atomic file operations, and built-in templates for common big-data tasks.

- Limitations involve basic scheduling, minimal enterprise security, limited cloud integration, and challenges in handling complex parallel workflows.

- Modern alternatives like Airbyte offer enhanced data integration with AI-powered connectors, enterprise-grade security, scalability, and native cloud platform support.

Luigi, developed by Spotify in 2011, stands as one of the foundational frameworks in Python's data-orchestration ecosystem, though its position has evolved significantly as the industry has matured toward more sophisticated orchestration platforms. While newer tools like Apache Airflow and Prefect have gained market dominance, Luigi maintains relevance for specific use cases where simplicity and batch-processing reliability are prioritized over advanced scheduling and monitoring capabilities.

High-performance data pipelines help an organization use its resources efficiently and, ultimately, operate more profitably. Data orchestration supports this by simplifying the management of pipeline tasks, including scheduling and error handling.

Luigi, a Python library built to streamline complex workflows, can help you effectively build and orchestrate data pipelines. Here, you will learn how to build Luigi Python data-orchestration pipelines and their real-world use cases.

You can utilize this information to create robust data pipelines and improve the operational performance of your organization.

What Is Luigi and How Does It Work in Python Data Orchestration?

Luigi is an open-source Python package that provides a framework to develop complex batch data pipelines and orchestrate various tasks within these pipelines. It simplifies the development and management of data workflows by offering features such as workflow management, failure handling, and command-line integration.

Spotify, a digital music service company, originally built Luigi to coordinate tasks involving data extraction and processing. Its developers later extended the framework, particularly its handling of task dependencies.

However, Spotify itself no longer actively maintains Luigi and has shifted to different orchestration tools like Flyte, though the open-source community continues to contribute to its development.

Luigi's target-based architecture distinguishes it from more modern DAG-based approaches, utilizing a backward dependency-resolution system where tasks are defined with specific outputs and dependencies. This architectural choice reflects Luigi's origins in batch-processing environments where sequential task execution was the primary concern, contrasting with today's emphasis on parallel execution and dynamic workflow management.
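To make the idea of backward dependency resolution concrete, here is a toy model in plain Python. It is not Luigi's code, and every name in it is hypothetical; it only illustrates how a build can start from the requested output, walk back through the requirements, and skip anything whose output already exists.

```python
# Toy model of target-based, backward dependency resolution.
# This is NOT Luigi's implementation; all names are hypothetical.

done = set()  # stands in for "this task's output target exists"
log = []      # order in which tasks actually ran

# task name -> names of the tasks it requires
requires = {
    "report": ["clean"],
    "clean": ["download"],
    "download": [],
}


def build(task):
    """Run `task` after its requirements, skipping anything already done."""
    if task in done:            # output exists, so there is nothing to do
        return
    for upstream in requires[task]:
        build(upstream)         # walk backward from the requested output
    log.append(task)            # "run" the task
    done.add(task)              # its target now exists


done.add("download")            # pretend the download output is already on disk
build("report")
print(log)                      # ['clean', 'report']
```

Only the tasks whose outputs were missing ran, and they ran in dependency order even though the request named just the final output.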

Why Should You Use Luigi Python for Building Data Pipelines?

Luigi remains a practical choice for developing batch-oriented data-orchestration pipelines. Some of the reasons for this are as follows:

Automation of Complex Workflows

Luigi enables you to chain multiple tasks in long-running batch processes. These processes include running Hadoop jobs, transferring data between databases, or running machine-learning algorithms.

You define the dependencies between these tasks, and Luigi uses them to execute the tasks in the correct order.

The framework's idempotent task design ensures that completed tasks are not re-executed unless explicitly required, providing efficiency in large, complex pipelines where only failed components need reprocessing. This architectural decision contributes to Luigi's reputation for reliability and resource efficiency in production environments.

Built-in Templates for Common Tasks

Luigi offers pre-built templates for common tasks, such as running Python MapReduce jobs in Hadoop, Hive, or Pig. These templates can save significant time and effort when performing big-data operations, making it easier to implement complex workflows.

The contrib module has seen substantial enhancements, particularly in cloud-platform integration. BigQuery support has been expanded with Parquet-format compatibility and network-retry logic, addressing reliability concerns in production environments.

The addition of a configure_job property provides greater control over BigQuery job-execution parameters, enabling optimization for specific use cases.

Atomic File Operations

An atomic file operation either completes fully or leaves no visible result, so no other process ever sees a partially written file. Luigi supports such operations by providing file-system abstractions for HDFS (Hadoop Distributed File System) and local files.

These abstractions, implemented as Python classes with various methods, write output to a temporary location and move it to its final path only when the write finishes. If an error occurs partway through, no half-written output is left behind for downstream tasks to mistake for a complete one, which keeps data pipelines robust.

The framework's retry mechanism provides resilience against transient failures, with configurable retry counts and retry delay strategies. Task failures can be handled gracefully through the built-in retry system, which can be configured globally or on a per-task basis.

What Are the Core Components of Luigi Python Architecture?

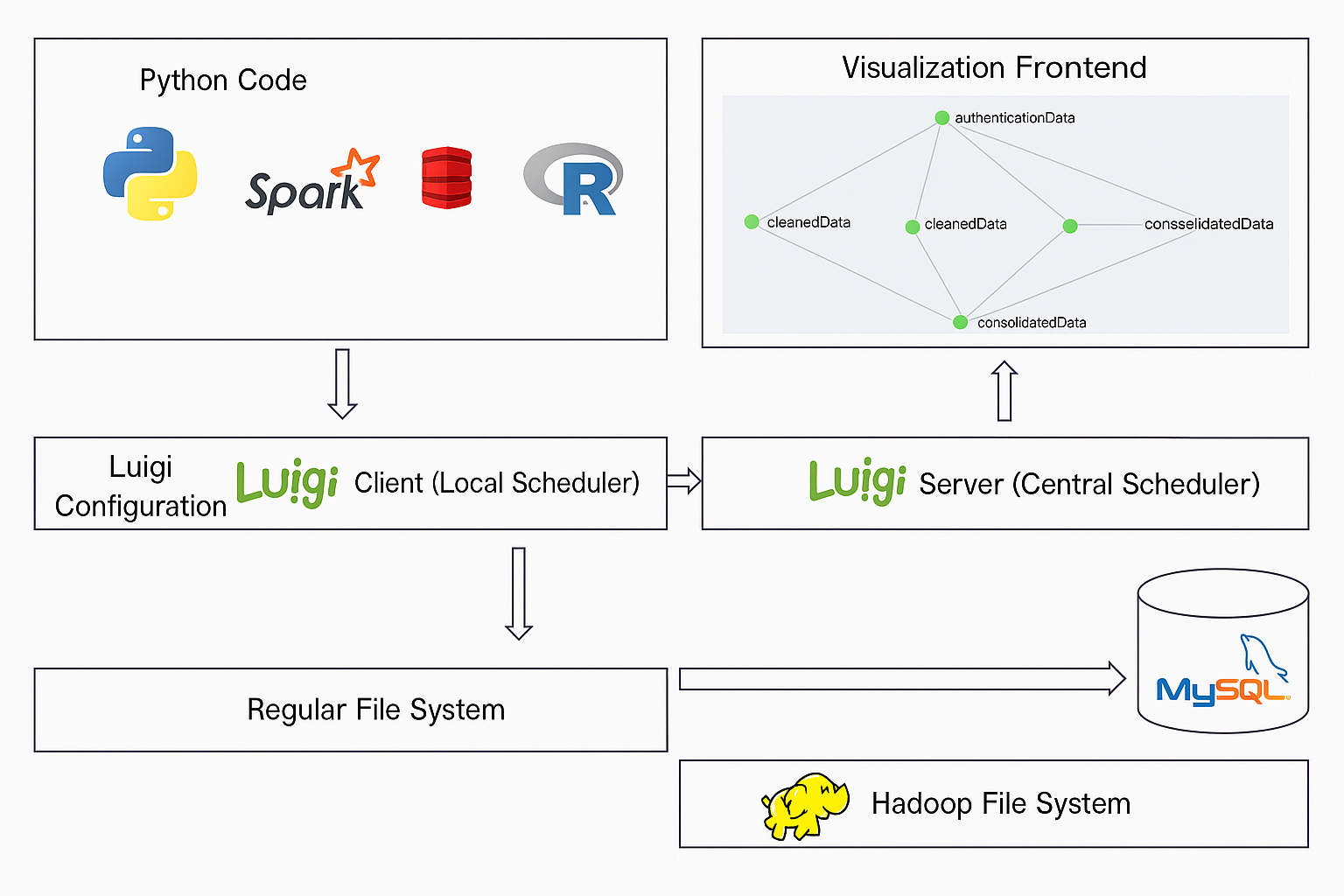

To effectively use Luigi for building data-orchestration pipelines, it is important to understand its core components and how they work together. Luigi has a simple architecture based on tasks written in Python.

The architecture includes the option of a centralized scheduler, which helps ensure that two instances of the same task aren't running simultaneously. It also provides visualizations of task progress.

Tasks

Tasks in Luigi are Python classes where you define the input, output, and dependencies for executing data-pipeline jobs. Each task can depend on other tasks and on the output targets those tasks produce.

The task-definition model in Luigi requires three core methods:

- requires() – specify dependencies

- output() – define the task's target output

- run() – implement the actual task logic

Dynamic-dependency resolution represents one of Luigi's more sophisticated features, allowing tasks to yield additional dependencies during execution. This mechanism enables workflows where the full dependency graph cannot be determined until runtime, such as scenarios where the number of input files or data partitions varies based on external conditions.

Targets

Targets represent the resources produced by tasks, such as a file on disk or a record in a database. They are the outputs that exist once a task's code has run successfully.

A single task can create one or more targets as output and is considered to be complete only after the production of all of its targets. To check if the task has created a target or not, you can use the exists() method.

The framework provides built-in support for various target types, including local files, HDFS files, and database records, with the flexibility to create custom target types for specialized use cases. This target-abstraction layer provides a foundation for implementing custom storage backends that integrate with cloud services like Amazon S3, Google Cloud Storage, and Azure Blob Storage.

Dependencies

In Luigi, dependencies represent the relationships between tasks of a data-pipeline workflow. Luigi uses them to execute all the tasks in the correct order, so that each task runs only after the tasks it depends on have completed.

This enables you to streamline your workflows and prevent errors caused by out-of-order task execution.

Parameter handling in Luigi supports type-safe configuration through specialized parameter classes like IntParameter, DateParameter, and BoolParameter. These parameters can be specified via command-line arguments, configuration files, or programmatically, providing flexibility in how workflows are parameterized and executed.

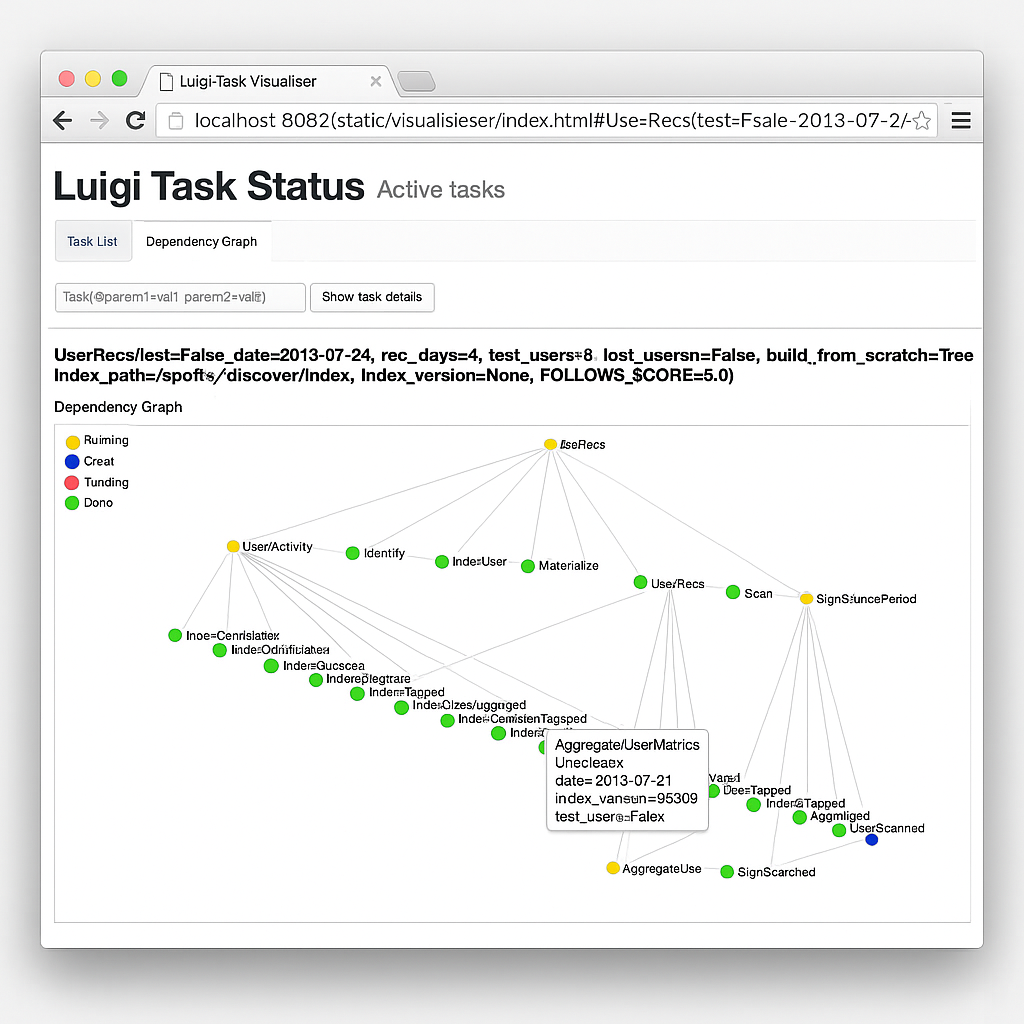

Dependency Graphs

Dependency graphs in Luigi are illustrations that provide a visual overview of your data workflows. In these graphs, each node represents a task.

Typically, the completed tasks are represented in green, while pending tasks are indicated in yellow. These graphs help you manage and monitor the progress of your pipelines effectively.

Luigi's visualization capabilities include a web-based interface that displays task dependency graphs and execution status. The visualizer enables searching, filtering, and prioritizing tasks while providing a visual overview of workflow progress.

This interface is particularly valuable for debugging complex pipelines and understanding the relationships between different components of a data-processing workflow.

How Can You Create a Data Orchestration Pipeline Using Luigi Python?

With Python Luigi, you can build ETL data pipelines to transfer data across different data systems. In this tutorial, let's develop a simple data pipeline for transferring data between MongoDB and PostgreSQL with the Luigi Python package.

1. Install Luigi

pip install luigi

2. Import Important Libraries

3. Extract Data

The first step involves extracting data from MongoDB and saving it as a JSON file for processing.

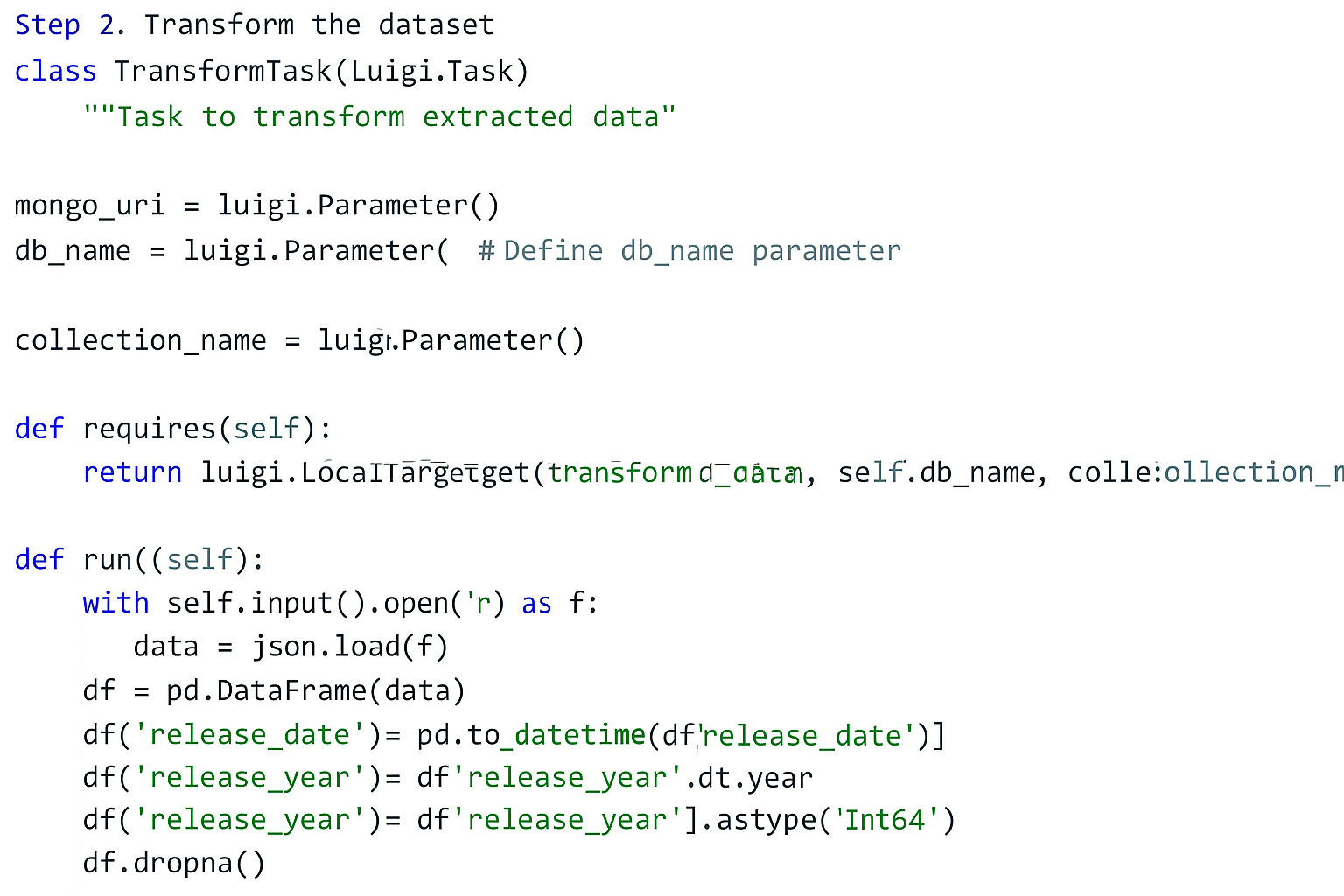

4. Transform the Data

Convert the data in a JSON file into a Pandas DataFrame to perform essential transformations and then save the standardized data as a CSV file.

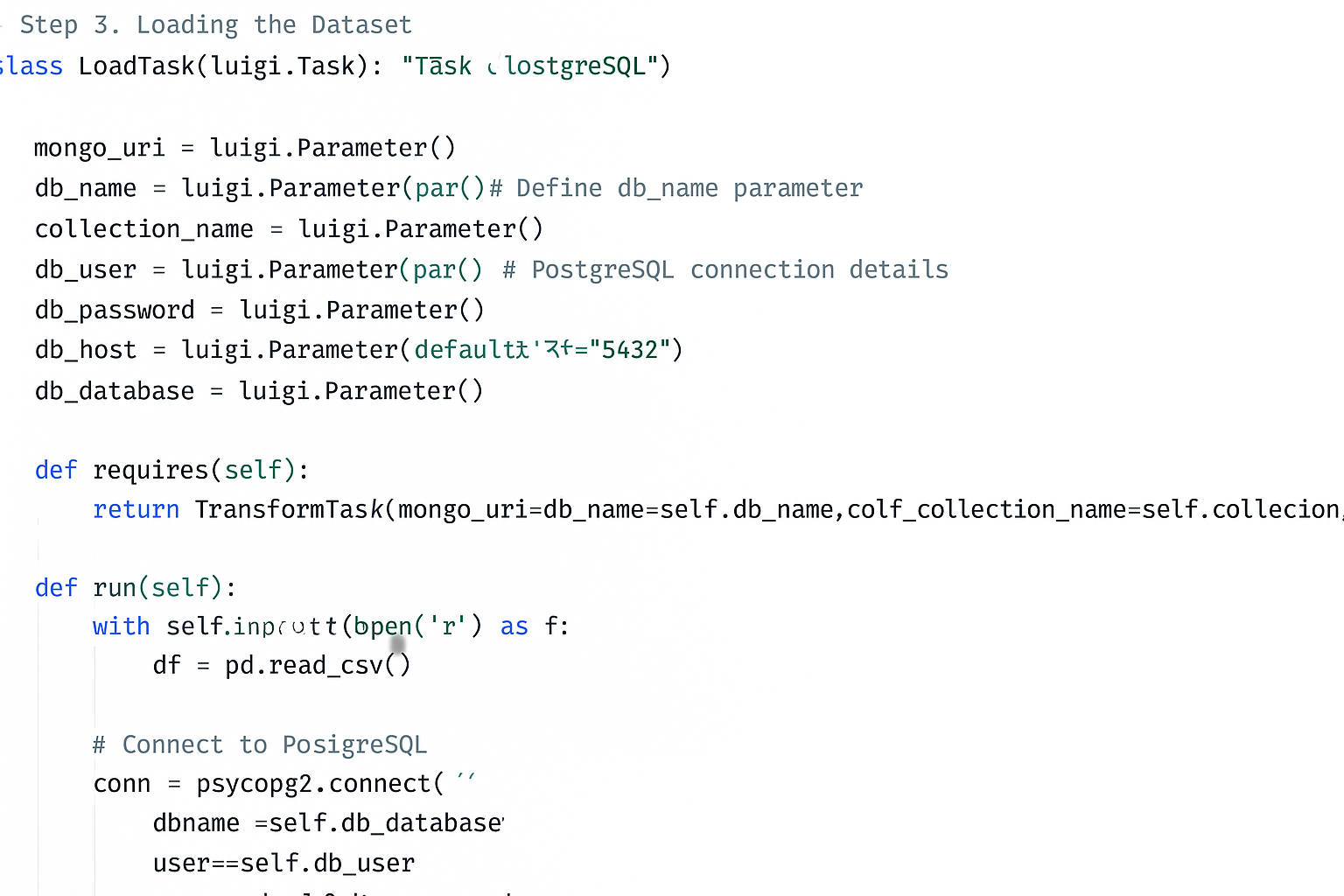

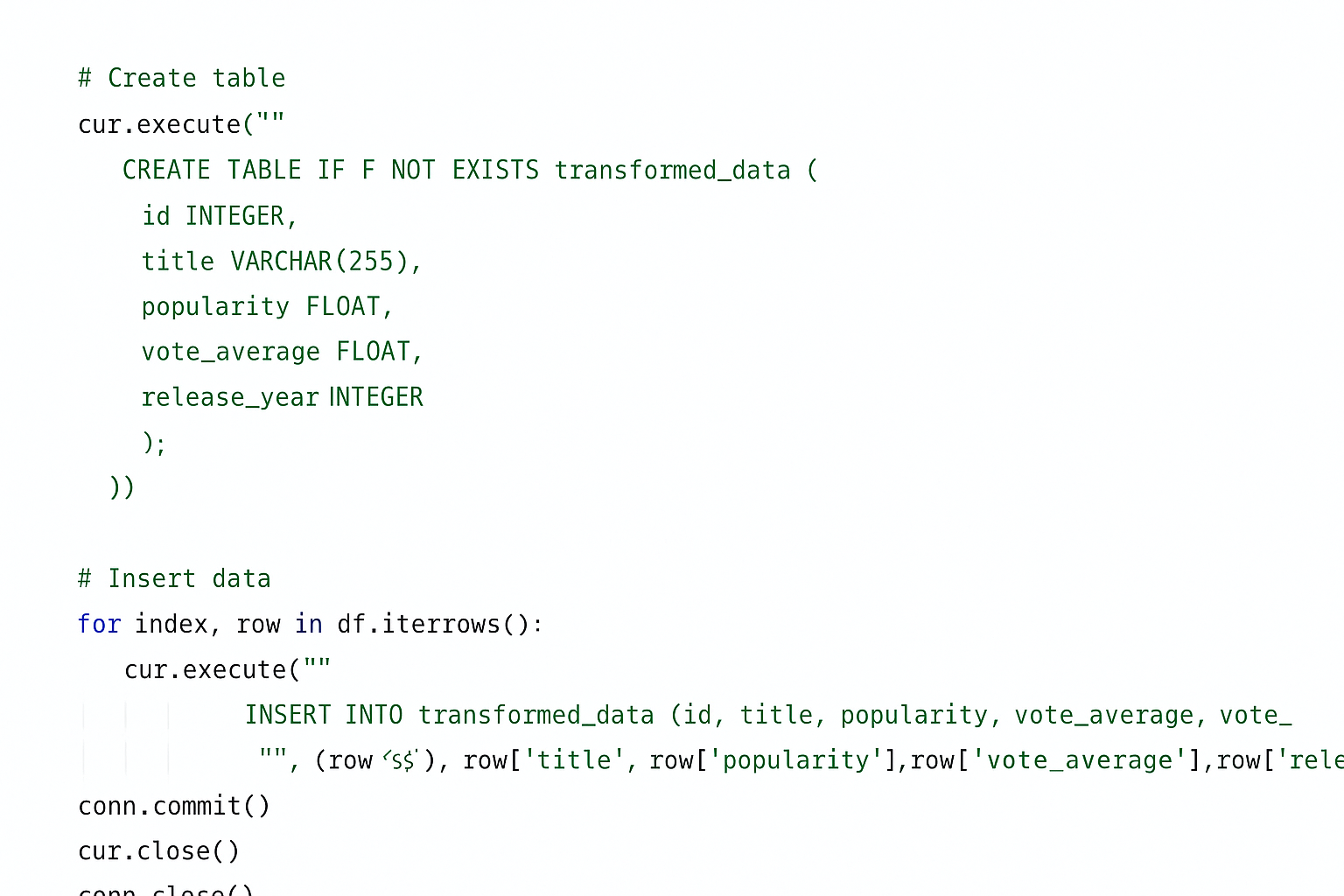

5. Load Data to PostgreSQL

Define a Luigi task LoadTask to load the transformed data into PostgreSQL tables.

If the table doesn't exist yet, create it first and then transfer data from the CSV file to Postgres:

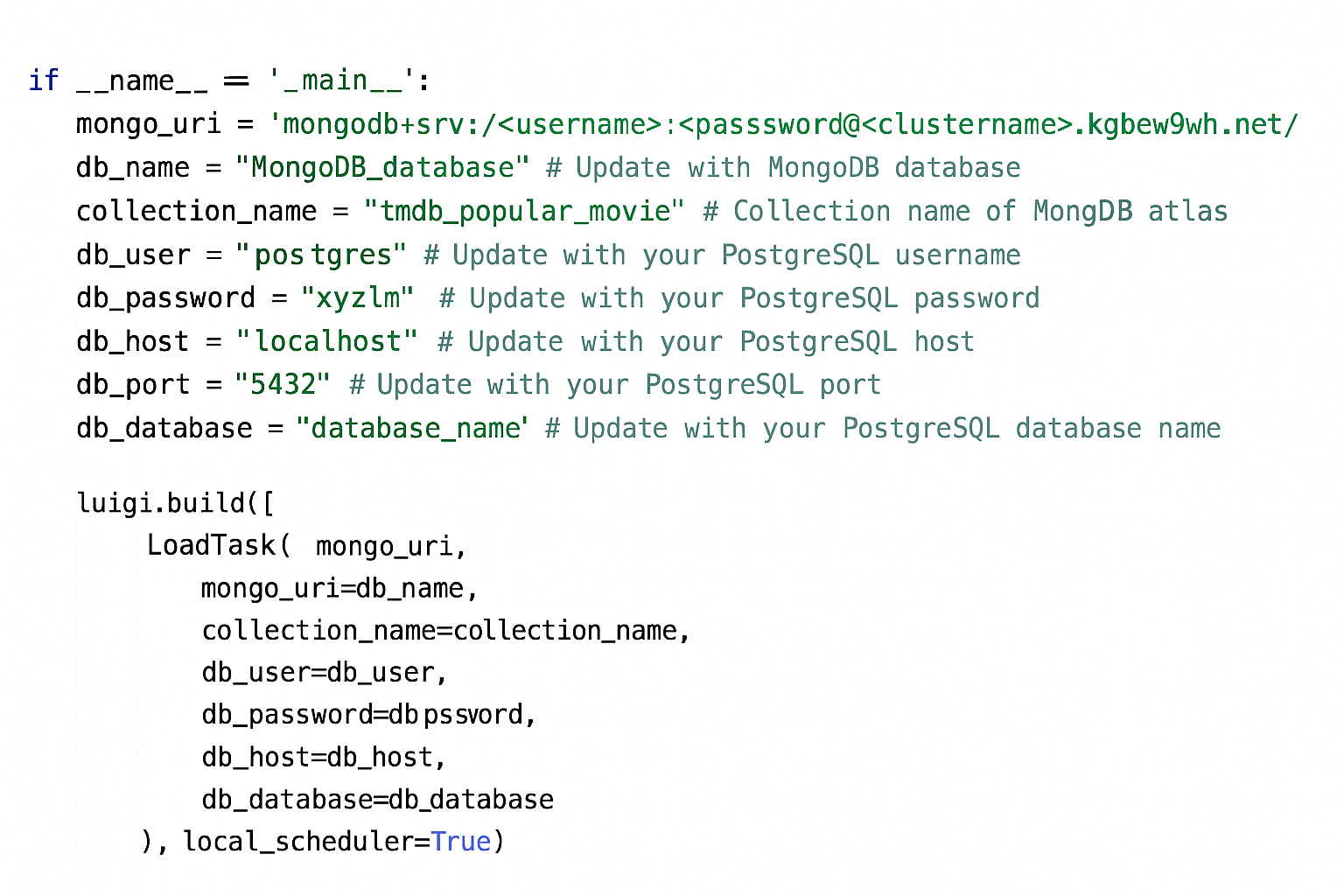

6. Run the ETL Pipeline

Trigger LoadTask with MongoDB URI, database name, and PostgreSQL credentials. Luigi orchestrates the tasks in the correct order, ensuring data integrity and consistency.

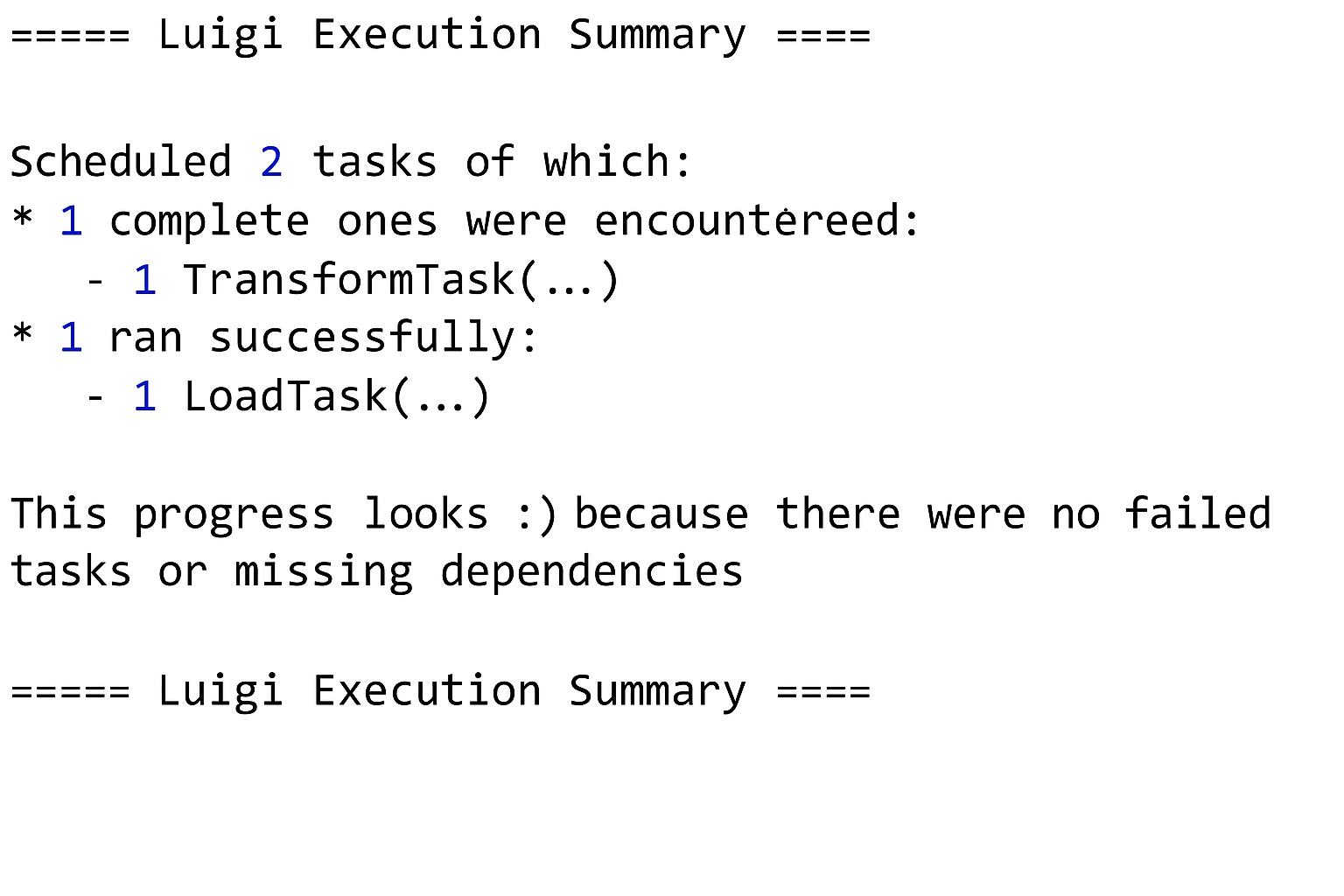

After successful execution, Luigi prints an execution summary to the console; a clean run reports that there were no failed tasks or missing dependencies.

These steps demonstrate how to build a data pipeline using Luigi.

How Does Luigi Compare to Modern Data-Orchestration Platforms?

The data-orchestration landscape has evolved significantly since Luigi's introduction, with newer frameworks addressing many of the limitations inherent in Luigi's original design philosophy. Understanding these differences helps organizations make informed decisions about their orchestration strategy.

Architectural Philosophy Differences

Luigi's target-based approach contrasts sharply with the DAG-based architectures of modern platforms like Apache Airflow and Prefect. While Luigi determines dependencies backward from desired outputs, modern frameworks model workflows as forward-flowing directed acyclic graphs.

This architectural difference impacts how teams conceptualize and build workflows. Luigi's approach works well for linear batch processes but becomes cumbersome for complex workflows requiring parallel execution or dynamic task generation.

Modern orchestration platforms embrace cloud-native architectures from the ground up, with features like auto-scaling, containerized task execution, and native cloud service integration. Luigi's architecture reflects its origins in on-premises batch processing environments.

Scheduling and Monitoring Capabilities

Luigi has no built-in trigger mechanism, so pipelines are typically started by an external scheduler such as cron, and it lacks the sophisticated scheduling capabilities that modern data teams require. Apache Airflow offers advanced scheduling with timezone awareness, complex time-based triggers, and external event-based scheduling.

Monitoring and observability represent significant gaps in Luigi's capabilities compared to modern alternatives. While Luigi provides basic web visualization, platforms like Prefect and Dagster offer comprehensive monitoring dashboards, alerting systems, and performance analytics.

The lack of built-in alerting mechanisms in Luigi requires additional tooling for production monitoring, while modern platforms include integrated alerting and notification systems.

Development Experience and Maintenance

Luigi's simplicity can be both an advantage and a limitation. While it offers a straightforward development experience for basic workflows, it lacks the development tools and debugging capabilities that modern platforms provide.

Code maintainability becomes challenging in Luigi as workflows grow in complexity. The framework's global state management and limited testing utilities make refactoring and testing more difficult compared to modern alternatives that embrace better software engineering practices.

Modern platforms offer features like workflow versioning, rollback capabilities, and better testing frameworks that support more robust development practices.

Cloud Integration and Scalability

Luigi's cloud integration capabilities lag significantly behind modern orchestration platforms. While community contributions have added some cloud connectors, the framework lacks the comprehensive cloud-native features that organizations require for modern data architectures.

Auto-scaling capabilities, containerized execution, and managed service integrations are standard features in modern platforms but require significant additional work in Luigi deployments.

The operational overhead of maintaining Luigi in production environments often exceeds that of managed orchestration services, particularly for cloud-first organizations.

Market Position and Community Support

Luigi's market position has shifted from a leading orchestration platform to a specialized tool for specific use cases. While the open-source community continues development, the pace of innovation has slowed significantly compared to actively maintained alternatives.

Organizations considering Luigi should evaluate whether its simplicity advantages outweigh the feature gaps compared to more modern alternatives that better align with contemporary data engineering practices.

What Are the Enterprise Security and Governance Considerations for Luigi?

Enterprise deployment of Luigi requires careful consideration of security and governance requirements that modern organizations demand from their data infrastructure.

Authentication and Authorization

Luigi's basic authentication model presents challenges for enterprise environments requiring sophisticated access control. The framework lacks built-in role-based access control (RBAC) capabilities that are standard in modern orchestration platforms.

Organizations must implement custom authentication layers and integrate with enterprise identity providers manually. This additional development overhead often exceeds the complexity of adopting platforms with native enterprise authentication support.

Audit logging capabilities in Luigi are limited compared to enterprise requirements for compliance and security monitoring. Organizations need to implement additional logging infrastructure to meet governance requirements.

Data Security and Compliance

Data encryption in transit and at rest requires manual configuration in Luigi deployments. Modern orchestration platforms typically include encryption as a default feature with enterprise key management integration.

Compliance requirements for regulations like GDPR, HIPAA, or SOC 2 often require features that Luigi doesn't provide natively. Organizations must implement additional security layers and controls, increasing operational complexity.

Secret management in Luigi requires integration with external systems, while modern platforms include built-in secret management with rotation and access controls.

Monitoring and Observability

Production monitoring of Luigi workflows requires significant additional tooling compared to modern platforms that include comprehensive observability features. Organizations must implement custom monitoring, alerting, and metrics collection systems.

Performance monitoring and optimization capabilities in Luigi are limited, making it difficult to identify bottlenecks and optimize resource utilization in complex workflows.

Error tracking and debugging capabilities require custom implementation, while modern platforms provide built-in error management and debugging tools.

Operational Governance

Change management and deployment practices for Luigi workflows often rely on basic version control without the sophisticated deployment and rollback capabilities that modern platforms provide.

Workflow testing and validation capabilities in Luigi require custom implementation, while modern platforms include built-in testing frameworks and validation tools.

Documentation and lineage tracking must be implemented manually in Luigi, while modern platforms provide automatic documentation generation and data lineage visualization.

How Does Airbyte Support Modern Data Orchestration Beyond Luigi Python?

While Luigi serves specific use cases in data orchestration, modern organizations require comprehensive data integration platforms that address the full spectrum of data movement challenges. Airbyte transforms how organizations approach data integration by providing enterprise-grade capabilities without the complexity and limitations of traditional orchestration-only solutions.

Modern Data Integration Architecture

Airbyte's architecture addresses the fundamental limitations that make tools like Luigi inadequate for modern data integration requirements. Rather than focusing solely on task orchestration, Airbyte provides a complete data integration platform with 600+ pre-built connectors that eliminate the custom development overhead inherent in Luigi-based solutions.

The platform's cloud-native design enables organizations to focus on data value rather than infrastructure maintenance. Unlike Luigi's requirement for extensive custom coding and maintenance, Airbyte provides production-ready integration capabilities with built-in monitoring, error handling, and performance optimization.

Organizations can deploy Airbyte across cloud, hybrid, and on-premises environments while maintaining consistent functionality and management capabilities, addressing the deployment flexibility challenges that Luigi implementations often face.

AI-Powered Connector Builder

Airbyte's Connector Builder leverages AI to generate custom connectors in minutes rather than the weeks or months required for Luigi-based custom integrations. This capability transforms how organizations approach new data source integration, eliminating the development overhead that makes Luigi implementations expensive and time-consuming.

The AI-powered approach reduces technical debt by generating maintainable, standards-compliant connectors that follow best practices automatically. This contrasts with Luigi implementations where custom connector code often becomes difficult to maintain and update over time.

Organizations can respond to new integration requirements in days rather than quarters, enabling business agility that Luigi's development-intensive approach cannot match.

Enterprise Security and Governance

Airbyte provides enterprise-grade security and governance capabilities that Luigi lacks natively. End-to-end encryption, role-based access control, and comprehensive audit logging are built-in features rather than custom implementations required in Luigi deployments.

SOC 2, GDPR, and HIPAA compliance capabilities enable organizations to meet regulatory requirements without additional development overhead. Luigi deployments typically require significant custom security implementation to achieve similar compliance posture.

Data sovereignty and security requirements are addressed through flexible deployment options and comprehensive governance controls, enabling organizations to maintain compliance while scaling data integration operations.

Performance and Scalability Advantages

Airbyte processes over 2 petabytes of data daily across customer deployments, demonstrating production-scale performance that Luigi implementations struggle to achieve without significant infrastructure investment and optimization.

Automated scaling and resource optimization eliminate the manual capacity planning and performance tuning required in Luigi deployments. Organizations can focus on data utilization rather than infrastructure management.

Airbyte provides foundational features like data replication and backups, but high availability and disaster recovery require additional configuration or external solutions, similar to Luigi, which also needs custom implementations for production reliability.

Integration with Modern Data Platforms

Native integration with modern cloud data platforms like Snowflake, Databricks, and BigQuery enables organizations to leverage their existing infrastructure investments while adding comprehensive integration capabilities.

Unlike Luigi's requirement for custom integration development, Airbyte provides optimized connectors for modern data platforms with automatic schema management and change detection capabilities.

The platform's compatibility with orchestration tools like Airflow and Prefect enables organizations to maintain their preferred orchestration approach while upgrading their data integration capabilities beyond Luigi's limitations.

What Are the Key Use Cases for Luigi Python Data Pipelines?

Despite the emergence of more sophisticated orchestration platforms, Luigi maintains relevance for specific use cases where its simplicity and reliability provide advantages over more complex alternatives.

Machine-Learning Model Training Workflow

Luigi excels in scenarios requiring sequential batch processing for machine learning model training pipelines. The framework's idempotent task execution and dependency management provide reliability for long-running training processes.

Organizations with established machine learning workflows that prioritize simplicity over advanced scheduling capabilities find Luigi's straightforward approach advantageous. The ability to chain preprocessing, feature engineering, model training, and validation steps provides clear workflow structure.

However, teams requiring parallel hyperparameter tuning, dynamic resource allocation, or integration with modern MLOps platforms often find Luigi's limitations constraining compared to specialized machine learning orchestration tools.

Business Intelligence and Analytics

Luigi works well for traditional business intelligence workflows that involve periodic data extraction, transformation, and loading into analytical systems. The framework's batch-oriented design aligns with typical BI requirements for daily, weekly, or monthly reporting cycles.

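Organizations with established BI processes that require reliable execution without complex scheduling needs find Luigi's simplicity valuable. The target-based architecture means that a report whose output already exists is not regenerated on the next run. Luigi checks only whether the output exists, not whether the underlying data has changed, so date-parameterized outputs are the usual way to produce a fresh report for each period.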

Teams requiring real-time analytics, complex scheduling, or integration with modern cloud BI platforms often need more sophisticated orchestration capabilities than Luigi provides.

Ad-Performance Analytics

Luigi's origins at Spotify make it particularly well-suited for ad-performance analytics workflows that require processing large volumes of event data in batch mode. The framework's reliability and atomic operations provide consistency for revenue-critical analytics.

Organizations processing advertising data from multiple sources benefit from Luigi's dependency management and failure handling capabilities. The ability to reprocess failed tasks without affecting completed work provides operational efficiency.

However, teams requiring real-time bidding analytics, complex audience segmentation, or integration with modern advertising platforms often need more sophisticated data processing capabilities than Luigi offers.

Frequently Asked Questions

What Is Luigi Python and How Does It Work?

Luigi is an open-source Python framework developed by Spotify for building and orchestrating complex batch data processing workflows. It provides a target-based architecture where tasks are defined with specific outputs and dependencies, enabling reliable execution of data pipeline operations.

How Does Luigi Python Compare to Apache Airflow?

Luigi and Apache Airflow differ significantly in their architectural approaches and capabilities. While Luigi uses a target-based backward dependency resolution system optimized for batch processing, Airflow employs a DAG-based forward-flowing architecture that supports more complex scheduling and parallel execution patterns.

What Are the Main Limitations of Luigi for Modern Data Teams?

Luigi's main limitations include lack of sophisticated scheduling capabilities, limited cloud-native features, basic monitoring and observability tools, and architectural constraints that make complex parallel workflows challenging to implement effectively.

How Can You Install and Set Up Luigi Python?

Luigi can be installed using pip with the command pip install luigi. The framework requires Python and can be configured through command-line arguments, configuration files, or programmatic parameter setting for workflow customization and execution.

What Are the Best Alternatives to Luigi Python for Data Orchestration?

Modern alternatives to Luigi include Apache Airflow for comprehensive workflow orchestration, Prefect for cloud-native data workflows, Dagster for asset-oriented data pipelines, and specialized platforms like Airbyte for data integration that eliminate the need for custom orchestration development.

Conclusion

Luigi Python offers a foundation for building data orchestration pipelines, particularly for organizations with straightforward batch processing requirements. However, the framework's architectural limitations and lack of modern enterprise features create challenges for teams building sophisticated data infrastructure. While Luigi's simplicity provides advantages in specific use cases, organizations should carefully evaluate whether its capabilities align with their long-term data strategy and orchestration requirements.
