OpenAI Embeddings 101: A Perfect Guide For Data Engineers


OpenAI embeddings transform text into semantic vector representations that capture contextual meaning rather than just literal matches. Unlike traditional approaches that rely on exact keyword matching, embeddings enable machines to understand relationships between concepts, making unstructured data queryable and actionable at enterprise scale. This technology has become essential infrastructure for organizations building intelligent search systems, personalized recommendations, and automated content analysis pipelines.

For data engineering teams, embeddings represent a paradigm shift from rule-based data processing to semantic understanding. Whether you're building real-time anomaly detection systems, enhancing customer experience through intelligent search, or creating automated content classification pipelines, OpenAI embeddings provide the foundational technology to unlock value from your organization's unstructured data assets.

TL;DR: OpenAI Embeddings at a Glance

- OpenAI embeddings transform text into semantic vectors that understand meaning and context, not just keywords

- Third-generation models (text-embedding-3-small and text-embedding-3-large) offer configurable dimensions and improved cost efficiency

- Key use cases include semantic search, content classification, recommendation systems, and anomaly detection

- Airbyte automates embedding workflows with 600+ connectors, including destinations for vector databases like Pinecone, Weaviate, and Qdrant

- Strategic benefits include operational efficiency, revenue enhancement through personalization, and improved fraud detection

What Are Embeddings and Why Do They Matter for Modern Data Processing?

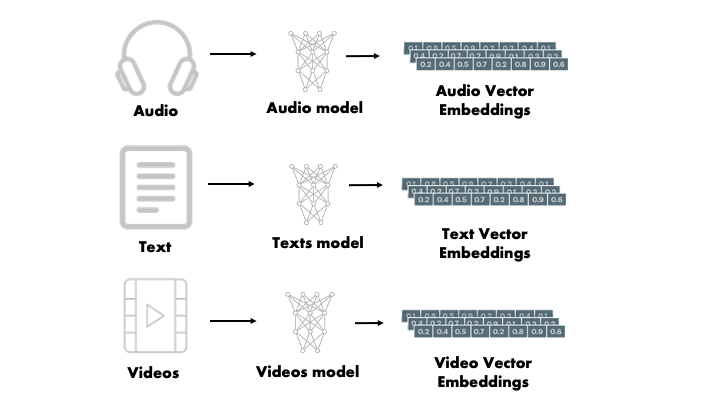

Embeddings are numerical representations of data that help machine-learning models understand and compare different items. These embeddings convert raw data—such as images, text, videos, and audio—into vectors in a high-dimensional space where similar items are placed close to each other. This process simplifies the task of processing complex data, making it easier for ML models to handle tasks like recommendation systems or text analysis.

The mathematical foundation of embeddings relies on the principle that semantic similarity can be captured through geometric proximity in vector space. When two concepts are conceptually related, their corresponding embedding vectors will have a smaller distance between them, typically measured using cosine similarity or Euclidean distance. This mathematical relationship enables automated reasoning about content relationships without explicit programming of domain-specific rules.
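As a minimal sketch of the two measures named above, the snippet below compares three hand-written toy vectors. The values and the labels (`cat`, `kitten`, `invoice`) are invented for illustration; real embeddings have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def euclidean_distance(a, b):
    """Straight-line distance between two points in vector space."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Toy 3-dimensional vectors, invented for illustration only
cat = [0.9, 0.8, 0.1]
kitten = [0.85, 0.75, 0.2]
invoice = [0.1, 0.2, 0.95]

# Related concepts: higher cosine similarity, smaller Euclidean distance
print(cosine_similarity(cat, kitten) > cosine_similarity(cat, invoice))    # True
print(euclidean_distance(cat, kitten) < euclidean_distance(cat, invoice))  # True
```

Cosine similarity ignores vector length and compares direction only, which is why it is the more common choice for text embeddings.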

How Do OpenAI Embedding Models Differ from Traditional Approaches?

OpenAI embeddings are numerical representations of text created by OpenAI's dedicated embedding models, such as text-embedding-3-small. They convert words, phrases, and longer passages into vectors, making it possible to calculate similarities or differences, which is useful for clustering, searching, and classification.

Key Differentiators

OpenAI embeddings stand out from other embedding solutions through several key characteristics:

- Trained on massive, diverse datasets covering multiple domains and languages

- Use transformer-based attention mechanisms to capture context-dependent meaning—so the same word is embedded differently based on surrounding context

- Score competitively on public semantic-understanding benchmarks

How Do OpenAI Embeddings Work Behind the Scenes?

Understanding how embeddings are produced shows how text is transformed into meaningful numerical data. The process has five steps:

1. Start With a Piece of Text

First, begin by selecting a piece of text, whether a phrase, sentence, or other fragment. This text will act as raw input for creating embeddings.

2. Break the Text Into Smaller Units

The text is then broken down into smaller units called tokens. Each token represents a word, character, or phrase, depending on the tokenization method. OpenAI uses byte-pair encoding (BPE) tokenization, which efficiently handles subword units and provides robust handling of out-of-vocabulary terms.
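To make the idea of subword merging concrete, here is a toy byte-pair-encoding sketch. It is a simplified, character-level illustration and not OpenAI's actual tokenizer, which works on bytes with a large pre-trained merge table; the tiny corpus and the function names are invented for this example.

```python
from collections import Counter

def apply_merge(symbols, pair):
    """Fuse every adjacent occurrence of `pair` in a tuple of symbols."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)

def learn_bpe_merges(words, num_merges):
    """Learn merge rules by repeatedly fusing the most frequent adjacent pair."""
    corpus = Counter(tuple(word) for word in words)  # each word starts as characters
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            new_corpus[apply_merge(symbols, best)] += freq
        corpus = new_corpus
    return merges

def tokenize(word, merges):
    """Split a word into subword tokens by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = apply_merge(symbols, pair)
    return list(symbols)

# Toy corpus, invented for illustration
words = ["low"] * 5 + ["lower"] * 2 + ["newest"] * 6 + ["widest"] * 3
merges = learn_bpe_merges(words, num_merges=4)

# "lowest" never appears in the corpus, yet it splits into known subwords
print(tokenize("lowest", merges))  # ['low', 'est']
```

Because unseen words decompose into known pieces, the tokenizer never needs a special "unknown word" token.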

3. Convert Each Token Into a Numeric Representation

Each token is converted into a numeric representation that can be processed by algorithms. These numeric values are initial embeddings that reflect the basic properties of the text.

4. Neural Network Processing

The numeric representation of each token is passed through a neural network, which captures deeper patterns and relationships between the tokens. This network employs transformer architecture with multi-head attention mechanisms that allow the model to focus on different aspects of the input simultaneously. The attention layers enable the model to weigh the importance of different tokens relative to each other, creating rich contextual understanding that goes far beyond simple word co-occurrence patterns.
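Steps 3 and 4 can be sketched with NumPy as an embedding-table lookup followed by one head of scaled dot-product self-attention. This is a simplified illustration: the weights are random stand-ins for learned parameters, the sizes are arbitrary, and positional information, multiple heads, and stacked layers are all omitted. It does not reproduce OpenAI's architecture.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over token vectors X."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # how strongly each token attends to every other
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
vocab_size, d_model = 10, 8                  # arbitrary toy sizes

# Step 3: look up an initial vector for each token ID
embedding_table = rng.normal(size=(vocab_size, d_model))
token_ids = np.array([3, 7, 1, 5])
X = embedding_table[token_ids]

# Step 4: mix information across tokens with attention
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
contextual, weights = self_attention(X, W_q, W_k, W_v)

print(contextual.shape)  # (4, 8)
print(weights.shape)     # (4, 4)
```

Each output row is a weighted blend of all token vectors, which is how a token's representation comes to depend on its neighbors.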

5. Vector Generation for the Input

After processing, the neural network generates a vector that contains the context and meaning of the input text. This vector (the embedding) can then be used in applications such as searching, clustering, and classification. The final embedding represents a compressed semantic fingerprint of the original text, encoding not just individual word meanings but the complex relationships and contextual nuances that make human language so expressive.
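One common way to collapse per-token vectors into a single embedding is mean pooling followed by L2 normalization. OpenAI has not published how its models perform this step, so the sketch below is an assumption chosen for illustration, and the token vectors are made-up values.

```python
import numpy as np

def pool_and_normalize(token_vectors):
    """Average per-token vectors into one vector and scale it to unit length."""
    pooled = token_vectors.mean(axis=0)
    return pooled / np.linalg.norm(pooled)

# Three toy token vectors (invented values), one row per token
token_vectors = np.array([
    [0.2, 0.1, 0.4],
    [0.6, 0.3, 0.0],
    [0.1, 0.5, 0.2],
])

embedding = pool_and_normalize(token_vectors)
print(embedding.shape)                              # (3,)
print(round(float(np.linalg.norm(embedding)), 6))   # 1.0
```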

Which OpenAI Embedding Models Should You Choose for Your Use Case?

Selecting the right embedding model depends on your specific use case, performance requirements, and budget constraints.

What Are the Key Use Cases for OpenAI Embeddings in Data Engineering?

Data engineers leverage OpenAI embeddings across multiple high-impact applications that directly address business challenges. These use cases represent areas where traditional keyword-based approaches fall short.

- Semantic Search & Retrieval – Understand intent beyond keywords

- Text Classification & Clustering – Group documents by topic or sentiment

- Recommendation Systems – Recommend semantically related products or content

- Anomaly Detection – Distinguish genuine anomalies from routine data variance

- Features for Downstream NLP – Supply embeddings as input features to downstream tasks such as classification or summarization
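As one hedged example of these use cases, the sketch below scores anomalies by measuring how far each embedding sits from the centroid of the batch. The vectors are invented three-dimensional stand-ins for real embeddings, and centroid distance is only one of several possible scoring approaches.

```python
import numpy as np

def anomaly_scores(embeddings):
    """Cosine distance of each row from the centroid of all rows."""
    unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    centroid = unit.mean(axis=0)
    centroid = centroid / np.linalg.norm(centroid)
    return 1.0 - unit @ centroid

# Toy vectors standing in for embeddings of log messages (invented values)
vectors = np.array([
    [0.90, 0.10, 0.00],
    [0.80, 0.20, 0.10],
    [0.85, 0.15, 0.05],
    [0.10, 0.10, 0.95],  # points in a different direction from the rest
])

scores = anomaly_scores(vectors)
print(int(np.argmax(scores)))  # 3
```

In practice you would flag rows whose score exceeds a threshold tuned on historical data rather than always taking the maximum.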

What Are the Strategic Business Advantages of Implementing OpenAI Embeddings?

- Operational Efficiency and Cost Reduction: Embeddings replace hand-maintained keyword rules and synonym lists with a single similarity computation. Teams spend less time maintaining matching logic and can reallocate technical resources to higher-value innovation projects.

- Revenue Enhancement Through Personalization: Embedding-driven recommendation engines can complement or outperform traditional collaborative filtering, particularly for new items with little interaction history, because they match on the meaning of content rather than on past interactions alone.

- Risk Mitigation and Fraud Prevention: Financial institutions implement embedding-based anomaly detection systems that identify sophisticated fraud patterns invisible to rules-based approaches. By analyzing transaction narratives and behavioral patterns through semantic vector analysis, these systems detect money laundering schemes and fraudulent activities.

- Competitive Intelligence and Market Analysis: Enterprises deploy embedding-powered systems to analyze competitor communications, market sentiment, and emerging trend patterns at scale. Semantic analysis of social media, news content, and industry publications enables identification of market opportunities and competitive threats that keyword-based monitoring overlooks.

- Organizational Knowledge Management: Large enterprises struggle with knowledge silos and information discovery across distributed teams and systems. Embedding-powered knowledge graphs enable semantic search across diverse content types, reducing time-to-insight for strategic decision making while preventing duplicate work across organizational boundaries.

How Do You Use OpenAI Embeddings in Practice?

1. Set Up the Python Environment

Make sure you have Python 3.9 or later installed; recent releases of the openai package no longer support Python 3.7. Create a virtual environment to keep dependencies organized:

python -m venv openai-env
source openai-env/bin/activate  # On Windows: openai-env\Scripts\activate

2. Install and Import Libraries

Install the required packages:

pip install openai numpy scikit-learn python-dotenv

Import the necessary libraries in your Python script:

import os

import numpy as np
import openai
from dotenv import load_dotenv
from sklearn.metrics.pairwise import cosine_similarity

# Load environment variables from a local .env file
load_dotenv()
openai.api_key = os.getenv("OPENAI_API_KEY")

3. Create a Function to Get Embeddings

Define a reusable function to generate embeddings from text:

def get_embedding(text, model="text-embedding-3-small"):
    """
    Generate an embedding for the given text using OpenAI's API.

    Args:
        text (str): Input text to embed
        model (str): OpenAI embedding model to use

    Returns:
        list: Embedding vector
    """
    # Replace newlines with spaces before sending the text
    text = text.replace("\n", " ")
    response = openai.embeddings.create(input=[text], model=model)
    return response.data[0].embedding

Example Dataset

Create a sample dataset of product descriptions to demonstrate semantic search:

# Sample product descriptions
products = [
    "Wireless noise-canceling headphones with 30-hour battery life",
    "Ergonomic office chair with lumbar support and adjustable armrests",
    "Stainless steel water bottle with vacuum insulation, keeps drinks cold for 24 hours",
    "Portable Bluetooth speaker with waterproof design and 360-degree sound",
    "Standing desk converter with dual-tier design for monitor and keyboard"
]

4. Generate Embeddings

Process the dataset to create embedding vectors:

# Generate embeddings for all products
product_embeddings = []
for product in products:
    embedding = get_embedding(product)
    product_embeddings.append(embedding)
    print(f"Generated embedding for: {product[:50]}...")

# Convert to a NumPy array for easier computation
product_embeddings = np.array(product_embeddings)
print(f"\nShape of embeddings: {product_embeddings.shape}")

5. Similarity Search

Implement semantic search to find products similar to a query:

# User search query
query = "headphones for music listening"

# Generate embedding for the query
query_embedding = get_embedding(query)
query_embedding = np.array(query_embedding).reshape(1, -1)

# Calculate cosine similarity between query and all products
similarities = cosine_similarity(query_embedding, product_embeddings)[0]

# Rank products by similarity, highest first
ranked_indices = np.argsort(similarities)[::-1]

print(f"\nSearch results for: '{query}'\n")
for idx in ranked_indices:
    print(f"{similarities[idx]:.4f} - {products[idx]}")

Example output (the scores shown are illustrative; exact values depend on the model):

Search results for: 'headphones for music listening'

0.8734 - Wireless noise-canceling headphones with 30-hour battery life
0.7521 - Portable Bluetooth speaker with waterproof design and 360-degree sound
0.6012 - Stainless steel water bottle with vacuum insulation, keeps drinks cold for 24 hours
0.5834 - Standing desk converter with dual-tier design for monitor and keyboard
0.5621 - Ergonomic office chair with lumbar support and adjustable armrests

You now have a complete system for semantic search using OpenAI embeddings. This implementation provides the foundation for more sophisticated applications like recommendation engines, content classification systems, and automated knowledge extraction pipelines.
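The loop in step 4 sends one API request per product. For larger datasets, a common refinement is to send texts in batches, since the embeddings endpoint accepts a list of inputs. The sketch below shows the batching logic only: `embed_all`, `batched`, and `fake_embed_batch` are hypothetical names, and the stand-in embedder exists so the example runs offline.

```python
def batched(items, batch_size):
    """Yield consecutive slices of `items`, each at most `batch_size` long."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]

def embed_all(texts, embed_batch, batch_size=100):
    """Embed texts in batches.

    embed_batch is any callable mapping a list of strings to a list of
    vectors in the same order. With the OpenAI client it could look like:
        lambda batch: [d.embedding for d in openai.embeddings.create(
            input=batch, model="text-embedding-3-small").data]
    """
    vectors = []
    for batch in batched(texts, batch_size):
        vectors.extend(embed_batch(batch))
    return vectors

# Stand-in embedder (not a real model) so the sketch runs without an API key
def fake_embed_batch(batch):
    return [[float(len(text)), 1.0] for text in batch]

vectors = embed_all(["a", "bb", "ccc"], fake_embed_batch, batch_size=2)
print(len(vectors))  # 3
```

Batching reduces request overhead, though the best batch size depends on your rate limits and input lengths.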

How Does Airbyte Enhance OpenAI Embedding Workflows?

When working with real-world datasets, volume quickly outgrows manual handling. Airbyte offers 600+ pre-built connectors that extract data from diverse sources and load it into destinations such as Pinecone, Weaviate, or Qdrant—perfect for vector storage and retrieval systems.

Airbyte provides specialized integrations for vector databases that handle the entire embedding workflow: extracting documents, chunking text, generating embeddings through integrated embedding providers such as OpenAI and Cohere, and loading vectorized data with metadata persistence. This end-to-end automation removes much of the custom code previously required to prepare source data for retrieval-augmented generation (RAG) systems and other AI applications.
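To illustrate the chunking step in that workflow, here is a minimal word-based splitter with overlap. It is a generic sketch, not Airbyte's implementation; the function name, the defaults, and the choice to count words rather than tokens are assumptions made for the example.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into chunks of `chunk_size` words that overlap by `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

# Ten placeholder words: w0 ... w9
doc = " ".join(f"w{i}" for i in range(10))
print(chunk_text(doc, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

The overlap keeps a sentence that straddles a boundary retrievable from at least one chunk.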

Vector Database Integration Capabilities

Airbyte provides purpose-built connectors for leading vector databases including Pinecone, Weaviate, Milvus, and Qdrant. These connectors support automatic vectorization workflows where unstructured data is processed through OpenAI embedding models during the integration process, eliminating separate embedding generation steps and reducing pipeline complexity.

The platform's governance controls include PII hashing for sensitive data and granular access policies that ensure compliance during model training and deployment. For enterprises implementing AI solutions, these capabilities position Airbyte as a foundational component in the generative AI stack.

PyAirbyte Integration Workflow

PyAirbyte provides a Python library that enables programmatic control of Airbyte connectors directly within your data pipelines. This allows data engineers to orchestrate embedding workflows using familiar Python tools:

import airbyte as ab

# Initialize the Airbyte source connector
source = ab.get_source(
    "source-postgres",
    config={
        "host": "your-database.example.com",
        "port": 5432,
        "database": "product_db",
        "username": "readonly_user"
    }
)

# Configure the vector database destination with embedding processing.
# Config keys are illustrative; check the connector's specification for the exact schema.
destination = ab.get_destination(
    "destination-pinecone",
    config={
        "pinecone_key": "your-api-key",
        "pinecone_environment": "us-west1-gcp",
        "embedding": {
            "mode": "openai",
            "openai_key": "your-openai-key",
            "model": "text-embedding-3-small"
        }
    }
)

# Validate the source connection and select every stream
source.check()
source.select_all_streams()

# Write the source data to the destination; embeddings are generated during the write
result = destination.write(source)

This programmatic approach enables integration of embedding workflows into existing data orchestration tools like Airflow, Prefect, or Dagster, while maintaining centralized configuration and monitoring through the Airbyte platform.

What Are the Alternatives to OpenAI Embeddings?

While OpenAI embeddings offer excellent performance and ease of use, several alternatives provide different strengths and capabilities.

Conclusion

OpenAI embeddings represent a fundamental shift in how data engineers tackle unstructured-text processing. By turning language into high-dimensional semantic vectors, they enable powerful applications—semantic search, intelligent recommendations, automated content analysis—that were previously impractical. The third-generation models add configurable dimensions, faster inference, and dramatically lower costs, making production-scale deployments viable for organizations of all sizes.

Frequently Asked Questions

How does ChatGPT create embeddings?

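ChatGPT itself is a chat interface rather than an embedding service. Embeddings come from OpenAI's dedicated embedding models, which are neural networks trained on large text corpora to represent words and phrases as high-dimensional vectors.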

How big are OpenAI embeddings?

text-embedding-3-small outputs 1,536-dimensional vectors by default, while text-embedding-3-large outputs 3,072 dimensions.
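The third-generation models also accept a `dimensions` request parameter for asking for shorter vectors directly. For vectors you have already stored at full size, OpenAI's documentation describes truncating and then re-normalizing. The sketch below shows that second approach on a toy four-dimensional vector; the helper name is hypothetical.

```python
import math

def shorten_embedding(vector, dimensions):
    """Keep the first `dimensions` values and rescale the result to unit length."""
    truncated = vector[:dimensions]
    norm = math.sqrt(sum(x * x for x in truncated))
    return [x / norm for x in truncated]

# Toy unit-length vector standing in for a full-size embedding
full = [0.5, 0.5, 0.5, 0.5]
short = shorten_embedding(full, 2)

print(len(short))                                  # 2
print(round(math.sqrt(sum(x * x for x in short)), 6))  # 1.0
```

Shorter vectors cut storage and search cost at the price of some accuracy, so it is worth measuring retrieval quality on your own data before committing to a size.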

Can I use OpenAI embeddings for free?

No. OpenAI embeddings are paid services with pricing based on the number of tokens processed.
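Because billing is per token, a rough budget is simple arithmetic. The helper below is a sketch with a hypothetical name; the price in the example is a placeholder, not OpenAI's actual rate, so substitute the current figure from the pricing page.

```python
def estimate_embedding_cost(token_counts, price_per_million_tokens):
    """Return (total tokens, estimated cost) for a list of per-document token counts."""
    total_tokens = sum(token_counts)
    cost = total_tokens / 1_000_000 * price_per_million_tokens
    return total_tokens, cost

# Placeholder price for illustration only; look up the real rate before budgeting
tokens, cost = estimate_embedding_cost([120, 80, 300], price_per_million_tokens=0.10)
print(tokens)  # 500
```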

What model does OpenAI use for embedding?

The current recommended models are text-embedding-3-small and text-embedding-3-large.

Are OpenAI embeddings better than BERT?

OpenAI embeddings excel at capturing semantic relationships and contextual meaning at the sentence and document level without any fine-tuning, whereas a fine-tuned BERT model may outperform them on tasks requiring detailed token-level linguistic analysis.

Are OpenAI embeddings normalized?

Yes—OpenAI embeddings are normalized to unit length, making cosine similarity equivalent to the dot product for distance calculations.
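The equivalence is easy to check numerically. The sketch below normalizes two arbitrary toy vectors and confirms that their dot product equals their cosine similarity, and that squared Euclidean distance is then just 2 minus twice the dot product.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def cosine(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

# Arbitrary toy vectors, normalized the way OpenAI embeddings already are
a = normalize([3.0, 4.0, 0.0])
b = normalize([1.0, 2.0, 2.0])

squared_distance = sum((x - y) ** 2 for x, y in zip(a, b))

print(math.isclose(dot(a, b), cosine(a, b)))               # True
print(math.isclose(squared_distance, 2 - 2 * dot(a, b)))   # True
```

For unit vectors, ranking by dot product, cosine similarity, or Euclidean distance therefore produces the same ordering, so you can pick whichever your vector database computes fastest.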
