Power BI Deployment Pipeline: Step-by-Step Guide

✨ AI Generated Summary

Power BI deployment pipelines provide structured environments (Development, Test, Production) to automate and streamline the promotion of BI content, ensuring data quality, governance, and faster deployment cycles. Key benefits include automated content promotion, dynamic data source management, enhanced security, improved collaboration, and integration with Git for version control and continuous deployment. Despite licensing and workspace limitations, these pipelines transform traditional BI deployment by reducing errors, supporting complex data models, and enabling scalable, enterprise-grade analytics operations.

Power BI is a popular business-intelligence tool that helps you create interactive visual reports and dashboards to gain meaningful data insights. However, as a business grows, so do the volume of data and the number of reports, which makes Power BI assets harder to manage. This is where Power BI deployment pipelines become essential. These pipelines provide a structured framework for developing, testing, and publishing business reports to end users while ensuring data quality and governance compliance.

Modern organizations face significant challenges when managing Power BI content across multiple environments. Without proper deployment strategies, teams often struggle with version-control issues, inconsistent data sources, and lengthy deployment cycles that can take months rather than weeks. Power BI Premium deployment pipelines address these challenges by providing enterprise-grade capabilities that streamline content-lifecycle management and enable scalable business-intelligence operations.

Use this guide to create a Power BI deployment pipeline, design meaningful analytics reports, and enhance decision-making and revenue generation in your organization through proven methodologies and best practices.

What Are Power BI Deployment Pipelines and Why Do They Matter?

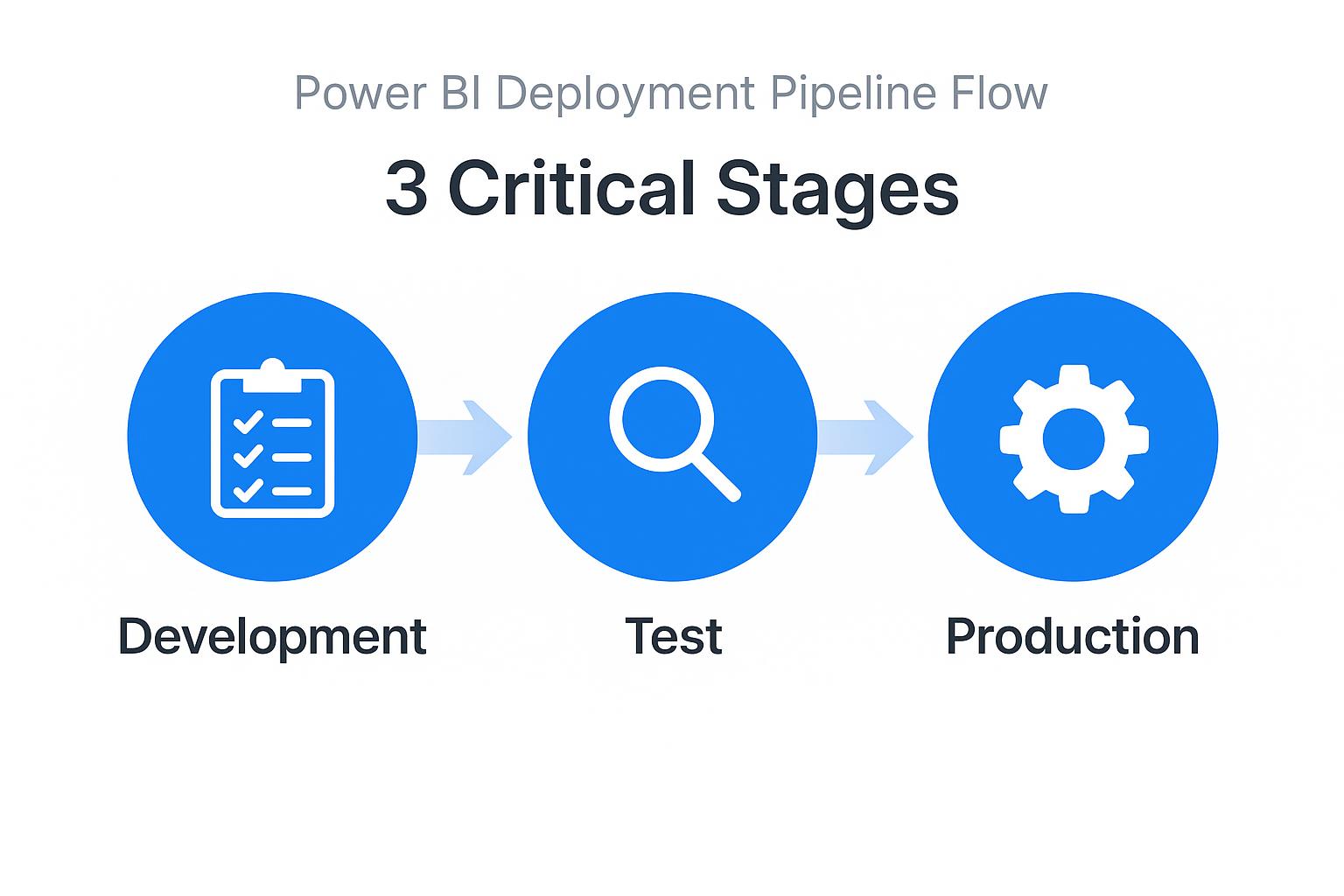

The Power BI deployment pipeline is a feature of the Power BI service (available with a Premium or Premium Per User license) that helps you develop, test, and release content systematically. Power BI content includes reports, dashboards, and datasets. A pipeline moves that content through three stages:

- Development: Design, review, and revise the report content in the development workspace while experimenting with new features and data models.

- Test: Check the functionality of the content in the pre-production workspace using production-like data to validate performance and accuracy.

- Production: Publish the tested content to end users through the Power BI workspace app or through direct access to the workspace.

Without separate environments, you have to use the same workspace for development, testing, and production. Any change in the workspace can create discrepancies in the testing process and affect production, resulting in a loss of trust and potential business disruption.

What Are the Key Benefits of Implementing Power BI Deployment Pipelines?

Power BI deployment pipelines offer numerous advantages that transform how organizations manage their business intelligence content:

- Automated Content Promotion: Deployment pipelines automate the transfer of content between different workspaces, eliminating manual errors and reducing deployment time from hours to minutes. This automation includes reports, datasets, dashboards, and dataflows, ensuring consistent promotion of all related artifacts.

- Dynamic Data Source Management: Once deployment rules are configured, the pipeline switches data sources when content moves from the Test stage to the Production stage, so you no longer replace test data sources by hand. This feature supports complex scenarios including multiple data sources, parameterized connections, and environment-specific configurations.

- Enhanced Governance and Security: Deployment pipelines provide comprehensive audit trails, role-based access controls, and validation checkpoints that ensure only authorized changes reach production environments. This governance framework supports regulatory compliance requirements and reduces security risks.

- Improved Collaboration: Teams can work simultaneously across different environments without conflicts, enabling parallel development workflows and reducing bottlenecks in content creation and review processes.

- Quality Assurance Integration: The dedicated Test stage acts as a checkpoint where you can catch data quality issues, performance problems, and dependency conflicts before content reaches production, significantly reducing the risk of business disruption.

- Cost Optimization: By providing clear separation between development and production environments, organizations can optimize capacity allocation and reduce unnecessary resource consumption during development and testing phases.

How Can Git Integration Transform Your Power BI Development Workflow?

Integrating Git version control with Power BI development brings established software development practices to BI content management. Teams gain better collaboration, traceability, and deployment reliability.

- Workspace-Git Synchronization: Microsoft Fabric now provides bidirectional synchronization between Power BI workspaces and Git repositories, allowing developers to version control reports, datasets, and dataflows. This integration supports popular platforms like Azure DevOps and GitHub, enabling teams to leverage existing development infrastructure.

- Advanced Branching Strategies: Teams can implement sophisticated branching patterns that isolate feature development, enable parallel work streams, and provide structured release management. Feature branches allow individual developers to work on specific enhancements without affecting main development, while release branches provide stabilization periods for testing and validation.

- Automated Testing and Validation: Git-integrated workflows support continuous integration practices through automated testing pipelines. These pipelines can validate DAX formulas, check data model integrity, and verify report functionality using tools like Tabular Editor's Best Practice Analyzer. Automated validation catches issues like inefficient calculations, improper data types, and performance bottlenecks before they reach production.

- Pull Request Workflows: Code review becomes integral to BI development through pull request workflows that require peer approval before changes merge into main branches. This collaborative approach improves code quality, encourages knowledge sharing, and reduces the risk of introducing errors into production environments.

- Release Automation: Azure DevOps and GitHub Actions can trigger automated deployments based on Git events, creating seamless workflows from development commit to production deployment. These automated pipelines can include environment-specific configurations, data source parameter updates, and validation checkpoints that ensure reliable content promotion.
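
The release-automation idea above could be sketched as a small function that a CI job calls to decide which promotion a Git event should trigger. The ref conventions (`main`, `release/` tags) and the function name are assumptions for illustration only. The body fields follow the Power BI REST API's Deploy All operation as I understand it, so check them against the current API reference before relying on them.

```python
from typing import Optional

# Stage order used by the Power BI REST API: 0 = Development, 1 = Test.
DEV_STAGE, TEST_STAGE = 0, 1


def deploy_body_for_ref(git_ref: str) -> Optional[dict]:
    """Map a Git ref to a Deploy All request body, or None if nothing should deploy.

    Hypothetical convention: pushes to main promote Development -> Test,
    tags under release/ promote Test -> Production.
    """
    if git_ref == "refs/heads/main":
        source = DEV_STAGE
    elif git_ref.startswith("refs/tags/release/"):
        source = TEST_STAGE
    else:
        return None  # feature branches never trigger a deployment

    return {
        "sourceStageOrder": source,
        "options": {
            "allowCreateArtifact": True,     # create items missing in the target stage
            "allowOverwriteArtifact": True,  # overwrite items that already exist there
        },
        "note": f"Automated deployment triggered by {git_ref}",
    }


print(deploy_body_for_ref("refs/heads/main"))
print(deploy_body_for_ref("refs/heads/feature/new-kpi"))
```

A CI job would send the returned body as JSON in a POST to `/pipelines/{pipelineId}/deployAll`, authenticated as a service principal that has access to the pipeline.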

What Are the Essential Steps to Create Power BI Deployment Pipelines?

Step 1: Create a Deployment Pipeline

- Log in to your Power BI Premium account and click Deployment Pipelines in the left navigation bar.

- Click Create a Pipeline, then enter a Pipeline Name and Description.

When naming your pipeline, consider organizational conventions that indicate the business domain, data sources, or team ownership to facilitate management and governance.
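
If you prefer to script this step, the Power BI REST API exposes a Create Pipeline operation. The sketch below only builds the request and does not send it. The pipeline name, description, and token placeholder are hypothetical, and acquiring the access token is assumed to happen elsewhere.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_create_pipeline_request(token: str, name: str, description: str) -> urllib.request.Request:
    """Build (but do not send) the request that creates a deployment pipeline."""
    body = json.dumps({"displayName": name, "description": description}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_ROOT}/pipelines",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


request = build_create_pipeline_request(
    token="<access-token>",    # placeholder, obtained from your identity provider
    name="sales-analytics",    # hypothetical name encoding the business domain
    description="Sales reports owned by the finance BI team",
)
print(request.get_method(), request.full_url)
# Sending is left to the caller, for example: urllib.request.urlopen(request)
```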

Step 2: Assign Your Workspace

- Select the desired workspace from the drop-down menu.

- Choose Development, Test, or Production as the deployment stage for that workspace.

- Click Assign.
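
The same assignment can be planned in code. This sketch assumes the REST API's Assign Workspace operation, where stages are addressed by order (0, 1, 2). It returns the URL and JSON body for each call rather than sending anything. The IDs are placeholders and `plan_assignments` is a hypothetical helper name.

```python
API_ROOT = "https://api.powerbi.com/v1.0/myorg"

# Stage order values used by the REST API.
STAGE_ORDER = {"Development": 0, "Test": 1, "Production": 2}


def plan_assignments(pipeline_id: str, workspaces: dict) -> list:
    """Return (url, json_body) pairs, one per stage, in pipeline order."""
    unknown = set(workspaces) - set(STAGE_ORDER)
    if unknown:
        raise ValueError(f"Unknown stage(s): {sorted(unknown)}")
    if len(set(workspaces.values())) != len(workspaces):
        # The same workspace cannot back two stages.
        raise ValueError("Each stage needs its own workspace")

    calls = []
    for stage in sorted(workspaces, key=STAGE_ORDER.get):
        url = f"{API_ROOT}/pipelines/{pipeline_id}/stages/{STAGE_ORDER[stage]}/assignWorkspace"
        calls.append((url, {"workspaceId": workspaces[stage]}))
    return calls


calls = plan_assignments(
    "<pipeline-id>",
    {"Test": "<test-workspace-id>", "Development": "<dev-workspace-id>"},
)
for url, body in calls:
    print(url, body)
```

Each pair would be sent as a POST with a bearer token. Validating the plan first surfaces mistakes such as reusing one workspace for two stages before any call is made.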

Step 3: Configure Deployment Rules

Modern deployment pipelines support sophisticated rule-based configurations that handle environment-specific requirements automatically:

- Data-Source Rules enable automatic connection-string updates when content moves between environments. Configure these rules to handle different database servers, connection credentials, and environment-specific configurations.

- Parameter Rules allow you to set environment-specific values for report parameters and API endpoints. This ensures that each environment connects to appropriate data sources without manual intervention.
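
Deployment rules are configured in the pipeline itself, so the following is only a conceptual model of what a data-source rule and a parameter rule do when content lands in a stage. Every name in it (`StageRules`, `apply_rules`, the server names, the `ApiBaseUrl` parameter) is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class StageRules:
    """Overrides applied to an item when it is deployed into a stage."""
    data_sources: dict = field(default_factory=dict)  # source server -> target server
    parameters: dict = field(default_factory=dict)    # parameter name -> stage value


def apply_rules(item: dict, rules: StageRules) -> dict:
    """Return a copy of the item's settings with the target stage's rules applied."""
    server = item["server"]
    return {
        **item,
        # Data-source rule: swap the server if a mapping exists, else keep it.
        "server": rules.data_sources.get(server, server),
        # Parameter rule: stage values win, untouched parameters carry over.
        "parameters": {**item["parameters"], **rules.parameters},
    }


dev_model = {
    "name": "Sales",
    "server": "sql-dev.example.com",
    "parameters": {"ApiBaseUrl": "https://api-dev.example.com", "RowLimit": "1000"},
}
test_rules = StageRules(
    data_sources={"sql-dev.example.com": "sql-test.example.com"},
    parameters={"ApiBaseUrl": "https://api-test.example.com"},
)

test_model = apply_rules(dev_model, test_rules)
print(test_model["server"])
print(test_model["parameters"])
```

The point of the model is that rules belong to the target stage, not to the content: the same item deployed to a different stage picks up that stage's values without being edited.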

Step 4: Develop and Test Your Content

In the Development stage, create your Power BI report by collecting data from various sources. To integrate data from multiple sources effectively, you can use a data integration platform like Airbyte with its 600+ pre-built connectors for comprehensive data connectivity.

After integrating data through Airbyte, cleanse and transform it using Power BI's data preparation capabilities, then connect the standardized data to create comprehensive reports that drive business value. When the report is ready, promote it to the Test stage:

- Click Deploy to Test.

- Review the pop-up that lists the content to be copied to the Test stage, then click Deploy.

During the test phase, validate report functionality with production-like data volumes and user access patterns to ensure performance meets business requirements.

After successful testing, transition the report to Production:

- In the Test stage, open Show More.

- Select the validated report(s) and related dataset(s).

- Click Deploy to Production → Deploy.
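
Deploying only the validated items, as in the steps above, corresponds to the REST API's Selective Deploy operation. The sketch below builds the request body for it. The helper name and the IDs are placeholders, and the field names reflect my reading of the API reference, so verify them before use.

```python
def selective_deploy_body(source_stage_order: int, dataset_ids: list, report_ids: list, note: str = "") -> dict:
    """Build the JSON body for deploying specific datasets and reports to the next stage."""
    if source_stage_order not in (0, 1):
        # Only Development (0) and Test (1) have a next stage to deploy to.
        raise ValueError("source_stage_order must be 0 (Development) or 1 (Test)")

    body = {
        "sourceStageOrder": source_stage_order,
        "datasets": [{"sourceId": dataset_id} for dataset_id in dataset_ids],
        "reports": [{"sourceId": report_id} for report_id in report_ids],
        "options": {
            "allowCreateArtifact": True,
            "allowOverwriteArtifact": True,
        },
    }
    if note:
        body["note"] = note
    return body


body = selective_deploy_body(
    source_stage_order=1,                 # Test -> Production
    dataset_ids=["<dataset-id>"],         # placeholder IDs from the Test workspace
    report_ids=["<report-id>"],
    note="Promote validated sales report",
)
print(body)
```

The body would be posted to `/pipelines/{pipelineId}/deploy`. Including a report's dataset in the same request keeps the two in step, which mirrors selecting both in the UI.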

What Are the Key Components of a DataOps Framework for Power BI?

DataOps methodology brings DevOps principles to data analytics, emphasizing automation, monitoring, and continuous improvement in data workflows. When applied to Power BI deployments, DataOps creates sustainable, scalable analytics operations that align with enterprise quality standards.

- Automated Quality Assurance: DataOps frameworks implement comprehensive testing strategies that validate data quality, report performance, and business logic accuracy. These automated checks include semantic model health monitoring, DAX optimization analysis, and user experience validation that ensures reports meet performance benchmarks before reaching end users.

- Continuous Monitoring and Observability: Production Power BI environments require real-time monitoring of dataset refresh success, query performance, and user adoption metrics. DataOps frameworks integrate with Azure Monitor and Power BI Admin APIs to provide comprehensive visibility into system health, user behavior, and business impact measurements.

- Governance-First Architecture: The Power BI Adoption Framework emphasizes business-centric design that aligns technical implementation with organizational goals. This approach includes role-based access controls, certified dataset management, and cross-departmental collaboration frameworks that ensure data integrity while enabling self-service analytics capabilities.

- Integration Orchestration: DataOps frameworks coordinate Power BI deployments with broader data platform operations, including Azure Synapse Analytics, Databricks, and Azure Data Factory workflows. This orchestration ensures that Power BI content reflects the most current data transformations and business logic while maintaining performance optimization across the entire data stack.

- Center of Excellence Support: Successful DataOps implementation requires organizational structures that provide governance, training, and technical support. Centers of Excellence establish best practices, manage certification processes, and enable knowledge sharing across teams while maintaining centralized oversight of enterprise data standards.
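
As one small example of the monitoring described above, the following sketch summarizes a dataset's refresh history. It assumes the response shape of the REST API's refresh-history endpoint (a `value` list whose entries carry a `status` field). The sample data and the function name are invented for illustration.

```python
from collections import Counter


def summarize_refreshes(history: dict) -> dict:
    """Count refresh outcomes and compute the share of failed refreshes."""
    statuses = Counter(entry.get("status", "Unknown") for entry in history.get("value", []))
    total = sum(statuses.values())
    failed = statuses["Failed"]
    return {
        "total": total,
        "failed": failed,
        "failure_rate": failed / total if total else 0.0,
        "by_status": dict(statuses),
    }


# Invented sample shaped like a refresh-history response.
sample_history = {
    "value": [
        {"status": "Completed", "startTime": "2024-05-01T02:00:00Z"},
        {"status": "Completed", "startTime": "2024-05-02T02:00:00Z"},
        {"status": "Failed", "startTime": "2024-05-03T02:00:00Z"},
        {"status": "Completed", "startTime": "2024-05-04T02:00:00Z"},
    ]
}

summary = summarize_refreshes(sample_history)
print(summary)
```

A scheduled job could run this per dataset in the Production workspace and raise an alert when `failure_rate` crosses a threshold your team agrees on.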

What Are the Current Limitations and How Can You Address Them?

- Licensing Requirements: Power BI deployment pipelines are available only with Premium or Premium Per User licenses, which can create cost barriers for smaller organizations. To address this limitation, organizations can start with Premium Per User licenses for development teams and gradually expand to Premium capacity as usage grows.

- Workspace Administration: You must be the workspace admin before assigning it to a pipeline, which can create bottlenecks in large organizations with distributed ownership. Implement clear governance processes that define workspace ownership and establish pipeline creation procedures that align with organizational hierarchy.

- Single Pipeline Restriction: A workspace can belong to only one pipeline, limiting flexibility for complex deployment scenarios. Design workspace architecture that considers future deployment needs and establish naming conventions that facilitate pipeline organization and management.

- Content Type Limitations: Streaming dataflows, push datasets, and Excel workbooks are not supported in deployment pipelines. Plan alternative deployment strategies for these content types, such as manual promotion processes or specialized automation tools that complement pipeline capabilities.

- Cross-Workspace Dependencies: Complex reports that span multiple workspaces may face deployment challenges when dependencies exist across pipeline boundaries. Design data architecture that minimizes cross-workspace dependencies and establish clear ownership models for shared datasets and reports.

- Performance Optimization: Large datasets and complex reports may experience performance degradation during deployment processes. Implement incremental refresh strategies, optimize DAX calculations, and design data models that support efficient deployment and refresh operations.
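
To plan around the content-type limitation, you could run a pre-flight check over an inventory of workspace items before deploying. This is a minimal sketch: the type labels and the inventory format are hypothetical and would need to match however you catalog your own content.

```python
# Hypothetical labels for the unsupported content types listed above.
UNSUPPORTED_TYPES = {"StreamingDataflow", "PushDataset", "ExcelWorkbook"}


def split_inventory(items: list) -> tuple:
    """Split item names into (pipeline-deployable, needs-manual-promotion)."""
    deployable, manual = [], []
    for item in items:
        target = manual if item["type"] in UNSUPPORTED_TYPES else deployable
        target.append(item["name"])
    return deployable, manual


inventory = [
    {"name": "Sales Overview", "type": "Report"},
    {"name": "Sales Model", "type": "Dataset"},
    {"name": "Live Orders", "type": "PushDataset"},
    {"name": "Budget 2024", "type": "ExcelWorkbook"},
]

deployable, manual = split_inventory(inventory)
print("Pipeline:", deployable)
print("Manual:", manual)
```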

How Can You Troubleshoot Common Deployment Pipeline Issues?

- Deployment Failures: When content fails to deploy between stages, check data source connectivity, workspace permissions, and content dependencies. Review deployment logs for specific error messages and validate that all required datasets and reports are properly configured for the target environment.

- Data Source Configuration: Ensure that deployment rules correctly map data sources between environments and that connection credentials are properly configured for each stage. Test data source connectivity independently before attempting content deployment.

- Performance Issues: Large datasets or complex reports may cause deployment timeouts or performance problems. Optimize data models by implementing incremental refresh, reducing unnecessary columns, and simplifying DAX calculations that impact deployment speed.

- Permission Problems: Deployment failures often result from insufficient permissions in target workspaces or pipeline configuration. Verify that users have appropriate roles in all workspace stages and that service principals have necessary permissions for automated deployments.

- Dependency Conflicts: When reports depend on shared datasets or cross-workspace resources, deployment may fail due to missing dependencies. Map all content relationships before deployment and ensure that dependent resources are available in target environments.

- Version Control Issues: In Git-integrated workflows, merge conflicts or branch synchronization problems can disrupt deployment processes. Establish clear branching strategies and resolve conflicts through proper code review processes before attempting deployments.
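
When deployments are triggered through the REST API, a failure shows up as the status of the deployment operation. The sketch below polls for a terminal status, assuming the values `NotStarted`, `Executing`, `Succeeded`, and `Failed`. The `fetch_status` callable stands in for a real call to the pipeline operations endpoint and is simulated here so the example runs offline.

```python
import time
from typing import Callable

TERMINAL_STATUSES = {"Succeeded", "Failed"}


def wait_for_operation(
    fetch_status: Callable[[], str],
    poll_seconds: float = 5.0,
    max_polls: int = 60,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the deployment operation succeeds or fails, then return its status."""
    for attempt in range(max_polls):
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
        if attempt < max_polls - 1:
            sleep(poll_seconds)  # wait before asking again
    raise TimeoutError(f"Operation still running after {max_polls} polls")


# Simulated sequence of statuses in place of real API responses.
simulated = iter(["NotStarted", "Executing", "Succeeded"])
result = wait_for_operation(lambda: next(simulated), sleep=lambda seconds: None)
print(result)
```

On a `Failed` result, the operation details returned by the API are the place to look for the specific error before retrying.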

How Does This Approach Compare to Traditional BI Deployment Methods?

Traditional BI deployment often relies on manual processes and ad-hoc testing approaches that increase risk and slow delivery cycles. Manual deployments frequently result in configuration errors, inconsistent environments, and lengthy validation processes that delay business value delivery.

Power BI deployment pipelines provide automated promotion workflows, systematic testing, and comprehensive governance that reduce risk while increasing speed and reliability. These capabilities transform BI operations from reactive maintenance to proactive platform management.

Conclusion

Power BI deployment pipelines streamline your workflow by providing dedicated Development, Test, and Production environments that support enterprise-grade business-intelligence operations. By automating content promotion, ensuring data accuracy, and integrating modern development practices, you can scale BI capabilities while maintaining the highest standards of data quality and governance. These pipelines transform traditional BI operations into systematic, reliable processes that accelerate business value delivery. The integration of Git workflows and DataOps principles further enhances collaboration and continuous improvement across your organization.

Frequently Asked Questions

What is the minimum license requirement for Power BI deployment pipelines?

Power BI deployment pipelines require either workspaces on a Premium capacity or a Premium Per User (PPU) license for every user who works with the pipeline.

Can deployment pipelines handle complex data models with multiple relationships?

Yes. Deployment pipelines support composite models, many-to-many relationships, and incremental refresh configurations.

How do deployment rules work with different data sources across environments?

Deployment rules automatically update connection strings, server names, and database references when promoting content between pipeline stages.

What happens if a deployment fails partway through the process?

After a failed deployment, review logs, resolve the issue, and redeploy. Rollback of incomplete changes must be performed manually if needed.

Can multiple teams use the same deployment pipeline simultaneously?

Multiple teams can contribute, but only one deployment operation can run at a time per pipeline. Larger organizations typically maintain separate pipelines for different domains or teams.