Building a RAG Architecture with Generative AI

✨ AI Generated Summary

The Retrieval-Augmented Generation (RAG) architecture enhances large language models by integrating real-time retrieval of relevant external data, improving response accuracy, reducing hallucinations, and increasing user trust through transparent source attribution. Key benefits include:

- Dynamic, knowledge-grounded responses using up-to-date and domain-specific information.

- Cost-effective enhancement without retraining large models.

- Applications in customer support, healthcare, legal research, and document summarization.

Successful RAG implementation requires robust data pipelines, vector databases, prompt engineering, and performance optimization strategies to ensure scalability, low latency, and compliance with data governance standards.

The convergence of large language models with real-time information retrieval has created unprecedented opportunities for businesses to build intelligent applications that can access and synthesize vast amounts of contextual data. Retrieval-Augmented Generation (RAG) architecture represents a fundamental shift from static AI responses to dynamic, knowledge-grounded interactions that can handle complex queries while maintaining factual accuracy.

Organizations implementing RAG systems report significant improvements in response accuracy, reduced hallucination rates, and enhanced user trust through transparent source attribution. The growing recognition of RAG as an essential component of modern AI infrastructure reflects the urgent need for more reliable and contextual AI interactions.

Successful RAG implementation requires careful attention to architecture design, data quality, performance optimization, and production-ready deployment strategies that can scale with enterprise requirements while maintaining consistent quality and reliability standards.

What Is Retrieval-Augmented Generation and Why Does It Matter?

Retrieval-augmented generation is a sophisticated technique that enhances large language models by combining them with external knowledge bases. This approach addresses the fundamental limitation that LLMs can only access information present in their training data.

Without RAG, language models generate responses based solely on pre-trained datasets. This constraint can lead to outdated, incomplete, or inaccurate information that becomes increasingly problematic as time passes from the training cutoff date.

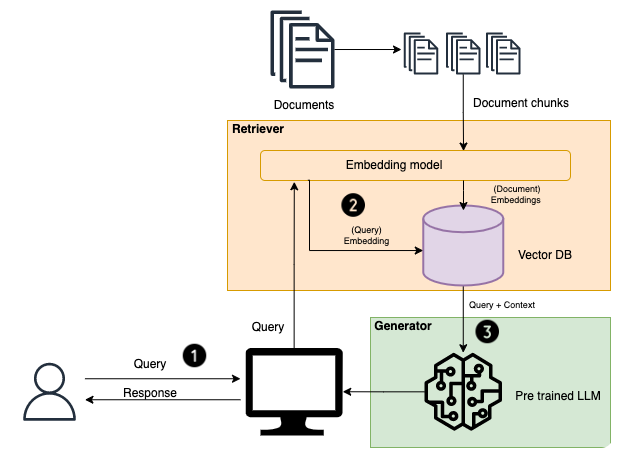

RAG introduces an information-retrieval component that utilizes user input to first extract relevant data from additional sources before generation begins. The system processes the user query, retrieves contextually relevant information from external knowledge bases, and then passes both the original query and the retrieved context to the language model.

This approach enables the model to generate responses that are grounded in current, factual information rather than relying solely on potentially outdated training data. The architecture fundamentally transforms how AI systems interact with information by creating a dynamic bridge between static model knowledge and evolving real-world data.

This capability becomes particularly valuable for applications requiring current information, domain-specific knowledge, or access to proprietary organizational data that was not included in the original model training process.

What Are the Key Benefits of Implementing RAG Architecture?

Enhanced Accuracy and Factual Grounding

RAG significantly improves response accuracy by grounding generated outputs in factual data retrieved from authoritative external knowledge sources. This approach dramatically reduces the risk of generating outdated, incorrect, or fabricated information.

The system provides verifiable sources for generated content, allowing users to trace information back to its origin. This transparency builds confidence in AI-generated responses and enables quality assurance processes.

Cost-Effective Knowledge Integration

Implementing RAG provides a more cost-effective approach than retraining large language models for specific tasks or domains. RAG allows organizations to leverage existing models while enhancing their performance through external data integration.

Organizations avoid the substantial computational costs and time investments required for custom model training. This efficiency makes advanced AI capabilities accessible to businesses with limited machine learning resources.

Dynamic Contextual Relevance

Through semantic search, RAG retrieves the information most relevant to each specific query. As a result, responses are tailored to the user's actual question rather than falling back on generic answers.

The system adapts to different contexts and domains without requiring separate models. This flexibility enables a single RAG implementation to serve multiple business functions across various departments.

Increased User Trust and Transparency

RAG systems can include citations, references, and source attributions in their responses. This transparency provides users with clear visibility into the information sources used for answer generation.

Users can verify information independently and build confidence in the system's reliability. This trust becomes particularly important in critical applications like healthcare, legal research, or financial analysis.

How Does RAG Architecture Function in Practice?

1. Data Collection and Preparation

External data collection forms the foundation of effective RAG systems. This process encompasses information that extends beyond the original training dataset of the language model.

Organizations typically gather data from documentation repositories, knowledge bases, research papers, and proprietary databases. The quality and relevance of this source data directly impacts the effectiveness of the entire RAG pipeline.

2. Tokenization and Intelligent Chunking

Collected data undergoes tokenization, breaking text into smaller units called tokens. This process standardizes the data format for consistent processing across different content types.

Chunking then organizes these tokens into coherent groups optimized for semantic meaning and efficient processing. The chunking strategy balances context preservation with computational efficiency to ensure optimal retrieval performance.
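
As a rough illustration of the trade-off between context preservation and efficiency, the sketch below splits text into fixed-size windows that overlap, so a sentence cut at one boundary still appears whole in a neighboring chunk. It is a minimal sketch that counts whitespace-separated words rather than model tokens; the function name `chunk_words` and the default sizes are assumptions made for this example, not values from any particular library.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows (a stand-in for token-based chunking)."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(12))
chunks = chunk_words(sample, chunk_size=5, overlap=2)
# Each chunk repeats the last 2 words of the previous one.
```

Larger overlaps preserve more context across boundaries but increase the number of chunks to embed and store.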

3. Vector Embedding Generation

Each chunk of processed data is converted into high-dimensional vector embeddings using specialized models. These embeddings capture semantic meaning in numerical format that enables mathematical similarity comparisons.

Common embedding models include those from OpenAI, Cohere, and open-source alternatives. The choice of embedding model significantly influences retrieval quality and system performance.
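
To make the idea of "semantic meaning in numerical format" concrete, here is a deliberately simplified stand-in for an embedding model. It hashes each word into one of a fixed number of buckets and normalizes the result, which captures word overlap only, not real semantics. The names `toy_embed` and `cosine` are hypothetical helpers for this sketch; a production system would call a learned embedding model instead.

```python
import hashlib
import math


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Hash each word into a bucket and L2-normalize (NOT a real embedding model)."""
    vec = [0.0] * dim
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).hexdigest()
        vec[int(digest, 16) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity for vectors that are already unit length."""
    return sum(x * y for x, y in zip(a, b))


doc_vec = toy_embed("reset password")
query_vec = toy_embed("Reset Password")
similarity = cosine(doc_vec, query_vec)  # same words, so the vectors coincide
```

Whatever model is used, documents and queries need to go through the same one so that their vectors live in the same space.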

4. Vector Database Storage and Indexing

Generated embeddings are stored in vector databases optimized for high-dimensional similarity search. Popular options include Pinecone, Milvus, Weaviate, and Chroma.

These databases create indexed structures that enable rapid similarity searches across millions of vectors. Proper indexing configuration balances search speed with memory efficiency.

5. Query Processing and Embedding

When users submit queries, the system converts their natural-language input into vector representations. This conversion uses the same embedding model employed for document processing to ensure compatibility.

The query embedding process must handle various input formats and lengths while maintaining semantic consistency. Proper query processing directly impacts retrieval accuracy.

6. Semantic Similarity Search and Retrieval

The query embeddings are compared against stored document embeddings through similarity search algorithms. These algorithms identify the most semantically relevant information based on vector distance calculations.
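
The vector distance calculation can be sketched as a brute-force cosine similarity search over an in-memory index. This is only an illustration: the document IDs and three-dimensional vectors below are made up, and real vector databases typically use approximate nearest-neighbor indexes rather than scanning every vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], index: dict[str, list[float]], k: int = 3):
    """Score every stored vector against the query and keep the k best."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Illustrative, hand-written vectors (not produced by a real embedding model).
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "api-auth": [0.0, 0.2, 0.9],
}
results = top_k([1.0, 0.2, 0.0], index, k=2)
top_ids = [doc_id for doc_id, _ in results]  # ["refund-policy", "shipping-times"]
```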

Advanced retrieval strategies may include multiple similarity metrics, reranking algorithms, and context-aware filtering. The retrieval phase determines which information will be available for response generation.

7. Context Augmentation and Prompt Engineering

Retrieved information is strategically combined with the original user query to create a comprehensive, context-rich prompt. This augmentation requires careful prompt engineering to optimize model performance.

The prompt structure guides the language model in utilizing retrieved information effectively. Well-designed prompts improve response quality and ensure proper integration of external knowledge.
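
One possible prompt layout is sketched below: retrieved chunks are numbered so the model can cite them, and the instructions tell the model to stay within the provided sources. The wording of the instructions, the `build_prompt` name, and the `source`/`text` dictionary keys are all assumptions for this example; effective templates vary by model and use case.

```python
def build_prompt(question: str, chunks: list[dict]) -> str:
    """Combine the user question with numbered, attributed context passages."""
    sources = "\n".join(
        f"[{i}] ({chunk['source']}) {chunk['text']}"
        for i, chunk in enumerate(chunks, start=1)
    )
    return (
        "Answer the question using only the numbered sources below. "
        "Cite sources as [n]. If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_prompt(
    "How long do refunds take?",
    [
        {"source": "faq.md", "text": "Refunds are issued within five business days."},
        {"source": "policy.md", "text": "Refund requests must include an order number."},
    ],
)
```

Numbering the sources in the prompt is what later makes it possible to map citations in the response back to the original documents.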

8. Response Generation and Synthesis

The language model processes the augmented prompt to generate a final response. This response synthesizes pre-trained knowledge with retrieved contextual information to produce accurate, relevant answers.

The generation phase may include multiple iterations or validation steps to ensure quality. Advanced implementations incorporate confidence scoring and uncertainty handling.

What Advanced RAG Techniques Can Enhance System Performance?

Intelligent Reranking and Result Refinement

Reranking refines retrieved document lists before generation to improve relevance and quality. This additional processing step helps address limitations in initial similarity search results.

Advanced approaches such as monoT5, RankLLaMA, and TILDEv2 balance performance improvements with computational efficiency. These techniques analyze retrieved content in greater detail to identify the most valuable information for response generation.
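
The reranking step itself has a simple shape: score each retrieved passage against the query with a second, more careful scorer, then reorder. The sketch below uses a plain term-overlap score as a placeholder. It is not monoT5, RankLLaMA, or TILDEv2, which are learned models; `overlap_score` and `rerank` are hypothetical names, and in practice `score_fn` would wrap a trained reranker.

```python
from typing import Callable


def overlap_score(query: str, passage: str) -> float:
    """Fraction of query terms that appear in the passage (placeholder scorer)."""
    q_terms = set(query.lower().split())
    p_terms = set(passage.lower().split())
    return len(q_terms & p_terms) / len(q_terms) if q_terms else 0.0


def rerank(
    query: str,
    candidates: list[str],
    score_fn: Callable[[str, str], float] = overlap_score,
    top_n: int = 2,
) -> list[str]:
    """Reorder first-stage candidates by the second-stage score and keep top_n."""
    return sorted(candidates, key=lambda p: score_fn(query, p), reverse=True)[:top_n]


candidates = [
    "Billing cycles start monthly",
    "To reset your password open account settings",
    "Password rules require twelve characters",
]
best = rerank("reset account password", candidates)
```

Because the scorer is passed in as a function, the first-stage retriever and the reranker can be swapped independently.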

Hierarchical Indices and Multi-Tier Retrieval

Hierarchical indexing uses a two-tier structure combining document summaries with detailed chunks. This approach improves precision while reducing computational overhead during the search process.

The system first searches through high-level summaries to identify relevant documents. It then performs detailed searches within selected documents to find specific information chunks.
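
The two-tier flow described above can be sketched as follows. For brevity the example scores text by shared words instead of embeddings, and the document structure (`id`, `summary`, `chunks`) is an assumption made for illustration.

```python
def score(query: str, text: str) -> int:
    """Count shared words between query and text (stand-in for vector similarity)."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def hierarchical_search(query: str, docs: list[dict], top_docs: int = 1, top_chunks: int = 2):
    # Tier 1: rank whole documents by their summaries.
    selected = sorted(docs, key=lambda d: score(query, d["summary"]), reverse=True)[:top_docs]
    # Tier 2: rank chunks, but only inside the selected documents.
    chunks = [(d["id"], chunk) for d in selected for chunk in d["chunks"]]
    chunks.sort(key=lambda pair: score(query, pair[1]), reverse=True)
    return chunks[:top_chunks]


docs = [
    {
        "id": "billing",
        "summary": "invoices refunds and billing cycles",
        "chunks": ["refunds are issued within five days", "invoices are sent monthly"],
    },
    {
        "id": "security",
        "summary": "password rules and login security",
        "chunks": ["password must have twelve characters", "login attempts are rate limited"],
    },
]
hits = hierarchical_search("password rules", docs, top_docs=1, top_chunks=1)
```

Only the chunks of the selected documents are scored in the second tier, which is where the reduction in search cost comes from.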

Prompt Chaining with Iterative Refinement

Prompt chaining implements iterative refinement processes that enable systems to request additional context when initial responses are insufficient. This technique improves handling of complex, multi-faceted queries.

The system analyzes initial retrieval results and determines whether additional information is needed. Multiple retrieval rounds can gather comprehensive context for complex questions.
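
A minimal control loop for multi-round retrieval might look like the sketch below. The three callables (`retrieve`, `is_sufficient`, `reformulate`) are hypothetical hooks: in a real system the sufficiency check and query reformulation would usually be delegated to a language model, while here they are simple stubs so the loop can run on its own.

```python
from typing import Callable


def iterative_retrieve(
    question: str,
    retrieve: Callable[[str], list[str]],
    is_sufficient: Callable[[list[str]], bool],
    reformulate: Callable[[str, list[str]], str],
    max_rounds: int = 3,
) -> list[str]:
    """Retrieve, check coverage, and re-query until enough context is gathered."""
    context: list[str] = []
    query = question
    for _ in range(max_rounds):
        for passage in retrieve(query):
            if passage not in context:  # avoid duplicate passages across rounds
                context.append(passage)
        if is_sufficient(context):
            break
        query = reformulate(question, context)
    return context


# Stub components for demonstration only.
knowledge = {"pricing": ["Plan A costs $10"], "pricing details": ["Plan A costs $10", "Plan B costs $25"]}
context = iterative_retrieve(
    "pricing",
    retrieve=lambda q: knowledge.get(q, []),
    is_sufficient=lambda ctx: len(ctx) >= 2,
    reformulate=lambda q, ctx: q + " details",
)
```

The `max_rounds` cap matters: without it, a question the knowledge base cannot answer would keep triggering retrieval indefinitely.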

Dynamic Memory Networks and Contextual Understanding

Dynamic Memory Networks maintain and utilize contextual information throughout multi-turn conversations and complex reasoning processes. These networks track conversation history and user preferences to improve subsequent interactions.

The system builds contextual understanding over time, enabling more sophisticated and personalized responses. This capability becomes particularly valuable in conversational AI applications.

What Are the Primary Applications and Use Cases for RAG Architecture?

Customer Support and Conversational AI

RAG-enabled chatbots deliver contextually accurate responses by searching knowledge bases, product manuals, and support documentation in real time. This capability dramatically improves the quality of automated customer service interactions.

The system can access up-to-date product information, troubleshooting guides, and policy documents. This comprehensive knowledge access enables chatbots to handle complex customer inquiries that traditionally required human intervention.

Document Analysis and Intelligent Summarization

RAG retrieves key information from large document collections and generates concise summaries tailored to specific user needs. This application proves particularly valuable for organizations managing extensive documentation.

Examples include analyzing earnings calls, research reports, and regulatory filings. The system can extract relevant insights while maintaining accuracy and providing source attribution.

Healthcare Diagnostics and Clinical Decision Support

RAG assists physicians by analyzing patient data alongside clinical guidelines and research literature. This support enhances medical decision-making while maintaining compliance with healthcare regulations.

The system can correlate patient symptoms with medical literature and established treatment protocols. This capability supports evidence-based medicine and may help reduce diagnostic errors, with clinicians remaining responsible for the final decision.

Legal Research and Case Law Analysis

RAG efficiently sources relevant case law, statutes, and regulations while generating comprehensive responses that include proper citations. This application transforms legal research by dramatically reducing time requirements.

Legal professionals can query complex legal questions and receive detailed responses with supporting case references. However, the system's ability to handle jurisdictional variations and maintain citation accuracy is limited, so legal professionals must carefully review outputs.

What Are the Main Challenges in RAG Implementation and How Can They Be Addressed?

Knowledge Base Completeness and Information Gaps

Knowledge bases may not contain information needed to answer specific queries, leading to incomplete or inaccurate responses. This limitation requires careful system design and user communication strategies.

Prompt engineering and confidence scoring are important techniques for helping models recognize corpus limitations, but current systems do not consistently or clearly indicate to users when information is insufficient to provide reliable answers.
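
One simple way to surface an information gap to the user is to gate generation on retrieval scores and return an explicit fallback when nothing scores high enough. The sketch below assumes similarity scores in the range 0 to 1; the threshold values, the `answer_or_abstain` name, and the fallback wording are illustrative assumptions that would need tuning against real data.

```python
from typing import Callable

NO_ANSWER = "I could not find enough information in the knowledge base to answer reliably."


def answer_or_abstain(
    scored_chunks: list[tuple[str, float]],
    generate: Callable[[list[str]], str],
    min_score: float = 0.5,
    min_hits: int = 1,
) -> str:
    """Call the generator only when enough chunks clear the similarity threshold."""
    strong = [text for text, score in scored_chunks if score >= min_score]
    if len(strong) < min_hits:
        return NO_ANSWER  # tell the user instead of guessing
    return generate(strong)


# `generate` stands in for the language model call.
weak = answer_or_abstain([("unrelated text", 0.2)], generate=lambda ctx: "generated answer")
strong = answer_or_abstain([("refunds take five days", 0.8)], generate=lambda ctx: "generated answer")
```

A score threshold is a coarse signal. It catches queries with no close match in the corpus but cannot detect a passage that is similar to the query and still fails to answer it.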

Performance Optimization and Scalability Requirements

RAG systems introduce latency through multi-stage retrieval and processing workflows. This additional processing time can impact user experience in real-time applications.

Asynchronous architectures, multi-threading, and caching strategies reduce latency while maintaining system responsiveness. Proper optimization balances speed with accuracy to meet performance requirements.
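
As an example of the asynchronous approach, the sketch below queries several knowledge sources concurrently with `asyncio.gather`, so total latency is close to the slowest source rather than the sum of all of them. The source names and the simulated delay are assumptions; `search_source` stands in for a real network call to a vector database or search API.

```python
import asyncio


async def search_source(name: str, query: str, delay: float) -> list[str]:
    """Simulate a network-bound retrieval call against one knowledge source."""
    await asyncio.sleep(delay)
    return [f"{name}: result for {query!r}"]


async def retrieve_all(query: str) -> list[str]:
    """Run all source lookups concurrently and flatten their results."""
    tasks = [
        search_source("docs", query, 0.05),
        search_source("tickets", query, 0.05),
        search_source("wiki", query, 0.05),
    ]
    batches = await asyncio.gather(*tasks)  # results come back in task order
    return [hit for batch in batches for hit in batch]


results = asyncio.run(retrieve_all("reset password"))
```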

Data Governance and Compliance Considerations

RAG systems must handle sensitive information while maintaining compliance with regulations such as GDPR and HIPAA. Data governance policies must address both the knowledge base content and user interactions.

Comprehensive data governance frameworks ensure appropriate access controls, audit trails, and privacy protection. These frameworks must evolve with changing regulatory requirements and organizational policies.
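
Access controls and audit trails can be enforced at retrieval time, before any text reaches the language model. The following is a minimal sketch under the assumption that each chunk carries a set of roles allowed to read it; the `Chunk` class, role names, and log format are hypothetical and would map onto an organization's own identity and logging systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    allowed_roles: frozenset


def filter_by_access(chunks: list[Chunk], user_roles: set[str], audit_log: list[str]) -> list[Chunk]:
    """Keep only chunks the user may read, and record the decision for auditing."""
    visible = [c for c in chunks if c.allowed_roles & user_roles]
    audit_log.append(
        f"roles={sorted(user_roles)} retrieved={len(chunks)} visible={len(visible)}"
    )
    return visible


audit_log: list[str] = []
retrieved = [
    Chunk("Public refund policy", frozenset({"support", "finance"})),
    Chunk("Internal salary bands", frozenset({"hr"})),
]
visible = filter_by_access(retrieved, {"support"}, audit_log)
```

Filtering before prompt construction means restricted content never enters the model's context, so it cannot leak into a generated answer.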

What Are the Essential Performance Optimization Strategies for Production RAG Systems?

Latency Optimization and Response Time Management

Multi-level caching stores embeddings, frequently accessed documents, and common query results. This approach minimizes computational overhead for repeated operations.

Pre-computation of embeddings for static content eliminates real-time processing delays. Asynchronous processing allows systems to prepare responses while maintaining interactive user experiences.
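
Caching repeated work can be as simple as memoizing the embedding function. The sketch below uses Python's standard `functools.lru_cache`; the body of `cached_embed` is a cheap placeholder for an expensive embedding call, and the call counter exists only to show that the second lookup never reaches it.

```python
from functools import lru_cache

calls = {"count": 0}  # tracks how often the "expensive" path actually runs


@lru_cache(maxsize=1024)
def cached_embed(text: str) -> tuple:
    """Return an embedding for text, computing it only on a cache miss."""
    calls["count"] += 1
    # Placeholder computation standing in for a real embedding model call.
    return tuple(float(ord(ch) % 7) for ch in text[:8])


first = cached_embed("how do I reset my password?")
second = cached_embed("how do I reset my password?")  # served from the cache
```

Returning an immutable tuple keeps callers from accidentally modifying a cached value that other requests will reuse.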

Infrastructure Scaling and Resource Management

Distributed vector search architectures handle increasing data volumes and query loads. These systems must scale horizontally while maintaining search accuracy and response consistency.

Intelligent load balancing distributes queries across available resources while monitoring system performance. Auto-scaling capabilities adjust resources based on demand patterns and performance requirements.

Quality Assurance and Monitoring Frameworks

Continuous monitoring tracks retrieval accuracy, response quality, and system reliability metrics. These monitoring systems detect performance degradation and quality issues before they impact users.

Automated testing frameworks validate system behavior across different query types and content domains. Regular evaluation ensures the system maintains quality standards as the knowledge base evolves.

How Do Modern Advanced RAG Architectures Address Complex Enterprise Requirements?

Agentic RAG Systems and Autonomous Intelligence

Agentic RAG shifts from reactive retrieval to proactive problem-solving through autonomous agents. These systems make dynamic decisions about query approach and information source selection.

Agents can decompose complex queries into multiple sub-questions and coordinate retrieval across different knowledge sources. This capability enables sophisticated reasoning and multi-step problem solving.

GraphRAG and Structured Knowledge Integration

GraphRAG organizes information in knowledge graphs that capture relationships between entities and concepts. This structure enables multi-hop reasoning and complex relationship analysis.

The graph structure supports queries that require understanding connections between different pieces of information. This capability proves particularly valuable for research and analysis applications.
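
Multi-hop reasoning over a knowledge graph can be illustrated with a breadth-first traversal that collects relationship triples within a fixed number of hops from a starting entity. This is a simplified sketch, not the method of any specific GraphRAG implementation; the entities and relations are invented for the example.

```python
from collections import deque


def multi_hop(graph: dict, start: str, max_hops: int = 2) -> list[tuple[str, str, str]]:
    """Collect (subject, relation, object) triples reachable within max_hops of start."""
    seen = {start}
    queue = deque([(start, 0)])
    triples = []
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for relation, neighbor in graph.get(node, []):
            triples.append((node, relation, neighbor))
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, depth + 1))
    return triples


# Invented example graph: entity -> list of (relation, entity) edges.
graph = {
    "Acme": [("acquired", "Widgets Inc")],
    "Widgets Inc": [("supplies", "Globex")],
    "Globex": [("based_in", "Berlin")],
}
triples = multi_hop(graph, "Acme", max_hops=2)
```

The collected triples can then be serialized into the prompt as context, letting the model answer a question such as which companies Acme is indirectly connected to.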

Multimodal RAG and Cross-Modal Reasoning

Multimodal RAG processes text, images, audio, and video content within unified systems. This capability allows cross-modal information synthesis and richer response generation.

The system can analyze charts, diagrams, and multimedia content alongside textual information. This comprehensive analysis enables more complete responses to complex queries.

How Can Organizations Build Effective RAG Pipelines Using Modern Data Platforms?

RAG architectures require robust data infrastructure that can handle diverse content sources, transformation workflows, and real-time updates. Modern data integration platforms provide the connectivity and governance capabilities essential for production-ready RAG systems.

Airbyte's data integration platform provides the connectivity, transformation, and governance capabilities required for production-ready RAG pipelines. With 600+ pre-built connectors, an AI-powered connector builder, and enterprise-grade security, Airbyte simplifies ingesting diverse data for RAG knowledge bases.

The platform supports real-time data synchronization from multiple sources including databases, APIs, files, and SaaS applications. This comprehensive connectivity ensures RAG systems access current, accurate information across organizational data sources.

Enterprise-grade security and governance features ensure compliance with data privacy regulations while maintaining audit trails for RAG system operations. These capabilities become critical when RAG systems handle sensitive or regulated information.

What Implementation Steps Are Required for RAG Pipeline Development?

1. Prerequisites and Account Setup

Create accounts and obtain API keys for required services including data integration platforms, vector databases, and language model providers. Proper credential management ensures secure system operations.

Configure development environments with necessary libraries and dependencies. This preparation phase establishes the foundation for efficient development and testing workflows.

2. Source Connector Configuration

Choose and authenticate data sources based on organizational requirements and content types. Popular sources include documentation repositories, databases, and knowledge management systems.

Configure extraction parameters including update frequency, content filters, and transformation rules. These settings determine what information flows into the RAG knowledge base.

3. Vector Database Destination Setup

Configure chunking strategies, embedding models, and index parameters in the chosen vector database. These configurations directly impact retrieval quality and system performance.

Test database connectivity and indexing performance using representative data samples. This validation ensures the infrastructure can handle production workloads effectively.

4. Connection Establishment and Data Synchronization

Select data streams, set synchronization frequency, and configure transformation pipelines. The synchronization strategy balances data freshness with system resources.

Test end-to-end data flow from sources through transformation to vector database storage. This comprehensive testing validates the entire pipeline before production deployment.

5. Chat Interface Development and Testing

Build user interfaces using frameworks like LangChain that integrate with vector databases and language models. The interface design significantly impacts user experience and system adoption.

Implement iterative prompt refinement and retrieval parameter optimization based on user feedback and system performance metrics. This optimization process ensures the system meets user needs effectively.

Conclusion

The implementation of RAG architecture represents a transformative approach to enhancing generative AI systems with real-time, contextually relevant information. Organizations adopting RAG benefit from higher accuracy, reduced hallucination, and increased user trust compared with traditional AI implementations. As RAG technology evolves toward agentic reasoning, multimodal processing, and graph-based knowledge integration, enterprises that invest in robust, scalable RAG pipelines today will be well-positioned to harness the next wave of AI-driven innovation. The combination of sophisticated retrieval mechanisms with modern data infrastructure creates unprecedented opportunities for businesses to build intelligent applications that deliver reliable, contextual responses.

Frequently Asked Questions

What is the difference between RAG and traditional chatbots?

Traditional chatbots rely on pre-programmed responses or static training data, while RAG systems dynamically retrieve current information from external knowledge bases to generate contextually relevant, up-to-date responses.

How much does it cost to implement a RAG system?

Costs vary with data volume, query frequency, and infrastructure requirements. Most expenses involve embedding generation, vector database storage, and language-model inference, but remain lower than custom model training.

What types of data sources work best with RAG systems?

Structured or semi-structured sources—documentation, research papers, support articles, and product information—work best, provided they contain factual, authoritative content.

How do I measure the success of my RAG implementation?

Track retrieval accuracy (precision@k, recall@k), response quality scores, user-satisfaction metrics, and business KPIs such as reduced support resolution time.
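
The two retrieval metrics named above can be computed from a ranked result list and a hand-labeled set of relevant documents. The following sketch uses the standard definitions; the document IDs are placeholders.

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of the top-k results that are relevant."""
    if k <= 0:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / k


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of all relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


retrieved = ["d1", "d4", "d2", "d9"]  # ranked system output
relevant = {"d1", "d2", "d3"}         # ground-truth labels for this query
p3 = precision_at_k(retrieved, relevant, k=3)  # 2 of the top 3 are relevant
r3 = recall_at_k(retrieved, relevant, k=3)     # 2 of the 3 relevant docs were found
```

Averaging these values over a fixed set of test queries gives a baseline that can be re-checked whenever the chunking strategy, embedding model, or knowledge base changes.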

What are the main security considerations for RAG systems?

Protect data in transit and at rest, enforce access controls, ensure compliance with data-privacy regulations, and maintain detailed audit logs.
