Streamline API Data Integration with Minimal Coding


✨ AI Generated Summary

Modern low-code API integration platforms enable rapid, secure, and scalable connections across multiple data sources with minimal coding, which can cut development time from months to days. Key benefits include:

- Pre-built connectors and automation for streamlined workflows and error handling

- Enterprise-grade security, governance, and compliance features (OAuth 2.0, encryption, SOC 2, GDPR)

- AI-powered automation for configuration, predictive maintenance, and anomaly detection

- Scalable, event-driven architectures supporting real-time data processing and flexible deployment models

- Enhanced productivity through workflow automation and self-healing pipelines

This approach empowers non-technical users, reduces maintenance overhead, and accelerates innovation while addressing common integration challenges like authentication failures, schema drift, and rate limiting.

Connecting multiple APIs should no longer require weeks of custom coding and debugging. Building every integration from scratch can increase security risks, and it consumes resources that could be better allocated to strategic initiatives.

Modern minimal-coding solutions now allow businesses to connect multiple data sources in days, not months, without compromising security or functionality. These platforms have evolved beyond simple drag-and-drop interfaces to include sophisticated automation capabilities, intelligent error handling, and enterprise-grade governance features that address the complex requirements of modern data-integration scenarios.

This approach addresses common challenges such as security vulnerabilities, operational inefficiencies, and compatibility issues with legacy systems. With minimal coding effort, you can implement integration solutions that streamline business processes, enabling IT managers, data engineers, and business leaders to achieve faster results while maintaining the flexibility to adapt to changing business requirements.

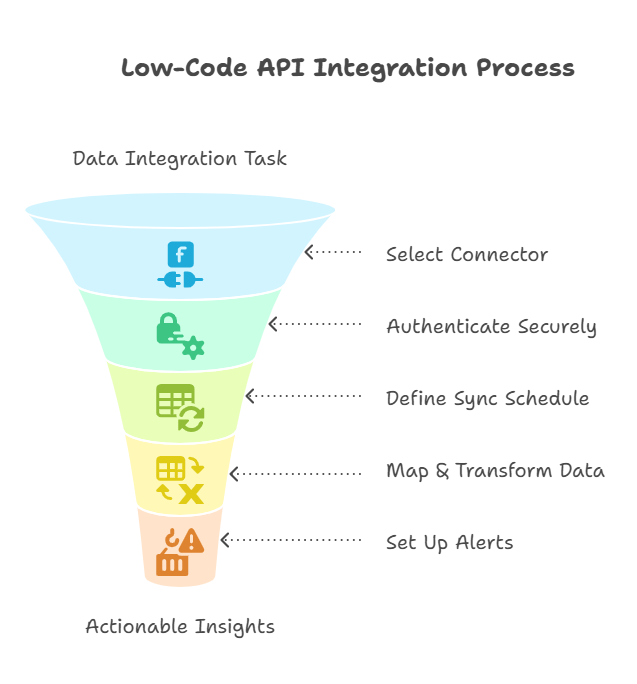

What Are the Key Phases to Streamline API Data Integration With Minimal Coding?

This streamlined integration process transforms complex data-integration workflows into manageable tasks, addressing API sprawl and governance issues common in enterprise environments. The workflow follows six key phases refined through real-world implementations.

The assessment phase involves identifying data sources, destinations, and requirements while evaluating existing infrastructure capabilities and constraints. This foundational step ensures integration projects align with business objectives and technical constraints.

Connection establishment leverages extensive libraries of pre-built connectors. Modern platforms offer hundreds of connectors covering databases, APIs, files, and SaaS applications, with fallback options for custom connector development when no suitable connector exists.

Configuration involves setting up authentication protocols, sync schedules, and data-mapping rules that align with business requirements and compliance standards. This phase establishes the operational parameters that govern data flow and security.

Orchestration automates workflows and data-transformation pipelines using visual interfaces and declarative configuration approaches. This eliminates manual intervention and reduces operational overhead while ensuring consistent execution.

Monitoring tracks performance metrics, error conditions, and data flows to ensure data integrity and system reliability across all integration endpoints. Real-time visibility enables proactive issue resolution and continuous optimization.

Optimization adjusts settings to enhance performance and operational efficiency based on observed usage patterns and business feedback. This ongoing refinement ensures integration solutions continue delivering value as requirements evolve.

Prerequisites include API credentials with appropriate access levels, secure network configurations, comprehensive compliance documentation, and a clear data-governance policy.

Empowering Non-Technical Users with Low-Code Integration

Low-code platforms enable non-technical users to participate in data integration without extensive programming skills. Users can select pre-built connectors, configure complex synchronization rules, and automate multi-step workflows through guided interfaces.

Advanced features now include data-quality monitoring, exception handling, and performance optimization. These capabilities deliver sustainable self-service options that scale with organizational growth while maintaining enterprise-grade reliability.

How Do You Choose Between Low-Code Platforms and Custom Development?

Stakeholders benefit differently from each approach. IT Managers gain enhanced governance and vendor management capabilities through low-code platforms. Data Engineers benefit from automation tools and reduced maintenance overhead that free resources for strategic initiatives.

BI Leaders experience faster implementation timelines and improved data quality through standardized connectors and built-in validation capabilities. These platforms eliminate common integration bottlenecks that delay analytical insights.

Common pitfalls include authentication complexity, rate limits, legacy compatibility issues, and weak error handling. These challenges are best avoided through early testing, comprehensive documentation review, and robust recovery protocols that ensure business continuity.

What Is the Complete Process for Implementing Low-Code API Integration?

1. Select and Configure Your Source Connector

Choose from hundreds of pre-built connectors covering popular systems like Salesforce, Google Analytics, and enterprise databases. When specific connectors don't exist, connector development kits enable rapid custom builds without starting from scratch.

2. Authenticate Securely

Implementation requires secure authentication using OAuth 2.0, bearer tokens, service accounts, or certificates. Store credentials in secure vaults rather than embedding them in scripts or configuration files.
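To make the "vault, not script" advice concrete, here is a minimal sketch of an OAuth 2.0 client-credentials token request in Python's standard library. It assumes the vault or deployment platform injects secrets as environment variables; the names `API_CLIENT_ID` and `API_CLIENT_SECRET` and the token URL are hypothetical. The function only builds the request, so nothing is sent until you call `urllib.request.urlopen`.

```python
import base64
import os
import urllib.parse
import urllib.request
from typing import Mapping


def build_token_request(token_url: str,
                        env: Mapping[str, str] = os.environ) -> urllib.request.Request:
    """Build (but do not send) an OAuth 2.0 client-credentials token request."""
    # Secrets come from the environment (populated by a vault or the platform),
    # never from literals in the script. Variable names are hypothetical.
    client_id = urllib.parse.quote_plus(env["API_CLIENT_ID"])
    client_secret = urllib.parse.quote_plus(env["API_CLIENT_SECRET"])
    # RFC 6749 recommends sending client credentials via HTTP Basic authentication.
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode("utf-8")).decode("ascii")
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode("ascii")
    return urllib.request.Request(
        token_url,
        data=body,
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen(req)` should return a JSON body containing an `access_token` (and usually `expires_in`); the exact response fields depend on the provider.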

3. Define Sync Schedule and Incremental Loading

Balance data freshness requirements against operational costs through real-time, near-real-time, or batch synchronization schedules. Employ Change Data Capture techniques for efficient incremental updates that minimize resource consumption.
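One common way to load incrementally is a cursor (high-water mark). It is simpler than log-based Change Data Capture, which reads the source database's transaction log, but it follows the same idea of fetching only what changed. This sketch assumes each record carries an `updated_at` timestamp; that field name is an assumption.

```python
from datetime import datetime, timezone
from typing import Any


def incremental_batch(records: list[dict[str, Any]],
                      last_cursor: datetime) -> tuple[list[dict[str, Any]], datetime]:
    """Return records modified after `last_cursor`, plus the next cursor value."""
    # `updated_at` is an assumed field name for the source's modification time.
    changed = [r for r in records if r["updated_at"] > last_cursor]
    # The new high-water mark is the latest timestamp seen; keep the old one if nothing changed.
    next_cursor = max((r["updated_at"] for r in changed), default=last_cursor)
    return changed, next_cursor


# Usage: persist the returned cursor between runs so each sync reads only new changes.
rows = [
    {"id": 1, "updated_at": datetime(2024, 1, 1, tzinfo=timezone.utc)},
    {"id": 2, "updated_at": datetime(2024, 1, 3, tzinfo=timezone.utc)},
]
batch, cursor = incremental_batch(rows, datetime(2024, 1, 2, tzinfo=timezone.utc))
print([r["id"] for r in batch], cursor.isoformat())  # [2] 2024-01-03T00:00:00+00:00
```

The strict `>` comparison can skip rows that share the cursor's timestamp but commit later; a common refinement is to use `>=` and deduplicate by primary key.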

4. Map and Transform Data

Use drag-and-drop mapping interfaces combined with advanced rules for validation, cleansing, and business logic implementation. Integrate SQL-based transformation tools like dbt Cloud when complex data modeling is required.
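Behind a drag-and-drop mapper there is usually a declarative rule set: target field, source field, and a transform. The sketch below expresses that idea in plain Python with a required-field validation step. The Salesforce-style source field names and the target schema are hypothetical.

```python
from typing import Any, Callable

# Hypothetical mapping rules: target field -> (source field, transform).
MAPPING: dict[str, tuple[str, Callable[[Any], Any]]] = {
    "customer_id": ("Id", str),
    "email": ("Email", lambda v: v.strip().lower()),  # cleansing rule
    "annual_revenue": ("AnnualRevenue", float),
}
REQUIRED = {"customer_id", "email"}  # validation rule


def map_record(source: dict[str, Any]) -> dict[str, Any]:
    """Apply the mapping rules to one source record and validate the result."""
    target: dict[str, Any] = {}
    for target_field, (source_field, transform) in MAPPING.items():
        value = source.get(source_field)
        target[target_field] = transform(value) if value is not None else None
    missing = [f for f in sorted(REQUIRED) if not target.get(f)]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    return target
```

Keeping rules as data rather than code is what lets a visual tool edit them; heavier modeling would then move to a SQL layer such as dbt.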

5. Set Up Alerts and Monitoring

Enable real-time dashboards, anomaly detection, and predictive analytics capabilities. Configure alerts for pipeline failures, schema drift, or performance degradation to ensure proactive issue resolution.
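Alert rules for the three conditions named above can be expressed as simple checks over each run's metrics. This is a minimal sketch; the `RunMetrics` fields and the 600-second threshold are illustrative assumptions, not any platform's defaults.

```python
from dataclasses import dataclass


@dataclass
class RunMetrics:
    pipeline: str
    failed: bool
    duration_s: float
    new_columns: list[str]  # e.g. produced by a schema-drift check


def evaluate_alerts(m: RunMetrics, max_duration_s: float = 600.0) -> list[str]:
    """Return alert messages for failures, schema drift, and slow runs."""
    alerts = []
    if m.failed:
        alerts.append(f"[{m.pipeline}] pipeline run failed")
    if m.new_columns:
        alerts.append(f"[{m.pipeline}] schema drift: new columns {m.new_columns}")
    if m.duration_s > max_duration_s:  # hypothetical degradation threshold
        alerts.append(f"[{m.pipeline}] slow run: {m.duration_s:.0f}s > {max_duration_s:.0f}s")
    return alerts
```

In practice each message would be routed to a pager, chat channel, or ticketing system rather than returned as a list.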

How Can AI-Powered Automation Transform Your API Integration Strategy?

Artificial intelligence is changing integration development through natural language processing that translates business requirements into draft technical workflows. This capability can narrow the gap between business stakeholders and technical implementation teams.

AI can generate code and configurations that assist in following established security and governance standards, helping promote consistency across integration projects; however, human oversight and expertise remain necessary for proper implementation and maintenance.

Predictive analytics can optimize resource allocation by forecasting traffic spikes and scaling infrastructure ahead of demand. This proactive approach helps prevent performance degradation during peak business operations.

Anomaly detection identifies unusual patterns in data flows or system behavior, initiating automated remediation before failures impact business operations. This capability significantly improves system reliability while reducing manual monitoring overhead.
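Production anomaly detectors are often ML-based, but the core idea can be illustrated with a plain statistical stand-in: flag a metric, such as rows synced per run, that sits more than a few standard deviations from its recent history. The 3.0 threshold is an assumption.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Z-score check: is `latest` more than `threshold` std devs from the history mean?"""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # flat history: any change is unusual
    return abs(latest - mu) / sigma > threshold


# Rows synced per run over the last five runs (illustrative numbers).
runs = [1000, 1020, 980, 1010, 990]
print(is_anomalous(runs, 1005), is_anomalous(runs, 200))  # False True
```

A sudden drop like the second case often signals an upstream outage or a silently failing filter, which is exactly where automated remediation or an alert would be triggered.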

What Security and Compliance Requirements Must You Address?

Authentication and access control form the foundation of secure API integration. Implementation requires OAuth 2.0, multi-factor authentication, role-based access control, and zero-trust security principles that verify every access request.
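Role-based access control combined with a zero-trust posture means every request is checked and anything not explicitly granted is denied. The sketch below shows that deny-by-default shape; the roles and permission strings are hypothetical.

```python
# Hypothetical role -> permission table; anything not listed is denied.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"pipeline:read"},
    "engineer": {"pipeline:read", "pipeline:run", "connector:configure"},
    "admin": {"pipeline:read", "pipeline:run", "connector:configure", "credential:rotate"},
}


def is_allowed(roles: list[str], permission: str, mfa_verified: bool) -> bool:
    """Evaluate a single request; no cached trust between calls."""
    if not mfa_verified:  # zero-trust style: unverified sessions get nothing
        return False
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Because unknown roles map to an empty set, a typo in a role name fails closed rather than granting access.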

Data protection encompasses SSL/TLS encryption for data in transit, field-level encryption for sensitive information, and at-rest encryption with customer-managed keys. These measures ensure data remains protected throughout the integration lifecycle.

Compliance requirements vary by industry but commonly include SOC 2 Type II, GDPR, HIPAA, and sector-specific mandates. Modern platforms provide built-in compliance capabilities that automate many policy enforcement tasks, though manual intervention is still required for configuration and oversight.

Governance capabilities often include automated policy enforcement and detailed audit trails. Some platforms also provide data tracking features, though comprehensive data lineage tracking may require specialized tools. These features help organizations maintain visibility and control over data movement across integrated systems.

How Do You Build Scalable Data Pipelines With API Integration?

Many low-code platforms, especially cloud-native ones, offer elastic scaling capabilities that can automatically adjust compute, storage, and network resources based on workload demands. This can significantly reduce, but not entirely eliminate, the need for manual capacity planning and helps mitigate resource bottlenecks.

Intelligent optimization features include caching, compression, and parallel processing that maximize throughput while minimizing resource consumption. These capabilities ensure cost-effective operations as data volumes scale.
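The three techniques named above can be shown together in a few lines: fetch pages in parallel, cache fetched pages, and compress the result before transfer or storage. `fetch_page` is a hypothetical stand-in that returns fake data; a real connector would make an HTTP call there.

```python
import gzip
import json
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache


@lru_cache(maxsize=256)
def fetch_page(page: int) -> tuple[int, ...]:
    """Hypothetical stand-in for one paginated API call (returns fake records).
    lru_cache avoids refetching a page already retrieved in this process."""
    return tuple(range(page * 3, page * 3 + 3))


def fetch_all(pages: int, workers: int = 4) -> list[int]:
    """Fetch pages concurrently; pool.map keeps results in page order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(fetch_page, range(pages)))
    return [item for page in results for item in page]


def compress(records: list[int]) -> bytes:
    """Gzip a JSON payload to cut transfer and storage size."""
    return gzip.compress(json.dumps(records).encode("utf-8"))


print(fetch_all(3))  # [0, 1, 2, 3, 4, 5, 6, 7, 8]
```

Threads suit I/O-bound API calls; the worker count should stay below the provider's rate limit.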

Deployment flexibility supports cloud, self-managed, and hybrid architectures that align with organizational infrastructure strategies. This adaptability lets integration solutions work within existing technology environments with minimal architectural changes.

What Role Do Event-Driven Architectures Play in Real-Time Integration?

Event-driven architectures replace traditional request-response patterns with asynchronous processing that improves system responsiveness and scalability. This approach decouples integration components for greater flexibility and fault tolerance.

Event brokers distribute messages between systems while maintaining reliable delivery guarantees. Stream processing engines transform and analyze data in real-time, enabling immediate response to business events and conditions.
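A delivery guarantee such as "at least once" comes down to keeping a message until the consumer acknowledges it. This toy in-memory broker illustrates that mechanism only; it is a hypothetical teaching sketch, not a substitute for a production broker with persistence and replication.

```python
from collections import deque
from itertools import count
from typing import Any, Optional


class InMemoryBroker:
    """Toy broker: a received message stays 'in flight' until acknowledged,
    and unacknowledged messages can be redelivered (at-least-once)."""

    def __init__(self) -> None:
        self._queues: dict[str, deque] = {}
        self._in_flight: dict[int, tuple[str, Any]] = {}
        self._ids = count()

    def publish(self, topic: str, payload: Any) -> int:
        msg_id = next(self._ids)
        self._queues.setdefault(topic, deque()).append((msg_id, payload))
        return msg_id

    def receive(self, topic: str) -> Optional[tuple[int, Any]]:
        queue = self._queues.get(topic)
        if not queue:
            return None
        msg_id, payload = queue.popleft()
        self._in_flight[msg_id] = (topic, payload)
        return msg_id, payload

    def ack(self, msg_id: int) -> None:
        self._in_flight.pop(msg_id, None)

    def redeliver_unacked(self) -> None:
        # Put unacknowledged messages back at the front, preserving their order.
        for msg_id, (topic, payload) in reversed(list(self._in_flight.items())):
            self._queues.setdefault(topic, deque()).appendleft((msg_id, payload))
        self._in_flight.clear()
```

Because a consumer that crashes before `ack` will see the same message again, consumers under at-least-once delivery should be idempotent.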

Complex Event Processing detects patterns across multiple data streams and triggers automated actions based on business rules. This capability enables sophisticated workflows that respond intelligently to changing business conditions.
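A typical Complex Event Processing rule looks for a pattern within a time window, for example "three failures from any integration within 60 seconds." The sketch below implements that one rule with a sliding window; the threshold, window, and event fields are hypothetical, and events are assumed to arrive in timestamp order.

```python
from collections import deque


class FailureBurstDetector:
    """Fire when `threshold` failure events from any stream fall within `window_s` seconds."""

    def __init__(self, threshold: int = 3, window_s: float = 60.0) -> None:
        self.threshold = threshold
        self.window_s = window_s
        self.events: deque = deque()  # (timestamp, stream) of recent failures

    def observe(self, ts: float, stream: str, status: str) -> bool:
        """Feed one event; return True when the pattern matches."""
        if status != "failure":
            return False
        self.events.append((ts, stream))
        # Drop failures that have slid out of the time window.
        while self.events and ts - self.events[0][0] > self.window_s:
            self.events.popleft()
        return len(self.events) >= self.threshold
```

Correlating across streams (here `crm`, `billing`, and `erp` would all count) is what distinguishes this from a per-pipeline alert.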

Event sourcing maintains immutable logs of all system events for comprehensive auditing and analytics. This approach provides complete visibility into system behavior while supporting regulatory compliance and troubleshooting activities.
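Event sourcing stores changes as an append-only sequence and derives current state by replaying it, which is what makes full auditing and point-in-time reconstruction possible. This minimal sketch uses a hypothetical account-balance domain with `deposited` and `withdrawn` event kinds.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)  # frozen: events cannot be modified once written
class Event:
    seq: int
    kind: str  # hypothetical kinds: "deposited" or "withdrawn"
    amount: int


class EventStore:
    def __init__(self) -> None:
        self._log: list[Event] = []

    def append(self, kind: str, amount: int) -> Event:
        event = Event(seq=len(self._log), kind=kind, amount=amount)
        self._log.append(event)  # append only; no updates or deletes
        return event

    def events(self) -> tuple[Event, ...]:
        return tuple(self._log)  # read-only snapshot for audit or replay


def replay_balance(events: Iterable[Event]) -> int:
    """Rebuild current state by folding over the event history."""
    balance = 0
    for e in events:
        if e.kind == "deposited":
            balance += e.amount
        elif e.kind == "withdrawn":
            balance -= e.amount
    return balance
```

Replaying a prefix of the log (for example `store.events()[:2]`) reconstructs the state as it was at that point, which is useful for troubleshooting.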

How Does Workflow Automation Improve Team Productivity?

Automation eliminates repetitive tasks that consume valuable technical resources without delivering direct business value. Self-healing pipelines run routine ingestion and transformation jobs and automatically recover from common transient errors, such as timeouts and dropped connections, without manual intervention.
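Much of what "self-healing" means in practice is retrying transient failures with exponential backoff and parking work that still fails in a dead-letter queue instead of crashing the pipeline. This is a minimal sketch under those assumptions; the delays and attempt count are illustrative, and `sleep` is injectable so the logic can be tested without waiting.

```python
import random
import time
from typing import Any, Callable, Optional


def run_with_retries(task: Callable[[], Any],
                     max_attempts: int = 4,
                     base_delay_s: float = 0.5,
                     dead_letter: Optional[list] = None,
                     sleep: Callable[[float], None] = time.sleep) -> Any:
    """Run `task`, retrying failures with exponential backoff plus jitter.

    After the final failed attempt the error is recorded in `dead_letter`
    (if given) for later inspection, and None is returned instead of raising.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:  # a real pipeline would catch only transient errors
            if attempt == max_attempts:
                if dead_letter is not None:
                    dead_letter.append({"error": repr(exc), "attempts": attempt})
                return None
            delay = base_delay_s * 2 ** (attempt - 1)  # 0.5s, 1s, 2s, ...
            sleep(delay + random.uniform(0, delay / 2))  # jitter spreads out retries
    return None
```

The dead-letter list stands in for a durable queue or table that an operator or a later job can reprocess.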

Predictive maintenance identifies potential issues before they impact business operations. Machine learning algorithms analyze system patterns to forecast failures and initiate preventive actions that maintain service reliability.

Resource optimization allows technical staff to focus on strategic initiatives rather than routine maintenance activities. This shift enables organizations to drive innovation and competitive advantage through data-driven initiatives.

What Are Common API Integration Problems and Their Solutions?

Authentication failures often result from expired tokens or changed credentials. Modern platforms address this through automated token refresh mechanisms and secure credential management that maintains access without manual intervention.
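Automated token refresh usually means caching the access token and fetching a new one shortly before it expires, so requests never go out with a stale token. In this sketch `fetch_token` is a hypothetical callable returning `(token, expires_in_seconds)`, and the 60-second safety margin is an assumption.

```python
import time
from typing import Callable, Optional


class TokenCache:
    """Cache an access token and refresh it `skew_s` seconds before expiry."""

    def __init__(self, fetch_token: Callable[[], tuple[str, float]],
                 skew_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_token  # hypothetical: returns (token, expires_in_s)
        self._skew = skew_s
        self._clock = clock
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        # Refresh if there is no token yet or it is within the safety margin.
        if self._token is None or self._clock() >= self._expires_at - self._skew:
            token, expires_in = self._fetch()
            self._token = token
            self._expires_at = self._clock() + expires_in
        return self._token
```

Refreshing early, rather than reacting to a 401 response, avoids a failed request on every expiry; many clients also handle a 401 by forcing one refresh as a fallback.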

Schema drift occurs when source systems modify their data structures without notification. Automated detection capabilities identify these changes and update mapping configurations to maintain data flow integrity.
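Detection is the first half of handling schema drift: compare each incoming record against the expected schema and report added, removed, and type-changed fields. This sketch only detects; updating mappings would be a separate, platform-specific step. The expected schema format (field name to Python type name) is an assumption.

```python
from typing import Any


def detect_schema_drift(expected: dict[str, str], record: dict[str, Any]) -> dict[str, list[str]]:
    """Compare a record's fields and value types against an expected schema."""
    observed = {k: type(v).__name__ for k, v in record.items()}
    added = sorted(observed.keys() - expected.keys())
    removed = sorted(expected.keys() - observed.keys())
    # Ignore nulls: a missing value is not evidence of a type change.
    type_changed = sorted(
        k for k in expected.keys() & observed.keys()
        if observed[k] != expected[k] and observed[k] != "NoneType"
    )
    return {"added": added, "removed": removed, "type_changed": type_changed}


expected_schema = {"id": "int", "email": "str", "plan": "str"}
print(detect_schema_drift(expected_schema, {"id": "42", "email": "a@b.co", "region": "EU"}))
# {'added': ['region'], 'removed': ['plan'], 'type_changed': ['id']}
```

Checking a sample of records per batch, rather than every record, is usually enough to catch structural changes cheaply.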

Rate limiting protects APIs from overload but can disrupt integration workflows. Intelligent throttling mechanisms monitor API responses and adjust request frequencies to stay within limits while maximizing throughput.
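One well-known way to "adjust request frequencies" from API responses is additive-increase/multiplicative-decrease (AIMD): speed up slowly while requests succeed, halve the rate on HTTP 429 (Too Many Requests), and honor a `Retry-After` value when the provider sends one. The starting rate and bounds below are illustrative assumptions, not any provider's limits, and parsing of the `Retry-After` header itself is omitted.

```python
from typing import Optional


class AdaptiveThrottle:
    """AIMD request-rate control driven by HTTP status codes."""

    def __init__(self, rate: float = 10.0, min_rate: float = 0.5,
                 max_rate: float = 50.0, step: float = 1.0) -> None:
        self.rate = rate  # requests per second (illustrative defaults)
        self.min_rate, self.max_rate, self.step = min_rate, max_rate, step

    def on_response(self, status: int, retry_after_s: Optional[float] = None) -> float:
        """Update the rate from a response; return seconds to wait before the next request."""
        if status == 429:
            self.rate = max(self.min_rate, self.rate / 2)  # multiplicative decrease
            if retry_after_s is not None:
                return retry_after_s  # the provider's explicit instruction wins
        elif 200 <= status < 300:
            self.rate = min(self.max_rate, self.rate + self.step)  # additive increase
        return 1.0 / self.rate
```

The asymmetry is deliberate: backing off quickly protects the provider, while probing upward slowly finds the highest sustainable throughput without repeatedly hitting the limit.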

Why Should You Adopt Low-Code API Integration?

Low-code integration platforms rapidly connect diverse systems while requiring minimal coding expertise from implementation teams. This approach accelerates project timelines and reduces the technical skills barrier for integration development.

These platforms provide enterprise-grade security, governance, and compliance capabilities built into the core architecture. Organizations gain robust data protection without developing custom security implementations, although meeting compliance requirements still calls for proper configuration and oversight.

Technical teams benefit from reduced maintenance overhead as platforms handle routine operational tasks automatically. This resource reallocation enables focus on higher-value strategic initiatives that drive competitive advantage and business innovation.

Modern platforms deliver operational agility, lower total cost of ownership, and faster innovation cycles compared to traditional custom development approaches. Organizations can respond more quickly to changing business requirements while controlling integration costs and complexity.

Frequently Asked Questions

What Technical Skills Do I Need?

Minimal coding skills are required for most integration scenarios. Visual configuration tools, pre-built connectors, and connector development kits can turn weeks of custom coding into hours of guided configuration work.

How Are Rate Limits Handled?

Built-in retry mechanisms and adaptive back-off strategies monitor API responses automatically. The platform adjusts request frequency dynamically to stay within provider limits while maximizing data throughput.

What Security Certifications Are Available?

Cloud deployments typically offer SOC 2 Type II, GDPR compliance, and additional industry-specific certifications. Enterprise deployment options add role-based access control, comprehensive audit logging, and field-level encryption capabilities.

Can the Platform Scale With Growing Data Needs?

Cloud-native architectures provide automatic scaling capabilities that adjust resources based on workload demands. Enterprise deployments running on distributed infrastructure are designed to handle large workloads, up to petabyte scale in some environments.

How Does AI Improve Integration?

AI automates configuration generation, performance optimization, and predictive maintenance activities. This reduces manual effort while preventing issues before they impact business operations through intelligent pattern recognition and automated remediation.
