Tokenization vs Embedding - How are they Different?


Large language models can contain more than 175 billion parameters, yet they cannot read human language directly. Every word you type must first be converted into a numerical format through two fundamental processes: tokenization and embedding. These processes work together in AI systems, but understanding their distinct roles becomes crucial as organizations integrate diverse data sources and build production-ready AI applications.

As AI technology advances rapidly, it creates new business opportunities. If you are a beginner, learning AI can open doors to building cutting-edge models and applications. To develop and work with these systems effectively, you need to understand key steps such as tokenization and embedding. Both are building blocks for handling and interpreting data in AI models, but they serve different functions. This article explains tokenization and token embeddings and how they differ. With this knowledge, you'll be well-equipped to build AI-driven applications such as chatbots, generative AI assistants, language translators, and recommender systems.

What Is Tokenization?

Tokenization is the process of partitioning input text into small, manageable units called tokens. These units can be words, phrases, sub-words, punctuation marks, or characters. According to OpenAI, one token corresponds to roughly four characters, or about ¾ of a word, of English text; 100 tokens are approximately equal to 75 words.

Tokenization is a crucial step in Natural Language Processing (NLP). It converts input text into a format AI models can process without losing its context. Once text is tokenized, an AI system can analyze and interpret it efficiently.

Let's take a look at the key steps to perform tokenization:

Step 1: Normalization

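Depending on the model, you may convert the input text to lowercase for uniformity, strip unnecessary punctuation marks, and replace or remove special characters such as emojis or hashtags. Many modern sub-word tokenizers preserve case and punctuation, so this step varies between models.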
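Here is a minimal normalization sketch in Python, assuming you want all three rules from this step: lowercasing, punctuation removal, and emoji/hashtag cleanup. The function name `normalize` is hypothetical, and treating the Unicode "So" (Symbol, other) category as "emoji" is a simplifying assumption that covers most, but not all, emoji sequences.

```python
import re
import string
import unicodedata

def normalize(text: str) -> str:
    """Hypothetical normalizer: lowercase, drop emojis, strip ASCII punctuation."""
    text = unicodedata.normalize("NFKC", text)  # unify equivalent Unicode forms
    text = text.lower()                          # uniform casing
    # Drop characters in the "So" (Symbol, other) category, which covers most emojis
    text = "".join(ch for ch in text if unicodedata.category(ch) != "So")
    # Strip ASCII punctuation; '#' is included, so "#ai" becomes "ai"
    text = text.translate(str.maketrans("", "", string.punctuation))
    # Collapse the extra whitespace left behind by the removals
    return re.sub(r"\s+", " ", text).strip()

print(normalize("Chatbots are GREAT! 🤖 #AI"))  # chatbots are great ai
```

Keeping the hashtag word while dropping the `#` symbol is one design choice; removing hashtags entirely would be just as valid for some datasets.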

Step 2: Splitting

You can break down your text into tokens using any one of the following approaches:

Word Tokenization

The word tokenization method splits the input text into individual words. It suits traditional language models such as n-gram models.

Example

"The chatbots are beneficial." →

["The", "chatbots", "are", "beneficial"]
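A simple way to reproduce this split is a regular expression that keeps runs of word characters and drops punctuation. This is a sketch, not how nltk or spaCy work internally; those libraries handle cases such as contractions ("don't") that this regex would split into "don" and "t".

```python
import re

def word_tokenize(text: str) -> list[str]:
    # \w+ matches runs of letters, digits, and underscores; punctuation is dropped
    return re.findall(r"\w+", text)

print(word_tokenize("The chatbots are beneficial."))
# ['The', 'chatbots', 'are', 'beneficial']
```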

Sub-word Tokenization

Modern language models like GPT-3.5, GPT-4, and BERT use sub-word tokenization: GPT models use byte pair encoding (BPE), and BERT uses WordPiece. Splitting text into units smaller than words lets a fixed-size vocabulary cover a much broader range of words, including rare and unseen ones.

Example (illustrative; actual splits depend on the tokenizer's learned vocabulary)

"Generative AI Assistants are Beneficial" →

["Gener", "ative", "AI", "Assist", "ants", "are", "Benef", "icial"]

You can explore sub-word tokenization with the OpenAI tokenizer.
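To see where sub-word units come from, here is a toy byte pair encoding (BPE) trainer: it repeatedly merges the most frequent adjacent pair of symbols in a small corpus. This is a simplified sketch that works on characters rather than bytes and omits end-of-word markers; it is not OpenAI's implementation, and the corpus is made up for illustration.

```python
from collections import Counter

def _merge(symbols: tuple, pair: tuple) -> tuple:
    """Fuse every occurrence of `pair` in a symbol sequence into one symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)

def learn_bpe(words: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Toy BPE trainer over characters (not bytes)."""
    corpus = Counter(tuple(word) for word in words)  # word as symbol tuple -> frequency
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq  # weight each pair by word frequency
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair; first seen wins ties
        merges.append(best)
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            new_corpus[_merge(symbols, best)] += freq
        corpus = new_corpus
    return merges

def apply_bpe(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Start from characters and replay the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = _merge(symbols, pair)
    return list(symbols)

words = ["low"] * 5 + ["lower"] * 2 + ["newest"] * 6 + ["widest"] * 3
merges = learn_bpe(words, num_merges=4)
print(merges)                     # [('e', 's'), ('es', 't'), ('l', 'o'), ('lo', 'w')]
print(apply_bpe("lowest", merges))  # ['low', 'est']
```

Note that "lowest" never appears in the training corpus, yet it is split into two known sub-words, which is exactly why sub-word vocabularies handle rare and unseen words well.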

Character Tokenization

Character tokenization is commonly used for systems like spell checkers that require fine-grained analysis. It partitions the whole text into an array of single characters.

Example

"I like Cats." →

["I", " ", "l", "i", "k", "e", " ", "C", "a", "t", "s", "."]
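In Python, character tokenization is a one-liner, since a string is already a sequence of characters. Spaces and the final period each become their own token.

```python
def char_tokenize(text: str) -> list[str]:
    # Every character, including spaces and punctuation, becomes its own token
    return list(text)

print(char_tokenize("I like Cats."))
# ['I', ' ', 'l', 'i', 'k', 'e', ' ', 'C', 'a', 't', 's', '.']
```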

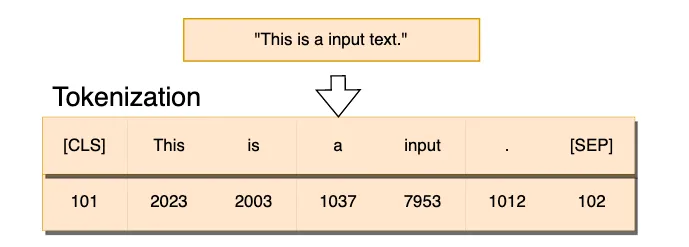

Step 3: Mapping

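Map each token to a unique integer ID using a vocabulary built from the training data. In word-level vocabularies, tokens missing from the vocabulary are typically mapped to a special unknown token such as [UNK].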
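The mapping step can be sketched as building a dictionary from token to ID, then looking tokens up. Reserving ID 0 for `[UNK]` and assigning IDs in order of first appearance are assumptions for this example; real tokenizers ship their own fixed vocabularies and ID layouts.

```python
def build_vocab(tokenized_texts: list[list[str]], unk_token: str = "[UNK]") -> dict[str, int]:
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {unk_token: 0}  # reserve ID 0 for tokens not seen during training
    for tokens in tokenized_texts:
        for token in tokens:
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab

def encode(tokens: list[str], vocab: dict[str, int], unk_token: str = "[UNK]") -> list[int]:
    # Look up each token; unknown tokens fall back to the [UNK] ID
    return [vocab.get(token, vocab[unk_token]) for token in tokens]

vocab = build_vocab([["the", "chatbots", "are", "beneficial"]])
print(encode(["the", "robots", "are", "beneficial"], vocab))  # [1, 0, 3, 4]
```

"robots" was never seen while building the vocabulary, so it maps to the `[UNK]` ID.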

Step 4: Adding Special Tokens

| Token | Purpose |
|---|---|
| [CLS] | Classification token added at the beginning of every input sequence in BERT-style models. The output vector corresponding to this token represents the entire input. |
| [SEP] | Separator token that distinguishes different text segments within the same input, useful for question-answering or sentence-pair tasks. |
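The BERT-style layout for [CLS] and [SEP] can be sketched as below. This works on token strings for readability; real tokenizers insert the corresponding IDs, and other model families (for example GPT-style models) use different special tokens. The function name `add_special_tokens` is hypothetical.

```python
from typing import Optional

def add_special_tokens(tokens_a: list[str], tokens_b: Optional[list[str]] = None) -> list[str]:
    """Wrap one or two segments in BERT-style [CLS]/[SEP] markers."""
    sequence = ["[CLS]"] + tokens_a + ["[SEP]"]
    if tokens_b is not None:
        # Sentence-pair input: the second segment is closed by its own [SEP]
        sequence += tokens_b + ["[SEP]"]
    return sequence

print(add_special_tokens(["what", "is", "bpe"], ["a", "tokenizer"]))
# ['[CLS]', 'what', 'is', 'bpe', '[SEP]', 'a', 'tokenizer', '[SEP]']
```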

What Are Embeddings?

Embedding is a process of representing tokens as continuous vectors in a high-dimensional space where similar tokens have similar vector representations. These vectors, also known as embeddings, help AI/ML models capture the semantic meaning of the tokens and their relationships in the input text.
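"Similar vector representations" is commonly measured with cosine similarity, which compares the direction of two vectors. The three vectors below are hypothetical toy values chosen for illustration, not output from any trained model.

```python
import math

def cosine_similarity(u: list[float], v: list[float]) -> float:
    # cos(u, v) = (u . v) / (|u| * |v|); 1.0 means same direction, 0.0 means orthogonal
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

# Hypothetical toy embeddings, not taken from a trained model
cat = [0.8, 0.6, 0.1]
kitten = [0.7, 0.7, 0.2]
invoice = [-0.2, 0.1, 0.9]

print(round(cosine_similarity(cat, kitten), 3))   # close to 1: related meanings
print(round(cosine_similarity(cat, invoice), 3))  # close to 0: unrelated meanings
```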

To create these embeddings, you can use machine-learning algorithms such as Word2Vec or GloVe. The resulting embeddings are organized in a matrix where each row corresponds to the vector representation of a specific token from a pre-defined vocabulary.

If a vocabulary consists of 10,000 tokens and each embedding has 300 dimensions, the embedding matrix will be 10,000 × 300.
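A minimal sketch of such a matrix in plain Python: one row per token ID, one column per dimension. The random initialization (mean 0, standard deviation 0.1) is an assumption; in practice the values are learned during training. In PyTorch, `torch.nn.Embedding(10000, 300)` holds a matrix of the same shape.

```python
import random

VOCAB_SIZE = 10_000  # number of tokens in the vocabulary
EMBED_DIM = 300      # dimensions per embedding vector

random.seed(0)
# Rows are token IDs, columns are dimensions; random values stand in for learned ones
embedding_matrix = [[random.gauss(0.0, 0.1) for _ in range(EMBED_DIM)]
                    for _ in range(VOCAB_SIZE)]

print(len(embedding_matrix), len(embedding_matrix[0]))  # 10000 300
print(VOCAB_SIZE * EMBED_DIM)                           # 3000000 trainable values
```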

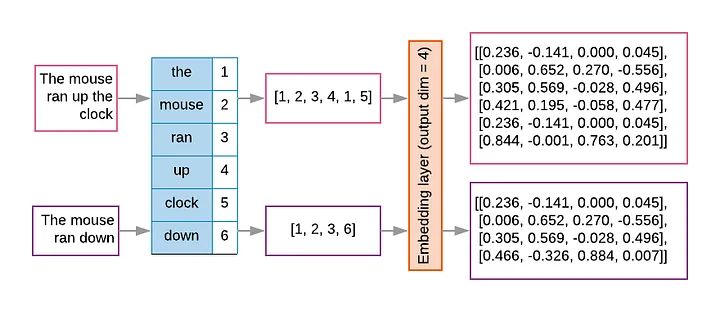

Step 1: Tokenization

Two example input texts:

- Text 1: "The mouse ran up the clock"

- Text 2: "The mouse ran down"

Each unique token is added to a vocabulary with an index.

Step 2: Converting Tokens to Indices

- Text 1 indices → [1, 2, 3, 4, 1, 5]
- Text 2 indices → [1, 2, 3, 6]

Both "The" and "the" map to index 1 because the text is lowercased before indexing.
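The index sequences in this worked example can be reproduced with a few lines of Python, assuming whitespace splitting, lowercasing, and 1-based indices assigned in order of first appearance (which is what the example's numbers imply).

```python
def index_texts(texts: list[str]) -> tuple[dict[str, int], list[list[int]]]:
    vocab: dict[str, int] = {}
    sequences = []
    for text in texts:
        ids = []
        for token in text.lower().split():  # lowercase so "The" and "the" share an index
            if token not in vocab:
                vocab[token] = len(vocab) + 1  # 1-based indices, as in the example
            ids.append(vocab[token])
        sequences.append(ids)
    return vocab, sequences

vocab, sequences = index_texts(["The mouse ran up the clock", "The mouse ran down"])
print(sequences)  # [[1, 2, 3, 4, 1, 5], [1, 2, 3, 6]]
print(vocab)      # {'the': 1, 'mouse': 2, 'ran': 3, 'up': 4, 'clock': 5, 'down': 6}
```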

Step 3: Creating an Embedding Matrix

In this example, each vector is 4-dimensional.

- Index 1 → [0.236, -0.141, 0.000, 0.045]
- Index 2 → [0.006, 0.652, 0.270, -0.556]
- (…and so on for every index in the vocabulary.)

Step 4: Applying Embeddings

When an AI/ML system processes text, it retrieves each token's embedding from the matrix by its index. The model's subsequent layers then use these vectors to interpret context and meaning.
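Steps 3 and 4 together can be sketched as a 4-dimensional matrix plus a row lookup. Rows 1 and 2 hold the values from the example; the other rows are random placeholders (an assumption, since the example elides them), and row 0 is unused because the example's indices start at 1.

```python
import random

EMBED_DIM = 4
VOCAB_SIZE = 6  # indices 1..6 from the worked example

random.seed(42)
# Row 0 is unused because the example's indices start at 1
embedding_matrix = [[round(random.uniform(-1, 1), 3) for _ in range(EMBED_DIM)]
                    for _ in range(VOCAB_SIZE + 1)]
# Rows 1 and 2 use the values from the example; the rest are random placeholders
embedding_matrix[1] = [0.236, -0.141, 0.000, 0.045]
embedding_matrix[2] = [0.006, 0.652, 0.270, -0.556]

def embed(indices: list[int]) -> list[list[float]]:
    # An embedding lookup is just row selection from the matrix
    return [embedding_matrix[i] for i in indices]

vectors = embed([1, 2, 3, 6])  # Text 2: "The mouse ran down"
print(len(vectors), len(vectors[0]))  # 4 4
print(vectors[0])                     # [0.236, -0.141, 0.0, 0.045]
```

Because the lookup is plain indexing, it is cheap; the expensive part is learning good values for the matrix during training.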

How Do Data Integration Challenges Impact Tokenization and Embeddings?

Modern AI systems require data from multiple sources, creating significant challenges that directly affect tokenization and embedding quality. Organizations typically struggle with diverse data formats, inconsistent quality standards, and integration complexity that can undermine the effectiveness of tokenization workflows.

Data Quality and Format Inconsistencies

When integrating data from various sources like APIs, databases, and unstructured files, inconsistent formatting creates tokenization problems that cascade into embedding quality issues. For example, customer service data might include formal documentation alongside informal chat logs, where misspelled queries like "plese help" versus "please help" generate different tokens and embeddings, reducing search accuracy in retrieval systems.

Data quality problems such as missing values, spelling errors, and inconsistent entity naming produce "dirty data" that degrades both tokenization and embeddings. In healthcare applications, specialized terminology like "preauthorization" might be split into "[pre][author][ization]" by general-purpose tokenizers, losing domain-specific semantic context and hurting downstream AI model performance.

Handling Domain-Specific Terminology

Organizations working with specialized vocabularies face particular challenges when tokenizers trained on general English struggle with domain-specific jargon. Medical terms, legal language, and technical documentation often contain vocabulary that standard tokenization models split into nonsensical subunits, creating embedding spaces where domain concepts cluster with low semantic relevance.

The solution involves domain-specific tokenizer adaptation through techniques like fine-tuning pre-trained models on proprietary datasets, creating custom subword dictionaries using tools like SentencePiece, and implementing hybrid approaches that combine word-based and subword tokenization for specialized content.
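The hybrid idea can be sketched in a few lines: keep words found in a domain dictionary whole, and fall back to a greedy longest-match sub-word split (WordPiece-style, without `##` continuation markers) for everything else. Both vocabularies below are hypothetical; a production setup would instead train a tokenizer with a tool such as SentencePiece or extend a pre-trained tokenizer's vocabulary.

```python
def greedy_subword(word: str, subwords: set[str]) -> list[str]:
    """Greedy longest-match split; unknown characters become single-char tokens."""
    pieces, start = [], 0
    while start < len(word):
        # Try the longest remaining substring first, shrinking until one is known
        for end in range(len(word), start, -1):
            if word[start:end] in subwords:
                pieces.append(word[start:end])
                start = end
                break
        else:
            pieces.append(word[start])  # no match: fall back to one character
            start += 1
    return pieces

def hybrid_tokenize(text: str, domain_terms: set[str], subwords: set[str]) -> list[str]:
    tokens = []
    for word in text.lower().split():
        if word in domain_terms:
            tokens.append(word)  # keep domain terms whole
        else:
            tokens.extend(greedy_subword(word, subwords))
    return tokens

# Hypothetical vocabularies for illustration only
general_subwords = {"pre", "author", "ization", "request", "the"}
domain_terms = {"preauthorization"}

print(hybrid_tokenize("the preauthorization request", set(), general_subwords))
# ['the', 'pre', 'author', 'ization', 'request']
print(hybrid_tokenize("the preauthorization request", domain_terms, general_subwords))
# ['the', 'preauthorization', 'request']
```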

Scalability and Performance Constraints

High-dimensional embeddings create significant operational challenges, particularly around storage and computational requirements. Processing millions of vectors at typical dimensions like 1536 can require substantial infrastructure resources, while real-time similarity searches need optimization through techniques like approximate nearest neighbor algorithms and vector quantization.
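A quick back-of-the-envelope calculation shows why storage matters. This assumes one million vectors, 1536 dimensions, and 4-byte float32 values, and counts raw vector data only (no index structures or metadata).

```python
def embedding_storage_bytes(num_vectors: int, dim: int, bytes_per_value: int = 4) -> int:
    # Raw vector storage only; index overhead and metadata are ignored
    return num_vectors * dim * bytes_per_value

print(embedding_storage_bytes(1_000_000, 1536))                     # 6144000000 bytes, about 6.1 GB
print(embedding_storage_bytes(1_000_000, 1536, bytes_per_value=1))  # 1536000000 bytes with 1-byte values
```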

Modern solutions address these challenges through embedding compression techniques that convert high-precision formats to more efficient representations, caching strategies that store frequently accessed embeddings in fast retrieval systems, and specialized vector databases optimized for similarity search operations at scale.
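One common compression approach is scalar quantization: map each float to a small integer using a scale factor, trading a little precision for a 4x smaller footprint versus float32. The sketch below uses a symmetric per-vector scale into the int8 range [-127, 127]; that choice is an assumption, and vector databases implement their own variants. In practice the codes would be stored in a compact int8 array rather than a Python list.

```python
def quantize_int8(vector: list[float]) -> tuple[list[int], float]:
    """Symmetric scalar quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(x) for x in vector) or 1.0  # avoid division by zero for all-zero vectors
    scale = max_abs / 127
    return [round(x / scale) for x in vector], scale

def dequantize_int8(codes: list[int], scale: float) -> list[float]:
    # Approximate reconstruction; the error per value is at most scale / 2
    return [c * scale for c in codes]

vector = [0.236, -0.141, 0.000, 0.045]
codes, scale = quantize_int8(vector)
restored = dequantize_int8(codes, scale)
print(codes)  # [127, -76, 0, 24]
print(max(abs(a - b) for a, b in zip(vector, restored)) <= scale / 2)  # True
```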

How Do Tokenization and Embeddings Differ?

Tokenization breaks text into individual tokens, while embeddings convert those tokens into numerical vectors that capture semantics and relationships.

| Parameter | Tokenization | Embedding |
|---|---|---|
| Definition | Converts large text into separate words, subwords, or characters (tokens). | Maps tokens into dense, continuous vector representations. |
| Use | Pre-processing text into manageable units. | Capturing semantic meaning so models can analyze and interpret it. |
| Output | Sequence of tokens with index values. | Sequence of fixed-size vector representations. |
| Example | "Machine Learning" → ["Machine", "Learning"] | ["Machine", "Learning"] → [embedding₁, embedding₂] |
| Granularity | Character-level → very fine; word-level → coarser; sub-word → intermediate. | Higher granularity → more detailed vectors; lower → more abstract. |
| Language Dependency | Varies across languages due to different token structures. | Operates on token IDs, so the lookup works the same for any language; embedding quality still depends on the languages seen in training. |
| Data Requirement | Needs a pre-defined vocabulary from the training dataset. | Needs pre-trained models or data to learn embeddings. |
| Tool Stack | Tokenizers: Byte Pair Encoding, SentencePiece, WordPiece. | Embeddings: GloVe, BERT, Word2Vec, DeBERTa. |
| Common Libraries | spaCy, nltk, transformers | torch.nn.Embedding, gensim, transformers |

What Are the Latest Fairness and Bias Considerations in Tokenization and Embeddings?

Recent research reveals that tokenization decisions significantly impact AI system fairness, with bias introduced at the earliest stages of text processing that compounds through embedding generation and model predictions. Understanding these bias mechanisms becomes essential for organizations deploying AI systems across diverse populations and use cases.

How Tokenization Shapes Bias in AI Systems

Subword tokenization methods can inadvertently amplify social biases when they split words in ways that reinforce stereotypical associations. For instance, tokenizing compound words like "Bluetooth" into "blue" and "tooth" could reinforce gender stereotypes if "blue" carries strong gendered associations in the training data. This effect becomes more pronounced in languages with rich morphological structures, where tokenization choices directly affect semantic interpretation.

Low-resource and minority languages face particular challenges as tokenization models optimized for dominant languages like English often oversegment text from agglutinative languages such as Turkish or Finnish. This creates embedding spaces where minority language concepts receive fragmented representation, potentially marginalizing speakers of these languages in AI system outputs.

Embedding Bias and Mitigation Strategies

Word embeddings trained on general web data often encode historical social biases, creating vectors where professional terms cluster closer to gendered concepts. For example, embeddings might associate "doctor" more strongly with male pronouns and "nurse" with female pronouns, reflecting historical professional demographics rather than contemporary equality standards.

Mitigation approaches include adversarial training techniques that explicitly remove protected attribute associations from embedding spaces, using fairness-aware training objectives that balance accuracy with demographic parity, and implementing bias detection tools that monitor embedding associations across different demographic groups throughout model development and deployment.

Multilingual Fairness Challenges

Cross-lingual embeddings face unique fairness challenges when alignment techniques favor high-resource languages, potentially degrading representation quality for minority languages. Recent multilingual models, such as those available through the sentence-transformers library, address these issues through careful data balancing and language-specific optimization techniques that aim for more equitable performance across linguistic communities.

Organizations can implement fairness monitoring by establishing evaluation frameworks that test tokenization and embedding quality across diverse demographic groups, implementing regular bias audits using tools like the Word Embedding Association Test, and creating feedback mechanisms that allow affected communities to report problematic system behaviors for continuous improvement.
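The core of the Word Embedding Association Test (WEAT, Caliskan et al., 2017) is an effect size comparing how two sets of target words (for example, career terms vs. family terms) associate with two sets of attribute words (for example, male vs. female terms). The sketch below uses hypothetical 2-D toy vectors instead of real embeddings, and uses the sample standard deviation; implementations differ on that detail.

```python
import math
import statistics

Vector = list[float]

def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def association(w: Vector, A: list[Vector], B: list[Vector]) -> float:
    # s(w, A, B): how much closer w is, on average, to attribute set A than to B
    return statistics.mean(cosine(w, a) for a in A) - statistics.mean(cosine(w, b) for b in B)

def weat_effect_size(X: list[Vector], Y: list[Vector], A: list[Vector], B: list[Vector]) -> float:
    s_x = [association(x, A, B) for x in X]
    s_y = [association(y, A, B) for y in Y]
    # Difference in mean association, normalized by the spread over all target words
    return (statistics.mean(s_x) - statistics.mean(s_y)) / statistics.stdev(s_x + s_y)

# Hypothetical toy vectors: X leans toward A, Y leans toward B
A = [[1.0, 0.0], [0.9, 0.1]]
B = [[0.0, 1.0], [0.1, 0.9]]
X = [[0.8, 0.3], [0.7, 0.2]]
Y = [[0.2, 0.9], [0.3, 0.7]]

print(round(weat_effect_size(X, Y, A, B), 3))  # positive: X is associated with A
```

An effect size near zero suggests little differential association; large positive or negative values flag an association worth auditing.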

How Can Airbyte Enhance Your Tokenization and Embedding Workflows?

AI models like LLMs (Large Language Models) are trained on vast amounts of data, enabling them to answer a wide range of questions across various topics. However, when it comes to extracting information from proprietary data, LLMs can be limited and lead to inaccuracies. In such cases, consider leveraging Airbyte, a no-code data integration and replication platform.

Airbyte lets you integrate data from many sources into a target system using its 600+ pre-built connectors. If no existing connector meets your needs, you can build a new one with basic coding knowledge using its Connector Development Kit (CDK).

Airbyte also supports RAG-based transformations, like LangChain-powered chunking and OpenAI-enabled embeddings. This simplifies the data integration process and enables LLMs to produce more accurate, relevant, and up-to-date text.
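To illustrate what chunking means in a RAG pipeline, here is a generic sketch that splits a token sequence into fixed-size windows with overlap, so context at chunk boundaries is not lost. It is not Airbyte's or LangChain's implementation; the whitespace tokens and the `chunk_size`/`overlap` values are hypothetical.

```python
def chunk_tokens(tokens: list[str], chunk_size: int, overlap: int) -> list[list[str]]:
    """Split a token sequence into fixed-size windows that share `overlap` tokens."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the end of the sequence
    return chunks

tokens = "airbyte moves data from sources into a vector database for retrieval".split()
for chunk in chunk_tokens(tokens, chunk_size=5, overlap=2):
    print(chunk)
```

Each chunk would then be embedded separately and loaded into a vector database; the overlap means the last two tokens of one chunk reappear at the start of the next.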

Key Features of Airbyte

- Modern Generative-AI workflows: Load unstructured data into vector databases such as Pinecone, Milvus, and Weaviate for efficient similarity search.

- Efficient Data Transformation: Integrate with dbt to create custom transformations in your pipelines.

- Developer-Friendly Pipelines: Use the open-source PyAirbyte Python library to run connectors programmatically.

- Data Synchronization: Change Data Capture (CDC) tracks source updates and replicates them to your destination.

- Open-Source Foundation: Deploy Airbyte locally or on a VM with the open-source edition while maintaining enterprise-grade security and governance capabilities.

- Enterprise-Grade Security: TLS/HTTPS encryption, SSH tunneling, RBAC, credential management, plus ISO 27001 and SOC 2 Type II compliance for production deployments.

Summary

Tokenization and embeddings are essential steps in processing text for machine-learning workflows. While tokenization provides structure, embedding offers a way to represent that structure numerically, enabling models to understand context and nuance. Together, they enhance the performance of models that interpret and generate human language.

What Are Common Questions About Tokenization and Embeddings?

What is the difference between tokens and embeddings?

Tokens are individual units of text (words, subwords, or characters). Embeddings are numerical representations of those tokens that capture semantic meaning.

Should you tokenize before embedding?

Yes. Tokenization must be performed before embedding because embeddings operate on tokens.

Is tokenization the same as word embedding?

No. Tokenization breaks text into units, whereas word embedding converts those units into vectors.

What is the difference between vectorization and tokenization?

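Vectorization converts text into numerical vectors (for example, word-frequency counts in a bag-of-words model, or learned embeddings); tokenization splits text into tokens and usually happens first.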

What is the difference between token and tokenization?

A token is a unit of text; tokenization is the process of splitting text into tokens.

What is tokenization in NLP?

Tokenization in NLP divides large paragraphs or sentences into smaller chunks (words, subwords, or characters) for analysis.
